Posted to issues@iceberg.apache.org by GitBox <gi...@apache.org> on 2020/07/29 07:59:34 UTC

[GitHub] [iceberg] HeartSaVioR opened a new pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

HeartSaVioR opened a new pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261

This patch adds a guide to using the Structured Streaming sink for Iceberg, which is not yet documented.

The usage itself is pretty simple, but there are some points end users would want to know before writing to a table with a streaming query, so the patch also adds some guidance on maintaining the table.

Snapshot follows (this will be added before `inspecting table`):

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For additional commands, e-mail: issues-help@iceberg.apache.org

[GitHub] [iceberg] rdblue commented on a change in pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

rdblue commented on a change in pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#discussion_r463337299

##########

File path: site/docs/spark-structured-streaming.md

##########

@@ -0,0 +1,184 @@

+<!--

+ - Licensed to the Apache Software Foundation (ASF) under one or more

+ - contributor license agreements. See the NOTICE file distributed with

+ - this work for additional information regarding copyright ownership.

+ - The ASF licenses this file to You under the Apache License, Version 2.0

+ - (the "License"); you may not use this file except in compliance with

+ - the License. You may obtain a copy of the License at

+ -

+ - http://www.apache.org/licenses/LICENSE-2.0

+ -

+ - Unless required by applicable law or agreed to in writing, software

+ - distributed under the License is distributed on an "AS IS" BASIS,

+ - WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ - See the License for the specific language governing permissions and

+ - limitations under the License.

+ -->

+

+# Spark Structured Streaming

+

+Iceberg uses Apache Spark's DataSourceV2 API for data source and catalog implementations. Spark DSv2 is an evolving API

+with different levels of support in Spark versions.

+

+As of Spark 3.0, the new API on reading/writing table on table identifier is not yet added on streaming query.

+

+| Feature support | Spark 3.0| Spark 2.4 | Notes |

+|--------------------------------------------------|----------|------------|------------------------------------------------|

+| [DataFrame write](#writing-with-streaming-query) | ✔ | ✔ | |

+

+## Writing with streaming query

+

+To write values from streaming query to Iceberg table, use `DataStreamWriter`:

+

+```scala

+data.writeStream

+ .format("iceberg")

+ .outputMode("append")

+ .trigger(Trigger.ProcessingTime(1, TimeUnit.MINUTES))

+ .option("path", pathToTable)

+ .option("checkpointLocation", checkpointPath)

+ .start()

+```

+

+Iceberg supports below output modes:

+

+* append

+* complete

+

+The table should be created in prior to start the streaming query.

+

+## Maintenance

+

+Streaming queries can create new table versions quickly, which creates lots of table metadata to track those versions.

+Maintaining metadata by tuning the rate of commits, expiring old snapshots, and automatically cleaning up metadata files

+is highly recommended.

+

+### Tune the rate of commits

+

+Having high rate of commits would produce lots of data files, manifests, and snapshots which leads the table hard

+to maintain. We encourage having trigger interval 1 minute at minimum, and increase the interval if you encounter

+issues.

Review comment:

How is this configured? A link to the relevant Spark docs and a quick summary would be really useful.

[GitHub] [iceberg] rdblue commented on a change in pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

rdblue commented on a change in pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#discussion_r463336929

##########

File path: site/docs/spark-structured-streaming.md

##########

@@ -0,0 +1,184 @@

+

+# Spark Structured Streaming

+

+Iceberg uses Apache Spark's DataSourceV2 API for data source and catalog implementations. Spark DSv2 is an evolving API

+with different levels of support in Spark versions.

+

+As of Spark 3.0, the new API on reading/writing table on table identifier is not yet added on streaming query.

+

+| Feature support | Spark 3.0| Spark 2.4 | Notes |

+|--------------------------------------------------|----------|------------|------------------------------------------------|

+| [DataFrame write](#writing-with-streaming-query) | ✔ | ✔ | |

+

+## Writing with streaming query

+

+To write values from streaming query to Iceberg table, use `DataStreamWriter`:

+

+```scala

+data.writeStream

+ .format("iceberg")

+ .outputMode("append")

+ .trigger(Trigger.ProcessingTime(1, TimeUnit.MINUTES))

+ .option("path", pathToTable)

+ .option("checkpointLocation", checkpointPath)

+ .start()

+```

+

+Iceberg supports below output modes:

+

+* append

+* complete

Review comment:

It would be great to document what these do:

* `append` - appends the output of every micro-batch to the table

* `complete` - replaces the table contents every micro-batch
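As a rough illustration of the two modes described in this comment, a minimal Scala sketch might look like the following. The helper names, `keyColumn`, and the path arguments are hypothetical placeholders, and the target table is assumed to already exist with a schema matching the rows being written. Spark accepts `complete` mode only for queries that contain a streaming aggregation, which is why the second helper groups and counts before writing.

```scala
import org.apache.spark.sql.DataFrame
import org.apache.spark.sql.streaming.StreamingQuery

// Hypothetical helper: appends the rows of every micro-batch to the table.
def appendEvents(events: DataFrame, tablePath: String, checkpointPath: String): StreamingQuery =
  events.writeStream
    .format("iceberg")
    .outputMode("append")
    .option("path", tablePath)
    .option("checkpointLocation", checkpointPath)
    .start()

// Hypothetical helper: replaces the table contents with the full aggregate
// result on every micro-batch. `keyColumn` is an illustrative column name.
def replaceWithCounts(events: DataFrame, keyColumn: String,
                      tablePath: String, checkpointPath: String): StreamingQuery =
  events.groupBy(keyColumn).count()
    .writeStream
    .format("iceberg")
    .outputMode("complete")
    .option("path", tablePath)
    .option("checkpointLocation", checkpointPath)
    .start()
```

Each helper needs its own checkpoint location, since a checkpoint belongs to a single streaming query.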

[GitHub] [iceberg] rdblue commented on pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

rdblue commented on pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#issuecomment-680304120

@HeartSaVioR, my main feedback for this PR is that it does a great job describing table maintenance options and that those should be separated into a "Table maintenance" page. Rather than ask you to do far more work to get it in, I did some editing and opened a PR against your branch with the change. Could you take a look at https://github.com/HeartSaVioR/iceberg/pull/1?

[GitHub] [iceberg] HeartSaVioR commented on a change in pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

HeartSaVioR commented on a change in pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#discussion_r461978682

##########

File path: site/docs/spark.md

##########

@@ -520,6 +520,28 @@ data.writeTo("prod.db.table")

.createOrReplace()

```

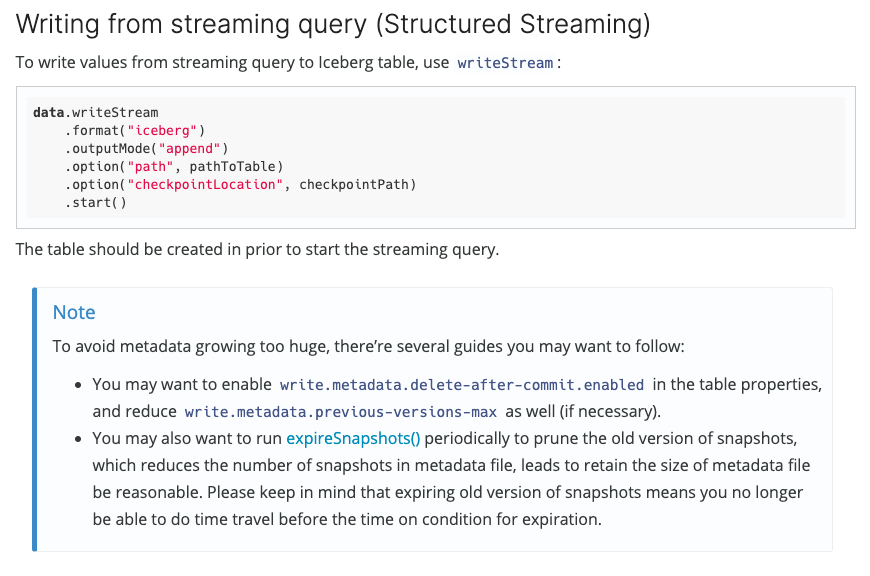

+### Writing from streaming query (Structured Streaming)

+

+To write values from streaming query to Iceberg table, use `writeStream`:

+

+```scala

+data.writeStream

+ .format("iceberg")

+ .outputMode("append")

+ .option("path", pathToTable)

+ .option("checkpointLocation", checkpointPath)

+ .start()

Review comment:

DSv2 still doesn't have table access for streaming. I see a TODO in `writeTo`, but no one is working on it. I'm planning to look into it. As of now, this is the only way that works across Spark versions.

[GitHub] [iceberg] kbendick commented on a change in pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

kbendick commented on a change in pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#discussion_r466053084

##########

File path: site/docs/spark-structured-streaming.md

##########

@@ -0,0 +1,184 @@

+

+# Spark Structured Streaming

+

+Iceberg uses Apache Spark's DataSourceV2 API for data source and catalog implementations. Spark DSv2 is an evolving API

+with different levels of support in Spark versions.

+

+As of Spark 3.0, the new API on reading/writing table on table identifier is not yet added on streaming query.

+

+| Feature support | Spark 3.0| Spark 2.4 | Notes |

+|--------------------------------------------------|----------|------------|------------------------------------------------|

+| [DataFrame write](#writing-with-streaming-query) | ✔ | ✔ | |

+

+## Writing with streaming query

+

+To write values from streaming query to Iceberg table, use `DataStreamWriter`:

+

+```scala

+data.writeStream

+ .format("iceberg")

+ .outputMode("append")

+ .trigger(Trigger.ProcessingTime(1, TimeUnit.MINUTES))

+ .option("path", pathToTable)

+ .option("checkpointLocation", checkpointPath)

+ .start()

+```

+

+Iceberg supports below output modes:

+

+* append

+* complete

+

+The table should be created in prior to start the streaming query.

+

+## Maintenance

+

+Streaming queries can create new table versions quickly, which creates lots of table metadata to track those versions.

+Maintaining metadata by tuning the rate of commits, expiring old snapshots, and automatically cleaning up metadata files

+is highly recommended.

+

+### Tune the rate of commits

+

+Having high rate of commits would produce lots of data files, manifests, and snapshots which leads the table hard

+to maintain. We encourage having trigger interval 1 minute at minimum, and increase the interval if you encounter

+issues.

Review comment:

I agree with @HeartSaVioR that this is essential knowledge on structured streaming. However, if you wanted to add a link to trigger intervals specifically, the `latest` (kept up to date) link would likely be https://spark.apache.org/docs/latest/structured-streaming-programming-guide.html#triggers
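For readers who want the quick summary requested earlier in the thread, the trigger interval is set on the `DataStreamWriter` itself. The sketch below is only an illustration under assumptions: the helper name, `intervalMinutes`, and the path arguments are hypothetical, and the table is assumed to exist already.

```scala
import java.util.concurrent.TimeUnit
import org.apache.spark.sql.DataFrame
import org.apache.spark.sql.streaming.{StreamingQuery, Trigger}

// Hypothetical helper: starts an append-mode query with a configurable trigger interval.
def startWithInterval(data: DataFrame, intervalMinutes: Long,
                      tablePath: String, checkpointPath: String): StreamingQuery =
  data.writeStream
    .format("iceberg")
    .outputMode("append")
    // A micro-batch is started roughly once per interval, so a longer
    // interval means fewer commits against the table.
    .trigger(Trigger.ProcessingTime(intervalMinutes, TimeUnit.MINUTES))
    .option("path", tablePath)
    .option("checkpointLocation", checkpointPath)
    .start()
```

Calling `startWithInterval(data, 1, pathToTable, checkpointPath)` would correspond to the one-minute minimum suggested in the patch; a larger value trades latency for fewer data files, manifests, and snapshots.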

[GitHub] [iceberg] HeartSaVioR commented on a change in pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

HeartSaVioR commented on a change in pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#discussion_r463020594

##########

File path: site/docs/spark-structured-streaming.md

##########

@@ -0,0 +1,184 @@

+

+# Spark Structured Streaming

+

+Iceberg uses Apache Spark's DataSourceV2 API for data source and catalog implementations. Spark DSv2 is an evolving API

+with different levels of support in Spark versions.

+

+As of Spark 3.0, the new API on reading/writing table on table identifier is not yet added on streaming query.

+

+| Feature support | Spark 3.0| Spark 2.4 | Notes |

+|--------------------------------------------------|----------|------------|------------------------------------------------|

+| [DataFrame write](#writing-with-streaming-query) | ✔ | ✔ | |

+

+## Writing with streaming query

+

+To write values from streaming query to Iceberg table, use `DataStreamWriter`:

+

+```scala

+data.writeStream

+ .format("iceberg")

+ .outputMode("append")

+ .trigger(Trigger.ProcessingTime(1, TimeUnit.MINUTES))

+ .option("path", pathToTable)

Review comment:

I just realized that with HiveCatalog I should provide the table identifier instead of a path. I'll update this accordingly.

[GitHub] [iceberg] zhangdove commented on pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

zhangdove commented on pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#issuecomment-664743990

I wonder if there are any examples of `outputMode("update")`.

There is an example about it. But I'm not sure if it's complete.

https://github.com/apache/iceberg/blob/master/spark/src/test/java/org/apache/iceberg/spark/source/TestStructuredStreaming.java#L268

[GitHub] [iceberg] HeartSaVioR commented on a change in pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

HeartSaVioR commented on a change in pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#discussion_r463350311

##########

File path: site/docs/spark-structured-streaming.md

##########

@@ -0,0 +1,184 @@

+

+# Spark Structured Streaming

+

+Iceberg uses Apache Spark's DataSourceV2 API for data source and catalog implementations. Spark DSv2 is an evolving API

+with different levels of support in Spark versions.

+

+As of Spark 3.0, the new API on reading/writing table on table identifier is not yet added on streaming query.

+

+| Feature support | Spark 3.0| Spark 2.4 | Notes |

+|--------------------------------------------------|----------|------------|------------------------------------------------|

+| [DataFrame write](#writing-with-streaming-query) | ✔ | ✔ | |

+

+## Writing with streaming query

+

+To write values from streaming query to Iceberg table, use `DataStreamWriter`:

+

+```scala

+data.writeStream

+ .format("iceberg")

+ .outputMode("append")

+ .trigger(Trigger.ProcessingTime(1, TimeUnit.MINUTES))

+ .option("path", pathToTable)

+ .option("checkpointLocation", checkpointPath)

+ .start()

+```

+

+Iceberg supports below output modes:

+

+* append

+* complete

+

+The table should be created in prior to start the streaming query.

+

+## Maintenance

+

+Streaming queries can create new table versions quickly, which creates lots of table metadata to track those versions.

+Maintaining metadata by tuning the rate of commits, expiring old snapshots, and automatically cleaning up metadata files

+is highly recommended.

+

+### Tune the rate of commits

+

+Having high rate of commits would produce lots of data files, manifests, and snapshots which leads the table hard

+to maintain. We encourage having trigger interval 1 minute at minimum, and increase the interval if you encounter

+issues.

+

+### Retain recent metadata files in Hadoop catalog

+

+If you are using HadoopCatalog, you may want to enable `write.metadata.delete-after-commit.enabled` in the table

Review comment:

If I understand correctly, this option cleans up `vXXXXX.metadata.json` files, which don't seem to exist in a Hive catalog based metadata directory because they are not needed: only the latest one is retained in the Hive table information. I'm still learning, so please let me know if I'm missing something.
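To make the two table properties under discussion concrete, here is a minimal sketch that simply collects them in a Scala `Map`. The property keys come from the patch being reviewed; the values, in particular the retention count of `10`, are illustrative assumptions rather than recommendations.

```scala
// Property keys are taken from the patch under review; the values are assumptions.
val metadataCleanupProps: Map[String, String] = Map(
  // Ask Iceberg to delete the oldest tracked metadata files after a commit.
  "write.metadata.delete-after-commit.enabled" -> "true",
  // Hypothetical retention count: how many previous metadata files to keep.
  "write.metadata.previous-versions-max" -> "10"
)

// Print in key=value form, e.g. for pasting into a table-properties clause.
metadataCleanupProps.foreach { case (k, v) => println(s"$k=$v") }
```

These could then be set when the table is created, or applied to an existing table through Iceberg's `Table.updateProperties()` API.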

[GitHub] [iceberg] HeartSaVioR commented on pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

HeartSaVioR commented on pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#issuecomment-680391366

No worries. Thanks for the detailed reviews. Happy to contribute!

[GitHub] [iceberg] HeartSaVioR commented on pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

HeartSaVioR commented on pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#issuecomment-664710661

[GitHub] [iceberg] rdblue commented on a change in pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

rdblue commented on a change in pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#discussion_r463339341

##########

File path: site/docs/spark-structured-streaming.md

##########

@@ -0,0 +1,184 @@

+

+# Spark Structured Streaming

+

+Iceberg uses Apache Spark's DataSourceV2 API for data source and catalog implementations. Spark DSv2 is an evolving API

+with different levels of support in Spark versions.

+

+As of Spark 3.0, the new API on reading/writing table on table identifier is not yet added on streaming query.

+

+| Feature support | Spark 3.0| Spark 2.4 | Notes |

+|--------------------------------------------------|----------|------------|------------------------------------------------|

+| [DataFrame write](#writing-with-streaming-query) | ✔ | ✔ | |

+

+## Writing with streaming query

+

+To write values from streaming query to Iceberg table, use `DataStreamWriter`:

+

+```scala

+data.writeStream

+ .format("iceberg")

+ .outputMode("append")

+ .trigger(Trigger.ProcessingTime(1, TimeUnit.MINUTES))

+ .option("path", pathToTable)

+ .option("checkpointLocation", checkpointPath)

+ .start()

+```

+

+Iceberg supports below output modes:

+

+* append

+* complete

+

+The table should be created in prior to start the streaming query.

+

+## Maintenance

+

+Streaming queries can create new table versions quickly, which creates lots of table metadata to track those versions.

+Maintaining metadata by tuning the rate of commits, expiring old snapshots, and automatically cleaning up metadata files

+is highly recommended.

+

+### Tune the rate of commits

+

+Having high rate of commits would produce lots of data files, manifests, and snapshots which leads the table hard

+to maintain. We encourage having trigger interval 1 minute at minimum, and increase the interval if you encounter

+issues.

+

+### Retain recent metadata files in Hadoop catalog

+

+If you are using HadoopCatalog, you may want to enable `write.metadata.delete-after-commit.enabled` in the table

+properties, and reduce `write.metadata.previous-versions-max` as well (if necessary) to retain only specific number of

+metadata files.

+

+Please refer the [table write properties](/configuration/#write-properties) for more details.

+

+### Expire old snapshots

+

+You may want to run [expireSnapshots()](/javadoc/master/org/apache/iceberg/Table.html#expireSnapshots--) periodically

Review comment:

How about "Run `expireSnapshots` regularly to prune . . ."?

That's more direct and avoids the natural question "When may I *not* want to do this?" Since the answer is you always want to clean snapshots, being direct is more clear.
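A minimal sketch of what "run `expireSnapshots` regularly" could look like with the Iceberg `Table` API is shown below. The helper name and the `retentionDays` parameter are hypothetical; how the `Table` instance is loaded and how often the helper is scheduled are left to the reader.

```scala
import java.util.concurrent.TimeUnit
import org.apache.iceberg.Table

// Hypothetical helper; `retentionDays` is an illustrative parameter, not an Iceberg setting.
def expireOldSnapshots(table: Table, retentionDays: Long): Unit = {
  // Snapshots older than this timestamp become candidates for expiration.
  val cutoffMillis = System.currentTimeMillis() - TimeUnit.DAYS.toMillis(retentionDays)
  table.expireSnapshots()
    .expireOlderThan(cutoffMillis)
    .commit()
}
```

For example, `expireOldSnapshots(table, 1)` would expire snapshots more than one day old; expired snapshots are no longer available for time travel, so the retention window is a design choice.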

[GitHub] [iceberg] HeartSaVioR commented on pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

HeartSaVioR commented on pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#issuecomment-666119199

Just added a section about removing orphan files. I stopped updating the screenshot, though (so the PR description is not the final shape), as I expect to get review comments, and addressing them would also require updating the screenshot.

I'm not a native English speaker and probably haven't experienced much of Iceberg in detail, so there may be lots of spots we'd like to correct. Please feel free to add comments. Thanks!

[GitHub] [iceberg] rdblue commented on a change in pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

rdblue commented on a change in pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#discussion_r463337000

##########

File path: site/docs/spark-structured-streaming.md

##########

@@ -0,0 +1,184 @@

+

+# Spark Structured Streaming

+

+Iceberg uses Apache Spark's DataSourceV2 API for data source and catalog implementations. Spark DSv2 is an evolving API

+with different levels of support in Spark versions.

+

+As of Spark 3.0, the new API on reading/writing table on table identifier is not yet added on streaming query.

+

+| Feature support | Spark 3.0| Spark 2.4 | Notes |

+|--------------------------------------------------|----------|------------|------------------------------------------------|

+| [DataFrame write](#writing-with-streaming-query) | ✔ | ✔ | |

+

+## Writing with streaming query

+

+To write values from streaming query to Iceberg table, use `DataStreamWriter`:

+

+```scala

+data.writeStream

+ .format("iceberg")

+ .outputMode("append")

+ .trigger(Trigger.ProcessingTime(1, TimeUnit.MINUTES))

+ .option("path", pathToTable)

+ .option("checkpointLocation", checkpointPath)

+ .start()

+```

+

+Iceberg supports below output modes:

+

+* append

+* complete

+

+The table should be created in prior to start the streaming query.

Review comment:

Should this link to the `CREATE TABLE` docs on the Spark page?

[GitHub] [iceberg] HeartSaVioR commented on pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

HeartSaVioR commented on pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#issuecomment-680349187

Thanks for the PR. I've merged it into my repo, and this PR now reflects that change plus a nit fix. Please take another look. Thanks in advance!

[GitHub] [iceberg] HeartSaVioR commented on a change in pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

HeartSaVioR commented on a change in pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#discussion_r464063401

##########

File path: site/docs/spark-structured-streaming.md

##########

@@ -0,0 +1,184 @@

+

+# Spark Structured Streaming

+

+Iceberg uses Apache Spark's DataSourceV2 API for data source and catalog implementations. Spark DSv2 is an evolving API

+with different levels of support in Spark versions.

+

+As of Spark 3.0, the new API on reading/writing table on table identifier is not yet added on streaming query.

+

+| Feature support | Spark 3.0| Spark 2.4 | Notes |

+|--------------------------------------------------|----------|------------|------------------------------------------------|

+| [DataFrame write](#writing-with-streaming-query) | ✔ | ✔ | |

+

+## Writing with streaming query

+

+To write values from streaming query to Iceberg table, use `DataStreamWriter`:

+

+```scala

+data.writeStream

+ .format("iceberg")

+ .outputMode("append")

+ .trigger(Trigger.ProcessingTime(1, TimeUnit.MINUTES))

+ .option("path", pathToTable)

+ .option("checkpointLocation", checkpointPath)

+ .start()

+```

+

+Iceberg supports below output modes:

+

+* append

+* complete

+

+The table should be created in prior to start the streaming query.

+

+## Maintenance

+

+Streaming queries can create new table versions quickly, which creates lots of table metadata to track those versions.

+Maintaining metadata by tuning the rate of commits, expiring old snapshots, and automatically cleaning up metadata files

+is highly recommended.

+

+### Tune the rate of commits

+

+Having high rate of commits would produce lots of data files, manifests, and snapshots which leads the table hard

+to maintain. We encourage having trigger interval 1 minute at minimum, and increase the interval if you encounter

+issues.

+

+### Retain recent metadata files in Hadoop catalog

+

+If you are using HadoopCatalog, you may want to enable `write.metadata.delete-after-commit.enabled` in the table

Review comment:

I looked into the details of the Hive catalog and realized I was wrong: it still creates a metadata file per version. I'll update the doc to reflect your comment. Thanks for correcting me.

[GitHub] [iceberg] HeartSaVioR commented on pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

HeartSaVioR commented on pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#issuecomment-666891310

cc @aokolnychyi, as he contributed the structured streaming sink.

[GitHub] [iceberg] rdblue commented on a change in pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

rdblue commented on a change in pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#discussion_r463338478

##########

File path: site/docs/spark-structured-streaming.md

##########

@@ -0,0 +1,184 @@

+

+# Spark Structured Streaming

+

+Iceberg uses Apache Spark's DataSourceV2 API for data source and catalog implementations. Spark DSv2 is an evolving API

+with different levels of support in Spark versions.

+

+As of Spark 3.0, the new API on reading/writing table on table identifier is not yet added on streaming query.

+

+| Feature support | Spark 3.0| Spark 2.4 | Notes |

+|--------------------------------------------------|----------|------------|------------------------------------------------|

+| [DataFrame write](#writing-with-streaming-query) | ✔ | ✔ | |

+

+## Writing with streaming query

+

+To write values from streaming query to Iceberg table, use `DataStreamWriter`:

+

+```scala

+data.writeStream

+ .format("iceberg")

+ .outputMode("append")

+ .trigger(Trigger.ProcessingTime(1, TimeUnit.MINUTES))

+ .option("path", pathToTable)

+ .option("checkpointLocation", checkpointPath)

+ .start()

+```

+

+Iceberg supports below output modes:

+

+* append

+* complete

+

+The table should be created in prior to start the streaming query.

+

+## Maintenance

+

+Streaming queries can create new table versions quickly, which creates lots of table metadata to track those versions.

+Maintaining metadata by tuning the rate of commits, expiring old snapshots, and automatically cleaning up metadata files

+is highly recommended.

+

+### Tune the rate of commits

+

+Having high rate of commits would produce lots of data files, manifests, and snapshots which leads the table hard

+to maintain. We encourage having trigger interval 1 minute at minimum, and increase the interval if you encounter

+issues.

+

+### Retain recent metadata files in Hadoop catalog

Review comment:

How about "Remove old metadata files"? That matches the wording used for "Expire old snapshots".

Also, we should probably make these recommendations in order of importance, which would mean putting the expire snapshots section first. Those keep a lot more metadata and affect table performance; these don't affect table performance and are smaller if you clean up snapshots.

[GitHub] [iceberg] HeartSaVioR commented on a change in pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

HeartSaVioR commented on a change in pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#discussion_r466078362

##########

File path: site/docs/spark-structured-streaming.md

##########

@@ -0,0 +1,184 @@

+

+# Spark Structured Streaming

+

+Iceberg uses Apache Spark's DataSourceV2 API for data source and catalog implementations. Spark DSv2 is an evolving API

+with different levels of support in Spark versions.

+

+As of Spark 3.0, the new API on reading/writing table on table identifier is not yet added on streaming query.

+

+| Feature support | Spark 3.0| Spark 2.4 | Notes |

+|--------------------------------------------------|----------|------------|------------------------------------------------|

+| [DataFrame write](#writing-with-streaming-query) | ✔ | ✔ | |

+

+## Writing with streaming query

+

+To write values from streaming query to Iceberg table, use `DataStreamWriter`:

+

+```scala

+data.writeStream

+ .format("iceberg")

+ .outputMode("append")

+ .trigger(Trigger.ProcessingTime(1, TimeUnit.MINUTES))

+ .option("path", pathToTable)

+ .option("checkpointLocation", checkpointPath)

+ .start()

+```

+

+Iceberg supports below output modes:

+

+* append

+* complete

+

+The table should be created in prior to start the streaming query.

+

+## Maintenance

+

+Streaming queries can create new table versions quickly, which creates lots of table metadata to track those versions.

+Maintaining metadata by tuning the rate of commits, expiring old snapshots, and automatically cleaning up metadata files

+is highly recommended.

+

+### Tune the rate of commits

+

+Having high rate of commits would produce lots of data files, manifests, and snapshots which leads the table hard

+to maintain. We encourage having trigger interval 1 minute at minimum, and increase the interval if you encounter

+issues.

Review comment:

Ah yes, it's probably better to pick up the anchor. Thanks :)

[GitHub] [iceberg] HeartSaVioR commented on pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

HeartSaVioR commented on pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#issuecomment-680317357

@rdblue Great idea! I also agree that maintenance isn't restricted to the streaming case. I left some comments on your PR.

[GitHub] [iceberg] rdblue commented on a change in pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

rdblue commented on a change in pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#discussion_r461904586

##########

File path: site/docs/spark.md

##########

@@ -520,6 +520,28 @@ data.writeTo("prod.db.table")

.createOrReplace()

```

+### Writing from streaming query (Structured Streaming)

+

+To write values from streaming query to Iceberg table, use `writeStream`:

+

+```scala

+data.writeStream

+ .format("iceberg")

+ .outputMode("append")

+ .option("path", pathToTable)

+ .option("checkpointLocation", checkpointPath)

+ .start()

Review comment:

This looks specific to 2.4. Should we have a 3.0 example and a separate 2.4 example like the other sections?

An alternative is to create a new page for Spark Streaming and add the docs there. Then we could have a table like the one at the top of the Spark page that explains what is supported in different versions.

##########

File path: site/docs/spark.md

##########

@@ -520,6 +520,28 @@ data.writeTo("prod.db.table")

.createOrReplace()

```

+### Writing from streaming query (Structured Streaming)

+

+To write values from streaming query to Iceberg table, use `writeStream`:

+

+```scala

+data.writeStream

+ .format("iceberg")

+ .outputMode("append")

+ .option("path", pathToTable)

+ .option("checkpointLocation", checkpointPath)

+ .start()

+```

+

+`append` and `complete` modes are supported. The table should be created in prior to start the streaming query.

+

+!!! Note

+ To avoid metadata growing too huge, there're several guides you may want to follow:

Review comment:

I think this is worth a section, not just a note.

> Streaming queries can create new table versions quickly, which creates lots of table metadata to track those versions. Maintaining metadata by tuning the rate of commits, expiring old snapshots, and automatically cleaning up metadata files is highly recommended.

Then you could give an overview of those options and links to further docs, like the table property docs for delete-after-commit.

[GitHub] [iceberg] rdblue commented on a change in pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

rdblue commented on a change in pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#discussion_r463338478

##########

File path: site/docs/spark-structured-streaming.md

##########

@@ -0,0 +1,184 @@

+

+# Spark Structured Streaming

+

+Iceberg uses Apache Spark's DataSourceV2 API for data source and catalog implementations. Spark DSv2 is an evolving API

+with different levels of support in Spark versions.

+

+As of Spark 3.0, the new API on reading/writing table on table identifier is not yet added on streaming query.

+

+| Feature support | Spark 3.0| Spark 2.4 | Notes |

+|--------------------------------------------------|----------|------------|------------------------------------------------|

+| [DataFrame write](#writing-with-streaming-query) | ✔ | ✔ | |

+

+## Writing with streaming query

+

+To write values from streaming query to Iceberg table, use `DataStreamWriter`:

+

+```scala

+data.writeStream

+ .format("iceberg")

+ .outputMode("append")

+ .trigger(Trigger.ProcessingTime(1, TimeUnit.MINUTES))

+ .option("path", pathToTable)

+ .option("checkpointLocation", checkpointPath)

+ .start()

+```

+

+Iceberg supports below output modes:

+

+* append

+* complete

+

+The table should be created in prior to start the streaming query.

+

+## Maintenance

+

+Streaming queries can create new table versions quickly, which creates lots of table metadata to track those versions.

+Maintaining metadata by tuning the rate of commits, expiring old snapshots, and automatically cleaning up metadata files

+is highly recommended.

+

+### Tune the rate of commits

+

+Having high rate of commits would produce lots of data files, manifests, and snapshots which leads the table hard

+to maintain. We encourage having trigger interval 1 minute at minimum, and increase the interval if you encounter

+issues.

+

+### Retain recent metadata files in Hadoop catalog

Review comment:

How about `Metadata file cleanup`?

[GitHub] [iceberg] rdblue commented on pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

rdblue commented on pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#issuecomment-665306899

Thanks, @HeartSaVioR! The main thing is that I think that Spark Streaming should probably be on its own page so we can expand what is covered a bit more and have a section for what people need to know about tuning metadata creation and maintaining tables.

We should also note how to control the rate of commits, since we probably only recommend once-per-minute to reduce the problems you were hitting.
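
As a rough illustration of why the trigger interval matters (a back-of-the-envelope sketch, not code from this PR): if each trigger produces one table commit, the interval puts an upper bound on how many snapshots a streaming query can add per day. The `CommitRate` object and `commitsPerDay` helper below are hypothetical names used only for this arithmetic; they are not part of Spark or Iceberg.

```scala
import scala.concurrent.duration._

object CommitRate {
  // Hypothetical helper (not a Spark or Iceberg API): upper bound on commits per day,
  // assuming every trigger fires and produces exactly one table commit.
  def commitsPerDay(triggerInterval: FiniteDuration): Long = {
    require(triggerInterval >= 1.second, "interval must be at least one second")
    1.day.toSeconds / triggerInterval.toSeconds
  }

  def main(args: Array[String]): Unit = {
    // Compare a few candidate trigger intervals.
    Seq(10.seconds, 1.minute, 5.minutes).foreach { interval =>
      println(s"trigger every $interval: at most ${commitsPerDay(interval)} commits per day")
    }
  }
}
```

Under that assumption, a 1-minute trigger allows at most 1440 commits per day, while a 10-second trigger allows six times as many, which is the metadata growth the recommendation is meant to limit.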

[GitHub] [iceberg] HeartSaVioR commented on a change in pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

HeartSaVioR commented on a change in pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#discussion_r463354047

##########

File path: site/docs/spark-structured-streaming.md

##########

@@ -0,0 +1,184 @@

+

+# Spark Structured Streaming

+

+Iceberg uses Apache Spark's DataSourceV2 API for data source and catalog implementations. Spark DSv2 is an evolving API

+with different levels of support in Spark versions.

+

+As of Spark 3.0, the new API on reading/writing table on table identifier is not yet added on streaming query.

+

+| Feature support | Spark 3.0| Spark 2.4 | Notes |

+|--------------------------------------------------|----------|------------|------------------------------------------------|

+| [DataFrame write](#writing-with-streaming-query) | ✔ | ✔ | |

+

+## Writing with streaming query

+

+To write values from streaming query to Iceberg table, use `DataStreamWriter`:

+

+```scala

+data.writeStream

+ .format("iceberg")

+ .outputMode("append")

+ .trigger(Trigger.ProcessingTime(1, TimeUnit.MINUTES))

+ .option("path", pathToTable)

+ .option("checkpointLocation", checkpointPath)

+ .start()

+```

+

+Iceberg supports below output modes:

+

+* append

+* complete

+

+The table should be created in prior to start the streaming query.

+

+## Maintenance

+

+Streaming queries can create new table versions quickly, which creates lots of table metadata to track those versions.

+Maintaining metadata by tuning the rate of commits, expiring old snapshots, and automatically cleaning up metadata files

+is highly recommended.

+

+### Tune the rate of commits

+

+Having high rate of commits would produce lots of data files, manifests, and snapshots which leads the table hard

+to maintain. We encourage having trigger interval 1 minute at minimum, and increase the interval if you encounter

+issues.

Review comment:

It's already in the code example above: `.trigger(Trigger.ProcessingTime(1, TimeUnit.MINUTES))`. This is essential knowledge for structured streaming (and DStream), so I feel that linking to the structured streaming guide would be sufficient. Please let me know if you'd still like an example here as well.

[GitHub] [iceberg] rdblue commented on pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

rdblue commented on pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#issuecomment-670794616

@HeartSaVioR, sorry I haven't made it back to continue reviewing this yet. Thanks for your patience!

[GitHub] [iceberg] rdblue merged pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

rdblue merged pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261

[GitHub] [iceberg] rdblue commented on a change in pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

rdblue commented on a change in pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#discussion_r463337395

##########

File path: site/docs/spark-structured-streaming.md

##########

@@ -0,0 +1,184 @@

+

+# Spark Structured Streaming

+

+Iceberg uses Apache Spark's DataSourceV2 API for data source and catalog implementations. Spark DSv2 is an evolving API

+with different levels of support in Spark versions.

+

+As of Spark 3.0, the new API on reading/writing table on table identifier is not yet added on streaming query.

+

+| Feature support | Spark 3.0| Spark 2.4 | Notes |

+|--------------------------------------------------|----------|------------|------------------------------------------------|

+| [DataFrame write](#writing-with-streaming-query) | ✔ | ✔ | |

+

+## Writing with streaming query

+

+To write values from streaming query to Iceberg table, use `DataStreamWriter`:

+

+```scala

+data.writeStream

+ .format("iceberg")

+ .outputMode("append")

+ .trigger(Trigger.ProcessingTime(1, TimeUnit.MINUTES))

+ .option("path", pathToTable)

+ .option("checkpointLocation", checkpointPath)

+ .start()

+```

+

+Iceberg supports below output modes:

+

+* append

+* complete

+

+The table should be created in prior to start the streaming query.

+

+## Maintenance

+

+Streaming queries can create new table versions quickly, which creates lots of table metadata to track those versions.

+Maintaining metadata by tuning the rate of commits, expiring old snapshots, and automatically cleaning up metadata files

+is highly recommended.

+

+### Tune the rate of commits

+

+Having high rate of commits would produce lots of data files, manifests, and snapshots which leads the table hard

+to maintain. We encourage having trigger interval 1 minute at minimum, and increase the interval if you encounter

+issues.

+

+### Retain recent metadata files in Hadoop catalog

+

+If you are using HadoopCatalog, you may want to enable `write.metadata.delete-after-commit.enabled` in the table

Review comment:

This applies to all catalogs, not just Hadoop. I think you can simply remove that clause.

[GitHub] [iceberg] HeartSaVioR commented on pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

HeartSaVioR commented on pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#issuecomment-665656488

I've updated the doc: structured streaming support now has its own page. Please refer to the PR description to see how it will be rendered.

I'd like to add a tip on expiring snapshots without cleaning up data and manifest files, and removing orphaned files separately (@aokolnychyi shared this approach as a real-world workaround: https://github.com/apache/iceberg/pull/1244#issuecomment-665135343). However, the feature is not released yet and I'm not sure whether the site docs follow the released Iceberg version, so I'd like to confirm whether it's safe to add.

[GitHub] [iceberg] rdblue commented on pull request #1261: Spark: [DOC] guide about structured streaming sink for Iceberg

Posted by GitBox <gi...@apache.org>.

rdblue commented on pull request #1261:

URL: https://github.com/apache/iceberg/pull/1261#issuecomment-680379092

Thanks @HeartSaVioR! Nice work and I'm sorry I didn't have the time to put into this sooner.
