You are viewing a plain text version of this content. The canonical link for it is here.

Posted to commits@hudi.apache.org by GitBox <gi...@apache.org> on 2020/06/11 17:14:40 UTC

[GitHub] [hudi] vinothchandar opened a new pull request #1727: [WIP] [Review] refactor hudi-client

vinothchandar opened a new pull request #1727:

URL: https://github.com/apache/hudi/pull/1727

## *Tips*

- *Thank you very much for contributing to Apache Hudi.*

- *Please review https://hudi.apache.org/contributing.html before opening a pull request.*

## What is the purpose of the pull request

*(For example: This pull request adds quick-start document.)*

## Brief change log

*(for example:)*

- *Modify AnnotationLocation checkstyle rule in checkstyle.xml*

## Verify this pull request

*(Please pick either of the following options)*

This pull request is a trivial rework / code cleanup without any test coverage.

*(or)*

This pull request is already covered by existing tests, such as *(please describe tests)*.

(or)

This change added tests and can be verified as follows:

*(example:)*

- *Added integration tests for end-to-end.*

- *Added HoodieClientWriteTest to verify the change.*

- *Manually verified the change by running a job locally.*

## Committer checklist

- [ ] Has a corresponding JIRA in PR title & commit

- [ ] Commit message is descriptive of the change

- [ ] CI is green

- [ ] Necessary doc changes done or have another open PR

- [ ] For large changes, please consider breaking it into sub-tasks under an umbrella JIRA.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] vinothchandar commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

vinothchandar commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r438950191

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/config/HoodieIndexConfig.java

##########

@@ -36,7 +35,7 @@

public class HoodieIndexConfig extends DefaultHoodieConfig {

public static final String INDEX_TYPE_PROP = "hoodie.index.type";

- public static final String DEFAULT_INDEX_TYPE = HoodieIndex.IndexType.BLOOM.name();

+ public static final String DEFAULT_INDEX_TYPE = AbstractHoodieIndex.IndexType.BLOOM.name();

Review comment:

we can probably keep IndexType in `HoodieIndex` itself.. as a interface? anyways, I may have some detailed comments like these.. but we can defer them to final review

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/client/AbstractHoodieClient.java

##########

@@ -19,52 +19,53 @@

package org.apache.hudi.client;

import org.apache.hadoop.conf.Configuration;

-import org.apache.hudi.client.embedded.EmbeddedTimelineService;

-import org.apache.hudi.client.utils.ClientUtils;

+import org.apache.hadoop.fs.FileSystem;

+import org.apache.hudi.client.embedded.AbstractEmbeddedTimelineService;

+import org.apache.hudi.client.util.ClientUtils;

+import org.apache.hudi.common.HoodieEngineContext;

import org.apache.hudi.common.fs.FSUtils;

import org.apache.hudi.common.table.HoodieTableMetaClient;

import org.apache.hudi.common.util.Option;

import org.apache.hudi.config.HoodieWriteConfig;

+import org.slf4j.Logger;

Review comment:

we don't use sl4j currently.. there was a separate effort for this..

@leesf would know better.. for now, let's keep things in log4j

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/client/AbstractHoodieWriteClient.java

##########

@@ -0,0 +1,537 @@

+package org.apache.hudi.client;

+

+import com.codahale.metrics.Timer;

+import org.apache.hudi.avro.model.HoodieCleanMetadata;

+import org.apache.hudi.avro.model.HoodieCompactionPlan;

+import org.apache.hudi.avro.model.HoodieRestoreMetadata;

+import org.apache.hudi.avro.model.HoodieRollbackMetadata;

+import org.apache.hudi.client.embedded.AbstractEmbeddedTimelineService;

+import org.apache.hudi.common.HoodieEngineContext;

+import org.apache.hudi.common.model.*;

+import org.apache.hudi.common.table.HoodieTableMetaClient;

+import org.apache.hudi.common.table.timeline.HoodieActiveTimeline;

+import org.apache.hudi.common.table.timeline.HoodieInstant;

+import org.apache.hudi.common.table.timeline.HoodieTimeline;

+import org.apache.hudi.common.util.Option;

+import org.apache.hudi.common.util.ValidationUtils;

+import org.apache.hudi.config.HoodieCompactionConfig;

+import org.apache.hudi.config.HoodieWriteConfig;

+import org.apache.hudi.exception.*;

+import org.apache.hudi.index.AbstractHoodieIndex;

+import org.apache.hudi.metrics.HoodieMetrics;

+import org.apache.hudi.table.AbstractHoodieTable;

+import org.apache.hudi.table.HoodieTimelineArchiveLog;

+import org.apache.hudi.table.UserDefinedBulkInsertPartitioner;

+import org.apache.hudi.table.action.HoodieWriteMetadata;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.io.IOException;

+import java.text.ParseException;

+import java.util.Collection;

+import java.util.List;

+import java.util.Map;

+import java.util.stream.Collectors;

+

+/**

+ * @author xianghu.wang

+ * @time 2020/6/10

+ * @description

+ */

+public abstract class AbstractHoodieWriteClient<T extends HoodieRecordPayload<T>, I, K, O, P> extends AbstractHoodieClient {

Review comment:

please document what I,K,O,P stand for>

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] leesf closed pull request #1727: [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

leesf closed pull request #1727:

URL: https://github.com/apache/hudi/pull/1727

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] leesf commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

leesf commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r439718324

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/client/AbstractHoodieWriteClient.java

##########

@@ -0,0 +1,537 @@

+package org.apache.hudi.client;

+

+import com.codahale.metrics.Timer;

+import org.apache.hudi.avro.model.HoodieCleanMetadata;

+import org.apache.hudi.avro.model.HoodieCompactionPlan;

+import org.apache.hudi.avro.model.HoodieRestoreMetadata;

+import org.apache.hudi.avro.model.HoodieRollbackMetadata;

+import org.apache.hudi.client.embedded.AbstractEmbeddedTimelineService;

+import org.apache.hudi.common.HoodieEngineContext;

+import org.apache.hudi.common.model.*;

+import org.apache.hudi.common.table.HoodieTableMetaClient;

+import org.apache.hudi.common.table.timeline.HoodieActiveTimeline;

+import org.apache.hudi.common.table.timeline.HoodieInstant;

+import org.apache.hudi.common.table.timeline.HoodieTimeline;

+import org.apache.hudi.common.util.Option;

+import org.apache.hudi.common.util.ValidationUtils;

+import org.apache.hudi.config.HoodieCompactionConfig;

+import org.apache.hudi.config.HoodieWriteConfig;

+import org.apache.hudi.exception.*;

+import org.apache.hudi.index.AbstractHoodieIndex;

+import org.apache.hudi.metrics.HoodieMetrics;

+import org.apache.hudi.table.AbstractHoodieTable;

+import org.apache.hudi.table.HoodieTimelineArchiveLog;

+import org.apache.hudi.table.UserDefinedBulkInsertPartitioner;

+import org.apache.hudi.table.action.HoodieWriteMetadata;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.io.IOException;

+import java.text.ParseException;

+import java.util.Collection;

+import java.util.List;

+import java.util.Map;

+import java.util.stream.Collectors;

+

+/**

+ * @author xianghu.wang

+ * @time 2020/6/10

+ * @description

Review comment:

remove the section please.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] leesf commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

leesf commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r439719359

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/client/AbstractHoodieWriteClient.java

##########

@@ -0,0 +1,537 @@

+package org.apache.hudi.client;

+

+import com.codahale.metrics.Timer;

+import org.apache.hudi.avro.model.HoodieCleanMetadata;

+import org.apache.hudi.avro.model.HoodieCompactionPlan;

+import org.apache.hudi.avro.model.HoodieRestoreMetadata;

+import org.apache.hudi.avro.model.HoodieRollbackMetadata;

+import org.apache.hudi.client.embedded.AbstractEmbeddedTimelineService;

+import org.apache.hudi.common.HoodieEngineContext;

+import org.apache.hudi.common.model.*;

+import org.apache.hudi.common.table.HoodieTableMetaClient;

+import org.apache.hudi.common.table.timeline.HoodieActiveTimeline;

+import org.apache.hudi.common.table.timeline.HoodieInstant;

+import org.apache.hudi.common.table.timeline.HoodieTimeline;

+import org.apache.hudi.common.util.Option;

+import org.apache.hudi.common.util.ValidationUtils;

+import org.apache.hudi.config.HoodieCompactionConfig;

+import org.apache.hudi.config.HoodieWriteConfig;

+import org.apache.hudi.exception.*;

+import org.apache.hudi.index.AbstractHoodieIndex;

+import org.apache.hudi.metrics.HoodieMetrics;

+import org.apache.hudi.table.AbstractHoodieTable;

+import org.apache.hudi.table.HoodieTimelineArchiveLog;

+import org.apache.hudi.table.UserDefinedBulkInsertPartitioner;

+import org.apache.hudi.table.action.HoodieWriteMetadata;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.io.IOException;

+import java.text.ParseException;

+import java.util.Collection;

+import java.util.List;

+import java.util.Map;

+import java.util.stream.Collectors;

+

+/**

+ * @author xianghu.wang

+ * @time 2020/6/10

+ * @description

+ */

+public abstract class AbstractHoodieWriteClient<T extends HoodieRecordPayload<T>, I, K, O, P> extends AbstractHoodieClient {

+

+ private static final Logger LOG = LoggerFactory.getLogger(AbstractHoodieWriteClient.class);

+ private static final long serialVersionUID = 1L;

+ private final transient HoodieMetrics metrics;

+ private final transient AbstractHoodieIndex<T, I, K, O, P> index;

+ private final transient AbstractHoodieTable<T, I, K, O, P> table;

+ private transient Timer.Context writeContext = null;

+ private transient WriteOperationType operationType;

+

+ private static final String LOOKUP_STR = "lookup";

+ private final boolean rollbackPending;

+ private transient Timer.Context compactionTimer;

+

+

+ public void setOperationType(WriteOperationType operationType) {

+ this.operationType = operationType;

+ }

+

+ public WriteOperationType getOperationType() {

+ return this.operationType;

+ }

+

+ public AbstractHoodieWriteClient(HoodieEngineContext context, AbstractHoodieIndex<T, I, K, O, P> index,

+ AbstractHoodieTable<T, I, K, O, P> table,HoodieWriteConfig clientConfig) {

+ this(context, index, table,clientConfig, Option.empty());

+ }

+

+ protected AbstractHoodieWriteClient(HoodieEngineContext context, AbstractHoodieIndex<T, I, K, O, P> index,

+ AbstractHoodieTable<T, I, K, O, P> table,HoodieWriteConfig clientConfig,

+ Option<AbstractEmbeddedTimelineService> timelineServer) {

+ this(context, index, table,clientConfig, timelineServer, false);

+ }

+

+ public AbstractHoodieWriteClient(HoodieEngineContext context, AbstractHoodieIndex<T, I, K, O, P> index,AbstractHoodieTable<T, I, K, O, P> table,

+ HoodieWriteConfig clientConfig, Option<AbstractEmbeddedTimelineService> timelineServer, boolean rollbackPending) {

+ super(context, clientConfig, timelineServer);

+ this.index = index;

+ this.table = table;

+ this.metrics = new HoodieMetrics(config, config.getTableName());

+ this.rollbackPending = rollbackPending;

+ }

+

+ public abstract I filterExists(I hoodieRecords);

+

+ public abstract O upsert(I records, final String instantTime);

+

+ public abstract O upsertPreppedRecords(I preppedRecords, final String instantTime);

+

+ public abstract O insert(I records, final String instantTime);

+

+ public abstract O insertPreppedRecords(I preppedRecords, final String instantTime);

+

+ public O bulkInsert(I records, final String instantTime) {

+ return bulkInsert(records, instantTime, Option.empty());

+ }

+

+ public abstract O bulkInsert(I records, final String instantTime,

+ Option<UserDefinedBulkInsertPartitioner<T,I>> bulkInsertPartitioner);

+

+ public abstract O bulkInsertPreppedRecords(I preppedRecords, final String instantTime,

+ Option<UserDefinedBulkInsertPartitioner<T,I>> bulkInsertPartitioner);

+

+ public abstract O delete(K keys, final String instantTime);

+

+ /**

+ * Common method containing steps to be performed after write (upsert/insert/..) operations including auto-commit.

+ * @param result Commit Action Result

+ * @param instantTime Instant Time

+ * @param hoodieTable Hoodie Table

+ * @return Write Status

+ */

+ private O postWrite(HoodieWriteMetadata<O> result, String instantTime, AbstractHoodieTable<T,I,K,O,P> hoodieTable) {

+ if (result.getIndexLookupDuration().isPresent()) {

+ metrics.updateIndexMetrics(getOperationType().name(), result.getIndexUpdateDuration().get().toMillis());

+ }

+ if (result.isCommitted()) {

+ // Perform post commit operations.

+ if (result.getFinalizeDuration().isPresent()) {

+ metrics.updateFinalizeWriteMetrics(result.getFinalizeDuration().get().toMillis(),

+ result.getWriteStats().get().size());

+ }

+

+ postCommit(result.getCommitMetadata().get(), instantTime, Option.empty());

+

+ emitCommitMetrics(instantTime, result.getCommitMetadata().get(),

+ hoodieTable.getMetaClient().getCommitActionType());

+ }

+ return result.getWriteStatuses();

+ }

+

+ protected void postCommit(HoodieCommitMetadata metadata, String instantTime,

+ Option<Map<String, String>> extraMetadata) {

+ try {

+ // Do an inline compaction if enabled

+ if (config.isInlineCompaction()) {

+ metadata.addMetadata(HoodieCompactionConfig.INLINE_COMPACT_PROP, "true");

+ inlineCompact(extraMetadata);

+ } else {

+ metadata.addMetadata(HoodieCompactionConfig.INLINE_COMPACT_PROP, "false");

+ }

+ // We cannot have unbounded commit files. Archive commits if we have to archive

+ HoodieTimelineArchiveLog archiveLog = new HoodieTimelineArchiveLog(config, createMetaClient(true),table);

+ archiveLog.archiveIfRequired(hadoopConf);

+ if (config.isAutoClean()) {

+ // Call clean to cleanup if there is anything to cleanup after the commit,

+ LOG.info("Auto cleaning is enabled. Running cleaner now");

+ clean(instantTime);

+ } else {

+ LOG.info("Auto cleaning is not enabled. Not running cleaner now");

+ }

+ } catch (IOException ioe) {

+ throw new HoodieIOException(ioe.getMessage(), ioe);

+ }

+ }

+

+ /**

+ * Create a savepoint based on the latest commit action on the timeline.

+ *

+ * @param user - User creating the savepoint

+ * @param comment - Comment for the savepoint

+ */

+ public void savepoint(String user, String comment) {

+ if (table.getCompletedCommitsTimeline().empty()) {

+ throw new HoodieSavepointException("Could not savepoint. Commit timeline is empty");

+ }

+

+ String latestCommit = table.getCompletedCommitsTimeline().lastInstant().get().getTimestamp();

+ LOG.info("Savepointing latest commit " + latestCommit);

+ savepoint(latestCommit, user, comment);

+ }

+

+ /**

+ * Savepoint a specific commit instant time. Latest version of data files as of the passed in instantTime

+ * will be referenced in the savepoint and will never be cleaned. The savepointed commit will never be rolledback or archived.

+ * <p>

+ * This gives an option to rollback the state to the savepoint anytime. Savepoint needs to be manually created and

+ * deleted.

+ * <p>

+ * Savepoint should be on a commit that could not have been cleaned.

+ *

+ * @param instantTime - commit that should be savepointed

+ * @param user - User creating the savepoint

+ * @param comment - Comment for the savepoint

+ */

+ public void savepoint(String instantTime, String user, String comment) {

+ table.savepoint(context, instantTime, user, comment);

+ }

+

+ /**

+ * Delete a savepoint that was created. Once the savepoint is deleted, the commit can be rolledback and cleaner may

+ * clean up data files.

+ *

+ * @param savepointTime - delete the savepoint

+ * @return true if the savepoint was deleted successfully

+ */

+ public abstract void deleteSavepoint(String savepointTime);

+

+ /**

+ * Restore the data to the savepoint.

+ *

+ * WARNING: This rolls back recent commits and deleted data files and also pending compactions after savepoint time.

+ * Queries accessing the files will mostly fail. This is expected to be a manual operation and no concurrent write or

+ * compaction is expected to be running

+ *

+ * @param savepointTime - savepoint time to rollback to

+ * @return true if the savepoint was restored to successfully

+ */

+ public abstract void restoreToSavepoint(String savepointTime);

+

+ /**

+ * Rollback the inflight record changes with the given commit time.

+ *

+ * @param commitInstantTime Instant time of the commit

+ * @throws HoodieRollbackException if rollback cannot be performed successfully

+ */

+ public boolean rollback(final String commitInstantTime) throws HoodieRollbackException {

+ LOG.info("Begin rollback of instant " + commitInstantTime);

+ final String rollbackInstantTime = HoodieActiveTimeline.createNewInstantTime();

+ final Timer.Context timerContext = this.metrics.getRollbackCtx();

+ try {

+ Option<HoodieInstant> commitInstantOpt = Option.fromJavaOptional(table.getActiveTimeline().getCommitsTimeline().getInstants()

+ .filter(instant -> HoodieActiveTimeline.EQUALS.test(instant.getTimestamp(), commitInstantTime))

+ .findFirst());

+ if (commitInstantOpt.isPresent()) {

+ HoodieRollbackMetadata rollbackMetadata = table.rollback(context, rollbackInstantTime, commitInstantOpt.get(), true);

+ if (timerContext != null) {

+ long durationInMs = metrics.getDurationInMs(timerContext.stop());

+ metrics.updateRollbackMetrics(durationInMs, rollbackMetadata.getTotalFilesDeleted());

+ }

+ return true;

+ } else {

+ LOG.warn("Cannot find instant " + commitInstantTime + " in the timeline, for rollback");

+ return false;

+ }

+ } catch (Exception e) {

+ throw new HoodieRollbackException("Failed to rollback " + config.getBasePath() + " commits " + commitInstantTime, e);

+ }

+ }

+

+

+ /**

+ * NOTE : This action requires all writers (ingest and compact) to a table to be stopped before proceeding. Revert

+ * the (inflight/committed) record changes for all commits after the provided instant time.

+ *

+ * @param instantTime Instant time to which restoration is requested

+ */

+ public HoodieRestoreMetadata restoreToInstant(final String instantTime) throws HoodieRestoreException {

+ LOG.info("Begin restore to instant " + instantTime);

+ final String restoreInstantTime = HoodieActiveTimeline.createNewInstantTime();

+ Timer.Context timerContext = metrics.getRollbackCtx();

+ try {

+ HoodieRestoreMetadata restoreMetadata = table.restore(context, restoreInstantTime, instantTime);

+ if (timerContext != null) {

+ final long durationInMs = metrics.getDurationInMs(timerContext.stop());

+ final long totalFilesDeleted = restoreMetadata.getHoodieRestoreMetadata().values().stream()

+ .flatMap(Collection::stream)

+ .mapToLong(HoodieRollbackMetadata::getTotalFilesDeleted)

+ .sum();

+ metrics.updateRollbackMetrics(durationInMs, totalFilesDeleted);

+ }

+ return restoreMetadata;

+ } catch (Exception e) {

+ throw new HoodieRestoreException("Failed to restore to " + instantTime, e);

+ }

+ }

+

+ /**

+ * Performs a compaction operation on a table, serially before or after an insert/upsert action.

+ */

+ private Option<String> inlineCompact(Option<Map<String, String>> extraMetadata) {

+ Option<String> compactionInstantTimeOpt = scheduleCompaction(extraMetadata);

+ compactionInstantTimeOpt.ifPresent(compactionInstantTime -> {

+ // inline compaction should auto commit as the user is never given control

+ compact(compactionInstantTime, true);

+ });

+ return compactionInstantTimeOpt;

+ }

+ /**

+ * Ensures compaction instant is in expected state and performs Compaction for the workload stored in instant-time.

+ *

+ * @param compactionInstantTime Compaction Instant Time

+ * @return RDD of Write Status

+ */

+ private O compact(String compactionInstantTime, boolean shouldComplete) {

Review comment:

I think we would change it to public for users to call the API directly

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] leesf commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

leesf commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r439720680

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/metrics/JmxReporterServer.java

##########

@@ -18,18 +18,16 @@

package org.apache.hudi.metrics;

+import com.codahale.metrics.MetricRegistry;

+import com.codahale.metrics.jmx.JmxReporter;

import org.apache.hudi.common.util.StringUtils;

import org.apache.hudi.common.util.ValidationUtils;

import org.apache.hudi.exception.HoodieException;

-import com.codahale.metrics.MetricRegistry;

-import com.codahale.metrics.jmx.JmxReporter;

-

Review comment:

is the import reordered by idea?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] Mathieu1124 edited a comment on pull request #1727: [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

Mathieu1124 edited a comment on pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#issuecomment-658150685

refactor is finished, review goes to https://github.com/apache/hudi/pull/1827

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] leesf commented on pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

leesf commented on pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#issuecomment-656126156

@vinothchandar @smarthi @vinothchandar This PR is ready for review, please take a look when free.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] leesf commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

leesf commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r439720773

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/metrics/datadog/DatadogReporter.java

##########

@@ -18,22 +18,13 @@

package org.apache.hudi.metrics.datadog;

-import org.apache.hudi.common.util.Option;

-import org.apache.hudi.common.util.ValidationUtils;

-

-import com.codahale.metrics.Clock;

-import com.codahale.metrics.Counter;

-import com.codahale.metrics.Gauge;

-import com.codahale.metrics.Histogram;

-import com.codahale.metrics.Meter;

-import com.codahale.metrics.MetricFilter;

-import com.codahale.metrics.MetricRegistry;

-import com.codahale.metrics.ScheduledReporter;

-import com.codahale.metrics.Timer;

+import com.codahale.metrics.*;

Review comment:

ditto

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] leesf commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

leesf commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r439721033

##########

File path: hudi-client/hudi-client-spark/src/main/java/org/apache/hudi/common/HoodieSparkEngineContext.java

##########

@@ -0,0 +1,34 @@

+package org.apache.hudi.common;

+

+import org.apache.hudi.common.config.SerializableConfiguration;

+import org.apache.spark.SparkConf;

+import org.apache.spark.api.java.JavaSparkContext;

+

+/**

+ * @author xianghu.wang

+ * @time 2020/6/10

+ * @description

+ */

Review comment:

ditto

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] leesf commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

leesf commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r444761321

##########

File path: hudi-client/hudi-client-spark/src/main/java/org/apache/hudi/table/HoodieSparkTableFactory.java

##########

@@ -0,0 +1,56 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.table;

+

+import org.apache.hudi.common.table.HoodieTableMetaClient;

+import org.apache.hudi.common.table.timeline.versioning.TimelineLayoutVersion;

+import org.apache.hudi.common.util.Option;

+import org.apache.hudi.config.HoodieWriteConfig;

+import org.apache.hudi.exception.HoodieException;

+

+import org.apache.hadoop.conf.Configuration;

+

+/**

+ * A factory to create different type tables from config.

+ */

+public class HoodieSparkTableFactory {

+ public static BaseHoodieTable create(HoodieWriteConfig config, Configuration hadoopConf) {

+ HoodieTableMetaClient metaClient = new HoodieTableMetaClient(

+ hadoopConf,

+ config.getBasePath(),

+ true,

+ config.getConsistencyGuardConfig(),

+ Option.of(new TimelineLayoutVersion(config.getTimelineLayoutVersion()))

+ );

+ return create(metaClient, config, hadoopConf);

+ }

+

+ public static BaseHoodieTable create(HoodieTableMetaClient metaClient, HoodieWriteConfig config, Configuration hadoopConf) {

+ switch (metaClient.getTableType()) {

+ case COPY_ON_WRITE:

+ return new HoodieSparkCopyOnWriteTable<>(config, hadoopConf, metaClient);

+ case MERGE_ON_READ:

+ // TODO

+ return null;

Review comment:

also this code snippet would be merge with `HoodieSparkTable#create` ?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] leesf commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

leesf commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r443962715

##########

File path: hudi-client/hudi-client-spark/src/main/java/org/apache/hudi/client/HoodieSparkWriteClient.java

##########

@@ -481,6 +585,11 @@ public void close() {

super.close();

}

+ @Override

+ public void startEmbeddedServerView() {

+

+ }

Review comment:

missing implement and would share with SparkCompactionAdminClient.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#issuecomment-643021903

> We may have to coordinate with the bootstrap pr bit more on conflicts/rebasng, so that either of your life is not hell :)

will keep an eye on the bootstrap pr, thanks for reminding :)

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] leesf commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

leesf commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r439718342

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/client/AbstractHoodieWriteClient.java

##########

@@ -0,0 +1,537 @@

+package org.apache.hudi.client;

+

+import com.codahale.metrics.Timer;

+import org.apache.hudi.avro.model.HoodieCleanMetadata;

+import org.apache.hudi.avro.model.HoodieCompactionPlan;

+import org.apache.hudi.avro.model.HoodieRestoreMetadata;

+import org.apache.hudi.avro.model.HoodieRollbackMetadata;

+import org.apache.hudi.client.embedded.AbstractEmbeddedTimelineService;

+import org.apache.hudi.common.HoodieEngineContext;

+import org.apache.hudi.common.model.*;

+import org.apache.hudi.common.table.HoodieTableMetaClient;

+import org.apache.hudi.common.table.timeline.HoodieActiveTimeline;

+import org.apache.hudi.common.table.timeline.HoodieInstant;

+import org.apache.hudi.common.table.timeline.HoodieTimeline;

+import org.apache.hudi.common.util.Option;

+import org.apache.hudi.common.util.ValidationUtils;

+import org.apache.hudi.config.HoodieCompactionConfig;

+import org.apache.hudi.config.HoodieWriteConfig;

+import org.apache.hudi.exception.*;

Review comment:

please avoid using *

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r439159813

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/client/AbstractHoodieClient.java

##########

@@ -19,52 +19,53 @@

package org.apache.hudi.client;

import org.apache.hadoop.conf.Configuration;

-import org.apache.hudi.client.embedded.EmbeddedTimelineService;

-import org.apache.hudi.client.utils.ClientUtils;

+import org.apache.hadoop.fs.FileSystem;

+import org.apache.hudi.client.embedded.AbstractEmbeddedTimelineService;

+import org.apache.hudi.client.util.ClientUtils;

+import org.apache.hudi.common.HoodieEngineContext;

import org.apache.hudi.common.fs.FSUtils;

import org.apache.hudi.common.table.HoodieTableMetaClient;

import org.apache.hudi.common.util.Option;

import org.apache.hudi.config.HoodieWriteConfig;

+import org.slf4j.Logger;

Review comment:

Hi @vinothchandar, Thanks for feedback!

yes, HoodieEngineContext is thin, It holds only common things, while spark related goes to HoodieSparkEngineContext, flink related goes to HoodieFlinkEngineContext... which both extends HoodieEngineContext .

As it is already huge, We don't want to make too many changes. So we made no API/functionality changes for Spark RDD client, just abstracted it. BTW, I have verified it in flink engine before(replace RDD with List), it is doable.

I'll roll back the log with log4j.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r439733435

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/metrics/JmxReporterServer.java

##########

@@ -18,18 +18,16 @@

package org.apache.hudi.metrics;

+import com.codahale.metrics.MetricRegistry;

+import com.codahale.metrics.jmx.JmxReporter;

import org.apache.hudi.common.util.StringUtils;

import org.apache.hudi.common.util.ValidationUtils;

import org.apache.hudi.exception.HoodieException;

-import com.codahale.metrics.MetricRegistry;

-import com.codahale.metrics.jmx.JmxReporter;

-

Review comment:

> is the import reordered by idea? I found some files just change the import order, would we keep the same as before?

yes, idea ordered it. I will rollback.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] leesf commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

leesf commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r444763429

##########

File path: hudi-client/hudi-client-spark/src/main/java/org/apache/hudi/table/action/commit/WriteHelper.java

##########

@@ -18,27 +18,32 @@

package org.apache.hudi.table.action.commit;

+import org.apache.hudi.client.WriteStatus;

+import org.apache.hudi.common.HoodieSparkEngineContext;

import org.apache.hudi.common.model.HoodieKey;

import org.apache.hudi.common.model.HoodieRecord;

import org.apache.hudi.common.model.HoodieRecordPayload;

+import org.apache.hudi.common.util.Option;

+import org.apache.hudi.common.util.collection.Pair;

import org.apache.hudi.exception.HoodieUpsertException;

-import org.apache.hudi.index.HoodieIndex;

-import org.apache.hudi.table.HoodieTable;

-

+import org.apache.hudi.index.BaseHoodieIndex;

+import org.apache.hudi.table.BaseHoodieTable;

import org.apache.hudi.table.action.HoodieWriteMetadata;

+

+import org.apache.spark.api.java.JavaPairRDD;

import org.apache.spark.api.java.JavaRDD;

-import org.apache.spark.api.java.JavaSparkContext;

import java.time.Duration;

import java.time.Instant;

+

import scala.Tuple2;

public class WriteHelper<T extends HoodieRecordPayload<T>> {

- public static <T extends HoodieRecordPayload<T>> HoodieWriteMetadata write(String instantTime,

- JavaRDD<HoodieRecord<T>> inputRecordsRDD, JavaSparkContext jsc,

- HoodieTable<T> table, boolean shouldCombine,

- int shuffleParallelism, CommitActionExecutor<T> executor, boolean performTagging) {

+ public static <T extends HoodieRecordPayload<T>> HoodieWriteMetadata<JavaRDD<WriteStatus>> write(String instantTime,

+ JavaRDD<HoodieRecord<T>> inputRecordsRDD, HoodieSparkEngineContext context,

+ BaseHoodieTable<T, JavaRDD<HoodieRecord<T>>, JavaRDD<HoodieKey>, JavaRDD<WriteStatus>, JavaPairRDD<HoodieKey, Option<Pair<String, String>>>> table, boolean shouldCombine,

+ int shuffleParallelism, SparkCommitActionExecutor<T> executor, boolean performTagging) {

Review comment:

should also refactor the WriteHelper

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] yanghua commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

yanghua commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r439809413

##########

File path: hudi-client/hudi-client-flink/pom.xml

##########

@@ -0,0 +1,18 @@

+<?xml version="1.0" encoding="UTF-8"?>

+

Review comment:

ditto

##########

File path: hudi-client/hudi-client-common/pom.xml

##########

@@ -0,0 +1,18 @@

+<?xml version="1.0" encoding="UTF-8"?>

+

Review comment:

Missing Apache License header.

##########

File path: hudi-client/hudi-client-spark/pom.xml

##########

@@ -0,0 +1,37 @@

+<?xml version="1.0" encoding="UTF-8"?>

+

Review comment:

ditto

##########

File path: hudi-client/hudi-client-java/pom.xml

##########

@@ -0,0 +1,17 @@

+<?xml version="1.0" encoding="UTF-8"?>

+

Review comment:

ditto

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] leesf commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

leesf commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r439720808

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/table/AbstractWorkloadProfile.java

##########

@@ -18,81 +18,45 @@

package org.apache.hudi.table;

-import org.apache.hudi.common.model.HoodieRecord;

-import org.apache.hudi.common.model.HoodieRecordLocation;

import org.apache.hudi.common.model.HoodieRecordPayload;

-import org.apache.hudi.common.util.Option;

-

-import org.apache.spark.api.java.JavaRDD;

import java.io.Serializable;

import java.util.HashMap;

-import java.util.Map;

import java.util.Set;

-import scala.Tuple2;

-

/**

* Information about incoming records for upsert/insert obtained either via sampling or introspecting the data fully.

* <p>

* TODO(vc): Think about obtaining this directly from index.tagLocation

*/

-public class WorkloadProfile<T extends HoodieRecordPayload> implements Serializable {

+public abstract class AbstractWorkloadProfile<I> implements Serializable {

/**

* Input workload.

*/

- private final JavaRDD<HoodieRecord<T>> taggedRecords;

+ public final I taggedRecords;

Review comment:

protected

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r439732647

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/client/AbstractHoodieWriteClient.java

##########

@@ -0,0 +1,537 @@

+package org.apache.hudi.client;

+

+import com.codahale.metrics.Timer;

+import org.apache.hudi.avro.model.HoodieCleanMetadata;

+import org.apache.hudi.avro.model.HoodieCompactionPlan;

+import org.apache.hudi.avro.model.HoodieRestoreMetadata;

+import org.apache.hudi.avro.model.HoodieRollbackMetadata;

+import org.apache.hudi.client.embedded.AbstractEmbeddedTimelineService;

+import org.apache.hudi.common.HoodieEngineContext;

+import org.apache.hudi.common.model.*;

+import org.apache.hudi.common.table.HoodieTableMetaClient;

+import org.apache.hudi.common.table.timeline.HoodieActiveTimeline;

+import org.apache.hudi.common.table.timeline.HoodieInstant;

+import org.apache.hudi.common.table.timeline.HoodieTimeline;

+import org.apache.hudi.common.util.Option;

+import org.apache.hudi.common.util.ValidationUtils;

+import org.apache.hudi.config.HoodieCompactionConfig;

+import org.apache.hudi.config.HoodieWriteConfig;

+import org.apache.hudi.exception.*;

+import org.apache.hudi.index.AbstractHoodieIndex;

+import org.apache.hudi.metrics.HoodieMetrics;

+import org.apache.hudi.table.AbstractHoodieTable;

+import org.apache.hudi.table.HoodieTimelineArchiveLog;

+import org.apache.hudi.table.UserDefinedBulkInsertPartitioner;

+import org.apache.hudi.table.action.HoodieWriteMetadata;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.io.IOException;

+import java.text.ParseException;

+import java.util.Collection;

+import java.util.List;

+import java.util.Map;

+import java.util.stream.Collectors;

+

+/**

+ * @author xianghu.wang

+ * @time 2020/6/10

+ * @description

+ */

+public abstract class AbstractHoodieWriteClient<T extends HoodieRecordPayload<T>, I, K, O, P> extends AbstractHoodieClient {

+

+ private static final Logger LOG = LoggerFactory.getLogger(AbstractHoodieWriteClient.class);

+ private static final long serialVersionUID = 1L;

+ private final transient HoodieMetrics metrics;

+ private final transient AbstractHoodieIndex<T, I, K, O, P> index;

+ private final transient AbstractHoodieTable<T, I, K, O, P> table;

+ private transient Timer.Context writeContext = null;

+ private transient WriteOperationType operationType;

+

+ private static final String LOOKUP_STR = "lookup";

+ private final boolean rollbackPending;

+ private transient Timer.Context compactionTimer;

+

+

+ public void setOperationType(WriteOperationType operationType) {

+ this.operationType = operationType;

+ }

+

+ public WriteOperationType getOperationType() {

+ return this.operationType;

+ }

+

+ public AbstractHoodieWriteClient(HoodieEngineContext context, AbstractHoodieIndex<T, I, K, O, P> index,

+ AbstractHoodieTable<T, I, K, O, P> table,HoodieWriteConfig clientConfig) {

+ this(context, index, table,clientConfig, Option.empty());

+ }

+

+ protected AbstractHoodieWriteClient(HoodieEngineContext context, AbstractHoodieIndex<T, I, K, O, P> index,

+ AbstractHoodieTable<T, I, K, O, P> table,HoodieWriteConfig clientConfig,

+ Option<AbstractEmbeddedTimelineService> timelineServer) {

+ this(context, index, table,clientConfig, timelineServer, false);

+ }

+

+ public AbstractHoodieWriteClient(HoodieEngineContext context, AbstractHoodieIndex<T, I, K, O, P> index,AbstractHoodieTable<T, I, K, O, P> table,

+ HoodieWriteConfig clientConfig, Option<AbstractEmbeddedTimelineService> timelineServer, boolean rollbackPending) {

+ super(context, clientConfig, timelineServer);

+ this.index = index;

+ this.table = table;

+ this.metrics = new HoodieMetrics(config, config.getTableName());

+ this.rollbackPending = rollbackPending;

+ }

+

+ public abstract I filterExists(I hoodieRecords);

+

+ public abstract O upsert(I records, final String instantTime);

+

+ public abstract O upsertPreppedRecords(I preppedRecords, final String instantTime);

+

+ public abstract O insert(I records, final String instantTime);

+

+ public abstract O insertPreppedRecords(I preppedRecords, final String instantTime);

Review comment:

> these are methods from HoodieWriteClient, and users would use HoodieWriteClient to upsert/insert records directly using the APIs, right now the HoodieWriteClient has been removed, so it breaks the compatibility.

@leesf Yes, it is not finished yet. I have noticed that HoodieWriteClient has been referenced in many places(eg hudi-cli,hudi-utilities...). When hudi-client module is ready, the other modules which rely on hudi-client should make appropriate changes to adapt to.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

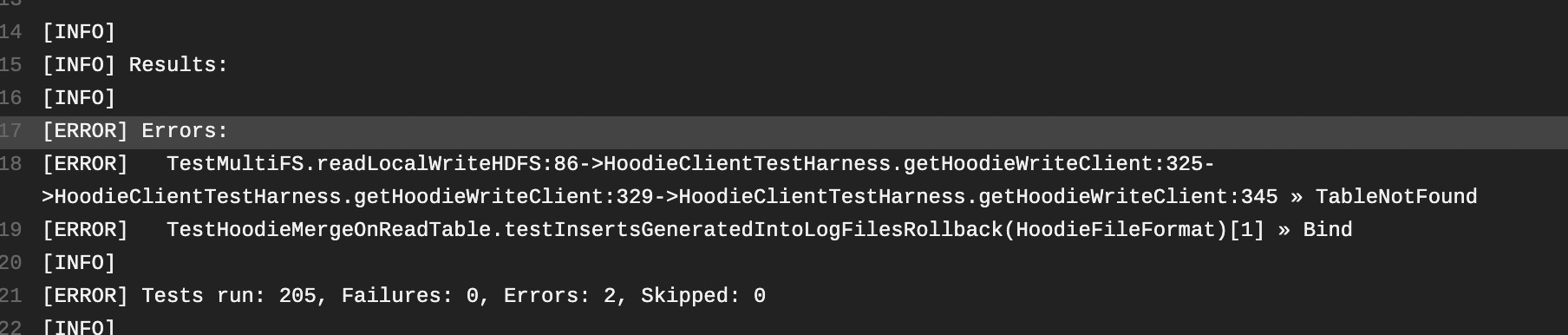

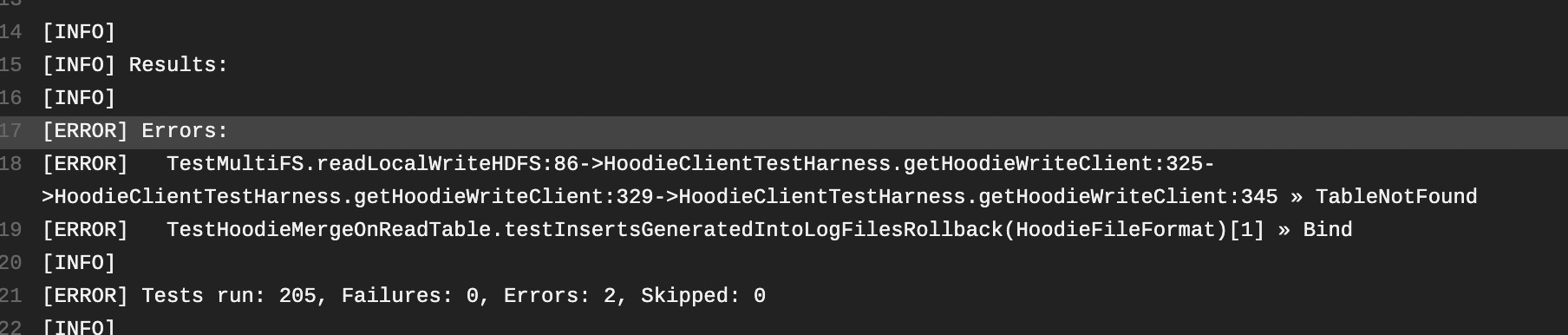

[GitHub] [hudi] Mathieu1124 commented on pull request #1727: [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

Mathieu1124 commented on pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#issuecomment-657409985

It is strange, Both these two unit tests run correctly in my local environment.

@yanghua @leesf would you please have a look at this :)

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] leesf commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

leesf commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r443962715

##########

File path: hudi-client/hudi-client-spark/src/main/java/org/apache/hudi/client/HoodieSparkWriteClient.java

##########

@@ -481,6 +585,11 @@ public void close() {

super.close();

}

+ @Override

+ public void startEmbeddedServerView() {

+

+ }

Review comment:

missing implement.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] leesf commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

leesf commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r444755524

##########

File path: hudi-client/hudi-client-spark/src/main/java/org/apache/hudi/table/HoodieSparkTableFactory.java

##########

@@ -0,0 +1,56 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.hudi.table;

+

+import org.apache.hudi.common.table.HoodieTableMetaClient;

+import org.apache.hudi.common.table.timeline.versioning.TimelineLayoutVersion;

+import org.apache.hudi.common.util.Option;

+import org.apache.hudi.config.HoodieWriteConfig;

+import org.apache.hudi.exception.HoodieException;

+

+import org.apache.hadoop.conf.Configuration;

+

+/**

+ * A factory to create different type tables from config.

+ */

+public class HoodieSparkTableFactory {

+ public static BaseHoodieTable create(HoodieWriteConfig config, Configuration hadoopConf) {

+ HoodieTableMetaClient metaClient = new HoodieTableMetaClient(

+ hadoopConf,

+ config.getBasePath(),

+ true,

+ config.getConsistencyGuardConfig(),

+ Option.of(new TimelineLayoutVersion(config.getTimelineLayoutVersion()))

+ );

+ return create(metaClient, config, hadoopConf);

+ }

+

+ public static BaseHoodieTable create(HoodieTableMetaClient metaClient, HoodieWriteConfig config, Configuration hadoopConf) {

+ switch (metaClient.getTableType()) {

+ case COPY_ON_WRITE:

+ return new HoodieSparkCopyOnWriteTable<>(config, hadoopConf, metaClient);

+ case MERGE_ON_READ:

+ // TODO

+ return null;

Review comment:

HoodieSparkMergeOnReadTable has been implemented, right?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] leesf commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

leesf commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r439719225

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/client/AbstractHoodieWriteClient.java

##########

@@ -0,0 +1,537 @@

+package org.apache.hudi.client;

+

+import com.codahale.metrics.Timer;

+import org.apache.hudi.avro.model.HoodieCleanMetadata;

+import org.apache.hudi.avro.model.HoodieCompactionPlan;

+import org.apache.hudi.avro.model.HoodieRestoreMetadata;

+import org.apache.hudi.avro.model.HoodieRollbackMetadata;

+import org.apache.hudi.client.embedded.AbstractEmbeddedTimelineService;

+import org.apache.hudi.common.HoodieEngineContext;

+import org.apache.hudi.common.model.*;

+import org.apache.hudi.common.table.HoodieTableMetaClient;

+import org.apache.hudi.common.table.timeline.HoodieActiveTimeline;

+import org.apache.hudi.common.table.timeline.HoodieInstant;

+import org.apache.hudi.common.table.timeline.HoodieTimeline;

+import org.apache.hudi.common.util.Option;

+import org.apache.hudi.common.util.ValidationUtils;

+import org.apache.hudi.config.HoodieCompactionConfig;

+import org.apache.hudi.config.HoodieWriteConfig;

+import org.apache.hudi.exception.*;

+import org.apache.hudi.index.AbstractHoodieIndex;

+import org.apache.hudi.metrics.HoodieMetrics;

+import org.apache.hudi.table.AbstractHoodieTable;

+import org.apache.hudi.table.HoodieTimelineArchiveLog;

+import org.apache.hudi.table.UserDefinedBulkInsertPartitioner;

+import org.apache.hudi.table.action.HoodieWriteMetadata;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.io.IOException;

+import java.text.ParseException;

+import java.util.Collection;

+import java.util.List;

+import java.util.Map;

+import java.util.stream.Collectors;

+

+/**

+ * @author xianghu.wang

+ * @time 2020/6/10

+ * @description

+ */

+public abstract class AbstractHoodieWriteClient<T extends HoodieRecordPayload<T>, I, K, O, P> extends AbstractHoodieClient {

+

+ private static final Logger LOG = LoggerFactory.getLogger(AbstractHoodieWriteClient.class);

+ private static final long serialVersionUID = 1L;

+ private final transient HoodieMetrics metrics;

+ private final transient AbstractHoodieIndex<T, I, K, O, P> index;

+ private final transient AbstractHoodieTable<T, I, K, O, P> table;

+ private transient Timer.Context writeContext = null;

+ private transient WriteOperationType operationType;

+

+ private static final String LOOKUP_STR = "lookup";

+ private final boolean rollbackPending;

+ private transient Timer.Context compactionTimer;

+

+

+ public void setOperationType(WriteOperationType operationType) {

+ this.operationType = operationType;

+ }

+

+ public WriteOperationType getOperationType() {

+ return this.operationType;

+ }

+

+ public AbstractHoodieWriteClient(HoodieEngineContext context, AbstractHoodieIndex<T, I, K, O, P> index,

+ AbstractHoodieTable<T, I, K, O, P> table,HoodieWriteConfig clientConfig) {

+ this(context, index, table,clientConfig, Option.empty());

+ }

+

+ protected AbstractHoodieWriteClient(HoodieEngineContext context, AbstractHoodieIndex<T, I, K, O, P> index,

+ AbstractHoodieTable<T, I, K, O, P> table,HoodieWriteConfig clientConfig,

+ Option<AbstractEmbeddedTimelineService> timelineServer) {

+ this(context, index, table,clientConfig, timelineServer, false);

+ }

+

+ public AbstractHoodieWriteClient(HoodieEngineContext context, AbstractHoodieIndex<T, I, K, O, P> index,AbstractHoodieTable<T, I, K, O, P> table,

+ HoodieWriteConfig clientConfig, Option<AbstractEmbeddedTimelineService> timelineServer, boolean rollbackPending) {

+ super(context, clientConfig, timelineServer);

+ this.index = index;

+ this.table = table;

+ this.metrics = new HoodieMetrics(config, config.getTableName());

+ this.rollbackPending = rollbackPending;

+ }

+

+ public abstract I filterExists(I hoodieRecords);

+

+ public abstract O upsert(I records, final String instantTime);

+

+ public abstract O upsertPreppedRecords(I preppedRecords, final String instantTime);

+

+ public abstract O insert(I records, final String instantTime);

+

+ public abstract O insertPreppedRecords(I preppedRecords, final String instantTime);

Review comment:

these are methods from HoodieWriteClient, and users would use HoodieWriteClient to upsert/insert records directly using the APIs, right now the HoodieWriteClient has been removed, so it breaks the compatibility.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r439827612

##########

File path: hudi-client/hudi-client-spark/pom.xml

##########

@@ -0,0 +1,37 @@

+<?xml version="1.0" encoding="UTF-8"?>

+

Review comment:

copy, @yanghua thanks for your review.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] vinothchandar commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

vinothchandar commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r438953612

##########

File path: hudi-client/hudi-client-spark/src/main/java/org/apache/hudi/client/SparkCompactionAdminClient.java

##########

@@ -0,0 +1,131 @@

+package org.apache.hudi.client;

+

+import org.apache.hudi.avro.model.HoodieCompactionPlan;

+import org.apache.hudi.client.embedded.SparkEmbeddedTimelineService;

+import org.apache.hudi.common.HoodieEngineContext;

+import org.apache.hudi.common.HoodieSparkEngineContext;

+import org.apache.hudi.common.model.CompactionOperation;

+import org.apache.hudi.common.model.HoodieLogFile;

+import org.apache.hudi.common.table.HoodieTableMetaClient;

+import org.apache.hudi.common.table.view.HoodieTableFileSystemView;

+import org.apache.hudi.common.util.Option;

+import org.apache.hudi.common.util.collection.Pair;

+import org.apache.hudi.exception.HoodieException;

+import org.apache.hudi.exception.HoodieIOException;

+import org.apache.spark.api.java.JavaSparkContext;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.io.IOException;

+import java.util.ArrayList;

+import java.util.List;

+import java.util.stream.Collectors;

+

+public class SparkCompactionAdminClient extends AbstractCompactionAdminClient {

+ private static final Logger LOG = LoggerFactory.getLogger(SparkCompactionAdminClient.class);

+

+ public SparkCompactionAdminClient(HoodieEngineContext context, String basePath) {

+ super(context, basePath);

+ }

+

+ @Override

+ public List<ValidationOpResult> validateCompactionPlan(HoodieTableMetaClient metaClient, String compactionInstant, int parallelism) throws IOException {

+ JavaSparkContext jsc = ((HoodieSparkEngineContext) context).getJavaSparkContext();

Review comment:

pull this into a helper like `HoodieSparkEngineContext.getSparkContext(engineCtx)` ?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] vinothchandar commented on pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

vinothchandar commented on pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#issuecomment-642829232

We may have to coordinate with the bootstrap pr bit more on conflicts/rebasng, so that either of your life is not hell :)

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] leesf commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

leesf commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r439719736

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/client/TaskContextSupplier.java

##########

@@ -0,0 +1,19 @@

+package org.apache.hudi.client;

+

+import java.io.Serializable;

+import java.util.function.Supplier;

+

+/**

+ * @author xianghu.wang

+ * @time 2020/6/10

+ * @description

+ */

Review comment:

ditoo

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r439160108

##########

File path: hudi-client/hudi-client-spark/src/main/java/org/apache/hudi/client/SparkCompactionAdminClient.java

##########

@@ -0,0 +1,131 @@

+package org.apache.hudi.client;

+

+import org.apache.hudi.avro.model.HoodieCompactionPlan;

+import org.apache.hudi.client.embedded.SparkEmbeddedTimelineService;

+import org.apache.hudi.common.HoodieEngineContext;

+import org.apache.hudi.common.HoodieSparkEngineContext;

+import org.apache.hudi.common.model.CompactionOperation;

+import org.apache.hudi.common.model.HoodieLogFile;

+import org.apache.hudi.common.table.HoodieTableMetaClient;

+import org.apache.hudi.common.table.view.HoodieTableFileSystemView;

+import org.apache.hudi.common.util.Option;

+import org.apache.hudi.common.util.collection.Pair;

+import org.apache.hudi.exception.HoodieException;

+import org.apache.hudi.exception.HoodieIOException;

+import org.apache.spark.api.java.JavaSparkContext;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.io.IOException;

+import java.util.ArrayList;

+import java.util.List;

+import java.util.stream.Collectors;

+

+public class SparkCompactionAdminClient extends AbstractCompactionAdminClient {

+ private static final Logger LOG = LoggerFactory.getLogger(SparkCompactionAdminClient.class);

+

+ public SparkCompactionAdminClient(HoodieEngineContext context, String basePath) {

+ super(context, basePath);

+ }

+

+ @Override

+ public List<ValidationOpResult> validateCompactionPlan(HoodieTableMetaClient metaClient, String compactionInstant, int parallelism) throws IOException {

+ JavaSparkContext jsc = ((HoodieSparkEngineContext) context).getJavaSparkContext();

Review comment:

> pull this into a helper like `HoodieSparkEngineContext.getSparkContext(engineCtx)` ?

will do

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] leesf commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

leesf commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r439720012

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/client/embedded/AbstractEmbeddedTimelineService.java

##########

@@ -108,4 +77,5 @@ public void stop() {

LOG.info("Closed Timeline server");

}

}

+

Review comment:

remove empty line

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] leesf commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

leesf commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r444754297

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/index/BaseHoodieIndex.java

##########

@@ -18,90 +18,45 @@

package org.apache.hudi.index;

-import org.apache.hudi.client.WriteStatus;

+import org.apache.hudi.common.HoodieEngineContext;

import org.apache.hudi.common.model.FileSlice;

import org.apache.hudi.common.model.HoodieKey;

-import org.apache.hudi.common.model.HoodieRecord;

import org.apache.hudi.common.model.HoodieRecordPayload;

-import org.apache.hudi.common.util.Option;

-import org.apache.hudi.common.util.ReflectionUtils;

-import org.apache.hudi.common.util.StringUtils;

-import org.apache.hudi.common.util.collection.Pair;

import org.apache.hudi.config.HoodieWriteConfig;

import org.apache.hudi.exception.HoodieIndexException;

-import org.apache.hudi.index.bloom.HoodieBloomIndex;

-import org.apache.hudi.index.bloom.HoodieGlobalBloomIndex;

-import org.apache.hudi.index.hbase.HBaseIndex;

-import org.apache.hudi.index.simple.HoodieGlobalSimpleIndex;

-import org.apache.hudi.index.simple.HoodieSimpleIndex;

-import org.apache.hudi.table.HoodieTable;

-

-import org.apache.spark.api.java.JavaPairRDD;

-import org.apache.spark.api.java.JavaRDD;

-import org.apache.spark.api.java.JavaSparkContext;

-

-import java.io.Serializable;

+import org.apache.hudi.table.BaseHoodieTable;

/**

* Base class for different types of indexes to determine the mapping from uuid.

*/

-public abstract class HoodieIndex<T extends HoodieRecordPayload> implements Serializable {

-

+public abstract class BaseHoodieIndex<T extends HoodieRecordPayload<T>, I, K, O, P> {

Review comment:

please also describe I, K, O, P

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] leesf commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

leesf commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r443960252

##########

File path: hudi-client/hudi-client-common/src/main/java/org/apache/hudi/client/BaseHoodieClient.java

##########

@@ -18,53 +18,55 @@

package org.apache.hudi.client;

-import org.apache.hadoop.conf.Configuration;

-import org.apache.hudi.client.embedded.EmbeddedTimelineService;

+import org.apache.hudi.client.embedded.AbstractEmbeddedTimelineService;

import org.apache.hudi.client.utils.ClientUtils;

+import org.apache.hudi.common.HoodieEngineContext;

import org.apache.hudi.common.fs.FSUtils;

import org.apache.hudi.common.table.HoodieTableMetaClient;

import org.apache.hudi.common.util.Option;

import org.apache.hudi.config.HoodieWriteConfig;

+import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.log4j.LogManager;

import org.apache.log4j.Logger;

-import org.apache.spark.api.java.JavaSparkContext;

-import java.io.IOException;

import java.io.Serializable;

+import java.util.concurrent.locks.Lock;

+import java.util.concurrent.locks.ReentrantLock;

/**

* Abstract class taking care of holding common member variables (FileSystem, SparkContext, HoodieConfigs) Also, manages

* embedded timeline-server if enabled.

*/

Review comment:

please also modify the description.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] Mathieu1124 edited a comment on pull request #1727: [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

Mathieu1124 edited a comment on pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#issuecomment-657409985

It is strange, Both these two unit tests run correctly in my local environment.

@yanghua @leesf would you please take a look at this :)

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] wangxianghu commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

wangxianghu commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r444315593

##########

File path: hudi-client/hudi-client-spark/src/main/java/org/apache/hudi/client/HoodieSparkWriteClient.java

##########

@@ -481,6 +585,11 @@ public void close() {

super.close();

}

+ @Override

+ public void startEmbeddedServerView() {

+

+ }

Review comment:

> missing implement and would reuse code in SparkCompactionAdminClient.

done

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] leesf commented on a change in pull request #1727: [WIP] [Review] refactor hudi-client

Posted by GitBox <gi...@apache.org>.

leesf commented on a change in pull request #1727:

URL: https://github.com/apache/hudi/pull/1727#discussion_r439721071

##########

File path: hudi-client/hudi-client-spark/src/main/java/org/apache/hudi/io/AppendHandleFactory.java

##########

@@ -0,0 +1,28 @@

+package org.apache.hudi.io;

+

+import org.apache.hudi.client.SparkTaskContextSupplier;

+import org.apache.hudi.client.TaskContextSupplier;

+import org.apache.hudi.client.WriteStatus;

+import org.apache.hudi.common.model.HoodieKey;

+import org.apache.hudi.common.model.HoodieRecord;

+import org.apache.hudi.common.model.HoodieRecordPayload;

+import org.apache.hudi.common.util.Option;

+import org.apache.hudi.common.util.collection.Pair;

+import org.apache.hudi.config.HoodieWriteConfig;

+import org.apache.hudi.table.AbstractHoodieTable;

+import org.apache.spark.api.java.JavaPairRDD;

+import org.apache.spark.api.java.JavaRDD;

+

+/**

+ * @author xianghu.wang

+ * @time 2020/6/11

+ * @description

+ */

Review comment:

ditto

----------------------------------------------------------------

This is an automated message from the Apache Git Service.