Posted to issues@kylin.apache.org by GitBox <gi...@apache.org> on 2020/12/03 01:24:04 UTC

[GitHub] [kylin] zzcclp opened a new pull request #1495: KYLIN-4829 Support to use thread-level SparkSession to execute query

zzcclp opened a new pull request #1495:

URL: https://github.com/apache/kylin/pull/1495

## Proposed changes

Describe the big picture of your changes here to communicate to the maintainers why we should accept this pull request. If it fixes a bug or resolves a feature request, be sure to link to that issue.

## Types of changes

What types of changes does your code introduce to Kylin?

_Put an `x` in the boxes that apply_

- [ ] Bugfix (non-breaking change which fixes an issue)

- [ ] New feature (non-breaking change which adds functionality)

- [ ] Breaking change (fix or feature that would cause existing functionality to not work as expected)

- [ ] Documentation Update (if none of the other choices apply)

## Checklist

_Put an `x` in the boxes that apply. You can also fill these out after creating the PR. If you're unsure about any of them, don't hesitate to ask. We're here to help! This is simply a reminder of what we are going to look for before merging your code._

- [ ] I have created an issue on [Kylin's jira](https://issues.apache.org/jira/browse/KYLIN), and have described the bug/feature there in detail

- [ ] Commit messages in my PR start with the related jira ID, like "KYLIN-0000 Make Kylin project open-source"

- [ ] Compiling and unit tests pass locally with my changes

- [ ] I have added tests that prove my fix is effective or that my feature works

- [ ] If this change needs a documentation change, I will prepare another PR against the `document` branch

- [ ] Any dependent changes have been merged

## Further comments

If this is a relatively large or complex change, kick off the discussion at user@kylin or dev@kylin by explaining why you chose the solution you did and what alternatives you considered, etc...

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kylin] zzcclp commented on pull request #1495: KYLIN-4829 Support to use thread-level SparkSession to execute query

Posted by GitBox <gi...@apache.org>.

zzcclp commented on pull request #1495:

URL: https://github.com/apache/kylin/pull/1495#issuecomment-738628576

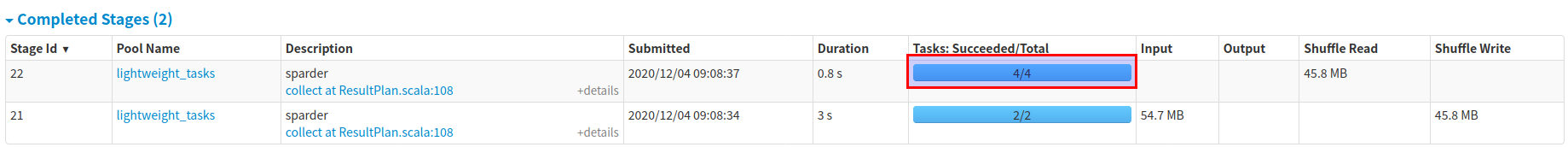

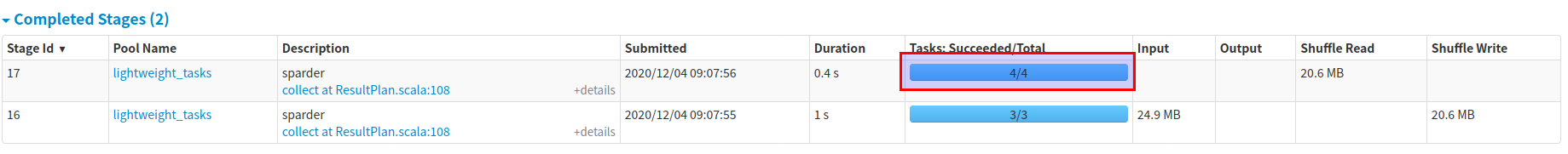

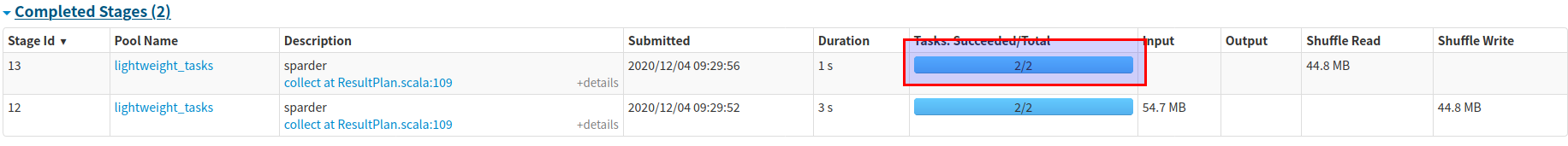

## The Results of Testing Manually

### Test Env

- Hadoop 2.7.0 on docker.

- Commit : [3b3786c5c](https://github.com/apache/kylin/commit/3b3786c5c9602838cd4abd0a6d40574550ec8622)

- Sparder Env :

spark.executor.cores=1

spark.executor.instances=4

spark.executor.memory=2G

spark.executor.memoryOverhead=1G

spark.sql.shuffle.partitions=4

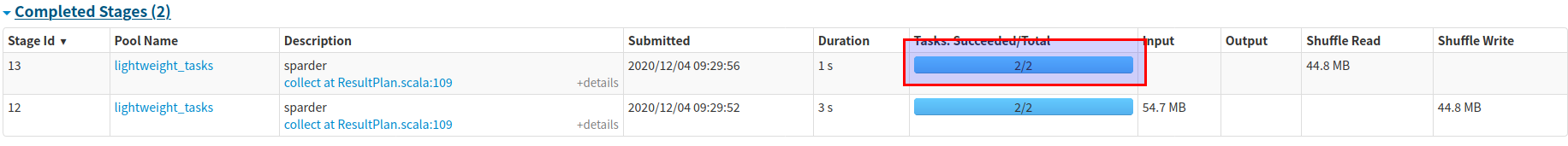

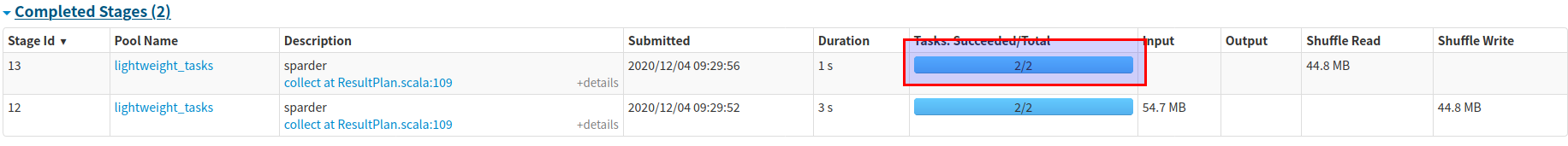

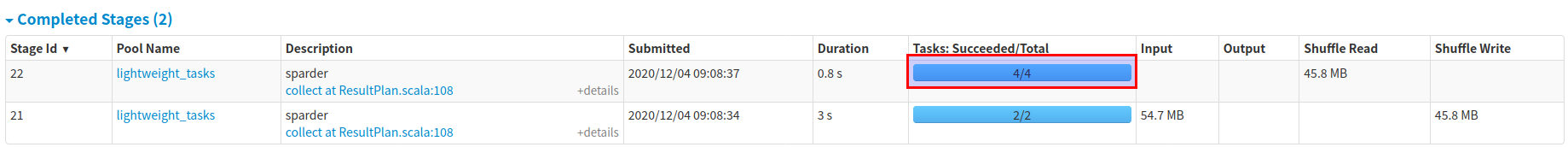

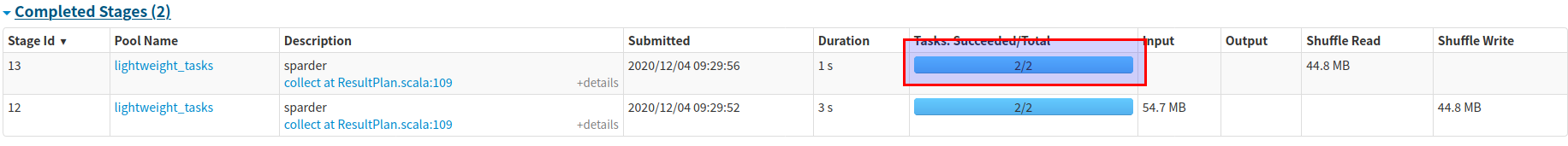

### Before this patch

The shuffle partition number of every query is 4, which equals the total number of cores.
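For reference, the total of 4 cores follows from the Sparder settings listed above. The sketch below is only an illustration under the assumption that the total is `spark.executor.cores` multiplied by `spark.executor.instances`; the class `TotalCoresSketch` and its method are hypothetical, and the real `SparderUtils.getTotalCore` may handle more cases (for example local mode or dynamic allocation).

```java
import java.util.Properties;

public class TotalCoresSketch {
    // Assumption: total cores = executor cores x executor instances, never below 1.
    public static int totalCores(Properties sparkConf) {
        int cores = Integer.parseInt(sparkConf.getProperty("spark.executor.cores", "1"));
        int instances = Integer.parseInt(sparkConf.getProperty("spark.executor.instances", "1"));
        return Math.max(cores * instances, 1);
    }

    public static void main(String[] args) {
        // Values taken from the "Sparder Env" list in this comment.
        Properties conf = new Properties();
        conf.setProperty("spark.executor.cores", "1");
        conf.setProperty("spark.executor.instances", "4");
        System.out.println("total cores = " + totalCores(conf));
    }
}
```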

### After this patch

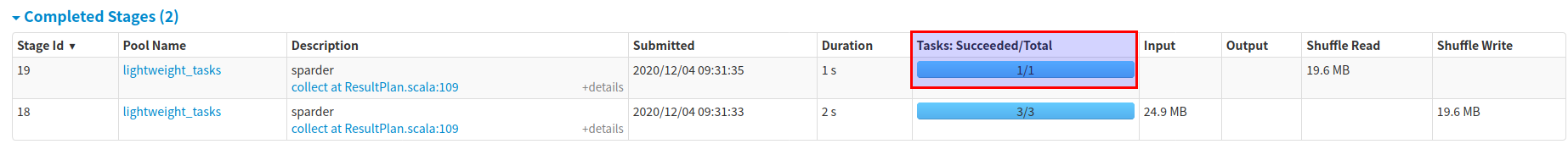

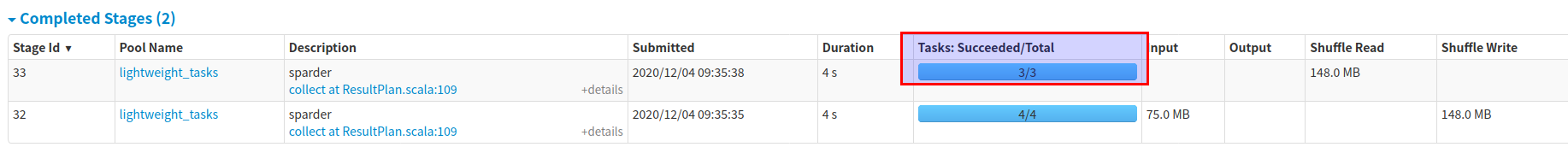

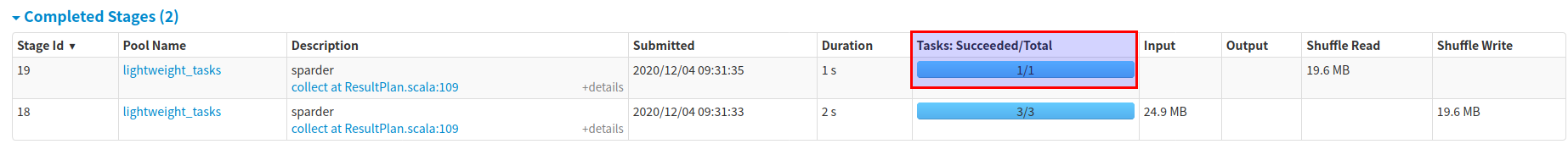

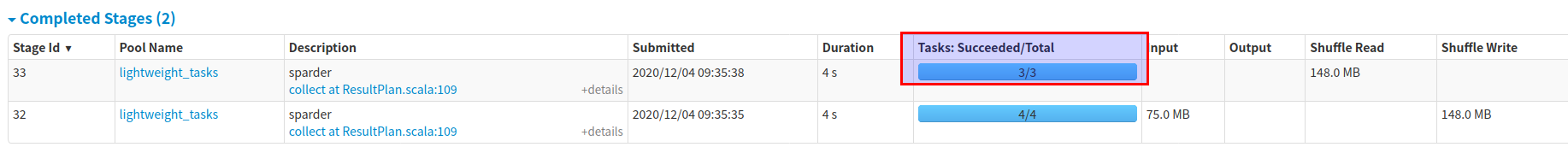

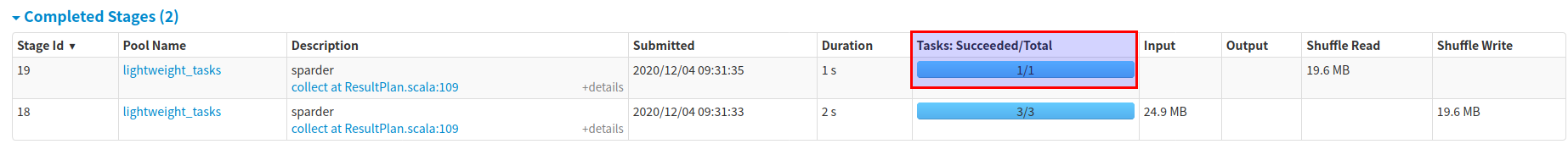

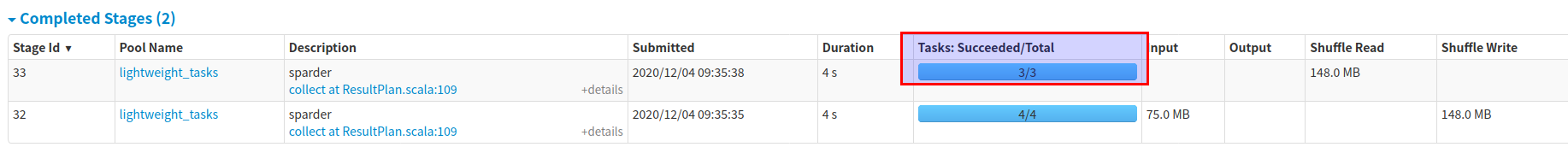

The shuffle partition number of each query is calculated according to the scanned bytes of each query:

----------------------------------------------------------------

[GitHub] [kylin] hit-lacus commented on a change in pull request #1495: KYLIN-4829 Support to use thread-level SparkSession to execute query

Posted by GitBox <gi...@apache.org>.

hit-lacus commented on a change in pull request #1495:

URL: https://github.com/apache/kylin/pull/1495#discussion_r538307174

##########

File path: kylin-spark-project/kylin-spark-common/src/main/scala/org/apache/spark/sql/execution/datasource/ResetShufflePartition.scala

##########

@@ -17,25 +17,26 @@

*/

package org.apache.spark.sql.execution.datasource

-import org.apache.kylin.common.{KylinConfig, QueryContext, QueryContextFacade}

+import org.apache.kylin.common.{KylinConfig, QueryContextFacade}

import org.apache.spark.internal.Logging

import org.apache.spark.sql.SparkSession

+import org.apache.spark.utils.SparderUtils

trait ResetShufflePartition extends Logging {

+ val PARTITION_SPLIT_BYTES: Long = KylinConfig.getInstanceFromEnv.getQueryPartitionSplitSizeMB * 1024 * 1024 // 64MB

def setShufflePartitions(bytes: Long, sparkSession: SparkSession): Unit = {

QueryContextFacade.current().addAndGetSourceScanBytes(bytes)

- val defaultParallelism = sparkSession.sparkContext.defaultParallelism

+ val defaultParallelism = SparderUtils.getTotalCore(sparkSession.sparkContext.getConf)

val kylinConfig = KylinConfig.getInstanceFromEnv

val partitionsNum = if (kylinConfig.getSparkSqlShufflePartitions != -1) {

kylinConfig.getSparkSqlShufflePartitions

} else {

- Math.min(QueryContextFacade.current().getSourceScanBytes / (

- KylinConfig.getInstanceFromEnv.getQueryPartitionSplitSizeMB * 1024 * 1024 * 2) + 1,

Review comment:

You removed `* 2`; is that correct?

----------------------------------------------------------------

[GitHub] [kylin] zzcclp commented on a change in pull request #1495: KYLIN-4829 Support to use thread-level SparkSession to execute query

Posted by GitBox <gi...@apache.org>.

zzcclp commented on a change in pull request #1495:

URL: https://github.com/apache/kylin/pull/1495#discussion_r538415776

##########

File path: kylin-spark-project/kylin-spark-common/src/main/scala/org/apache/spark/sql/execution/datasource/ResetShufflePartition.scala

##########

@@ -17,25 +17,26 @@

*/

package org.apache.spark.sql.execution.datasource

-import org.apache.kylin.common.{KylinConfig, QueryContext, QueryContextFacade}

+import org.apache.kylin.common.{KylinConfig, QueryContextFacade}

import org.apache.spark.internal.Logging

import org.apache.spark.sql.SparkSession

+import org.apache.spark.utils.SparderUtils

trait ResetShufflePartition extends Logging {

+ val PARTITION_SPLIT_BYTES: Long = KylinConfig.getInstanceFromEnv.getQueryPartitionSplitSizeMB * 1024 * 1024 // 64MB

def setShufflePartitions(bytes: Long, sparkSession: SparkSession): Unit = {

QueryContextFacade.current().addAndGetSourceScanBytes(bytes)

- val defaultParallelism = sparkSession.sparkContext.defaultParallelism

+ val defaultParallelism = SparderUtils.getTotalCore(sparkSession.sparkContext.getConf)

Review comment:

This makes it use the same algorithm as the code in 'ResultPlan.collectInternal'.

----------------------------------------------------------------

[GitHub] [kylin] zzcclp edited a comment on pull request #1495: KYLIN-4829 Support to use thread-level SparkSession to execute query

Posted by GitBox <gi...@apache.org>.

zzcclp edited a comment on pull request #1495:

URL: https://github.com/apache/kylin/pull/1495#issuecomment-738628576

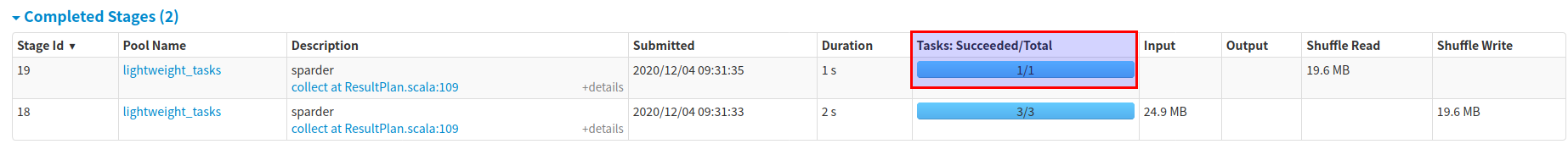

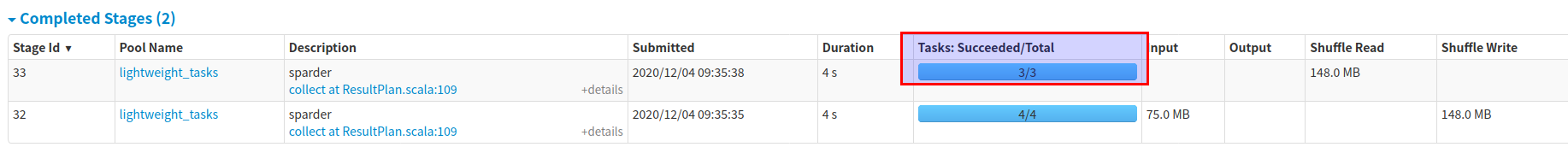

## The Results of Testing

### Test Env

- Hadoop 2.7.0 on docker.

- Commit : [3b3786c5c](https://github.com/apache/kylin/commit/3b3786c5c9602838cd4abd0a6d40574550ec8622)

- Sparder Env :

spark.executor.cores=1

spark.executor.instances=4

spark.executor.memory=2G

spark.executor.memoryOverhead=1G

spark.sql.shuffle.partitions=4

### Before this patch

The shuffle partition number of every query is 4, which equals the total number of cores.

### After this patch

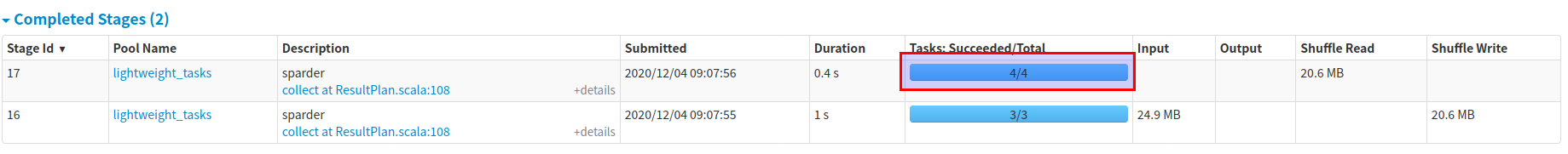

The shuffle partition number of each query is calculated according to the scanned bytes of each query:

The log message is shown below:

`2020-12-04 05:28:11,991 INFO [Query a5e841ba-c430-383b-dfa8-5694cd6d282b-122] datasource.FilePruner:51 : Set partition to 2, total bytes 92610534`

The log message is shown below:

`2020-12-04 05:28:12,112 INFO [Query 534a7afb-4857-6e0c-67b8-bd6a8da155a8-130] datasource.FilePruner:51 : Set partition to 1, total bytes 42133710`

The log message is shown below:

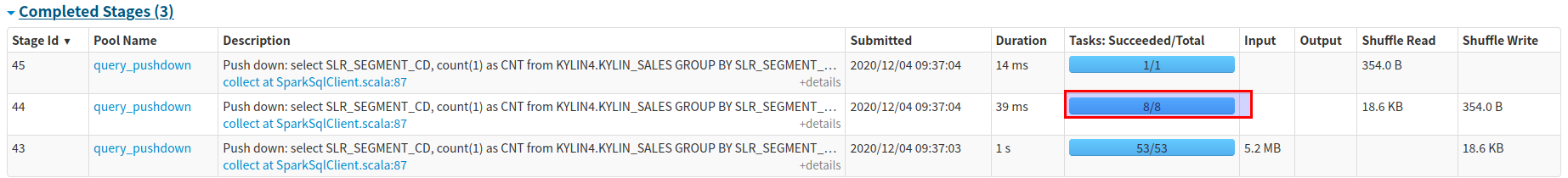

`2020-12-04 05:28:12,141 INFO [Query 744d34d1-0d06-11c8-fdee-1f260388117f-131] datasource.FilePruner:51 : Set partition to 3, total bytes 158775868`

The log message is shown below:

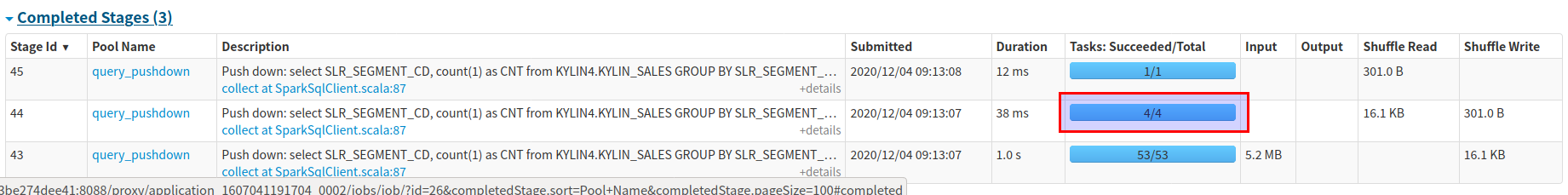

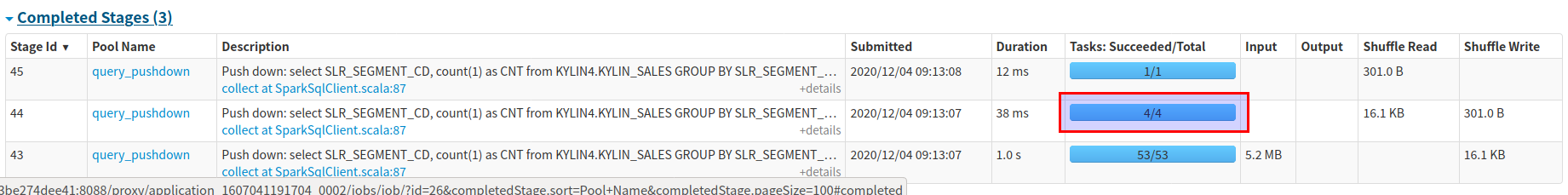

`2020-12-04 08:16:43,746 INFO [Query e117ceb8-53c1-959e-9cb0-75ee3901e271-126] pushdown.SparkSqlClient:68 : Auto set spark.sql.shuffle.partitions to 8, the total sources size is 415631445b`
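As a sanity check, the three `FilePruner` log lines above are consistent with a partition count of `total bytes / split size + 1`, capped at the total number of cores. The sketch below assumes a 64 MB split size (taken from the `// 64MB` comment in the diff) and 4 total cores (from the test environment); the class `PartitionLogCheck` is hypothetical. The `SparkSqlClient` pushdown line uses code that is not shown in this thread, so it is not covered here.

```java
public class PartitionLogCheck {
    // Assumption: 64 MB split size, matching the "// 64MB" comment in the diff.
    static final long SPLIT_BYTES = 64L * 1024 * 1024;

    // Partition count = total bytes / split size + 1, capped at the total cores.
    public static long partitionsFor(long totalBytes, int totalCores) {
        return Math.min(totalBytes / SPLIT_BYTES + 1, totalCores);
    }

    public static void main(String[] args) {
        int totalCores = 4; // spark.executor.cores=1 x spark.executor.instances=4
        long[] totalBytes = {92610534L, 42133710L, 158775868L}; // from the FilePruner log lines
        for (long bytes : totalBytes) {
            System.out.println(bytes + " bytes -> " + partitionsFor(bytes, totalCores) + " partition(s)");
        }
    }
}
```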

----------------------------------------------------------------

[GitHub] [kylin] zzcclp commented on a change in pull request #1495: KYLIN-4829 Support to use thread-level SparkSession to execute query

Posted by GitBox <gi...@apache.org>.

zzcclp commented on a change in pull request #1495:

URL: https://github.com/apache/kylin/pull/1495#discussion_r538418158

##########

File path: kylin-spark-project/kylin-spark-common/src/main/scala/org/apache/spark/sql/execution/datasource/ResetShufflePartition.scala

##########

@@ -17,25 +17,26 @@

*/

package org.apache.spark.sql.execution.datasource

-import org.apache.kylin.common.{KylinConfig, QueryContext, QueryContextFacade}

+import org.apache.kylin.common.{KylinConfig, QueryContextFacade}

import org.apache.spark.internal.Logging

import org.apache.spark.sql.SparkSession

+import org.apache.spark.utils.SparderUtils

trait ResetShufflePartition extends Logging {

+ val PARTITION_SPLIT_BYTES: Long = KylinConfig.getInstanceFromEnv.getQueryPartitionSplitSizeMB * 1024 * 1024 // 64MB

def setShufflePartitions(bytes: Long, sparkSession: SparkSession): Unit = {

QueryContextFacade.current().addAndGetSourceScanBytes(bytes)

- val defaultParallelism = sparkSession.sparkContext.defaultParallelism

+ val defaultParallelism = SparderUtils.getTotalCore(sparkSession.sparkContext.getConf)

val kylinConfig = KylinConfig.getInstanceFromEnv

val partitionsNum = if (kylinConfig.getSparkSqlShufflePartitions != -1) {

kylinConfig.getSparkSqlShufflePartitions

} else {

- Math.min(QueryContextFacade.current().getSourceScanBytes / (

Review comment:

Using 'Math.min' is right; it makes sure that the partition number is never larger than the total number of cores.
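To illustrate the point about `Math.min`, here is a rough sketch of the decision visible in the diff: an explicitly configured value (anything other than `-1`) wins, otherwise the size-based estimate is capped at the total cores. The class `ShufflePartitionChoice` and its parameter names are hypothetical, and the size-based formula is an assumption inferred from the removed lines, not a copy of the patched code.

```java
public class ShufflePartitionChoice {
    // configuredPartitions stands in for kylinConfig.getSparkSqlShufflePartitions; -1 means "not set".
    public static int choose(int configuredPartitions, long scannedBytes, long splitBytes, int totalCores) {
        if (configuredPartitions != -1) {
            return configuredPartitions; // an explicit setting overrides the estimate
        }
        // Size-based estimate, never larger than the number of cores available.
        return (int) Math.min(scannedBytes / splitBytes + 1, totalCores);
    }

    public static void main(String[] args) {
        long split = 64L * 1024 * 1024;   // assumed 64 MB split size
        long oneGb = 1024L * 1024 * 1024;
        System.out.println(choose(-1, oneGb, split, 4)); // estimate of 17 is capped at 4 cores
        System.out.println(choose(-1, 1024L, split, 4)); // a tiny scan needs a single partition
        System.out.println(choose(10, oneGb, split, 4)); // explicit setting is used as-is
    }
}
```

With a single core the result is always 1, which is the expected consequence of the cap.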

----------------------------------------------------------------

[GitHub] [kylin] zzcclp commented on a change in pull request #1495: KYLIN-4829 Support to use thread-level SparkSession to execute query

Posted by GitBox <gi...@apache.org>.

zzcclp commented on a change in pull request #1495:

URL: https://github.com/apache/kylin/pull/1495#discussion_r538420711

##########

File path: kylin-spark-project/kylin-spark-common/src/main/scala/org/apache/spark/sql/execution/datasource/ResetShufflePartition.scala

##########

@@ -17,25 +17,26 @@

*/

package org.apache.spark.sql.execution.datasource

-import org.apache.kylin.common.{KylinConfig, QueryContext, QueryContextFacade}

+import org.apache.kylin.common.{KylinConfig, QueryContextFacade}

import org.apache.spark.internal.Logging

import org.apache.spark.sql.SparkSession

+import org.apache.spark.utils.SparderUtils

trait ResetShufflePartition extends Logging {

+ val PARTITION_SPLIT_BYTES: Long = KylinConfig.getInstanceFromEnv.getQueryPartitionSplitSizeMB * 1024 * 1024 // 64MB

def setShufflePartitions(bytes: Long, sparkSession: SparkSession): Unit = {

QueryContextFacade.current().addAndGetSourceScanBytes(bytes)

- val defaultParallelism = sparkSession.sparkContext.defaultParallelism

+ val defaultParallelism = SparderUtils.getTotalCore(sparkSession.sparkContext.getConf)

val kylinConfig = KylinConfig.getInstanceFromEnv

val partitionsNum = if (kylinConfig.getSparkSqlShufflePartitions != -1) {

kylinConfig.getSparkSqlShufflePartitions

} else {

- Math.min(QueryContextFacade.current().getSourceScanBytes / (

- KylinConfig.getInstanceFromEnv.getQueryPartitionSplitSizeMB * 1024 * 1024 * 2) + 1,

Review comment:

'* 2' doesn't make sense. If users want to use a larger partition split size, they can increase the value of 'kylin.query.spark-engine.partition-split-size-mb'.
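A small sketch of why the hard-coded factor is redundant: multiplying the split size by 2 gives the same partition count as doubling the configured split size. The class `SplitMultiplierSketch` and its parameters are hypothetical, and the formula is assumed from the removed lines in the diff.

```java
public class SplitMultiplierSketch {
    // splitSizeMb stands in for kylin.query.spark-engine.partition-split-size-mb;
    // multiplier models the hard-coded "* 2" from the old code (1 = no extra factor).
    public static long partitions(long scannedBytes, long splitSizeMb, int multiplier, int totalCores) {
        long splitBytes = splitSizeMb * 1024 * 1024 * multiplier;
        return Math.min(scannedBytes / splitBytes + 1, totalCores);
    }

    public static void main(String[] args) {
        long scanned = 158775868L; // total bytes from one of the FilePruner log lines
        System.out.println("64 MB, x2: " + partitions(scanned, 64, 2, 4));
        System.out.println("64 MB, x1: " + partitions(scanned, 64, 1, 4));
        System.out.println("128 MB, x1: " + partitions(scanned, 128, 1, 4));
    }
}
```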

----------------------------------------------------------------

[GitHub] [kylin] hit-lacus commented on a change in pull request #1495: KYLIN-4829 Support to use thread-level SparkSession to execute query

Posted by GitBox <gi...@apache.org>.

hit-lacus commented on a change in pull request #1495:

URL: https://github.com/apache/kylin/pull/1495#discussion_r538285677

##########

File path: kylin-spark-project/kylin-spark-engine/src/main/scala/org/apache/spark/sql/KylinSparkEnv.scala

##########

@@ -25,96 +25,27 @@ object KylinSparkEnv extends Logging {

@volatile

private var spark: SparkSession = _

Review comment:

Do you plan to delete `KylinSparkEnv`?

----------------------------------------------------------------

[GitHub] [kylin] zzcclp commented on pull request #1495: KYLIN-4829 Support to use thread-level SparkSession to execute query

Posted by GitBox <gi...@apache.org>.

zzcclp commented on pull request #1495:

URL: https://github.com/apache/kylin/pull/1495#issuecomment-740415145

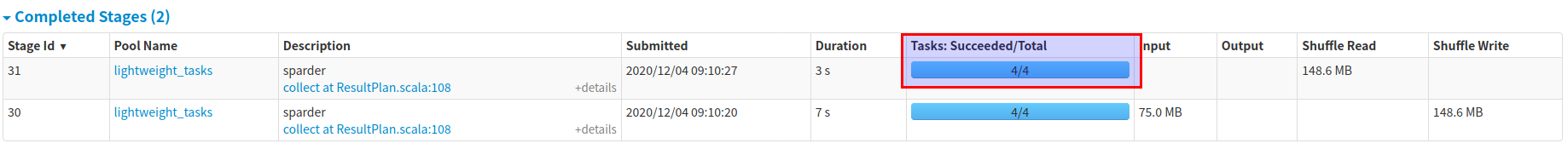

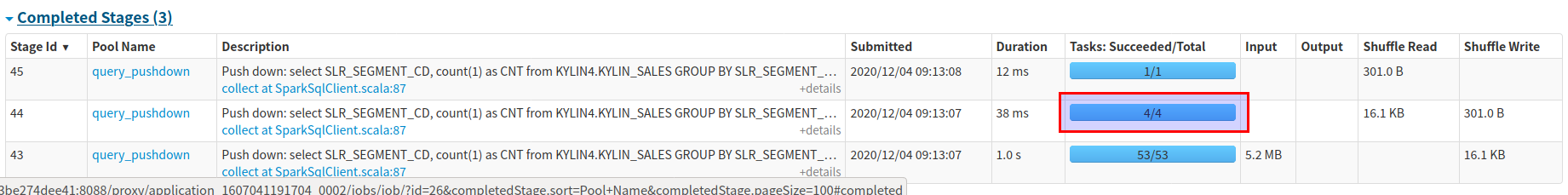

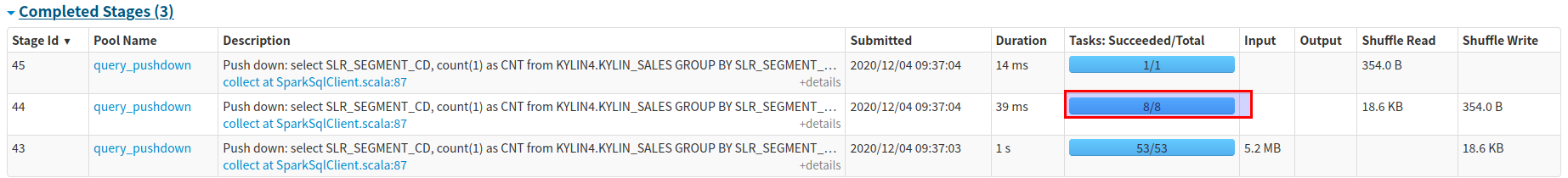

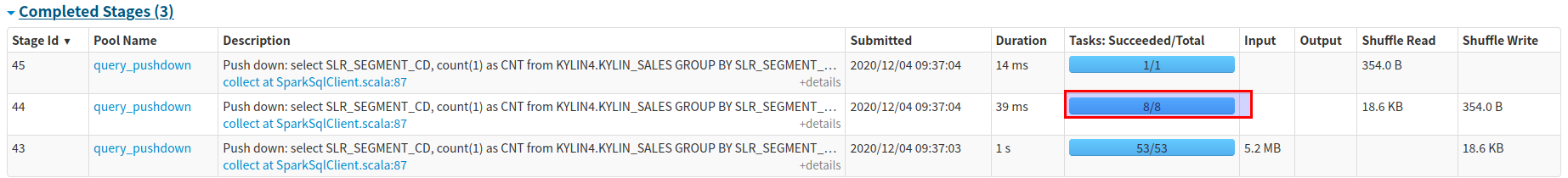

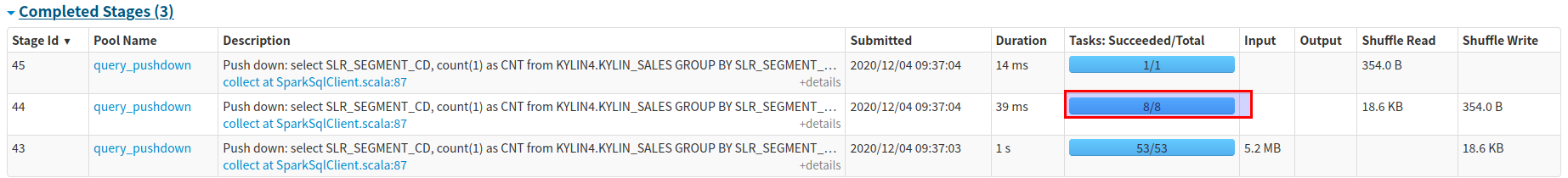

## Performance Testing

### Test Env

- Hadoop 2.7.0 on docker.

- Commit : [ed0649b1](https://github.com/apache/kylin/pull/1495/commits/ed0649b140529bdfafea8cce846962b6ca9c3f73)

- Sparder Env :

spark.executor.cores=1

spark.executor.instances=6

spark.executor.memory=2G

spark.executor.memoryOverhead=1G

spark.sql.shuffle.partitions=6

### Test

Send 5 SQL queries at the same time.

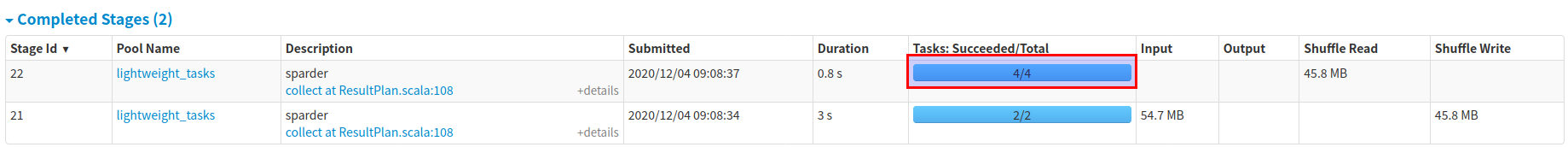

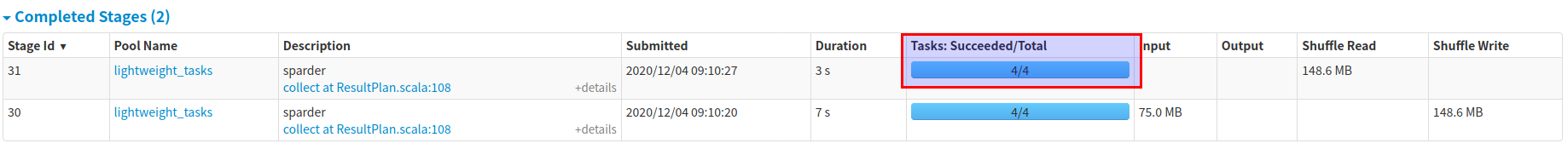

### Before this patch

The shuffle partition number of every query is 6, which equals the total number of cores, and these 5 SQL queries took 3.09s in total to finish.

The 5 SQL queries were submitted at the same time.

SQL1: there are 6 partitions in the second stage, even though there is only 216KB of data to read.

SQL2: there are still 6 partitions in the second stage, even though there is only 262KB of data to read.

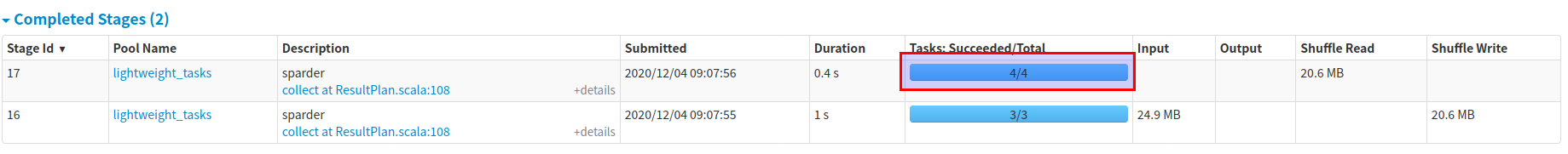

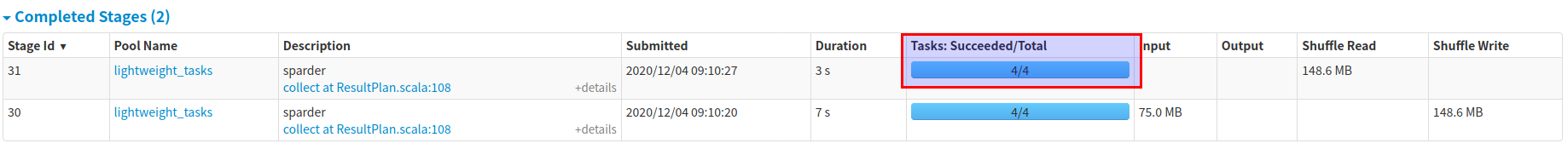

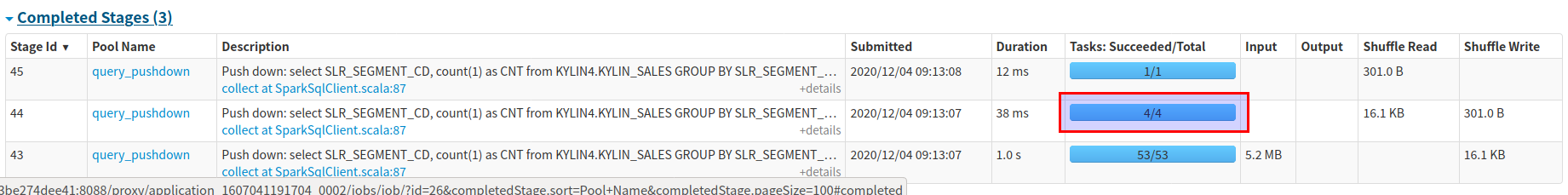

### After this patch

The shuffle partition number of each query is calculated according to the scanned bytes of each query, and these 5 SQL queries took 2.55s in total to finish.

The 5 SQL queries were submitted at the same time.

SQL1: there is only 1 partition in the second stage.

SQL2: there is only 1 partition in the second stage.
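The per-query partition counts only stay independent if concurrent queries do not overwrite each other's setting. The sketch below illustrates that idea with a `ThreadLocal` map as a hypothetical stand-in for a thread-level SparkSession; it is not the implementation in this PR. The class `ThreadLevelConfSketch`, the 64 MB split size and the sample scan sizes are assumptions for illustration only.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class ThreadLevelConfSketch {
    static final long SPLIT_BYTES = 64L * 1024 * 1024; // assumed 64 MB split size
    static final int TOTAL_CORES = 6;                  // 1 core x 6 executors, as in the test env

    // Hypothetical stand-in for a thread-level session: one conf map per thread.
    private static final ThreadLocal<Map<String, String>> SESSION_CONF =
            ThreadLocal.withInitial(HashMap::new);

    // Sets the partition count on this thread's conf only, then reads it back.
    public static int runQuery(long scannedBytes) {
        long partitions = Math.min(scannedBytes / SPLIT_BYTES + 1, TOTAL_CORES);
        Map<String, String> conf = SESSION_CONF.get();
        conf.put("spark.sql.shuffle.partitions", Long.toString(partitions));
        return Integer.parseInt(conf.get("spark.sql.shuffle.partitions"));
    }

    // Runs one query per thread and returns the partition counts in submission order.
    public static List<Integer> runConcurrently(long[] scannedBytesPerQuery) {
        ExecutorService pool = Executors.newFixedThreadPool(scannedBytesPerQuery.length);
        try {
            List<Future<Integer>> futures = new ArrayList<>();
            for (long bytes : scannedBytesPerQuery) {
                Callable<Integer> task = () -> runQuery(bytes);
                futures.add(pool.submit(task));
            }
            List<Integer> results = new ArrayList<>();
            for (Future<Integer> future : futures) {
                results.add(future.get());
            }
            return results;
        } catch (Exception e) {
            throw new RuntimeException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        // Two small scans (216 KB, 262 KB) and one hypothetical 500 MB scan.
        long[] sizes = {216L * 1024, 262L * 1024, 500L * 1024 * 1024};
        System.out.println(runConcurrently(sizes));
    }
}
```

With a single shared conf, the last writer would decide the partition count for every in-flight query; keeping the setting per thread avoids that.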

----------------------------------------------------------------

[GitHub] [kylin] hit-lacus commented on a change in pull request #1495: KYLIN-4829 Support to use thread-level SparkSession to execute query

Posted by GitBox <gi...@apache.org>.

hit-lacus commented on a change in pull request #1495:

URL: https://github.com/apache/kylin/pull/1495#discussion_r538295941

##########

File path: kylin-spark-project/kylin-spark-common/src/main/scala/org/apache/spark/sql/execution/datasource/ResetShufflePartition.scala

##########

@@ -17,25 +17,26 @@

*/

package org.apache.spark.sql.execution.datasource

-import org.apache.kylin.common.{KylinConfig, QueryContext, QueryContextFacade}

+import org.apache.kylin.common.{KylinConfig, QueryContextFacade}

import org.apache.spark.internal.Logging

import org.apache.spark.sql.SparkSession

+import org.apache.spark.utils.SparderUtils

trait ResetShufflePartition extends Logging {

+ val PARTITION_SPLIT_BYTES: Long = KylinConfig.getInstanceFromEnv.getQueryPartitionSplitSizeMB * 1024 * 1024 // 64MB

def setShufflePartitions(bytes: Long, sparkSession: SparkSession): Unit = {

QueryContextFacade.current().addAndGetSourceScanBytes(bytes)

- val defaultParallelism = sparkSession.sparkContext.defaultParallelism

+ val defaultParallelism = SparderUtils.getTotalCore(sparkSession.sparkContext.getConf)

Review comment:

Why change from `defaultParallelism` to `getTotalCore`?

----------------------------------------------------------------

[GitHub] [kylin] hit-lacus commented on a change in pull request #1495: KYLIN-4829 Support to use thread-level SparkSession to execute query

Posted by GitBox <gi...@apache.org>.

hit-lacus commented on a change in pull request #1495:

URL: https://github.com/apache/kylin/pull/1495#discussion_r538299640

##########

File path: kylin-spark-project/kylin-spark-common/src/main/scala/org/apache/spark/sql/execution/datasource/ResetShufflePartition.scala

##########

@@ -17,25 +17,26 @@

*/

package org.apache.spark.sql.execution.datasource

-import org.apache.kylin.common.{KylinConfig, QueryContext, QueryContextFacade}

+import org.apache.kylin.common.{KylinConfig, QueryContextFacade}

import org.apache.spark.internal.Logging

import org.apache.spark.sql.SparkSession

+import org.apache.spark.utils.SparderUtils

trait ResetShufflePartition extends Logging {

+ val PARTITION_SPLIT_BYTES: Long = KylinConfig.getInstanceFromEnv.getQueryPartitionSplitSizeMB * 1024 * 1024 // 64MB

def setShufflePartitions(bytes: Long, sparkSession: SparkSession): Unit = {

QueryContextFacade.current().addAndGetSourceScanBytes(bytes)

- val defaultParallelism = sparkSession.sparkContext.defaultParallelism

+ val defaultParallelism = SparderUtils.getTotalCore(sparkSession.sparkContext.getConf)

val kylinConfig = KylinConfig.getInstanceFromEnv

val partitionsNum = if (kylinConfig.getSparkSqlShufflePartitions != -1) {

kylinConfig.getSparkSqlShufflePartitions

} else {

- Math.min(QueryContextFacade.current().getSourceScanBytes / (

Review comment:

It looks like the original code is wrong: if `sparkContext.defaultParallelism` is 1, `partitionsNum` will always be 1.

----------------------------------------------------------------

[GitHub] [kylin] zzcclp commented on a change in pull request #1495: KYLIN-4829 Support to use thread-level SparkSession to execute query

Posted by GitBox <gi...@apache.org>.

zzcclp commented on a change in pull request #1495:

URL: https://github.com/apache/kylin/pull/1495#discussion_r538412431

##########

File path: kylin-spark-project/kylin-spark-engine/src/main/scala/org/apache/spark/sql/KylinSparkEnv.scala

##########

@@ -25,96 +25,27 @@ object KylinSparkEnv extends Logging {

@volatile

private var spark: SparkSession = _

Review comment:

Yes, I will raise another PR to do this; it requires refactoring some modules.

----------------------------------------------------------------

[GitHub] [kylin] zzcclp edited a comment on pull request #1495: KYLIN-4829 Support to use thread-level SparkSession to execute query

Posted by GitBox <gi...@apache.org>.

zzcclp edited a comment on pull request #1495:

URL: https://github.com/apache/kylin/pull/1495#issuecomment-738628576

## The Results of Testing Manually

### Test Env

- Hadoop 2.7.0 on docker.

- Commit : [3b3786c5c](https://github.com/apache/kylin/commit/3b3786c5c9602838cd4abd0a6d40574550ec8622)

- Sparder Env :

spark.executor.cores=1

spark.executor.instances=4

spark.executor.memory=2G

spark.executor.memoryOverhead=1G

spark.sql.shuffle.partitions=4

### Before this patch

The shuffle partition number of every query is 4, which equals the total number of cores.

### After this patch

The shuffle partition number of each query is calculated according to the scanned bytes of each query:

The log message is shown below:

`2020-12-04 05:28:11,991 INFO [Query a5e841ba-c430-383b-dfa8-5694cd6d282b-122] datasource.FilePruner:51 : Set partition to 2, total bytes 92610534`

The log message is shown below:

`2020-12-04 05:28:12,112 INFO [Query 534a7afb-4857-6e0c-67b8-bd6a8da155a8-130] datasource.FilePruner:51 : Set partition to 1, total bytes 42133710`

The log message is shown below:

`2020-12-04 05:28:12,141 INFO [Query 744d34d1-0d06-11c8-fdee-1f260388117f-131] datasource.FilePruner:51 : Set partition to 3, total bytes 158775868`

The log message is shown below:

`2020-12-04 08:16:43,746 INFO [Query e117ceb8-53c1-959e-9cb0-75ee3901e271-126] pushdown.SparkSqlClient:68 : Auto set spark.sql.shuffle.partitions to 8, the total sources size is 415631445b`

----------------------------------------------------------------

[GitHub] [kylin] zzcclp edited a comment on pull request #1495: KYLIN-4829 Support to use thread-level SparkSession to execute query

Posted by GitBox <gi...@apache.org>.

zzcclp edited a comment on pull request #1495:

URL: https://github.com/apache/kylin/pull/1495#issuecomment-738628576

## The Results of Testing Manually

### Test Env

- Hadoop 2.7.0 on docker.

- Commit : [3b3786c5c](https://github.com/apache/kylin/commit/3b3786c5c9602838cd4abd0a6d40574550ec8622)

- Sparder Env :

spark.executor.cores=1

spark.executor.instances=4

spark.executor.memory=2G

spark.executor.memoryOverhead=1G

spark.sql.shuffle.partitions=4

### Before this patch

The shuffle partition number of every query is 4, which equals the total number of cores.

### After this patch

The shuffle partition number of each query is calculated according to the scanned bytes of each query:

The log message is shown below:

`2020-12-04 05:28:11,991 INFO [Query a5e841ba-c430-383b-dfa8-5694cd6d282b-122] datasource.FilePruner:51 : Set partition to 2, total bytes 92610534`

The log message is shown below:

`2020-12-04 05:28:12,112 INFO [Query 534a7afb-4857-6e0c-67b8-bd6a8da155a8-130] datasource.FilePruner:51 : Set partition to 1, total bytes 42133710`

The log message is shown below:

`2020-12-04 05:28:12,141 INFO [Query 744d34d1-0d06-11c8-fdee-1f260388117f-131] datasource.FilePruner:51 : Set partition to 3, total bytes 158775868`

The log message is shown below:

`2020-12-04 08:16:43,746 INFO [Query e117ceb8-53c1-959e-9cb0-75ee3901e271-126] pushdown.SparkSqlClient:68 : Auto set spark.sql.shuffle.partitions to 8, the total sources size is 415631445 b`

----------------------------------------------------------------

[GitHub] [kylin] codecov-io commented on pull request #1495: KYLIN-4829 Support to use thread-level SparkSession to execute query

Posted by GitBox <gi...@apache.org>.

codecov-io commented on pull request #1495:

URL: https://github.com/apache/kylin/pull/1495#issuecomment-738045171

# [Codecov](https://codecov.io/gh/apache/kylin/pull/1495?src=pr&el=h1) Report

> :exclamation: No coverage uploaded for pull request base (`kylin-on-parquet-v2@d46fc4a`). [Click here to learn what that means](https://docs.codecov.io/docs/error-reference#section-missing-base-commit).

> The diff coverage is `n/a`.

```diff

@@ Coverage Diff @@

## kylin-on-parquet-v2 #1495 +/- ##

======================================================

Coverage ? 24.28%

Complexity ? 4616

======================================================

Files ? 1138

Lines ? 64441

Branches ? 9507

======================================================

Hits ? 15651

Misses ? 47156

Partials ? 1634

```

------

[Continue to review full report at Codecov](https://codecov.io/gh/apache/kylin/pull/1495?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/apache/kylin/pull/1495?src=pr&el=footer). Last update [d46fc4a...3b3786c](https://codecov.io/gh/apache/kylin/pull/1495?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

----------------------------------------------------------------

[GitHub] [kylin] hit-lacus commented on a change in pull request #1495: KYLIN-4829 Support to use thread-level SparkSession to execute query

Posted by GitBox <gi...@apache.org>.

hit-lacus commented on a change in pull request #1495:

URL: https://github.com/apache/kylin/pull/1495#discussion_r538274411

##########

File path: kylin-spark-project/kylin-spark-query/src/test/java/org/apache/spark/sql/SparderContextFacadeTest.java

##########

@@ -0,0 +1,143 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql;

+

+import org.apache.kylin.common.KylinConfig;

+import org.apache.kylin.engine.spark.LocalWithSparkSessionTest;

+import org.apache.kylin.job.exception.SchedulerException;

+

+import java.util.ArrayList;

+import java.util.List;

+import java.util.Random;

+import java.util.concurrent.Callable;

+import java.util.concurrent.CompletionService;

+import java.util.concurrent.ExecutionException;

+import java.util.concurrent.ExecutorCompletionService;

+import java.util.concurrent.LinkedBlockingQueue;

+import java.util.concurrent.ThreadPoolExecutor;

+import java.util.concurrent.TimeUnit;

+import org.junit.After;

+import org.junit.Assert;

+import org.junit.Before;

+import org.junit.Test;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+public class SparderContextFacadeTest extends LocalWithSparkSessionTest {

+

+ private static final Logger logger = LoggerFactory.getLogger(SparderContextFacadeTest.class);

+ private static final Integer TEST_SIZE = 16 * 1024 * 1024;

+

+ @Override

+ @Before

+ public void setup() throws SchedulerException {

+ super.setup();

+ KylinConfig conf = KylinConfig.getInstanceFromEnv();

+ // the default value of kylin.query.spark-conf.spark.master is yarn,

+ // which will read from kylin-defaults.properties

+ conf.setProperty("kylin.query.spark-conf.spark.master", "local");

+ // Init Sparder

+ SparderContext.getOriginalSparkSession();

+ }

+

+ @After

+ public void after() {

+ SparderContext.stopSpark();

+ KylinConfig.getInstanceFromEnv()

+ .setProperty("kylin.query.spark-conf.spark.master", "yarn");

+ super.after();

+ }

+

+ @Test

+ public void testThreadSparkSession() throws InterruptedException, ExecutionException {

+ ThreadPoolExecutor executor = new ThreadPoolExecutor(5, 5, 1,

+ TimeUnit.DAYS, new LinkedBlockingQueue<>(5));

+

+ // test the thread local SparkSession

+ CompletionService<Throwable> service = runThreadSparkSessionTest(executor, false);

+

+ for (int i = 1; i <= 5; i++) {

+ Assert.assertNull(service.take().get());

+ }

+

+ // test the original SparkSession, it must throw errors.

+ service = runThreadSparkSessionTest(executor, true);

+ boolean hasError = false;

+ for (int i = 1; i <= 5; i++) {

+ if (service.take().get() != null) {

+ hasError = true;

+ }

+ }

+ Assert.assertTrue(hasError);

+

+ executor.shutdown();

+ }

+

+ protected CompletionService<Throwable> runThreadSparkSessionTest(ThreadPoolExecutor executor,

Review comment:

It looks like `isOriginal` is always `false`?

----------------------------------------------------------------

[GitHub] [kylin] hit-lacus merged pull request #1495: KYLIN-4829 Support to use thread-level SparkSession to execute query

Posted by GitBox <gi...@apache.org>.

hit-lacus merged pull request #1495:

URL: https://github.com/apache/kylin/pull/1495

----------------------------------------------------------------

[GitHub] [kylin] codecov-io edited a comment on pull request #1495: KYLIN-4829 Support to use thread-level SparkSession to execute query

Posted by GitBox <gi...@apache.org>.

codecov-io edited a comment on pull request #1495:

URL: https://github.com/apache/kylin/pull/1495#issuecomment-738045171

# [Codecov](https://codecov.io/gh/apache/kylin/pull/1495?src=pr&el=h1) Report

> :exclamation: No coverage uploaded for pull request base (`kylin-on-parquet-v2@cd449ea`). [Click here to learn what that means](https://docs.codecov.io/docs/error-reference#section-missing-base-commit).

> The diff coverage is `n/a`.

```diff

@@ Coverage Diff @@

## kylin-on-parquet-v2 #1495 +/- ##

======================================================

Coverage ? 24.32%

Complexity ? 4618

======================================================

Files ? 1138

Lines ? 64371

Branches ? 9497

======================================================

Hits ? 15658

Misses ? 47079

Partials ? 1634

```

------

[Continue to review full report at Codecov](https://codecov.io/gh/apache/kylin/pull/1495?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/apache/kylin/pull/1495?src=pr&el=footer). Last update [cd449ea...ed0649b](https://codecov.io/gh/apache/kylin/pull/1495?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

[GitHub] [kylin] zzcclp commented on pull request #1495: KYLIN-4829 Support to use thread-level SparkSession to execute query

Posted by GitBox <gi...@apache.org>.

zzcclp commented on pull request #1495:

URL: https://github.com/apache/kylin/pull/1495#issuecomment-738583017

Ran the test cases locally; they all passed.

[GitHub] [kylin] zzcclp edited a comment on pull request #1495: KYLIN-4829 Support to use thread-level SparkSession to execute query

Posted by GitBox <gi...@apache.org>.

zzcclp edited a comment on pull request #1495:

URL: https://github.com/apache/kylin/pull/1495#issuecomment-740415145

## Performance Testing

### Test Env

- Hadoop 2.7.0 on Docker.

- Commit: [ed0649b1](https://github.com/apache/kylin/pull/1495/commits/ed0649b140529bdfafea8cce846962b6ca9c3f73)

- Sparder Env:

  - `spark.executor.cores=1`

  - `spark.executor.instances=6`

  - `spark.executor.memory=2G`

  - `spark.executor.memoryOverhead=1G`

  - `spark.sql.shuffle.partitions=6`

### Test

Send 5 SQL queries at the same time.

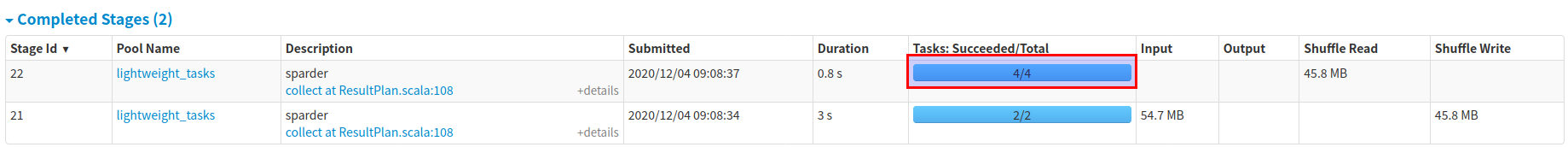

### Before this patch

Every query uses 6 shuffle partitions, which equals the total number of executor cores (6 executors with 1 core each), and the 5 SQLs took 3.09s in total to finish.

5 SQLs submitted at the same time.

SQL1: the second stage has 6 partitions, even though there is only 216KB of data to read.

SQL2: the second stage still has 6 partitions, even though there is only 262KB of data to read.

### After this patch

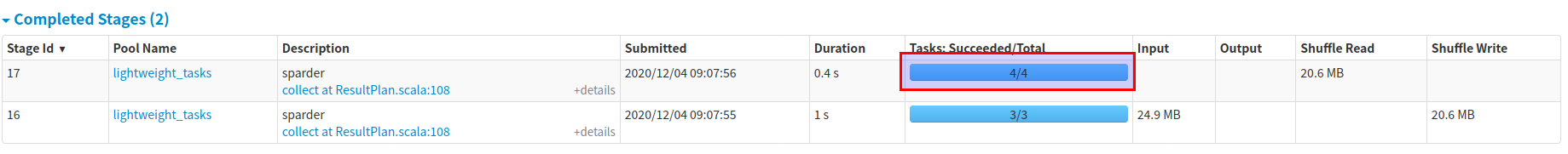

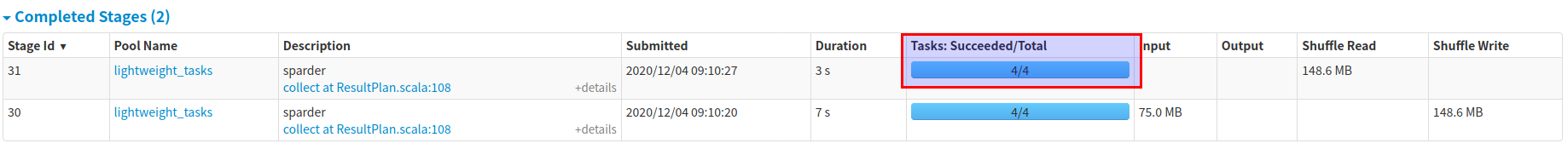

The number of shuffle partitions is calculated per query from the bytes that query scans, and the 5 SQLs took 2.55s in total to finish.
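
As a rough illustration of sizing partitions by scanned bytes, the sketch below derives a partition count from a byte total. The exact formula used by the patch is not shown in this thread, so the 64 MB per-partition target, the cap at the total core count, and the names `ShufflePartitionSketch` and `partitionsFor` are all assumptions made for this example.

```java
public class ShufflePartitionSketch {
    // Assumption: amount of scanned data one shuffle partition should handle.
    static final long TARGET_BYTES_PER_PARTITION = 64L * 1024 * 1024;

    /**
     * Returns a partition count proportional to the scanned bytes,
     * never below 1 and never above the total number of cores.
     */
    static int partitionsFor(long scannedBytes, int totalCores) {
        if (scannedBytes < 0 || totalCores < 1) {
            throw new IllegalArgumentException("scannedBytes >= 0 and totalCores >= 1 required");
        }
        // Ceiling division: how many target-sized chunks the data fills.
        long byData = (scannedBytes + TARGET_BYTES_PER_PARTITION - 1) / TARGET_BYTES_PER_PARTITION;
        return (int) Math.max(1, Math.min(byData, totalCores));
    }

    public static void main(String[] args) {
        int totalCores = 6; // 6 executors with 1 core each, as in the test environment
        System.out.println("216KB -> " + partitionsFor(216L * 1024, totalCores));
        System.out.println("262KB -> " + partitionsFor(262L * 1024, totalCores));
        System.out.println("1GB   -> " + partitionsFor(1024L * 1024 * 1024, totalCores));
    }
}
```

Under these assumed numbers, the two small queries from this test would each get a single partition, while a query scanning 1GB would be capped at the 6 available cores.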

5 SQLs submitted at the same time.

SQL1: the second stage has only 1 partition, and the query took half the time it did before this patch.

SQL2: the second stage has only 1 partition, and the query took half the time it did before this patch.
