Posted to reviews@spark.apache.org by "laglangyue (via GitHub)" <gi...@apache.org> on 2023/10/25 09:11:16 UTC

[PR] [WIP][SPARK-45629]Fix `Implicit definition should have explicit type` [spark]

laglangyue opened a new pull request, #43526:

URL: https://github.com/apache/spark/pull/43526

### What changes were proposed in this pull request?

This PR adds an explicit type annotation to every implicit definition that does not already declare one.
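
As a minimal sketch of the kind of change involved (the `Codec` trait and the `JsonCodec` and `Codecs` objects are hypothetical stand-ins, not Spark or json4s types), an implicit member gets its type written out instead of left to inference:

```scala
// Hypothetical stand-in types, for illustration only.
trait Codec { def name: String }
object JsonCodec extends Codec { val name: String = "json" }

object Codecs {
  // Before: `implicit val codec = JsonCodec` (type inferred as JsonCodec.type).
  // After: the type is stated explicitly.
  implicit val codec: Codec = JsonCodec

  def describe()(implicit c: Codec): String = s"codec=${c.name}"

  // Resolves the implicit `codec` member declared above.
  def demo: String = describe()
}
```

Whether to annotate with the wider trait (`Codec`) or the singleton type (`JsonCodec.type`) is a judgment call; the singleton type keeps the previously inferred type unchanged.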

### Why are the changes needed?

This is a subtask of SPARK-45314.

https://issues.apache.org/jira/browse/SPARK-45629

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

ci

### Was this patch authored or co-authored using generative AI tooling?

no

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

Re: [PR] [WIP][SPARK-45629]Fix `Implicit definition should have explicit type` [spark]

Posted by "LuciferYang (via GitHub)" <gi...@apache.org>.

LuciferYang commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1371513630

##########

connector/kafka-0-10-sql/src/main/scala/org/apache/spark/sql/kafka010/JsonUtils.scala:

##########

@@ -65,9 +68,9 @@ private object JsonUtils {

def partitionOffsets(str: String): Map[TopicPartition, Long] = {

try {

Serialization.read[Map[String, Map[Int, Long]]](str).flatMap { case (topic, partOffsets) =>

- partOffsets.map { case (part, offset) =>

- new TopicPartition(topic, part) -> offset

- }

+ partOffsets.map { case (part, offset) =>

Review Comment:

Please do not touch unrelated code.

Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1401545560

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/HDFSMetadataLog.scala:

##########

@@ -47,16 +46,16 @@ import org.apache.spark.util.ArrayImplicits._

* Note: [[HDFSMetadataLog]] doesn't support S3-like file systems as they don't guarantee listing

* files in a directory always shows the latest files.

*/

-class HDFSMetadataLog[T <: AnyRef : ClassTag](sparkSession: SparkSession, path: String)

- extends MetadataLog[T] with Logging {

-

- private implicit val formats = Serialization.formats(NoTypeHints)

+class HDFSMetadataLog[T <: AnyRef: ClassTag](sparkSession: SparkSession, path: String)(

+ private final implicit val manifest: Manifest[T])

Review Comment:

Both `CompactibleFileStreamLog` and `HDFSMetadataLog` take a type parameter `T` and implement the `serialize` and `deserialize` methods. `deserialize` uses `org.json4s.jackson.JacksonSerialization#read`:

`def read[A](in: Reader)(implicit formats: Formats, mf: Manifest[A]): A = ...`

If we use `private implicit val manifest = Manifest.classType[T](implicitly[ClassTag[T]].runtimeClass)`,

the manifest gets `T` from the `ClassTag` through type inference.

If we use `private implicit val manifest : Manifest[T] = Manifest.classType[T](implicitly[ClassTag[T]].runtimeClass)`,

`T` becomes `Object`, so the manifest cannot recover `T`.

We should either declare `Manifest[T]` in the constructor signature, or store the `ClassTag` in a variable and use that `ClassTag` at runtime.
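
The second option might look roughly like the sketch below. `TypedLog` is a hypothetical class, not the Spark implementation; the idea is only that the `ClassTag` arrives as a named parameter and is read directly, so building the `Manifest` does not go through an implicit lookup.

```scala
import scala.reflect.ClassTag

// Hypothetical class for illustration; not the Spark implementation.
class TypedLog[T <: AnyRef](implicit ct: ClassTag[T]) {
  // The runtime class is read from the explicitly named ClassTag parameter.
  private val runtimeClass: Class[_] = ct.runtimeClass

  // Explicitly typed Manifest built from the saved runtime class.
  val manifest: Manifest[T] = Manifest.classType[T](runtimeClass)
}

object TypedLogDemo {
  val stringLog = new TypedLog[String]
}
```

In this sketch the manifest is a plain `val`; whether it should also be `implicit` in the real classes is left open here.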

Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1401536784

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/HDFSMetadataLog.scala:

##########

@@ -35,7 +35,6 @@ import org.apache.spark.sql.errors.QueryExecutionErrors

import org.apache.spark.sql.internal.SQLConf

import org.apache.spark.util.ArrayImplicits._

-

Review Comment:

reverted

Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1408654883

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/state/OperatorStateMetadata.scala:

##########

@@ -64,8 +65,9 @@ case class OperatorStateMetadataV1(

object OperatorStateMetadataV1 {

- private implicit val formats = Serialization.formats(NoTypeHints)

+ private implicit val formats: Formats = Serialization.formats(NoTypeHints)

+ @nowarn

Review Comment:

done

Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "LuciferYang (via GitHub)" <gi...@apache.org>.

LuciferYang closed pull request #43526: [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type`

URL: https://github.com/apache/spark/pull/43526

Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on PR #43526:

URL: https://github.com/apache/spark/pull/43526#issuecomment-1822024412

> Thank you very much @laglangyue , I will review this PR as soon as possible

>

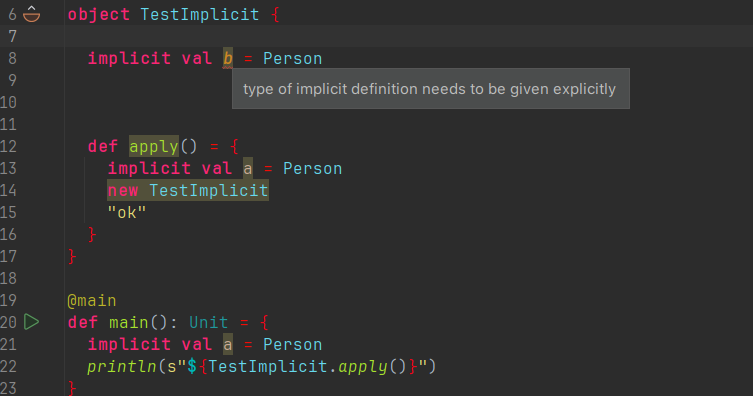

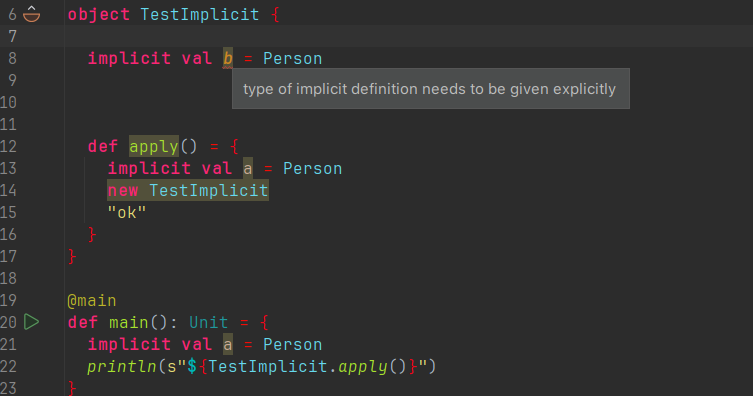

> > > I created a new project and tested the code below with `sbt compile`, and it does not cause an error. Why does it cause an error for Spark? I also tested it in Scala 3; it also compiles successfully, with no warnings. Admittedly, according to the Scala 3 community, it is recommended to use `using` and `given` instead of implicits, while implicits are retained for now and will be removed in the future. I don't know how to compile Spark for Scala 3. Should I replace the Scala-related dependencies and compile?

> >

> >

> > ```scala

> > Welcome to Scala 3.3.1 (17.0.8, Java OpenJDK 64-Bit Server VM).

> > Type in expressions for evaluation. Or try :help.

> >

> > scala> implicit val ix = 1

> > -- Error: ----------------------------------------------------------------------

> > 1 |implicit val ix = 1

> > | ^

> > | type of implicit definition needs to be given explicitly

> > 1 error found

> > ```

> >

> > But why does the above statement report an error in Scala 3.3.1?

>

> Additionally, if this is confirmed, we should mention this in the pr description.

>

> also cc @srowen FYI

It is OK to declare an implicit inside a method without an explicit type, but not for global variables.
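
A small sketch of the distinction described above, using hypothetical types (`Precision`, `Rounding`, `MemberLevel`, `MethodLocal`) that are not part of Spark. The member-level implicit carries an explicit type, while the one declared inside a method body leaves its type to inference:

```scala
// Hypothetical types, for illustration only.
final case class Precision(digits: Int)

object Rounding {
  def digitsInUse(implicit p: Precision): Int = p.digits
}

object MemberLevel {
  // Member of an object: the type is written out.
  implicit val precision: Precision = Precision(2)

  def digits: Int = Rounding.digitsInUse
}

object MethodLocal {
  def digits: Int = {
    // Local to a method body: the type is left to inference.
    implicit val precision = Precision(4)
    Rounding.digitsInUse
  }
}
```

Note that a definition typed at the REPL prompt is not local to a method, which may explain why the REPL session quoted above reports an error.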

Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1401534260

##########

mllib/src/main/scala/org/apache/spark/mllib/clustering/BisectingKMeansModel.scala:

##########

@@ -188,7 +188,7 @@ object BisectingKMeansModel extends Loader[BisectingKMeansModel] {

}

def load(sc: SparkContext, path: String): BisectingKMeansModel = {

- implicit val formats: DefaultFormats = DefaultFormats

Review Comment:

reverted

Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1384639064

##########

project/SparkBuild.scala:

##########

@@ -247,8 +247,6 @@ object SparkBuild extends PomBuild {

"-Wconf:cat=deprecation&msg=Auto-application to \\`\\(\\)\\` is deprecated&site=org.apache.spark.streaming.kafka010.KafkaRDDSuite:s",

// SPARK-35574 Prevent the recurrence of compilation warnings related to `procedure syntax is deprecated`

"-Wconf:cat=deprecation&msg=procedure syntax is deprecated:e",

- // SPARK-40497 Upgrade Scala to 2.13.11 and suppress `Implicit definition should have explicit type`

- "-Wconf:msg=Implicit definition should have explicit type:s",

Review Comment:

done

##########

core/src/main/scala/org/apache/spark/resource/ResourceInformation.scala:

##########

@@ -69,7 +69,7 @@ private[spark] object ResourceInformation {

* Parses a JSON string into a [[ResourceInformation]] instance.

*/

def parseJson(json: String): ResourceInformation = {

- implicit val formats = DefaultFormats

+ implicit val formats: DefaultFormats.type = DefaultFormats

Review Comment:

done

Re: [PR] [SPARK-45629]Fix `Implicit definition should have explicit type` [spark]

Posted by "LuciferYang (via GitHub)" <gi...@apache.org>.

LuciferYang commented on PR #43526:

URL: https://github.com/apache/spark/pull/43526#issuecomment-1793338970

@laglangyue Additionally, the GitHub Actions (GA) tests have not passed yet; we need to make all of them pass.

Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "LuciferYang (via GitHub)" <gi...@apache.org>.

LuciferYang commented on PR #43526:

URL: https://github.com/apache/spark/pull/43526#issuecomment-1815875474

Thank you very much @laglangyue , I will review this PR as soon as possible

> > I created a new project and tested the code below with `sbt compile`, and it does not cause an error. Why does it cause an error for Spark? I also tested it in Scala 3; it also compiles successfully, with no warnings. Admittedly, according to the Scala 3 community, it is recommended to use `using` and `given` instead of implicits, while implicits are retained for now and will be removed in the future. I don't know how to compile Spark for Scala 3. Should I replace the Scala-related dependencies and compile?

>

> ```scala

> Welcome to Scala 3.3.1 (17.0.8, Java OpenJDK 64-Bit Server VM).

> Type in expressions for evaluation. Or try :help.

>

> scala> implicit val ix = 1

> -- Error: ----------------------------------------------------------------------

> 1 |implicit val ix = 1

> | ^

> | type of implicit definition needs to be given explicitly

> 1 error found

> ```

>

> But why does the above statement report an error in Scala 3.3.1?

Additionally, if this is confirmed, we should mention this in the pr description.

also cc @srowen FYI

Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1401536527

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/HDFSMetadataLog.scala:

##########

@@ -47,16 +46,16 @@ import org.apache.spark.util.ArrayImplicits._

* Note: [[HDFSMetadataLog]] doesn't support S3-like file systems as they don't guarantee listing

* files in a directory always shows the latest files.

*/

-class HDFSMetadataLog[T <: AnyRef : ClassTag](sparkSession: SparkSession, path: String)

- extends MetadataLog[T] with Logging {

-

- private implicit val formats = Serialization.formats(NoTypeHints)

+class HDFSMetadataLog[T <: AnyRef: ClassTag](sparkSession: SparkSession, path: String)(

+ private final implicit val manifest: Manifest[T])

+ extends MetadataLog[T]

+ with Logging {

- /** Needed to serialize type T into JSON when using Jackson */

- private implicit val manifest = Manifest.classType[T](implicitly[ClassTag[T]].runtimeClass)

+ private implicit val formats: Formats = Serialization.formats(NoTypeHints)

// Avoid serializing generic sequences, see SPARK-17372

- require(implicitly[ClassTag[T]].runtimeClass != classOf[Seq[_]],

+ require(

Review Comment:

reverted

Re: [PR] [WIP][SPARK-45629]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on PR #43526:

URL: https://github.com/apache/spark/pull/43526#issuecomment-1788391495

Hi, brother

@LuciferYang

How can I reproduce this? I would like to check if I have made all the necessary modifications

```shell

[error] /Users/yangjie01/SourceCode/git/spark-mine-sbt/core/src/main/scala/org/apache/spark/deploy/FaultToleranceTest.scala:343:16: Implicit definition should have explicit type (inferred org.json4s.DefaultFormats.type) [quickfixable]

[error] Applicable -Wconf / @nowarn filters for this fatal warning: msg=<part of the message>, cat=other-implicit-type, site=org.apache.spark.deploy.TestMasterInfo.formats

[error] implicit val formats = org.json4s.DefaultFormats

[error]

```

Re: [PR] [WIP][SPARK-45629]Fix `Implicit definition should have explicit type` [spark]

Posted by "LuciferYang (via GitHub)" <gi...@apache.org>.

LuciferYang commented on PR #43526:

URL: https://github.com/apache/spark/pull/43526#issuecomment-1788436569

https://github.com/apache/spark/blob/e1bc48b729e40390a4b0f977eec4a9050c7cac77/project/SparkBuild.scala#L251

@laglangyue You can delete the line above and then compile with

```

build/sbt -Phadoop-3 -Pdocker-integration-tests -Pspark-ganglia-lgpl -Pkinesis-asl -Pkubernetes -Phive-thriftserver -Pconnect -Pyarn -Phive -Phadoop-cloud -Pvolcano -Pkubernetes-integration-tests Test/package streaming-kinesis-asl-assembly/assembly connect/assembly

```

If no further compilation errors occur, we can consider the task completed.
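
For a standalone sbt project, the equivalent setting could be sketched as follows. This is an assumption for illustration, not Spark's build definition, which configures these options in `project/SparkBuild.scala`. The `msg=` filter is the one from the removed `SparkBuild.scala` line, and `cat=other-implicit-type` is the category named in the compiler output quoted earlier in this thread; `:s` silences the warning and `:e` raises it to an error.

```scala
// build.sbt fragment (sketch, not Spark's actual build definition)

// Suppress the warning with a message filter:
// scalacOptions += "-Wconf:msg=Implicit definition should have explicit type:s"

// Or fail the build on it once all implicit definitions are annotated:
scalacOptions += "-Wconf:cat=other-implicit-type:e"
```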

Re: [PR] [WIP][SPARK-45629]Fix `Implicit definition should have explicit type` [spark]

Posted by "LuciferYang (via GitHub)" <gi...@apache.org>.

LuciferYang commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1372535480

##########

connector/kafka-0-10-sql/src/main/scala/org/apache/spark/sql/kafka010/JsonUtils.scala:

##########

@@ -65,9 +68,9 @@ private object JsonUtils {

def partitionOffsets(str: String): Map[TopicPartition, Long] = {

try {

Serialization.read[Map[String, Map[Int, Long]]](str).flatMap { case (topic, partOffsets) =>

- partOffsets.map { case (part, offset) =>

- new TopicPartition(topic, part) -> offset

- }

+ partOffsets.map { case (part, offset) =>

Review Comment:

Currently, only the connect-related modules are required to use scalafmt.

Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "LuciferYang (via GitHub)" <gi...@apache.org>.

LuciferYang commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1402002175

##########

sql/core/src/test/scala/org/apache/spark/sql/streaming/StreamingJoinSuite.scala:

##########

@@ -541,7 +542,7 @@ class StreamingInnerJoinSuite extends StreamingJoinSuite {

Utils.createDirectory(tempDir.getAbsolutePath, Random.nextFloat().toString).toString

val stateInfo = StatefulOperatorStateInfo(path, queryId, opId, 0L, 5)

- implicit val sqlContext = spark.sqlContext

+ implicit val sqlContext: SQLContext = spark.sqlContext

val coordinatorRef = sqlContext.streams.stateStoreCoordinator

Review Comment:

How about removing line 545 and changing line 546 to

```scala

val coordinatorRef = spark.streams.stateStoreCoordinator

```

Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on PR #43526:

URL: https://github.com/apache/spark/pull/43526#issuecomment-1829597139

> Is this one ready to review?

yes.

Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "LuciferYang (via GitHub)" <gi...@apache.org>.

LuciferYang commented on PR #43526:

URL: https://github.com/apache/spark/pull/43526#issuecomment-1829248800

I think we can just retry the failed task

Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1389149886

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/HDFSMetadataLog.scala:

##########

@@ -49,10 +49,11 @@ import org.apache.spark.sql.internal.SQLConf

class HDFSMetadataLog[T <: AnyRef : ClassTag](sparkSession: SparkSession, path: String)

extends MetadataLog[T] with Logging {

- private implicit val formats = Serialization.formats(NoTypeHints)

+ private implicit val formats: Formats = Serialization.formats(NoTypeHints)

/** Needed to serialize type T into JSON when using Jackson */

- private implicit val manifest = Manifest.classType[T](implicitly[ClassTag[T]].runtimeClass)

+ private implicit val manifest: Manifest[T] =

Review Comment:

The log is:

```shell

java.lang.ClassCastException: class [Ljava.lang.Object; cannot be cast to class [Lorg.apache.spark.sql.execution.streaming.FileStreamSource$FileEntry; ([Ljava.lang.Object; is in module java.base of loader 'bootstrap'; [Lorg.apache.spark.sql.execution.streaming.FileStreamSource$FileEntry; is in unnamed module of loader 'app')

at org.apache.spark.sql.execution.streaming.FileStreamSourceLog.restore(FileStreamSourceLog.scala:119)

at org.apache.spark.sql.execution.streaming.FileStreamSource.<init>(FileStreamSource.scala:117)

at org.apache.spark.sql.execution.datasources.DataSource.createSource(DataSource.scala:303)

at org.apache.spark.sql.execution.streaming.MicroBatchExecution$$anonfun$1.$anonfun$applyOrElse$1(MicroBatchExecution.scala:124)

at scala.collection.mutable.HashMap.getOrElseUpdate(HashMap.scala:469)

at org.apache.spark.sql.execution.streaming.MicroBatchExecution$$anonfun$1.applyOrElse(MicroBatchExecution.scala:121)

at org.apache.spark.sql.execution.streaming.MicroBatchExecution$$anonfun$1.applyOrElse(MicroBatchExecution.scala:119)

```
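
As a hedged illustration of how this kind of exception can arise in general (not a diagnosis of the Spark failure above), the sketch below builds an array through a `ClassTag` that describes `Object` rather than the real element type. `ErasedArrayDemo`, `collect`, and `objectTag` are hypothetical names.

```scala
import scala.reflect.ClassTag
import scala.util.Try

object ErasedArrayDemo {
  // Builds an array whose runtime element class comes from the ClassTag.
  def collect[T](items: Seq[T])(implicit ct: ClassTag[T]): Array[T] = items.toArray

  // Deliberately wrong tag: it describes Object but is typed as ClassTag[String].
  private val objectTag: ClassTag[String] =
    ClassTag.AnyRef.asInstanceOf[ClassTag[String]]

  // The array created by `collect` is an Object[]; binding it to a value
  // of type Array[String] inserts the cast that fails at runtime.
  val attempt: Try[Int] = Try {
    val strings: Array[String] = collect[String](Seq("a", "b"))(objectTag)
    strings.length
  }
}
```

The elements themselves are valid strings; only the array's runtime class is wrong, which is why the failure shows up as an array cast.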

Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "LuciferYang (via GitHub)" <gi...@apache.org>.

LuciferYang commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1408707826

##########

core/src/main/scala/org/apache/spark/deploy/StandaloneResourceUtils.scala:

##########

@@ -23,7 +23,7 @@ import java.nio.file.Files

import scala.collection.mutable

import scala.util.control.NonFatal

-import org.json4s.{DefaultFormats, Extraction}

+import org.json4s._

Review Comment:

Hmm... it seems this has been reverted again?

Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "LuciferYang (via GitHub)" <gi...@apache.org>.

LuciferYang commented on PR #43526:

URL: https://github.com/apache/spark/pull/43526#issuecomment-1822026592

> > Thank you very much @laglangyue , I will review this PR as soon as possible

> > > > I created a new project and tested the code below with `sbt compile`, and it did not produce an error, so why does it cause an error in Spark? I also tested it with Scala 3; it compiled successfully as well, with no warnings. According to the Scala 3 community, it is recommended to use `using` and `given` instead of implicits; implicits are retained for now and will be removed in the future. I don't know how to compile Spark for Scala 3. Should I replace the Scala-related dependencies and then compile?

> > >

> > >

> > > ```scala

> > > Welcome to Scala 3.3.1 (17.0.8, Java OpenJDK 64-Bit Server VM).

> > > Type in expressions for evaluation. Or try :help.

> > >

> > > scala> implicit val ix = 1

> > > -- Error: ----------------------------------------------------------------------

> > > 1 |implicit val ix = 1

> > > | ^

> > > | type of implicit definition needs to be given explicitly

> > > 1 error found

> > > ```

> > >

> > > But why does the above statement report an error in Scala 3.3.1?

> >

> >

> > Additionally, if this is confirmed, we should mention this in the pr description.

> > also cc @srowen FYI

>

> It is OK to declare an implicit within a method without a type annotation, but not for global variables.

>

>

So, was it my testing approach in the Scala shell that was incorrect, or is there another reason we came to different conclusions?
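
For reference, a minimal sketch of the distinction being discussed, assuming Scala 3 rules: an implicit that is a member of a class or object needs an explicit type, while one declared inside a method body may keep an inferred type. Definitions typed at the REPL prompt are wrapped as members, which may be why the shell rejects `implicit val ix = 1`. The names `Timeout`, `Describe`, `MemberLevel`, and `LocalLevel` are hypothetical and do not come from the PR.

```scala
// Hypothetical types used only for illustration.
final case class Timeout(millis: Long)

object Describe {
  // Consumes whichever implicit Timeout is in scope at the call site.
  def apply()(implicit t: Timeout): String = s"timeout=${t.millis}ms"
}

object MemberLevel {
  // Member of an object: the type is written out explicitly.
  // Dropping ": Timeout" here is what triggers the warning/error.
  implicit val timeout: Timeout = Timeout(1000L)

  def run(): String = Describe()
}

object LocalLevel {
  def run(): String = {
    // Local to a method body: the type may still be inferred.
    implicit val timeout = Timeout(5L)
    Describe()
  }
}
```

Both objects compile with the same call to `Describe()`; only the member-level definition carries the annotation.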


Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "LuciferYang (via GitHub)" <gi...@apache.org>.

LuciferYang commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1401762130

##########

mllib/src/main/scala/org/apache/spark/mllib/clustering/BisectingKMeansModel.scala:

##########

@@ -188,7 +188,7 @@ object BisectingKMeansModel extends Loader[BisectingKMeansModel] {

}

def load(sc: SparkContext, path: String): BisectingKMeansModel = {

- implicit val formats: DefaultFormats = DefaultFormats

Review Comment:

It seems this file still has two more cases that need to be reverted?


Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "LuciferYang (via GitHub)" <gi...@apache.org>.

LuciferYang commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1401986588

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/HDFSMetadataLog.scala:

##########

@@ -47,16 +46,16 @@ import org.apache.spark.util.ArrayImplicits._

* Note: [[HDFSMetadataLog]] doesn't support S3-like file systems as they don't guarantee listing

* files in a directory always shows the latest files.

*/

-class HDFSMetadataLog[T <: AnyRef : ClassTag](sparkSession: SparkSession, path: String)

- extends MetadataLog[T] with Logging {

-

- private implicit val formats = Serialization.formats(NoTypeHints)

+class HDFSMetadataLog[T <: AnyRef: ClassTag](sparkSession: SparkSession, path: String)(

+ private final implicit val manifest: Manifest[T])

Review Comment:

Understood, but I don't want to change the definitions of these two classes for this purpose in this pr. Perhaps we can use `scala.annotation.nowarn` in this PR to suppress these two cases in a fine-grained way, and in the future, we can replace `json4s` with the native Jackson API, as using the Jackson API should avoid the use of `manifests`.
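
The fine-grained suppression suggested above could look roughly like this under Scala 2.13. `MetadataHolder` is a hypothetical stand-in for the two affected classes, not code from the PR; the inferred type on `manifest` is kept on purpose, and `@nowarn` silences warnings for that one definition only.

```scala
import scala.annotation.nowarn
import scala.reflect.ClassTag

// Hypothetical stand-in for a class such as HDFSMetadataLog (Scala 2.13).
class MetadataHolder[T <: AnyRef : ClassTag] {

  // The type is deliberately left inferred, as in the original code.
  // @nowarn applies to this definition only, not to the whole file.
  @nowarn
  private implicit val manifest =
    Manifest.classType[T](implicitly[ClassTag[T]].runtimeClass)

  // Exposes the captured runtime class so the behavior can be checked.
  def runtimeClassName: String = manifest.runtimeClass.getName
}
```

This keeps the class signature unchanged, at the cost of leaving the untyped implicit in place until the `json4s` usage is replaced.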


Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1389154774

##########

core/src/main/scala/org/apache/spark/executor/CoarseGrainedExecutorBackend.scala:

##########

@@ -60,7 +60,7 @@ private[spark] class CoarseGrainedExecutorBackend(

import CoarseGrainedExecutorBackend._

- private implicit val formats = DefaultFormats

+ private implicit val formats: DefaultFormats.type = DefaultFormats

Review Comment:

Yes, removed.


Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on PR #43526:

URL: https://github.com/apache/spark/pull/43526#issuecomment-1808025083

Hi @LuciferYang, do you have any ideas?

In `org.apache.spark.sql.execution.streaming.CompactibleFileStreamLog#allFiles()`,

the `Array.empty` that the method returns is an `Array[Object]` rather than an `Array[FileEntry]`.

The `ClassTag` is null, which means the implicit type cannot be found from the context:

along the `FileStreamSourceLog -> CompactibleFileStreamLog` chain, the `ClassTag` cannot be found when the type parameter is passed through.

I hope to get some help.


Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1402950365

##########

sql/core/src/test/scala/org/apache/spark/sql/streaming/StreamingJoinSuite.scala:

##########

@@ -541,7 +542,7 @@ class StreamingInnerJoinSuite extends StreamingJoinSuite {

Utils.createDirectory(tempDir.getAbsolutePath, Random.nextFloat().toString).toString

val stateInfo = StatefulOperatorStateInfo(path, queryId, opId, 0L, 5)

- implicit val sqlContext = spark.sqlContext

+ implicit val sqlContext: SQLContext = spark.sqlContext

val coordinatorRef = sqlContext.streams.stateStoreCoordinator

Review Comment:

Well, maybe. For local variables we don't need an explicit type, so I reverted some of the changes. In this case, simply removing line 545 works. What do you think?


Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1402951709

##########

examples/src/main/scala/org/apache/spark/examples/sql/SparkSQLExample.scala:

##########

@@ -220,7 +220,8 @@ object SparkSQLExample {

// +------------+

// No pre-defined encoders for Dataset[Map[K,V]], define explicitly

- implicit val mapEncoder = org.apache.spark.sql.Encoders.kryo[Map[String, Any]]

+ implicit val mapEncoder: Encoder[Map[String, Any]] =

Review Comment:

Done.


Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1408655902

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/FileStreamSourceLog.scala:

##########

@@ -36,8 +33,8 @@ class FileStreamSourceLog(

path: String)

extends CompactibleFileStreamLog[FileEntry](metadataLogVersion, sparkSession, path) {

- import CompactibleFileStreamLog._

- import FileStreamSourceLog._

+ import org.apache.spark.sql.execution.streaming.CompactibleFileStreamLog._

Review Comment:

This was probably changed by IDEA. I have reverted it.


Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on PR #43526:

URL: https://github.com/apache/spark/pull/43526#issuecomment-1822095997

> > > Thank you very much @laglangyue , I will review this PR as soon as possible

> > > > > I created a new project and tested the code below with `sbt compile`, and it did not produce an error, so why does it cause an error in Spark? I also tested it with Scala 3; it compiled successfully as well, with no warnings. According to the Scala 3 community, it is recommended to use `using` and `given` instead of implicits; implicits are retained for now and will be removed in the future. I don't know how to compile Spark for Scala 3. Should I replace the Scala-related dependencies and then compile?

> > > >

> > > >

> > > > ```scala

> > > > Welcome to Scala 3.3.1 (17.0.8, Java OpenJDK 64-Bit Server VM).

> > > > Type in expressions for evaluation. Or try :help.

> > > >

> > > > scala> implicit val ix = 1

> > > > -- Error: ----------------------------------------------------------------------

> > > > 1 |implicit val ix = 1

> > > > | ^

> > > > | type of implicit definition needs to be given explicitly

> > > > 1 error found

> > > > ```

> > > >

> > > > But why does the above statement report an error in Scala 3.3.1?

> > >

> > >

> > > Additionally, if this is confirmed, we should mention this in the pr description.

> > > also cc @srowen FYI

> >

> >

> > It is OK to declare an implicit within a method without a type annotation, but not for global variables.

> >

>

> So, was it my testing approach in the Scala shell that was incorrect, or is there another reason we came to different conclusions?


Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1401554599

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/HDFSMetadataLog.scala:

##########

@@ -47,16 +46,16 @@ import org.apache.spark.util.ArrayImplicits._

* Note: [[HDFSMetadataLog]] doesn't support S3-like file systems as they don't guarantee listing

* files in a directory always shows the latest files.

*/

-class HDFSMetadataLog[T <: AnyRef : ClassTag](sparkSession: SparkSession, path: String)

- extends MetadataLog[T] with Logging {

-

- private implicit val formats = Serialization.formats(NoTypeHints)

+class HDFSMetadataLog[T <: AnyRef: ClassTag](sparkSession: SparkSession, path: String)(

+ private final implicit val manifest: Manifest[T])

Review Comment:

Talk is cheap, show the code:

The manifest is used by Json4s for deserialization.


Re: [PR] [WIP][SPARK-45629]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1372512228

##########

connector/kafka-0-10-sql/src/main/scala/org/apache/spark/sql/kafka010/JsonUtils.scala:

##########

@@ -65,9 +68,9 @@ private object JsonUtils {

def partitionOffsets(str: String): Map[TopicPartition, Long] = {

try {

Serialization.read[Map[String, Map[Int, Long]]](str).flatMap { case (topic, partOffsets) =>

- partOffsets.map { case (part, offset) =>

- new TopicPartition(topic, part) -> offset

- }

+ partOffsets.map { case (part, offset) =>

Review Comment:

I used dev/scalafmt because there were quite a few modified files, but it seems that this tool is not suitable for old code. I will roll this back.


Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1389148448

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/HDFSMetadataLog.scala:

##########

@@ -49,10 +49,11 @@ import org.apache.spark.sql.internal.SQLConf

class HDFSMetadataLog[T <: AnyRef : ClassTag](sparkSession: SparkSession, path: String)

extends MetadataLog[T] with Logging {

- private implicit val formats = Serialization.formats(NoTypeHints)

+ private implicit val formats: Formats = Serialization.formats(NoTypeHints)

/** Needed to serialize type T into JSON when using Jackson */

- private implicit val manifest = Manifest.classType[T](implicitly[ClassTag[T]].runtimeClass)

+ private implicit val manifest: Manifest[T] =

Review Comment:

Could it be causing this error?

class [Ljava.lang.Object; cannot be cast to class [Lorg.apache.spark.sql.execution.streaming.FileStreamSource$FileEntry; ([Ljava.lang.Object;


Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "LuciferYang (via GitHub)" <gi...@apache.org>.

LuciferYang commented on PR #43526:

URL: https://github.com/apache/spark/pull/43526#issuecomment-1832530609

@laglangyue Could you modify the description of this PR again? Need to focus on the description of the `Why are the changes needed?` part.

In addition to fixing a compilation warning in Scala 2.13, you may need to summarize the key points in https://github.com/apache/spark/pull/43526#issuecomment-1822095997, and add it to the pr description so that the changes in this pr can be considered necessary. Note, this is just an example, if you have a more solid reason to describe `Why are the changes needed?`, that would be better.


Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on PR #43526:

URL: https://github.com/apache/spark/pull/43526#issuecomment-1798457027

> Can you update the pr title to add the corresponding module label? Can you update the pr description to specifically explain why this work needs to be done (I don't think the subtask of [SPARK-45314](https://issues.apache.org/jira/browse/SPARK-45314) is a rigorous reason)? Also, is it still a compile warning in Scala 3, or will it turn into a compile error?

I created a new project and tested the code below with `sbt compile`, and it did not produce an error, so why does it cause an error in Spark?

I also tested it with Scala 3; it compiled successfully as well, with no warnings. According to the Scala 3 community, it is recommended to use `using` and `given` instead of implicits; implicits are retained for now and will be removed in the future.

I don't know how to compile Spark for Scala 3. Should I replace the Scala-related dependencies and then compile?


Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1384643072

##########

sql/hive-thriftserver/src/test/scala/org/apache/spark/sql/hive/thriftserver/HiveThriftServer2Suites.scala:

##########

@@ -441,7 +441,7 @@ class HiveThriftBinaryServerSuite extends HiveThriftServer2Test {

s"LOAD DATA LOCAL INPATH '${TestData.smallKv}' OVERWRITE INTO TABLE test_map")

queries.foreach(statement.execute)

- implicit val ec = ExecutionContext.fromExecutorService(

+ implicit val ec: ExecutionContextExecutorService = ExecutionContext.fromExecutorService(

Review Comment:

No, `ExecutionContext` does not have the `shutdownNow()` method (used here as `ec.shutdownNow()`).
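
A minimal sketch of why the narrower type matters here, using only the Scala and Java standard libraries. `EcDemo` is a hypothetical name and the thread-pool size is an arbitrary choice; the point is that `shutdownNow()` comes from the `ExecutorService` side of `ExecutionContextExecutorService`, so annotating the value as plain `ExecutionContext` would not compile.

```scala
import java.util.concurrent.Executors

import scala.concurrent.{Await, ExecutionContext, ExecutionContextExecutorService, Future}
import scala.concurrent.duration._

object EcDemo {
  def run(): Int = {
    // Annotated with the service type so that shutdownNow() stays available.
    implicit val ec: ExecutionContextExecutorService =
      ExecutionContext.fromExecutorService(Executors.newFixedThreadPool(2))
    try {
      // The Future picks up `ec` implicitly.
      Await.result(Future(21 * 2), 10.seconds)
    } finally {
      // Not a member of ExecutionContext; requires the service type.
      ec.shutdownNow()
    }
  }
}
```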


Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1389187153

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/HDFSMetadataLog.scala:

##########

@@ -49,10 +49,11 @@ import org.apache.spark.sql.internal.SQLConf

class HDFSMetadataLog[T <: AnyRef : ClassTag](sparkSession: SparkSession, path: String)

extends MetadataLog[T] with Logging {

- private implicit val formats = Serialization.formats(NoTypeHints)

+ private implicit val formats: Formats = Serialization.formats(NoTypeHints)

/** Needed to serialize type T into JSON when using Jackson */

- private implicit val manifest = Manifest.classType[T](implicitly[ClassTag[T]].runtimeClass)

+ private implicit val manifest: Manifest[T] =

Review Comment:

See FileMetadataStructSuite for reference:

I think this is caused by the loss of generic type information. I have been learning Scala reflection and the related code these days.

org.apache.spark.sql.execution.streaming.CompactibleFileStreamLog#allFiles

The method returns `Array.empty`, which loses `T`.


Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on PR #43526:

URL: https://github.com/apache/spark/pull/43526#issuecomment-1809580426

@LuciferYang

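I wrote a test case for this:

```scala
import java.io.File
import scala.reflect.ClassTag

class Parent[T <: AnyRef: ClassTag] {
  private implicit val manifest: Manifest[T] = {
    val classTag = implicitly[ClassTag[T]]
    // Fall back to AnyRef when the resolved ClassTag is null (the case seen above).
    if (classTag == null) {
      Manifest.classType[T](classOf[AnyRef])
    } else {
      Manifest.classType[T](classTag.runtimeClass)
    }
  }

  def hello(): Array[T] = {
    println(manifest)
    Array.empty
  }
}

// Subclass that fixes T to File, mirroring FileStreamSourceLog -> CompactibleFileStreamLog.
class Sun extends Parent[File] {
  def test(): Array[File] = {
    hello()
  }
}
```

Using `implicit val manifest =` (without the explicit type) works fine.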
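
One possible explanation, stated as an assumption rather than a confirmed diagnosis: once `manifest` is declared as `Manifest[T]`, it is itself an implicit `ClassTag[T]` candidate (a `Manifest` is a `ClassTag`), so `implicitly[ClassTag[T]]` inside its own initializer may resolve to the not yet initialized `manifest` and yield null. A sketch for Scala 2.13 that sidesteps implicit search by naming the evidence parameter; `Holder` and `ct` are hypothetical names, not code from the PR:

```scala
import scala.reflect.ClassTag

// Hypothetical stand-in for a class such as HDFSMetadataLog (Scala 2.13).
class Holder[T <: AnyRef](implicit ct: ClassTag[T]) {

  // Built from the named evidence `ct`, so no implicit search runs
  // inside the initializer of `manifest`.
  private implicit val manifest: Manifest[T] =
    Manifest.classType[T](ct.runtimeClass)

  def runtimeClassName: String = manifest.runtimeClass.getName

  // The ClassTag is passed explicitly, so the element type of the array
  // does not depend on which implicit the compiler would prefer.
  def empty(): Array[T] = Array.empty[T](ct)
}
```

Note that this changes the class signature from a context bound to a named implicit parameter, which is the kind of change the reviewer preferred to avoid in this PR.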


Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "LuciferYang (via GitHub)" <gi...@apache.org>.

LuciferYang commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1389175179

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/HDFSMetadataLog.scala:

##########

@@ -49,10 +49,11 @@ import org.apache.spark.sql.internal.SQLConf

class HDFSMetadataLog[T <: AnyRef : ClassTag](sparkSession: SparkSession, path: String)

extends MetadataLog[T] with Logging {

- private implicit val formats = Serialization.formats(NoTypeHints)

+ private implicit val formats: Formats = Serialization.formats(NoTypeHints)

/** Needed to serialize type T into JSON when using Jackson */

- private implicit val manifest = Manifest.classType[T](implicitly[ClassTag[T]].runtimeClass)

+ private implicit val manifest: Manifest[T] =

Review Comment:

I'm not sure, I didn't run all the tests


Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1401534482

##########

core/src/test/scala/org/apache/spark/SparkContextSuite.scala:

##########

@@ -32,7 +32,7 @@ import org.apache.hadoop.io.{BytesWritable, LongWritable, Text}

import org.apache.hadoop.mapred.TextInputFormat

import org.apache.hadoop.mapreduce.lib.input.{TextInputFormat => NewTextInputFormat}

import org.apache.logging.log4j.{Level, LogManager}

-import org.json4s.{DefaultFormats, Extraction}

+import org.json4s._

Review Comment:

Modified.


Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1401534708

##########

core/src/test/scala/org/apache/spark/deploy/worker/WorkerSuite.scala:

##########

@@ -23,7 +23,7 @@ import java.util.function.Supplier

import scala.concurrent.duration._

-import org.json4s.{DefaultFormats, Extraction}

+import org.json4s._

Review Comment:

done


Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on PR #43526:

URL: https://github.com/apache/spark/pull/43526#issuecomment-1829014448

@LuciferYang there is a CI error, and I don't know why.


Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "LuciferYang (via GitHub)" <gi...@apache.org>.

LuciferYang commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1407936648

##########

core/src/main/scala/org/apache/spark/deploy/StandaloneResourceUtils.scala:

##########

@@ -23,7 +23,7 @@ import java.nio.file.Files

import scala.collection.mutable

import scala.util.control.NonFatal

-import org.json4s.{DefaultFormats, Extraction}

+import org.json4s._

Review Comment:

```suggestion

import org.json4s.{DefaultFormats, Extraction, Formats}

```
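For context, the `Formats` import matters because this PR gives each implicit `formats` value an explicit type. The sketch below shows that pattern using hypothetical stand-in types: `Formats`, `DefaultFormats`, and `render` are defined locally for illustration and are not the json4s API.

```scala
object ExplicitImplicitTypeSketch {
  // Hypothetical stand-ins, defined here only for illustration (not json4s).
  trait Formats { def name: String }
  object DefaultFormats extends Formats { val name: String = "default" }

  // Explicit type: the implicit is published as `Formats`.
  // Without the annotation the compiler would infer the narrower
  // singleton type `DefaultFormats.type`, which is the kind of
  // definition the warning in this PR's title is about.
  implicit val formats: Formats = DefaultFormats

  // Any method that needs a `Formats` picks up the implicit above.
  def render(value: String)(implicit f: Formats): String = s"${f.name}:$value"

  def main(args: Array[String]): Unit =
    println(render("x"))
}
```

Naming only the identifiers that are used, as in the suggestion, keeps the import list explicit while still bringing the annotated type into scope.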

Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "LuciferYang (via GitHub)" <gi...@apache.org>.

LuciferYang commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1407950170

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/HDFSMetadataLog.scala:

##########

@@ -50,9 +51,10 @@ import org.apache.spark.util.ArrayImplicits._

class HDFSMetadataLog[T <: AnyRef : ClassTag](sparkSession: SparkSession, path: String)

extends MetadataLog[T] with Logging {

- private implicit val formats = Serialization.formats(NoTypeHints)

+ private implicit val formats: Formats = Serialization.formats(NoTypeHints)

/** Needed to serialize type T into JSON when using Jackson */

+ @nowarn

Review Comment:

```suggestion

@scala.annotation.nowarn

```

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/state/OperatorStateMetadata.scala:

##########

@@ -64,8 +65,9 @@ case class OperatorStateMetadataV1(

object OperatorStateMetadataV1 {

- private implicit val formats = Serialization.formats(NoTypeHints)

+ private implicit val formats: Formats = Serialization.formats(NoTypeHints)

+ @nowarn

Review Comment:

```suggestion

@scala.annotation.nowarn

```

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/CompactibleFileStreamLog.scala:

##########

@@ -49,9 +50,10 @@ abstract class CompactibleFileStreamLog[T <: AnyRef : ClassTag](

import CompactibleFileStreamLog._

- private implicit val formats = Serialization.formats(NoTypeHints)

+ private implicit val formats: Formats = Serialization.formats(NoTypeHints)

/** Needed to serialize type T into JSON when using Jackson */

+ @nowarn

Review Comment:

```suggestion

@scala.annotation.nowarn

```

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/CompactibleFileStreamLog.scala:

##########

@@ -49,9 +50,10 @@ abstract class CompactibleFileStreamLog[T <: AnyRef : ClassTag](

import CompactibleFileStreamLog._

- private implicit val formats = Serialization.formats(NoTypeHints)

+ private implicit val formats: Formats = Serialization.formats(NoTypeHints)

/** Needed to serialize type T into JSON when using Jackson */

+ @nowarn

Review Comment:

When this annotation is needed in only one place in the class, it is customary to use the fully qualified `@scala.annotation.nowarn` directly rather than adding an import for it.
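A minimal sketch of the convention described in this comment, assuming Scala 2.13.2 or later where `scala.annotation.nowarn` is available. `Legacy.oldApi` and `callOld` are hypothetical names used only for illustration.

```scala
object NowarnSketch {
  object Legacy {
    // Hypothetical deprecated method, present only so there is a warning to silence.
    @deprecated("hypothetical example method", "0.0.1")
    def oldApi(): Int = 1
  }

  // Fully qualified annotation: no `import scala.annotation.nowarn` is needed
  // when the annotation appears only once in the file.
  @scala.annotation.nowarn
  def callOld(): Int = Legacy.oldApi()

  def main(args: Array[String]): Unit =
    println(callOld())
}
```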

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/FileStreamSourceLog.scala:

##########

@@ -36,8 +33,8 @@ class FileStreamSourceLog(

path: String)

extends CompactibleFileStreamLog[FileEntry](metadataLogVersion, sparkSession, path) {

- import CompactibleFileStreamLog._

- import FileStreamSourceLog._

+ import org.apache.spark.sql.execution.streaming.CompactibleFileStreamLog._

Review Comment:

Why is it necessary to change to import with the full name here?

Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on PR #43526:

URL: https://github.com/apache/spark/pull/43526#issuecomment-1810216032

> > Hi @LuciferYang, do you have any ideas? In `org.apache.spark.sql.execution.streaming.CompactibleFileStreamLog#allFiles()`, `Array.empty` returns `Array[Object]` rather than `Array[FileEntry]`. The `ClassTag` is null, which means the implicit cannot be found from the context: along `FileStreamSourceLog -> CompactibleFileStreamLog`, the `ClassTag` is not found when the generic type is passed. I hope to get some help.

>

> A bit busy today and tomorrow, so I'll have to take a look at this issue later.

It's okay. I've already resolved this case. I've also discovered a new CI error, which I will resolve tomorrow.
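The `ClassTag` behaviour discussed in the quoted question can be sketched as follows. This is a simplified illustration, not the Spark code: `Entry` and `emptyOf` are hypothetical stand-ins, and the sketch only shows that the runtime element type of `Array.empty[T]` is decided by whichever `ClassTag` the compiler resolves.

```scala
import scala.reflect.ClassTag

object ClassTagSketch {
  // Hypothetical stand-in for Spark's FileEntry.
  final case class Entry(path: String)

  // The context bound makes the compiler pass the caller's ClassTag,
  // which decides the runtime element type of the new array.
  def emptyOf[T <: AnyRef : ClassTag]: Array[T] = Array.empty[T]

  def main(args: Array[String]): Unit = {
    val typed = emptyOf[Entry]     // array whose component type is Entry
    val untyped = emptyOf[AnyRef]  // array whose component type is Object
    println(typed.getClass.getComponentType)
    println(untyped.getClass.getComponentType)
  }
}
```

If the tag that reaches `Array.empty` describes `AnyRef` instead of the intended element type, the result is an `Object[]` at runtime, which matches the symptom described in the question.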

Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on code in PR #43526:

URL: https://github.com/apache/spark/pull/43526#discussion_r1401539215

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/CompactibleFileStreamLog.scala:

##########

@@ -140,7 +137,9 @@ abstract class CompactibleFileStreamLog[T <: AnyRef : ClassTag](

out.write(Serialization.write(entry).getBytes(UTF_8))

}

- private def deserializeEntry(line: String): T = Serialization.read[T](line)

+ private def deserializeEntry(line: String): T = {

Review Comment:

Reverted. This was a test of how to use `Manifest[T]`, and I forgot to revert it.

Re: [PR] [SPARK-45629][CORE][SQL][CONNECT][ML][STREAMING][BUILD][EXAMPLES]Fix `Implicit definition should have explicit type` [spark]

Posted by "LuciferYang (via GitHub)" <gi...@apache.org>.

LuciferYang commented on PR #43526:

URL: https://github.com/apache/spark/pull/43526#issuecomment-1822114074

Since Spark 4.0 currently does not support Scala 3, and it is not a compilation error in Scala 3, I think we can remove the part related to Scala 3 from the PR description.

Re: [PR] [SPARK-45629]Fix `Implicit definition should have explicit type` [spark]

Posted by "laglangyue (via GitHub)" <gi...@apache.org>.

laglangyue commented on PR #43526:

URL: https://github.com/apache/spark/pull/43526#issuecomment-1791953214

> https://github.com/apache/spark/blob/e1bc48b729e40390a4b0f977eec4a9050c7cac77/project/SparkBuild.scala#L251

>

> @laglangyue You can delete the above line and then compile with

>

> ```

> build/sbt -Phadoop-3 -Pdocker-integration-tests -Pspark-ganglia-lgpl -Pkinesis-asl -Pkubernetes -Phive-thriftserver -Pconnect -Pyarn -Phive -Phadoop-cloud -Pvolcano -Pkubernetes-integration-tests Test/package streaming-kinesis-asl-assembly/assembly connect/assembly

> ```

>

> If no further compilation errors occur, we can consider the task completed.