You are viewing a plain text version of this content. The canonical link for it is here.

Posted to commits@mxnet.apache.org by GitBox <gi...@apache.org> on 2022/08/23 13:14:14 UTC

[GitHub] [incubator-mxnet] anko-intel opened a new pull request, #21127: [DOC] Add tutotrial about improving accuracy of quantization with oneDNN

anko-intel opened a new pull request, #21127:

URL: https://github.com/apache/incubator-mxnet/pull/21127

## Description ##

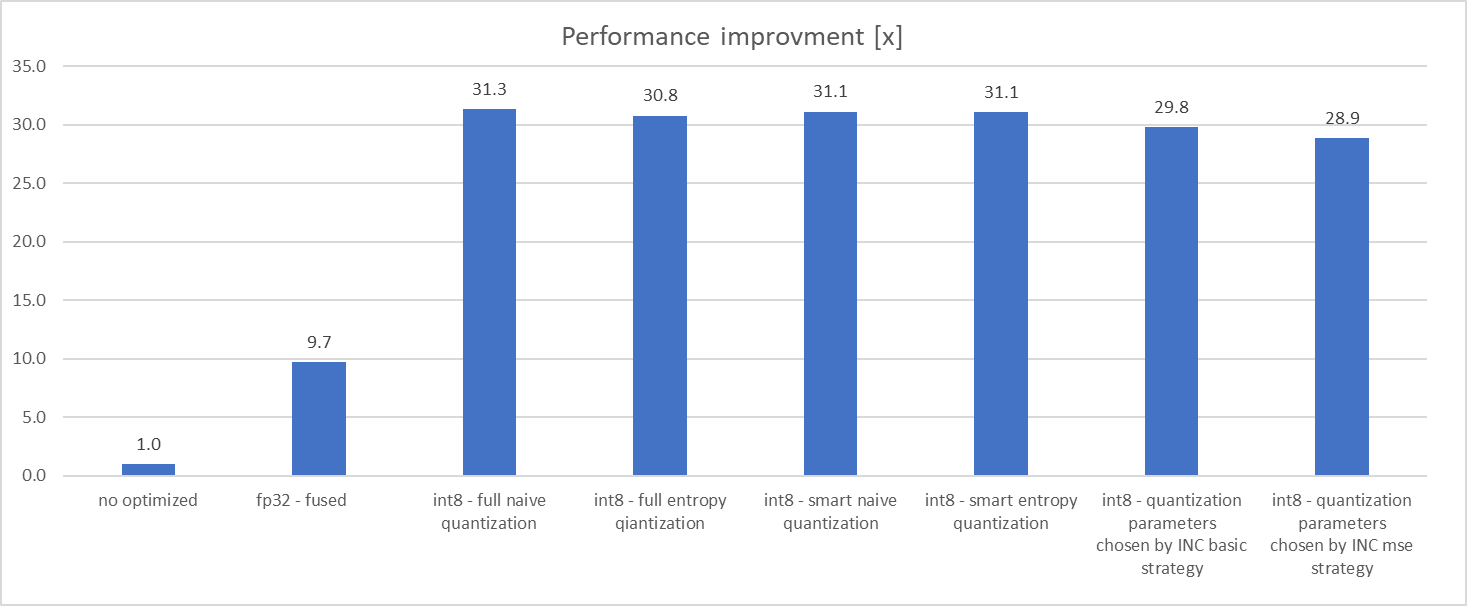

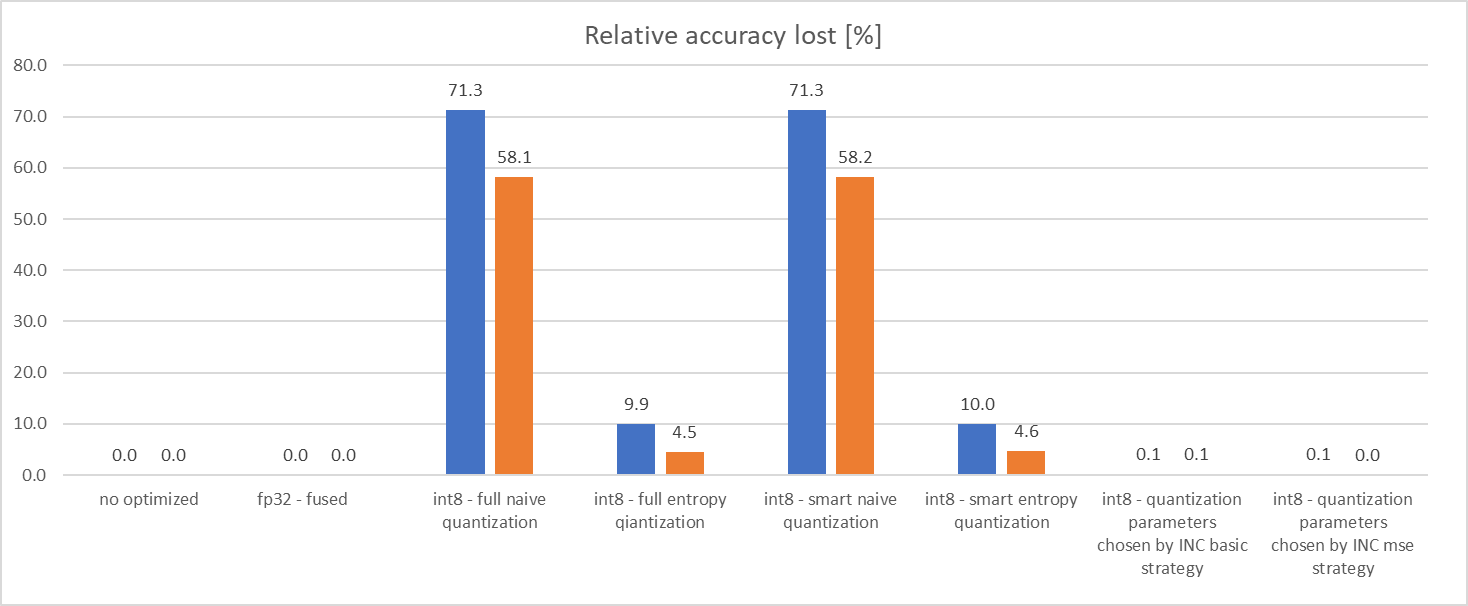

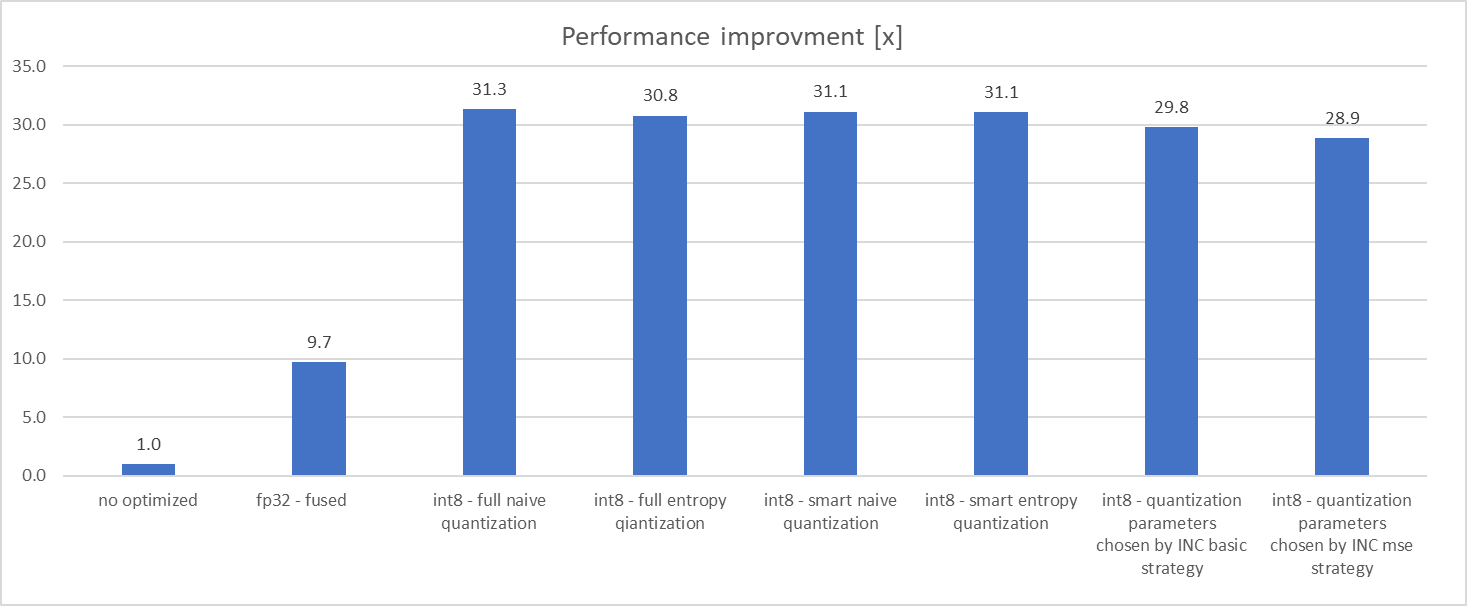

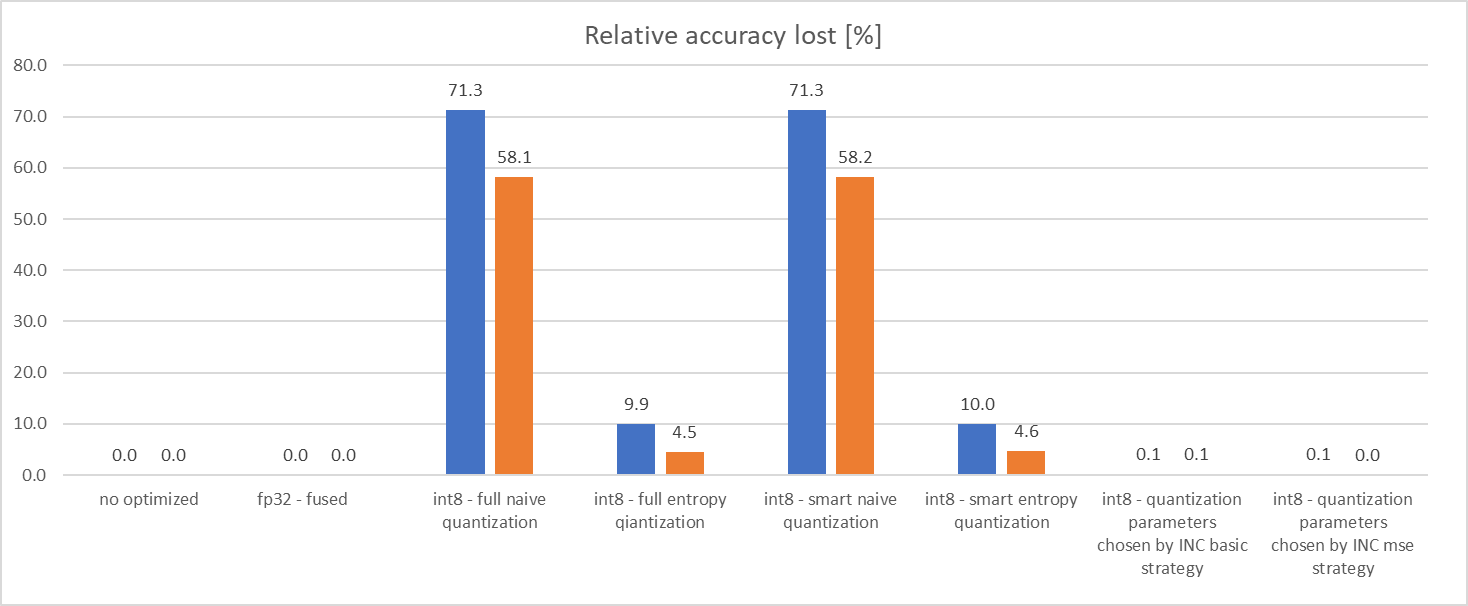

Added tutorial showing advantage of using INC with MXNet for quantization. It shows that INC can find operators mostly introduced loss of accuracy and eliminate it from quantization. This way partially quantized model achieves accuracy results almost the same as original floating point model, but with about 3 times performance improvement in comparison to optimized floating point model (or 30 times in comparison to not optimized floating point model)

## Checklist ##

### Essentials ###

- [ ] PR's title starts with a category (e.g. [BUGFIX], [MODEL], [TUTORIAL], [FEATURE], [DOC], etc)

- [ ] Changes are complete (i.e. I finished coding on this PR)

- [ ] All changes have test coverage

- [ ] Code is well-documented

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@mxnet.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-mxnet] mxnet-bot commented on pull request #21127: [DOC] Add tutotrial about improving accuracy of quantization with oneDNN

Posted by GitBox <gi...@apache.org>.

mxnet-bot commented on PR #21127:

URL: https://github.com/apache/incubator-mxnet/pull/21127#issuecomment-1224059750

Hey @anko-intel , Thanks for submitting the PR

All tests are already queued to run once. If tests fail, you can trigger one or more tests again with the following commands:

- To trigger all jobs: @mxnet-bot run ci [all]

- To trigger specific jobs: @mxnet-bot run ci [job1, job2]

***

**CI supported jobs**: [windows-cpu, website, centos-gpu, unix-gpu, windows-gpu, edge, sanity, miscellaneous, unix-cpu, clang, centos-cpu]

***

_Note_:

Only following 3 categories can trigger CI :PR Author, MXNet Committer, Jenkins Admin.

All CI tests must pass before the PR can be merged.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@mxnet.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-mxnet] bartekkuncer commented on a diff in pull request #21127: [DOC] Add tutotrial about improving accuracy of quantization with oneDNN

Posted by GitBox <gi...@apache.org>.

bartekkuncer commented on code in PR #21127:

URL: https://github.com/apache/incubator-mxnet/pull/21127#discussion_r954852841

##########

docs/python_docs/python/tutorials/performance/backend/dnnl/dnnl_quantization_inc.md:

##########

@@ -0,0 +1,290 @@

+<!--- Licensed to the Apache Software Foundation (ASF) under one -->

+<!--- or more contributor license agreements. See the NOTICE file -->

+<!--- distributed with this work for additional information -->

+<!--- regarding copyright ownership. The ASF licenses this file -->

+<!--- to you under the Apache License, Version 2.0 (the -->

+<!--- "License"); you may not use this file except in compliance -->

+<!--- with the License. You may obtain a copy of the License at -->

+

+<!--- http://www.apache.org/licenses/LICENSE-2.0 -->

+

+<!--- Unless required by applicable law or agreed to in writing, -->

+<!--- software distributed under the License is distributed on an -->

+<!--- "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY -->

+<!--- KIND, either express or implied. See the License for the -->

+<!--- specific language governing permissions and limitations -->

+<!--- under the License. -->

+

+

+# Improving accuracy with Intel® Neural Compressor

+

+The accuracy of a model can decrease as a result of quantization. When the accuracy drop is significant, we can try to manually find a better quantization configuration (exclude some layers, try different calibration methods, etc.), but for bigger models this might prove to be a difficult and time consuming task. [Intel® Neural Compressor](https://github.com/intel/neural-compressor) (INC) tries to automate this process using several tuning heuristics, which aim to find the quantization configuration that satisfies the specified accuracy requirement.

+

+**NOTE:**

+

+Most tuning strategies will try different configurations on an evaluation dataset in order to find out how each layer affects the accuracy of the model. This means that for larger models, it may take a long time to find a solution (as the tuning space is usually larger and the evaluation itself takes longer).

+

+## Installation and Prerequisites

+

+- Install MXNet with oneDNN enabled as described in the [Get started](https://mxnet.apache.org/versions/master/get_started?platform=linux&language=python&processor=cpu&environ=pip&). (Until the 2.0 release you can use the nightly build version: `pip install --pre mxnet -f https://dist.mxnet.io/python`)

Review Comment:

```suggestion

- Install MXNet with oneDNN enabled as described in the [Get started](https://mxnet.apache.org/versions/master/get_started?platform=linux&language=python&processor=cpu&environ=pip&). (Until the 2.0 release you can use the nightly build version: `pip install --pre mxnet -f https://dist.mxnet.io/python`.)

```

##########

docs/python_docs/python/tutorials/performance/backend/dnnl/dnnl_quantization_inc.md:

##########

@@ -0,0 +1,290 @@

+<!--- Licensed to the Apache Software Foundation (ASF) under one -->

+<!--- or more contributor license agreements. See the NOTICE file -->

+<!--- distributed with this work for additional information -->

+<!--- regarding copyright ownership. The ASF licenses this file -->

+<!--- to you under the Apache License, Version 2.0 (the -->

+<!--- "License"); you may not use this file except in compliance -->

+<!--- with the License. You may obtain a copy of the License at -->

+

+<!--- http://www.apache.org/licenses/LICENSE-2.0 -->

+

+<!--- Unless required by applicable law or agreed to in writing, -->

+<!--- software distributed under the License is distributed on an -->

+<!--- "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY -->

+<!--- KIND, either express or implied. See the License for the -->

+<!--- specific language governing permissions and limitations -->

+<!--- under the License. -->

+

+

+# Improving accuracy with Intel® Neural Compressor

+

+The accuracy of a model can decrease as a result of quantization. When the accuracy drop is significant, we can try to manually find a better quantization configuration (exclude some layers, try different calibration methods, etc.), but for bigger models this might prove to be a difficult and time consuming task. [Intel® Neural Compressor](https://github.com/intel/neural-compressor) (INC) tries to automate this process using several tuning heuristics, which aim to find the quantization configuration that satisfies the specified accuracy requirement.

+

+**NOTE:**

+

+Most tuning strategies will try different configurations on an evaluation dataset in order to find out how each layer affects the accuracy of the model. This means that for larger models, it may take a long time to find a solution (as the tuning space is usually larger and the evaluation itself takes longer).

+

+## Installation and Prerequisites

+

+- Install MXNet with oneDNN enabled as described in the [Get started](https://mxnet.apache.org/versions/master/get_started?platform=linux&language=python&processor=cpu&environ=pip&). (Until the 2.0 release you can use the nightly build version: `pip install --pre mxnet -f https://dist.mxnet.io/python`)

+

+- Install Intel® Neural Compressor:

+

+ Use one of the commands below to install INC (supported python versions are: 3.6, 3.7, 3.8, 3.9):

+

+ ```bash

+ # install stable version from pip

+ pip install neural-compressor

+

+ # install nightly version from pip

+ pip install -i https://test.pypi.org/simple/ neural-compressor

+

+ # install stable version from conda

+ conda install neural-compressor -c conda-forge -c intel

+ ```

+ If you come into trouble with dependencies on `cv2` library you can run: `apt-get update && apt-get install -y python3-opencv`

Review Comment:

```suggestion

If you get into trouble with dependencies on `cv2` library you can run: `apt-get update && apt-get install -y python3-opencv`.

```

##########

docs/python_docs/python/tutorials/performance/backend/dnnl/dnnl_quantization_inc.md:

##########

@@ -0,0 +1,290 @@

+<!--- Licensed to the Apache Software Foundation (ASF) under one -->

+<!--- or more contributor license agreements. See the NOTICE file -->

+<!--- distributed with this work for additional information -->

+<!--- regarding copyright ownership. The ASF licenses this file -->

+<!--- to you under the Apache License, Version 2.0 (the -->

+<!--- "License"); you may not use this file except in compliance -->

+<!--- with the License. You may obtain a copy of the License at -->

+

+<!--- http://www.apache.org/licenses/LICENSE-2.0 -->

+

+<!--- Unless required by applicable law or agreed to in writing, -->

+<!--- software distributed under the License is distributed on an -->

+<!--- "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY -->

+<!--- KIND, either express or implied. See the License for the -->

+<!--- specific language governing permissions and limitations -->

+<!--- under the License. -->

+

+

+# Improving accuracy with Intel® Neural Compressor

+

+The accuracy of a model can decrease as a result of quantization. When the accuracy drop is significant, we can try to manually find a better quantization configuration (exclude some layers, try different calibration methods, etc.), but for bigger models this might prove to be a difficult and time consuming task. [Intel® Neural Compressor](https://github.com/intel/neural-compressor) (INC) tries to automate this process using several tuning heuristics, which aim to find the quantization configuration that satisfies the specified accuracy requirement.

+

+**NOTE:**

+

+Most tuning strategies will try different configurations on an evaluation dataset in order to find out how each layer affects the accuracy of the model. This means that for larger models, it may take a long time to find a solution (as the tuning space is usually larger and the evaluation itself takes longer).

+

+## Installation and Prerequisites

+

+- Install MXNet with oneDNN enabled as described in the [Get started](https://mxnet.apache.org/versions/master/get_started?platform=linux&language=python&processor=cpu&environ=pip&). (Until the 2.0 release you can use the nightly build version: `pip install --pre mxnet -f https://dist.mxnet.io/python`)

+

+- Install Intel® Neural Compressor:

+

+ Use one of the commands below to install INC (supported python versions are: 3.6, 3.7, 3.8, 3.9):

+

+ ```bash

+ # install stable version from pip

+ pip install neural-compressor

+

+ # install nightly version from pip

+ pip install -i https://test.pypi.org/simple/ neural-compressor

+

+ # install stable version from conda

+ conda install neural-compressor -c conda-forge -c intel

+ ```

+ If you come into trouble with dependencies on `cv2` library you can run: `apt-get update && apt-get install -y python3-opencv`

+

+## Configuration file

+

+Quantization tuning process can be customized in the yaml configuration file. Below is a simple example:

+

+```yaml

+# cnn.yaml

+

+version: 1.0

+

+model:

+ name: cnn

+ framework: mxnet

+

+quantization:

+ calibration:

+ sampling_size: 160 # number of samples for calibration

+

+tuning:

+ strategy:

+ name: basic

+ accuracy_criterion:

+ relative: 0.01

+ exit_policy:

+ timeout: 0

+ random_seed: 9527

+```

+

+We are using the `basic` strategy, but you could also try out different ones. [Here](https://github.com/intel/neural-compressor/blob/master/docs/tuning_strategies.md) you can find a list of strategies available in INC and details of how they work. You can also add your own strategy if the existing ones do not suit your needs.

+

+Since the value of `timeout` is 0, INC will run until it finds a configuration that satisfies the accuracy criterion and then exit. Depending on the strategy this may not be ideal, as sometimes it would be better to further explore the tuning space to find a superior configuration both in terms of accuracy and speed. To achieve this, we can set a specific `timeout` value, which will tell INC how long (in seconds) it should run.

Review Comment:

```suggestion

Since the value of `timeout` in the example above is 0, INC will run until it finds a configuration that satisfies the accuracy criterion and then exit. Depending on the strategy this may not be ideal, as sometimes it would be better to further explore the tuning space to find a superior configuration both in terms of accuracy and speed. To achieve this, we can set a specific `timeout` value, which will tell INC how long (in seconds) it should run.

```

##########

docs/python_docs/python/tutorials/performance/backend/dnnl/dnnl_quantization_inc.md:

##########

@@ -0,0 +1,290 @@

+<!--- Licensed to the Apache Software Foundation (ASF) under one -->

+<!--- or more contributor license agreements. See the NOTICE file -->

+<!--- distributed with this work for additional information -->

+<!--- regarding copyright ownership. The ASF licenses this file -->

+<!--- to you under the Apache License, Version 2.0 (the -->

+<!--- "License"); you may not use this file except in compliance -->

+<!--- with the License. You may obtain a copy of the License at -->

+

+<!--- http://www.apache.org/licenses/LICENSE-2.0 -->

+

+<!--- Unless required by applicable law or agreed to in writing, -->

+<!--- software distributed under the License is distributed on an -->

+<!--- "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY -->

+<!--- KIND, either express or implied. See the License for the -->

+<!--- specific language governing permissions and limitations -->

+<!--- under the License. -->

+

+

+# Improving accuracy with Intel® Neural Compressor

+

+The accuracy of a model can decrease as a result of quantization. When the accuracy drop is significant, we can try to manually find a better quantization configuration (exclude some layers, try different calibration methods, etc.), but for bigger models this might prove to be a difficult and time consuming task. [Intel® Neural Compressor](https://github.com/intel/neural-compressor) (INC) tries to automate this process using several tuning heuristics, which aim to find the quantization configuration that satisfies the specified accuracy requirement.

+

+**NOTE:**

+

+Most tuning strategies will try different configurations on an evaluation dataset in order to find out how each layer affects the accuracy of the model. This means that for larger models, it may take a long time to find a solution (as the tuning space is usually larger and the evaluation itself takes longer).

+

+## Installation and Prerequisites

+

+- Install MXNet with oneDNN enabled as described in the [Get started](https://mxnet.apache.org/versions/master/get_started?platform=linux&language=python&processor=cpu&environ=pip&). (Until the 2.0 release you can use the nightly build version: `pip install --pre mxnet -f https://dist.mxnet.io/python`)

+

+- Install Intel® Neural Compressor:

+

+ Use one of the commands below to install INC (supported python versions are: 3.6, 3.7, 3.8, 3.9):

+

+ ```bash

+ # install stable version from pip

+ pip install neural-compressor

+

+ # install nightly version from pip

+ pip install -i https://test.pypi.org/simple/ neural-compressor

+

+ # install stable version from conda

+ conda install neural-compressor -c conda-forge -c intel

+ ```

+ If you come into trouble with dependencies on `cv2` library you can run: `apt-get update && apt-get install -y python3-opencv`

+

+## Configuration file

+

+Quantization tuning process can be customized in the yaml configuration file. Below is a simple example:

+

+```yaml

+# cnn.yaml

+

+version: 1.0

+

+model:

+ name: cnn

+ framework: mxnet

+

+quantization:

+ calibration:

+ sampling_size: 160 # number of samples for calibration

+

+tuning:

+ strategy:

+ name: basic

+ accuracy_criterion:

+ relative: 0.01

+ exit_policy:

+ timeout: 0

+ random_seed: 9527

+```

+

+We are using the `basic` strategy, but you could also try out different ones. [Here](https://github.com/intel/neural-compressor/blob/master/docs/tuning_strategies.md) you can find a list of strategies available in INC and details of how they work. You can also add your own strategy if the existing ones do not suit your needs.

+

+Since the value of `timeout` is 0, INC will run until it finds a configuration that satisfies the accuracy criterion and then exit. Depending on the strategy this may not be ideal, as sometimes it would be better to further explore the tuning space to find a superior configuration both in terms of accuracy and speed. To achieve this, we can set a specific `timeout` value, which will tell INC how long (in seconds) it should run.

+

+For more information about the configuration file, see the [template](https://github.com/intel/neural-compressor/blob/master/neural_compressor/template/ptq.yaml) from the official INC repo. Keep in mind that only the `post training quantization` is currently supported for MXNet.

+

+## Model quantization and tuning

+

+In general, Intel® Neural Compressor requires 4 elements in order to run:

+1. Config file - like the example above

Review Comment:

```suggestion

1. Configuration file - like the example above

```

##########

docs/python_docs/python/tutorials/performance/backend/dnnl/dnnl_quantization_inc.md:

##########

@@ -0,0 +1,290 @@

+<!--- Licensed to the Apache Software Foundation (ASF) under one -->

+<!--- or more contributor license agreements. See the NOTICE file -->

+<!--- distributed with this work for additional information -->

+<!--- regarding copyright ownership. The ASF licenses this file -->

+<!--- to you under the Apache License, Version 2.0 (the -->

+<!--- "License"); you may not use this file except in compliance -->

+<!--- with the License. You may obtain a copy of the License at -->

+

+<!--- http://www.apache.org/licenses/LICENSE-2.0 -->

+

+<!--- Unless required by applicable law or agreed to in writing, -->

+<!--- software distributed under the License is distributed on an -->

+<!--- "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY -->

+<!--- KIND, either express or implied. See the License for the -->

+<!--- specific language governing permissions and limitations -->

+<!--- under the License. -->

+

+

+# Improving accuracy with Intel® Neural Compressor

+

+The accuracy of a model can decrease as a result of quantization. When the accuracy drop is significant, we can try to manually find a better quantization configuration (exclude some layers, try different calibration methods, etc.), but for bigger models this might prove to be a difficult and time consuming task. [Intel® Neural Compressor](https://github.com/intel/neural-compressor) (INC) tries to automate this process using several tuning heuristics, which aim to find the quantization configuration that satisfies the specified accuracy requirement.

+

+**NOTE:**

+

+Most tuning strategies will try different configurations on an evaluation dataset in order to find out how each layer affects the accuracy of the model. This means that for larger models, it may take a long time to find a solution (as the tuning space is usually larger and the evaluation itself takes longer).

+

+## Installation and Prerequisites

+

+- Install MXNet with oneDNN enabled as described in the [Get started](https://mxnet.apache.org/versions/master/get_started?platform=linux&language=python&processor=cpu&environ=pip&). (Until the 2.0 release you can use the nightly build version: `pip install --pre mxnet -f https://dist.mxnet.io/python`)

+

+- Install Intel® Neural Compressor:

+

+ Use one of the commands below to install INC (supported python versions are: 3.6, 3.7, 3.8, 3.9):

+

+ ```bash

+ # install stable version from pip

+ pip install neural-compressor

+

+ # install nightly version from pip

+ pip install -i https://test.pypi.org/simple/ neural-compressor

+

+ # install stable version from conda

+ conda install neural-compressor -c conda-forge -c intel

+ ```

+ If you come into trouble with dependencies on `cv2` library you can run: `apt-get update && apt-get install -y python3-opencv`

+

+## Configuration file

+

+Quantization tuning process can be customized in the yaml configuration file. Below is a simple example:

+

+```yaml

+# cnn.yaml

+

+version: 1.0

+

+model:

+ name: cnn

+ framework: mxnet

+

+quantization:

+ calibration:

+ sampling_size: 160 # number of samples for calibration

+

+tuning:

+ strategy:

+ name: basic

+ accuracy_criterion:

+ relative: 0.01

+ exit_policy:

+ timeout: 0

+ random_seed: 9527

+```

+

+We are using the `basic` strategy, but you could also try out different ones. [Here](https://github.com/intel/neural-compressor/blob/master/docs/tuning_strategies.md) you can find a list of strategies available in INC and details of how they work. You can also add your own strategy if the existing ones do not suit your needs.

+

+Since the value of `timeout` is 0, INC will run until it finds a configuration that satisfies the accuracy criterion and then exit. Depending on the strategy this may not be ideal, as sometimes it would be better to further explore the tuning space to find a superior configuration both in terms of accuracy and speed. To achieve this, we can set a specific `timeout` value, which will tell INC how long (in seconds) it should run.

+

+For more information about the configuration file, see the [template](https://github.com/intel/neural-compressor/blob/master/neural_compressor/template/ptq.yaml) from the official INC repo. Keep in mind that only the `post training quantization` is currently supported for MXNet.

+

+## Model quantization and tuning

+

+In general, Intel® Neural Compressor requires 4 elements in order to run:

+1. Config file - like the example above

+2. Model to be quantized

+3. Calibration dataloader

+4. Evaluation function - a function that takes a model as an argument and returns the accuracy it achieves on a certain evaluation dataset.

+

+### Quantizing ResNet

+

+The [quantization](https://mxnet.apache.org/versions/master/api/python/docs/tutorials/performance/backend/dnnl/dnnl_quantization.html#Quantization) sections described how to quantize ResNet using the native MXNet quantization. This example shows how we can achieve the similar results (with the auto-tuning) using INC.

+

+1. Get the model

+

+```python

+import logging

+import mxnet as mx

+from mxnet.gluon.model_zoo import vision

+

+logging.basicConfig()

+logger = logging.getLogger('logger')

+logger.setLevel(logging.INFO)

+

+batch_shape = (1, 3, 224, 224)

+resnet18 = vision.resnet18_v1(pretrained=True)

+```

+

+2. Prepare the dataset:

+

+```python

+mx.test_utils.download('http://data.mxnet.io/data/val_256_q90.rec', 'data/val_256_q90.rec')

+

+batch_size = 16

+mean_std = {'mean_r': 123.68, 'mean_g': 116.779, 'mean_b': 103.939,

+ 'std_r': 58.393, 'std_g': 57.12, 'std_b': 57.375}

+

+data = mx.io.ImageRecordIter(path_imgrec='data/val_256_q90.rec',

+ batch_size=batch_size,

+ data_shape=batch_shape[1:],

+ rand_crop=False,

+ rand_mirror=False,

+ shuffle=False,

+ **mean_std)

+data.batch_size = batch_size

+```

+

+3. Prepare the evaluation function:

+

+```python

+eval_samples = batch_size*10

+

+def eval_func(model):

+ data.reset()

+ metric = mx.metric.Accuracy()

+ for i, batch in enumerate(data):

+ if i * batch_size >= eval_samples:

+ break

+ x = batch.data[0].as_in_context(mx.cpu())

+ label = batch.label[0].as_in_context(mx.cpu())

+ outputs = model.forward(x)

+ metric.update(label, outputs)

+ return metric.get()[1]

+```

+

+4. Run Intel® Neural Compressor:

+

+```python

+from neural_compressor.experimental import Quantization

+quantizer = Quantization("./cnn.yaml")

+quantizer.model = resnet18

+quantizer.calib_dataloader = data

+quantizer.eval_func = eval_func

+qnet = quantizer.fit().model

+```

+

+Since this model already achieves good accuracy using native quantization (less than 1% accuracy drop), for the given configuration file, INC will end on the first configuration, quantizing all layers using `naive` calibration mode for each. To see the true potential of INC, we need a model which suffers from a larger accuracy drop after quantization.

+

+### Quantizing ResNet50v2

+

+This example shows how to use INC to quantize ResNet50 v2. In this case, the native MXNet quantization introduce a huge accuracy drop (70% using `naive` calibration mode) and INC allows automatically find better solution.

+

+This is the (TODO link to INC configuration file) for this example:

Review Comment:

TODO?

##########

docs/python_docs/python/tutorials/performance/backend/dnnl/dnnl_quantization_inc.md:

##########

@@ -0,0 +1,290 @@

+<!--- Licensed to the Apache Software Foundation (ASF) under one -->

+<!--- or more contributor license agreements. See the NOTICE file -->

+<!--- distributed with this work for additional information -->

+<!--- regarding copyright ownership. The ASF licenses this file -->

+<!--- to you under the Apache License, Version 2.0 (the -->

+<!--- "License"); you may not use this file except in compliance -->

+<!--- with the License. You may obtain a copy of the License at -->

+

+<!--- http://www.apache.org/licenses/LICENSE-2.0 -->

+

+<!--- Unless required by applicable law or agreed to in writing, -->

+<!--- software distributed under the License is distributed on an -->

+<!--- "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY -->

+<!--- KIND, either express or implied. See the License for the -->

+<!--- specific language governing permissions and limitations -->

+<!--- under the License. -->

+

+

+# Improving accuracy with Intel® Neural Compressor

+

+The accuracy of a model can decrease as a result of quantization. When the accuracy drop is significant, we can try to manually find a better quantization configuration (exclude some layers, try different calibration methods, etc.), but for bigger models this might prove to be a difficult and time consuming task. [Intel® Neural Compressor](https://github.com/intel/neural-compressor) (INC) tries to automate this process using several tuning heuristics, which aim to find the quantization configuration that satisfies the specified accuracy requirement.

+

+**NOTE:**

+

+Most tuning strategies will try different configurations on an evaluation dataset in order to find out how each layer affects the accuracy of the model. This means that for larger models, it may take a long time to find a solution (as the tuning space is usually larger and the evaluation itself takes longer).

+

+## Installation and Prerequisites

+

+- Install MXNet with oneDNN enabled as described in the [Get started](https://mxnet.apache.org/versions/master/get_started?platform=linux&language=python&processor=cpu&environ=pip&). (Until the 2.0 release you can use the nightly build version: `pip install --pre mxnet -f https://dist.mxnet.io/python`)

+

+- Install Intel® Neural Compressor:

+

+ Use one of the commands below to install INC (supported python versions are: 3.6, 3.7, 3.8, 3.9):

+

+ ```bash

+ # install stable version from pip

+ pip install neural-compressor

+

+ # install nightly version from pip

+ pip install -i https://test.pypi.org/simple/ neural-compressor

+

+ # install stable version from conda

+ conda install neural-compressor -c conda-forge -c intel

+ ```

+ If you come into trouble with dependencies on `cv2` library you can run: `apt-get update && apt-get install -y python3-opencv`

+

+## Configuration file

+

+Quantization tuning process can be customized in the yaml configuration file. Below is a simple example:

+

+```yaml

+# cnn.yaml

+

+version: 1.0

+

+model:

+ name: cnn

+ framework: mxnet

+

+quantization:

+ calibration:

+ sampling_size: 160 # number of samples for calibration

+

+tuning:

+ strategy:

+ name: basic

+ accuracy_criterion:

+ relative: 0.01

+ exit_policy:

+ timeout: 0

+ random_seed: 9527

+```

+

+We are using the `basic` strategy, but you could also try out different ones. [Here](https://github.com/intel/neural-compressor/blob/master/docs/tuning_strategies.md) you can find a list of strategies available in INC and details of how they work. You can also add your own strategy if the existing ones do not suit your needs.

+

+Since the value of `timeout` is 0, INC will run until it finds a configuration that satisfies the accuracy criterion and then exit. Depending on the strategy this may not be ideal, as sometimes it would be better to further explore the tuning space to find a superior configuration both in terms of accuracy and speed. To achieve this, we can set a specific `timeout` value, which will tell INC how long (in seconds) it should run.

+

+For more information about the configuration file, see the [template](https://github.com/intel/neural-compressor/blob/master/neural_compressor/template/ptq.yaml) from the official INC repo. Keep in mind that only the `post training quantization` is currently supported for MXNet.

+

+## Model quantization and tuning

+

+In general, Intel® Neural Compressor requires 4 elements in order to run:

+1. Config file - like the example above

+2. Model to be quantized

+3. Calibration dataloader

+4. Evaluation function - a function that takes a model as an argument and returns the accuracy it achieves on a certain evaluation dataset.

+

+### Quantizing ResNet

+

+The [quantization](https://mxnet.apache.org/versions/master/api/python/docs/tutorials/performance/backend/dnnl/dnnl_quantization.html#Quantization) sections described how to quantize ResNet using the native MXNet quantization. This example shows how we can achieve the similar results (with the auto-tuning) using INC.

+

+1. Get the model

+

+```python

+import logging

+import mxnet as mx

+from mxnet.gluon.model_zoo import vision

+

+logging.basicConfig()

+logger = logging.getLogger('logger')

+logger.setLevel(logging.INFO)

+

+batch_shape = (1, 3, 224, 224)

+resnet18 = vision.resnet18_v1(pretrained=True)

+```

+

+2. Prepare the dataset:

+

+```python

+mx.test_utils.download('http://data.mxnet.io/data/val_256_q90.rec', 'data/val_256_q90.rec')

+

+batch_size = 16

+mean_std = {'mean_r': 123.68, 'mean_g': 116.779, 'mean_b': 103.939,

+ 'std_r': 58.393, 'std_g': 57.12, 'std_b': 57.375}

+

+data = mx.io.ImageRecordIter(path_imgrec='data/val_256_q90.rec',

+ batch_size=batch_size,

+ data_shape=batch_shape[1:],

+ rand_crop=False,

+ rand_mirror=False,

+ shuffle=False,

+ **mean_std)

+data.batch_size = batch_size

+```

+

+3. Prepare the evaluation function:

+

+```python

+eval_samples = batch_size*10

+

+def eval_func(model):

+ data.reset()

+ metric = mx.metric.Accuracy()

+ for i, batch in enumerate(data):

+ if i * batch_size >= eval_samples:

+ break

+ x = batch.data[0].as_in_context(mx.cpu())

+ label = batch.label[0].as_in_context(mx.cpu())

+ outputs = model.forward(x)

+ metric.update(label, outputs)

+ return metric.get()[1]

+```

+

+4. Run Intel® Neural Compressor:

+

+```python

+from neural_compressor.experimental import Quantization

+quantizer = Quantization("./cnn.yaml")

+quantizer.model = resnet18

+quantizer.calib_dataloader = data

+quantizer.eval_func = eval_func

+qnet = quantizer.fit().model

+```

+

+Since this model already achieves good accuracy using native quantization (less than 1% accuracy drop), for the given configuration file, INC will end on the first configuration, quantizing all layers using `naive` calibration mode for each. To see the true potential of INC, we need a model which suffers from a larger accuracy drop after quantization.

+

+### Quantizing ResNet50v2

+

+This example shows how to use INC to quantize ResNet50 v2. In this case, the native MXNet quantization introduce a huge accuracy drop (70% using `naive` calibration mode) and INC allows automatically find better solution.

+

+This is the (TODO link to INC configuration file) for this example:

+```yaml

+version: 1.0

+

+model:

+ name: resnet50_v2

+ framework: mxnet

+

+quantization:

+ calibration:

+ sampling_size: 192 # number of samples for calibration

+

+tuning:

+ strategy:

+ name: mse

+ accuracy_criterion:

+ relative: 0.015

+ exit_policy:

+ timeout: 0

+ max_trials: 500

+ random_seed: 9527

+```

+

+It could be used with script below

+(TODO link to resnet_mse.py)

+to find operator which mostly influence accuracy drops and disable it from quantization.

+You can find description of MSE strategy

+[here](https://github.com/intel/neural-compressor/blob/master/docs/tuning_strategies.md#user-content-mse).

+

+```python

+import mxnet as mx

+from mxnet.gluon.model_zoo.vision import resnet50_v2

+from mxnet.gluon.data.vision import transforms

+from mxnet.contrib.quantization import quantize_net

+

+# Preparing input data

+rgb_mean = (0.485, 0.456, 0.406)

+rgb_std = (0.229, 0.224, 0.225)

+batch_size = 64

+num_calib_batches = 9

+# set below proper path to ImageNet data set

Review Comment:

```suggestion

# set proper path to ImageNet data set below

```

##########

docs/python_docs/python/tutorials/performance/backend/dnnl/dnnl_quantization_inc.md:

##########

@@ -0,0 +1,290 @@

+<!--- Licensed to the Apache Software Foundation (ASF) under one -->

+<!--- or more contributor license agreements. See the NOTICE file -->

+<!--- distributed with this work for additional information -->

+<!--- regarding copyright ownership. The ASF licenses this file -->

+<!--- to you under the Apache License, Version 2.0 (the -->

+<!--- "License"); you may not use this file except in compliance -->

+<!--- with the License. You may obtain a copy of the License at -->

+

+<!--- http://www.apache.org/licenses/LICENSE-2.0 -->

+

+<!--- Unless required by applicable law or agreed to in writing, -->

+<!--- software distributed under the License is distributed on an -->

+<!--- "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY -->

+<!--- KIND, either express or implied. See the License for the -->

+<!--- specific language governing permissions and limitations -->

+<!--- under the License. -->

+

+

+# Improving accuracy with Intel® Neural Compressor

+

+The accuracy of a model can decrease as a result of quantization. When the accuracy drop is significant, we can try to manually find a better quantization configuration (exclude some layers, try different calibration methods, etc.), but for bigger models this might prove to be a difficult and time consuming task. [Intel® Neural Compressor](https://github.com/intel/neural-compressor) (INC) tries to automate this process using several tuning heuristics, which aim to find the quantization configuration that satisfies the specified accuracy requirement.

+

+**NOTE:**

+

+Most tuning strategies will try different configurations on an evaluation dataset in order to find out how each layer affects the accuracy of the model. This means that for larger models, it may take a long time to find a solution (as the tuning space is usually larger and the evaluation itself takes longer).

+

+## Installation and Prerequisites

+

+- Install MXNet with oneDNN enabled as described in the [Get started](https://mxnet.apache.org/versions/master/get_started?platform=linux&language=python&processor=cpu&environ=pip&). (Until the 2.0 release you can use the nightly build version: `pip install --pre mxnet -f https://dist.mxnet.io/python`)

+

+- Install Intel® Neural Compressor:

+

+ Use one of the commands below to install INC (supported python versions are: 3.6, 3.7, 3.8, 3.9):

+

+ ```bash

+ # install stable version from pip

+ pip install neural-compressor

+

+ # install nightly version from pip

+ pip install -i https://test.pypi.org/simple/ neural-compressor

+

+ # install stable version from conda

+ conda install neural-compressor -c conda-forge -c intel

+ ```

+ If you come into trouble with dependencies on `cv2` library you can run: `apt-get update && apt-get install -y python3-opencv`

+

+## Configuration file

+

+Quantization tuning process can be customized in the yaml configuration file. Below is a simple example:

+

+```yaml

+# cnn.yaml

+

+version: 1.0

+

+model:

+ name: cnn

+ framework: mxnet

+

+quantization:

+ calibration:

+ sampling_size: 160 # number of samples for calibration

+

+tuning:

+ strategy:

+ name: basic

+ accuracy_criterion:

+ relative: 0.01

+ exit_policy:

+ timeout: 0

+ random_seed: 9527

+```

+

+We are using the `basic` strategy, but you could also try out different ones. [Here](https://github.com/intel/neural-compressor/blob/master/docs/tuning_strategies.md) you can find a list of strategies available in INC and details of how they work. You can also add your own strategy if the existing ones do not suit your needs.

+

+Since the value of `timeout` is 0, INC will run until it finds a configuration that satisfies the accuracy criterion and then exit. Depending on the strategy this may not be ideal, as sometimes it would be better to further explore the tuning space to find a superior configuration both in terms of accuracy and speed. To achieve this, we can set a specific `timeout` value, which will tell INC how long (in seconds) it should run.

+

+For more information about the configuration file, see the [template](https://github.com/intel/neural-compressor/blob/master/neural_compressor/template/ptq.yaml) from the official INC repo. Keep in mind that only the `post training quantization` is currently supported for MXNet.

+

+## Model quantization and tuning

+

+In general, Intel® Neural Compressor requires 4 elements in order to run:

+1. Config file - like the example above

+2. Model to be quantized

+3. Calibration dataloader

+4. Evaluation function - a function that takes a model as an argument and returns the accuracy it achieves on a certain evaluation dataset.

+

+### Quantizing ResNet

+

+The [quantization](https://mxnet.apache.org/versions/master/api/python/docs/tutorials/performance/backend/dnnl/dnnl_quantization.html#Quantization) sections described how to quantize ResNet using the native MXNet quantization. This example shows how we can achieve the similar results (with the auto-tuning) using INC.

+

+1. Get the model

+

+```python

+import logging

+import mxnet as mx

+from mxnet.gluon.model_zoo import vision

+

+logging.basicConfig()

+logger = logging.getLogger('logger')

+logger.setLevel(logging.INFO)

+

+batch_shape = (1, 3, 224, 224)

+resnet18 = vision.resnet18_v1(pretrained=True)

+```

+

+2. Prepare the dataset:

+

+```python

+mx.test_utils.download('http://data.mxnet.io/data/val_256_q90.rec', 'data/val_256_q90.rec')

+

+batch_size = 16

+mean_std = {'mean_r': 123.68, 'mean_g': 116.779, 'mean_b': 103.939,

+ 'std_r': 58.393, 'std_g': 57.12, 'std_b': 57.375}

+

+data = mx.io.ImageRecordIter(path_imgrec='data/val_256_q90.rec',

+ batch_size=batch_size,

+ data_shape=batch_shape[1:],

+ rand_crop=False,

+ rand_mirror=False,

+ shuffle=False,

+ **mean_std)

+data.batch_size = batch_size

+```

+

+3. Prepare the evaluation function:

+

+```python

+eval_samples = batch_size*10

+

+def eval_func(model):

+ data.reset()

+ metric = mx.metric.Accuracy()

+ for i, batch in enumerate(data):

+ if i * batch_size >= eval_samples:

+ break

+ x = batch.data[0].as_in_context(mx.cpu())

+ label = batch.label[0].as_in_context(mx.cpu())

+ outputs = model.forward(x)

+ metric.update(label, outputs)

+ return metric.get()[1]

+```

+

+4. Run Intel® Neural Compressor:

+

+```python

+from neural_compressor.experimental import Quantization

+quantizer = Quantization("./cnn.yaml")

+quantizer.model = resnet18

+quantizer.calib_dataloader = data

+quantizer.eval_func = eval_func

+qnet = quantizer.fit().model

+```

+

+Since this model already achieves good accuracy using native quantization (less than 1% accuracy drop), for the given configuration file, INC will end on the first configuration, quantizing all layers using `naive` calibration mode for each. To see the true potential of INC, we need a model which suffers from a larger accuracy drop after quantization.

+

+### Quantizing ResNet50v2

+

+This example shows how to use INC to quantize ResNet50 v2. In this case, the native MXNet quantization introduce a huge accuracy drop (70% using `naive` calibration mode) and INC allows automatically find better solution.

+

+This is the (TODO link to INC configuration file) for this example:

+```yaml

+version: 1.0

+

+model:

+ name: resnet50_v2

+ framework: mxnet

+

+quantization:

+ calibration:

+ sampling_size: 192 # number of samples for calibration

+

+tuning:

+ strategy:

+ name: mse

+ accuracy_criterion:

+ relative: 0.015

+ exit_policy:

+ timeout: 0

+ max_trials: 500

+ random_seed: 9527

+```

+

+It could be used with script below

+(TODO link to resnet_mse.py)

+to find operator which mostly influence accuracy drops and disable it from quantization.

Review Comment:

```suggestion

to find operator, which caused the most significant accuracy drop and disable it from quantization.

```

##########

docs/python_docs/python/tutorials/performance/backend/dnnl/dnnl_quantization_inc.md:

##########

@@ -0,0 +1,290 @@

+<!--- Licensed to the Apache Software Foundation (ASF) under one -->

+<!--- or more contributor license agreements. See the NOTICE file -->

+<!--- distributed with this work for additional information -->

+<!--- regarding copyright ownership. The ASF licenses this file -->

+<!--- to you under the Apache License, Version 2.0 (the -->

+<!--- "License"); you may not use this file except in compliance -->

+<!--- with the License. You may obtain a copy of the License at -->

+

+<!--- http://www.apache.org/licenses/LICENSE-2.0 -->

+

+<!--- Unless required by applicable law or agreed to in writing, -->

+<!--- software distributed under the License is distributed on an -->

+<!--- "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY -->

+<!--- KIND, either express or implied. See the License for the -->

+<!--- specific language governing permissions and limitations -->

+<!--- under the License. -->

+

+

+# Improving accuracy with Intel® Neural Compressor

+

+The accuracy of a model can decrease as a result of quantization. When the accuracy drop is significant, we can try to manually find a better quantization configuration (exclude some layers, try different calibration methods, etc.), but for bigger models this might prove to be a difficult and time consuming task. [Intel® Neural Compressor](https://github.com/intel/neural-compressor) (INC) tries to automate this process using several tuning heuristics, which aim to find the quantization configuration that satisfies the specified accuracy requirement.

+

+**NOTE:**

+

+Most tuning strategies will try different configurations on an evaluation dataset in order to find out how each layer affects the accuracy of the model. This means that for larger models, it may take a long time to find a solution (as the tuning space is usually larger and the evaluation itself takes longer).

+

+## Installation and Prerequisites

+

+- Install MXNet with oneDNN enabled as described in the [Get started](https://mxnet.apache.org/versions/master/get_started?platform=linux&language=python&processor=cpu&environ=pip&). (Until the 2.0 release you can use the nightly build version: `pip install --pre mxnet -f https://dist.mxnet.io/python`)

+

+- Install Intel® Neural Compressor:

+

+ Use one of the commands below to install INC (supported python versions are: 3.6, 3.7, 3.8, 3.9):

+

+ ```bash

+ # install stable version from pip

+ pip install neural-compressor

+

+ # install nightly version from pip

+ pip install -i https://test.pypi.org/simple/ neural-compressor

+

+ # install stable version from conda

+ conda install neural-compressor -c conda-forge -c intel

+ ```

+ If you come into trouble with dependencies on `cv2` library you can run: `apt-get update && apt-get install -y python3-opencv`

+

+## Configuration file

+

+Quantization tuning process can be customized in the yaml configuration file. Below is a simple example:

+

+```yaml

+# cnn.yaml

+

+version: 1.0

+

+model:

+ name: cnn

+ framework: mxnet

+

+quantization:

+ calibration:

+ sampling_size: 160 # number of samples for calibration

+

+tuning:

+ strategy:

+ name: basic

+ accuracy_criterion:

+ relative: 0.01

+ exit_policy:

+ timeout: 0

+ random_seed: 9527

+```

+

+We are using the `basic` strategy, but you could also try out different ones. [Here](https://github.com/intel/neural-compressor/blob/master/docs/tuning_strategies.md) you can find a list of strategies available in INC and details of how they work. You can also add your own strategy if the existing ones do not suit your needs.

+

+Since the value of `timeout` is 0, INC will run until it finds a configuration that satisfies the accuracy criterion and then exit. Depending on the strategy this may not be ideal, as sometimes it would be better to further explore the tuning space to find a superior configuration both in terms of accuracy and speed. To achieve this, we can set a specific `timeout` value, which will tell INC how long (in seconds) it should run.

+

+For more information about the configuration file, see the [template](https://github.com/intel/neural-compressor/blob/master/neural_compressor/template/ptq.yaml) from the official INC repo. Keep in mind that only the `post training quantization` is currently supported for MXNet.

+

+## Model quantization and tuning

+

+In general, Intel® Neural Compressor requires 4 elements in order to run:

+1. Config file - like the example above

+2. Model to be quantized

+3. Calibration dataloader

+4. Evaluation function - a function that takes a model as an argument and returns the accuracy it achieves on a certain evaluation dataset.

+

+### Quantizing ResNet

+

+The [quantization](https://mxnet.apache.org/versions/master/api/python/docs/tutorials/performance/backend/dnnl/dnnl_quantization.html#Quantization) sections described how to quantize ResNet using the native MXNet quantization. This example shows how we can achieve the similar results (with the auto-tuning) using INC.

+

+1. Get the model

+

+```python

+import logging

+import mxnet as mx

+from mxnet.gluon.model_zoo import vision

+

+logging.basicConfig()

+logger = logging.getLogger('logger')

+logger.setLevel(logging.INFO)

+

+batch_shape = (1, 3, 224, 224)

+resnet18 = vision.resnet18_v1(pretrained=True)

+```

+

+2. Prepare the dataset:

+

+```python

+mx.test_utils.download('http://data.mxnet.io/data/val_256_q90.rec', 'data/val_256_q90.rec')

+

+batch_size = 16

+mean_std = {'mean_r': 123.68, 'mean_g': 116.779, 'mean_b': 103.939,

+ 'std_r': 58.393, 'std_g': 57.12, 'std_b': 57.375}

+

+data = mx.io.ImageRecordIter(path_imgrec='data/val_256_q90.rec',

+ batch_size=batch_size,

+ data_shape=batch_shape[1:],

+ rand_crop=False,

+ rand_mirror=False,

+ shuffle=False,

+ **mean_std)

+data.batch_size = batch_size

+```

+

+3. Prepare the evaluation function:

+

+```python

+eval_samples = batch_size*10

+

+def eval_func(model):

+ data.reset()

+ metric = mx.metric.Accuracy()

+ for i, batch in enumerate(data):

+ if i * batch_size >= eval_samples:

+ break

+ x = batch.data[0].as_in_context(mx.cpu())

+ label = batch.label[0].as_in_context(mx.cpu())

+ outputs = model.forward(x)

+ metric.update(label, outputs)

+ return metric.get()[1]

+```

+

+4. Run Intel® Neural Compressor:

+

+```python

+from neural_compressor.experimental import Quantization

+quantizer = Quantization("./cnn.yaml")

+quantizer.model = resnet18

+quantizer.calib_dataloader = data

+quantizer.eval_func = eval_func

+qnet = quantizer.fit().model

+```

+

+Since this model already achieves good accuracy using native quantization (less than 1% accuracy drop), for the given configuration file, INC will end on the first configuration, quantizing all layers using `naive` calibration mode for each. To see the true potential of INC, we need a model which suffers from a larger accuracy drop after quantization.

+

+### Quantizing ResNet50v2

+

+This example shows how to use INC to quantize ResNet50 v2. In this case, the native MXNet quantization introduce a huge accuracy drop (70% using `naive` calibration mode) and INC allows automatically find better solution.

Review Comment:

```suggestion

This example shows how to use INC to quantize ResNet50 v2. In this case, the native MXNet quantization introduce a huge accuracy drop (70% using `naive` calibration mode) and INC allows to automatically find better solution.

```

##########

docs/python_docs/python/tutorials/performance/backend/dnnl/dnnl_quantization_inc.md:

##########

@@ -0,0 +1,290 @@

+<!--- Licensed to the Apache Software Foundation (ASF) under one -->

+<!--- or more contributor license agreements. See the NOTICE file -->

+<!--- distributed with this work for additional information -->

+<!--- regarding copyright ownership. The ASF licenses this file -->

+<!--- to you under the Apache License, Version 2.0 (the -->

+<!--- "License"); you may not use this file except in compliance -->

+<!--- with the License. You may obtain a copy of the License at -->

+

+<!--- http://www.apache.org/licenses/LICENSE-2.0 -->

+

+<!--- Unless required by applicable law or agreed to in writing, -->

+<!--- software distributed under the License is distributed on an -->

+<!--- "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY -->

+<!--- KIND, either express or implied. See the License for the -->

+<!--- specific language governing permissions and limitations -->

+<!--- under the License. -->

+

+

+# Improving accuracy with Intel® Neural Compressor

+

+The accuracy of a model can decrease as a result of quantization. When the accuracy drop is significant, we can try to manually find a better quantization configuration (exclude some layers, try different calibration methods, etc.), but for bigger models this might prove to be a difficult and time consuming task. [Intel® Neural Compressor](https://github.com/intel/neural-compressor) (INC) tries to automate this process using several tuning heuristics, which aim to find the quantization configuration that satisfies the specified accuracy requirement.

+

+**NOTE:**

+

+Most tuning strategies will try different configurations on an evaluation dataset in order to find out how each layer affects the accuracy of the model. This means that for larger models, it may take a long time to find a solution (as the tuning space is usually larger and the evaluation itself takes longer).

+

+## Installation and Prerequisites

+

+- Install MXNet with oneDNN enabled as described in the [Get started](https://mxnet.apache.org/versions/master/get_started?platform=linux&language=python&processor=cpu&environ=pip&). (Until the 2.0 release you can use the nightly build version: `pip install --pre mxnet -f https://dist.mxnet.io/python`)

+

+- Install Intel® Neural Compressor:

+

+ Use one of the commands below to install INC (supported python versions are: 3.6, 3.7, 3.8, 3.9):

+

+ ```bash

+ # install stable version from pip

+ pip install neural-compressor

+

+ # install nightly version from pip

+ pip install -i https://test.pypi.org/simple/ neural-compressor

+

+ # install stable version from conda

+ conda install neural-compressor -c conda-forge -c intel

+ ```

+ If you come into trouble with dependencies on `cv2` library you can run: `apt-get update && apt-get install -y python3-opencv`

+

+## Configuration file

+

+Quantization tuning process can be customized in the yaml configuration file. Below is a simple example:

+

+```yaml

+# cnn.yaml

+

+version: 1.0

+

+model:

+ name: cnn

+ framework: mxnet

+

+quantization:

+ calibration:

+ sampling_size: 160 # number of samples for calibration

+

+tuning:

+ strategy:

+ name: basic

+ accuracy_criterion:

+ relative: 0.01

+ exit_policy:

+ timeout: 0

+ random_seed: 9527

+```

+

+We are using the `basic` strategy, but you could also try out different ones. [Here](https://github.com/intel/neural-compressor/blob/master/docs/tuning_strategies.md) you can find a list of strategies available in INC and details of how they work. You can also add your own strategy if the existing ones do not suit your needs.

+

+Since the value of `timeout` is 0, INC will run until it finds a configuration that satisfies the accuracy criterion and then exit. Depending on the strategy this may not be ideal, as sometimes it would be better to further explore the tuning space to find a superior configuration both in terms of accuracy and speed. To achieve this, we can set a specific `timeout` value, which will tell INC how long (in seconds) it should run.

+

+For more information about the configuration file, see the [template](https://github.com/intel/neural-compressor/blob/master/neural_compressor/template/ptq.yaml) from the official INC repo. Keep in mind that only the `post training quantization` is currently supported for MXNet.

+

+## Model quantization and tuning

+

+In general, Intel® Neural Compressor requires 4 elements in order to run:

+1. Config file - like the example above

+2. Model to be quantized

+3. Calibration dataloader

+4. Evaluation function - a function that takes a model as an argument and returns the accuracy it achieves on a certain evaluation dataset.

+

+### Quantizing ResNet

+

+The [quantization](https://mxnet.apache.org/versions/master/api/python/docs/tutorials/performance/backend/dnnl/dnnl_quantization.html#Quantization) sections described how to quantize ResNet using the native MXNet quantization. This example shows how we can achieve the similar results (with the auto-tuning) using INC.

+

+1. Get the model

+

+```python

+import logging

+import mxnet as mx

+from mxnet.gluon.model_zoo import vision

+

+logging.basicConfig()

+logger = logging.getLogger('logger')

+logger.setLevel(logging.INFO)

+

+batch_shape = (1, 3, 224, 224)

+resnet18 = vision.resnet18_v1(pretrained=True)

+```

+

+2. Prepare the dataset:

+

+```python

+mx.test_utils.download('http://data.mxnet.io/data/val_256_q90.rec', 'data/val_256_q90.rec')

+

+batch_size = 16

+mean_std = {'mean_r': 123.68, 'mean_g': 116.779, 'mean_b': 103.939,

+ 'std_r': 58.393, 'std_g': 57.12, 'std_b': 57.375}

+

+data = mx.io.ImageRecordIter(path_imgrec='data/val_256_q90.rec',

+ batch_size=batch_size,

+ data_shape=batch_shape[1:],

+ rand_crop=False,

+ rand_mirror=False,

+ shuffle=False,

+ **mean_std)

+data.batch_size = batch_size

+```

+

+3. Prepare the evaluation function:

+

+```python

+eval_samples = batch_size*10

+

+def eval_func(model):

+ data.reset()

+ metric = mx.metric.Accuracy()

+ for i, batch in enumerate(data):

+ if i * batch_size >= eval_samples:

+ break

+ x = batch.data[0].as_in_context(mx.cpu())

+ label = batch.label[0].as_in_context(mx.cpu())

+ outputs = model.forward(x)

+ metric.update(label, outputs)

+ return metric.get()[1]

+```

+

+4. Run Intel® Neural Compressor:

+

+```python

+from neural_compressor.experimental import Quantization

+quantizer = Quantization("./cnn.yaml")

+quantizer.model = resnet18

+quantizer.calib_dataloader = data

+quantizer.eval_func = eval_func

+qnet = quantizer.fit().model

+```

+

+Since this model already achieves good accuracy using native quantization (less than 1% accuracy drop), for the given configuration file, INC will end on the first configuration, quantizing all layers using `naive` calibration mode for each. To see the true potential of INC, we need a model which suffers from a larger accuracy drop after quantization.

+

+### Quantizing ResNet50v2

+

+This example shows how to use INC to quantize ResNet50 v2. In this case, the native MXNet quantization introduce a huge accuracy drop (70% using `naive` calibration mode) and INC allows automatically find better solution.

+

+This is the (TODO link to INC configuration file) for this example:

+```yaml

+version: 1.0

+

+model:

+ name: resnet50_v2

+ framework: mxnet

+

+quantization:

+ calibration:

+ sampling_size: 192 # number of samples for calibration

+

+tuning:

+ strategy:

+ name: mse

+ accuracy_criterion:

+ relative: 0.015

+ exit_policy:

+ timeout: 0

+ max_trials: 500

+ random_seed: 9527

+```

+

+It could be used with script below

+(TODO link to resnet_mse.py)

Review Comment:

TODO?

##########

docs/python_docs/python/tutorials/performance/backend/dnnl/dnnl_quantization_inc.md:

##########

@@ -0,0 +1,290 @@

+<!--- Licensed to the Apache Software Foundation (ASF) under one -->

+<!--- or more contributor license agreements. See the NOTICE file -->

+<!--- distributed with this work for additional information -->

+<!--- regarding copyright ownership. The ASF licenses this file -->

+<!--- to you under the Apache License, Version 2.0 (the -->

+<!--- "License"); you may not use this file except in compliance -->

+<!--- with the License. You may obtain a copy of the License at -->

+

+<!--- http://www.apache.org/licenses/LICENSE-2.0 -->

+

+<!--- Unless required by applicable law or agreed to in writing, -->

+<!--- software distributed under the License is distributed on an -->

+<!--- "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY -->

+<!--- KIND, either express or implied. See the License for the -->

+<!--- specific language governing permissions and limitations -->

+<!--- under the License. -->

+

+

+# Improving accuracy with Intel® Neural Compressor

+

+The accuracy of a model can decrease as a result of quantization. When the accuracy drop is significant, we can try to manually find a better quantization configuration (exclude some layers, try different calibration methods, etc.), but for bigger models this might prove to be a difficult and time consuming task. [Intel® Neural Compressor](https://github.com/intel/neural-compressor) (INC) tries to automate this process using several tuning heuristics, which aim to find the quantization configuration that satisfies the specified accuracy requirement.

+

+**NOTE:**

+

+Most tuning strategies will try different configurations on an evaluation dataset in order to find out how each layer affects the accuracy of the model. This means that for larger models, it may take a long time to find a solution (as the tuning space is usually larger and the evaluation itself takes longer).

+

+## Installation and Prerequisites

+

+- Install MXNet with oneDNN enabled as described in the [Get started](https://mxnet.apache.org/versions/master/get_started?platform=linux&language=python&processor=cpu&environ=pip&). (Until the 2.0 release you can use the nightly build version: `pip install --pre mxnet -f https://dist.mxnet.io/python`)

+

+- Install Intel® Neural Compressor:

+

+ Use one of the commands below to install INC (supported python versions are: 3.6, 3.7, 3.8, 3.9):

+

+ ```bash

+ # install stable version from pip

+ pip install neural-compressor

+

+ # install nightly version from pip

+ pip install -i https://test.pypi.org/simple/ neural-compressor

+

+ # install stable version from conda

+ conda install neural-compressor -c conda-forge -c intel

+ ```

+ If you come into trouble with dependencies on `cv2` library you can run: `apt-get update && apt-get install -y python3-opencv`

+

+## Configuration file

+

+Quantization tuning process can be customized in the yaml configuration file. Below is a simple example:

+

+```yaml

+# cnn.yaml

+

+version: 1.0

+

+model:

+ name: cnn

+ framework: mxnet

+

+quantization:

+ calibration:

+ sampling_size: 160 # number of samples for calibration

+

+tuning:

+ strategy:

+ name: basic

+ accuracy_criterion:

+ relative: 0.01

+ exit_policy:

+ timeout: 0

+ random_seed: 9527

+```

+

+We are using the `basic` strategy, but you could also try out different ones. [Here](https://github.com/intel/neural-compressor/blob/master/docs/tuning_strategies.md) you can find a list of strategies available in INC and details of how they work. You can also add your own strategy if the existing ones do not suit your needs.

+

+Since the value of `timeout` is 0, INC will run until it finds a configuration that satisfies the accuracy criterion and then exit. Depending on the strategy this may not be ideal, as sometimes it would be better to further explore the tuning space to find a superior configuration both in terms of accuracy and speed. To achieve this, we can set a specific `timeout` value, which will tell INC how long (in seconds) it should run.

+

+For more information about the configuration file, see the [template](https://github.com/intel/neural-compressor/blob/master/neural_compressor/template/ptq.yaml) from the official INC repo. Keep in mind that only the `post training quantization` is currently supported for MXNet.

+

+## Model quantization and tuning

+

+In general, Intel® Neural Compressor requires 4 elements in order to run:

+1. Config file - like the example above

+2. Model to be quantized

+3. Calibration dataloader

+4. Evaluation function - a function that takes a model as an argument and returns the accuracy it achieves on a certain evaluation dataset.

+

+### Quantizing ResNet

+

+The [quantization](https://mxnet.apache.org/versions/master/api/python/docs/tutorials/performance/backend/dnnl/dnnl_quantization.html#Quantization) sections described how to quantize ResNet using the native MXNet quantization. This example shows how we can achieve the similar results (with the auto-tuning) using INC.

+

+1. Get the model

+

+```python

+import logging

+import mxnet as mx

+from mxnet.gluon.model_zoo import vision

+

+logging.basicConfig()

+logger = logging.getLogger('logger')

+logger.setLevel(logging.INFO)

+

+batch_shape = (1, 3, 224, 224)

+resnet18 = vision.resnet18_v1(pretrained=True)

+```

+

+2. Prepare the dataset:

+

+```python

+mx.test_utils.download('http://data.mxnet.io/data/val_256_q90.rec', 'data/val_256_q90.rec')

+

+batch_size = 16

+mean_std = {'mean_r': 123.68, 'mean_g': 116.779, 'mean_b': 103.939,

+ 'std_r': 58.393, 'std_g': 57.12, 'std_b': 57.375}

+

+data = mx.io.ImageRecordIter(path_imgrec='data/val_256_q90.rec',

+ batch_size=batch_size,

+ data_shape=batch_shape[1:],

+ rand_crop=False,

+ rand_mirror=False,

+ shuffle=False,

+ **mean_std)

+data.batch_size = batch_size

+```

+

+3. Prepare the evaluation function:

+

+```python

+eval_samples = batch_size*10

+

+def eval_func(model):

+ data.reset()

+ metric = mx.metric.Accuracy()

+ for i, batch in enumerate(data):

+ if i * batch_size >= eval_samples:

+ break

+ x = batch.data[0].as_in_context(mx.cpu())

+ label = batch.label[0].as_in_context(mx.cpu())

+ outputs = model.forward(x)

+ metric.update(label, outputs)

+ return metric.get()[1]

+```

+

+4. Run Intel® Neural Compressor:

+

+```python

+from neural_compressor.experimental import Quantization

+quantizer = Quantization("./cnn.yaml")

+quantizer.model = resnet18

+quantizer.calib_dataloader = data

+quantizer.eval_func = eval_func

+qnet = quantizer.fit().model

+```

+

+Since this model already achieves good accuracy using native quantization (less than 1% accuracy drop), for the given configuration file, INC will end on the first configuration, quantizing all layers using `naive` calibration mode for each. To see the true potential of INC, we need a model which suffers from a larger accuracy drop after quantization.

+

+### Quantizing ResNet50v2

+

+This example shows how to use INC to quantize ResNet50 v2. In this case, the native MXNet quantization introduce a huge accuracy drop (70% using `naive` calibration mode) and INC allows automatically find better solution.

+

+This is the (TODO link to INC configuration file) for this example:

+```yaml

+version: 1.0

+

+model:

+ name: resnet50_v2

+ framework: mxnet

+

+quantization:

+ calibration:

+ sampling_size: 192 # number of samples for calibration

+

+tuning:

+ strategy:

+ name: mse

+ accuracy_criterion:

+ relative: 0.015

+ exit_policy:

+ timeout: 0

+ max_trials: 500

+ random_seed: 9527

+```

+

+It could be used with script below

+(TODO link to resnet_mse.py)

+to find operator which mostly influence accuracy drops and disable it from quantization.

+You can find description of MSE strategy

+[here](https://github.com/intel/neural-compressor/blob/master/docs/tuning_strategies.md#user-content-mse).

+

+```python

+import mxnet as mx

+from mxnet.gluon.model_zoo.vision import resnet50_v2

+from mxnet.gluon.data.vision import transforms

+from mxnet.contrib.quantization import quantize_net

+

+# Preparing input data

+rgb_mean = (0.485, 0.456, 0.406)

+rgb_std = (0.229, 0.224, 0.225)

+batch_size = 64

+num_calib_batches = 9

+# set below proper path to ImageNet data set

+dataset = mx.gluon.data.vision.ImageRecordDataset('../imagenet/rec/val.rec')

+# Tuning in INC on whole data set takes too long time so we take only part of the whole data set

Review Comment:

```suggestion

# Tuning with INC on whole data set takes loads of time. Therefore, we take only a part of the data set

```

##########

docs/python_docs/python/tutorials/performance/backend/dnnl/dnnl_quantization_inc.md:

##########

@@ -0,0 +1,290 @@

+<!--- Licensed to the Apache Software Foundation (ASF) under one -->

+<!--- or more contributor license agreements. See the NOTICE file -->

+<!--- distributed with this work for additional information -->

+<!--- regarding copyright ownership. The ASF licenses this file -->

+<!--- to you under the Apache License, Version 2.0 (the -->

+<!--- "License"); you may not use this file except in compliance -->

+<!--- with the License. You may obtain a copy of the License at -->

+

+<!--- http://www.apache.org/licenses/LICENSE-2.0 -->

+

+<!--- Unless required by applicable law or agreed to in writing, -->

+<!--- software distributed under the License is distributed on an -->

+<!--- "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY -->

+<!--- KIND, either express or implied. See the License for the -->

+<!--- specific language governing permissions and limitations -->

+<!--- under the License. -->

+

+

+# Improving accuracy with Intel® Neural Compressor

+

+The accuracy of a model can decrease as a result of quantization. When the accuracy drop is significant, we can try to manually find a better quantization configuration (exclude some layers, try different calibration methods, etc.), but for bigger models this might prove to be a difficult and time consuming task. [Intel® Neural Compressor](https://github.com/intel/neural-compressor) (INC) tries to automate this process using several tuning heuristics, which aim to find the quantization configuration that satisfies the specified accuracy requirement.

+

+**NOTE:**

+

+Most tuning strategies will try different configurations on an evaluation dataset in order to find out how each layer affects the accuracy of the model. This means that for larger models, it may take a long time to find a solution (as the tuning space is usually larger and the evaluation itself takes longer).

+

+## Installation and Prerequisites

+

+- Install MXNet with oneDNN enabled as described in the [Get started](https://mxnet.apache.org/versions/master/get_started?platform=linux&language=python&processor=cpu&environ=pip&). (Until the 2.0 release you can use the nightly build version: `pip install --pre mxnet -f https://dist.mxnet.io/python`)

+

+- Install Intel® Neural Compressor:

+

+ Use one of the commands below to install INC (supported python versions are: 3.6, 3.7, 3.8, 3.9):

+

+ ```bash

+ # install stable version from pip

+ pip install neural-compressor

+

+ # install nightly version from pip

+ pip install -i https://test.pypi.org/simple/ neural-compressor

+

+ # install stable version from conda

+ conda install neural-compressor -c conda-forge -c intel

+ ```

+ If you come into trouble with dependencies on `cv2` library you can run: `apt-get update && apt-get install -y python3-opencv`

+

+## Configuration file

+

+Quantization tuning process can be customized in the yaml configuration file. Below is a simple example:

+

+```yaml

+# cnn.yaml

+

+version: 1.0

+

+model:

+ name: cnn

+ framework: mxnet

+

+quantization:

+ calibration:

+ sampling_size: 160 # number of samples for calibration

+

+tuning:

+ strategy:

+ name: basic

+ accuracy_criterion:

+ relative: 0.01

+ exit_policy:

+ timeout: 0

+ random_seed: 9527

+```

+

+We are using the `basic` strategy, but you could also try out different ones. [Here](https://github.com/intel/neural-compressor/blob/master/docs/tuning_strategies.md) you can find a list of strategies available in INC and details of how they work. You can also add your own strategy if the existing ones do not suit your needs.

+

+Since the value of `timeout` is 0, INC will run until it finds a configuration that satisfies the accuracy criterion and then exit. Depending on the strategy this may not be ideal, as sometimes it would be better to further explore the tuning space to find a superior configuration both in terms of accuracy and speed. To achieve this, we can set a specific `timeout` value, which will tell INC how long (in seconds) it should run.

+

+For more information about the configuration file, see the [template](https://github.com/intel/neural-compressor/blob/master/neural_compressor/template/ptq.yaml) from the official INC repo. Keep in mind that only the `post training quantization` is currently supported for MXNet.

+

+## Model quantization and tuning

+

+In general, Intel® Neural Compressor requires 4 elements in order to run:

+1. Config file - like the example above

+2. Model to be quantized

+3. Calibration dataloader

+4. Evaluation function - a function that takes a model as an argument and returns the accuracy it achieves on a certain evaluation dataset.

+

+### Quantizing ResNet

+

+The [quantization](https://mxnet.apache.org/versions/master/api/python/docs/tutorials/performance/backend/dnnl/dnnl_quantization.html#Quantization) sections described how to quantize ResNet using the native MXNet quantization. This example shows how we can achieve the similar results (with the auto-tuning) using INC.

+

+1. Get the model

+

+```python

+import logging

+import mxnet as mx

+from mxnet.gluon.model_zoo import vision

+

+logging.basicConfig()

+logger = logging.getLogger('logger')

+logger.setLevel(logging.INFO)

+

+batch_shape = (1, 3, 224, 224)

+resnet18 = vision.resnet18_v1(pretrained=True)

+```

+

+2. Prepare the dataset:

+

+```python