
Posted to commits@hudi.apache.org by GitBox <gi...@apache.org> on 2021/09/30 14:16:52 UTC

[GitHub] [hudi] mauropelucchi opened a new issue #3739: Hoodie clean is not deleting old files

mauropelucchi opened a new issue #3739:

URL: https://github.com/apache/hudi/issues/3739

I am trying to check whether Hudi clean is triggering and cleaning my files, but I do not see any cleaning action performed on the old log files.

**To Reproduce**

I am writing files to S3 using Hudi with the configuration below, multiple times (more than 4 times, to see cleaning triggered).

**My hudi config**

```
hudi_options_prodcode = {
    'hoodie.table.name': table_name,
    'hoodie.datasource.write.recordkey.field': 'posting_key',
    'hoodie.datasource.write.partitionpath.field': 'range_partition',
    'hoodie.datasource.write.table.name': table_name,
    'hoodie.datasource.write.precombine.field': 'update_date',
    'hoodie.datasource.write.table.type': 'MERGE_ON_READ',
    'hoodie.cleaner.policy': 'KEEP_LATEST_COMMITS',
    'hoodie.consistency.check.enabled': True,
    'hoodie.bloom.index.filter.type': 'dynamic_v0',
    'hoodie.bloom.index.bucketized.checking': False,
    'hoodie.memory.merge.max.size': '2004857600000',
    'hoodie.upsert.shuffle.parallelism': parallelism,
    'hoodie.insert.shuffle.parallelism': parallelism,
    'hoodie.bulkinsert.shuffle.parallelism': parallelism,
    'hoodie.parquet.small.file.limit': '204857600',
    'hoodie.parquet.max.file.size': str(self.__parquet_max_file_size_byte),
    'hoodie.memory.compaction.fraction': '384402653184',
    'hoodie.write.buffer.limit.bytes': str(128 * 1024 * 1024),
    'hoodie.compact.inline': True,
    'hoodie.compact.inline.max.delta.commits': 1,
    'hoodie.datasource.compaction.async.enable': False,
    'hoodie.parquet.compression.ratio': '0.35',
    'hoodie.logfile.max.size': '268435456',
    'hoodie.logfile.to.parquet.compression.ratio': '0.5',
    'hoodie.datasource.write.hive_style_partitioning': True,
    'hoodie.keep.min.commits': 5,
    'hoodie.keep.max.commits': 6,
    'hoodie.copyonwrite.record.size.estimate': 32,
    'hoodie.cleaner.commits.retained': 4,
    'hoodie.clean.automatic': True
}
```

**Writing to s3**

path_to_delta_table = "s3://..../<my test folder>/"

df.write.format("org.apache.hudi").options(**hudi_options_prodcode).mode("append").save(path_to_delta_table)

**Expected behavior**

As I understand it, the logs should be deleted after the max commits, which is 4, keeping only one commit at a time.

**Environment Description**

Hudi version : 0.6.0 (Amazon version)

Spark version : 2.4

Storage (HDFS/S3/GCS..) : S3

Running on Docker? (yes/no) : No

EMR : 5.31.0

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] nsivabalan commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-936731947

To clarify, archival touches only the timeline, and the cleaner touches only the data files.


[GitHub] [hudi] mauropelucchi commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

mauropelucchi commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-931369093

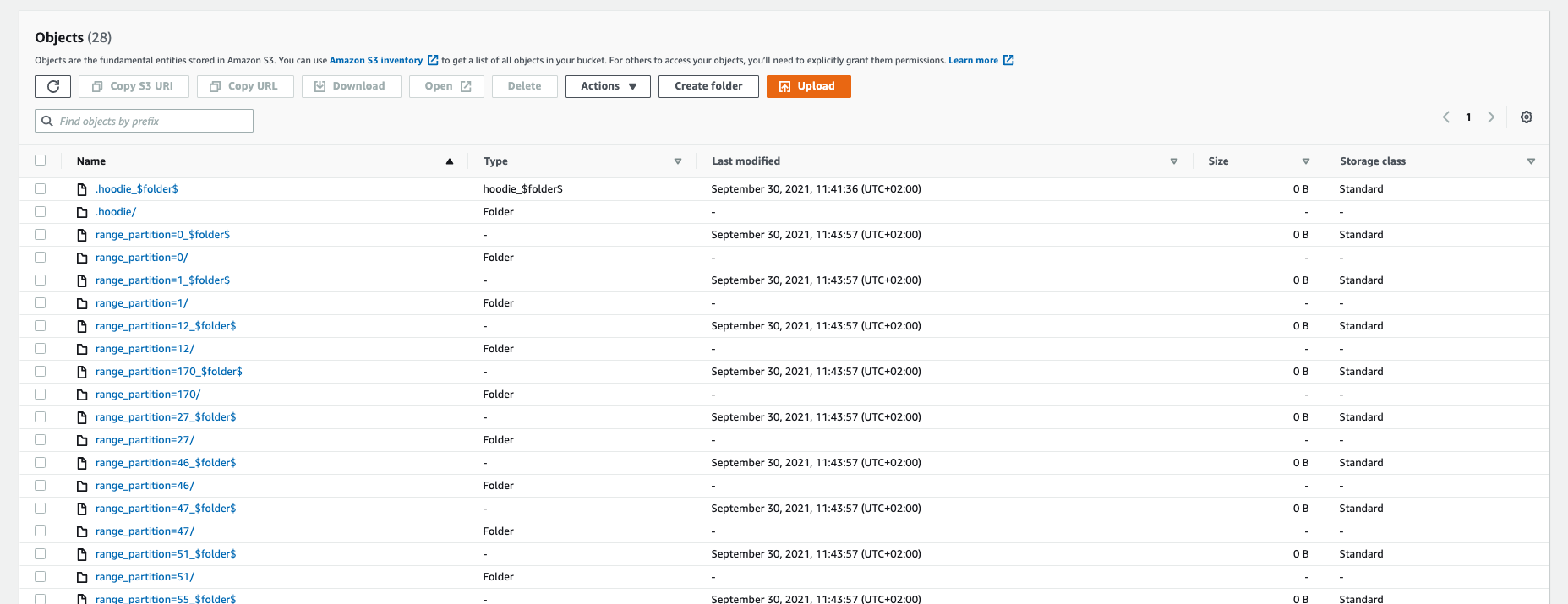

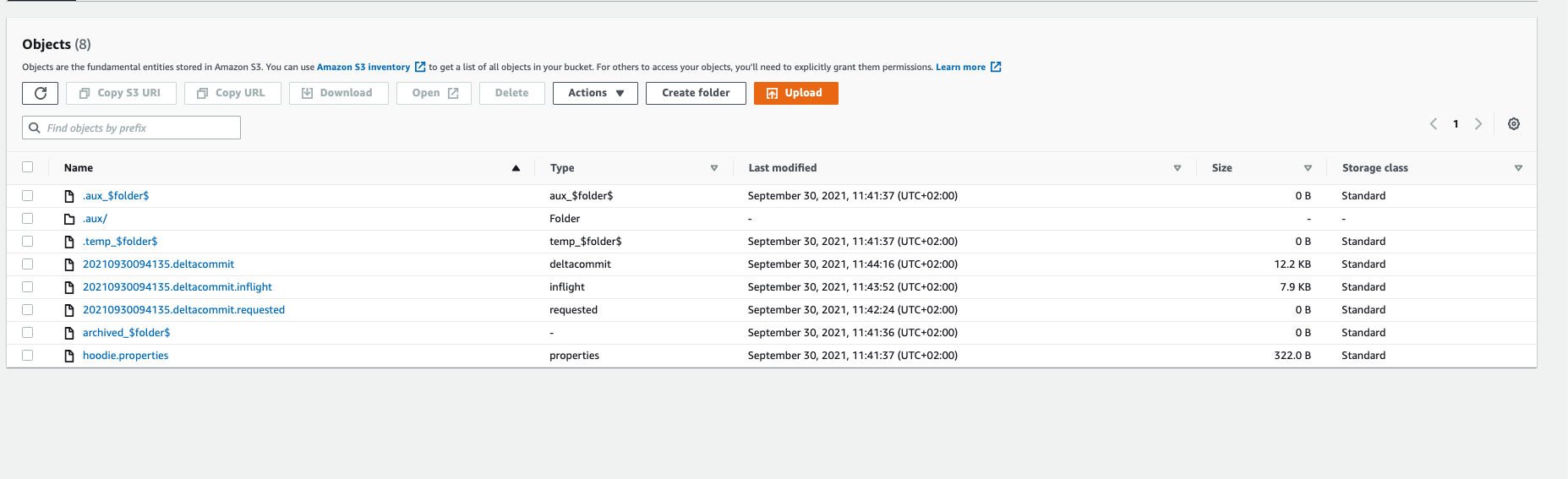

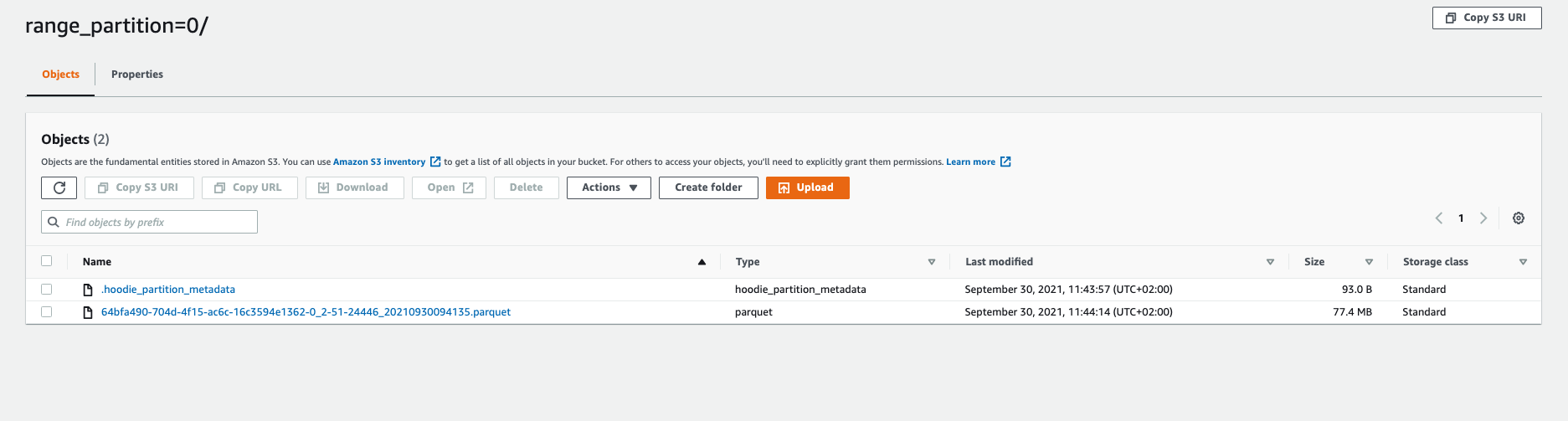

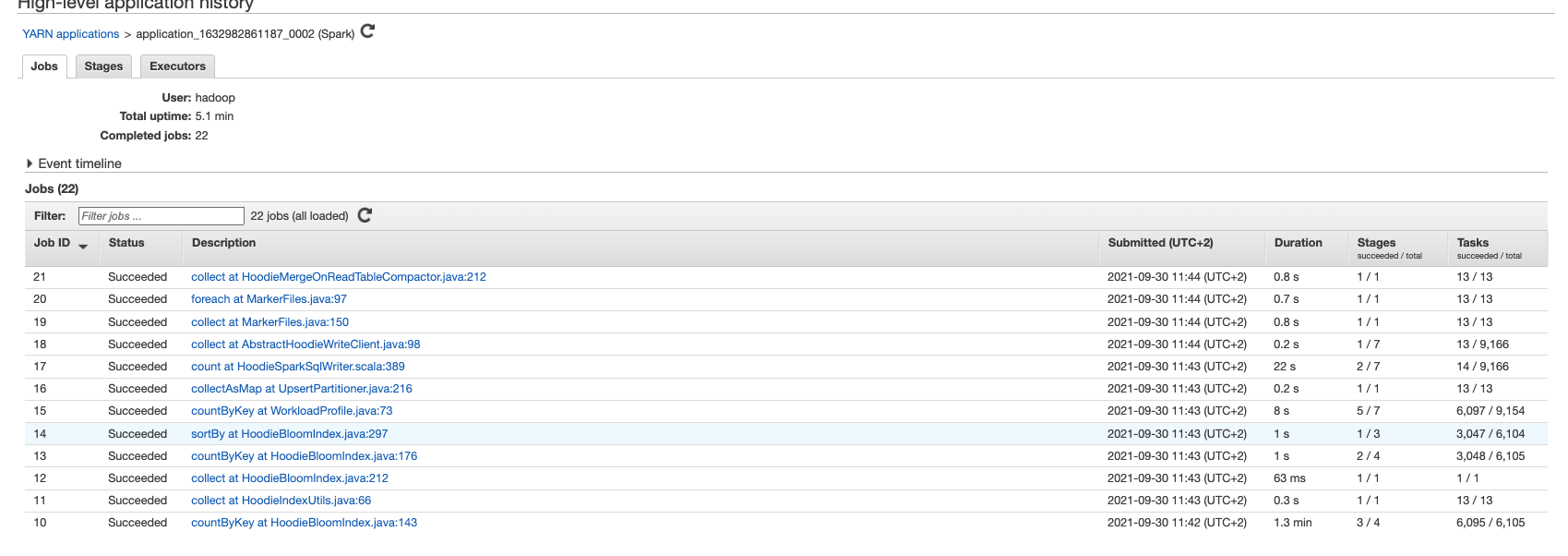

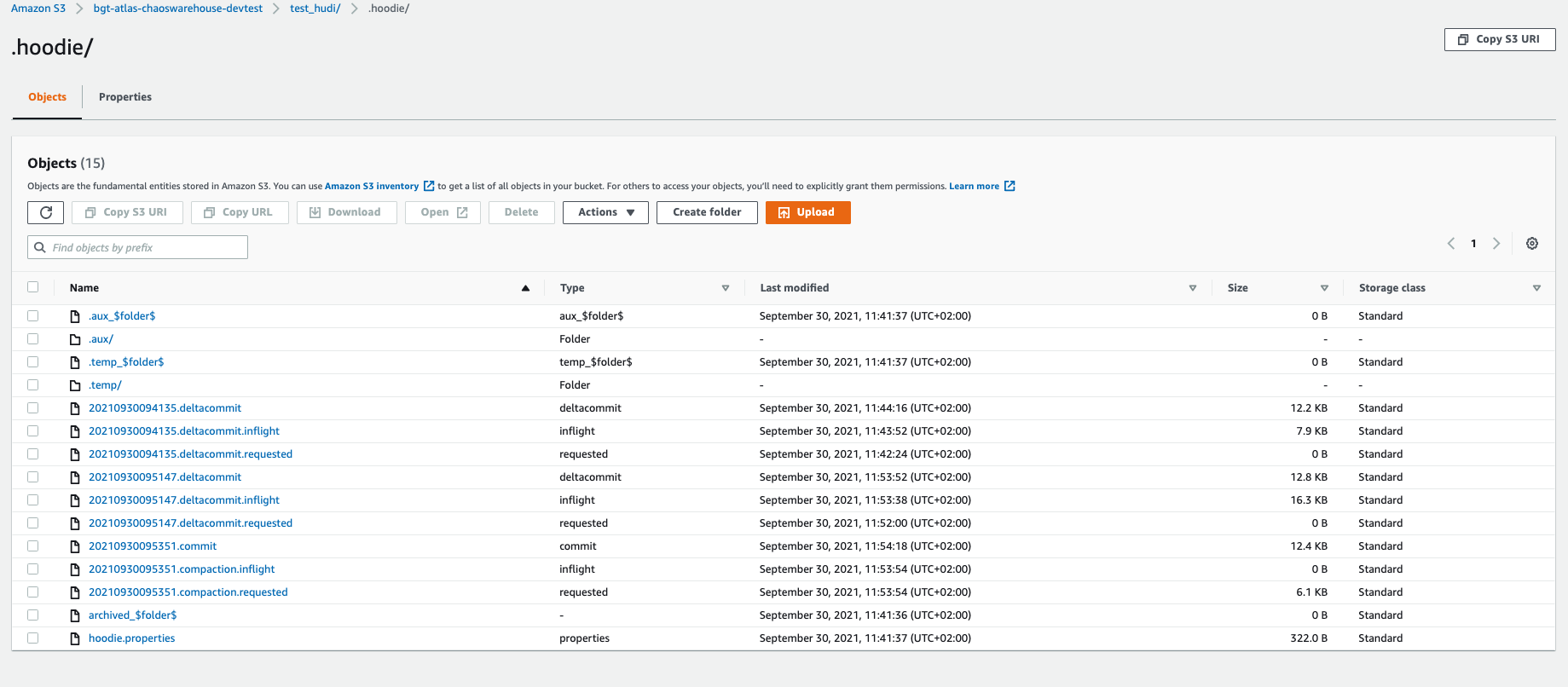

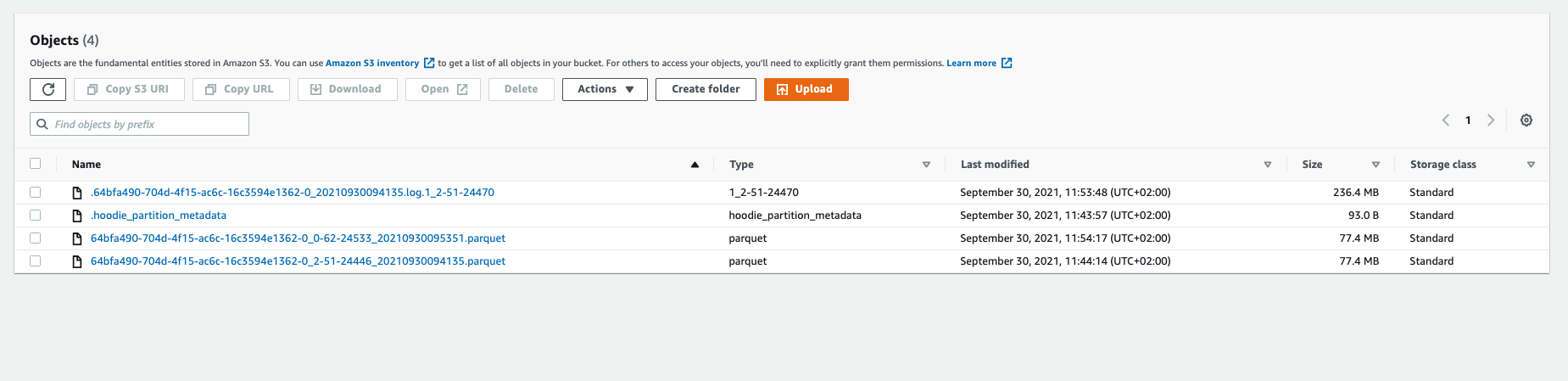

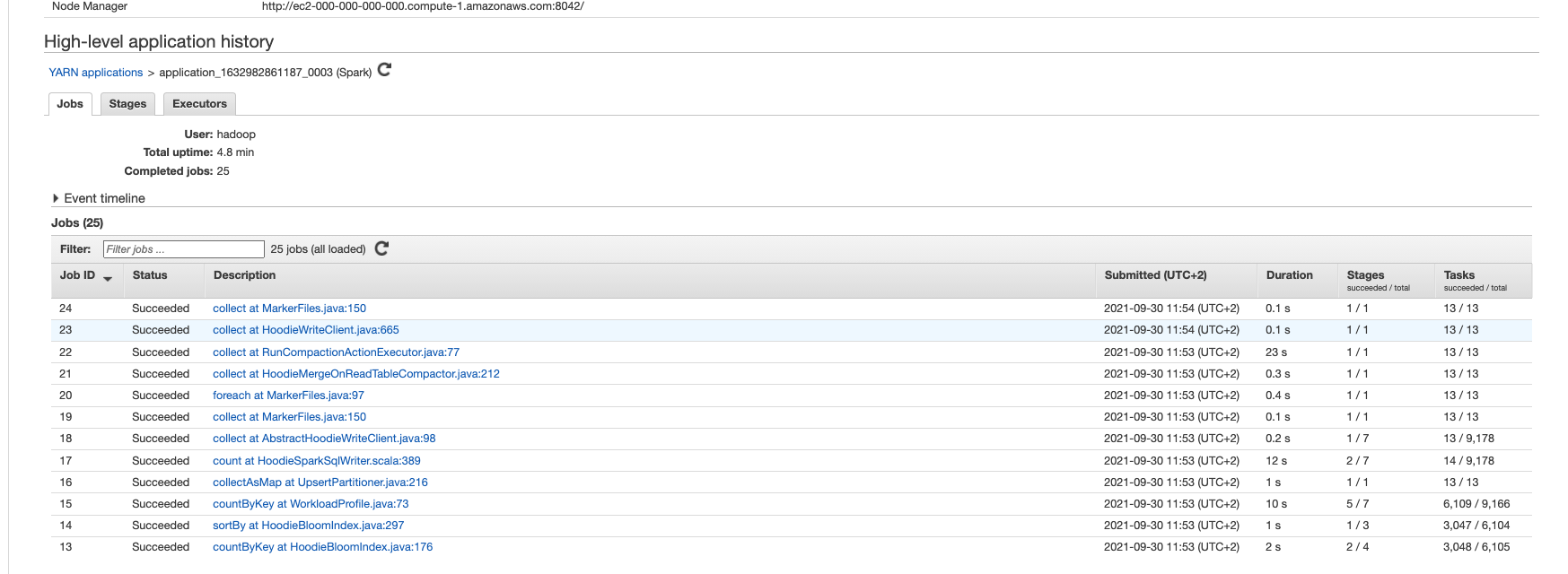

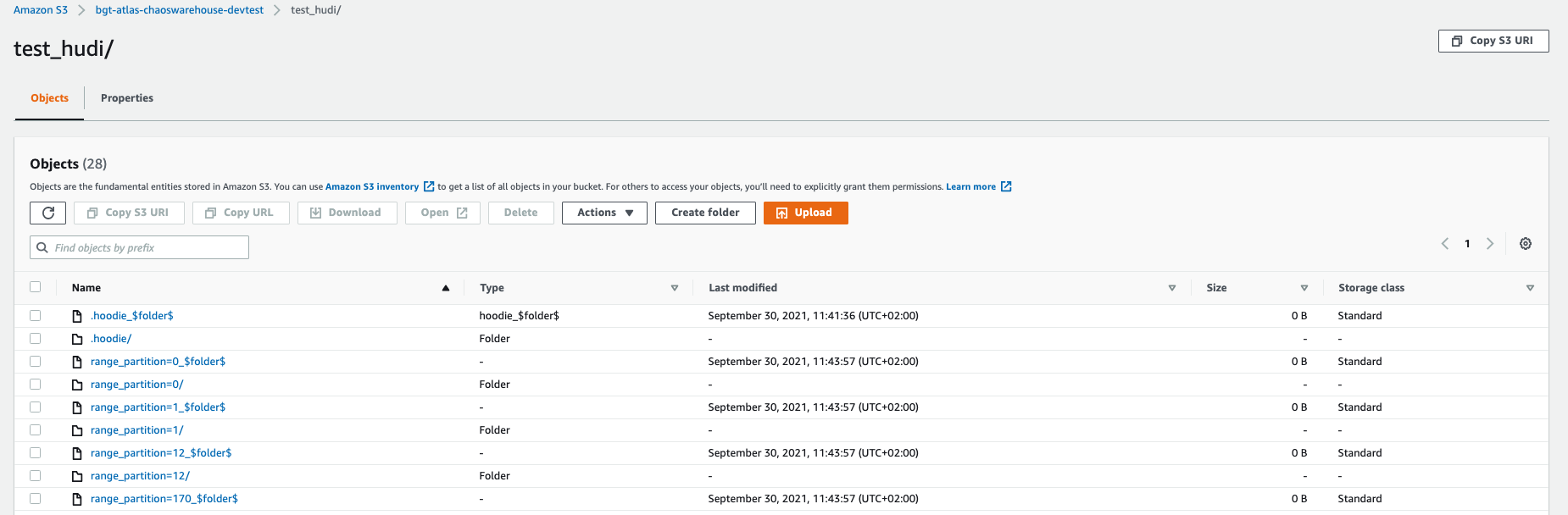

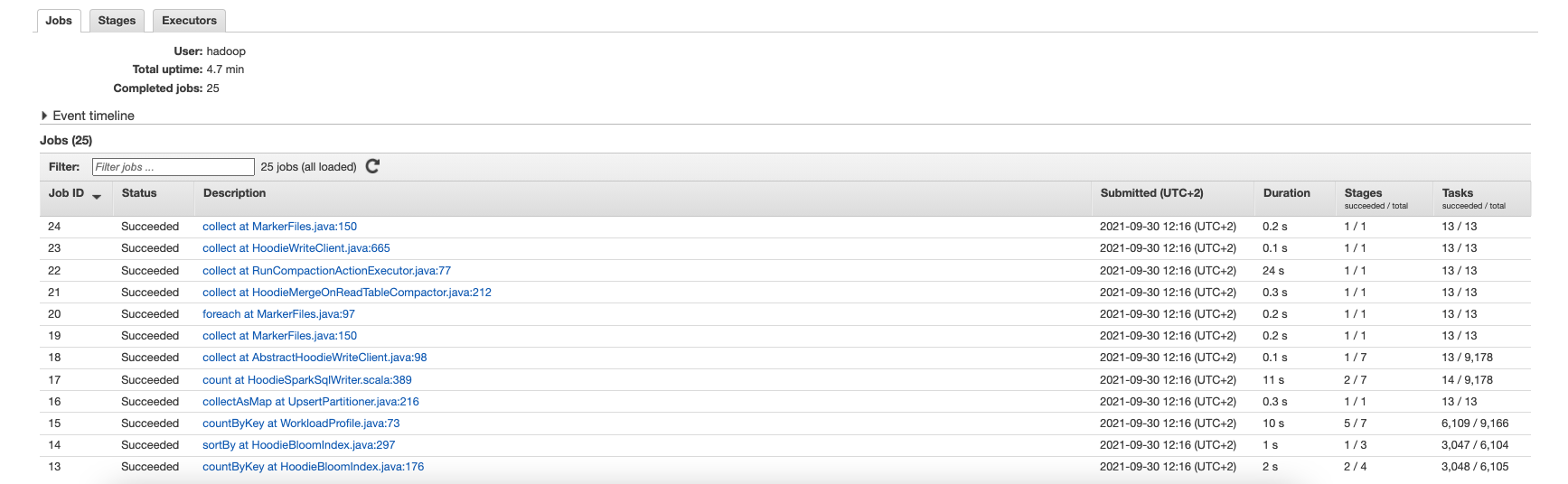

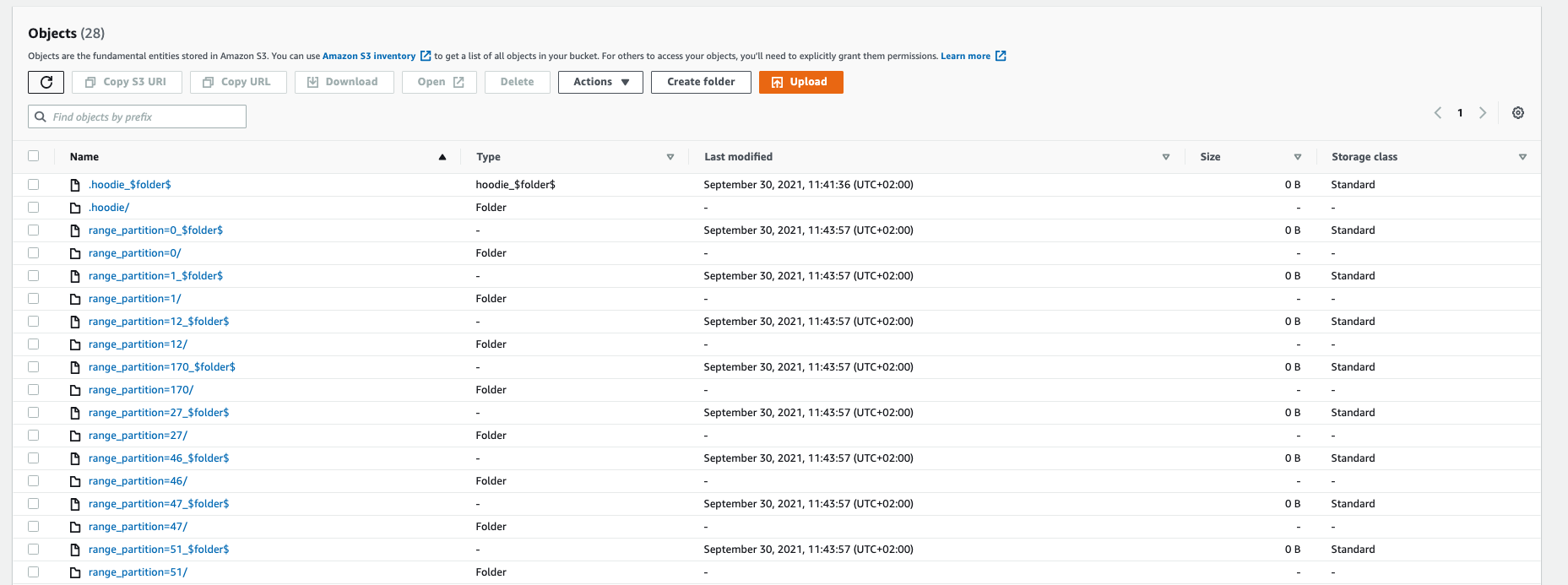

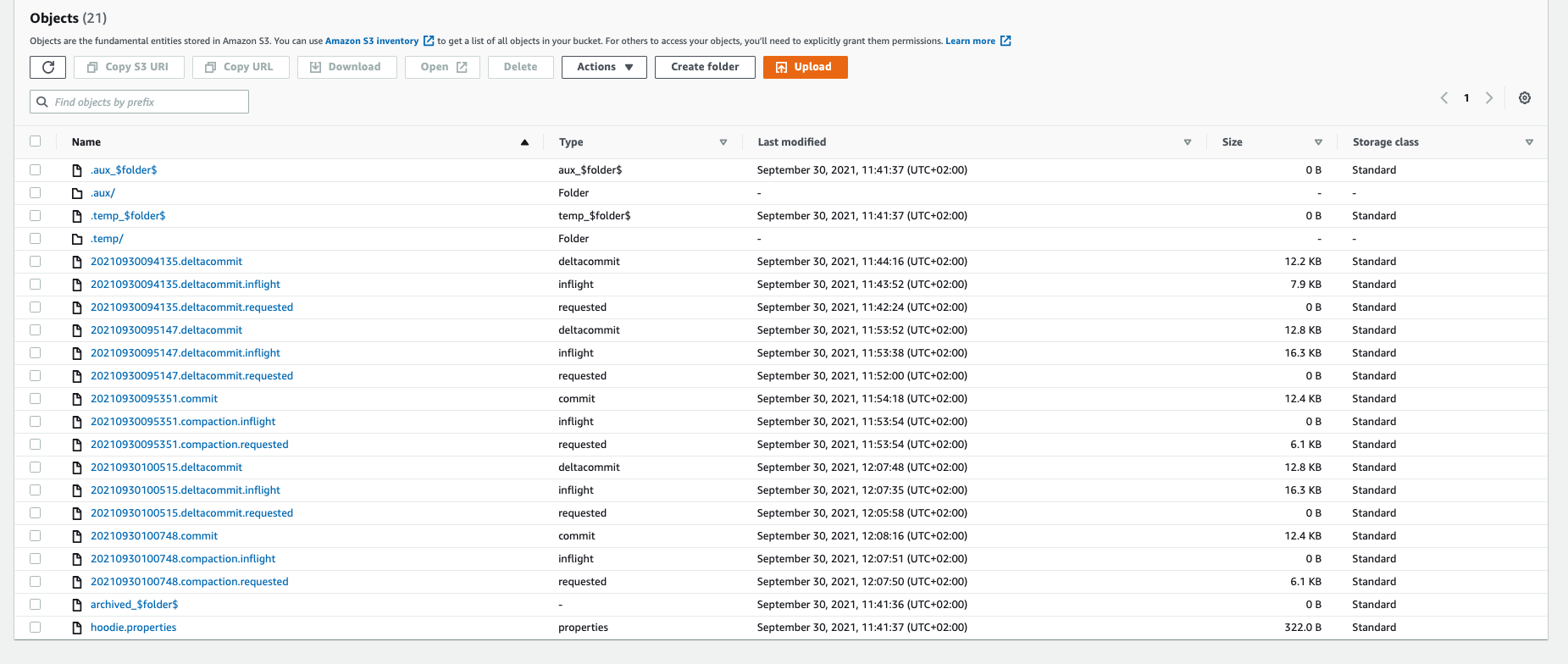

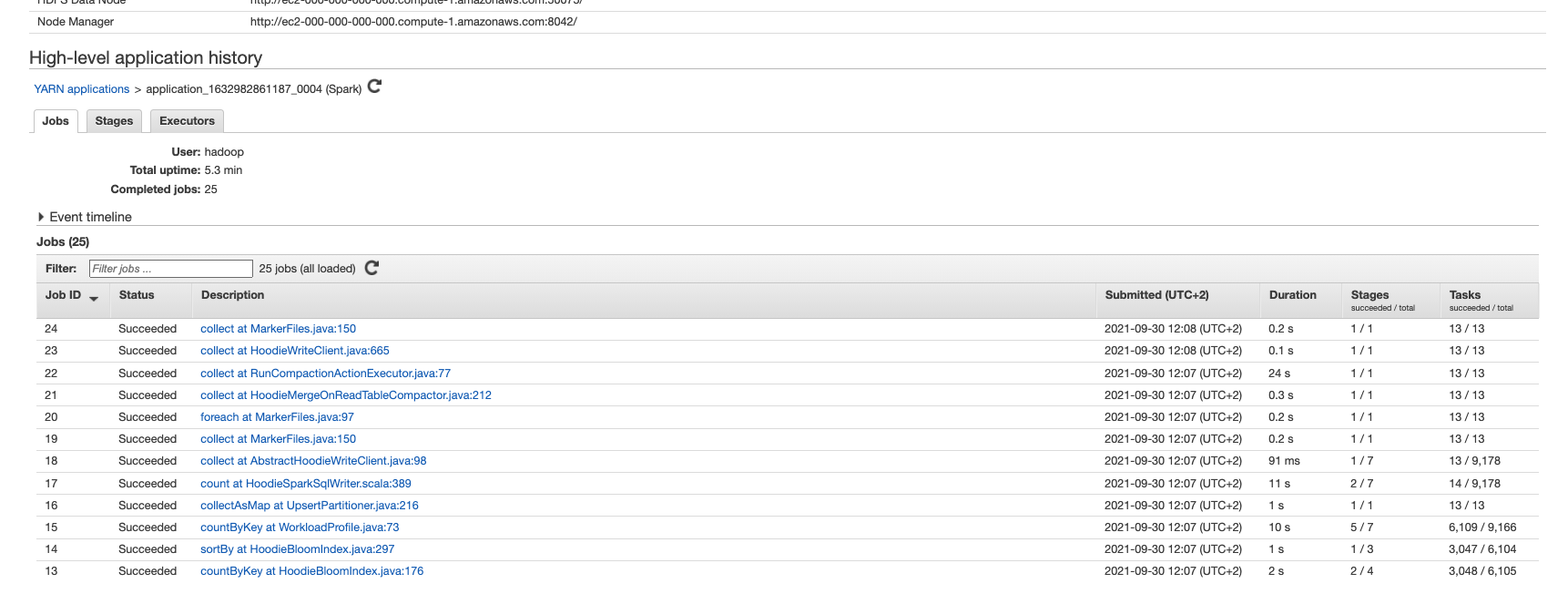

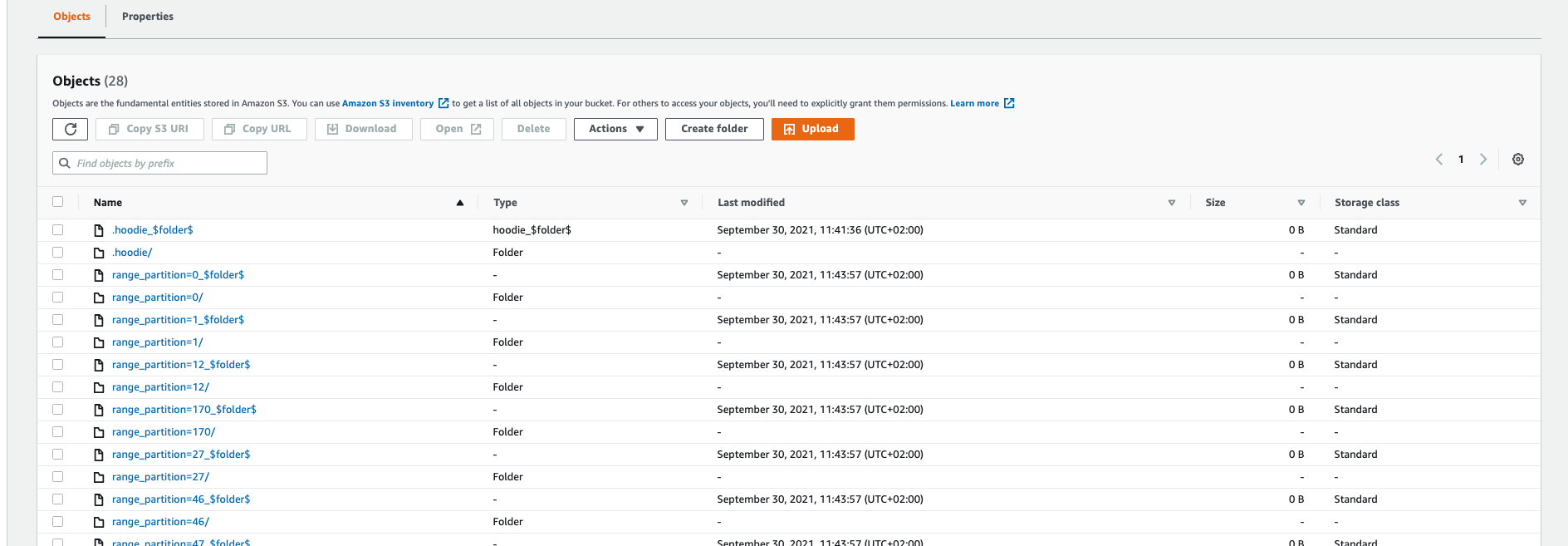

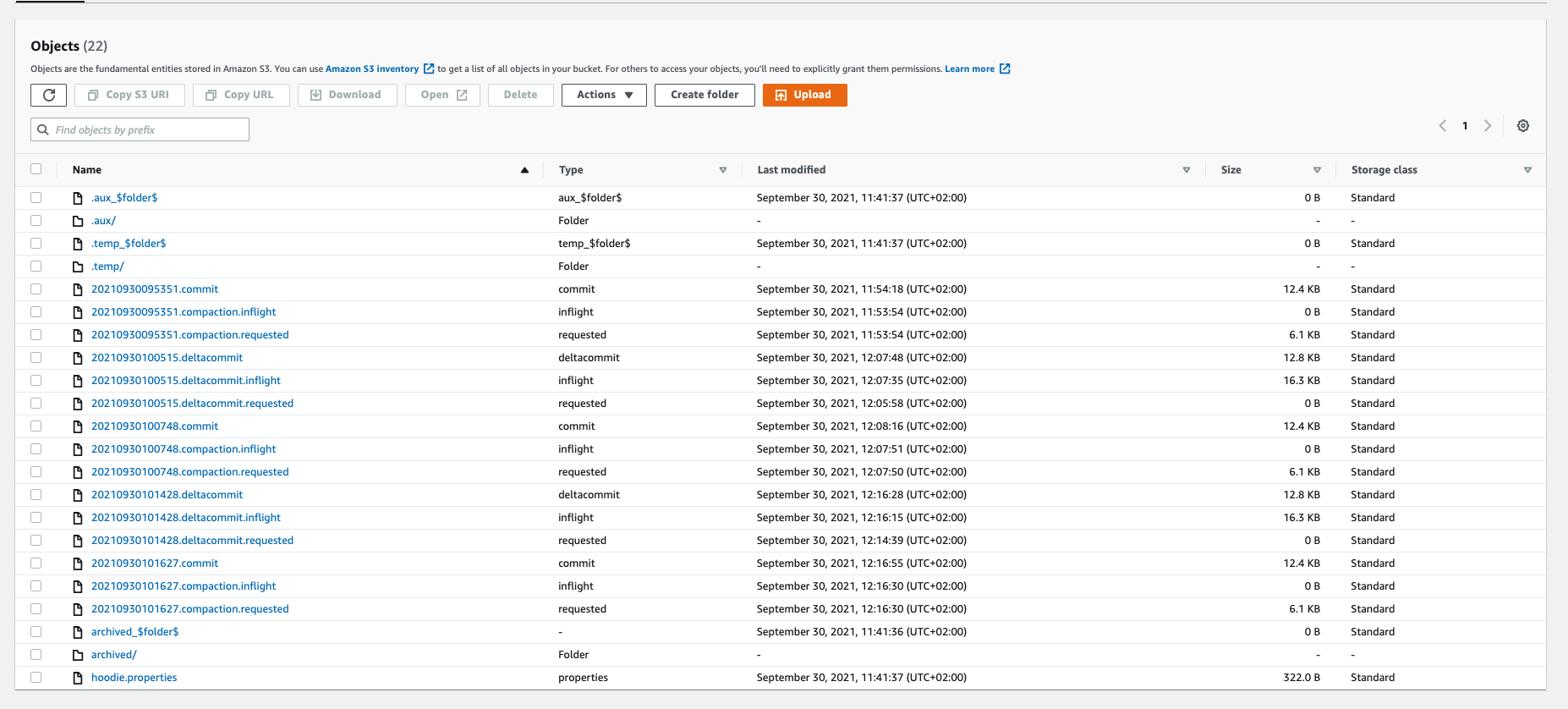

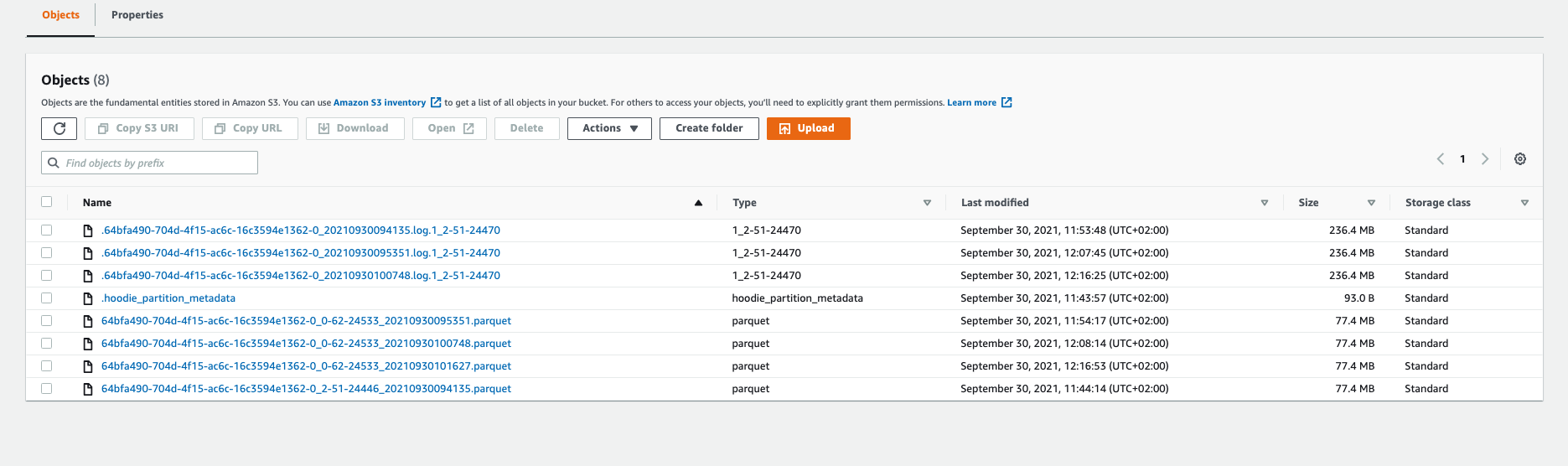

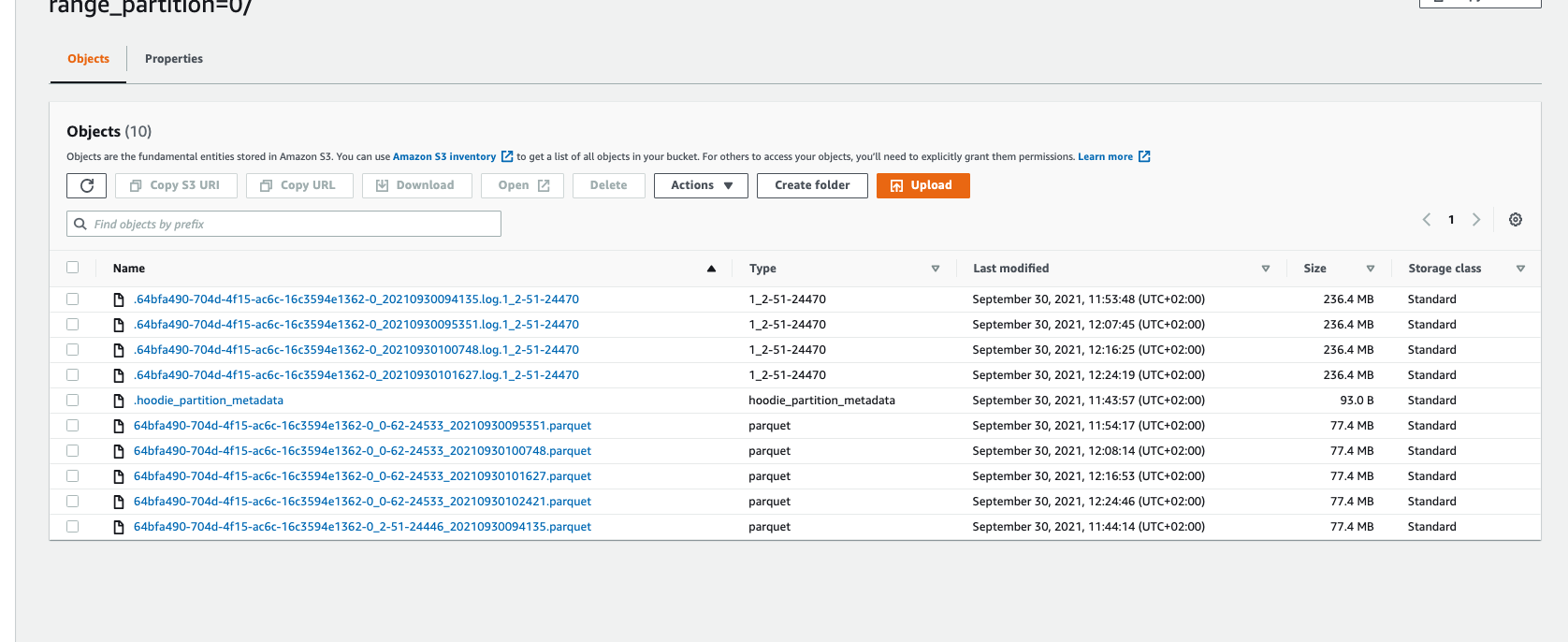

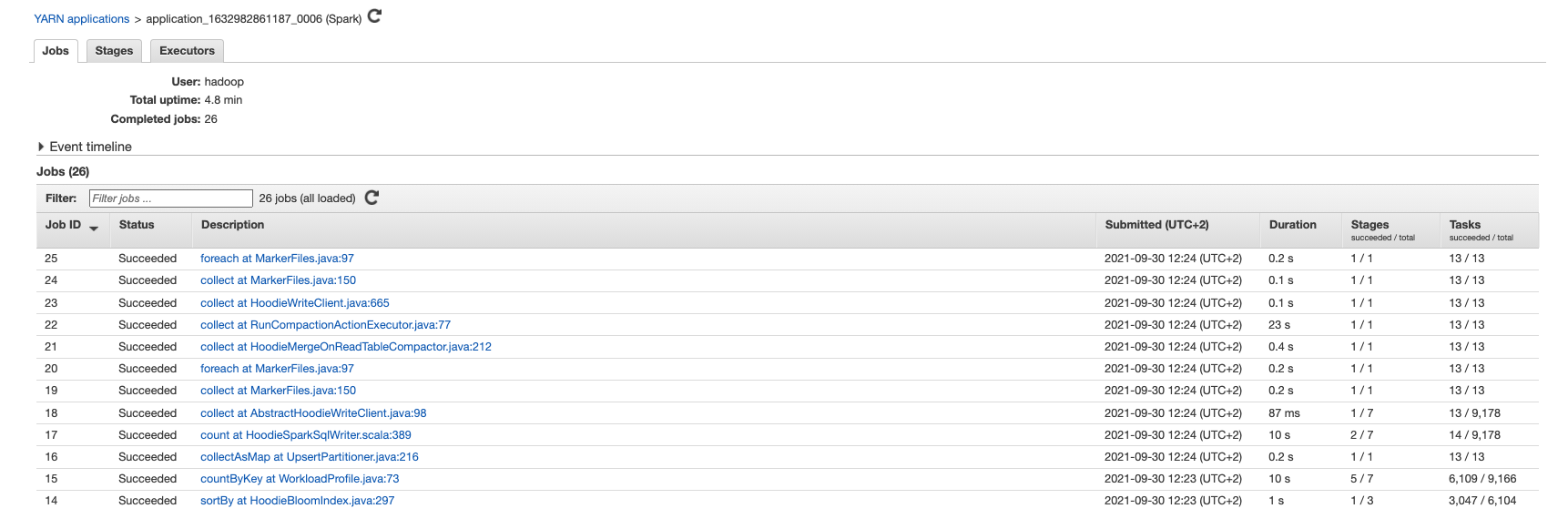

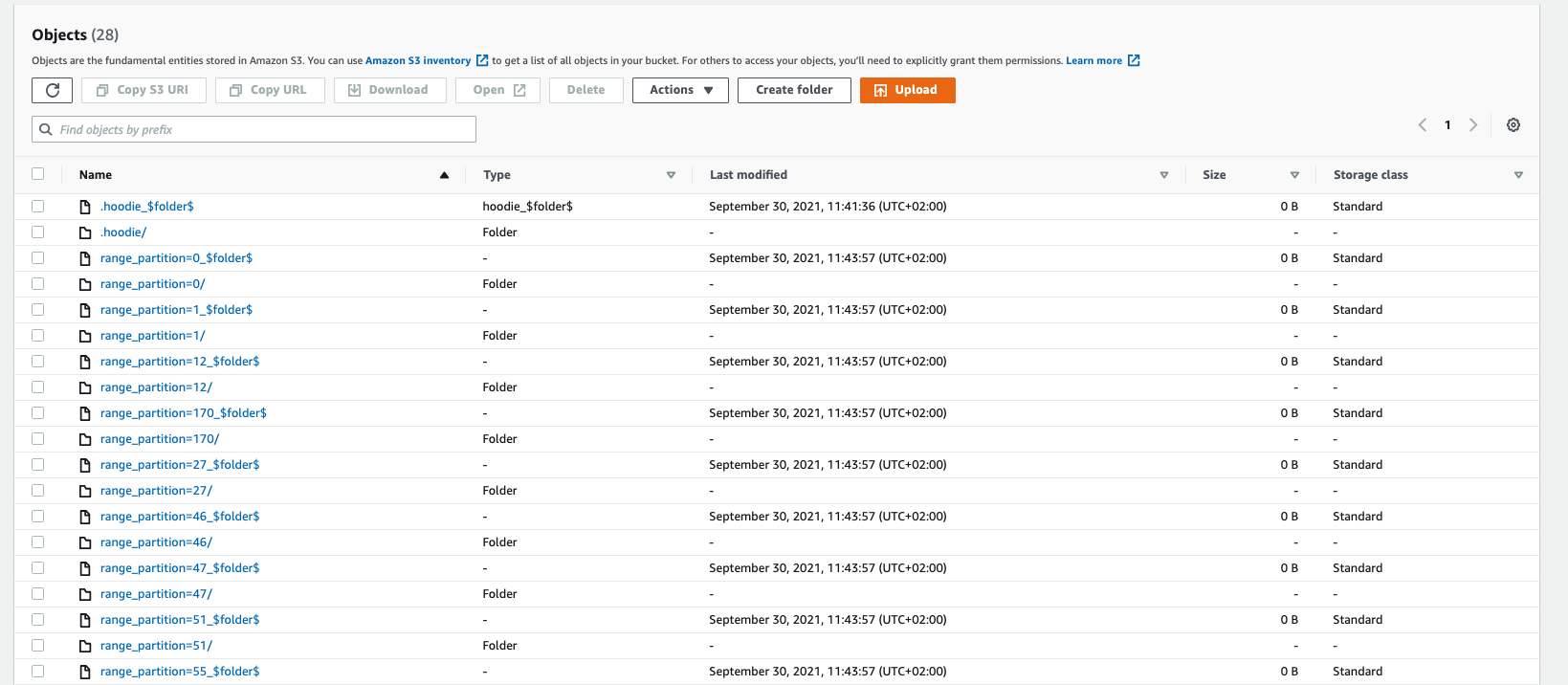

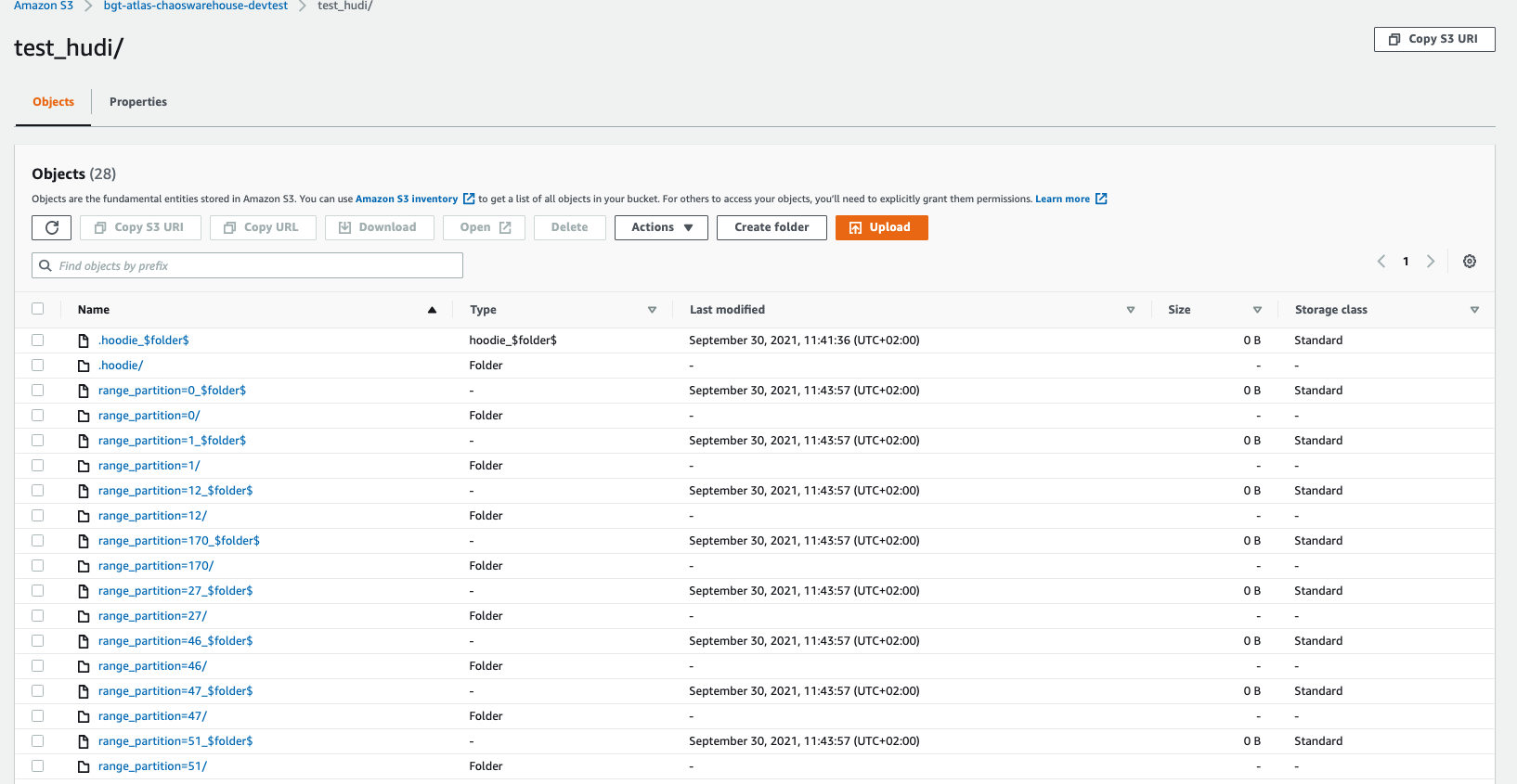

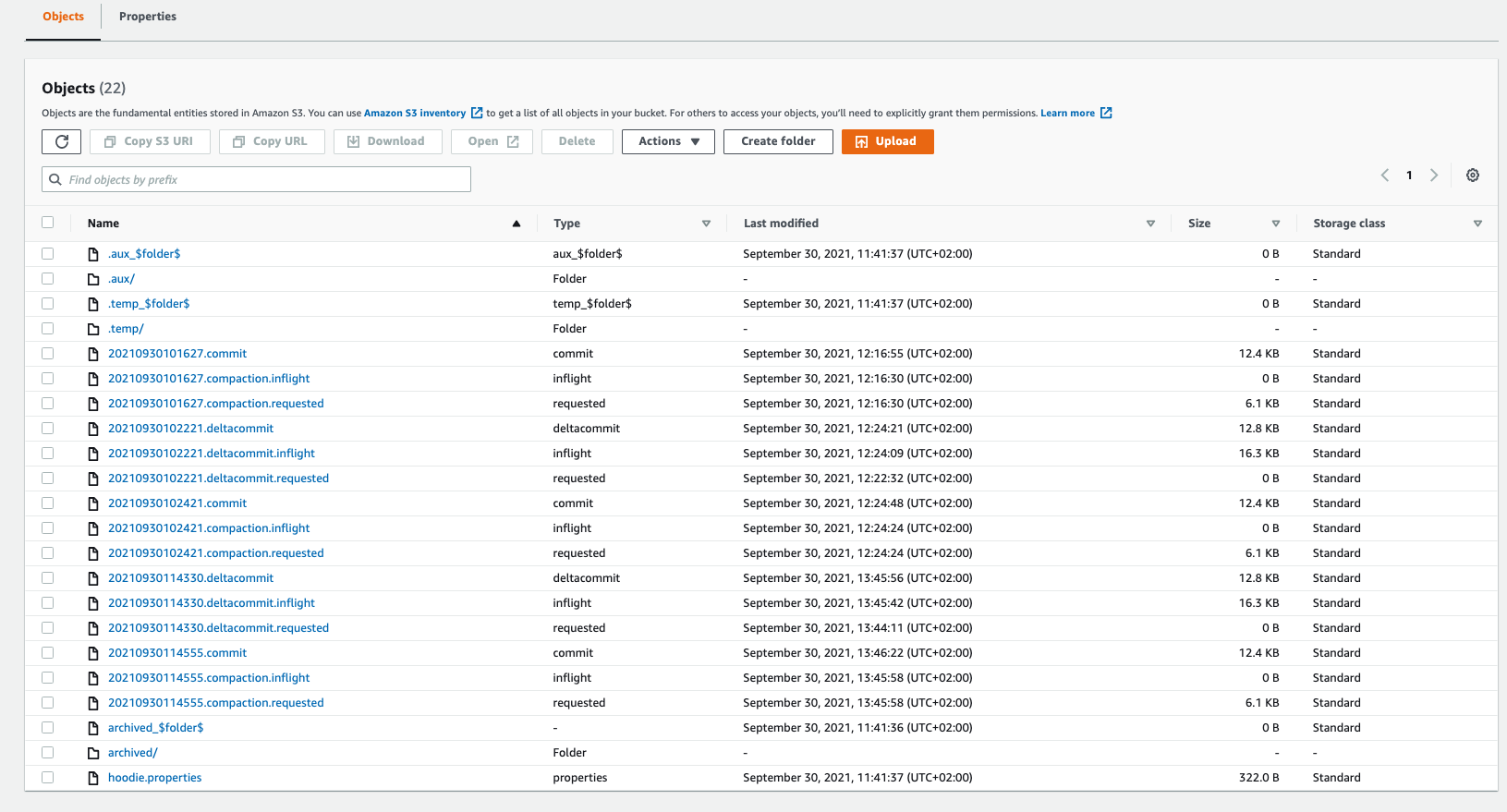

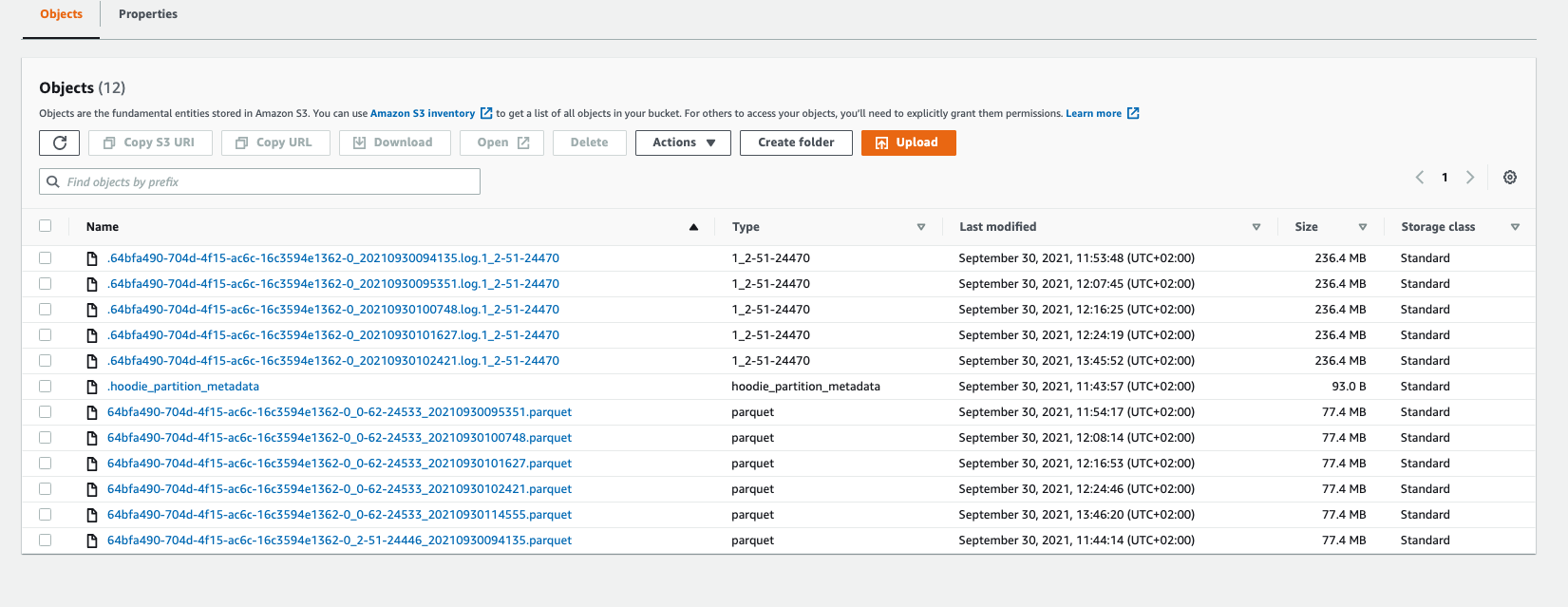

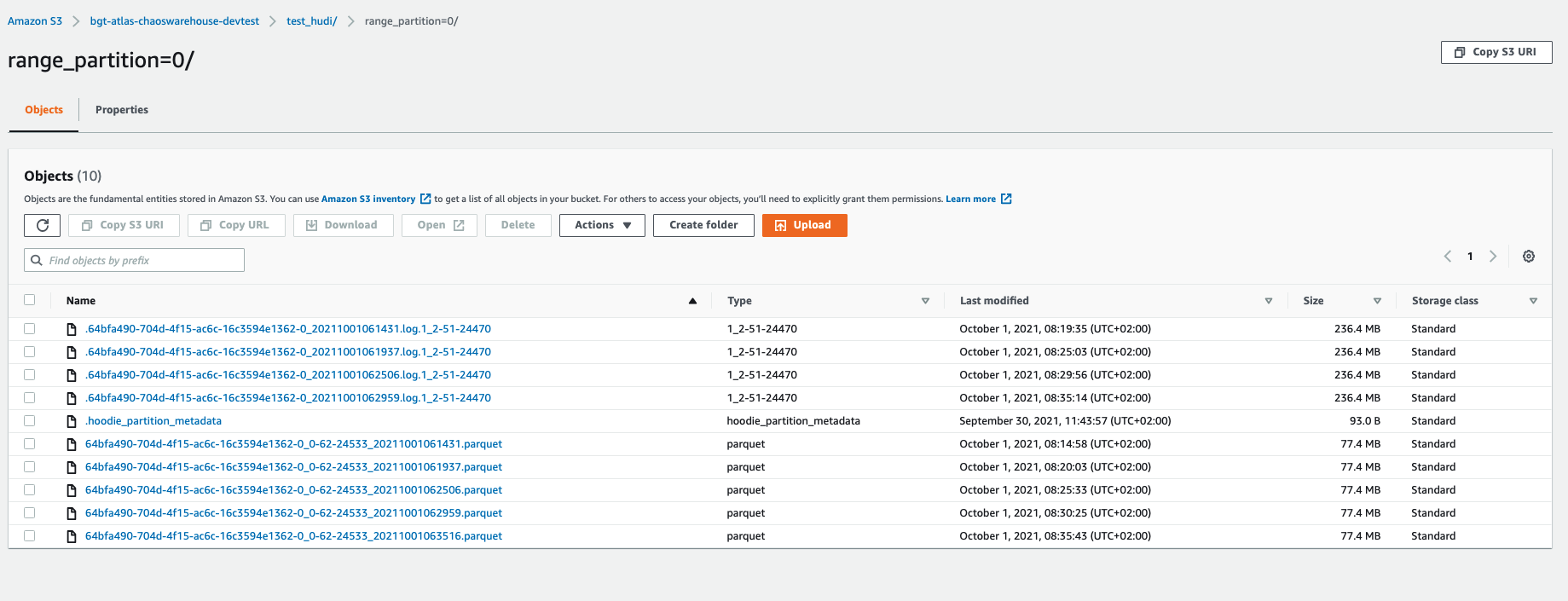

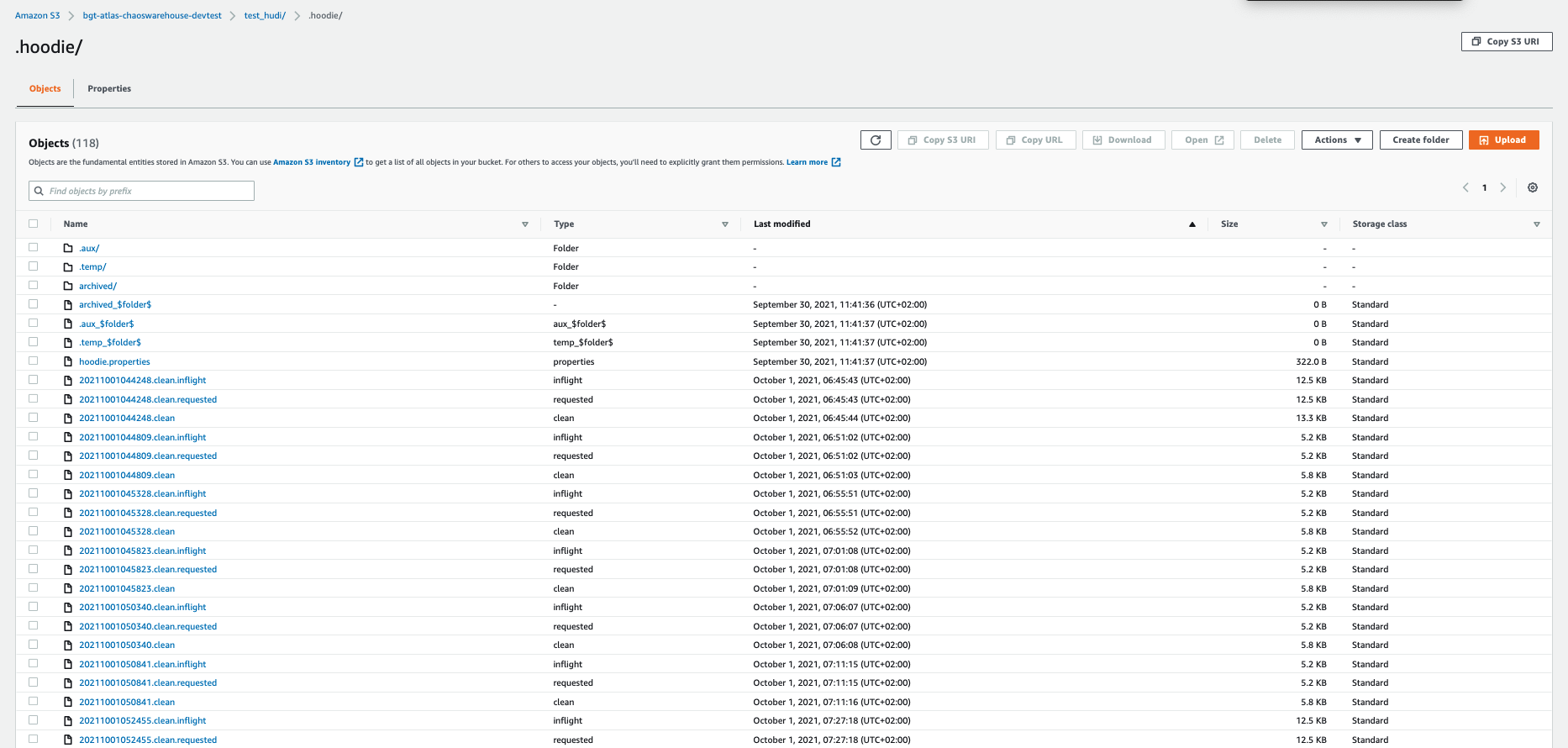

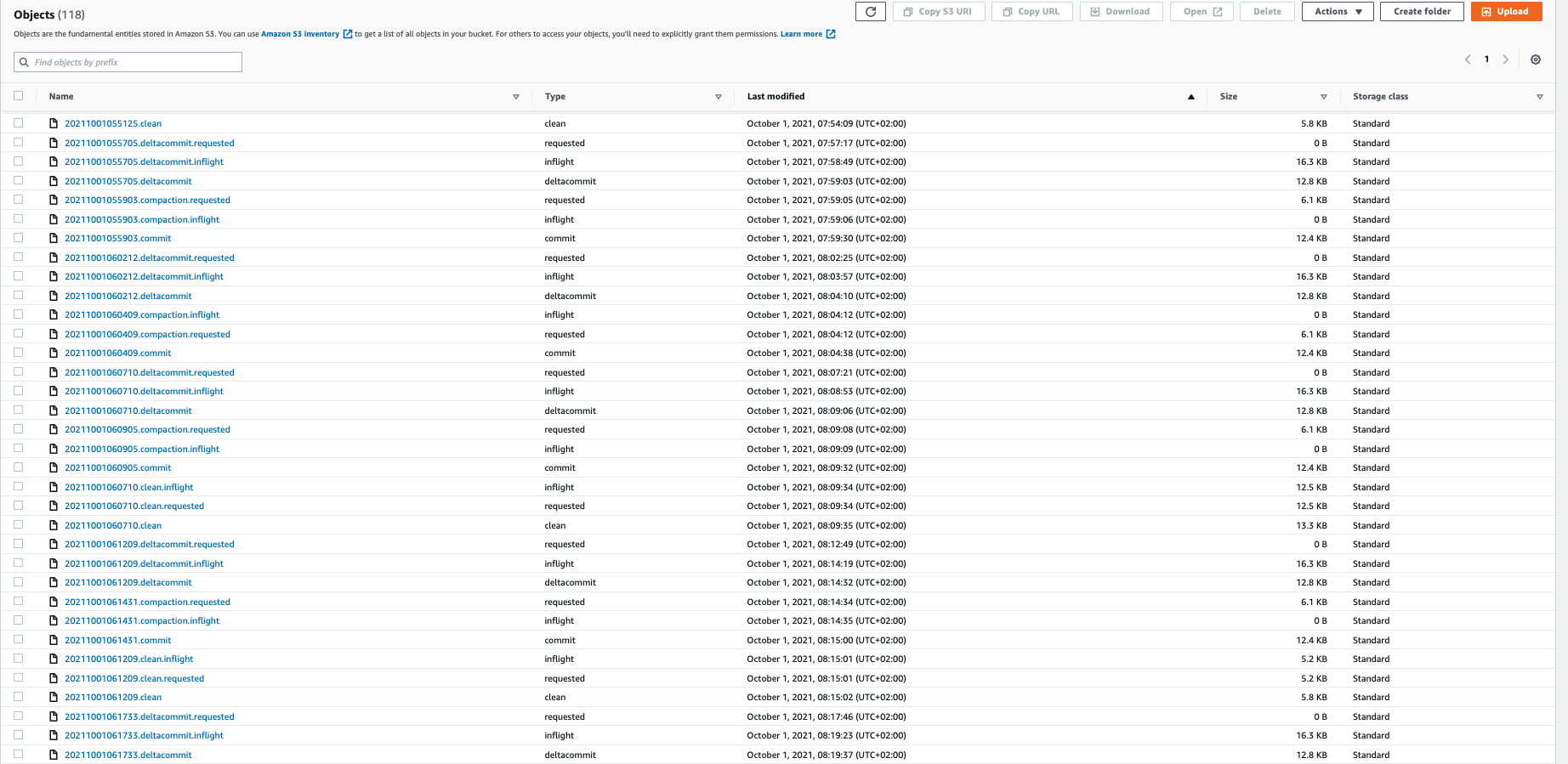

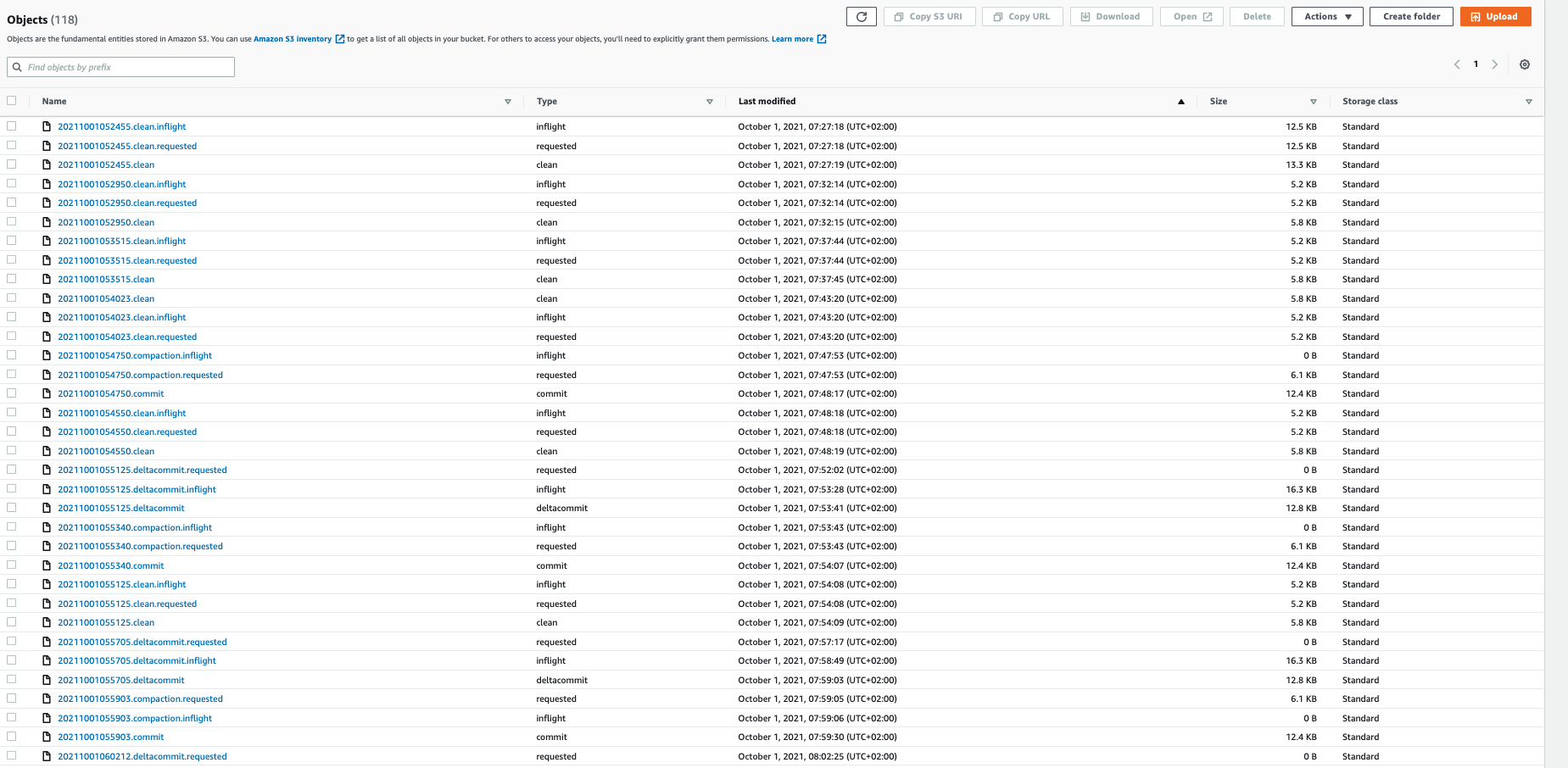

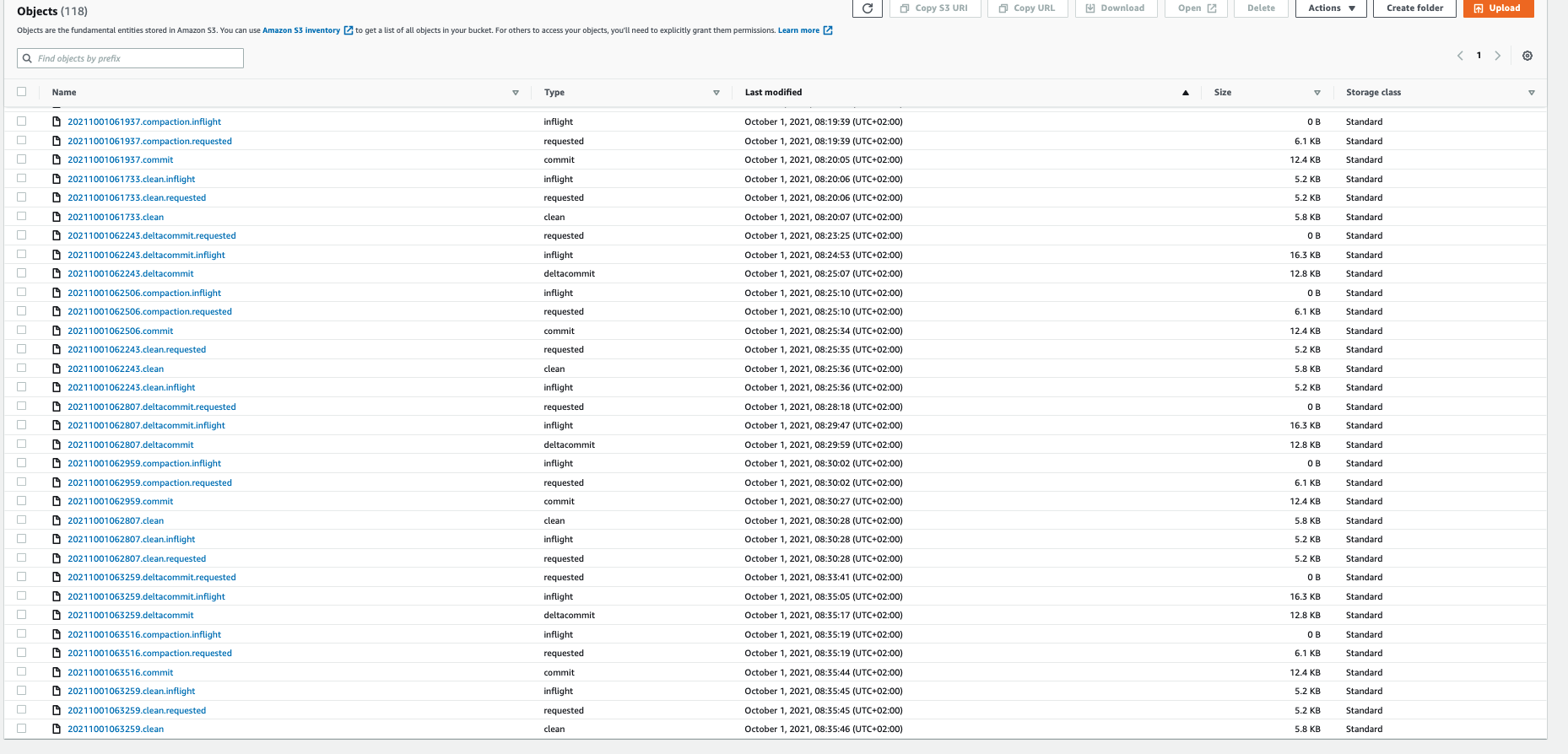

Attached is the data for:

1 - first execution of the Spark job

2 - second execution of the Spark job

3 - third execution of the Spark job

4 - fourth execution of the Spark job

5 - fifth execution of the Spark job

6 - sixth execution of the Spark job


[GitHub] [hudi] nsivabalan commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-946363380

@codope: Can you create a ticket for adding the ability to clean up dangling data files via hudi-cli?


[GitHub] [hudi] bgt-cdedels commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

bgt-cdedels commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-937333824

Yes, my question is: if our configuration was trimming commits from timeline but files were not deleted by cleaner, is there a way to remove (manually clean) those files without losing any data?


[GitHub] [hudi] nsivabalan edited a comment on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

nsivabalan edited a comment on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-931525996

Thanks. Here is what is possibly happening. If you can trigger more updates, eventually you will see cleaning kick in.

In short, this has to do with the MOR table type. The cleaner needs to see N commits, not delta commits, before it can clean things up. I will try to explain what that means, but it is going to be lengthy.

Let me first explain data files and delta log files.

In Hudi, base (or data) files are in Parquet format, and delta log files are Avro with a .log extension. Base files are created with commits, and log files are created with delta commits.

Each data file can have zero or more log files. They represent updates to the data in the respective base file.

For instance, here is a simple example.

```

base_file_1_c1,

log_file_1_c1_v1

log_file_1_c1_v2

base_file_2_c2

```

In the above example, three commits were made: C1, C2 and C3.

C1:

base_file_1_c1 was created.

C2:

base_file_2_c2 was created, and some updates were applied to base_file_1, so log_file_1_c1_v1 got created.

C3:

More updates were applied to base_file_1, so log_file_1_c1_v2 got created.

So, if we keep making more commits similar to C3, only new log files will be added. These are not considered commits from a cleaning standpoint.

Hudi has a process called compaction, which merges a base file and its corresponding log files into a new version of the base file.

Let's say compaction kicks in with commit time C4.

```

base_file_1_c1,

log_file_1_c1_v1

log_file_1_c1_v2

base_file_2_c2

base_file_1_c4

```

base_file_1_c4 is nothing but (base_file_1_c1 + log_file_1_c1_v1 + log_file_1_c1_v2).

Now, let's say you have configured cleaner commits retained as 1. Then (base_file_1_c1 + log_file_1_c1_v1 + log_file_1_c1_v2) would be cleaned up, because compaction created a newer version of this base file and hence the older version is eligible for cleaning. That is not the case for base_file_2_c2, because it has only one version.

So, in your case, only when 4 or 5 compactions have happened could you possibly see the cleaner kicking in. From your last snapshot, I see only 3 compactions so far.
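The eligibility rule in this example can be sketched as a toy model. This is not Hudi's cleaner: the function name, the `slices_retained` parameter (a stand-in for "cleaner commits retained" in the example) and the parsing of the illustrative file names are all assumptions made for the sketch.

```python
from collections import defaultdict


def eligible_for_cleaning(file_names, slices_retained=1):
    """Toy model: return files belonging to older versions of a file group.

    File names follow the illustrative pattern used above, e.g.
    base_file_<group>_c<commit> and log_file_<group>_c<commit>_v<n>.
    """
    if slices_retained < 1:
        raise ValueError("slices_retained must be at least 1")

    # file group -> base commit number -> files in that version (base + logs)
    slices = defaultdict(lambda: defaultdict(list))
    for name in file_names:
        parts = name.split("_")
        group, commit = parts[2], int(parts[3][1:])
        slices[group][commit].append(name)

    eligible = []
    for by_commit in slices.values():
        # Everything except the newest `slices_retained` versions is eligible.
        for commit in sorted(by_commit)[:-slices_retained]:
            eligible.extend(by_commit[commit])
    return sorted(eligible)


before_compaction = [
    "base_file_1_c1",
    "log_file_1_c1_v1",
    "log_file_1_c1_v2",
    "base_file_2_c2",
]
after_compaction = before_compaction + ["base_file_1_c4"]

print(eligible_for_cleaning(before_compaction))
print(eligible_for_cleaning(after_compaction))
```

Before compaction nothing is eligible, because every file group has a single version. Once base_file_1_c4 exists, the older version of file group 1 (its base file plus both log files) becomes eligible, while base_file_2_c2 stays.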


[GitHub] [hudi] nsivabalan commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-936736174

Or is your question: if, due to misconfiguration, archival trimmed some commits from the timeline that the cleaner did not get a chance to clean, is there a way to clean them up neatly?


[GitHub] [hudi] bgt-cdedels commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

bgt-cdedels commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-936650190

@nsivabalan - thanks for the help. If we increase hoodie.keep.max.commits to 10, will that also delete any old commits from the archival timeline when the cleaner is run the next time? If not, is there a way to manually remove the files associated with those old commits?


[GitHub] [hudi] nsivabalan commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-933549686

Let me know if you need anything else; otherwise we can close this issue out.



[GitHub] [hudi] codope commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

codope commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-946810769

https://issues.apache.org/jira/browse/HUDI-2580


[GitHub] [hudi] nsivabalan commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-931540799

Also, can you try setting this to a higher number?

hoodie.keep.max.commits: maybe 10.


[GitHub] [hudi] nsivabalan commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-937434037

I am not aware of any easy option, nor does hudi-cli have an option for this.

@vinothchandar @bhasudha @bvaradar @n3nash: any suggestions here? Here is the question:

If archival configs were set aggressively compared to the cleaner's, and archival trimmed some commits from the timeline, the cleaner will not have a chance to clean the data files pertaining to those commits. If this happened for a table, what do we suggest users do to clean up those data files?


[GitHub] [hudi] mauropelucchi commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

mauropelucchi commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-932016039

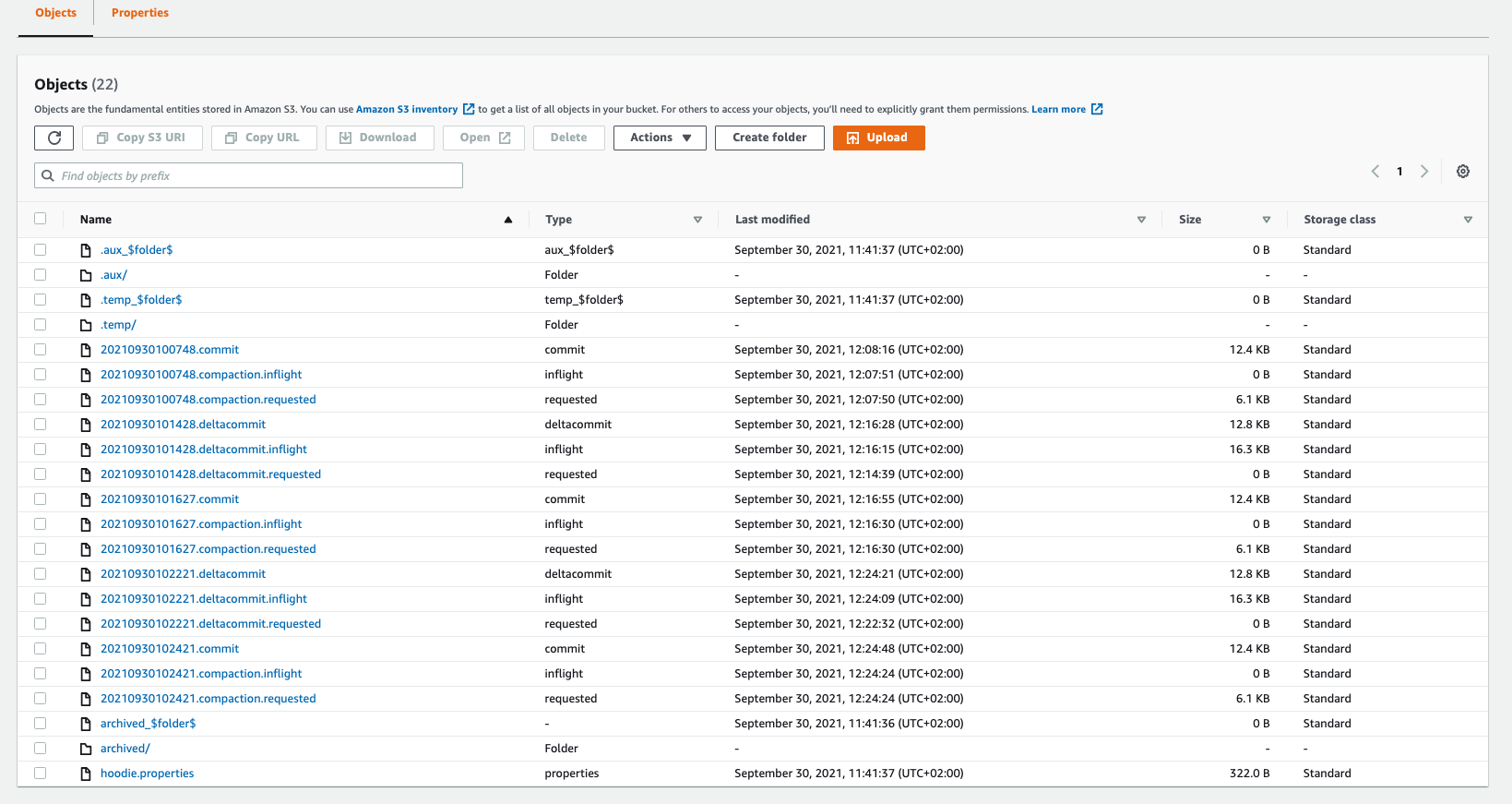

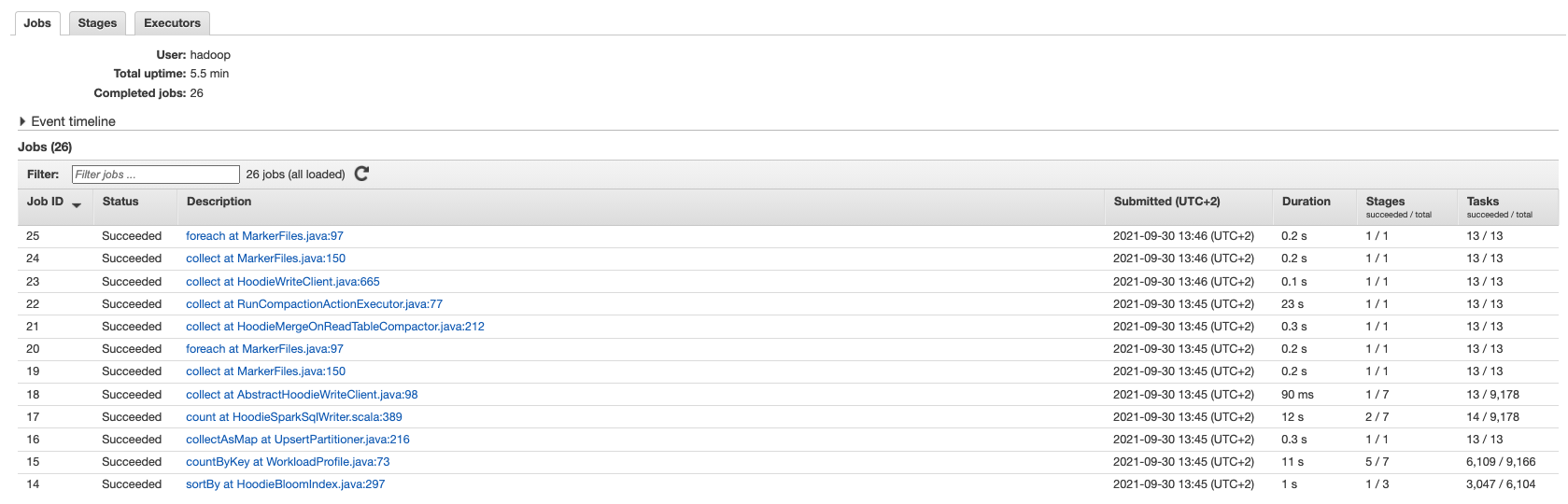

Hi @nsivabalan... something is moving! With your suggestion (**hoodie.keep.max.commits: 20**)

this is the result:

Can you explain what is happening and how each parameter of our hudi conf is involved?


[GitHub] [hudi] nsivabalan edited a comment on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

nsivabalan edited a comment on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-936729727

Let me illustrate with an example.

Archival works with the timeline, whereas the cleaner deals with data files. This difference is important for understanding the interplay here.

Let's say these are the 3 config values.

cleaner commits retained: 4

keep.min.commits for archival: 5

keep.max.commits for archival: 10

Let's say you start making commits to Hudi.

C1, C2, C3, C4.

When C5 is added, the cleaner will clean up all data files pertaining to C1.

After this, the timeline will still show C1, C2, C3, C4, C5, but the data files for C1 will have been deleted.

Then more commits happen.

C6, C7, C8, C9...

So the cleaner ensures that, except for the last 4 commits, all data files pertaining to older commits are cleaned up.

After C10, here is how storage looks.

C1, C2 -> C10 in timeline.

All data files pertaining to C1, C2, ... up to C6 are cleaned up. The rest of the commits are intact.

After C11, archival kicks in. Since we have 11 commits > the keep.max.commits config value, archival will remove C1 to C6 from the timeline, basically leaving the timeline with keep.min.commits (i.e. 5) commits.

So, here is how the timeline will look after archival:

C7, C8, C9, C10, C11.

And then the cleaner kicks in and cleans up data files pertaining to C7.

Hope this clarifies things. Let me know if you need more details.
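The walkthrough above can be replayed with a small simulation. It is a toy model of the example only, not Hudi's archival or cleaner code; the function name `simulate` and its parameters are made up for illustration, and it assumes archival runs before the cleaner on each commit, as in the C11 step.

```python
def simulate(num_commits, retained=4, keep_min=5, keep_max=10):
    """Replay the example: return (active timeline, commits whose files were cleaned)."""
    timeline = []  # commits still visible in the active timeline
    cleaned = []   # commits whose data files have been deleted

    for i in range(1, num_commits + 1):
        timeline.append(f"C{i}")

        # Archival: once the timeline exceeds keep_max, trim it down to keep_min.
        if len(timeline) > keep_max:
            timeline = timeline[-keep_min:]

        # Cleaner: delete data files for all but the last `retained` commits
        # that are still present in the active timeline.
        for commit in timeline[:-retained]:
            if commit not in cleaned:
                cleaned.append(commit)

    return timeline, cleaned


for n in (5, 10, 11):
    timeline, cleaned = simulate(n)
    print(f"after C{n}: timeline={timeline} cleaned={cleaned}")
```

With the example values (4, 5, 10), the run reproduces the states described above: C1 is cleaned after C5, C1 to C6 are cleaned after C10, and after C11 the timeline is C7 to C11 with C7 newly cleaned.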


[GitHub] [hudi] nsivabalan commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-931533803

Sorry, I see that you do have 5 compactions.

Can you try enabling inline compaction?

hoodie.compact.inline=true


[GitHub] [hudi] mauropelucchi commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

mauropelucchi commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-932269697

@nsivabalan Ok, what is the right conf in our scenario?

It is unusual for us to have 20 commits by partition, usually we have from 4 to 10...


[GitHub] [hudi] mauropelucchi commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

mauropelucchi commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-931730823

@nsivabalan We already use your suggestions, so our current conf is:

'hoodie.table.name': table_name,

'hoodie.datasource.write.recordkey.field': 'posting_key',

'hoodie.datasource.write.partitionpath.field': 'range_partition',

'hoodie.datasource.write.table.name': table_name,

'hoodie.datasource.write.precombine.field': 'update_date',

'hoodie.datasource.write.table.type': 'MERGE_ON_READ',

'hoodie.cleaner.policy': 'KEEP_LATEST_COMMITS',

'hoodie.consistency.check.enabled': True,

'hoodie.bloom.index.filter.type': 'dynamic_v0',

'hoodie.bloom.index.bucketized.checking': False,

'hoodie.memory.merge.max.size': '2004857600000',

'hoodie.upsert.shuffle.parallelism': parallelism,

'hoodie.insert.shuffle.parallelism': parallelism,

'hoodie.bulkinsert.shuffle.parallelism': parallelism,

'hoodie.parquet.small.file.limit': '204857600',

'hoodie.parquet.max.file.size': str(self.__parquet_max_file_size_byte),

'hoodie.memory.compaction.fraction': '384402653184',

'hoodie.write.buffer.limit.bytes': str(128 * 1024 * 1024),

**'hoodie.compact.inline': True,**

'hoodie.compact.inline.max.delta.commits': 1,

'hoodie.datasource.compaction.async.enable': False,

'hoodie.parquet.compression.ratio': '0.35',

'hoodie.logfile.max.size': '268435456',

'hoodie.logfile.to.parquet.compression.ratio': '0.5',

'hoodie.datasource.write.hive_style_partitioning': True,

'hoodie.keep.min.commits': 5,

**'hoodie.keep.max.commits': 6,**

'hoodie.copyonwrite.record.size.estimate': 32,

**'hoodie.cleaner.commits.retained': 4,**

'hoodie.clean.automatic': True



[GitHub] [hudi] xushiyan closed issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

xushiyan closed issue #3739:

URL: https://github.com/apache/hudi/issues/3739


[GitHub] [hudi] nsivabalan commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-931544474

Likely it's the archival that is coming into play. Let me know how it goes if you set a higher value for hoodie.keep.max.commits.


[GitHub] [hudi] nsivabalan commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-931743495

sure. can we try

hoodie.keep.max.commits: 20



[GitHub] [hudi] nsivabalan edited a comment on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

nsivabalan edited a comment on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-932199950

Awesome, good to know. So here is the thing.

Hudi has an active timeline and an archived timeline. Archival kicks in on every commit and moves some older commits from the active to the archived timeline. This keeps the number of active commits (the contents of the .hoodie folder) within bounds.

All operations within Hudi (inserts, upserts, compaction, cleaning, etc.) operate only on commits in the active timeline.

Since we have very aggressive archival configs, archival always keeps the number of commits in the active timeline low. So when the cleaner checks whether there are any commits eligible to be cleaned up, it cannot find more than the configured value. If we increase the max commits for archival to kick in to, let's say, 20, archival will not kick in until there are 20 commits, and so the cleaner should be able to find more commits than the configured value, and cleaning will trigger.

Maybe we can add a warning, or even throw an exception, if archival configs are aggressive compared to the cleaner's, because otherwise cleaning silently becomes moot.

I have filed a [jira](https://issues.apache.org/jira/browse/HUDI-2511) in this regard and will follow up.

Let me know if you need any more details. If not, can we close this issue out?
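A pre-write sanity check along the lines of the warning suggested above could look like the sketch below. It is not part of Hudi: the helper name `archival_headroom` is hypothetical, the ordering it checks (retained < keep.min < keep.max) is an assumption, and the thread does not say how much headroom is enough, so the helper only reports the gap and leaves the judgment to the caller.

```python
def archival_headroom(options):
    """Return (problems, headroom) for the three settings discussed in this thread.

    headroom = hoodie.keep.max.commits - hoodie.cleaner.commits.retained
    """
    retained = int(options["hoodie.cleaner.commits.retained"])
    keep_min = int(options["hoodie.keep.min.commits"])
    keep_max = int(options["hoodie.keep.max.commits"])

    problems = []
    # Assumed ordering: cleaner retained < archival min < archival max.
    if keep_min <= retained:
        problems.append(
            "hoodie.keep.min.commits should be greater than "
            "hoodie.cleaner.commits.retained"
        )
    if keep_max <= keep_min:
        problems.append(
            "hoodie.keep.max.commits should be greater than "
            "hoodie.keep.min.commits"
        )
    return problems, keep_max - retained


original = {
    "hoodie.cleaner.commits.retained": 4,
    "hoodie.keep.min.commits": 5,
    "hoodie.keep.max.commits": 6,
}
# The change tried in this thread: only the archival maximum is raised.
tuned = {**original, "hoodie.keep.max.commits": 20}

print(archival_headroom(original))
print(archival_headroom(tuned))
```

Note that the original settings pass the ordering check; only the small headroom hints at how tightly archival follows the cleaner. Values given as strings are accepted too, since each one is passed through `int()`.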


[GitHub] [hudi] nsivabalan edited a comment on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

nsivabalan edited a comment on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-931533803

Sorry, I see that you do have 5 compactions. Let me dig in more.

Can you enable info logs and share them here? I don't even see cleaning getting triggered; otherwise we would have seen at least a clean.requested meta file.
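One way to check for such meta files is to filter a listing of the table's .hoodie folder. The sketch below is a hypothetical helper, not a Hudi API: it takes file names you have already listed (for example from S3), and it assumes clean instants end in `.clean.requested`, `.clean.inflight` or `.clean`. The instant timestamps in the usage are made up.

```python
def clean_meta_files(hoodie_file_names):
    """Return the names that look like cleaner meta files, sorted by name."""
    # Assumed suffixes for the requested, inflight and completed clean states.
    suffixes = (".clean.requested", ".clean.inflight", ".clean")
    return sorted(name for name in hoodie_file_names if name.endswith(suffixes))


listing = [
    "20210930141652.deltacommit",
    "20210930141700.commit",
    "20210930141800.clean.requested",
    "hoodie.properties",
]
print(clean_meta_files(listing))
```

An empty result would be consistent with cleaning never having been scheduled for the table.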




[GitHub] [hudi] nsivabalan commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-936729727

Let me illustrate with an example.

Archival works with the timeline, whereas the cleaner deals with data files. This difference is important for understanding the interplay here.

Let's say these are the 3 config values.

cleaner commits: 4

keep.min.commits for archival: 5

keep.max.commits for archival: 10

Let's say you start making commits to hudi.

C1, C2, C3, C4.

When C5 is added, the cleaner will clean up all data files pertaining to C1.

After this, the timeline will still show C1, C2, C3, C4, C5, but the data files for C1 will have been deleted.

And then more commits happen.

C6, C7, C8, C9...

So the cleaner ensures that, except for the last 4 commits, all data files pertaining to older commits are cleaned up.

After C10, here is how storage looks.

C1 through C10 are in the timeline.

All data files pertaining to C1 through C6 are cleaned up. The rest of the commits are intact.

After C11, archival kicks in. Since we have 11 commits, which is more than the keep.max.commits config value,

archival will remove C1 to C6 from the timeline, basically leaving the timeline with keep.min.commits (i.e. 5) commits.

So, here is how the timeline will look after archival:

C7, C8, C9, C10, C11.

And then the cleaner kicks in and cleans up the data files pertaining to C7.
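
The walkthrough above can be replayed with a small Python sketch. This is a toy model under the assumptions of this example (archival runs before the cleaner on each commit, and the cleaner only looks at the active timeline); it is not Hudi's actual implementation, and `simulate` and its parameter names are made up for illustration.

```python
def simulate(num_commits, cleaner_commits_retained=4,
             keep_min_commits=5, keep_max_commits=10):
    """Toy replay of the example; returns (active timeline, cleaned commits)."""
    timeline = []  # commits still visible in the active timeline
    cleaned = []   # commits whose data files have been deleted
    for n in range(1, num_commits + 1):
        timeline.append(f"C{n}")
        # Archival: once the timeline exceeds keep.max.commits,
        # trim it down to the newest keep.min.commits entries.
        if len(timeline) > keep_max_commits:
            timeline = timeline[-keep_min_commits:]
        # Cleaner: sees only the active timeline and spares the last N commits.
        for commit in timeline[:-cleaner_commits_retained]:
            if commit not in cleaned:
                cleaned.append(commit)
    return timeline, cleaned


timeline, cleaned = simulate(11)
print(timeline)  # active timeline after C11
print(cleaned)   # commits whose data files were cleaned
```

In the same toy model, `simulate(20, keep_min_commits=2, keep_max_commits=3)` never cleans anything: archival keeps the active timeline shorter than the 4 commits the cleaner retains, so the cleaner never finds an eligible commit.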

Hope this clarifies things. Let me know if you need more details.


[GitHub] [hudi] nsivabalan commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-931537607

This may be a dumb thought, but can you try setting the config values as strings?

'hoodie.compact.inline': 'true'

'hoodie.cleaner.commits.retained':'4'
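
If it helps, here is a minimal sketch for converting a whole options dict to strings before passing it to the writer. The helper name `stringify_options` is made up for illustration; Python booleans are lowercased because `str(True)` would give `'True'` rather than `'true'`.

```python
def stringify_options(options):
    """Return a copy of `options` with every value converted to a string."""
    converted = {}
    for key, value in options.items():
        if isinstance(value, bool):
            # Lowercase booleans: 'true' / 'false' instead of 'True' / 'False'.
            converted[key] = "true" if value else "false"
        else:
            converted[key] = str(value)
    return converted


example = stringify_options({
    "hoodie.compact.inline": True,
    "hoodie.cleaner.commits.retained": 4,
})
print(example)
```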


[GitHub] [hudi] nsivabalan commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-932199950

Awesome, good to know. So here is the thing.

Hudi has something called the active timeline and the archived timeline. Archival will kick in for every commit and move some older commits from active to archived. This is to keep the number of active commits (the contents of the .hoodie folder) within bounds.

All operations within hudi (inserts, upserts, compaction, cleaning etc.) operate only on commits in the active timeline.

Since we have very aggressive archival configs, archival always keeps the number of commits in the active timeline low. So when the cleaner checks whether there are any commits eligible to be cleaned up, it can't find more than the configured value. If we increase the max commits for archival to kick in, let's say to 20, archival will not kick in until there are 20 commits. The cleaner should then be able to find more commits than the configured value, and cleaning will trigger.

Maybe we can add a warning or even throw an exception if archival configs are aggressive compared to the cleaner, because otherwise cleaning silently becomes moot.

I have filed a [jira](https://issues.apache.org/jira/browse/HUDI-2511) in this regard and will follow up.

Let me know if you need any more details. If not, can we close this issue out?
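
Until something like that exists in hudi itself, a check on the user side could look like the sketch below. It is only an illustration of the idea, not Hudi code: the function name `archival_vs_cleaner_problems` is made up, and it assumes all three keys (`hoodie.cleaner.commits.retained`, `hoodie.keep.min.commits`, `hoodie.keep.max.commits`) are set explicitly in the options dict.

```python
def archival_vs_cleaner_problems(options):
    """Return a list of human-readable problems; an empty list means the configs look sane."""
    retained = int(options["hoodie.cleaner.commits.retained"])
    keep_min = int(options["hoodie.keep.min.commits"])
    keep_max = int(options["hoodie.keep.max.commits"])
    problems = []
    # Archival must leave more commits in the active timeline than the cleaner
    # retains, otherwise the cleaner never finds an eligible commit.
    if keep_min <= retained:
        problems.append(
            f"hoodie.keep.min.commits ({keep_min}) should be greater than "
            f"hoodie.cleaner.commits.retained ({retained})"
        )
    if keep_max <= keep_min:
        problems.append(
            f"hoodie.keep.max.commits ({keep_max}) should be greater than "
            f"hoodie.keep.min.commits ({keep_min})"
        )
    return problems


aggressive = {
    "hoodie.cleaner.commits.retained": "4",
    "hoodie.keep.min.commits": "2",
    "hoodie.keep.max.commits": "3",
}
for problem in archival_vs_cleaner_problems(aggressive):
    print("WARNING:", problem)
```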


[GitHub] [hudi] nsivabalan commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-948247852

@bgt-cdedels : sorry, I don't think there is any easier way. I can only think of a naive solution: you can try to create a new table with the data from this table and then delete the old one.


[GitHub] [hudi] nsivabalan commented on issue #3739: Hoodie clean is not deleting old files

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on issue #3739:

URL: https://github.com/apache/hudi/issues/3739#issuecomment-933549405

You can set it to 10. That should work out.
