Posted to commits@spark.apache.org by li...@apache.org on 2018/07/24 18:31:34 UTC

spark git commit: [SPARK-24812][SQL] Last Access Time in the table description is not valid

Repository: spark

Updated Branches:

refs/heads/master 9d27541a8 -> d4a277f0c

[SPARK-24812][SQL] Last Access Time in the table description is not valid

## What changes were proposed in this pull request?

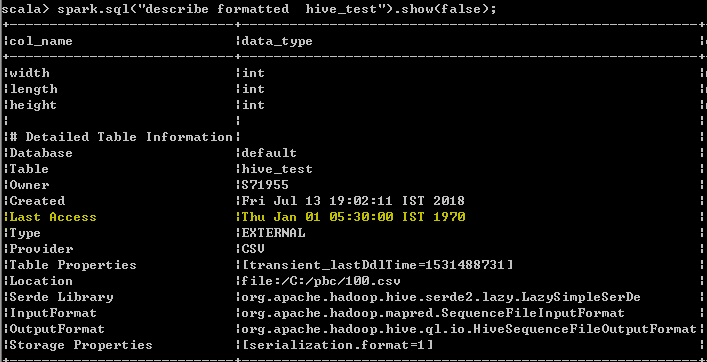

Last Access Time is always displayed as the wrong date, Thu Jan 01 05:30:00 IST 1970, when the user runs the DESC FORMATTED table command.

In Hive it is displayed as "UNKNOWN", which makes more sense than displaying a wrong date. This appears to be a current limitation in Hive as well, so it is better to follow the Hive behavior until the limitation is resolved there.

spark client output

Hive client output
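
The rule described above can be sketched in isolation, without a Spark session. This is only a minimal illustration: the object `LastAccessSketch` and the helper `formatLastAccess` are hypothetical names that do not exist in Spark, and the `-1` sentinel is taken from the `interface.scala` hunk in this commit. The exact date string printed for a non-sentinel value depends on the JVM's default time zone.

```scala
import java.util.Date

// Hypothetical standalone helper (not Spark API) mirroring the rule in this patch:
// a last access time of -1 is rendered as "UNKNOWN" instead of a date.
object LastAccessSketch {
  def formatLastAccess(lastAccessTime: Long): String =
    if (lastAccessTime == -1L) "UNKNOWN" else new Date(lastAccessTime).toString

  def main(args: Array[String]): Unit = {
    // Sentinel value: shown as UNKNOWN, matching the Hive client.
    println(formatLastAccess(-1L))
    // A real timestamp in milliseconds (24 July 2018) is still formatted as a date.
    println(formatLastAccess(1532457087000L))
  }
}
```

Keeping the check on the sentinel rather than on the formatted string avoids any dependence on locale or time zone when deciding whether the value is known.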

## How was this patch tested?

A unit test has been added to ensure that the wrong date "Thu Jan 01 05:30:00 IST 1970" is not shown as the value of the Last Access property.

Author: s71955 <su...@gmail.com>

Closes #21775 from sujith71955/master_hive.

Project: http://git-wip-us.apache.org/repos/asf/spark/repo

Commit: http://git-wip-us.apache.org/repos/asf/spark/commit/d4a277f0

Tree: http://git-wip-us.apache.org/repos/asf/spark/tree/d4a277f0

Diff: http://git-wip-us.apache.org/repos/asf/spark/diff/d4a277f0

Branch: refs/heads/master

Commit: d4a277f0ce2d6e1832d87cae8faec38c5bc730f4

Parents: 9d27541

Author: s71955 <su...@gmail.com>

Authored: Tue Jul 24 11:31:27 2018 -0700

Committer: Xiao Li <ga...@gmail.com>

Committed: Tue Jul 24 11:31:27 2018 -0700

----------------------------------------------------------------------

docs/sql-programming-guide.md | 1 +

.../apache/spark/sql/catalyst/catalog/interface.scala | 5 ++++-

.../apache/spark/sql/hive/execution/HiveDDLSuite.scala | 13 +++++++++++++

3 files changed, 18 insertions(+), 1 deletion(-)

----------------------------------------------------------------------

http://git-wip-us.apache.org/repos/asf/spark/blob/d4a277f0/docs/sql-programming-guide.md

----------------------------------------------------------------------

diff --git a/docs/sql-programming-guide.md b/docs/sql-programming-guide.md

index 4bab58a..e815e5b 100644

--- a/docs/sql-programming-guide.md

+++ b/docs/sql-programming-guide.md

@@ -1850,6 +1850,7 @@ working with timestamps in `pandas_udf`s to get the best performance, see

## Upgrading From Spark SQL 2.3 to 2.4

+ - Since Spark 2.4, Spark will display table description column Last Access value as UNKNOWN when the value was Jan 01 1970.

- Since Spark 2.4, Spark maximizes the usage of a vectorized ORC reader for ORC files by default. To do that, `spark.sql.orc.impl` and `spark.sql.orc.filterPushdown` change their default values to `native` and `true` respectively.

- In PySpark, when Arrow optimization is enabled, previously `toPandas` just failed when Arrow optimization is unable to be used whereas `createDataFrame` from Pandas DataFrame allowed the fallback to non-optimization. Now, both `toPandas` and `createDataFrame` from Pandas DataFrame allow the fallback by default, which can be switched off by `spark.sql.execution.arrow.fallback.enabled`.

- Since Spark 2.4, writing an empty dataframe to a directory launches at least one write task, even if physically the dataframe has no partition. This introduces a small behavior change that for self-describing file formats like Parquet and Orc, Spark creates a metadata-only file in the target directory when writing a 0-partition dataframe, so that schema inference can still work if users read that directory later. The new behavior is more reasonable and more consistent regarding writing empty dataframe.

http://git-wip-us.apache.org/repos/asf/spark/blob/d4a277f0/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/catalog/interface.scala

----------------------------------------------------------------------

diff --git a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/catalog/interface.scala b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/catalog/interface.scala

index c6105c5..a4ead53 100644

--- a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/catalog/interface.scala

+++ b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/catalog/interface.scala

@@ -114,7 +114,10 @@ case class CatalogTablePartition(

map.put("Partition Parameters", s"{${parameters.map(p => p._1 + "=" + p._2).mkString(", ")}}")

}

map.put("Created Time", new Date(createTime).toString)

- map.put("Last Access", new Date(lastAccessTime).toString)

+ val lastAccess = {

+ if (-1 == lastAccessTime) "UNKNOWN" else new Date(lastAccessTime).toString

+ }

+ map.put("Last Access", lastAccess)

stats.foreach(s => map.put("Partition Statistics", s.simpleString))

map

}

http://git-wip-us.apache.org/repos/asf/spark/blob/d4a277f0/sql/hive/src/test/scala/org/apache/spark/sql/hive/execution/HiveDDLSuite.scala

----------------------------------------------------------------------

diff --git a/sql/hive/src/test/scala/org/apache/spark/sql/hive/execution/HiveDDLSuite.scala b/sql/hive/src/test/scala/org/apache/spark/sql/hive/execution/HiveDDLSuite.scala

index 31fd4c5..0b3de3d 100644

--- a/sql/hive/src/test/scala/org/apache/spark/sql/hive/execution/HiveDDLSuite.scala

+++ b/sql/hive/src/test/scala/org/apache/spark/sql/hive/execution/HiveDDLSuite.scala

@@ -19,6 +19,7 @@ package org.apache.spark.sql.hive.execution

import java.io.File

import java.net.URI

+import java.util.Date

import scala.language.existentials

@@ -2250,6 +2251,18 @@ class HiveDDLSuite

}

}

+ test("SPARK-24812: desc formatted table for last access verification") {

+ withTable("t1") {

+ sql(

+ "CREATE TABLE IF NOT EXISTS t1 (c1_int INT, c2_string STRING, c3_float FLOAT)")

+ val desc = sql("DESC FORMATTED t1").filter($"col_name".startsWith("Last Access"))

+ .select("data_type")

+ // check if the last access time doesnt have the default date of year

+ // 1970 as its a wrong access time

+ assert(!(desc.first.toString.contains("1970")))

+ }

+ }

+

test("SPARK-24681 checks if nested column names do not include ',', ':', and ';'") {

val expectedMsg = "Cannot create a table having a nested column whose name contains invalid " +

"characters (',', ':', ';') in Hive metastore."
