Posted to reviews@spark.apache.org by sarojchand <gi...@git.apache.org> on 2018/10/27 12:38:44 UTC

[GitHub] spark pull request #22860: Branch 2.4

GitHub user sarojchand opened a pull request:

https://github.com/apache/spark/pull/22860

Branch 2.4

## What changes were proposed in this pull request?

(Please fill in changes proposed in this fix)

## How was this patch tested?

(Please explain how this patch was tested. E.g. unit tests, integration tests, manual tests)

(If this patch involves UI changes, please attach a screenshot; otherwise, remove this)

Please review http://spark.apache.org/contributing.html before opening a pull request.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/apache/spark branch-2.4

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/22860.patch

To close this pull request, make a commit to your master/trunk branch

with (at least) the following in the commit message:

This closes #22860

----

commit b632e775cc057492ebba6b65647d90908aa00421

Author: Marco Gaido <ma...@...>

Date: 2018-09-06T07:27:59Z

[SPARK-25317][CORE] Avoid perf regression in Murmur3 Hash on UTF8String

## What changes were proposed in this pull request?

SPARK-10399 introduced a performance regression on the hash computation for UTF8String.

The regression can be evaluated with the code attached in the JIRA. That code runs in about 120 us per method on my laptop (MacBook Pro 2.5 GHz Intel Core i7, RAM 16 GB 1600 MHz DDR3) while the code from branch 2.3 takes on the same machine about 45 us for me. After the PR, the code takes about 45 us on the master branch too.

## How was this patch tested?

running the perf test from the JIRA

Closes #22338 from mgaido91/SPARK-25317.

Authored-by: Marco Gaido <ma...@gmail.com>

Signed-off-by: Wenchen Fan <we...@databricks.com>

(cherry picked from commit 64c314e22fecca1ca3fe32378fc9374d8485deec)

Signed-off-by: Wenchen Fan <we...@databricks.com>

commit 085f731adb9b8c82a2bf4bbcae6d889a967fbd53

Author: Shahid <sh...@...>

Date: 2018-09-06T16:52:58Z

[SPARK-25268][GRAPHX] run Parallel Personalized PageRank throws serialization Exception

## What changes were proposed in this pull request?

`mapValues` in Scala is currently not serializable. To avoid the serialization issue while running PageRank, we need to use `map` instead of `mapValues`.

Please review http://spark.apache.org/contributing.html before opening a pull request.

Closes #22271 from shahidki31/master_latest.

Authored-by: Shahid <sh...@gmail.com>

Signed-off-by: Joseph K. Bradley <jo...@databricks.com>

(cherry picked from commit 3b6591b0b064b13a411e5b8f8ee4883a69c39e2d)

Signed-off-by: Joseph K. Bradley <jo...@databricks.com>

commit f2d5022233b637eb50567f7945042b3a8c9c6b25

Author: hyukjinkwon <gu...@...>

Date: 2018-09-06T15:18:49Z

[SPARK-25328][PYTHON] Add an example for having two columns as the grouping key in group aggregate pandas UDF

## What changes were proposed in this pull request?

This PR proposes to add another example for multiple grouping keys in group aggregate pandas UDF, since this feature could still confuse users.

## How was this patch tested?

Manually tested and documentation built.

Closes #22329 from HyukjinKwon/SPARK-25328.

Authored-by: hyukjinkwon <gu...@apache.org>

Signed-off-by: Bryan Cutler <cu...@gmail.com>

(cherry picked from commit 7ef6d1daf858cc9a2c390074f92aaf56c219518a)

Signed-off-by: Bryan Cutler <cu...@gmail.com>

commit 3682d29f45870031d9dc4e812accbfbb583cc52a

Author: liyuanjian <li...@...>

Date: 2018-09-06T17:17:29Z

[SPARK-25072][PYSPARK] Forbid extra value for custom Row

## What changes were proposed in this pull request?

Add value length check in `_create_row`, forbid extra value for custom Row in PySpark.
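The length check described above can be sketched in plain Python. This is a minimal illustration that does not use PySpark: `make_row_class` and the dict-based row are hypothetical stand-ins, and the error message is invented for the example rather than taken from the patch.

```python
def make_row_class(*fields):
    """Return a factory that builds a row (a plain dict here) for the given field names."""
    def create(*values):
        # Reject extra values instead of silently dropping them.
        if len(values) > len(fields):
            raise ValueError(
                "expected at most %d values for fields %s, got %d"
                % (len(fields), list(fields), len(values))
            )
        return dict(zip(fields, values))
    return create


# Hypothetical custom row type with two fields.
Person = make_row_class("name", "age")
print(Person("Alice", 11))
```

Calling `Person("Alice", 11, "extra")` raises `ValueError` in this sketch, which is the kind of failure the check is meant to surface early.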

## How was this patch tested?

New UT in pyspark-sql

Closes #22140 from xuanyuanking/SPARK-25072.

Lead-authored-by: liyuanjian <li...@baidu.com>

Co-authored-by: Yuanjian Li <xy...@gmail.com>

Signed-off-by: Bryan Cutler <cu...@gmail.com>

(cherry picked from commit c84bc40d7f33c71eca1c08f122cd60517f34c1f8)

Signed-off-by: Bryan Cutler <cu...@gmail.com>

commit a7cfe5158f5c25ae5f774e1fb45d63a67a4bb89c

Author: xuejianbest <38...@...>

Date: 2018-09-06T14:17:37Z

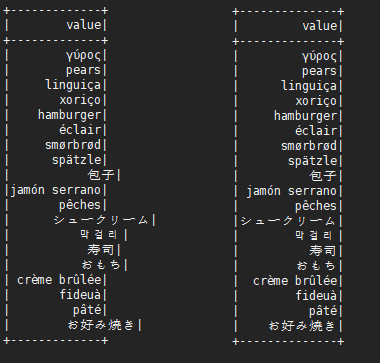

[SPARK-25108][SQL] Fix the show method to display the wide character alignment problem

This is not a perfect solution. It is designed to solve the problem while keeping complexity to a minimum.

It is effective for English, Chinese characters, Japanese, Korean and so on.

```scala

before:

+---+---------------------------+-------------+

|id |中国 |s2 |

+---+---------------------------+-------------+

|1 |ab |[a] |

|2 |null |[中国, abc] |

|3 |ab1 |[hello world]|

|4 |か行 きゃ(kya) きゅ(kyu) きょ(kyo) |[“中国] |

|5 |中国(你好)a |[“中(国), 312] |

|6 |中国山(东)服务区 |[“中(国)] |

|7 |中国山东服务区 |[中(国)] |

|8 | |[中国] |

+---+---------------------------+-------------+

after:

+---+-----------------------------------+----------------+

|id |中国 |s2 |

+---+-----------------------------------+----------------+

|1 |ab |[a] |

|2 |null |[中国, abc] |

|3 |ab1 |[hello world] |

|4 |か行 きゃ(kya) きゅ(kyu) きょ(kyo) |[“中国] |

|5 |中国(你好)a |[“中(国), 312]|

|6 |中国山(东)服务区 |[“中(国)] |

|7 |中国山东服务区 |[中(国)] |

|8 | |[中国] |

+---+-----------------------------------+----------------+

```

## What changes were proposed in this pull request?

When there are wide characters such as Chinese or Japanese characters in the data, the show method has an alignment problem.

Try to fix this problem.
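The underlying idea, padding by display width rather than by character count, can be sketched as follows. This is an assumption-laden illustration and not the code from the patch: it uses Python's `unicodedata.east_asian_width` to decide which characters occupy two columns, and the helper names `display_width` and `pad_cell` are hypothetical.

```python
import unicodedata


def display_width(text):
    """Count terminal columns, treating East Asian wide/fullwidth characters as 2."""
    return sum(
        2 if unicodedata.east_asian_width(ch) in ("W", "F") else 1
        for ch in text
    )


def pad_cell(text, width):
    """Right-pad text with spaces until it occupies `width` display columns."""
    return text + " " * max(0, width - display_width(text))


rows = ["ab", "中国", "中国山东服务区"]
column_width = max(display_width(r) for r in rows)
for r in rows:
    print("|" + pad_cell(r, column_width) + "|")
```

Padding with `len(text)` instead of `display_width(text)` would give the wide-character rows too many trailing spaces, which is the misalignment shown in the "before" table.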

## How was this patch tested?

(Please explain how this patch was tested. E.g. unit tests, integration tests, manual tests)

Please review http://spark.apache.org/contributing.html before opening a pull request.

Closes #22048 from xuejianbest/master.

Authored-by: xuejianbest <38...@qq.com>

Signed-off-by: Sean Owen <se...@databricks.com>

commit ff832beee0c55c11ac110261a3c48010b81a1e5f

Author: Takuya UESHIN <ue...@...>

Date: 2018-09-07T02:12:20Z

[SPARK-25208][SQL][FOLLOW-UP] Reduce code size.

## What changes were proposed in this pull request?

This is a follow-up PR to #22200.

When casting to decimal type, if `Cast.canNullSafeCastToDecimal()`, overflow won't happen, so we don't need to check the result of `Decimal.changePrecision()`.

## How was this patch tested?

Existing tests.

Closes #22352 from ueshin/issues/SPARK-25208/reduce_code_size.

Authored-by: Takuya UESHIN <ue...@databricks.com>

Signed-off-by: Wenchen Fan <we...@databricks.com>

(cherry picked from commit 1b1711e0532b1a1521054ef3b5980cdb3d70cdeb)

Signed-off-by: Wenchen Fan <we...@databricks.com>

commit 24a32612bdd1136c647aa321b1c1418a43d85bf4

Author: Yuming Wang <yu...@...>

Date: 2018-09-07T04:41:13Z

[SPARK-25330][BUILD][BRANCH-2.3] Revert Hadoop 2.7 to 2.7.3

## What changes were proposed in this pull request?

How to reproduce permission issue:

```sh

# build spark

./dev/make-distribution.sh --name SPARK-25330 --tgz -Phadoop-2.7 -Phive -Phive-thriftserver -Pyarn

tar -zxf spark-2.4.0-SNAPSHOT-bin-SPARK-25330.tar && cd spark-2.4.0-SNAPSHOT-bin-SPARK-25330

export HADOOP_PROXY_USER=user_a

bin/spark-sql

export HADOOP_PROXY_USER=user_b

bin/spark-sql

```

```java

Exception in thread "main" java.lang.RuntimeException: org.apache.hadoop.security.AccessControlException: Permission denied: user=user_b, access=EXECUTE, inode="/tmp/hive-$%7Buser.name%7D/user_b/668748f2-f6c5-4325-a797-fd0a7ee7f4d4":user_b:hadoop:drwx------

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:319)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkTraverse(FSPermissionChecker.java:259)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:205)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:190)

```

The issue occurred in this commit: https://github.com/apache/hadoop/commit/feb886f2093ea5da0cd09c69bd1360a335335c86. This PR reverts Hadoop 2.7 to 2.7.3 to avoid this issue.

## How was this patch tested?

unit tests and manual tests.

Closes #22327 from wangyum/SPARK-25330.

Authored-by: Yuming Wang <yu...@ebay.com>

Signed-off-by: Sean Owen <se...@databricks.com>

(cherry picked from commit b0ada7dce02d101b6a04323d8185394e997caca4)

Signed-off-by: Sean Owen <se...@databricks.com>

commit 3644c84f51ba8e5fd2c6607afda06f5291bdf435

Author: Sean Owen <se...@...>

Date: 2018-09-07T04:43:14Z

[SPARK-22357][CORE][FOLLOWUP] SparkContext.binaryFiles ignore minPartitions parameter

## What changes were proposed in this pull request?

This adds a test following https://github.com/apache/spark/pull/21638

## How was this patch tested?

Existing tests and new test.

Closes #22356 from srowen/SPARK-22357.2.

Authored-by: Sean Owen <se...@databricks.com>

Signed-off-by: Sean Owen <se...@databricks.com>

(cherry picked from commit 4e3365b577fbc9021fa237ea4e8792f5aea5d80c)

Signed-off-by: Sean Owen <se...@databricks.com>

commit f9b476c6ad629007d9334409e4dda99119cf0053

Author: dujunling <du...@...>

Date: 2018-09-07T04:44:46Z

[SPARK-25237][SQL] Remove updateBytesReadWithFileSize in FileScanRDD

## What changes were proposed in this pull request?

This PR removes the method `updateBytesReadWithFileSize` in `FileScanRDD` because it computes input metrics from the file size, which applies to Hadoop 2.5 and earlier. Current Spark does not support those versions, and the method causes wrong input metric numbers.

This is a rework of #22232.

Closes #22232

## How was this patch tested?

Added tests in `FileBasedDataSourceSuite`.

Closes #22324 from maropu/pr22232-2.

Lead-authored-by: dujunling <du...@huawei.com>

Co-authored-by: Takeshi Yamamuro <ya...@apache.org>

Signed-off-by: Sean Owen <se...@databricks.com>

(cherry picked from commit ed249db9c464062fbab7c6f68ad24caaa95cec82)

Signed-off-by: Sean Owen <se...@databricks.com>

commit 872bad161f1dbe6acd89b75f60053bfc8b621687

Author: Dilip Biswal <db...@...>

Date: 2018-09-07T06:35:02Z

[SPARK-25267][SQL][TEST] Disable ConvertToLocalRelation in the test cases of sql/core and sql/hive

## What changes were proposed in this pull request?

In SharedSparkSession and TestHive, we need to disable the rule ConvertToLocalRelation for better test case coverage.

## How was this patch tested?

Identify the failures after excluding "ConvertToLocalRelation" rule.

Closes #22270 from dilipbiswal/SPARK-25267-final.

Authored-by: Dilip Biswal <db...@us.ibm.com>

Signed-off-by: gatorsmile <ga...@gmail.com>

(cherry picked from commit 6d7bc5af454341f6d9bfc1e903148ad7ba8de6f9)

Signed-off-by: gatorsmile <ga...@gmail.com>

commit 95a48b909d103e59602e883d472cb03c7c434168

Author: fjh100456 <fu...@...>

Date: 2018-09-07T16:28:33Z

[SPARK-21786][SQL][FOLLOWUP] Add compressionCodec test for CTAS

## What changes were proposed in this pull request?

Before Apache Spark 2.3, table properties were ignored when writing data to a Hive table (created with STORED AS PARQUET/ORC syntax), because the compression configurations were not passed to the FileFormatWriter in hadoopConf. That was fixed in #20087. However, for CTAS with USING PARQUET/ORC syntax, table properties were also ignored when convertMetastore was applied, so the test case for CTAS was not supported.

Now that this has been fixed in #20522, the test case should be enabled too.

## How was this patch tested?

This only re-enables the test cases of previous PR.

Closes #22302 from fjh100456/compressionCodec.

Authored-by: fjh100456 <fu...@zte.com.cn>

Signed-off-by: Dongjoon Hyun <do...@apache.org>

(cherry picked from commit 473f2fb3bfd0e51c40a87e475392f2e2c8f912dd)

Signed-off-by: Dongjoon Hyun <do...@apache.org>

commit 80567fad4e3d8d4573d4095b1e460452e597d81f

Author: Lee Dongjin <do...@...>

Date: 2018-09-07T17:36:15Z

[MINOR][SS] Fix kafka-0-10-sql trivials

## What changes were proposed in this pull request?

Fix unused imports & outdated comments on `kafka-0-10-sql` module. (Found while I was working on [SPARK-23539](https://github.com/apache/spark/pull/22282))

## How was this patch tested?

Existing unit tests.

Closes #22342 from dongjinleekr/feature/fix-kafka-sql-trivials.

Authored-by: Lee Dongjin <do...@apache.org>

Signed-off-by: Sean Owen <se...@databricks.com>

(cherry picked from commit 458f5011bd52851632c3592ac35f1573bc904d50)

Signed-off-by: Sean Owen <se...@databricks.com>

commit 904192ad18ff09cc5874e09b03447dd5f7754963

Author: WeichenXu <we...@...>

Date: 2018-09-08T16:09:14Z

[SPARK-25345][ML] Deprecate public APIs from ImageSchema

## What changes were proposed in this pull request?

Deprecate public APIs from ImageSchema.

## How was this patch tested?

N/A

Closes #22349 from WeichenXu123/image_api_deprecate.

Authored-by: WeichenXu <we...@databricks.com>

Signed-off-by: Xiangrui Meng <me...@databricks.com>

(cherry picked from commit 08c02e637ac601df2fe890b8b5a7a049bdb4541b)

Signed-off-by: Xiangrui Meng <me...@databricks.com>

commit 8f7d8a0977647dc96ab9259d306555bbe1c32873

Author: Dongjoon Hyun <do...@...>

Date: 2018-09-08T17:21:55Z

[SPARK-25375][SQL][TEST] Reenable qualified perm. function checks in UDFSuite

## What changes were proposed in this pull request?

In Spark 2.0.0, SPARK-14335 added some [commented-out test coverage](https://github.com/apache/spark/pull/12117/files#diff-dd4b39a56fac28b1ced6184453a47358R177). This PR enables it because the feature has been supported since 2.0.0.

## How was this patch tested?

Pass the Jenkins with re-enabled test coverage.

Closes #22363 from dongjoon-hyun/SPARK-25375.

Authored-by: Dongjoon Hyun <do...@apache.org>

Signed-off-by: gatorsmile <ga...@gmail.com>

(cherry picked from commit 26f74b7cb16869079aa7b60577ac05707101ee68)

Signed-off-by: gatorsmile <ga...@gmail.com>

commit a00a160e1e63ef2aaf3eaeebf2a3e5a5eb05d076

Author: gatorsmile <ga...@...>

Date: 2018-09-09T13:25:19Z

Revert [SPARK-10399] [SPARK-23879] [SPARK-23762] [SPARK-25317]

## What changes were proposed in this pull request?

When running TPC-DS benchmarks on the 2.4 release, npoggi and winglungngai saw more than 10% performance regression on the following queries: q67, q24a and q24b. After applying the PR https://github.com/apache/spark/pull/22338, the performance regression still exists. When the changes in https://github.com/apache/spark/pull/19222 were reverted, npoggi and winglungngai found that the performance regression was resolved. Thus, this PR reverts the related changes to unblock the 2.4 release.

In a future release, we can continue the investigation and find the root cause of the regression.

## How was this patch tested?

The existing test cases

Closes #22361 from gatorsmile/revertMemoryBlock.

Authored-by: gatorsmile <ga...@gmail.com>

Signed-off-by: Wenchen Fan <we...@databricks.com>

(cherry picked from commit 0b9ccd55c2986957863dcad3b44ce80403eecfa1)

Signed-off-by: Wenchen Fan <we...@databricks.com>

commit 6b7ea78aec73b8f24c2e1161254edd5ebb6c82bf

Author: WeichenXu <we...@...>

Date: 2018-09-09T14:49:13Z

[MINOR][ML] Remove `BisectingKMeansModel.setDistanceMeasure` method

## What changes were proposed in this pull request?

Remove `BisectingKMeansModel.setDistanceMeasure` method.

Setting this param on `BisectingKMeansModel` is meaningless.

## How was this patch tested?

N/A

Closes #22360 from WeichenXu123/bkmeans_update.

Authored-by: WeichenXu <we...@databricks.com>

Signed-off-by: Sean Owen <se...@databricks.com>

(cherry picked from commit 88a930dfab56c15df02c7bb944444745c2921fa5)

Signed-off-by: Sean Owen <se...@databricks.com>

commit c1c1bda3cecd82a926526e5e5ee24d9909cb7e49

Author: Yuming Wang <yu...@...>

Date: 2018-09-09T16:07:31Z

[SPARK-25368][SQL] Incorrect predicate pushdown returns wrong result

## What changes were proposed in this pull request?

How to reproduce:

```scala

val df1 = spark.createDataFrame(Seq(

(1, 1)

)).toDF("a", "b").withColumn("c", lit(null).cast("int"))

val df2 = df1.union(df1).withColumn("d", spark_partition_id).filter($"c".isNotNull)

df2.show

+---+---+----+---+

| a| b| c| d|

+---+---+----+---+

| 1| 1|null| 0|

| 1| 1|null| 1|

+---+---+----+---+

```

`filter($"c".isNotNull)` was transformed to `(null <=> c#10)` before https://github.com/apache/spark/pull/19201, but it has been transformed to `(c#10 = null)` since https://github.com/apache/spark/pull/20155. This PR reverts it to `(null <=> c#10)` to fix this issue.
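The two predicate forms differ in how they treat null. A minimal sketch of the general SQL semantics, written in plain Python with `None` standing in for SQL NULL, may help; it models only the `=` and `<=>` operators and is not Spark's optimizer code. The function names are hypothetical.

```python
def sql_equal(left, right):
    """SQL `=`: the result is NULL (None) when either side is NULL."""
    if left is None or right is None:
        return None
    return left == right


def null_safe_equal(left, right):
    """SQL `<=>`: always True or False, and NULL <=> NULL is True."""
    if left is None or right is None:
        return left is None and right is None
    return left == right


def keep_row(predicate_result):
    """A filter keeps a row only when the predicate evaluates to exactly True."""
    return predicate_result is True


print(sql_equal(None, None), null_safe_equal(None, None))
```

Because `=` against null never yields true or false, swapping one operator for the other changes which rows a filter keeps, so the two forms are not interchangeable in a rewritten predicate.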

## How was this patch tested?

unit tests

Closes #22368 from wangyum/SPARK-25368.

Authored-by: Yuming Wang <yu...@ebay.com>

Signed-off-by: gatorsmile <ga...@gmail.com>

(cherry picked from commit 77c996403d5c761f0dfea64c5b1cb7480ba1d3ac)

Signed-off-by: gatorsmile <ga...@gmail.com>

commit 0782dfa14c524131c04320e26d2b607777fe3b06

Author: seancxmao <se...@...>

Date: 2018-09-10T02:22:47Z

[SPARK-25175][SQL] Field resolution should fail if there is ambiguity for ORC native data source table persisted in metastore

## What changes were proposed in this pull request?

Apache Spark doesn't create a Hive table with duplicated fields in either case-sensitive or case-insensitive mode. However, Spark can first create ORC files in case-sensitive mode and then create a Hive table on that location. In this situation, field resolution should fail in case-insensitive mode. Otherwise, we don't know which columns will be returned or filtered. Previously, SPARK-25132 fixed the same issue in Parquet.

Here is a simple example:

```scala

val data = spark.range(5).selectExpr("id as a", "id * 2 as A")

spark.conf.set("spark.sql.caseSensitive", true)

data.write.format("orc").mode("overwrite").save("/user/hive/warehouse/orc_data")

sql("CREATE TABLE orc_data_source (A LONG) USING orc LOCATION '/user/hive/warehouse/orc_data'")

spark.conf.set("spark.sql.caseSensitive", false)

sql("select A from orc_data_source").show

+---+

| A|

+---+

| 3|

| 2|

| 4|

| 1|

| 0|

+---+

```

See #22148 for more details about parquet data source reader.
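The resolution rule described above, failing when a case-insensitive lookup matches more than one physical field, can be sketched in plain Python. This is an illustration under assumptions, not the ORC reader code: `resolve_field` and its error message are hypothetical.

```python
def resolve_field(physical_fields, requested, case_sensitive):
    """Return the physical field matching `requested`, or None if there is none.

    Raises ValueError when the match is ambiguous.
    """
    if case_sensitive:
        matches = [f for f in physical_fields if f == requested]
    else:
        matches = [f for f in physical_fields if f.lower() == requested.lower()]
    if len(matches) > 1:
        raise ValueError("ambiguous field %r: matches %s" % (requested, matches))
    return matches[0] if matches else None


# The file written in case-sensitive mode contains both "a" and "A".
print(resolve_field(["a", "A"], "A", case_sensitive=True))
```

With `case_sensitive=False`, the same call raises `ValueError`, since both `a` and `A` match and there is no principled way to pick one.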

## How was this patch tested?

Unit tests added.

Closes #22262 from seancxmao/SPARK-25175.

Authored-by: seancxmao <se...@gmail.com>

Signed-off-by: Dongjoon Hyun <do...@apache.org>

(cherry picked from commit a0aed475c54079665a8e5c5cd53a2e990a4f47b4)

Signed-off-by: Dongjoon Hyun <do...@apache.org>

commit c9ca3594345610148ef5d993262d3090d5b2c658

Author: Yuming Wang <yu...@...>

Date: 2018-09-10T05:47:19Z

[SPARK-25313][SQL][FOLLOW-UP] Fix InsertIntoHiveDirCommand output schema in Parquet issue

## What changes were proposed in this pull request?

How to reproduce:

```scala

spark.sql("CREATE TABLE tbl(id long)")

spark.sql("INSERT OVERWRITE TABLE tbl VALUES 4")

spark.sql("CREATE VIEW view1 AS SELECT id FROM tbl")

spark.sql(s"INSERT OVERWRITE LOCAL DIRECTORY '/tmp/spark/parquet' " +

"STORED AS PARQUET SELECT ID FROM view1")

spark.read.parquet("/tmp/spark/parquet").schema

scala> spark.read.parquet("/tmp/spark/parquet").schema

res10: org.apache.spark.sql.types.StructType = StructType(StructField(id,LongType,true))

```

The schema should be `StructType(StructField(ID,LongType,true))` as we `SELECT ID FROM view1`.

This PR fixes this issue.

## How was this patch tested?

unit tests

Closes #22359 from wangyum/SPARK-25313-FOLLOW-UP.

Authored-by: Yuming Wang <yu...@ebay.com>

Signed-off-by: Wenchen Fan <we...@databricks.com>

(cherry picked from commit f8b4d5aafd1923d9524415601469f8749b3d0811)

Signed-off-by: Wenchen Fan <we...@databricks.com>

commit 67bc7ef7b70b6b654433bd5e56cff2f5ec6ae9bd

Author: gatorsmile <ga...@...>

Date: 2018-09-10T11:18:00Z

[SPARK-24849][SPARK-24911][SQL][FOLLOW-UP] Converting a value of StructType to a DDL string

## What changes were proposed in this pull request?

Add the version number for the new APIs.

## How was this patch tested?

N/A

Closes #22377 from gatorsmile/followup24849.

Authored-by: gatorsmile <ga...@gmail.com>

Signed-off-by: Wenchen Fan <we...@databricks.com>

(cherry picked from commit 6f6517837ba9934a280b11aba9d9be58bc131f25)

Signed-off-by: Wenchen Fan <we...@databricks.com>

commit 5d98c31941471bdcdc54a68f55ddaaab48f82161

Author: Marco Gaido <ma...@...>

Date: 2018-09-10T11:41:51Z

[SPARK-25278][SQL] Avoid duplicated Exec nodes when the same logical plan appears in the query

## What changes were proposed in this pull request?

In the planner, we collect the placeholders that need to be substituted in the query execution plan and, once we have planned them, we substitute each placeholder with the effective plan.

In this second phase, we rely on the `==` comparison, i.e. the `equals` method. This means that if two placeholder plans, which are different instances, have the same attributes (so that they are equal according to the `equals` method), both of them match whenever either one is substituted. In such a situation, the first time we substitute both of them with the first of the two newly generated plans, and the second time we substitute nothing.

This usually does no harm to the execution of the query itself, as the two plans are identical. But since they are now the same instance, the local variables are shared (which is unexpected). This causes issues for the collected metrics: the same node is executed twice, so the metrics are wrongly accumulated twice.

The PR proposes to use the `eq` method when checking which placeholder needs to be substituted. Thus, in the previous situation, both of the different physical nodes that are created (one for each time the logical plan appears in the query plan) are used, and the metrics are collected properly for each of them.
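The difference between substituting by equality and by identity can be sketched in plain Python, where `==` plays the role of Scala's `equals` and `is` plays the role of `eq`. The classes `Placeholder` and `PhysicalNode` and the function `substitute` are hypothetical stand-ins, not Spark's planner types.

```python
import operator
from dataclasses import dataclass


@dataclass(frozen=True)
class Placeholder:
    """Stand-in for a logical plan placeholder; equal when attributes match."""
    name: str


class PhysicalNode:
    """Stand-in for a planned physical node that carries its own metric."""
    def __init__(self, label):
        self.label = label
        self.rows = 0


def substitute(tree, planned, same):
    """Replace each placeholder in `tree` using the comparison function `same`."""
    result = list(tree)
    for placeholder, physical in planned:
        result = [
            physical
            if isinstance(node, Placeholder) and same(node, placeholder)
            else node
            for node in result
        ]
    return result


# Two placeholders that are equal but are distinct instances.
p1, p2 = Placeholder("scan t"), Placeholder("scan t")
planned = [(p1, PhysicalNode("exec 1")), (p2, PhysicalNode("exec 2"))]

by_equality = substitute([p1, p2], planned, operator.eq)
by_identity = substitute([p1, p2], planned, operator.is_)
print(by_equality[0] is by_equality[1], by_identity[0] is by_identity[1])
```

With equality, the first pass replaces both placeholders with the same node and the second pass finds nothing left to replace, so any metric on that node would be shared. With identity, each placeholder gets its own node.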

## How was this patch tested?

added UT

Closes #22284 from mgaido91/SPARK-25278.

Authored-by: Marco Gaido <ma...@gmail.com>

Signed-off-by: Wenchen Fan <we...@databricks.com>

(cherry picked from commit 12e3e9f17dca11a2cddf0fb99d72b4b97517fb56)

Signed-off-by: Wenchen Fan <we...@databricks.com>

commit ffd036a6d13814ebcc332990be1e286939cc6abe

Author: Holden Karau <ho...@...>

Date: 2018-09-10T18:01:51Z

[SPARK-23672][PYTHON] Document support for nested return types in scalar with arrow udfs

## What changes were proposed in this pull request?

Clarify docstring for Scalar functions

## How was this patch tested?

Adds a unit test showing a use similar to wordcount; there is an existing unit test for an array of floats as well.

Closes #20908 from holdenk/SPARK-23672-document-support-for-nested-return-types-in-scalar-with-arrow-udfs.

Authored-by: Holden Karau <ho...@pigscanfly.ca>

Signed-off-by: Bryan Cutler <cu...@gmail.com>

(cherry picked from commit da5685b5bb9ee7daaeb4e8f99c488ebd50c7aac3)

Signed-off-by: Bryan Cutler <cu...@gmail.com>

commit fb4965a41941f3a196de77a870a8a1f29c96dac0

Author: Marco Gaido <ma...@...>

Date: 2018-09-11T06:16:56Z

[SPARK-25371][SQL] struct() should allow being called with 0 args

## What changes were proposed in this pull request?

SPARK-21281 introduced a check for the inputs of `CreateStructLike` to be non-empty. This means that `struct()`, which was previously considered valid, now throws an Exception. This behavior change was introduced in 2.3.0. The change may break users' applications on upgrade, and it causes `VectorAssembler` to fail when an empty `inputCols` is defined.

The PR removes the added check making `struct()` valid again.

## How was this patch tested?

added UT

Closes #22373 from mgaido91/SPARK-25371.

Authored-by: Marco Gaido <ma...@gmail.com>

Signed-off-by: Wenchen Fan <we...@databricks.com>

(cherry picked from commit 0736e72a66735664b191fc363f54e3c522697dba)

Signed-off-by: Wenchen Fan <we...@databricks.com>

commit b7efca7ece484ee85091b1b50bbc84ad779f9bfe

Author: Mario Molina <mm...@...>

Date: 2018-09-11T12:47:14Z

[SPARK-17916][SPARK-25241][SQL][FOLLOW-UP] Fix empty string being parsed as null when nullValue is set.

## What changes were proposed in this pull request?

In the PR, I propose new CSV option `emptyValue` and an update in the SQL Migration Guide which describes how to revert previous behavior when empty strings were not written at all. Since Spark 2.4, empty strings are saved as `""` to distinguish them from saved `null`s.
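The distinction between an empty string and a null on write can be sketched in plain Python. This is a simplified illustration, not Spark's CSV writer: it ignores quoting and escaping of regular values, and `render_field`, `render_row` and their `null_value` and `empty_value` parameters are hypothetical names that only mirror the idea behind the `emptyValue` option.

```python
def render_field(value, null_value="", empty_value='""'):
    """Render one field, keeping empty strings distinguishable from nulls."""
    if value is None:
        return null_value
    if value == "":
        return empty_value
    return value


def render_row(values, **options):
    """Join rendered fields with commas (no quoting or escaping in this sketch)."""
    return ",".join(render_field(v, **options) for v in values)


print(render_row(["a", "", None]))
print(render_row(["a", "", None], empty_value=""))
```

The second call models the earlier behavior, where an empty string and a null both produce nothing between the separators and cannot be told apart when the file is read back.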

Closes #22234

Closes #22367

## How was this patch tested?

It was tested by `CSVSuite` and new tests added in the PR #22234

Closes #22389 from MaxGekk/csv-empty-value-master.

Lead-authored-by: Mario Molina <mm...@gmail.com>

Co-authored-by: Maxim Gekk <ma...@databricks.com>

Signed-off-by: hyukjinkwon <gu...@apache.org>

(cherry picked from commit c9cb393dc414ae98093c1541d09fa3c8663ce276)

Signed-off-by: hyukjinkwon <gu...@apache.org>

commit 0b8bfbe12b8a368836d7ddc8445de18b7ee42cde

Author: Dongjoon Hyun <do...@...>

Date: 2018-09-11T15:57:42Z

[SPARK-25389][SQL] INSERT OVERWRITE DIRECTORY STORED AS should prevent duplicate fields

## What changes were proposed in this pull request?

Like `INSERT OVERWRITE DIRECTORY USING` syntax, `INSERT OVERWRITE DIRECTORY STORED AS` should not generate files with duplicate fields because Spark cannot read those files back.

**INSERT OVERWRITE DIRECTORY USING**

```scala

scala> sql("INSERT OVERWRITE DIRECTORY 'file:///tmp/parquet' USING parquet SELECT 'id', 'id2' id")

... ERROR InsertIntoDataSourceDirCommand: Failed to write to directory ...

org.apache.spark.sql.AnalysisException: Found duplicate column(s) when inserting into file:/tmp/parquet: `id`;

```

**INSERT OVERWRITE DIRECTORY STORED AS**

```scala

scala> sql("INSERT OVERWRITE DIRECTORY 'file:///tmp/parquet' STORED AS parquet SELECT 'id', 'id2' id")

// It generates corrupted files

scala> spark.read.parquet("/tmp/parquet").show

18/09/09 22:09:57 WARN DataSource: Found duplicate column(s) in the data schema and the partition schema: `id`;

```
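The kind of check involved, rejecting an output schema whose column names collide, can be sketched in plain Python. The helper names and the exact message format below are hypothetical and only loosely modeled on the error shown above. Case-insensitive comparison is an assumption of the sketch.

```python
from collections import Counter


def find_duplicate_columns(names, case_sensitive=False):
    """Return the sorted column names that occur more than once."""
    keys = list(names) if case_sensitive else [n.lower() for n in names]
    return sorted(k for k, count in Counter(keys).items() if count > 1)


def check_no_duplicates(names, target, case_sensitive=False):
    """Raise before writing if the output schema has colliding column names."""
    duplicates = find_duplicate_columns(names, case_sensitive)
    if duplicates:
        raise ValueError(
            "Found duplicate column(s) when inserting into %s: %s"
            % (target, ", ".join("`%s`" % d for d in duplicates))
        )


# SELECT 'id', 'id2' id yields two output columns that are both named "id".
print(find_duplicate_columns(["id", "id"]))
```

Running the check before any file is written avoids producing output that cannot be read back.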

## How was this patch tested?

Pass the Jenkins with newly added test cases.

Closes #22378 from dongjoon-hyun/SPARK-25389.

Authored-by: Dongjoon Hyun <do...@apache.org>

Signed-off-by: Dongjoon Hyun <do...@apache.org>

(cherry picked from commit 77579aa8c35b0d98bbeac3c828bf68a1d190d13e)

Signed-off-by: Dongjoon Hyun <do...@apache.org>

commit 4414e026097c74aadd252b541c9d3009cd7e9d09

Author: Gera Shegalov <ge...@...>

Date: 2018-09-11T16:28:32Z

[SPARK-25221][DEPLOY] Consistent trailing whitespace treatment of conf values

## What changes were proposed in this pull request?

Stop trimming values of properties loaded from a file

## How was this patch tested?

Added unit test demonstrating the issue hit in production.

Closes #22213 from gerashegalov/gera/SPARK-25221.

Authored-by: Gera Shegalov <ge...@apache.org>

Signed-off-by: Marcelo Vanzin <va...@cloudera.com>

(cherry picked from commit bcb9a8c83f4e6835af5dc51f1be7f964b8fa49a3)

Signed-off-by: Marcelo Vanzin <va...@cloudera.com>

commit 16127e844f8334e1152b2e3ed3d878ec8de13dfa

Author: Liang-Chi Hsieh <vi...@...>

Date: 2018-09-11T17:31:06Z

[SPARK-24889][CORE] Update block info when unpersist rdds

## What changes were proposed in this pull request?

We update block info coming from executors at certain points, such as when caching an RDD. However, when removing RDDs by unpersisting them, we don't ask for a block info update, so the block info is not updated.

We can fix this with a few options:

1. Ask to update block info when unpersisting

This is simplest but changes driver-executor communication a bit.

2. Update block info when processing the event of unpersisting RDD

We send a `SparkListenerUnpersistRDD` event when unpersisting RDD. When processing this event, we can update block info of the RDD. This only changes event processing code so the risk seems to be lower.

Currently this patch takes option 2 for lower risk. If we agree that the first option has no risk, we can change to it.
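Option 2 can be sketched as an event handler that drops the tracked blocks of an RDD when the unpersist event is processed. This is a plain-Python illustration under assumptions: `BlockInfoTracker` and its method names are hypothetical and do not correspond to Spark's listener classes.

```python
class BlockInfoTracker:
    """Tracks cached block sizes per RDD, driven only by incoming events."""

    def __init__(self):
        # rdd_id -> {block_id: size_in_bytes}
        self.blocks_by_rdd = {}

    def on_block_updated(self, rdd_id, block_id, size):
        """Record or refresh the size of one cached block."""
        self.blocks_by_rdd.setdefault(rdd_id, {})[block_id] = size

    def on_unpersist_rdd(self, rdd_id):
        """Forget every block of the RDD when the unpersist event arrives."""
        self.blocks_by_rdd.pop(rdd_id, None)

    def cached_bytes(self):
        """Total size of all blocks that are still tracked."""
        return sum(sum(blocks.values()) for blocks in self.blocks_by_rdd.values())


tracker = BlockInfoTracker()
tracker.on_block_updated(rdd_id=1, block_id="rdd_1_0", size=100)
tracker.on_block_updated(rdd_id=1, block_id="rdd_1_1", size=50)
tracker.on_unpersist_rdd(1)
print(tracker.cached_bytes())
```

Only the event-processing side changes in this design, which is why the description above calls it the lower-risk option compared with adding a new driver-executor request.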

## How was this patch tested?

Unit tests.

Closes #22341 from viirya/SPARK-24889.

Authored-by: Liang-Chi Hsieh <vi...@gmail.com>

Signed-off-by: Marcelo Vanzin <va...@cloudera.com>

(cherry picked from commit 14f3ad20932535fe952428bf255e7eddd8fa1b58)

Signed-off-by: Marcelo Vanzin <va...@cloudera.com>

commit 99b37a91871f8bf070d43080f1c58475548c99fd

Author: Sean Owen <se...@...>

Date: 2018-09-11T19:46:03Z

[SPARK-25398] Minor bugs from comparing unrelated types

## What changes were proposed in this pull request?

Correct some comparisons between unrelated types to what they seem to have been trying to do

## How was this patch tested?

Existing tests.

Closes #22384 from srowen/SPARK-25398.

Authored-by: Sean Owen <se...@databricks.com>

Signed-off-by: Sean Owen <se...@databricks.com>

(cherry picked from commit cfbdd6a1f5906b848c520d3365cc4034992215d9)

Signed-off-by: Sean Owen <se...@databricks.com>

commit 3a6ef8b7e2d17fe22458bfd249f45b5a5ce269ec

Author: Sean Owen <se...@...>

Date: 2018-09-11T19:52:58Z

Revert "[SPARK-23820][CORE] Enable use of long form of callsite in logs"

This reverts commit e58dadb77ed6cac3e1b2a037a6449e5a6e7f2cec.

commit 0dbf1450f7965c27ce9329c7dad351ff8b8072dc

Author: Mukul Murthy <mu...@...>

Date: 2018-09-11T22:53:15Z

[SPARK-25399][SS] Continuous processing state should not affect microbatch execution jobs

## What changes were proposed in this pull request?

The leftover state from running a continuous processing streaming job should not affect later microbatch execution jobs. If a continuous processing job runs and the same thread gets reused for a microbatch execution job in the same environment, the microbatch job could get wrong answers because it can attempt to load the wrong version of the state.
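The failure mode, thread-local state left behind by one job and picked up by the next job on the same thread, can be sketched with Python's standard library. This is only an analogy under assumptions: the job functions, the `epoch` attribute and the `reset_first` flag are hypothetical and do not mirror Spark's streaming internals.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

_local = threading.local()


def continuous_job(epoch):
    """Leaves its epoch behind in thread-local storage on the worker thread."""
    _local.epoch = epoch
    return epoch


def microbatch_job(batch_version, reset_first):
    """Returns the state version it would load; leftover state wins unless reset."""
    if reset_first and hasattr(_local, "epoch"):
        del _local.epoch
    return getattr(_local, "epoch", batch_version)


def run(reset_first):
    # A single worker guarantees that both jobs run on the same thread.
    with ThreadPoolExecutor(max_workers=1) as pool:
        pool.submit(continuous_job, 99).result()
        return pool.submit(microbatch_job, 3, reset_first).result()


print(run(reset_first=False), run(reset_first=True))
```

Without the reset, the second job reads the version left by the first. Clearing the leftover state before the second job starts restores the expected version.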

## How was this patch tested?

New and existing unit tests

Closes #22386 from mukulmurthy/25399-streamthread.

Authored-by: Mukul Murthy <mu...@gmail.com>

Signed-off-by: Tathagata Das <ta...@gmail.com>

(cherry picked from commit 9f5c5b4cca7d4eaa30a3f8adb4cb1eebe3f77c7a)

Signed-off-by: Tathagata Das <ta...@gmail.com>

----

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22860: Branch 2.4

Posted by asfgit <gi...@git.apache.org>.

Github user asfgit closed the pull request at:

https://github.com/apache/spark/pull/22860

---


[GitHub] spark issue #22860: Branch 2.4

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22860

Can one of the admins verify this patch?

---


[GitHub] spark issue #22860: Branch 2.4

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22860

Can one of the admins verify this patch?

---


[GitHub] spark issue #22860: Branch 2.4

Posted by srowen <gi...@git.apache.org>.

Github user srowen commented on the issue:

https://github.com/apache/spark/pull/22860

@sarojchand close this

---


[GitHub] spark issue #22860: Branch 2.4

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22860

Can one of the admins verify this patch?

---
