Posted to commits@linkis.apache.org by pe...@apache.org on 2022/09/23 09:54:30 UTC

[incubator-linkis] branch dev-1.3.1 updated: Formatting fixes for all markdown type documents in linkis (#3395)

This is an automated email from the ASF dual-hosted git repository.

peacewong pushed a commit to branch dev-1.3.1

in repository https://gitbox.apache.org/repos/asf/incubator-linkis.git

The following commit(s) were added to refs/heads/dev-1.3.1 by this push:

new 74506af7d Formatting fixes for all markdown type documents in linkis (#3395)

74506af7d is described below

commit 74506af7df21696894f51cfaaf851ed35a6e7134

Author: 成彬彬 <10...@users.noreply.github.com>

AuthorDate: Fri Sep 23 17:54:25 2022 +0800

Formatting fixes for all markdown type documents in linkis (#3395)

---

 CONTRIBUTING.md                                    | 27 ++++++++---
 CONTRIBUTING_CN.md                                 | 41 ++++++++++------
 README.md                                          | 27 +++++------
 README_CN.md                                       | 55 +++++++++++-----------
 docs/configuration/accessible-executor.md          | 10 +---
 docs/configuration/elasticsearch.md                |  5 --
 docs/configuration/executor-core.md                |  6 +--
 docs/configuration/flink.md                        |  2 -
 docs/configuration/index.md                        |  6 +--
 docs/configuration/info-1.1.3.md                   |  3 +-
 docs/configuration/info-1.2.1.md                   |  6 +--
 docs/configuration/jdbc.md                         |  7 ++-
 docs/configuration/linkis-application-manager.md   |  6 +--
 docs/configuration/linkis-bml-server.md            |  4 +-
 docs/configuration/linkis-common.md                |  2 +-
 docs/configuration/linkis-computation-client.md    |  4 +-
 .../configuration/linkis-computation-engineconn.md |  7 +--
 .../linkis-computation-governance-common.md        |  6 +--
 .../linkis-computation-orchestrator.md             |  3 --
 docs/configuration/linkis-configuration.md         |  4 +-
 docs/configuration/linkis-engineconn-common.md     |  6 +--
 .../linkis-engineconn-manager-core.md              |  4 +-
 .../linkis-engineconn-manager-server.md            |  8 +---
 .../configuration/linkis-engineconn-plugin-core.md |  1 -
 .../linkis-engineconn-plugin-server.md             | 16 +------
 docs/configuration/linkis-entrance.md              | 10 ++--
 docs/configuration/linkis-gateway-core.md          |  7 ++-
 .../linkis-gateway-httpclient-support.md           |  3 +-
 docs/configuration/linkis-hadoop-common.md         |  3 +-
 docs/configuration/linkis-httpclient.md            |  4 --
 docs/configuration/linkis-instance-label-client.md |  5 +-
 docs/configuration/linkis-io-file-client.md        |  9 +---
 docs/configuration/linkis-jdbc-driver.md           |  4 +-
 docs/configuration/linkis-jobhistory.md            |  5 --
 docs/configuration/linkis-manager-common.md        | 11 +----
 docs/configuration/linkis-metadata.md              |  5 +-
 docs/configuration/linkis-module.md                |  3 --
 docs/configuration/linkis-orchestrator-core.md     |  3 --
 docs/configuration/linkis-protocol.md              |  3 --
 docs/configuration/linkis-rpc.md                   |  4 --
 docs/configuration/linkis-scheduler.md             |  2 -
 docs/configuration/linkis-spring-cloud-gateway.md  |  4 +-
 docs/configuration/linkis-storage.md               |  6 +--
 docs/configuration/linkis-udf.md                   |  2 -
 docs/configuration/pipeline.md                     |  6 +--
 docs/configuration/presto.md                       |  5 +-
 docs/configuration/python.md                       |  2 -
 docs/configuration/spark.md                        |  5 --
 docs/configuration/sqoop.md                        |  3 --
 docs/errorcode/linkis-common-errorcode.md          |  7 ---
 linkis-dist/helm/README.md                         | 51 +++++++++++---------
 51 files changed, 164 insertions(+), 274 deletions(-)
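
As a quick check on the summary line above, the sketch below pulls out the three counts and computes the net line change. It assumes the summary has the usual shape that git prints for a diffstat; the variable names are illustrative and not part of the commit.

```shell
#!/bin/sh
# Summary line copied from the diffstat above. In a local clone of the
# repository, the same line should close the output of:
#   git show --stat 74506af7df21696894f51cfaaf851ed35a6e7134
summary='51 files changed, 164 insertions(+), 274 deletions(-)'

# Extract each count with sed (extended regex). Names are illustrative.
files=$(printf '%s\n' "$summary" | sed -E 's/^ *([0-9]+) files? changed.*/\1/')
ins=$(printf '%s\n' "$summary" | sed -E 's/.* ([0-9]+) insertions?\(\+\).*/\1/')
del=$(printf '%s\n' "$summary" | sed -E 's/.* ([0-9]+) deletions?\(-\).*/\1/')

# Net change in line count across the whole commit.
net=$((ins - del))
echo "$files files, net $net lines"
```

The net figure comes out negative (164 - 274 = -110), which fits a formatting-only commit that mostly strips stray blank lines and trailing whitespace.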

diff --git a/CONTRIBUTING.md b/CONTRIBUTING.md

index df448ad85..f5ed5970d 100644

--- a/CONTRIBUTING.md

+++ b/CONTRIBUTING.md

@@ -23,6 +23,7 @@ Helping answering the questions in the Linkis community is a very valuable way t

You can find linkis documentations at [linkis-Website](https://linkis.apache.org/docs/latest/introduction), and the supplement of the document is also crucial to the development of Linkis.

### 1.5 Other

+

Including participating in and helping to organize community exchanges, community operation activities, etc., and other activities that can help the Linkis project and the community.

## 2. How to Contribution

@@ -30,16 +31,18 @@ Including participating in and helping to organize community exchanges, communit

### 2.1 Branch structure

The Linkis source code may have some temporary branches, but only the following three branches are really meaningful:

+

- master: The source code of the latest stable release, and occasionally several hotfix submissions;

- release-*: stable release version;

- dev-*: main development branch;

#### 2.1.1 Concept

-- Upstream repository: https://github.com/apache/incubator-linkis The apache repository of linkis is called Upstream repository in the text

-- Fork repository: From https://github.com/apache/incubator-linkis fork to your own personal repository called Fork repository

+- Upstream repository: <https://github.com/apache/incubator-linkis> The apache repository of linkis is called Upstream repository in the text

+- Fork repository: From <https://github.com/apache/incubator-linkis> fork to your own personal repository called Fork repository

#### 2.1.2 Synchronize Upstream Repository

+

> Synchronize the latest code of the Upstream repository branch to your own Fork repository

- Step1 Enter the user project page and select the branch to be updated

@@ -47,6 +50,7 @@ The Linkis source code may have some temporary branches, but only the following

#### 2.1.3 Synchronize New Branch

+

>Synchronize the new branch of the Upstream repository to your own Fork repository

Scenario: There is a new branch in the Upstream warehouse, but the forked library does not have this branch (you can choose to delete and re-fork, but the changes that have not been merged to the original warehouse will be lost)

@@ -58,25 +62,33 @@ Operate in your own clone's local project

```shell script

git remote add apache git@github.com:apache/incubator-linkis.git

```

+

- Step2 Pull the apache image information to the local

```shell script

git fetch apache

```

+

- Step3 Create a local branch based on the new branch that needs to be synced

```shell script

git checkout -b dev-1.1.4 apache/dev-1.1.4

```

+

- Step4 Push the local branch to your own warehouse. If your own warehouse does not have the dev-1.1.4 branch, the dev-1.1.4 branch will be created

+

```shell script

git push origin dev-1.1.4:dev-1.1.4

```

+

- Step5 Delete the upstream branch

+

```shell script

git remote remove apache

```

+

- Step6 Update the branch

+

```shell script

git pull

```

@@ -85,23 +97,25 @@ git pull

- Step1 Confirm the base branch of the current development (usually the current version in progress, such as the version 1.1.0 currently under development by the community, then the branch is dev-1.1.0, if you are not sure, you can ask in the community group or at @relevant classmates in the issue)

-- Step2 Synchronize the latest code of the Upstream warehouse branch to your own Fork warehouse branch, see the guide [2.1.2 Synchronize Upstream Repository]

+- Step2 Synchronize the latest code of the Upstream warehouse branch to your own Fork warehouse branch, see the guide [2.1.2 Synchronize Upstream Repository]

- Step3 Based on the development branch, pull the new fix/feature branch (do not modify it directly on the original branch, if the subsequent PR is merged in the squash method, the submitted commit records will be merged into one)

+

```shell script

git checkout -b dev-1.1.4-fix dev-1.1.4

git push origin dev-1.1.4-fix:dev-1.1.4-fix

```

+

- Step4 Develop

-- Step5 Submit pr (if it is in progress and the development has not been completely completed, please add the WIP logo to the pr title, such as `[WIP] Dev 1.1.1 Add junit test code for [linkis-common] `; associate the corresponding issue, etc.)

+- Step5 Submit pr (if it is in progress and the development has not been completely completed, please add the WIP logo to the pr title, such as `[WIP] Dev 1.1.1 Add junit test code for [linkis-common]`; associate the corresponding issue, etc.)

- Step6 Waiting to be merged

- Step7 Delete the fix/future branch (you can do this on the github page)

+

```shell script

git branch -d dev-1.1.4-fix

git push

```

-

Please note: For the dev branch of major features, in addition to the version number, the corresponding naming description will be added, such as: dev-0.10.0-flink, which refers to the flink feature development branch of 0.10.0.

### 2.2 Development Guidelines

@@ -122,6 +136,7 @@ git push origin dev-fix dev-fix

```

### 2.3 Issue submission guidelines

+

- If you still don’t know how to initiate a PR to an open source project, please refer to [About issues](https://docs.github.com/en/github/managing-your-work-on-github/about-issues)

- Issue name, which should briefly describe your problem or suggestion in one sentence; for the international promotion of the project, please write the issue in English or both Chinese and English

- For each Issue, please bring at least two labels, component and type, such as component=Computation Governance/EngineConn, type=Improvement. Reference: [issue #590](https://github.com/apache/incubator-linkis/issues/590)

@@ -193,4 +208,4 @@ If you are the Committer of the Linkis project, and all your contributions have

- You can merge PRs submitted by other Committers and contributors to the dev-** branch

- Participate in determining the roadmap and development direction of the Linkis project

-- Can participate in the new version release

\ No newline at end of file

+- Can participate in the new version release

diff --git a/CONTRIBUTING_CN.md b/CONTRIBUTING_CN.md

index 117c4e8f7..a728e4ccc 100644

--- a/CONTRIBUTING_CN.md

+++ b/CONTRIBUTING_CN.md

@@ -1,10 +1,9 @@

# 如何参与项目贡献

-> 更多信息可以见官网[如何参与项目贡献 ](https://linkis.apache.org/community/how-to-contribute)

+> 更多信息可以见官网[如何参与项目贡献](https://linkis.apache.org/community/how-to-contribute)

非常感谢贡献 Linkis 项目!在参与贡献之前,请仔细阅读以下指引。

-

## 一、贡献范畴

### 1.1 Bug 反馈与修复

@@ -21,27 +20,28 @@

### 1.4 文档改进

-Linkis 文档位于[Linkis 官网 ](https://linkis.apache.org/zh-CN/docs/latest/introduction/) ,文档的补充完善对于 Linkis 的发展也至关重要。

+Linkis 文档位于[Linkis 官网](https://linkis.apache.org/zh-CN/docs/latest/introduction/) ,文档的补充完善对于 Linkis 的发展也至关重要。

### 1.5 其他

-包括参与和帮助组织社区交流、社区运营活动等,其他能够帮助 Linkis 项目和社区的活动。

+包括参与和帮助组织社区交流、社区运营活动等,其他能够帮助 Linkis 项目和社区的活动。

## 二、贡献流程

### 2.1 分支结构

Linkis 源码可能会产生一些临时分支,但真正有明确意义的只有以下三个分支:

+

- master: 最近一次稳定 release 的源码,偶尔会多几次 hotfix 提交;

-- release-*: 稳定的 release 版本;

+- release-*: 稳定的 release 版本;

- dev-*: 主要开发分支;

-#### 2.1.1 概念

+#### 2.1.1 概念

-- Upstream 仓库:https://github.com/apache/incubator-linkis linkis 的 apache 仓库文中称为 Upstream 仓库

-- Fork 仓库: 从 https://github.com/apache/incubator-linkis fork 到自己个人仓库 称为 Fork 仓库

+- Upstream 仓库:<https://github.com/apache/incubator-linkis> linkis 的 apache 仓库文中称为 Upstream 仓库

+- Fork 仓库: 从 <https://github.com/apache/incubator-linkis> fork 到自己个人仓库 称为 Fork 仓库

-#### 2.1.2 同步 Upstream 仓库分支最新代码到自己的 Fork 仓库

+#### 2.1.2 同步 Upstream 仓库分支最新代码到自己的 Fork 仓库

- step1 进入用户项目页面,选中要更新的分支

- step2 点击 code 下载按钮下方的 Fetch upstream,选择 Fetch and merge (如自己的 Fork 仓库 该分支不小心污染了,可以删除该分支后,同步 Upstream 仓库新分支到自己的 Fork 仓库 ,参见指引[同步 Upstream 仓库分支最新代码到自己的 Fork 仓库 ](#213-同步 Upstream 仓库新分支到自己的 Fork 仓库 ))

@@ -58,47 +58,58 @@ Linkis 源码可能会产生一些临时分支,但真正有明确意义的只

```shell script

git remote add apache git@github.com:apache/incubator-linkis.git

```

+

- step2 拉去 apache 镜像信息到本地

```shell script

git fetch apache

```

+

- step3 根据需要同步的新分支来创建本地分支

```shell script

git checkout -b dev-1.1.4 apache/dev-1.1.4

```

+

- step4 把本地分支 push 到自己的仓库,如果自己的仓库没有 dev-1.1.4 分支,则会创建 dev-1.1.4 分支

+

```shell script

git push origin dev-1.1.4:dev-1.1.4

```

+

- step5 删除 upstream 的分支

+

```shell script

git remote remove apache

```

+

- step6 更新分支

+

```shell script

git pull

```

-#### 2.1.4 一个 pr 的流程

+#### 2.1.4 一个 pr 的流程

- step1 确认当前开发的基础分支(一般是当前进行的中版本,如当前社区开发中的版本 1.1.0,那么分支就是 dev-1.1.0,不确定的话可以在社区群里问下或则在 issue 中@相关同学)

- step2 同步 Upstream 仓库分支最新代码到自己的 Fork 仓库 分支,参见指引 [2.1.2 同步 Upstream 仓库分支最新代码到自己的 Fork 仓库 ]

- step3 基于开发分支,拉取新 fix/feature 分支 (不要直接在原分支上修改,如果后续 pr 以 squash 方式 merge 后,提交的 commit 记录会被合并成一个)

+

```shell script

git checkout -b dev-1.1.4-fix dev-1.1.4

git push origin dev-1.1.4-fix:dev-1.1.4-fix

```

+

- step4 进行开发

-- step5 提交 pr(如果是正在进行中,开发还未完全结束,请在 pr 标题上加上 WIP 标识 如 `[WIP] Dev 1.1.1 Add junit test code for [linkis-common] ` ;关联对应的 issue 等)

+- step5 提交 pr(如果是正在进行中,开发还未完全结束,请在 pr 标题上加上 WIP 标识 如 `[WIP] Dev 1.1.1 Add junit test code for [linkis-common]` ;关联对应的 issue 等)

- step6 等待被合并

-- step7 删除 fix/future 分支 (可以在 github 页面上进行操作)

+- step7 删除 fix/future 分支 (可以在 github 页面上进行操作)

+

```shell script

-git branch -d dev-1.1.4-fix

-git push

+git branch -d dev-1.1.4-fix

+git push

```

请注意:大特性的 dev 分支,在命名时除了版本号,还会加上相应的命名说明,如:dev-0.10.0-flink,指 0.10.0 的 flink 特性开发分支。

@@ -128,7 +139,7 @@ git push origin dev-fix dev-fix

- 如果您还不知道怎样向开源项目发起 PR,请参考[About pull requests](https://docs.github.com/en/github/collaborating-with-issues-and-pull-requests/about-pull-requests)

- 无论是 Bug 修复,还是新功能开发,请将 PR 提交到 dev-* 分支

-- PR 和提交名称遵循 `<type>(<scope>): <subject>` 原则,详情可以参考[Commit message 和 Change log 编写指南 ](https://linkis.apache.org/zh-CN/community/development-specification/commit-message)

+- PR 和提交名称遵循 `<type>(<scope>): <subject>` 原则,详情可以参考[Commit message 和 Change log 编写指南](https://linkis.apache.org/zh-CN/community/development-specification/commit-message)

- 如果 PR 中包含新功能,理应将文档更新包含在本次 PR 中

- 如果本次 PR 尚未准备好合并,请在名称头部加上 [WIP] 前缀(WIP = work-in-progress)

- 所有提交到 dev-* 分支的提交至少需要经过一次 Review 才可以被合并

diff --git a/README.md b/README.md

index 464d34dd4..0ca801d7b 100644

--- a/README.md

+++ b/README.md

@@ -3,8 +3,8 @@

</h2>

<p align="center">

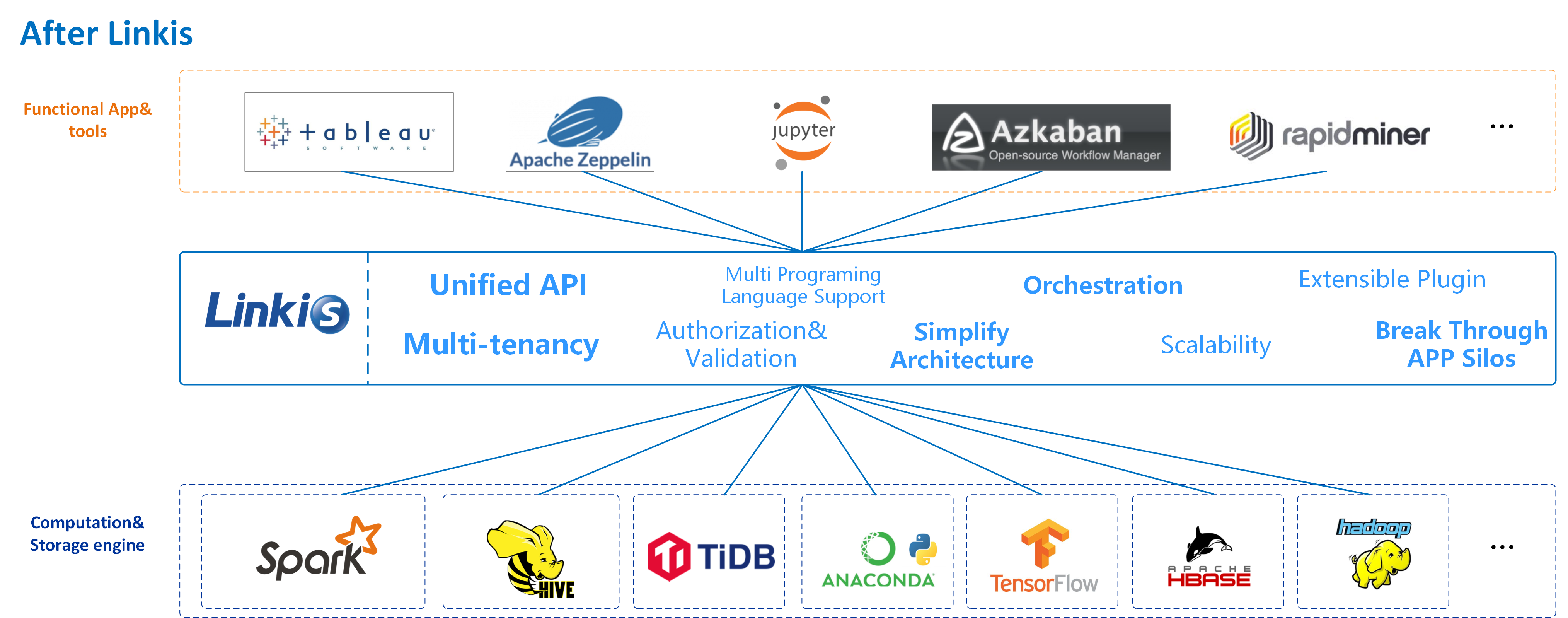

- <strong>Linkis builds a computation middleware layer to decouple the upper applications and the underlying data engines,

- provides standardized interfaces (REST, JDBC, WebSocket etc.) to easily connect to various underlying engines (Spark, Presto, Flink, etc.),

+ <strong>Linkis builds a computation middleware layer to decouple the upper applications and the underlying data engines,

+ provides standardized interfaces (REST, JDBC, WebSocket etc.) to easily connect to various underlying engines (Spark, Presto, Flink, etc.),

while enables cross engine context sharing, unified job& engine governance and orchestration.</strong>

</p>

<p align="center">

@@ -33,7 +33,7 @@

<a target="_blank" href="https://github.com/apache/incubator-linkis/actions">

<img src="https://github.com/apache/incubator-linkis/actions/workflows/build.yml/badge.svg" />

</a>

-

+

<a target="_blank" href='https://github.com/apache/incubator-linkis'>

<img src="https://img.shields.io/github/forks/apache/incubator-linkis.svg" alt="github forks"/>

</a>

@@ -64,7 +64,6 @@ As a computation middleware, Linkis provides powerful connectivity, reuse, orche

Since the first release of Linkis in 2019, it has accumulated more than **700** trial companies and **1000+** sandbox trial users, which involving diverse industries, from finance, banking, tele-communication, to manufactory, internet companies and so on. Lots of companies have already used Linkis as a unified entrance for the underlying computation and storage engines of the big data platform.

-

@@ -72,11 +71,11 @@ Since the first release of Linkis in 2019, it has accumulated more than **700**

# Features

- **Support for diverse underlying computation storage engines**

- - Currently supported computation/storage engines: Spark、Hive、Flink、Python、Pipeline、Sqoop、openLooKeng、Presto、ElasticSearch、JDBC, Shell, etc

- - Computation/storage engines to be supported: Trino (planned 1.3.1), SeaTunnel (planned 1.3.1), etc

- - Supported scripting languages: SparkSQL、HiveQL、Python、Shell、Pyspark、R、Scala and JDBC, etc

+ - Currently supported computation/storage engines: Spark、Hive、Flink、Python、Pipeline、Sqoop、openLooKeng、Presto、ElasticSearch、JDBC, Shell, etc

+ - Computation/storage engines to be supported: Trino (planned 1.3.1), SeaTunnel (planned 1.3.1), etc

+ - Supported scripting languages: SparkSQL、HiveQL、Python、Shell、Pyspark、R、Scala and JDBC, etc

-- **Powerful task/request governance capabilities** With services such as Orchestrator, Label Manager and customized Spring Cloud Gateway, Linkis is able to provide multi-level labels based, cross-cluster/cross-IDC fine-grained routing, load balance, multi-tenancy, traffic control, resource control, and orchestration strategies like dual-active, active-standby, etc

+- **Powerful task/request governance capabilities** With services such as Orchestrator, Label Manager and customized Spring Cloud Gateway, Linkis is able to provide multi-level labels based, cross-cluster/cross-IDC fine-grained routing, load balance, multi-tenancy, traffic control, resource control, and orchestration strategies like dual-active, active-standby, etc

- **Support full stack computation/storage engine** As a computation middleware, it will receive, execute and manage tasks and requests for various computation storage engines, including batch tasks, interactive query tasks, real-time streaming tasks and storage tasks

@@ -106,7 +105,6 @@ Since the first release of Linkis in 2019, it has accumulated more than **700**

|Hadoop|Apache >=2.6.0, <br/>CDH >=5.4.0|ongoing|-|Hadoop EngineConn, supports Hadoop MR/YARN application|

|TiSpark|1.1|ongoing|-|TiSpark EngineConn, supports querying TiDB with SparkSQL|

-

# Ecosystem

| Component | Description | Linkis 1.x(recommend 1.1.1) Compatible |

@@ -127,6 +125,7 @@ Please go to the [Linkis Releases Page](https://linkis.apache.org/download/main)

# Compile and Deploy

> For more detailed guidance see:

+>

>- [[Backend Compile]](https://linkis.apache.org/docs/latest/development/linkis-compile-and-package)

>- [[Management Console Build]](https://linkis.apache.org/docs/latest/development/web-build)

@@ -156,12 +155,13 @@ cd incubator-linkis/linkis-web

npm install

npm run build

```

-

+

Please refer to [Quick Deployment](https://linkis.apache.org/docs/latest/deployment/quick-deploy) to do the deployment.

# Examples and Guidance

+

- [User Manual](https://linkis.apache.org/docs/latest/user-guide/overview)

-- [Engine Usage Documents](https://linkis.apache.org/docs/latest/engine-usage/overview)

+- [Engine Usage Documents](https://linkis.apache.org/docs/latest/engine-usage/overview)

- [API Documents](https://linkis.apache.org/docs/latest/api/overview)

# Documentation & Vedio

@@ -170,7 +170,9 @@ Please refer to [Quick Deployment](https://linkis.apache.org/docs/latest/deploym

- Meetup videos on [Bilibili](https://space.bilibili.com/598542776?from=search&seid=14344213924133040656)

# Architecture

+

Linkis services could be divided into three categories: computation governance services, public enhancement services and microservice governance services

+

- The computation governance services, support the 3 major stages of processing a task/request: submission -> preparation -> execution

- The public enhancement services, including the material library service, context service, and data source service

- The microservice governance services, including Spring Cloud Gateway, Eureka and Open Feign

@@ -189,15 +191,12 @@ For code and documentation contributions, please follow the [contribution guide]

# Contact Us

-

- Any questions or suggestions please kindly submit an [issue](https://github.com/apache/incubator-linkis/issues).

- By mail [dev@linkis.apache.org](mailto:dev@linkis.apache.org)

- You can scan the QR code below to join our WeChat group to get more immediate response

-

-

# Who is Using Linkis

We opened an issue [[Who is Using Linkis]](https://github.com/apache/incubator-linkis/issues/23) for users to feedback and record who is using Linkis.

diff --git a/README_CN.md b/README_CN.md

index 566d21238..f307ff6f5 100644

--- a/README_CN.md

+++ b/README_CN.md

@@ -32,7 +32,7 @@

<a target="_blank" href="https://github.com/apache/incubator-linkis/actions">

<img src="https://github.com/apache/incubator-linkis/actions/workflows/build.yml/badge.svg" />

</a>

-

+

<a target="_blank" href='https://github.com/apache/incubator-linkis'>

<img src="https://img.shields.io/github/forks/apache/incubator-linkis.svg" alt="github forks"/>

</a>

@@ -53,7 +53,7 @@

<br/>

---

-[English](README.md) | [中文 ](README_CN.md)

+[English](README.md) | [中文](README_CN.md)

# 介绍

@@ -66,14 +66,14 @@ Linkis 自 2019 年开源发布以来,已累计积累了 700 多家试验企

-

## 核心特点

+

- **丰富的底层计算存储引擎支持**

- - **目前支持的计算存储引擎** Spark、Hive、Flink、Python、Pipeline、Sqoop、openLooKeng、Presto、ElasticSearch、JDBC 和 Shell 等

- - **正在支持中的计算存储引擎** Trino(计划 1.3.1)、SeaTunnel(计划 1.3.1) 等

- - **支持的脚本语言** SparkSQL、HiveQL、Python、Shell、Pyspark、R、Scala 和 JDBC 等

+ - **目前支持的计算存储引擎** Spark、Hive、Flink、Python、Pipeline、Sqoop、openLooKeng、Presto、ElasticSearch、JDBC 和 Shell 等

+ - **正在支持中的计算存储引擎** Trino(计划 1.3.1)、SeaTunnel(计划 1.3.1) 等

+ - **支持的脚本语言** SparkSQL、HiveQL、Python、Shell、Pyspark、R、Scala 和 JDBC 等

- **强大的计算治理能力** 基于 Orchestrator、Label Manager 和定制的 Spring Cloud Gateway 等服务,Linkis 能够提供基于多级标签的跨集群/跨 IDC 细粒度路由、负载均衡、多租户、流量控制、资源控制和编排策略 (如双活、主备等) 支持能力

- **全栈计算存储引擎架构支持** 能够接收、执行和管理针对各种计算存储引擎的任务和请求,包括离线批量任务、交互式查询任务、实时流式任务和存储型任务

- **资源管理能力** ResourceManager 不仅具备对 Yarn 和 Linkis EngineManager 的资源管理能力,还将提供基于标签的多级资源分配和回收能力,让 ResourceManager 具备跨集群、跨计算资源类型的强大资源管理能力

@@ -102,11 +102,10 @@ Linkis 自 2019 年开源发布以来,已累计积累了 700 多家试验企

|Hadoop|Apache >=2.6.0, <br/>CDH >=5.4.0|ongoing|-|Hadoop EngineConn, 支持 Hadoop MR/YARN application|

|TiSpark|1.1|ongoing|-|TiSpark EngineConn, 支持用 SparkSQL 查询 TiDB|

-

# 生态组件

-| 应用工具 | 描述 | Linkis 1.X(推荐 1.1.1) 兼容版本 |

-| --------------- | -------------------------------------------------------------------- | ---------- |

+| 应用工具 | 描述 | Linkis 1.X(推荐 1.1.1) 兼容版本 |

+| --------------- | -------------------------------------------------------------------- | ---------- |

| [**DataSphere Studio**](https://github.com/WeBankFinTech/DataSphereStudio/blob/master/README-ZH.md) | DataSphere Studio(简称 DSS)数据应用开发管理集成框架 | **DSS 1.0.1[已发布 ][Linkis 推荐 1.1.1]** |

| [**Scriptis**](https://github.com/WeBankFinTech/Scriptis) | 支持在线写 SQL、Pyspark、HiveQL 等脚本,提交给[Linkis](https://github.com/apache/incubator-linkis) 执行的数据分析 Web 工具 | 在 DSS 1.0.1 中[已发布 ] |

| [**Schedulis**](https://github.com/WeBankFinTech/Schedulis) | 基于 Azkaban 二次开发的工作流任务调度系统,具备高性能,高可用和多租户资源隔离等金融级特性 | **Schedulis0.6.2 [已发布 ]** |

@@ -118,13 +117,14 @@ Linkis 自 2019 年开源发布以来,已累计积累了 700 多家试验企

# 下载

-请前往[Linkis Releases 页面 ](https://linkis.apache.org/download/main) 下载 Linkis 的已编译版本或源码包。

+请前往[Linkis Releases 页面](https://linkis.apache.org/download/main) 下载 Linkis 的已编译版本或源码包。

# 编译和安装部署

> 更详细的步骤参见:

->- [后端编译打包 ](https://linkis.apache.org/zh-CN/docs/latest/development/linkis-compile-and-package)

->- [管理台编译 ](https://linkis.apache.org/zh-CN/docs/latest/development/web-build)

+>

+>- [后端编译打包](https://linkis.apache.org/zh-CN/docs/latest/development/linkis-compile-and-package)

+>- [管理台编译](https://linkis.apache.org/zh-CN/docs/latest/development/web-build)

```shell script

## 后端编译

@@ -143,23 +143,24 @@ npm install

npm run build

```

-请参考[快速安装部署 ](https://linkis.apache.org/zh-CN/docs/latest/deployment/quick-deploy) 来部署 Linkis

+请参考[快速安装部署](https://linkis.apache.org/zh-CN/docs/latest/deployment/quick-deploy) 来部署 Linkis

# 示例和使用指引

-- [用户手册 ](https://linkis.apache.org/zh-CN/docs/latest/user-guide/overview),

-- [各引擎使用指引 ](https://linkis.apache.org/zh-CN/docs/latest/engine-usage/overview)

-- [API 文档 ](https://linkis.apache.org/zh-CN/docs/latest/api/overview)

+- [用户手册](https://linkis.apache.org/zh-CN/docs/latest/user-guide/overview),

+- [各引擎使用指引](https://linkis.apache.org/zh-CN/docs/latest/engine-usage/overview)

+- [API 文档](https://linkis.apache.org/zh-CN/docs/latest/api/overview)

# 文档&视频

-- 完整的 Linkis 文档代码存放在[linkis-website 仓库中 ](https://github.com/apache/incubator-linkis-website)

+- 完整的 Linkis 文档代码存放在[linkis-website 仓库中](https://github.com/apache/incubator-linkis-website)

- Meetup 视频 [Bilibili](https://space.bilibili.com/598542776?from=search&seid=14344213924133040656)

-

# 架构概要

+

Linkis 基于微服务架构开发,其服务可以分为 3 类:计算治理服务、公共增强服务和微服务治理服务。

+

- 计算治理服务,支持计算任务/请求处理流程的 3 个主要阶段:提交-> 准备-> 执行

- 公共增强服务,包括上下文服务、物料管理服务及数据源服务等

- 微服务治理服务,包括定制化的 Spring Cloud Gateway、Eureka、Open Feign

@@ -171,35 +172,33 @@ Linkis 基于微服务架构开发,其服务可以分为 3 类:计算治理服

-- [**DataSphere Studio** - 数据应用集成开发框架 ](https://github.com/WeBankFinTech/DataSphereStudio)

+- [DataSphere Studio - 数据应用集成开发框架](https://github.com/WeBankFinTech/DataSphereStudio)

-- [**Scriptis** - 数据研发 IDE 工具 ](https://github.com/WeBankFinTech/Scriptis)

+- [Scriptis - 数据研发 IDE 工具](https://github.com/WeBankFinTech/Scriptis)

-- [**Visualis** - 数据可视化工具 ](https://github.com/WeBankFinTech/Visualis)

+- [Visualis - 数据可视化工具](https://github.com/WeBankFinTech/Visualis)

-- [**Schedulis** - 工作流调度工具 ](https://github.com/WeBankFinTech/Schedulis)

+- [Schedulis - 工作流调度工具](https://github.com/WeBankFinTech/Schedulis)

-- [**Qualitis** - 数据质量工具 ](https://github.com/WeBankFinTech/Qualitis)

+- [Qualitis - 数据质量工具](https://github.com/WeBankFinTech/Qualitis)

-- [**MLLabis** - 容器化机器学习 notebook 开发环境 ](https://github.com/WeBankFinTech/prophecis)

+- [MLLabis - 容器化机器学习 notebook 开发环境](https://github.com/WeBankFinTech/prophecis)

更多项目开源准备中,敬请期待。

# 贡献

我们非常欢迎和期待更多的贡献者参与共建 Linkis, 不论是代码、文档,或是其他能够帮助到社区的贡献形式。

-代码和文档相关的贡献请参照[贡献指引 ](https://linkis.apache.org/zh-CN/community/how-to-contribute).

+代码和文档相关的贡献请参照[贡献指引](https://linkis.apache.org/zh-CN/community/how-to-contribute).

# 联系我们

- 对 Linkis 的任何问题和建议,可以提交 issue,以便跟踪处理和经验沉淀共享

-- 通过邮件方式 [dev@linkis.apache.org](mailto:dev@linkis.apache.org)

+- 通过邮件方式 [dev@linkis.apache.org](mailto:dev@linkis.apache.org)

- 可以扫描下面的二维码,加入我们的微信群,以获得更快速的响应

-

-

# 谁在使用 Linkis

我们创建了一个 issue [[Who is Using Linkis]](https://github.com/apache/incubator-linkis/issues/23) 以便用户反馈和记录谁在使用 Linkis.

diff --git a/docs/configuration/accessible-executor.md b/docs/configuration/accessible-executor.md

index fea3432cc..3c9c77a2a 100644

--- a/docs/configuration/accessible-executor.md

+++ b/docs/configuration/accessible-executor.md

@@ -1,14 +1,13 @@

## accessible-executor 配置

-

| 模块名(服务名) | 参数名 | 默认值 | 描述 | 是否引用|

| -------- | -------- | ----- |----- | ----- |

|accessible-executor|wds.linkis.engineconn.log.cache.default|500|cache.default|

-|accessible-executor|wds.linkis.engineconn.ignore.words|org.apache.spark.deploy.yarn.Client |ignore.words|

+|accessible-executor|wds.linkis.engineconn.ignore.words|org.apache.spark.deploy.yarn.Client |ignore.words|

|accessible-executor|wds.linkis.engineconn.pass.words|org.apache.hadoop.hive.ql.exec.Task |pass.words|

|accessible-executor|wds.linkis.engineconn.log.send.once| 100|send.once|

|accessible-executor|wds.linkis.engineconn.log.send.time.interval |200 |time.interval|

-|accessible-executor|wds.linkis.engineconn.log.send.cache.size| 300 |send.cache.size |

+|accessible-executor|wds.linkis.engineconn.log.send.cache.size| 300 |send.cache.size |

|accessible-executor|wds.linkis.engineconn.max.free.time|30m| max.free.time|

|accessible-executor|wds.linkis.engineconn.lock.free.interval| 3m |lock.free.interval|

|accessible-executor|wds.linkis.engineconn.support.parallelism| false |support.parallelism |

@@ -16,8 +15,3 @@

|accessible-executor|wds.linkis.engineconn.status.scan.time|1m|status.scan.time|

|accessible-executor|wds.linkis.engineconn.maintain.enable|false| maintain.enable|

|accessible-executor|wds.linkis.engineconn.maintain.cretors|IDE| maintain.cretors|

-

-

-

-

-

diff --git a/docs/configuration/elasticsearch.md b/docs/configuration/elasticsearch.md

index f6e4cf738..f7eba8836 100644

--- a/docs/configuration/elasticsearch.md

+++ b/docs/configuration/elasticsearch.md

@@ -1,6 +1,5 @@

## elasticsearch 配置

-

| 模块名(服务名) | 参数名 | 默认值 | 描述 | 是否引用|

| -------- | -------- | ----- |----- | ----- |

|elasticsearch|linkis.es.cluster|127.0.0.1:9200|cluster|

@@ -19,7 +18,3 @@

|elasticsearch|linkis.resultSet.cache.max|512k|resultSet.cache.max|

|elasticsearch|linkis.engineconn.concurrent.limit| 100 |engineconn.concurrent.limit|

|elasticsearch|linkis.engineconn.io.version| 7.6.2| engineconn.io.version|

-

-

-

-

diff --git a/docs/configuration/executor-core.md b/docs/configuration/executor-core.md

index 9af0d2bb9..4be73e93b 100644

--- a/docs/configuration/executor-core.md

+++ b/docs/configuration/executor-core.md

@@ -1,19 +1,17 @@

## executor-core 配置

-

| 模块名(服务名) | 参数名 | 默认值 | 描述 | 是否引用|

| -------- | -------- | ----- |----- | ----- |

|executor-core|wds.linkis.dataworkclod.engine.tmp.path|file:///tmp/|engine.tmp.path|

-|executor-core|wds.linkis.engine.application.name | |application.name|

+|executor-core|wds.linkis.engine.application.name | |application.name|

|executor-core|wds.linkis.log4j2.prefix| |log4j2.prefix|

|executor-core|wds.linkis.log.clear| |log.clear|

|executor-core|wds.linkis.engine.ignore.words|org.apache.spark.deploy.yarn.Client |ignore.words|

|executor-core|wds.linkis.engine.pass.words| org.apache.hadoop.hive.ql.exec.Task|pass.words|

|executor-core|wds.linkis.entrance.application.name|linkis-cg-entrance| entrance.application.name|

|executor-core|wds.linkis.engine.listener.async.queue.size.max| 300 |size.max|

-|executor-core|wds.linkis.engine.listener.async.consumer.thread.max| 5|thread.max |

+|executor-core|wds.linkis.engine.listener.async.consumer.thread.max| 5|thread.max |

|executor-core|wds.linkis.engine.listener.async.consumer.freetime.max|5000ms|freetime.max|

|executor-core|wds.linkis.engineconn.executor.manager.service.class|org.apache.linkis.engineconn.acessible.executor.service.DefaultManagerService|manager.service.class|

|executor-core|wds.linkis.engineconn.executor.manager.class|org.apache.linkis.engineconn.core.executor.LabelExecutorManagerImpl| executor.manager.class|

|executor-core|wds.linkis.engineconn.executor.default.name|ComputationExecutor| default.name|

-

diff --git a/docs/configuration/flink.md b/docs/configuration/flink.md

index 92bd21650..bf4738dbc 100644

--- a/docs/configuration/flink.md

+++ b/docs/configuration/flink.md

@@ -1,6 +1,5 @@

## flink 配置

-

| 模块名(服务名) | 参数名 | 默认值 | 描述 | 是否引用|

| -------- | -------- | ----- |----- | ----- |

|flink|flink.client.memory |1024|client.memory |

@@ -50,4 +49,3 @@

|flink|linkis.flink.params.placeholder.blank|\\0x001 |params.placeholder.blank|

|flink|linkis.flink.hudi.enable|false | hudi.enable|

|flink|linkis.flink.hudi.extra.yarn.classpath| |hudi.extra.yarn.classpath|

-

\ No newline at end of file

diff --git a/docs/configuration/index.md b/docs/configuration/index.md

index 28254c297..6872247cb 100644

--- a/docs/configuration/index.md

+++ b/docs/configuration/index.md

@@ -1,7 +1,7 @@

This docs folder is only used to temporarily store some version documents.

-For detailed documentation, please visit the official website

-https://linkis.apache.org/docs/latest/introduction

+For detailed documentation, please visit the official website

+<https://linkis.apache.org/docs/latest/introduction>

------

这个docs文件夹,只是用来临时存放一些版本文档,

-详细文档 请到官网查看 https://linkis.apache.org/zh-CN/docs/latest/introduction/

\ No newline at end of file

+详细文档 请到官网查看 https://linkis.apache.org/zh-CN/docs/latest/introduction/

diff --git a/docs/configuration/info-1.1.3.md b/docs/configuration/info-1.1.3.md

index e0cdfc4e8..faca3daf2 100644

--- a/docs/configuration/info-1.1.3.md

+++ b/docs/configuration/info-1.1.3.md

@@ -1,5 +1,4 @@

-## 参数变化

-

+## 参数变化

| 模块名(服务名)| 类型 | 参数名 | 默认值 | 描述 |

| ----------- | ----- | -------------------------------------------------------- | ---------------- | ------------------------------------------------------- |

diff --git a/docs/configuration/info-1.2.1.md b/docs/configuration/info-1.2.1.md

index e2da12446..4553a1bbc 100644

--- a/docs/configuration/info-1.2.1.md

+++ b/docs/configuration/info-1.2.1.md

@@ -1,5 +1,4 @@

-## 参数变化

-

+## 参数变化

| 模块名(服务名)| 类型 | 参数名 | 默认值 | 描述 |

| ----------- | ----- | -------------------------------------------------------- | ---------------- | ------------------------------------------------------- |

@@ -8,7 +7,7 @@

|cg-engineplugin | New | wds.linkis.trino.http.connectTimeout | 60 | Timeout for connecting to the Trino server |

|cg-engineplugin | New | wds.linkis.trino.http.readTimeout | 60 | Timeout while waiting for the Trino server to return data |

|cg-engineplugin | New | wds.linkis.trino.resultSet.cache.max | 512k | Trino result set buffer size |

-|cg-engineplugin | New | wds.linkis.trino.url | http://127.0.0.1:8080 | Trino server URL |

+|cg-engineplugin | New | wds.linkis.trino.url | <http://127.0.0.1:8080> | Trino server URL |

|cg-engineplugin | New | wds.linkis.trino.user | null | Username for connecting to the Trino query service |

|cg-engineplugin | New | wds.linkis.trino.password | null | Password for connecting to the Trino query service |

|cg-engineplugin | New | wds.linkis.trino.passwordCmd | null | Password callback command for connecting to the Trino query service |

@@ -23,6 +22,7 @@

|cg-engineplugin | New | wds.linkis.trino.ssl.truststore.password | null | truststore password |

## Feature description

+

| Module name (service name)| Type | Feature |

| ----------- | ---------------- | ------------------------------------------------------- |

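|linkis-metadata-query-service-mysql | New | Merges dm, greenplum, kingbase, oracle, postgres, and sqlserver into the mysql module, with protocol and SQL kept separate; metadata-query resolves multiple data sources by reflection, extending the mysql module so that they form a single module. |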

diff --git a/docs/configuration/jdbc.md b/docs/configuration/jdbc.md

index 4caea6c88..24418f436 100644

--- a/docs/configuration/jdbc.md

+++ b/docs/configuration/jdbc.md

@@ -2,9 +2,8 @@

| Module name (service name) | Parameter name | Default value | Description | Referenced|

| -------- | -------- | ----- |----- | ----- |

-|jdbc|wds.linkis.resultSet.cache.max| 0k |cache.max|

-|jdbc|wds.linkis.jdbc.default.limit |5000 |jdbc.default.limit|

+|jdbc|wds.linkis.resultSet.cache.max| 0k |cache.max|

+|jdbc|wds.linkis.jdbc.default.limit |5000 |jdbc.default.limit|

|jdbc|wds.linkis.jdbc.query.timeout|1800|jdbc.query.timeout|

|jdbc|wds.linkis.engineconn.jdbc.concurrent.limit|100| jdbc.concurrent.limit |

-|jdbc|wds.linkis.keytab.enable|false|eytab.enable|

-

\ No newline at end of file

+|jdbc|wds.linkis.keytab.enable|false|eytab.enable|

diff --git a/docs/configuration/linkis-application-manager.md b/docs/configuration/linkis-application-manager.md

index fe78e026f..42487cfdc 100644

--- a/docs/configuration/linkis-application-manager.md

+++ b/docs/configuration/linkis-application-manager.md

@@ -1,6 +1,5 @@

## linkis-application-manager configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced|

| -------- | -------- | ----- |----- | ----- |

|linkis-application-manager|wds.linkis.label.node.long.lived.label.keys |tenant|lived.label.keys|

@@ -18,7 +17,7 @@

|linkis-application-manager|wds.linkis.engineconn.application.name | linkis-cg-engineplugin| engineconn.application.name |

|linkis-application-manager|wds.linkis.engineconn.debug.mode.enable|jdbc,es,presto,io_file,appconn,openlookeng,trino| debug.mode.enable|

|linkis-application-manager|wds.linkis.multi.user.engine.user| |engine.user|

-|linkis-application-manager|wds.linkis.manager.am.engine.locker.max.time| 1000 * 60 * 5|locker.max.time|

+|linkis-application-manager|wds.linkis.manager.am.engine.locker.max.time| 1000 *60* 5|locker.max.time|

|linkis-application-manager|wds.linkis.manager.am.can.retry.logs|already in use;Cannot allocate memory|retry.logs|

|linkis-application-manager|wds.linkis.ecm.launch.max.thread.size| 200 |thread.size|

|linkis-application-manager|wds.linkis.async.stop.engine.size| 20|engine.size|

@@ -33,10 +32,9 @@ linkis-application-manager|wds.linkis.manager.am.node.create.time|12m| node.crea

|linkis-application-manager|wds.linkis.manager.am.ecm.heartbeat| 1m|am.ecm.heartbeat|

|linkis-application-manager|wds.linkis.manager.rm.kill.engine.wait|30s |kill.engine.wait|

|linkis-application-manager|wds.linkis.manager.rm.request.enable| true|request.enable|

-|linkis-application-manager|wds.linkis.manager.rm.lock.wait|5 * 60 * 1000|rm.lock.wait|

+|linkis-application-manager|wds.linkis.manager.rm.lock.wait|5 *60* 1000|rm.lock.wait|

|linkis-application-manager|wds.linkis.manager.rm.debug.enable| false |rm.debug.enable|

|linkis-application-manager|wds.linkis.manager.rm.debug.log.path| file:///tmp/linkis/rmLog" |rm.debug.log.path|

|linkis-application-manager|wds.linkis.manager.rm.external.resource.regresh.time| 30m|regresh.time|

|linkis-application-manager|wds.linkis.configuration.engine.type| |engine.type|

|linkis-application-manager|wds.linkis.manager.rm.resource.action.record| true|resource.action.record|

-

diff --git a/docs/configuration/linkis-bml-server.md b/docs/configuration/linkis-bml-server.md

index 9a28b9b76..0a2626839 100644

--- a/docs/configuration/linkis-bml-server.md

+++ b/docs/configuration/linkis-bml-server.md

@@ -1,12 +1,10 @@

## linkis-bml-server configuration

-

| Module name (service name) | Parameter name | Default value | Description |

-| -------- | -------- | ----- |----- |

+| -------- | -------- | ----- |----- |

|linkis-bml-server|wds.linkis.bml.hdfs.prefix| /apps-data | bml.hdfs.prefix|

|linkis-bml-server|wds.linkis.bml.local.prefix|true|bml.local.prefix|

|linkis-bml-server|wds.linkis.bml.cleanExpired.time|100 |bml.cleanExpired.time|

|linkis-bml-server|wds.linkis.bml.clean.time.type| TimeUnit.HOURS | bml.clean.time.type |

|linkis-bml-server|wds.linkis.server.maxThreadSize| 30 |maxThreadSize|

|linkis-bml-server|wds.linkis.bml.default.proxy.user| | bml.default.proxy.user |

-

\ No newline at end of file

diff --git a/docs/configuration/linkis-common.md b/docs/configuration/linkis-common.md

index af4b708ad..1e12df3d1 100644

--- a/docs/configuration/linkis-common.md

+++ b/docs/configuration/linkis-common.md

@@ -9,7 +9,7 @@

|linkis-common|wds.linkis.prometheus.enable|false|prometheus.enable|true|

|linkis-common|wds.linkis.prometheus.endpoint| /actuator/prometheus|prometheus.endpoint |true|

|linkis-common|wds.linkis.home|false| test.mode |true|

-|linkis-common|wds.linkis.gateway.url|http://127.0.0.1:9001/|gateway.url|true|

+|linkis-common|wds.linkis.gateway.url|<http://127.0.0.1:9001/>|gateway.url|true|

|linkis-common|wds.linkis.reflect.scan.package| org.apache.linkis,com.webank.wedatasphere |scan.package |true|

|linkis-common|wds.linkis.console.configuration.application.name|linkis-ps-configuration|configuration.application.name|true|

|linkis-common|wds.linkis.console.variable.application.name|linkis-ps-publicservice|variable.application.name|true|

diff --git a/docs/configuration/linkis-computation-client.md b/docs/configuration/linkis-computation-client.md

index 49d1edbe4..bf7167710 100644

--- a/docs/configuration/linkis-computation-client.md

+++ b/docs/configuration/linkis-computation-client.md

@@ -1,7 +1,5 @@

## linkis-computation-client configuration

-

| Module name (service name) | Parameter name | Default value | Description |

-| -------- | -------- | ----- |----- |

+| -------- | -------- | ----- |----- |

| linkis-computation-client |linkis.client.operator.once.log.enable|true|once.log.enable|

-

diff --git a/docs/configuration/linkis-computation-engineconn.md b/docs/configuration/linkis-computation-engineconn.md

index 8ed71a815..c192e0913 100644

--- a/docs/configuration/linkis-computation-engineconn.md

+++ b/docs/configuration/linkis-computation-engineconn.md

@@ -1,10 +1,9 @@

## linkis-computation-engineconn configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced|

| -------- | -------- | ----- |----- | ----- |

|linkis-computation-engineconn|wds.linkis.engine.resultSet.cache.max |0k|engine.resultSet.cache.max|

-|linkis-computation-engineconn|wds.linkis.engine.lock.expire.time|2 * 60 * 1000 |lock.expire.time|

+|linkis-computation-engineconn|wds.linkis.engine.lock.expire.time|2 *60* 1000 |lock.expire.time|

|linkis-computation-engineconn|wds.linkis.engineconn.max.task.execute.num|0|task.execute.num|

|linkis-computation-engineconn|wds.linkis.engineconn.progresss.fetch.interval-in-seconds| 5|interval-in-seconds|

|linkis-computation-engineconn|wds.linkis.engineconn.udf.load.ignore|true |load.ignore|

@@ -22,7 +21,3 @@

|linkis-computation-engineconn|linkis.ec.task.submit.wait.time.ms|22|wait.time.ms|

|linkis-computation-engineconn|wds.linkis.bdp.hive.init.sql.enable| false |sql.enable|

|linkis-computation-engineconn|wds.linkis.bdp.use.default.db.enable| true|db.enable|

-

-

-

-

diff --git a/docs/configuration/linkis-computation-governance-common.md b/docs/configuration/linkis-computation-governance-common.md

index 2687b60a3..493bf73fb 100644

--- a/docs/configuration/linkis-computation-governance-common.md

+++ b/docs/configuration/linkis-computation-governance-common.md

@@ -1,8 +1,7 @@

## linkis-computation-governance-common configuration

-

| Module name (service name) | Parameter name | Default value | Description |

-| -------- | -------- | ----- |----- |

+| -------- | -------- | ----- |----- |

|linkis-computation-governance-common|wds.linkis.rm| | wds.linkis.rm |

|linkis-computation-governance-common|wds.linkis.spark.engine.version|2.4.3 |spark.engine.version|

|linkis-computation-governance-common|wds.linkis.hive.engine.version| 1.2.1 |hive.engine.version|

@@ -19,6 +18,3 @@

|linkis-computation-governance-common|wds.linkis.filesystem.hdfs.root.path| hdfs:///tmp/linkis/ | filesystem.hdfs.root.path |

|linkis-computation-governance-common|wds.linkis.engine.yarn.app.kill.scripts.path| /sbin/kill-yarn-jobs.sh |engine.yarn.app.kill.scripts.path|

|linkis-computation-governance-common|wds.linkis.engineconn.env.keys| | engineconn.env.keys |

-

-

-

diff --git a/docs/configuration/linkis-computation-orchestrator.md b/docs/configuration/linkis-computation-orchestrator.md

index d46ada115..c614d4646 100644

--- a/docs/configuration/linkis-computation-orchestrator.md

+++ b/docs/configuration/linkis-computation-orchestrator.md

@@ -1,6 +1,5 @@

## linkis-computation-orchestrator configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced|

| -------- | -------- | ----- |----- | ----- |

|linkis-computation-orchestrator|wds.linkis.computation.orchestrator.create.service |dss|orchestrator.create.service|

@@ -17,5 +16,3 @@

|linkis-computation-orchestrator|wds.linkis.orchestrator.engine.lastupdate.timeout|5s| orchestrator.engine.lastupdate.timeout |

|linkis-computation-orchestrator|wds.linkis.orchestrator.engine.timeout| 10s| orchestrator.engine.timeout|

|linkis-computation-orchestrator|wds.linkis.orchestrator.engine.activity_monitor.interval|10s| orchestrator.engine.activity_monitor.interval||

-

-

diff --git a/docs/configuration/linkis-configuration.md b/docs/configuration/linkis-configuration.md

index eecca3179..816383ef8 100644

--- a/docs/configuration/linkis-configuration.md

+++ b/docs/configuration/linkis-configuration.md

@@ -1,9 +1,7 @@

## linkis-configuration configuration

-

| Module name (service name) | Parameter name | Default value | Description |

-| -------- | -------- | ----- |----- |

+| -------- | -------- | ----- |----- |

| linkis-configuration |wds.linkis.configuration.engine.type| |configuration.engine.type|

| linkis-configuration |wds.linkis.engineconn.manager.name|linkis-cg-linkismanager |engineconn.manager.name|

| linkis-configuration |wds.linkis.configuration.use.creator.default.value|true |configuration.use.creator.default.value|

-

\ No newline at end of file

diff --git a/docs/configuration/linkis-engineconn-common.md b/docs/configuration/linkis-engineconn-common.md

index ca45af2ce..fc2acc690 100644

--- a/docs/configuration/linkis-engineconn-common.md

+++ b/docs/configuration/linkis-engineconn-common.md

@@ -1,6 +1,5 @@

## linkis-engineconn-common configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced|

| -------- | -------- | ----- |----- | ----- |

|linkis-engineconn-common|wds.linkis.engine.connector.executions|org.apache.linkis.engineconn.computation.executor.execute.ComputationEngineConnExecution|connector.executions|

@@ -9,10 +8,9 @@

|linkis-engineconn-common|wds.linkis.engine.parallelism.support.enabled| false|support.enabled |

|linkis-engineconn-common|wds.linkis.engine.push.log.enable|true |log.enable|

|linkis-engineconn-common|wds.linkis.engineconn.plugin.default.class| org.apache.linkis.engineplugin.hive.HiveEngineConnPlugin|plugin.default.class |

-|linkis-engineconn-common|wds.linkis.engine.task.expire.time|1000 * 3600 * 24| task.expire.time|

-|linkis-engineconn-common|wds.linkis.engine.lock.refresh.time| 1000 * 60 * 3 |lock.refresh.time|

+|linkis-engineconn-common|wds.linkis.engine.task.expire.time|1000 *3600* 24| task.expire.time|

+|linkis-engineconn-common|wds.linkis.engine.lock.refresh.time| 1000 *60* 3 |lock.refresh.time|

|linkis-engineconn-common|wds.linkis.engine.work.home.key| PWD |work.home.key |

|linkis-engineconn-common|wds.linkis.engine.logs.dir.key|LOG_DIRS|logs.dir.key|

|linkis-engineconn-common|wds.linkis.engine.connector.init.time|8m|init.time|

|linkis-engineconn-common|wds.linkis.spark.engine.yarn.app.id.parse.regex| | parse.regex |

-

diff --git a/docs/configuration/linkis-engineconn-manager-core.md b/docs/configuration/linkis-engineconn-manager-core.md

index 883029874..b5262579d 100644

--- a/docs/configuration/linkis-engineconn-manager-core.md

+++ b/docs/configuration/linkis-engineconn-manager-core.md

@@ -1,7 +1,5 @@

## linkis-engineconn-manager-core configuration

-

| Module name (service name) | Parameter name | Default value | Description |

-| -------- | -------- | ----- |----- |

+| -------- | -------- | ----- |----- |

| linkis-engineconn-manager-core |linkis.ec.core.dump.disable|true|dump.disable|

-

diff --git a/docs/configuration/linkis-engineconn-manager-server.md b/docs/configuration/linkis-engineconn-manager-server.md

index c3d34cbf3..f89d9048f 100644

--- a/docs/configuration/linkis-engineconn-manager-server.md

+++ b/docs/configuration/linkis-engineconn-manager-server.md

@@ -1,6 +1,5 @@

## linkis-engineconn-manager-server configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced|

| -------- | -------- | ----- |----- | ----- |

|linkis-engineconn-manager-server|wds.linkis.ecm.async.bus.capacity |500|bus.capacity|

@@ -22,8 +21,8 @@

|linkis-engineconn-manager-server|wds.linkis.engineconn.root.dir| |root.dir|

|linkis-engineconn-manager-server|wds.linkis.engineconn.public.dir| |ngineconn.public.dir|

|linkis-engineconn-manager-server|wds.linkis.ecm.launch.max.thread.size| 100|thread.size|

-|linkis-engineconn-manager-server|wds.linkis.eureka.defaultZone| http://127.0.0.1:20303/eureka/ |eureka.defaultZone|

-|linkis-engineconn-manager-server|wds.linkis.ecm.engineconn.create.duration| 1000 * 60 * 10 |engineconn.create.duration|

+|linkis-engineconn-manager-server|wds.linkis.eureka.defaultZone| <http://127.0.0.1:20303/eureka/> |eureka.defaultZone|

+|linkis-engineconn-manager-server|wds.linkis.ecm.engineconn.create.duration| 1000 *60* 10 |engineconn.create.duration|

|linkis-engineconn-manager-server|wds.linkis.engineconn.wait.callback.pid|3s |wait.callback.pid|

|linkis-engineconn-manager-server|wds.linkis.ecm.engine.start.error.msg.max.len| 500 |msg.max.len|

|linkis-engineconn-manager-server|wds.linkis.ecm.script.kill.engineconn| true|kill.engineconn|

@@ -32,6 +31,3 @@

|linkis-engineconn-manager-server|linkis.engineconn.log.tail.start.size|20000 |start.size|

|linkis-engineconn-manager-server|linkis.engineconn.log.multiline.pattern| |multiline.pattern|

|linkis-engineconn-manager-server| linkis.engineconn.log.multiline.max| 500|log.multiline.max|

-

-

-

\ No newline at end of file

diff --git a/docs/configuration/linkis-engineconn-plugin-core.md b/docs/configuration/linkis-engineconn-plugin-core.md

index 88b1146f3..79cc5c94b 100644

--- a/docs/configuration/linkis-engineconn-plugin-core.md

+++ b/docs/configuration/linkis-engineconn-plugin-core.md

@@ -1,6 +1,5 @@

## linkis-engineconn-plugin-core configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced|

| -------- | -------- | ----- |----- | ----- |

|linkis-engineconn-plugin-core|wds.linkis.engineConn.jars|Extra jars for engineConn|engineConn.jars|

diff --git a/docs/configuration/linkis-engineconn-plugin-server.md b/docs/configuration/linkis-engineconn-plugin-server.md

index 4c8f301cf..e67aa9a6b 100644

--- a/docs/configuration/linkis-engineconn-plugin-server.md

+++ b/docs/configuration/linkis-engineconn-plugin-server.md

@@ -1,8 +1,7 @@

## linkis-engineconn-plugin-server configuration

-

| Module name (service name) | Parameter name | Default value | Description |

-| -------- | -------- | ----- |----- |

+| -------- | -------- | ----- |----- |

| wds.linkis.engineconn.plugin.loader.classname | |plugin.loader.classname|

| wds.linkis.engineconn.plugin.loader.defaultUser | hadoop |wds.linkis.engineconn.plugin.loader.defaultUser|

| wds.linkis.engineconn.plugin.loader.store.path | |loader.store.path|

@@ -12,16 +11,3 @@

| wds.linkis.engineconn.home | |engineconn.home|

| wds.linkis.engineconn.dist.load.enable |true |dist.load.enable|

| wds.linkis.engineconn.bml.upload.failed.enable |true |upload.failed.enable|

-

-

-

-

-

-

-

-

-

-

-

-

-

\ No newline at end of file

diff --git a/docs/configuration/linkis-entrance.md b/docs/configuration/linkis-entrance.md

index ec928f222..27b97f57b 100644

--- a/docs/configuration/linkis-entrance.md

+++ b/docs/configuration/linkis-entrance.md

@@ -1,6 +1,5 @@

## linkis-entrance configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced|

| -------- | -------- | ----- |----- | ----- |

|linkis-entrance|wds.linkis.entrance.scheduler.maxParallelismUsers |1000| scheduler.maxParallelismUsers|

@@ -41,7 +40,7 @@

|linkis-entrance|wds.linkis.entrance.push.progress| false |push.progress|

|linkis-entrance|wds.linkis.concurrent.group.factory.capacity| 1000|factory.capacity|

|linkis-entrance|wds.linkis.concurrent.group.factory.running.jobs| 30 | running.jobs|

-|linkis-entrance|wds.linkis.concurrent.group.factory.executor.time| 5 * 60 * 1000 | factory.executor.time|

+|linkis-entrance|wds.linkis.concurrent.group.factory.executor.time| 5 *60* 1000 | factory.executor.time|

|linkis-entrance|wds.linkis.entrance.engine.lastupdate.timeout| 5s |lastupdate.timeout|

|linkis-entrance|wds.linkis.entrance.engine.timeout| 10s|engine.timeout|

|linkis-entrance|wds.linkis.entrance.engine.activity_monitor.interval| 3s|activity_monitor.interval|

@@ -50,17 +49,14 @@

|linkis-entrance|wds.linkis.user.parallel.reflesh.time| 30|user.parallel.reflesh.time|

|linkis-entrance|wds.linkis.entrance.jobinfo.update.retry| true | jobinfo.update.retry|

|linkis-entrance|wds.linkis.entrance.jobinfo.update.retry.max.times| 3 | update.retry.max.times|

-|linkis-entrance|wds.linkis.entrance.jobinfo.update.retry.interval| 2 * 60 * 1000| update.retry.interval|

+|linkis-entrance|wds.linkis.entrance.jobinfo.update.retry.interval| 2 *60* 1000| update.retry.interval|

|linkis-entrance|wds.linkis.entrance.code.parser.selective.ignored| true|parser.selective.ignored|

|linkis-entrance|wds.linkis.entrance.code.parser.enable| false|parser.enable|

|linkis-entrance|wds.linkis.entrance.yarn.queue.core.max| 300|yarn.queue.core.max|

|linkis-entrance|wds.linkis.entrance.yarn.queue.memory.max.g| 1000|yarn.queue.memory.max.g|

|linkis-entrance|linkis.entrance.enable.hdfs.log.cache|true|hdfs.log.cache|

|linkis-entrance|linkis.entrance.cli.heartbeat.threshold.sec| 30L |heartbeat.threshold.sec|

-|linkis-entrance|wds.linkis.entrance.log.push.interval.time| 5 * 60 * 1000|push.interval.time|

+|linkis-entrance|wds.linkis.entrance.log.push.interval.time| 5 *60* 1000|push.interval.time|

|linkis-entrance|wds.linkis.consumer.group.cache.capacity| 5000 | group.cache.capacity|

|linkis-entrance|wds.linkis.consumer.group.expire.time.hour| 50 | expire.time.hour|

|linkis-entrance|wds.linkis.entrance.client.monitor.creator|LINKISCLI| client.monitor.creator|

-

-

-

diff --git a/docs/configuration/linkis-gateway-core.md b/docs/configuration/linkis-gateway-core.md

index 983bcc918..509df1be7 100644

--- a/docs/configuration/linkis-gateway-core.md

+++ b/docs/configuration/linkis-gateway-core.md

@@ -1,20 +1,19 @@

## linkis-gateway-core configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced|

| -------- | -------- | ----- |----- | ----- |

|linkis-gateway-core|wds.linkis.gateway.conf.enable.proxy.user |false|gateway.conf.enable.proxy.user|

|linkis-gateway-core|wds.linkis.gateway.conf.proxy.user.config|proxy.properties|proxy.user.config|

-|linkis-gateway-core|wds.linkis.gateway.conf.proxy.user.scan.interval|1000 * 60 * 10|gateway.conf.proxy.user.scan.interval|

+|linkis-gateway-core|wds.linkis.gateway.conf.proxy.user.scan.interval|1000 *60* 10|gateway.conf.proxy.user.scan.interval|

|linkis-gateway-core|wds.linkis.gateway.conf.enable.token.auth| false |gateway.conf.enable.token.auth|

-|linkis-gateway-core|wds.linkis.gateway.conf.token.auth.scan.interval|1000 * 60 * 10 |gateway.conf.token.auth.scan.interval|

+|linkis-gateway-core|wds.linkis.gateway.conf.token.auth.scan.interval|1000 *60* 10 |gateway.conf.token.auth.scan.interval|

|linkis-gateway-core|wds.linkis.gateway.conf.url.pass.auth| |gateway.conf.url.pass.auth |

|linkis-gateway-core|wds.linkis.gateway.conf.enable.sso|false| gateway.conf.enable.sso |

|linkis-gateway-core|wds.linkis.gateway.conf.sso.interceptor| |gateway.conf.sso.interceptor|

|linkis-gateway-core|wds.linkis.admin.user| hadoop |admin.user |

|linkis-gateway-core|wds.linkis.admin.password| |admin.password|

|linkis-gateway-core|wds.linkis.gateway.usercontrol_switch_on|false|gateway.usercontrol_switch_on|

-|linkis-gateway-core|wds.linkis.gateway.publickey.url|http://127.0.0.1:8088| gateway.publickey.url |

+|linkis-gateway-core|wds.linkis.gateway.publickey.url|<http://127.0.0.1:8088>| gateway.publickey.url |

|linkis-gateway-core|wds.linkis.gateway.conf.proxy.user.list| | gateway.conf.proxy.user.list|

|linkis-gateway-core|wds.linkis.usercontrol.application.name|cloud-usercontrol| usercontrol.application.name|

|linkis-gateway-core|wds.linkis.login_encrypt.enable| false |login_encrypt.enable|

diff --git a/docs/configuration/linkis-gateway-httpclient-support.md b/docs/configuration/linkis-gateway-httpclient-support.md

index 04e138331..88008e533 100644

--- a/docs/configuration/linkis-gateway-httpclient-support.md

+++ b/docs/configuration/linkis-gateway-httpclient-support.md

@@ -1,6 +1,5 @@

## linkis-gateway-httpclient-support configuration

-

| Module name (service name) | Parameter name | Default value | Description |

-| -------- | -------- | ----- |----- |

+| -------- | -------- | ----- |----- |

| linkis-gateway-httpclient-support |linkis.gateway.enabled.defalut.discovery|true|gateway.enabled.defalut.discovery|

diff --git a/docs/configuration/linkis-hadoop-common.md b/docs/configuration/linkis-hadoop-common.md

index d30ea5aba..66b39601a 100644

--- a/docs/configuration/linkis-hadoop-common.md

+++ b/docs/configuration/linkis-hadoop-common.md

@@ -1,6 +1,5 @@

## linkis-hadoop-common configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced|

| -------- | -------- | ----- |----- | ----- |

|linkis-hadoop-common|wds.linkis.hadoop.root.user|hadoop-8|hadoop.root.user|true|

@@ -13,5 +12,5 @@

|linkis-hadoop-common|hadoop.config.dir| |config.dir|true|

|linkis-hadoop-common|wds.linkis.hadoop.external.conf.dir.prefix| /appcom/config/external-conf/hadoop|scan.package |true|

|linkis-hadoop-common|wds.linkis.hadoop.hdfs.cache.enable|false|hdfs.cache.enable|true|

-|linkis-hadoop-common|wds.linkis.hadoop.hdfs.cache.idle.time|3 * 60 * 1000|idle.time|true|

+|linkis-hadoop-common|wds.linkis.hadoop.hdfs.cache.idle.time|3 *60* 1000|idle.time|true|

|linkis-hadoop-common|wds.linkis.hadoop.hdfs.cache.max.time|12h| max.time |true|

diff --git a/docs/configuration/linkis-httpclient.md b/docs/configuration/linkis-httpclient.md

index 695cb2e80..89e43c5fd 100644

--- a/docs/configuration/linkis-httpclient.md

+++ b/docs/configuration/linkis-httpclient.md

@@ -1,9 +1,5 @@

## linkis-httpclient configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced|

| -------- | -------- | ----- |----- | ----- |

|linkis-httpclient|wds.linkis.httpclient.default.connect.timeOut| 50000 | httpclient.default.connect.timeOut |true|

-

-

-

diff --git a/docs/configuration/linkis-instance-label-client.md b/docs/configuration/linkis-instance-label-client.md

index 11a521362..b9938003e 100644

--- a/docs/configuration/linkis-instance-label-client.md

+++ b/docs/configuration/linkis-instance-label-client.md

@@ -1,8 +1,5 @@

## linkis-instance-label-client configuration

-

| Module name (service name) | Parameter name | Default value | Description |

-| -------- | -------- | ----- |----- |

+| -------- | -------- | ----- |----- |

| linkis-instance-label-client |wds.linkis.instance.label.server.name|linkis-ps-publicservice|instance.label.server.name|

-

-

\ No newline at end of file

diff --git a/docs/configuration/linkis-io-file-client.md b/docs/configuration/linkis-io-file-client.md

index 07045e9f4..ddfcd7e0c 100644

--- a/docs/configuration/linkis-io-file-client.md

+++ b/docs/configuration/linkis-io-file-client.md

@@ -1,17 +1,10 @@

## linkis-io-file-client configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced|

| -------- | -------- | ----- |----- | ----- |

|linkis-io-file-client|wds.linkis.io.group.factory.capacity |1000|group.factory.capacity|

|linkis-io-file-client|wds.linkis.io.group.factory.running.jobs|30 |group.factory.running.jobs|

-|linkis-io-file-client|wds.linkis.io.group.factory.executor.time|5 * 60 * 1000|group.factory.executor.time|

+|linkis-io-file-client|wds.linkis.io.group.factory.executor.time|5 *60* 1000|group.factory.executor.time|

|linkis-io-file-client|wds.linkis.io.loadbalance.capacity| 1 |loadbalance.capacity|

|linkis-io-file-client|wds.linkis.io.extra.labels| |extra.labels|

|linkis-io-file-client|wds.linkis.io.job.wait.second| 30 | job.wait.second |

-

-

-

-

-

-

diff --git a/docs/configuration/linkis-jdbc-driver.md b/docs/configuration/linkis-jdbc-driver.md

index cf063f00e..656e4a76c 100644

--- a/docs/configuration/linkis-jdbc-driver.md

+++ b/docs/configuration/linkis-jdbc-driver.md

@@ -1,7 +1,5 @@

## linkis-jdbc-driver configuration

-

| Module name (service name) | Parameter name | Default value | Description |

-| -------- | -------- | ----- |----- |

+| -------- | -------- | ----- |----- |

| linkis-jdbc-driver |wds.linkis.jdbc.pre.hook.class|org.apache.linkis.ujes.jdbc.hook.impl.TableauPreExecutionHook|pre.hook.class|

-

diff --git a/docs/configuration/linkis-jobhistory.md b/docs/configuration/linkis-jobhistory.md

index 8046b185b..27e37cf15 100644

--- a/docs/configuration/linkis-jobhistory.md

+++ b/docs/configuration/linkis-jobhistory.md

@@ -1,6 +1,5 @@

## linkis-jobhistory configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced|

| -------- | -------- | ----- |----- | ----- |

|linkis-jobhistory|wds.linkis.jobhistory.safe.trigger |true|jobhistory.safe.trigger|

@@ -15,7 +14,3 @@

|linkis-jobhistory|wds.linkis.env.is.viewfs| true|env.is.viewfs|

|linkis-jobhistory|wds.linkis.query.store.suffix| |linkis.query.store.suffix|

|linkis-jobhistory|wds.linkis.query.code.store.length|50000| query.code.store.length|

-

-

-

-

diff --git a/docs/configuration/linkis-manager-common.md b/docs/configuration/linkis-manager-common.md

index 776521ea9..8d44074c8 100644

--- a/docs/configuration/linkis-manager-common.md

+++ b/docs/configuration/linkis-manager-common.md

@@ -1,13 +1,12 @@

## linkis-manager-common configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced|

| -------- | -------- | ----- |----- | ----- |

|linkis-manager-common|wds.linkis.default.engine.type |spark|engine.type|

|linkis-manager-common|wds.linkis.manager.admin|2.4.3|manager.admin|

|linkis-manager-common|wds.linkis.rm.application.name|ResourceManager|rm.application.name|

-|linkis-manager-common|wds.linkis.rm.wait.event.time.out| 1000 * 60 * 12L |event.time.out|

-|linkis-manager-common|wds.linkis.rm.register.interval.time|1000 * 60 * 2L |interval.time|

+|linkis-manager-common|wds.linkis.rm.wait.event.time.out| 1000 *60* 12L |event.time.out|

+|linkis-manager-common|wds.linkis.rm.register.interval.time|1000 *60* 2L |interval.time|

|linkis-manager-common|wds.linkis.manager.am.node.heartbeat| 3m|node.heartbeat|

|linkis-manager-common|wds.linkis.manager.rm.lock.release.timeou|5m| lock.release.timeou|

|linkis-manager-common|wds.linkis.manager.rm.lock.release.check.interval| 5m |release.check.interval|

@@ -36,9 +35,3 @@

|linkis-manager-common|wds.linkis.rm.default.yarn.cluster.type| Yarn|yarn.cluster.type|

|linkis-manager-common|wds.linkis.rm.external.retry.num|3|external.retry.num|

|linkis-manager-common|wds.linkis.rm.default.yarn.webaddress.delimiter| ; | yarn.webaddress.delimiter|

-

-

-

-

-

-

diff --git a/docs/configuration/linkis-metadata.md b/docs/configuration/linkis-metadata.md

index 612e56d20..b98958c64 100644

--- a/docs/configuration/linkis-metadata.md

+++ b/docs/configuration/linkis-metadata.md

@@ -1,8 +1,7 @@

## linkis-metadata configuration

-

| Module name (service name) | Parameter name | Default value | Description |

-| -------- | -------- | ----- |----- |

+| -------- | -------- | ----- |----- |

| linkis-metadata |bdp.dataworkcloud.datasource.store.type|orc|datasource.store.type|

| linkis-metadata |bdp.dataworkcloud.datasource.default.par.name|ds|datasource.default.par.name|

-| linkis-metadata |wds.linkis.spark.mdq.import.clazz|org.apache.linkis.engineplugin.spark.imexport.LoadData|spark.mdq.import.clazz|

\ No newline at end of file

+| linkis-metadata |wds.linkis.spark.mdq.import.clazz|org.apache.linkis.engineplugin.spark.imexport.LoadData|spark.mdq.import.clazz|

diff --git a/docs/configuration/linkis-module.md b/docs/configuration/linkis-module.md

index 6e8fc96d9..c3ffffecd 100644

--- a/docs/configuration/linkis-module.md

+++ b/docs/configuration/linkis-module.md

@@ -1,6 +1,5 @@

## linkis-module configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced|

| -------- | -------- | ----- |----- | ----- |

|linkis-module|wds.linkis.server.component.exclude.packages| | exclude.packages |true|

@@ -47,5 +46,3 @@

|linkis-module|wds.linkis.session.proxy.user.ticket.key|linkis_user_session_proxy_ticket_id_v1 |ticket.key |true|

|linkis-module|wds.linkis.proxy.ticket.header.crypt.key| linkis-trust-key |crypt.key |true|

|linkis-module|wds.linkis.proxy.ticket.header.crypt.key| bfs_ | crypt.key|true|

-

-

diff --git a/docs/configuration/linkis-orchestrator-core.md b/docs/configuration/linkis-orchestrator-core.md

index fed8229a2..ef59b38b3 100644

--- a/docs/configuration/linkis-orchestrator-core.md

+++ b/docs/configuration/linkis-orchestrator-core.md

@@ -1,6 +1,5 @@

## linkis-orchestrator-core configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced|

| -------- | -------- | ----- |----- | ----- |

|linkis-orchestrator-core|wds.linkis.orchestrator.builder.class | |orchestrator.builder.class|

@@ -24,5 +23,3 @@

|linkis-orchestrator-core|wds.linkis.orchestrator.task.schedulis.creator| schedulis,nodeexecution|task.schedulis.creator|

|linkis-orchestrator-core|wds.linkis.orchestrator.metric.log.enable|true|orchestrator.metric.log.enable|

|linkis-orchestrator-core|wds.linkis.orchestrator.metric.log.time| 1h |orchestrator.metric.log.time|

-

-

diff --git a/docs/configuration/linkis-protocol.md b/docs/configuration/linkis-protocol.md

index 980fe061a..c1c7a7624 100644

--- a/docs/configuration/linkis-protocol.md

+++ b/docs/configuration/linkis-protocol.md

@@ -1,8 +1,5 @@

## linkis-protocol configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced|

| -------- | -------- | ----- |----- | ----- |

|linkis-protocol|wds.linkis.service.suffix| engineManager,entrance,engine | service.suffix |true|

-

-

diff --git a/docs/configuration/linkis-rpc.md b/docs/configuration/linkis-rpc.md

index e5d74d2d0..3929d7301 100644

--- a/docs/configuration/linkis-rpc.md

+++ b/docs/configuration/linkis-rpc.md

@@ -1,6 +1,5 @@

## linkis-rpc configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced|

| -------- | -------- | ----- |----- | ----- |

|linkis-rpc|wds.linkis.rpc.broadcast.thread.num| 25 | thread.num |true|

@@ -23,6 +22,3 @@

|linkis-rpc|wds.linkis.ms.service.scan.package|org.apache.linkis |scan.package|true|

|linkis-rpc|wds.linkis.rpc.spring.params.enable| false | params.enable |true|

|linkis-rpc|wds.linkis.rpc.cache.expire.time|120000L |expire.time|true|

-

-

-

diff --git a/docs/configuration/linkis-scheduler.md b/docs/configuration/linkis-scheduler.md

index c01ac8c74..2c82c35f4 100644

--- a/docs/configuration/linkis-scheduler.md

+++ b/docs/configuration/linkis-scheduler.md

@@ -1,10 +1,8 @@

## linkis-scheduler configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced|

| -------- | -------- | ----- |----- | ----- |

|linkis-scheduler|wds.linkis.fifo.consumer.auto.clear.enabled|true | auto.clear.enabled|true|

|linkis-scheduler|wds.linkis.fifo.consumer.max.idle.time|1h |max.idle.time|true|

|linkis-scheduler|wds.linkis.fifo.consumer.idle.scan.interval|2h |idle.scan.interval|true|

|linkis-scheduler|wds.linkis.fifo.consumer.idle.scan.init.time|1s | idle.scan.init.time |true|

-

diff --git a/docs/configuration/linkis-spring-cloud-gateway.md b/docs/configuration/linkis-spring-cloud-gateway.md

index d6209b33a..37bb005f0 100644

--- a/docs/configuration/linkis-spring-cloud-gateway.md

+++ b/docs/configuration/linkis-spring-cloud-gateway.md

@@ -1,7 +1,5 @@

## linkis-spring-cloud-gateway configuration

-

| Module name (service name) | Parameter name | Default value | Description |

-| -------- | -------- | ----- |----- |

+| -------- | -------- | ----- |----- |

| linkis-spring-cloud-gateway |wds.linkis.gateway.websocket.heartbeat|5s|gateway.websocket.heartbeat|

-

diff --git a/docs/configuration/linkis-storage.md b/docs/configuration/linkis-storage.md

index d773c0f61..ee4f37232 100644

--- a/docs/configuration/linkis-storage.md

+++ b/docs/configuration/linkis-storage.md

@@ -1,6 +1,5 @@

## linkis-storage configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced |

| -------- | -------- | ----- |----- | ----- |

|linkis-storage|wds.linkis.storage.proxy.user| | storage.proxy.user |true|

@@ -16,7 +15,7 @@

|linkis-storage|wds.linkis.storage.is.share.node|true |share.node|true|

|linkis-storage|wds.linkis.storage.enable.io.proxy|false| proxy |true|

|linkis-storage|wds.linkis.storage.io.user|root |io.user |true|

-|linkis-storage|wds.linkis.storage.io.fs.num| 1000 * 60 * 10 |fs.num |true|

+|linkis-storage|wds.linkis.storage.io.fs.num| 1000 *60* 10 |fs.num |true|

|linkis-storage|wds.linkis.storage.io.read.fetch.size| 100k |fetch.size |true|

|linkis-storage|wds.linkis.storage.io.write.cache.size |64k | cache.size |true|

|linkis-storage|wds.linkis.storage.io.default.creator| IDE| default.creator |true|

@@ -30,6 +29,3 @@

|linkis-storage|wds.linkis.hdfs.rest.errs| |rest.errs|true|

|linkis-storage|wds.linkis.resultset.row.max.str | 2m | max.str |true|

|linkis-storage|wds.linkis.storage.file.type | dolphin,sql,scala,py,hql,python,out,log,text,sh,jdbc,ngql,psql,fql,tsql | file.type |true|

-

-

-

diff --git a/docs/configuration/linkis-udf.md b/docs/configuration/linkis-udf.md

index b21020e23..711477679 100644

--- a/docs/configuration/linkis-udf.md

+++ b/docs/configuration/linkis-udf.md

@@ -1,6 +1,5 @@

## linkis-udf configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced |

| -------- | -------- | ----- |----- | ----- |

|linkis-udf|wds.linkis.udf.hive.exec.path |/appcom/Install/DataWorkCloudInstall/linkis-linkis-Udf-0.0.3-SNAPSHOT/lib/hive-exec-1.2.1.jar|udf.hive.exec.path|

@@ -8,4 +7,3 @@

|linkis-udf|wds.linkis.udf.share.path|/mnt/bdap/udf/|udf.share.path|

|linkis-udf|wds.linkis.udf.share.proxy.user| hadoop|udf.share.proxy.user|

|linkis-udf|wds.linkis.udf.service.name|linkis-ps-publicservice |udf.service.name|

-

\ No newline at end of file

diff --git a/docs/configuration/pipeline.md b/docs/configuration/pipeline.md

index 03f1f1cdc..e495037e6 100644

--- a/docs/configuration/pipeline.md

+++ b/docs/configuration/pipeline.md

@@ -1,13 +1,9 @@

## pipeline configuration

-

| Module name (service name) | Parameter name | Default value | Description |

-| -------- | -------- | ----- |----- |

+| -------- | -------- | ----- |----- |

|pipeline| pipeline.output.isoverwtite | true | isoverwtite |

|pipeline|pipeline.output.charset|UTF-8|charset|

|pipeline|pipeline.field.split| , |split|

|pipeline|wds.linkis.engine.pipeline.field.quote.retoch.enable|false | field.quote.retoch.enable |

|pipeline|wds.linkis.pipeline.export.excel.auto_format.enable| false | auto_format.enable |

-

-

-

diff --git a/docs/configuration/presto.md b/docs/configuration/presto.md

index fca90759a..aa26c5ee4 100644

--- a/docs/configuration/presto.md

+++ b/docs/configuration/presto.md

@@ -1,6 +1,5 @@

## presto configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced |

| -------- | -------- | ----- |----- | ----- |

|presto|wds.linkis.engineconn.concurrent.limit|100|engineconn.concurrent.limit|

@@ -10,7 +9,7 @@

|presto|wds.linkis.presto.http.connectTimeout|60 |presto.http.connectTimeout|

|presto|wds.linkis.presto.http.readTimeout| 60|presto.http.readTimeout |

|presto|wds.linkis.presto.default.limit|5000| presto.default.limit |

-|presto|wds.linkis.presto.url| http://127.0.0.1:8080 |presto.url|

+|presto|wds.linkis.presto.url| <http://127.0.0.1:8080> |presto.url|

|presto|wds.linkis.presto.resource.config| | presto.resource.config |

|presto|wds.linkis.presto.username|default|presto.username|

|presto|wds.linkis.presto.password| | presto.password |

@@ -19,5 +18,3 @@

|presto|wds.linkis.presto.source| |presto.source|

|presto|wds.linkis.presto.source| global |presto.source|

|presto|presto.session.query_max_total_memory|8GB|query_max_total_memory|

-

-

diff --git a/docs/configuration/python.md b/docs/configuration/python.md

index 5e3687377..11804bfbb 100644

--- a/docs/configuration/python.md

+++ b/docs/configuration/python.md

@@ -1,10 +1,8 @@

## python configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced |

| -------- | -------- | ----- |----- | ----- |

|python|wds.linkis.python.line.limit|10|python.line.limit|

|python|wds.linkis.python.py4j.home |getPy4jHome |python.py4j.home|

|python|wds.linkis.engine.python.language-repl.init.time|30s|python.language-repl.init.time|

|python|pythonVersion|python3|pythonVersion|

-

diff --git a/docs/configuration/spark.md b/docs/configuration/spark.md

index 499064346..e7cc82207 100644

--- a/docs/configuration/spark.md

+++ b/docs/configuration/spark.md

@@ -1,6 +1,5 @@

## spark configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced |

| -------- | -------- | ----- |----- | ----- |

|spark|linkis.bgservice.store.prefix|hdfs:///tmp/bdp-ide/|bgservice.store.prefix|

@@ -25,7 +24,3 @@

|spark|wds.linkis.spark.engine.is.viewfs.env| true | spark.engine.is.viewfs.env|

|spark|wds.linkis.spark.engineconn.fatal.log|error writing class;OutOfMemoryError|spark.engineconn.fatal.log|

|spark|wds.linkis.spark.engine.scala.replace_package_header.enable| true |spark.engine.scala.replace_package_header.enable|

-

-

-

-

\ No newline at end of file

diff --git a/docs/configuration/sqoop.md b/docs/configuration/sqoop.md

index 85eaa87d9..fe241fbe8 100644

--- a/docs/configuration/sqoop.md

+++ b/docs/configuration/sqoop.md

@@ -1,6 +1,5 @@

## sqoop configuration

-

| Module name (service name) | Parameter name | Default value | Description | Referenced |

| -------- | -------- | ----- |----- | ----- |

|sqoop|wds.linkis.hadoop.site.xml |core-site.xml;hdfs-site.xml;yarn-site.xml;mapred-site.xml| hadoop.site.xml|

@@ -13,5 +12,3 @@

|sqoop|sqoop.params.name.data-source| sqoop.args.datasource.name |params.name.data-source|

|sqoop|sqoop.params.name.prefix| sqoop.args. | params.name.prefix |

|sqoop|sqoop.params.name.env.prefix| sqoop.env. |params.name.env.prefix|

-

-

diff --git a/docs/errorcode/linkis-common-errorcode.md b/docs/errorcode/linkis-common-errorcode.md

index 041860fe1..989d57759 100644

--- a/docs/errorcode/linkis-common-errorcode.md

+++ b/docs/errorcode/linkis-common-errorcode.md

@@ -1,6 +1,5 @@

## linkis-common errorcode

-

| Module name (service name) | Error code | Description | module|

| -------- | -------- | ----- |-----|

|linkis-common|11000|Engine failed to start(引擎启动失败)|hiveEngineConn|

@@ -10,9 +9,3 @@

|linkis-common|20100|User is empty in the parameters of the request engine(请求引擎的参数中user为空)|EngineConnManager|

|linkis-common|321|Failed to start under certain circumstances(在某种情况下启动失败)|EngineConnManager|

|linkis-common|10000|Error code definition exceeds the maximum value or is less than the minimum value(错误码定义超过最大值或者小于最小值)|linkis-frame|

-

-

-

-

-

-

diff --git a/linkis-dist/helm/README.md b/linkis-dist/helm/README.md

index 22818decd..c4cbb7daf 100644

--- a/linkis-dist/helm/README.md

+++ b/linkis-dist/helm/README.md

@@ -1,37 +1,38 @@

-Helm charts for Linkis

+Helm charts for Linkis

==========

[License](https://www.apache.org/licenses/LICENSE-2.0.html)

# Pre-requisites

+

> Note: KinD is required only for development and testing.

+

* [Kubernetes](https://kubernetes.io/docs/setup/), minimum version v1.21.0+

* [Helm](https://helm.sh/docs/intro/install/), minimum version v3.0.0+.

* [KinD](https://kind.sigs.k8s.io/docs/user/quick-start/), minimum version v0.11.0+.

-

# Installation

```shell

-# Deploy Apache Linkis on kubernetes, kubernetes

+# Deploy Apache Linkis on kubernetes, kubernetes

# namespace is 'linkis', helm release is 'linkis-demo'

# Option 1, use the built-in script

$> ./scripts/install-charts.sh linkis linkis-demo

# Option 2, use `helm` command line

-$> helm install --create-namespace -f ./charts/linkis/values.yaml --namespace linkis linkis-demo ./charts/linkis

+$> helm install --create-namespace -f ./charts/linkis/values.yaml --namespace linkis linkis-demo ./charts/linkis

```

# Uninstallation

```shell

-$> helm delete --namespace linkis linkis-demo

+$> helm delete --namespace linkis linkis-demo

```

# For developers

-We recommend using [KinD](https://kind.sigs.k8s.io/docs/user/quick-start/) for development and testing.

+We recommend using [KinD](https://kind.sigs.k8s.io/docs/user/quick-start/) for development and testing.

KinD is a tool for running local Kubernetes clusters using Docker containers as “Kubernetes nodes”.