
Posted to dev@ambari.apache.org by "smallyao (via GitHub)" <gi...@apache.org> on 2023/03/29 12:39:39 UTC

[GitHub] [ambari] smallyao opened a new pull request, #3677: AMBARI-25894: Missing file service_advisor.py in some services

smallyao opened a new pull request, #3677:

URL: https://github.com/apache/ambari/pull/3677

## What changes were proposed in this pull request?

HDFS, YARN, and ZooKeeper do not have a service advisor (`service_advisor.py`).
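To check which other service directories in a stack still lack a service advisor, the minimal Python sketch below may help. It assumes the stack layout has one subdirectory per service, each expected to contain `service_advisor.py`, based on the path `ambari-server/src/main/resources/stacks/BIGTOP/3.2.0/services` that appears later in this thread. `services_missing_advisor` is a hypothetical helper, not part of Ambari.

```python
import os


def services_missing_advisor(services_dir):
    """Return the sorted names of service directories under services_dir
    that do not contain a service_advisor.py file."""
    missing = []
    for name in sorted(os.listdir(services_dir)):
        service_path = os.path.join(services_dir, name)
        # Only subdirectories are treated as services; plain files such as
        # a stack-level stack_advisor.py are skipped.
        if not os.path.isdir(service_path):
            continue
        if not os.path.isfile(os.path.join(service_path, "service_advisor.py")):
            missing.append(name)
    return missing
```

Run from a source checkout, `services_missing_advisor("ambari-server/src/main/resources/stacks/BIGTOP/3.2.0/services")` would list the service directories that still lack a `service_advisor.py`.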


## How was this patch tested?

Tested locally



Please review [Ambari Contributing Guide](https://cwiki.apache.org/confluence/display/AMBARI/How+to+Contribute) before opening a pull request.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

To unsubscribe, e-mail: dev-unsubscribe@ambari.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: dev-unsubscribe@ambari.apache.org

For additional commands, e-mail: dev-help@ambari.apache.org

[GitHub] [ambari] JiaLiangC commented on pull request #3677: AMBARI-25894: Missing file service_advisor.py in some services

Posted by "JiaLiangC (via GitHub)" <gi...@apache.org>.

JiaLiangC commented on PR #3677:

URL: https://github.com/apache/ambari/pull/3677#issuecomment-1538119637

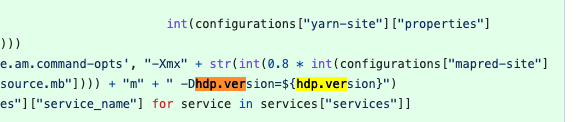

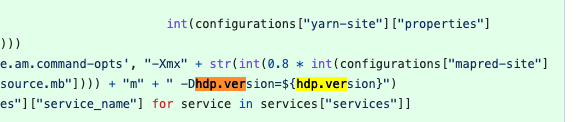

In YARN's service_advisor.py, a `-Dhdp.version=${hdp.version}` option was added to the MapReduce JVM options (see the ApplicationMaster launch command below), which caused the MapReduce tasks to fail to run.

`

/hadoop/yarn/local/usercache/ambari-qa/appcache/application_1682216948797_0003/container_1682216948797_0003_02_000001/launch_container.sh: line 57: $JAVA_HOME/bin/java -Djava.io.tmpdir=$PWD/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/data1/hadoop/yarn/log/application_1682216948797_0003/container_1682216948797_0003_02_000001 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog -server -XX:NewRatio=8 -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Xmx1638m -Dhdp.version=${hdp.version} org.apache.hadoop.mapreduce.v2.app.MRAppMaster 1>/data1/hadoop/yarn/log/application_1682216948797_0003/container_1682216948797_0003_02_000001/stdout 2>/data1/hadoop/yarn/log/application_1682216948797_0003/container_1682216948797_0003_02_000001/stderr : bad substitution`

The `hdp.version` property is not defined in the Bigtop stack, so `${hdp.version}` is left unexpanded in the launch command and bash rejects it as a bad substitution. I recommend modifying this commit to remove the `hdp.version` option.
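To make the failure concrete: a plain bash `${name}` expansion only accepts letters, digits, and underscores in `name`, so a literal `${hdp.version}` that reaches `launch_container.sh` aborts with "bad substitution". The Python sketch below uses hypothetical helpers (not code from this PR) to detect such placeholders in a JVM options string and to strip the `-Dhdp.version=...` option as suggested. The sample options are copied from the log above.

```python
import re

# A plain shell parameter name: letters, digits, underscores; no leading digit.
_SHELL_NAME = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")
# Any ${...} placeholder still present in a string.
_PLACEHOLDER = re.compile(r"\$\{([^}]*)\}")
# A -Dhdp.version=... JVM system property plus any whitespace before it.
_HDP_VERSION_OPT = re.compile(r"\s*-Dhdp\.version=\S*")


def bad_substitutions(opts):
    """Return ${...} placeholder contents that are not plain shell variable names."""
    return [name for name in _PLACEHOLDER.findall(opts) if not _SHELL_NAME.match(name)]


def strip_hdp_version(opts):
    """Remove any -Dhdp.version=... option from a JVM options string."""
    return _HDP_VERSION_OPT.sub("", opts).strip()


opts = ("-server -XX:NewRatio=8 -Djava.net.preferIPv4Stack=true "
        "-Dhadoop.metrics.log.level=WARN -Xmx1638m -Dhdp.version=${hdp.version}")
print(bad_substitutions(opts))                     # ['hdp.version']
print(bad_substitutions(strip_hdp_version(opts)))  # []
```

A check like `bad_substitutions` could be run over recommended `*.java.opts` / `command-opts` values before they are written, which would catch this class of stack-specific placeholder early.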


[GitHub] [ambari] smallyao commented on pull request #3677: AMBARI-25894: Missing file service_advisor.py in some services

Posted by "smallyao (via GitHub)" <gi...@apache.org>.

smallyao commented on PR #3677:

URL: https://github.com/apache/ambari/pull/3677#issuecomment-1554115538

Thanks for your attention @virajjasani


[GitHub] [ambari] timyuer commented on pull request #3677: AMBARI-25894: Missing file service_advisor.py in some services

Posted by "timyuer (via GitHub)" <gi...@apache.org>.

timyuer commented on PR #3677:

URL: https://github.com/apache/ambari/pull/3677#issuecomment-1563696976

Could we consider moving the HIVE users into the `getServiceHadoopProxyUsersConfigurationDict` method, so that all proxy users are configured in one unified place?

https://github.com/smallyao/ambari/blob/b152e84fbf77eafa34e0ade508ba9c475cd4d420/ambari-server/src/main/resources/stacks/stack_advisor.py#LL2675C1-L2688C1
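A sketch of what "one unified place" could look like: a single table from service to proxy-user entries, resolved by one generic loop instead of per-service blocks. The exact return format of `getServiceHadoopProxyUsersConfigurationDict` is not shown in this thread, so the table shape, `PROXY_USER_TABLE`, and `collect_proxy_users` are assumptions for illustration. The HIVE entries mirror the removed BIGTOP code (HiveServer2 plus HiveServer2 Interactive hosts for `hive_user`, WebHCat hosts for `webhcat_user`).

```python
# Hypothetical table: service name -> list of
# (config type, user property, components whose hosts are allowed for that
#  proxy user, or "*" to allow any host).
PROXY_USER_TABLE = {
    "HDFS": [("hadoop-env", "hdfs_user", "*")],
    "OOZIE": [("oozie-env", "oozie_user", ("OOZIE_SERVER",))],
    "HIVE": [
        ("hive-env", "hive_user", ("HIVE_SERVER", "HIVE_SERVER_INTERACTIVE")),
        ("hive-env", "webhcat_user", ("WEBHCAT_SERVER",)),
    ],
}


def collect_proxy_users(installed_services, configurations, component_hosts,
                        table=PROXY_USER_TABLE):
    """Return {user: comma-separated hosts or "*"} for every proxy user in table.

    configurations maps a config type to {property: value};
    component_hosts maps a component name to a list of host names.
    """
    users = {}
    for service in installed_services:
        for config_type, prop, components in table.get(service, []):
            user = configurations.get(config_type, {}).get(prop)
            # Skip unset users and keep the first entry for a user declared
            # more than once (like the old "not ... in users" checks).
            if user is None or user in users:
                continue
            if components == "*":
                users[user] = "*"
                continue
            hosts = []
            for component in components:
                for host in component_hosts.get(component, []):
                    if host not in hosts:  # de-duplicate, keep order
                        hosts.append(host)
            if hosts:  # users whose components have no hosts are skipped
                users[user] = ",".join(hosts)
    return users
```

Adding a new service's proxy users would then be a one-line table change rather than another hand-written block.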


[GitHub] [ambari] timyuer merged pull request #3677: AMBARI-25894: Missing file service_advisor.py in some services

Posted by "timyuer (via GitHub)" <gi...@apache.org>.

timyuer merged PR #3677:

URL: https://github.com/apache/ambari/pull/3677


[GitHub] [ambari] timyuer commented on a diff in pull request #3677: AMBARI-25894: Missing file service_advisor.py in some services

Posted by "timyuer (via GitHub)" <gi...@apache.org>.

timyuer commented on code in PR #3677:

URL: https://github.com/apache/ambari/pull/3677#discussion_r1206141661

##########

ambari-server/src/main/resources/stacks/BIGTOP/3.2.0/services/stack_advisor.py:

##########

@@ -1,1809 +0,0 @@
-#!/usr/bin/env ambari-python-wrap
-"""
-Licensed to the Apache Software Foundation (ASF) under one
-or more contributor license agreements. See the NOTICE file
-distributed with this work for additional information
-regarding copyright ownership. The ASF licenses this file
-to you under the Apache License, Version 2.0 (the
-"License"); you may not use this file except in compliance
-with the License. You may obtain a copy of the License at
-
-    http://www.apache.org/licenses/LICENSE-2.0
-
-Unless required by applicable law or agreed to in writing, software
-distributed under the License is distributed on an "AS IS" BASIS,
-WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
-See the License for the specific language governing permissions and
-limitations under the License.
-"""
-
-import re
-import os
-import sys
-import socket
-
-from math import ceil, floor
-
-from resource_management.libraries.functions.mounted_dirs_helper import get_mounts_with_multiple_data_dirs
-
-from stack_advisor import DefaultStackAdvisor
-
-
-class BIGTOP320StackAdvisor(DefaultStackAdvisor):
-
-  def __init__(self):
-    super(BIGTOP320StackAdvisor, self).__init__()
-    self.initialize_logger("BIGTOP320StackAdvisor")
-
-  def getComponentLayoutValidations(self, services, hosts):
-    """Returns array of Validation objects about issues with hostnames components assigned to"""
-    items = super(BIGTOP320StackAdvisor, self).getComponentLayoutValidations(services, hosts)
-
-    # Validating NAMENODE and SECONDARY_NAMENODE are on different hosts if possible
-    # Use a set for fast lookup
-    hostsSet = set(super(BIGTOP320StackAdvisor, self).getActiveHosts([host["Hosts"] for host in hosts["items"]])) #[host["Hosts"]["host_name"] for host in hosts["items"]]
-    hostsCount = len(hostsSet)
-
-    componentsListList = [service["components"] for service in services["services"]]
-    componentsList = [item for sublist in componentsListList for item in sublist]
-    nameNodeHosts = [component["StackServiceComponents"]["hostnames"] for component in componentsList if component["StackServiceComponents"]["component_name"] == "NAMENODE"]
-    secondaryNameNodeHosts = [component["StackServiceComponents"]["hostnames"] for component in componentsList if component["StackServiceComponents"]["component_name"] == "SECONDARY_NAMENODE"]
-
-    # Validating cardinality
-    for component in componentsList:
-      if component["StackServiceComponents"]["cardinality"] is not None:
-        componentName = component["StackServiceComponents"]["component_name"]
-        componentDisplayName = component["StackServiceComponents"]["display_name"]
-        componentHosts = []
-        if component["StackServiceComponents"]["hostnames"] is not None:
-          componentHosts = [componentHost for componentHost in component["StackServiceComponents"]["hostnames"] if componentHost in hostsSet]
-        componentHostsCount = len(componentHosts)
-        cardinality = str(component["StackServiceComponents"]["cardinality"])
-        # cardinality types: null, 1+, 1-2, 1, ALL
-        message = None
-        if "+" in cardinality:
-          hostsMin = int(cardinality[:-1])
-          if componentHostsCount < hostsMin:
-            message = "At least {0} {1} components should be installed in cluster.".format(hostsMin, componentDisplayName)
-        elif "-" in cardinality:
-          nums = cardinality.split("-")
-          hostsMin = int(nums[0])
-          hostsMax = int(nums[1])
-          if componentHostsCount > hostsMax or componentHostsCount < hostsMin:
-            message = "Between {0} and {1} {2} components should be installed in cluster.".format(hostsMin, hostsMax, componentDisplayName)
-        elif "ALL" == cardinality:
-          if componentHostsCount != hostsCount:
-            message = "{0} component should be installed on all hosts in cluster.".format(componentDisplayName)
-        else:
-          if componentHostsCount != int(cardinality):
-            message = "Exactly {0} {1} components should be installed in cluster.".format(int(cardinality), componentDisplayName)
-
-        if message is not None:
-          items.append({"type": 'host-component', "level": 'ERROR', "message": message, "component-name": componentName})
-
-    # Validating host-usage
-    usedHostsListList = [component["StackServiceComponents"]["hostnames"] for component in componentsList if not self.isComponentNotValuable(component)]
-    usedHostsList = [item for sublist in usedHostsListList for item in sublist]
-    nonUsedHostsList = [item for item in hostsSet if item not in usedHostsList]
-    for host in nonUsedHostsList:
-      items.append( { "type": 'host-component', "level": 'ERROR', "message": 'Host is not used', "host": str(host) } )
-
-    return items
-
-  def getServiceConfigurationRecommenderDict(self):
-    return {
-      "YARN": self.recommendYARNConfigurations,
-      "MAPREDUCE2": self.recommendMapReduce2Configurations,
-      "HDFS": self.recommendHDFSConfigurations,
-      "HBASE": self.recommendHbaseConfigurations,
-      "AMBARI_METRICS": self.recommendAmsConfigurations
-    }
-
-  def recommendYARNConfigurations(self, configurations, clusterData, services, hosts):
-    putYarnProperty = self.putProperty(configurations, "yarn-site", services)
-    putYarnPropertyAttribute = self.putPropertyAttribute(configurations, "yarn-site")
-    putYarnEnvProperty = self.putProperty(configurations, "yarn-env", services)
-    nodemanagerMinRam = 1048576 # 1TB in mb
-    if "referenceNodeManagerHost" in clusterData:
-      nodemanagerMinRam = min(clusterData["referenceNodeManagerHost"]["total_mem"]/1024, nodemanagerMinRam)
-    putYarnProperty('yarn.nodemanager.resource.memory-mb', int(round(min(clusterData['containers'] * clusterData['ramPerContainer'], nodemanagerMinRam))))
-    putYarnProperty('yarn.scheduler.minimum-allocation-mb', int(clusterData['ramPerContainer']))
-    putYarnProperty('yarn.scheduler.maximum-allocation-mb', int(configurations["yarn-site"]["properties"]["yarn.nodemanager.resource.memory-mb"]))
-    putYarnEnvProperty('min_user_id', self.get_system_min_uid())
-
-    sc_queue_name = self.recommendYarnQueue(services, "yarn-env", "service_check.queue.name")
-    if sc_queue_name is not None:
-      putYarnEnvProperty("service_check.queue.name", sc_queue_name)
-
-    containerExecutorGroup = 'hadoop'
-    if 'cluster-env' in services['configurations'] and 'user_group' in services['configurations']['cluster-env']['properties']:
-      containerExecutorGroup = services['configurations']['cluster-env']['properties']['user_group']
-    putYarnProperty("yarn.nodemanager.linux-container-executor.group", containerExecutorGroup)
-
-    servicesList = [service["StackServices"]["service_name"] for service in services["services"]]
-    if "TEZ" in servicesList:
-      ambari_user = self.getAmbariUser(services)
-      ambariHostName = socket.getfqdn()
-      putYarnProperty("yarn.timeline-service.http-authentication.proxyuser.{0}.hosts".format(ambari_user), ambariHostName)
-      putYarnProperty("yarn.timeline-service.http-authentication.proxyuser.{0}.groups".format(ambari_user), "*")
-      old_ambari_user = self.getOldAmbariUser(services)
-      if old_ambari_user is not None:
-        putYarnPropertyAttribute("yarn.timeline-service.http-authentication.proxyuser.{0}.hosts".format(old_ambari_user), 'delete', 'true')
-        putYarnPropertyAttribute("yarn.timeline-service.http-authentication.proxyuser.{0}.groups".format(old_ambari_user), 'delete', 'true')
-
-
-  def recommendMapReduce2Configurations(self, configurations, clusterData, services, hosts):
-    putMapredProperty = self.putProperty(configurations, "mapred-site", services)
-    putMapredProperty('yarn.app.mapreduce.am.resource.mb', int(clusterData['amMemory']))
-    putMapredProperty('yarn.app.mapreduce.am.command-opts', "-Xmx" + str(int(round(0.8 * clusterData['amMemory']))) + "m")
-    putMapredProperty('mapreduce.map.memory.mb', clusterData['mapMemory'])
-    putMapredProperty('mapreduce.reduce.memory.mb', int(clusterData['reduceMemory']))
-    putMapredProperty('mapreduce.map.java.opts', "-Xmx" + str(int(round(0.8 * clusterData['mapMemory']))) + "m")
-    putMapredProperty('mapreduce.reduce.java.opts', "-Xmx" + str(int(round(0.8 * clusterData['reduceMemory']))) + "m")
-    putMapredProperty('mapreduce.task.io.sort.mb', min(int(round(0.4 * clusterData['mapMemory'])), 1024))
-    mr_queue = self.recommendYarnQueue(services, "mapred-site", "mapreduce.job.queuename")
-    if mr_queue is not None:
-      putMapredProperty("mapreduce.job.queuename", mr_queue)
-
-  def getAmbariUser(self, services):
-    ambari_user = services['ambari-server-properties']['ambari-server.user']
-    if "cluster-env" in services["configurations"] \
-        and "ambari_principal_name" in services["configurations"]["cluster-env"]["properties"] \
-        and "security_enabled" in services["configurations"]["cluster-env"]["properties"] \
-        and services["configurations"]["cluster-env"]["properties"]["security_enabled"].lower() == "true":
-      ambari_user = services["configurations"]["cluster-env"]["properties"]["ambari_principal_name"]
-      ambari_user = ambari_user.split('@')[0]
-    return ambari_user
-
-  def getOldAmbariUser(self, services):
-    ambari_user = None
-    if "cluster-env" in services["configurations"]:
-      if "security_enabled" in services["configurations"]["cluster-env"]["properties"] \
-          and services["configurations"]["cluster-env"]["properties"]["security_enabled"].lower() == "true":
-        ambari_user = services['ambari-server-properties']['ambari-server.user']
-      elif "ambari_principal_name" in services["configurations"]["cluster-env"]["properties"]:
-        ambari_user = services["configurations"]["cluster-env"]["properties"]["ambari_principal_name"]
-        ambari_user = ambari_user.split('@')[0]
-    return ambari_user
-
-  def recommendAmbariProxyUsersForHDFS(self, services, servicesList, putCoreSiteProperty, putCoreSitePropertyAttribute):
-    if "HDFS" in servicesList:
-      ambari_user = self.getAmbariUser(services)
-      ambariHostName = socket.getfqdn()
-      putCoreSiteProperty("hadoop.proxyuser.{0}.hosts".format(ambari_user), ambariHostName)
-      putCoreSiteProperty("hadoop.proxyuser.{0}.groups".format(ambari_user), "*")
-      old_ambari_user = self.getOldAmbariUser(services)
-      if old_ambari_user is not None:
-        putCoreSitePropertyAttribute("hadoop.proxyuser.{0}.hosts".format(old_ambari_user), 'delete', 'true')
-        putCoreSitePropertyAttribute("hadoop.proxyuser.{0}.groups".format(old_ambari_user), 'delete', 'true')
-
-  def recommendHadoopProxyUsers (self, configurations, services, hosts):
-    servicesList = [service["StackServices"]["service_name"] for service in services["services"]]
-    users = {}
-
-    if 'forced-configurations' not in services:
-      services["forced-configurations"] = []
-
-    if "HDFS" in servicesList:
-      hdfs_user = None
-      if "hadoop-env" in services["configurations"] and "hdfs_user" in services["configurations"]["hadoop-env"]["properties"]:
-        hdfs_user = services["configurations"]["hadoop-env"]["properties"]["hdfs_user"]
-        if not hdfs_user in users and hdfs_user is not None:
-          users[hdfs_user] = {"propertyHosts" : "*","propertyGroups" : "*", "config" : "hadoop-env", "propertyName" : "hdfs_user"}
-
-    if "OOZIE" in servicesList:
-      oozie_user = None
-      if "oozie-env" in services["configurations"] and "oozie_user" in services["configurations"]["oozie-env"]["properties"]:
-        oozie_user = services["configurations"]["oozie-env"]["properties"]["oozie_user"]
-        oozieServerrHosts = self.getHostsWithComponent("OOZIE", "OOZIE_SERVER", services, hosts)
-        if oozieServerrHosts is not None:
-          oozieServerHostsNameList = []
-          for oozieServerHost in oozieServerrHosts:
-            oozieServerHostsNameList.append(oozieServerHost["Hosts"]["host_name"])
-          oozieServerHostsNames = ",".join(oozieServerHostsNameList)
-          if not oozie_user in users and oozie_user is not None:
-            users[oozie_user] = {"propertyHosts" : oozieServerHostsNames,"propertyGroups" : "*", "config" : "oozie-env", "propertyName" : "oozie_user"}
-
-    hive_user = None
-    if "HIVE" in servicesList:
-      webhcat_user = None
-      if "hive-env" in services["configurations"] and "hive_user" in services["configurations"]["hive-env"]["properties"] \
-          and "webhcat_user" in services["configurations"]["hive-env"]["properties"]:
-        hive_user = services["configurations"]["hive-env"]["properties"]["hive_user"]
-        webhcat_user = services["configurations"]["hive-env"]["properties"]["webhcat_user"]
-        hiveServerHosts = self.getHostsWithComponent("HIVE", "HIVE_SERVER", services, hosts)
-        hiveServerInteractiveHosts = self.getHostsWithComponent("HIVE", "HIVE_SERVER_INTERACTIVE", services, hosts)
-        webHcatServerHosts = self.getHostsWithComponent("HIVE", "WEBHCAT_SERVER", services, hosts)
-
-        if hiveServerHosts is not None:
-          hiveServerHostsNameList = []
-          for hiveServerHost in hiveServerHosts:
-            hiveServerHostsNameList.append(hiveServerHost["Hosts"]["host_name"])
-          # Append Hive Server Interactive host as well, as it is Hive2/HiveServer2 component.
-          if hiveServerInteractiveHosts:
-            for hiveServerInteractiveHost in hiveServerInteractiveHosts:
-              hiveServerInteractiveHostName = hiveServerInteractiveHost["Hosts"]["host_name"]
-              if hiveServerInteractiveHostName not in hiveServerHostsNameList:
-                hiveServerHostsNameList.append(hiveServerInteractiveHostName)
-                self.logger.info("Appended (if not exiting), Hive Server Interactive Host : '{0}', to Hive Server Host List : '{1}'".format(hiveServerInteractiveHostName, hiveServerHostsNameList))
-
-          hiveServerHostsNames = ",".join(hiveServerHostsNameList) # includes Hive Server interactive host also.
-          self.logger.info("Hive Server and Hive Server Interactive (if enabled) Host List : {0}".format(hiveServerHostsNameList))
-          if not hive_user in users and hive_user is not None:
-            users[hive_user] = {"propertyHosts" : hiveServerHostsNames,"propertyGroups" : "*", "config" : "hive-env", "propertyName" : "hive_user"}
-
-        if webHcatServerHosts is not None:
-          webHcatServerHostsNameList = []
-          for webHcatServerHost in webHcatServerHosts:
-            webHcatServerHostsNameList.append(webHcatServerHost["Hosts"]["host_name"])
-          webHcatServerHostsNames = ",".join(webHcatServerHostsNameList)
-          if not webhcat_user in users and webhcat_user is not None:
-            users[webhcat_user] = {"propertyHosts" : webHcatServerHostsNames,"propertyGroups" : "*", "config" : "hive-env", "propertyName" : "webhcat_user"}

Review Comment:

I couldn't find where the `webhcat_user` proxy user is set up like this in the new code.
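In the removed BIGTOP 3.2.0 advisor, `webhcat_user` goes into the same `users` dictionary as `hive_user`, with its hosts taken from the WEBHCAT_SERVER component. The rest of that method is not quoted here. Assuming each entry ends up as `hadoop.proxyuser.<user>.hosts` / `.groups` in core-site (the property pattern used by `recommendAmbariProxyUsersForHDFS` in the diff above), a reviewer could check the new advisor's output with hypothetical helpers like these. They are not Ambari APIs.

```python
def proxyuser_properties(users):
    """Map {user: {"propertyHosts": ..., "propertyGroups": ...}} to
    core-site hadoop.proxyuser.<user>.hosts / .groups properties."""
    props = {}
    for user, spec in users.items():
        props["hadoop.proxyuser.{0}.hosts".format(user)] = spec["propertyHosts"]
        props["hadoop.proxyuser.{0}.groups".format(user)] = spec["propertyGroups"]
    return props


def missing_proxy_users(expected_users, props):
    """Return the users in expected_users with no hadoop.proxyuser.<user>.hosts entry."""
    return [user for user in expected_users
            if "hadoop.proxyuser.{0}.hosts".format(user) not in props]
```

For example, feeding the new advisor's recommended core-site properties and `["hive", "hcat"]` (assuming those are the configured `hive_user` and `webhcat_user`) to `missing_proxy_users` would return `["hcat"]` if the WebHCat proxy user is no longer set.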

##########

ambari-server/src/main/resources/stacks/BIGTOP/3.2.0/services/stack_advisor.py:

##########

@@ -1,1809 +0,0 @@


-

- hiveServerHostsNames = ",".join(hiveServerHostsNameList) # includes Hive Server interactive host also.

- self.logger.info("Hive Server and Hive Server Interactive (if enabled) Host List : {0}".format(hiveServerHostsNameList))

- if not hive_user in users and hive_user is not None:

- users[hive_user] = {"propertyHosts" : hiveServerHostsNames,"propertyGroups" : "*", "config" : "hive-env", "propertyName" : "hive_user"}

-

- if webHcatServerHosts is not None:

- webHcatServerHostsNameList = []

- for webHcatServerHost in webHcatServerHosts:

- webHcatServerHostsNameList.append(webHcatServerHost["Hosts"]["host_name"])

- webHcatServerHostsNames = ",".join(webHcatServerHostsNameList)

- if not webhcat_user in users and webhcat_user is not None:

- users[webhcat_user] = {"propertyHosts" : webHcatServerHostsNames,"propertyGroups" : "*", "config" : "hive-env", "propertyName" : "webhcat_user"}

Review Comment:

Oops, I found it in stack_advisor.py.
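The removed hunk quoted in this review applies one pattern to each service. It skips a user that is unset or already recorded, joins the host names of the relevant component(s) into a comma-separated list, and stores the result in a `users` map. `recommendAmbariProxyUsersForHDFS` shows how such values end up as `hadoop.proxyuser.<user>.hosts` and `hadoop.proxyuser.<user>.groups` in core-site. The sketch below restates that pattern as plain Python under stated assumptions. The helper names `add_proxy_user`, `join_host_names` and `proxyuser_properties` are hypothetical. Host records are assumed to have the `{"Hosts": {"host_name": ...}}` shape the hunk reads. Properties are returned as a dict rather than written through Ambari's `putCoreSiteProperty` callback. How the real advisor writes out the `users` map is not part of the quoted hunk.

```python
from typing import Dict, Iterable, List, Optional


def add_proxy_user(users: Dict[str, dict], user: Optional[str], hosts: str,
                   config: str, property_name: str) -> None:
    """Record a proxy user the way the quoted hunk does (hypothetical helper).

    An unset user (None) is ignored, and the first entry for a user name wins,
    so a later service sharing the same account does not overwrite it.
    """
    if user is None or user in users:
        return
    users[user] = {"propertyHosts": hosts, "propertyGroups": "*",
                   "config": config, "propertyName": property_name}


def join_host_names(primary: Optional[Iterable[dict]],
                    extra: Optional[Iterable[dict]] = None) -> Optional[str]:
    """Comma-join host names from component host records (hypothetical helper).

    Modeled on the HIVE_SERVER / HIVE_SERVER_INTERACTIVE merge in the hunk:
    returns None when the primary component has no host list, and skips
    duplicate names so a host running both components is listed once.
    """
    if primary is None:
        return None
    names: List[str] = []
    for record in list(primary) + list(extra or []):
        name = record["Hosts"]["host_name"]
        if name not in names:
            names.append(name)
    return ",".join(names)


def proxyuser_properties(users: Dict[str, dict]) -> Dict[str, str]:
    """Expand the users map into core-site proxy-user properties."""
    props: Dict[str, str] = {}
    for user_name, entry in users.items():
        props["hadoop.proxyuser.{0}.hosts".format(user_name)] = entry["propertyHosts"]
        props["hadoop.proxyuser.{0}.groups".format(user_name)] = entry["propertyGroups"]
    return props


# Example: HDFS may proxy from any host; Hive only from its server hosts.
users: Dict[str, dict] = {}
add_proxy_user(users, "hdfs", "*", "hadoop-env", "hdfs_user")
hive_hosts = join_host_names(
    [{"Hosts": {"host_name": "hs2.example.com"}}],
    [{"Hosts": {"host_name": "hs2.example.com"}},
     {"Hosts": {"host_name": "hsi.example.com"}}])
add_proxy_user(users, "hive", hive_hosts, "hive-env", "hive_user")
print(proxyuser_properties(users))
```

Keeping the first entry per user name matters when several services run under one account. The account then gets a single, predictable host list instead of whichever service happened to be processed last.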

[GitHub] [ambari] timyuer commented on pull request #3677: AMBARI-25894: Missing file service_advisor.py in some serivces

Posted by "timyuer (via GitHub)" <gi...@apache.org>.

timyuer commented on PR #3677:

URL: https://github.com/apache/ambari/pull/3677#issuecomment-1584017338

@smallyao

+1, thank you for your work.

[GitHub] [ambari] virajjasani commented on pull request #3677: AMBARI-25894: Missing file service_advisor.py in some serivces

Posted by "virajjasani (via GitHub)" <gi...@apache.org>.

virajjasani commented on PR #3677:

URL: https://github.com/apache/ambari/pull/3677#issuecomment-1554087741

Sorry @smallyao, the changes look good to me, but some of them are outside my area, so it would be difficult for me to review the complete PR.

[GitHub] [ambari] smallyao commented on pull request #3677: AMBARI-25894: Missing file service_advisor.py in some serivces

Posted by "smallyao (via GitHub)" <gi...@apache.org>.

smallyao commented on PR #3677:

URL: https://github.com/apache/ambari/pull/3677#issuecomment-1554104382

>  In YARN's service_advisor.py, the Java option 'hdp.version' was added to the MR task options, which caused the MR tasks to fail to run. ` /hadoop/yarn/local/usercache/ambari-qa/appcache/application_1682216948797_0003/container_1682216948797_0003_02_000001/launch_container.sh: line 57: $JAVA_HOME/bin/java -Djava.io.tmpdir=$PWD/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/data1/hadoop/yarn/log/application_1682216948797_0003/container_1682216948797_0003_02_000001 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog -server -XX:NewRatio=8 -Djava.net.preferIPv4Stack=true -Dhadoop.metrics.log.level=WARN -Xmx1638m -Dhdp.version=${hdp.version} org.apache.hadoop.mapreduce.v2.app.MRAppMaster 1>/data1/hadoop/yarn/log/application_1682216948797_0003/container_1682216948797_0003_02_000001/stdout 2>/data1/hadoop/yarn/log/application_1682216948797_0003/container_1682216948797_0003_02_000001/stderr : bad substitution`

>

> Because the 'hdp.version' variable referenced in this command does not exist in the Bigtop stack, I recommend updating this commit to remove 'hdp.version'.
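The failure is reported by bash rather than by Java. As far as the log shows, `${hdp.version}` reaches `launch_container.sh` unexpanded. Inside `${...}`, bash only accepts a parameter name made of letters, digits and underscores, or a positional or special parameter. The dot in `hdp.version` therefore makes it abort with a "bad substitution" error before the JVM is launched. A rough sketch of a checker that flags such placeholders in a command line is shown below. `find_bad_substitutions` is a hypothetical helper, not part of Ambari or Hadoop, and it only approximates bash's rules rather than parsing them fully.

```python
import re
from typing import List

# Braced expansions such as ${JAVA_HOME} or ${hdp.version} (no nesting).
_BRACED = re.compile(r"\$\{([^}]*)\}")

# Roughly what bash accepts inside ${...}: a name (optionally prefixed by '#'
# for length or '!' for indirection), a positional number, or a special
# parameter, followed by the end or by an expansion operator such as ':-',
# '#', '%' or '/'. Anything else, e.g. the '.' in "hdp.version", makes bash
# report "bad substitution".
_ACCEPTED = re.compile(
    r"(?:[#!]?[A-Za-z_][A-Za-z0-9_]*|[0-9]+|[@*#?$!-])(?:$|[:=+?#%/^,-])")


def find_bad_substitutions(command: str) -> List[str]:
    """Return the ${...} expansions in `command` that bash would likely reject."""
    return [m.group(0) for m in _BRACED.finditer(command)
            if not _ACCEPTED.match(m.group(1))]


cmd = ("$JAVA_HOME/bin/java -Djava.io.tmpdir=$PWD/tmp -Xmx1638m "
       "-Dhdp.version=${hdp.version} "
       "org.apache.hadoop.mapreduce.v2.app.MRAppMaster")
print(find_bad_substitutions(cmd))  # ['${hdp.version}']
```

Unbraced references such as `$JAVA_HOME` and `$PWD` are not inspected. Bash reads them as the longest valid name, so they never produce this particular error.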

@JiaLiangC Thanks for the correction; "-Dhdp.version" has been removed.
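With the option removed, the recommended MapReduce ApplicationMaster options reduce to a plain heap setting. The sketch below assumes that the advisor sets `yarn.app.mapreduce.am.command-opts` in mapred-site to 80% of `yarn.app.mapreduce.am.resource.mb`, as HDP-era stack advisors did. This is not confirmed for this PR. Under that assumption, a 2048 MB AM container would give the `-Xmx1638m` that appears in the log above. `recommend_am_command_opts` and its `include_hdp_version` flag are hypothetical and exist only to contrast the two outputs.

```python
def recommend_am_command_opts(am_resource_mb: int,
                              include_hdp_version: bool = False) -> str:
    """Hypothetical sketch of the MR ApplicationMaster JVM options.

    Assumes the heap is 80% of yarn.app.mapreduce.am.resource.mb. The
    "-Dhdp.version=${hdp.version}" suffix only resolves where hdp.version is
    defined (HDP); per the review discussion it is undefined on Bigtop and
    reaches launch_container.sh unexpanded, so it is off by default here.
    """
    heap_mb = int(0.8 * am_resource_mb)  # 2048 -> 1638
    opts = "-Xmx{0}m".format(heap_mb)
    if include_hdp_version:
        # Plain concatenation: calling .format() here would treat the braces as a field.
        opts += " -Dhdp.version=${hdp.version}"
    return opts


mapred_site = {
    "yarn.app.mapreduce.am.command-opts": recommend_am_command_opts(2048),
}
print(mapred_site)  # {'yarn.app.mapreduce.am.command-opts': '-Xmx1638m'}
```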

[GitHub] [ambari] timyuer commented on pull request #3677: AMBARI-25894: Missing file service_advisor.py in some serivces

Posted by "timyuer (via GitHub)" <gi...@apache.org>.

timyuer commented on PR #3677:

URL: https://github.com/apache/ambari/pull/3677#issuecomment-1584016857

After applying this PR, the services were set up successfully.
