
Posted to reviews@spark.apache.org by GitBox <gi...@apache.org> on 2019/05/23 04:08:53 UTC

[GitHub] [spark] zhengruifeng commented on issue #24648: [SPARK-27777][ML] Eliminate unnecessary sliding job in AreaUnderCurve

zhengruifeng commented on issue #24648: [SPARK-27777][ML] Eliminate unnecessary sliding job in AreaUnderCurve

URL: https://github.com/apache/spark/pull/24648#issuecomment-495060029

@srowen Oh, it is not a pass. My wording was not accurate.

Sliding needs a separate job to collect the head rows of each partition, and that job can be eliminated.
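To illustrate the idea, here is a minimal sketch in plain Python. It is not the Spark code and not necessarily how the PR implements it; the function names are hypothetical and nested lists stand in for RDD partitions. The first function mimics a sliding window that first has to gather every partition's head row; the second lets each partition report its local area plus its first and last point, so only the boundaries are stitched afterwards.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def trapezoid(p: Point, q: Point) -> float:
    """Area under the straight segment joining p and q."""
    return (q[0] - p[0]) * (p[1] + q[1]) / 2.0


def auc_with_sliding(partitions: List[List[Point]]) -> float:
    # Stand-in for the separate job: gather the head row of every partition.
    heads: List[Optional[Point]] = [part[0] if part else None for part in partitions]
    total = 0.0
    for i, part in enumerate(partitions):
        # Borrow the head of the next non-empty partition so that the
        # size-2 windows can cross the partition boundary.
        nxt = next((h for h in heads[i + 1:] if h is not None), None)
        rows = part + ([nxt] if nxt is not None and part else [])
        total += sum(trapezoid(a, b) for a, b in zip(rows, rows[1:]))
    return total


def auc_single_pass(partitions: List[List[Point]]) -> float:
    # Each non-empty partition reports (local area, first point, last point).
    summaries = []
    for part in partitions:
        if part:
            local = sum(trapezoid(a, b) for a, b in zip(part, part[1:]))
            summaries.append((local, part[0], part[-1]))
    total = sum(s[0] for s in summaries)
    # Stitch neighbouring partitions using only their end points.
    total += sum(trapezoid(prev[2], cur[1])
                 for prev, cur in zip(summaries, summaries[1:]))
    return total
```

Both functions return the same area for the same partitioned curve. The second one never needs the head rows up front, which is the part that costs a separate job.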

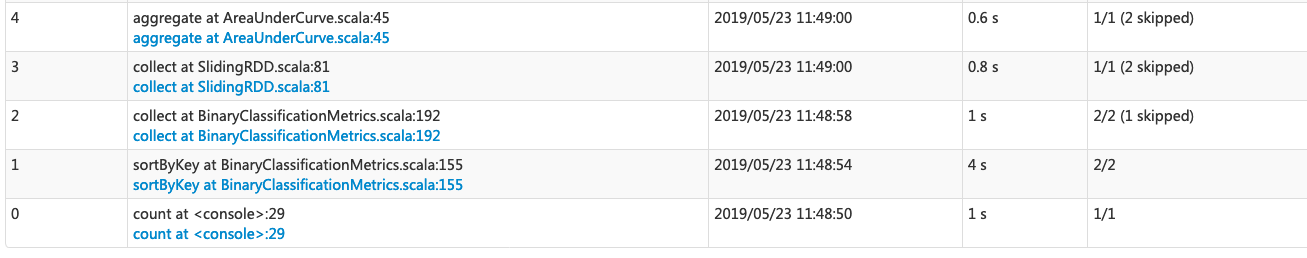

When the number of points is small, e.g. 1000, the difference is tiny.

As shown in the first figure, only 0.8 sec is saved.

Several reasons can result in more points on the curve:

1. When I want a more accurate score.

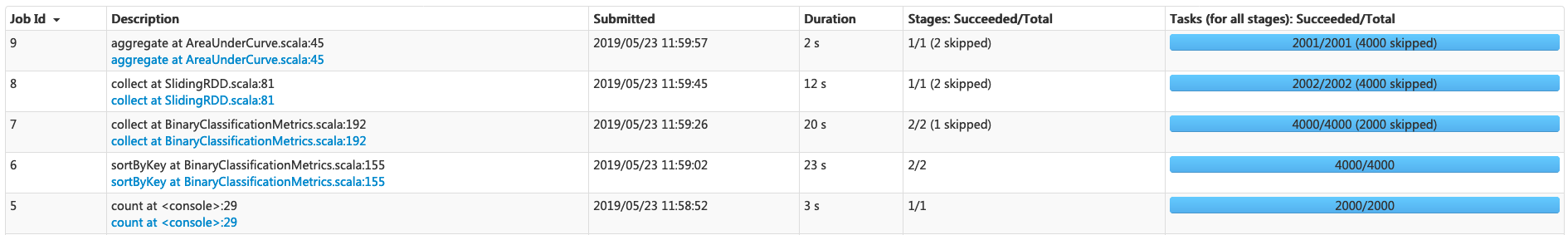

2. If we evaluate on a big dataset, the number of points easily exceeds 1000 even if we set `numBins`=1000, because the grouping in the curve is limited to within partitions, i.e. each partition will contain at least one point. In many practical cases there are tens of thousands of partitions, so there are tens of thousands of points.

As shown in the second figure, we set `numBins` to its default value and repartition the input data into 2000 partitions. The sliding job then takes 12 sec, which is much longer than the computation time of the AUC itself (2 sec).
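The effect of per-partition grouping on the number of points can be sketched as follows. This is a simplified model in plain Python, not the Spark code: the function name is hypothetical, and it assumes the group size is the total row count divided by `numBins` and that groups never span partitions.

```python
import math
from typing import List


def points_after_binning(partition_sizes: List[int], num_bins: int) -> int:
    """Estimate how many curve points remain after down-sampling."""
    total = sum(partition_sizes)
    grouping = total // num_bins  # rows merged into one point (assumed rule)
    if grouping < 2:
        return total  # too few rows to down-sample at all
    # Groups are formed inside each partition, so every non-empty
    # partition emits at least one point.
    return sum(math.ceil(size / grouping) for size in partition_sizes)
```

Under this model, one million rows in 10 partitions with `num_bins=1000` give 1000 points, while the same one million rows spread over 20000 partitions give 20000 points, one per partition.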
