Posted to issues@flink.apache.org by GitBox <gi...@apache.org> on 2022/11/22 09:39:49 UTC

[GitHub] [flink-connector-aws] dannycranmer opened a new pull request, #26: [FLINK-25859][Connectors/DynamoDB][docs] DataStream sink documentation

dannycranmer opened a new pull request, #26:

URL: https://github.com/apache/flink-connector-aws/pull/26

## What is the purpose of the change

- Add documentation for the DynamoDB DataStream sink

## Verifying this change

- Manually copied into the `flink-web` project and built from source

## Does this pull request potentially affect one of the following parts:

* Dependencies (does it add or upgrade a dependency): no

* The public API, i.e., is any changed class annotated with `@Public(Evolving)`: no

* The serializers: no

* The runtime per-record code paths (performance sensitive): no

* Anything that affects deployment or recovery: JobManager (and its components), Checkpointing, Kubernetes/Yarn, ZooKeeper: no

## Documentation

* Does this pull request introduce a new feature? no

* If yes, how is the feature documented?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscribe@flink.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [flink-connector-aws] hlteoh37 commented on a diff in pull request #26: [FLINK-25859][Connectors/DynamoDB][docs] DynamoDB sink documentation

Posted by GitBox <gi...@apache.org>.

hlteoh37 commented on code in PR #26:

URL: https://github.com/apache/flink-connector-aws/pull/26#discussion_r1030171950

##########

docs/content.zh/docs/connectors/table/dynamodb.md:

##########

@@ -0,0 +1,284 @@

+---

+title: Firehose

+weight: 5

+type: docs

+aliases:

+- /dev/table/connectors/dynamodb.html

+---

+

+<!--

+Licensed to the Apache Software Foundation (ASF) under one

+or more contributor license agreements. See the NOTICE file

+distributed with this work for additional information

+regarding copyright ownership. The ASF licenses this file

+to you under the Apache License, Version 2.0 (the

+"License"); you may not use this file except in compliance

+with the License. You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+Unless required by applicable law or agreed to in writing,

+software distributed under the License is distributed on an

+"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+KIND, either express or implied. See the License for the

+specific language governing permissions and limitations

+under the License.

+-->

+

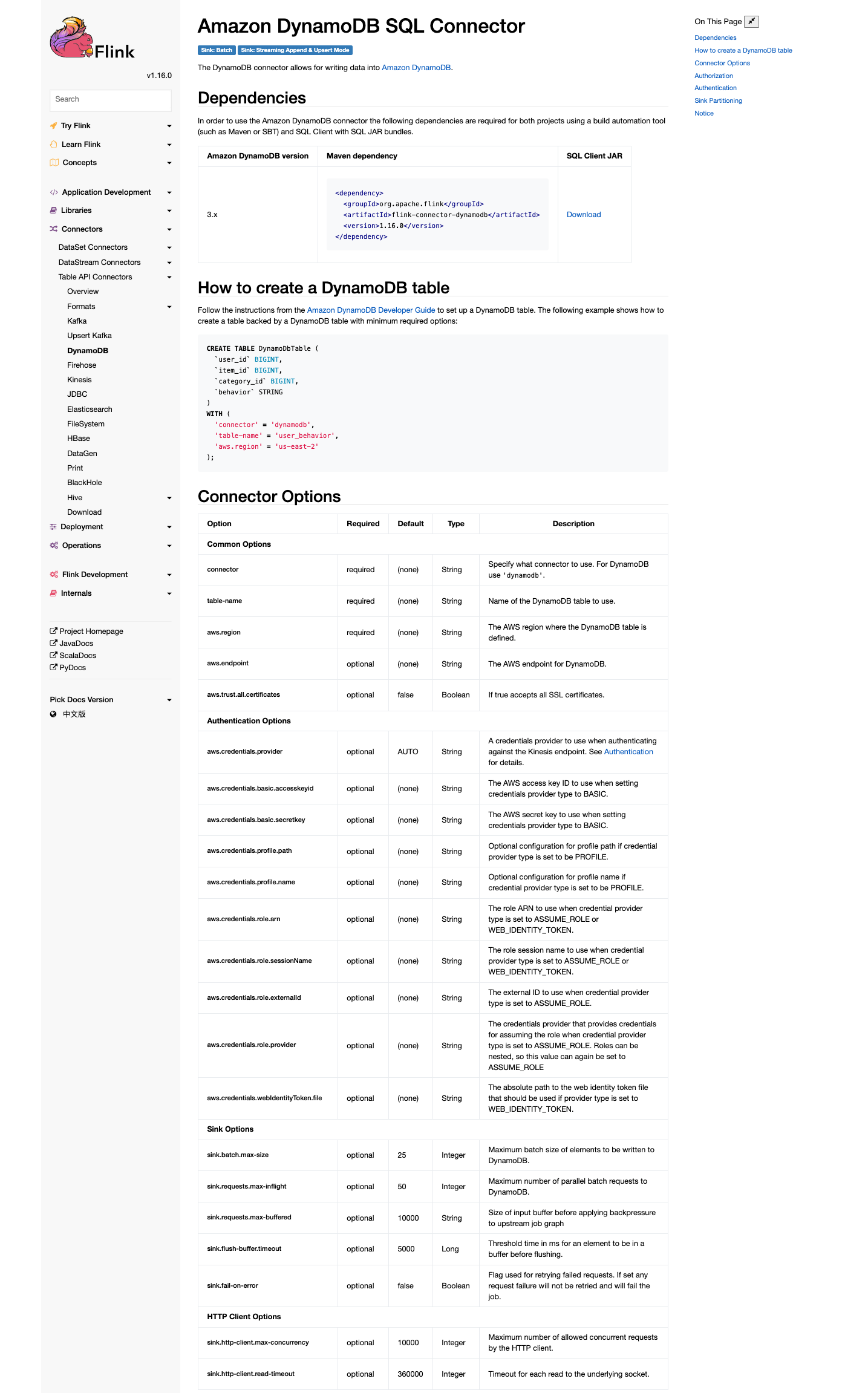

+# Amazon DynamoDB SQL Connector

+

+{{< label "Sink: Streaming Append & Upsert Mode" >}}

+

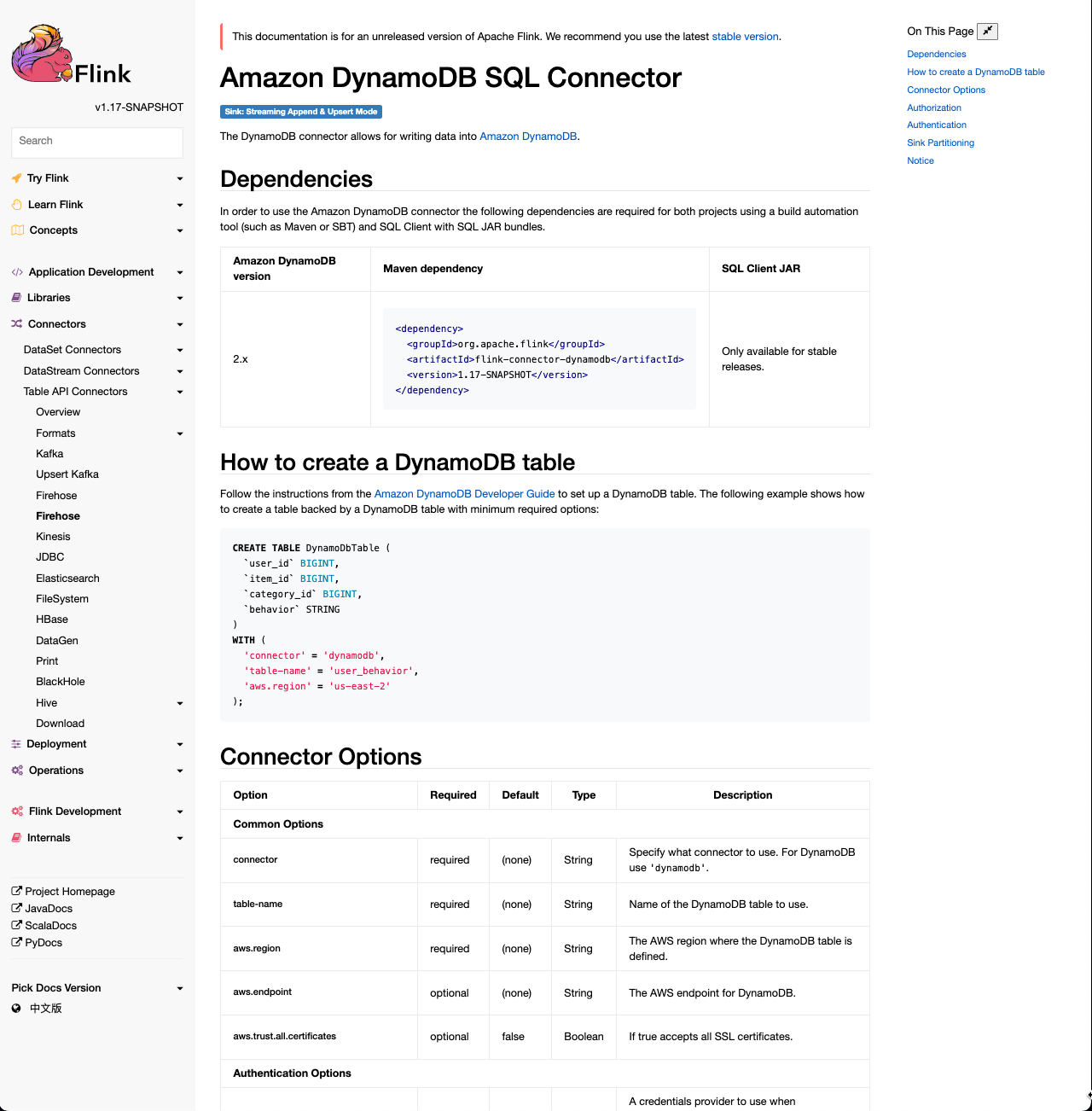

+The DynamoDB connector allows for writing data into [Amazon DynamoDB](https://aws.amazon.com/dynamodb).

+

+Dependencies

+------------

+

+{{< sql_download_table "dynamodb" >}}

+

+How to create a DynamoDB table

+-----------------------------------------

+

+Follow the instructions from the [Amazon DynamoDB Developer Guide](https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/getting-started-step-1.html)

+to set up a DynamoDB table. The following example shows how to create a table backed by a DynamoDB table with minimum required options:

+

+```sql

+CREATE TABLE DynamoDbTable (

+ `user_id` BIGINT,

+ `item_id` BIGINT,

+ `category_id` BIGINT,

+ `behavior` STRING

+)

+WITH (

+ 'connector' = 'dynamodb',

+ 'table-name' = 'user_behavior',

+ 'aws.region' = 'us-east-2'

+);

+```

+

+Connector Options

+-----------------

+

+<table class="table table-bordered">

+ <thead>

+ <tr>

+ <th class="text-left" style="width: 25%">Option</th>

+ <th class="text-center" style="width: 8%">Required</th>

+ <th class="text-center" style="width: 7%">Default</th>

+ <th class="text-center" style="width: 10%">Type</th>

+ <th class="text-center" style="width: 50%">Description</th>

+ </tr>

+ <tr>

+ <th colspan="5" class="text-left" style="width: 100%">Common Options</th>

+ </tr>

+ </thead>

+ <tbody>

+ <tr>

+ <td><h5>connector</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Specify what connector to use. For DynamoDB use <code>'dynamodb'</code>.</td>

+ </tr>

+ <tr>

+ <td><h5>table-name</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Name of the DynamoDB table to use.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.region</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS region where the DynamoDB table is defined.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.endpoint</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS endpoint for DynamoDB.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.trust.all.certificates</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">false</td>

+ <td>Boolean</td>

+ <td>If true accepts all SSL certificates.</td>

+ </tr>

+ </tbody>

+ <thead>

+ <tr>

+ <th colspan="5" class="text-left" style="width: 100%">Authentication Options</th>

+ </tr>

+ </thead>

+ <tbody>

+ <tr>

+ <td><h5>aws.credentials.provider</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">AUTO</td>

+ <td>String</td>

+ <td>A credentials provider to use when authenticating against the Kinesis endpoint. See <a href="#authentication">Authentication</a> for details.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.basic.accesskeyid</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS access key ID to use when setting credentials provider type to BASIC.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.basic.secretkey</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS secret key to use when setting credentials provider type to BASIC.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.profile.path</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Optional configuration for profile path if credential provider type is set to be PROFILE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.profile.name</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Optional configuration for profile name if credential provider type is set to be PROFILE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.arn</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The role ARN to use when credential provider type is set to ASSUME_ROLE or WEB_IDENTITY_TOKEN.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.sessionName</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The role session name to use when credential provider type is set to ASSUME_ROLE or WEB_IDENTITY_TOKEN.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.externalId</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The external ID to use when credential provider type is set to ASSUME_ROLE.</td>

+ </tr>

Review Comment:

We're missing the `aws.credentials.role.stsEndpoint` option here.
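
For illustration, a sketch of how the option might appear next to the other `ASSUME_ROLE` settings listed in the table above. The option name is taken from this comment. The role ARN, session name, and STS endpoint URL are hypothetical placeholders, and whether the connector accepts the endpoint in exactly this form is an assumption.

```sql
-- Sketch only: all credential values below are hypothetical placeholders.
CREATE TABLE DynamoDbTable (
  `user_id` BIGINT,
  `item_id` BIGINT,
  `category_id` BIGINT,
  `behavior` STRING
)
WITH (
  'connector' = 'dynamodb',
  'table-name' = 'user_behavior',
  'aws.region' = 'us-east-2',
  -- Assume a role instead of relying on the AUTO provider chain.
  'aws.credentials.provider' = 'ASSUME_ROLE',
  'aws.credentials.role.arn' = 'arn:aws:iam::123456789012:role/example-role',
  'aws.credentials.role.sessionName' = 'example-session',
  -- The option this comment asks to document (assumed to take an endpoint URL).
  'aws.credentials.role.stsEndpoint' = 'https://sts.us-east-2.amazonaws.com'
);
```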

##########

docs/content.zh/docs/connectors/table/dynamodb.md:

##########

@@ -0,0 +1,284 @@

+---

+title: Firehose

+weight: 5

+type: docs

+aliases:

+- /dev/table/connectors/dynamodb.html

+---

+

+<!--

+Licensed to the Apache Software Foundation (ASF) under one

+or more contributor license agreements. See the NOTICE file

+distributed with this work for additional information

+regarding copyright ownership. The ASF licenses this file

+to you under the Apache License, Version 2.0 (the

+"License"); you may not use this file except in compliance

+with the License. You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+Unless required by applicable law or agreed to in writing,

+software distributed under the License is distributed on an

+"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+KIND, either express or implied. See the License for the

+specific language governing permissions and limitations

+under the License.

+-->

+

+# Amazon DynamoDB SQL Connector

+

+{{< label "Sink: Streaming Append & Upsert Mode" >}}

+

+The DynamoDB connector allows for writing data into [Amazon DynamoDB](https://aws.amazon.com/dynamodb).

+

+Dependencies

+------------

+

+{{< sql_download_table "dynamodb" >}}

+

+How to create a DynamoDB table

+-----------------------------------------

+

+Follow the instructions from the [Amazon DynamoDB Developer Guide](https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/getting-started-step-1.html)

+to set up a DynamoDB table. The following example shows how to create a table backed by a DynamoDB table with minimum required options:

+

+```sql

+CREATE TABLE DynamoDbTable (

+ `user_id` BIGINT,

+ `item_id` BIGINT,

+ `category_id` BIGINT,

+ `behavior` STRING

+)

+WITH (

+ 'connector' = 'dynamodb',

+ 'table-name' = 'user_behavior',

+ 'aws.region' = 'us-east-2'

+);

+```

+

+Connector Options

+-----------------

+

+<table class="table table-bordered">

+ <thead>

+ <tr>

+ <th class="text-left" style="width: 25%">Option</th>

+ <th class="text-center" style="width: 8%">Required</th>

+ <th class="text-center" style="width: 7%">Default</th>

+ <th class="text-center" style="width: 10%">Type</th>

+ <th class="text-center" style="width: 50%">Description</th>

+ </tr>

+ <tr>

+ <th colspan="5" class="text-left" style="width: 100%">Common Options</th>

+ </tr>

+ </thead>

+ <tbody>

+ <tr>

+ <td><h5>connector</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Specify what connector to use. For DynamoDB use <code>'dynamodb'</code>.</td>

+ </tr>

+ <tr>

+ <td><h5>table-name</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Name of the DynamoDB table to use.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.region</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS region where the DynamoDB table is defined.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.endpoint</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS endpoint for DynamoDB.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.trust.all.certificates</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">false</td>

+ <td>Boolean</td>

+ <td>If true accepts all SSL certificates.</td>

+ </tr>

+ </tbody>

+ <thead>

+ <tr>

+ <th colspan="5" class="text-left" style="width: 100%">Authentication Options</th>

+ </tr>

+ </thead>

+ <tbody>

+ <tr>

+ <td><h5>aws.credentials.provider</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">AUTO</td>

+ <td>String</td>

+ <td>A credentials provider to use when authenticating against the Kinesis endpoint. See <a href="#authentication">Authentication</a> for details.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.basic.accesskeyid</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS access key ID to use when setting credentials provider type to BASIC.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.basic.secretkey</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS secret key to use when setting credentials provider type to BASIC.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.profile.path</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Optional configuration for profile path if credential provider type is set to be PROFILE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.profile.name</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Optional configuration for profile name if credential provider type is set to be PROFILE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.arn</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The role ARN to use when credential provider type is set to ASSUME_ROLE or WEB_IDENTITY_TOKEN.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.sessionName</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The role session name to use when credential provider type is set to ASSUME_ROLE or WEB_IDENTITY_TOKEN.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.externalId</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The external ID to use when credential provider type is set to ASSUME_ROLE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.provider</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The credentials provider that provides credentials for assuming the role when credential provider type is set to ASSUME_ROLE. Roles can be nested, so this value can again be set to ASSUME_ROLE</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.webIdentityToken.file</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The absolute path to the web identity token file that should be used if provider type is set to WEB_IDENTITY_TOKEN.</td>

+ </tr>

+ </tbody>

+ <thead>

+ <tr>

+ <th colspan="5" class="text-left" style="width: 100%">Sink Options</th>

+ </tr>

+ </thead>

+ <tbody>

+ <tr>

+ <td><h5>sink.batch.max-size</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">25</td>

+ <td>Integer</td>

+ <td>Maximum batch size of elements to be written to DynamoDB.</td>

+ </tr>

+ <tr>

+ <td><h5>sink.requests.max-inflight</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">50</td>

+ <td>Integer</td>

+ <td>Maximum number of parallel batch requests to DynamoDB.</td>

+ </tr>

+ <tr>

Review Comment:

One config is missing here: `sink.flush-buffer.size`
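
As a rough sketch, the sink options could be set together as follows. The first four values repeat the defaults listed in the options table of this PR. The `sink.flush-buffer.size` value is purely illustrative and assumes the option takes a size in bytes, which this thread does not confirm.

```sql
-- Sketch only: sink tuning options on the example table.
CREATE TABLE DynamoDbTable (
  `user_id` BIGINT,
  `item_id` BIGINT,
  `category_id` BIGINT,
  `behavior` STRING
)
WITH (
  'connector' = 'dynamodb',
  'table-name' = 'user_behavior',
  'aws.region' = 'us-east-2',
  'sink.batch.max-size' = '25',            -- default per the options table
  'sink.requests.max-inflight' = '50',     -- default per the options table
  'sink.requests.max-buffered' = '10000',  -- default per the options table
  'sink.flush-buffer.timeout' = '5000',    -- milliseconds, default per the options table
  'sink.flush-buffer.size' = '16777216'    -- illustrative value, assumed to be bytes
);
```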

##########

docs/content.zh/docs/connectors/table/dynamodb.md:

##########

@@ -0,0 +1,284 @@

+---

+title: Firehose

+weight: 5

+type: docs

+aliases:

+- /dev/table/connectors/dynamodb.html

+---

+

+<!--

+Licensed to the Apache Software Foundation (ASF) under one

+or more contributor license agreements. See the NOTICE file

+distributed with this work for additional information

+regarding copyright ownership. The ASF licenses this file

+to you under the Apache License, Version 2.0 (the

+"License"); you may not use this file except in compliance

+with the License. You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+Unless required by applicable law or agreed to in writing,

+software distributed under the License is distributed on an

+"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+KIND, either express or implied. See the License for the

+specific language governing permissions and limitations

+under the License.

+-->

+

+# Amazon DynamoDB SQL Connector

+

+{{< label "Sink: Streaming Append & Upsert Mode" >}}

Review Comment:

Should we add a `Sink: Batch` label too?

##########

docs/content.zh/docs/connectors/table/dynamodb.md:

##########

@@ -0,0 +1,284 @@

+---

+title: Firehose

+weight: 5

+type: docs

+aliases:

+- /dev/table/connectors/dynamodb.html

+---

+

+<!--

+Licensed to the Apache Software Foundation (ASF) under one

+or more contributor license agreements. See the NOTICE file

+distributed with this work for additional information

+regarding copyright ownership. The ASF licenses this file

+to you under the Apache License, Version 2.0 (the

+"License"); you may not use this file except in compliance

+with the License. You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+Unless required by applicable law or agreed to in writing,

+software distributed under the License is distributed on an

+"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+KIND, either express or implied. See the License for the

+specific language governing permissions and limitations

+under the License.

+-->

+

+# Amazon DynamoDB SQL Connector

+

+{{< label "Sink: Streaming Append & Upsert Mode" >}}

+

+The DynamoDB connector allows for writing data into [Amazon DynamoDB](https://aws.amazon.com/dynamodb).

+

+Dependencies

+------------

+

+{{< sql_download_table "dynamodb" >}}

+

+How to create a DynamoDB table

+-----------------------------------------

+

+Follow the instructions from the [Amazon DynamoDB Developer Guide](https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/getting-started-step-1.html)

+to set up a DynamoDB table. The following example shows how to create a table backed by a DynamoDB table with minimum required options:

+

+```sql

+CREATE TABLE DynamoDbTable (

+ `user_id` BIGINT,

+ `item_id` BIGINT,

+ `category_id` BIGINT,

+ `behavior` STRING

+)

+WITH (

+ 'connector' = 'dynamodb',

+ 'table-name' = 'user_behavior',

+ 'aws.region' = 'us-east-2'

+);

+```

+

+Connector Options

+-----------------

+

+<table class="table table-bordered">

+ <thead>

+ <tr>

+ <th class="text-left" style="width: 25%">Option</th>

+ <th class="text-center" style="width: 8%">Required</th>

+ <th class="text-center" style="width: 7%">Default</th>

+ <th class="text-center" style="width: 10%">Type</th>

+ <th class="text-center" style="width: 50%">Description</th>

+ </tr>

+ <tr>

+ <th colspan="5" class="text-left" style="width: 100%">Common Options</th>

+ </tr>

+ </thead>

+ <tbody>

+ <tr>

+ <td><h5>connector</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Specify what connector to use. For DynamoDB use <code>'dynamodb'</code>.</td>

+ </tr>

+ <tr>

+ <td><h5>table-name</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Name of the DynamoDB table to use.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.region</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS region where the DynamoDB table is defined.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.endpoint</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS endpoint for DynamoDB.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.trust.all.certificates</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">false</td>

+ <td>Boolean</td>

+ <td>If true accepts all SSL certificates.</td>

+ </tr>

+ </tbody>

+ <thead>

+ <tr>

+ <th colspan="5" class="text-left" style="width: 100%">Authentication Options</th>

+ </tr>

+ </thead>

+ <tbody>

+ <tr>

+ <td><h5>aws.credentials.provider</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">AUTO</td>

+ <td>String</td>

+ <td>A credentials provider to use when authenticating against the Kinesis endpoint. See <a href="#authentication">Authentication</a> for details.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.basic.accesskeyid</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS access key ID to use when setting credentials provider type to BASIC.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.basic.secretkey</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS secret key to use when setting credentials provider type to BASIC.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.profile.path</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Optional configuration for profile path if credential provider type is set to be PROFILE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.profile.name</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Optional configuration for profile name if credential provider type is set to be PROFILE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.arn</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The role ARN to use when credential provider type is set to ASSUME_ROLE or WEB_IDENTITY_TOKEN.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.sessionName</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The role session name to use when credential provider type is set to ASSUME_ROLE or WEB_IDENTITY_TOKEN.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.externalId</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The external ID to use when credential provider type is set to ASSUME_ROLE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.provider</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The credentials provider that provides credentials for assuming the role when credential provider type is set to ASSUME_ROLE. Roles can be nested, so this value can again be set to ASSUME_ROLE</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.webIdentityToken.file</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The absolute path to the web identity token file that should be used if provider type is set to WEB_IDENTITY_TOKEN.</td>

+ </tr>

+ </tbody>

+ <thead>

+ <tr>

+ <th colspan="5" class="text-left" style="width: 100%">Sink Options</th>

+ </tr>

+ </thead>

+ <tbody>

Review Comment:

We can add the `sink.http-client.*` configs here too:

```

sink.http-client.max-concurrency

sink.http-client.read-timeout

sink.http-client.protocol.version

```

The difference is that `sink.http-client.protocol.version` defaults to `HTTP1_1` for this connector.
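
A sketch of how these three options might look in a table definition. The option names come from the list above. The concurrency and timeout values are illustrative assumptions rather than confirmed defaults, and the timeout is assumed to be in seconds.

```sql
-- Sketch only: HTTP client options on the example table.
CREATE TABLE DynamoDbTable (
  `user_id` BIGINT,
  `item_id` BIGINT,
  `category_id` BIGINT,
  `behavior` STRING
)
WITH (
  'connector' = 'dynamodb',
  'table-name' = 'user_behavior',
  'aws.region' = 'us-east-2',
  'sink.http-client.max-concurrency' = '10000',    -- illustrative value
  'sink.http-client.read-timeout' = '360',         -- illustrative value, assumed seconds
  'sink.http-client.protocol.version' = 'HTTP1_1'  -- the default noted in this comment
);
```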

##########

docs/content.zh/docs/connectors/table/dynamodb.md:

##########

@@ -0,0 +1,284 @@

+---

+title: Firehose

Review Comment:

```suggestion

title: DynamoDB

```

##########

docs/content.zh/docs/connectors/table/dynamodb.md:

##########

@@ -0,0 +1,284 @@

+---

+title: Firehose

+weight: 5

+type: docs

+aliases:

+- /dev/table/connectors/dynamodb.html

+---

+

+<!--

+Licensed to the Apache Software Foundation (ASF) under one

+or more contributor license agreements. See the NOTICE file

+distributed with this work for additional information

+regarding copyright ownership. The ASF licenses this file

+to you under the Apache License, Version 2.0 (the

+"License"); you may not use this file except in compliance

+with the License. You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+Unless required by applicable law or agreed to in writing,

+software distributed under the License is distributed on an

+"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+KIND, either express or implied. See the License for the

+specific language governing permissions and limitations

+under the License.

+-->

+

+# Amazon DynamoDB SQL Connector

+

+{{< label "Sink: Streaming Append & Upsert Mode" >}}

+

+The DynamoDB connector allows for writing data into [Amazon DynamoDB](https://aws.amazon.com/dynamodb).

+

+Dependencies

+------------

+

+{{< sql_download_table "dynamodb" >}}

+

+How to create a DynamoDB table

+-----------------------------------------

+

+Follow the instructions from the [Amazon DynamoDB Developer Guide](https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/getting-started-step-1.html)

+to set up a DynamoDB table. The following example shows how to create a table backed by a DynamoDB table with minimum required options:

+

+```sql

+CREATE TABLE DynamoDbTable (

+ `user_id` BIGINT,

+ `item_id` BIGINT,

+ `category_id` BIGINT,

+ `behavior` STRING

+)

+WITH (

+ 'connector' = 'dynamodb',

+ 'table-name' = 'user_behavior',

+ 'aws.region' = 'us-east-2'

+);

+```

+

+Connector Options

+-----------------

+

+<table class="table table-bordered">

+ <thead>

+ <tr>

+ <th class="text-left" style="width: 25%">Option</th>

+ <th class="text-center" style="width: 8%">Required</th>

+ <th class="text-center" style="width: 7%">Default</th>

+ <th class="text-center" style="width: 10%">Type</th>

+ <th class="text-center" style="width: 50%">Description</th>

+ </tr>

+ <tr>

+ <th colspan="5" class="text-left" style="width: 100%">Common Options</th>

+ </tr>

+ </thead>

+ <tbody>

+ <tr>

+ <td><h5>connector</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Specify what connector to use. For DynamoDB use <code>'dynamodb'</code>.</td>

+ </tr>

+ <tr>

+ <td><h5>table-name</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Name of the DynamoDB table to use.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.region</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS region where the DynamoDB table is defined.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.endpoint</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS endpoint for DynamoDB.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.trust.all.certificates</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">false</td>

+ <td>Boolean</td>

+ <td>If true accepts all SSL certificates.</td>

+ </tr>

+ </tbody>

+ <thead>

+ <tr>

+ <th colspan="5" class="text-left" style="width: 100%">Authentication Options</th>

+ </tr>

+ </thead>

+ <tbody>

+ <tr>

+ <td><h5>aws.credentials.provider</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">AUTO</td>

+ <td>String</td>

+ <td>A credentials provider to use when authenticating against the Kinesis endpoint. See <a href="#authentication">Authentication</a> for details.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.basic.accesskeyid</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS access key ID to use when setting credentials provider type to BASIC.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.basic.secretkey</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS secret key to use when setting credentials provider type to BASIC.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.profile.path</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Optional configuration for profile path if credential provider type is set to be PROFILE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.profile.name</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Optional configuration for profile name if credential provider type is set to be PROFILE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.arn</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The role ARN to use when credential provider type is set to ASSUME_ROLE or WEB_IDENTITY_TOKEN.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.sessionName</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The role session name to use when credential provider type is set to ASSUME_ROLE or WEB_IDENTITY_TOKEN.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.externalId</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The external ID to use when credential provider type is set to ASSUME_ROLE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.provider</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The credentials provider that provides credentials for assuming the role when credential provider type is set to ASSUME_ROLE. Roles can be nested, so this value can again be set to ASSUME_ROLE</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.webIdentityToken.file</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The absolute path to the web identity token file that should be used if provider type is set to WEB_IDENTITY_TOKEN.</td>

+ </tr>

+ </tbody>

+ <thead>

+ <tr>

+ <th colspan="5" class="text-left" style="width: 100%">Sink Options</th>

+ </tr>

+ </thead>

+ <tbody>

+ <tr>

+ <td><h5>sink.batch.max-size</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">25</td>

+ <td>Integer</td>

+ <td>Maximum batch size of elements to be written to DynamoDB.</td>

+ </tr>

+ <tr>

+ <td><h5>sink.requests.max-inflight</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">50</td>

+ <td>Integer</td>

+ <td>Maximum number of parallel batch requests to DynamoDB.</td>

+ </tr>

+ <tr>

+ <td><h5>sink.requests.max-buffered</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">10000</td>

+ <td>String</td>

+ <td>Size of input buffer before applying backpressure to upstream job graph</td>

+ </tr>

+ <tr>

+ <td><h5>sink.flush-buffer.timeout</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">5000</td>

+ <td>Long</td>

+ <td>Threshold time in ms for an element to be in a buffer before flushing.</td>

+ </tr>

+ <tr>

+ <td><h5>sink.fail-on-error</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">false</td>

+ <td>Boolean</td>

+ <td>Flag used for retrying failed requests. If set any request failure will not be retried and will fail the job.</td>

+ </tr>

+ </tbody>

+</table>

+

+## Authorization

+

+Make sure to [create an appropriate IAM policy](https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/using-identity-based-policies.html) to allow writing to the DynamoDB table.

+

+## Authentication

+

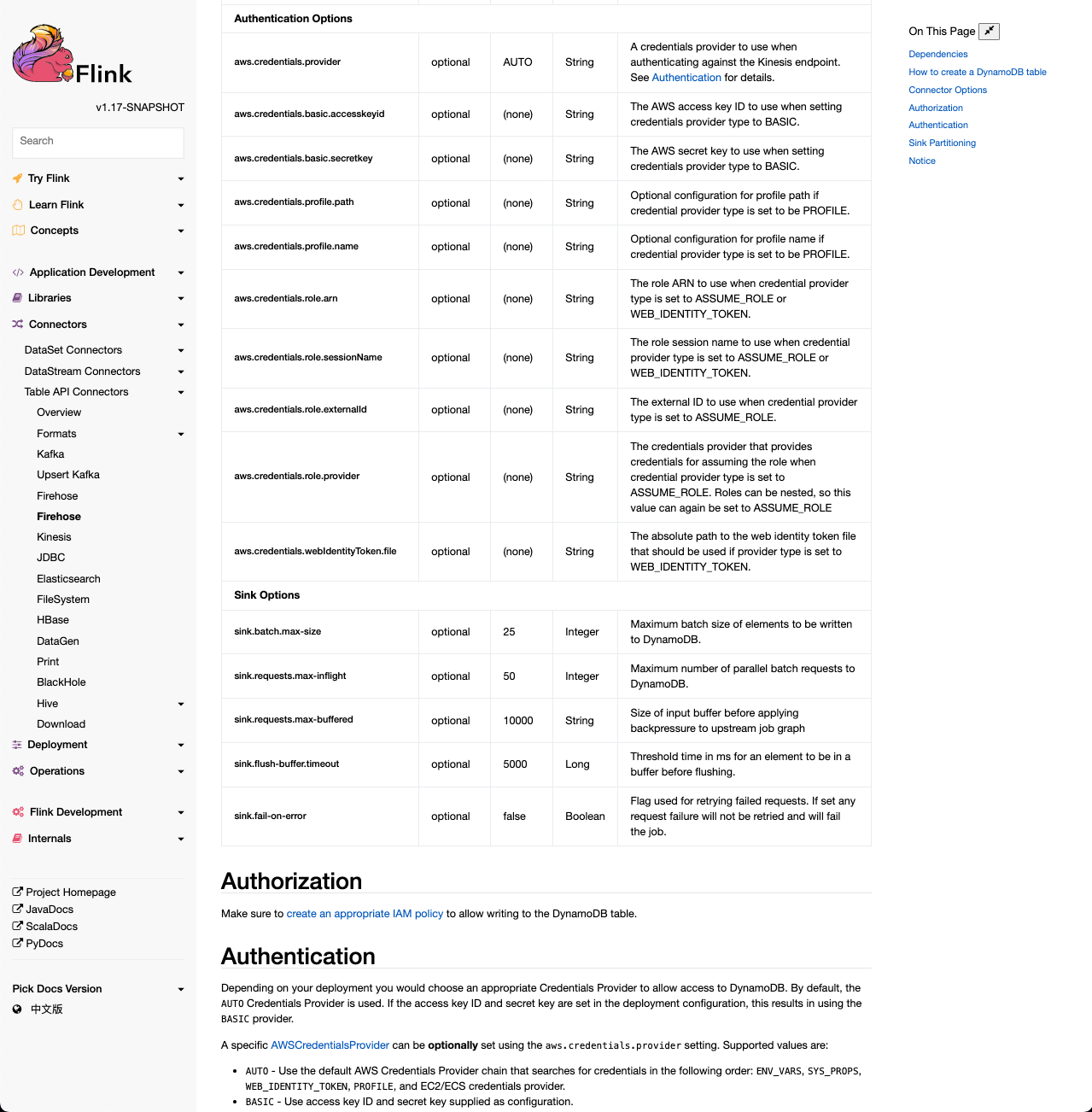

+Depending on your deployment you would choose an appropriate Credentials Provider to allow access to DynamoDB.

+By default, the `AUTO` Credentials Provider is used.

+If the access key ID and secret key are set in the deployment configuration, this results in using the `BASIC` provider.

+

+A specific [AWSCredentialsProvider](https://docs.aws.amazon.com/AWSJavaSDK/latest/javadoc/index.html?com/amazonaws/auth/AWSCredentialsProvider.html) can be **optionally** set using the `aws.credentials.provider` setting.

+Supported values are:

+

+- `AUTO` - Use the default AWS Credentials Provider chain that searches for credentials in the following order: `ENV_VARS`, `SYS_PROPS`, `WEB_IDENTITY_TOKEN`, `PROFILE`, and EC2/ECS credentials provider.

+- `BASIC` - Use access key ID and secret key supplied as configuration.

+- `ENV_VAR` - Use `AWS_ACCESS_KEY_ID` & `AWS_SECRET_ACCESS_KEY` environment variables.

+- `SYS_PROP` - Use Java system properties `aws.accessKeyId` and `aws.secretKey`.

+- `PROFILE` - Use an AWS credentials profile to create the AWS credentials.

+- `ASSUME_ROLE` - Create AWS credentials by assuming a role. The credentials for assuming the role must be supplied.

+- `WEB_IDENTITY_TOKEN` - Create AWS credentials by assuming a role using Web Identity Token.

+

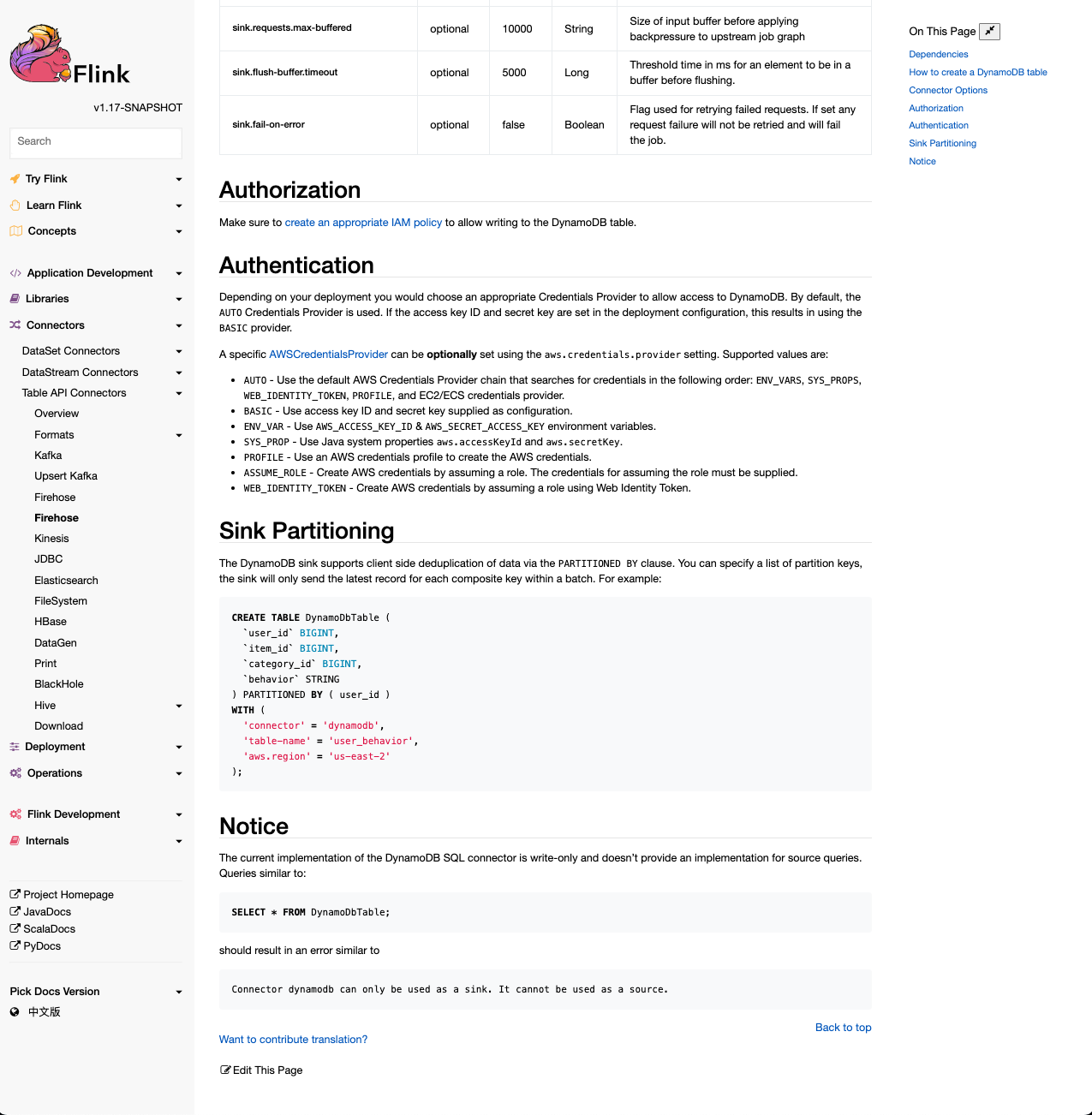

+## Sink Partitioning

+

+The DynamoDB sink supports client-side deduplication of data via the `PARTITIONED BY` clause. You can specify a list of

+partition keys; the sink then sends only the latest record for each composite key within a batch. For example:

Review Comment:

We can also add static partitioning

```sql

INSERT INTO dynamo_db_table PARTITION (partition_key='123',sort_key='345') SELECT <>

```
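For reference, the within-batch deduplication described in the quoted docs ("only send the latest record for each composite key within a batch") can be sketched as below. This is a minimal illustration under stated assumptions, not the connector's implementation: the `BatchDeduplicator` class, its method names, the use of plain string maps as records, and the `|` key separator are all hypothetical. The column names are borrowed from the `CREATE TABLE` example in this PR.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper, for illustration only; not part of flink-connector-dynamodb.
final class BatchDeduplicator {

    // Joins the values of the configured partition key attributes into one composite key.
    // The '|' separator is an assumption; a real implementation would need a collision-safe encoding.
    static String compositeKey(Map<String, String> record, List<String> partitionKeys) {
        StringBuilder key = new StringBuilder();
        for (String attribute : partitionKeys) {
            key.append(record.get(attribute)).append('|');
        }
        return key.toString();
    }

    // Keeps only the latest record per composite key within a single batch.
    // Later records overwrite earlier ones that share the same key.
    static List<Map<String, String>> deduplicate(
            List<Map<String, String>> batch, List<String> partitionKeys) {
        Map<String, Map<String, String>> latestByKey = new LinkedHashMap<>();
        for (Map<String, String> record : batch) {
            latestByKey.put(compositeKey(record, partitionKeys), record);
        }
        return new ArrayList<>(latestByKey.values());
    }

    public static void main(String[] args) {
        List<Map<String, String>> batch = List.of(
                Map.of("user_id", "1", "item_id", "10", "behavior", "view"),
                Map.of("user_id", "2", "item_id", "20", "behavior", "view"),
                Map.of("user_id", "1", "item_id", "10", "behavior", "buy"));
        // Two records remain; the one for (user_id=1, item_id=10) carries behavior "buy".
        System.out.println(deduplicate(batch, List.of("user_id", "item_id")));
    }
}
```

A `LinkedHashMap` is used so that the surviving records keep the order in which their keys first appeared, while the stored value is always the most recent record for that key.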


[GitHub] [flink-connector-aws] zentol commented on a diff in pull request #26: [FLINK-25859][Connectors/DynamoDB][docs] DynamoDB sink documentation

Posted by GitBox <gi...@apache.org>.

zentol commented on code in PR #26:

URL: https://github.com/apache/flink-connector-aws/pull/26#discussion_r1032447973

##########

docs/content.zh/docs/connectors/datastream/dynamodb.md:

##########

@@ -0,0 +1,171 @@

+---

+title: DynamoDB

+weight: 5

+type: docs

+aliases:

+- /zh/dev/connectors/dynamodb.html

+---

+<!--

+Licensed to the Apache Software Foundation (ASF) under one

+or more contributor license agreements. See the NOTICE file

+distributed with this work for additional information

+regarding copyright ownership. The ASF licenses this file

+to you under the Apache License, Version 2.0 (the

+"License"); you may not use this file except in compliance

+with the License. You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+Unless required by applicable law or agreed to in writing,

+software distributed under the License is distributed on an

+"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+KIND, either express or implied. See the License for the

+specific language governing permissions and limitations

+under the License.

+-->

+

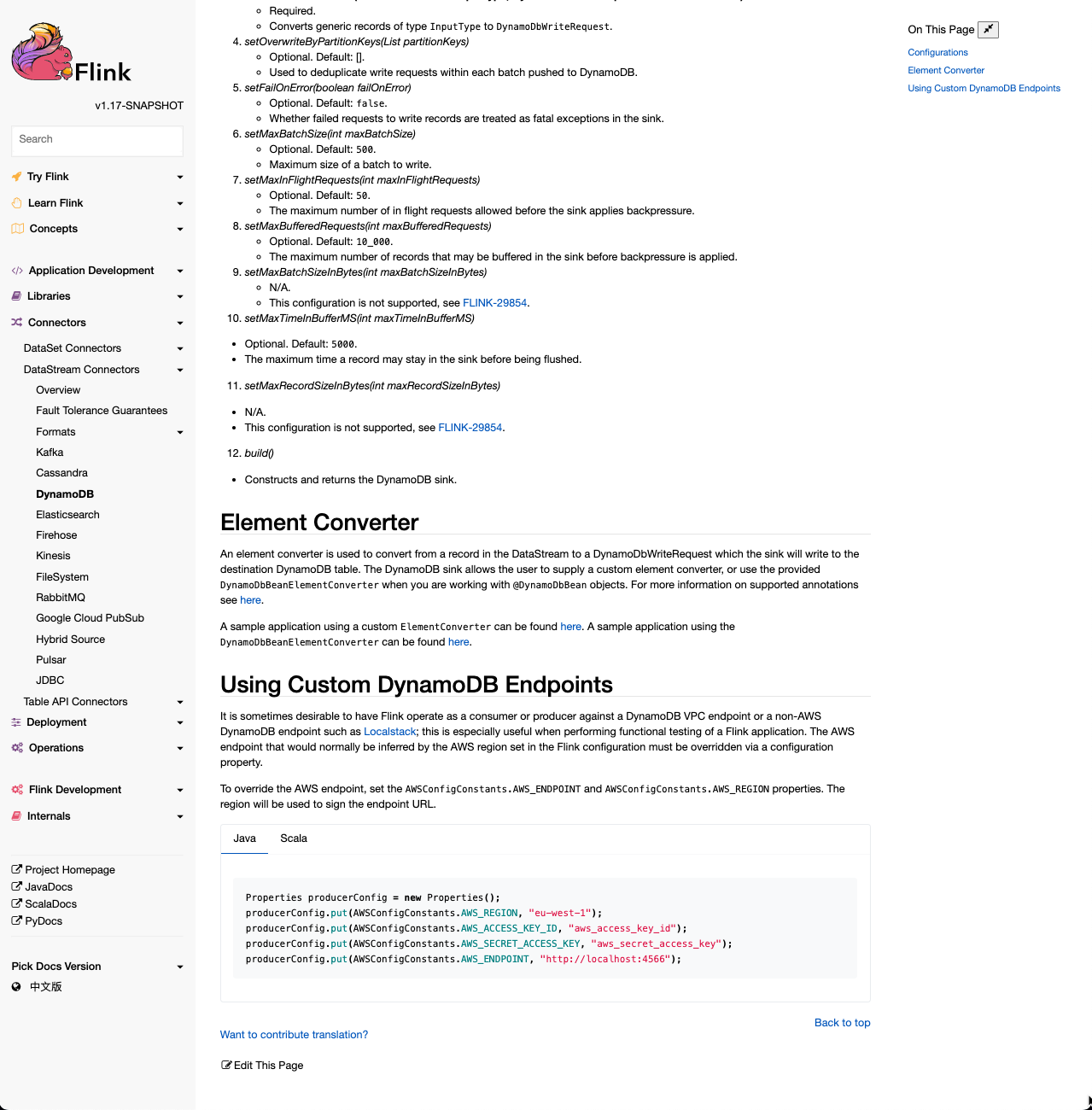

+# Amazon DynamoDB Sink

+

+The DynamoDB sink writes to [Amazon DynamoDB](https://aws.amazon.com/dynamodb) using the [AWS v2 SDK for Java](https://docs.aws.amazon.com/sdk-for-java/latest/developer-guide/home.html). Follow the instructions from the [Amazon DynamoDB Developer Guide](https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/getting-started-step-1.html)

+to set up a table.

+

+To use the connector, add the following Maven dependency to your project:

+

+{{< connector_artifact flink-connector-dynamodb 2.0.0 >}}

Review Comment:

Flink implementation:

https://github.com/apache/flink/blob/release-1.16/docs/layouts/shortcodes/connector_artifact.html

ES usage: https://github.com/apache/flink-connector-elasticsearch/commit/b32f832f19ee52c43cddc0f32e835ef9c208a770

However, it does indeed not show correctly in the live docs for some reason. Will look into it...


[GitHub] [flink-connector-aws] dannycranmer commented on a diff in pull request #26: [FLINK-25859][Connectors/DynamoDB][docs] DataStream sink documentation

Posted by GitBox <gi...@apache.org>.

dannycranmer commented on code in PR #26:

URL: https://github.com/apache/flink-connector-aws/pull/26#discussion_r1029845175

##########

docs/content.zh/docs/connectors/datastream/dynamodb.md:

##########

@@ -0,0 +1,170 @@

+---

+title: DynamoDB

+weight: 5

+type: docs

+---

+<!--

+Licensed to the Apache Software Foundation (ASF) under one

+or more contributor license agreements. See the NOTICE file

+distributed with this work for additional information

+regarding copyright ownership. The ASF licenses this file

+to you under the Apache License, Version 2.0 (the

+"License"); you may not use this file except in compliance

+with the License. You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+Unless required by applicable law or agreed to in writing,

+software distributed under the License is distributed on an

+"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+KIND, either express or implied. See the License for the

+specific language governing permissions and limitations

+under the License.

+-->

+

+# Amazon DynamoDB Sink

+

+The DynamoDB sink writes to [Amazon DynamoDB](https://aws.amazon.com/dynamodb) using the [AWS v2 SDK for Java](https://docs.aws.amazon.com/sdk-for-java/latest/developer-guide/home.html).

+

+Follow the instructions from the [Amazon DynamoDB Developer Guide](https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/getting-started-step-1.html)

+to set up a table.

+

+To use the connector, add the following Maven dependency to your project:

+

+{{< artifact flink-connector-dynamodb >}}

Review Comment:

These docs are included into the Flink docs, so it should resolve the variables ok


[GitHub] [flink-connector-aws] dannycranmer commented on a diff in pull request #26: [FLINK-25859][Connectors/DynamoDB][docs] DynamoDB sink documentation

Posted by GitBox <gi...@apache.org>.

dannycranmer commented on code in PR #26:

URL: https://github.com/apache/flink-connector-aws/pull/26#discussion_r1030228599

##########

docs/content.zh/docs/connectors/table/dynamodb.md:

##########

@@ -0,0 +1,284 @@

+---

+title: DynamoDB

+weight: 5

+type: docs

+aliases:

+- /dev/table/connectors/dynamodb.html

+---

+

+<!--

+Licensed to the Apache Software Foundation (ASF) under one

+or more contributor license agreements. See the NOTICE file

+distributed with this work for additional information

+regarding copyright ownership. The ASF licenses this file

+to you under the Apache License, Version 2.0 (the

+"License"); you may not use this file except in compliance

+with the License. You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+Unless required by applicable law or agreed to in writing,

+software distributed under the License is distributed on an

+"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+KIND, either express or implied. See the License for the

+specific language governing permissions and limitations

+under the License.

+-->

+

+# Amazon DynamoDB SQL Connector

+

+{{< label "Sink: Streaming Append & Upsert Mode" >}}

+

+The DynamoDB connector allows for writing data into [Amazon DynamoDB](https://aws.amazon.com/dynamodb).

+

+Dependencies

+------------

+

+{{< sql_download_table "dynamodb" >}}

+

+How to create a DynamoDB table

+-----------------------------------------

+

+Follow the instructions from the [Amazon DynamoDB Developer Guide](https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/getting-started-step-1.html)

+to set up a DynamoDB table. The following example shows how to create a table backed by a DynamoDB table with minimum required options:

+

+```sql

+CREATE TABLE DynamoDbTable (

+ `user_id` BIGINT,

+ `item_id` BIGINT,

+ `category_id` BIGINT,

+ `behavior` STRING

+)

+WITH (

+ 'connector' = 'dynamodb',

+ 'table-name' = 'user_behavior',

+ 'aws.region' = 'us-east-2'

+);

+```

+

+Connector Options

+-----------------

+

+<table class="table table-bordered">

+ <thead>

+ <tr>

+ <th class="text-left" style="width: 25%">Option</th>

+ <th class="text-center" style="width: 8%">Required</th>

+ <th class="text-center" style="width: 7%">Default</th>

+ <th class="text-center" style="width: 10%">Type</th>

+ <th class="text-center" style="width: 50%">Description</th>

+ </tr>

+ <tr>

+ <th colspan="5" class="text-left" style="width: 100%">Common Options</th>

+ </tr>

+ </thead>

+ <tbody>

+ <tr>

+ <td><h5>connector</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Specify what connector to use. For DynamoDB use <code>'dynamodb'</code>.</td>

+ </tr>

+ <tr>

+ <td><h5>table-name</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Name of the DynamoDB table to use.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.region</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS region where the DynamoDB table is defined.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.endpoint</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS endpoint for DynamoDB.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.trust.all.certificates</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">false</td>

+ <td>Boolean</td>

+ <td>If true, accepts all SSL certificates.</td>

+ </tr>

+ </tbody>

+ <thead>

+ <tr>

+ <th colspan="5" class="text-left" style="width: 100%">Authentication Options</th>

+ </tr>

+ </thead>

+ <tbody>

+ <tr>

+ <td><h5>aws.credentials.provider</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">AUTO</td>

+ <td>String</td>

+ <td>A credentials provider to use when authenticating against the DynamoDB endpoint. See <a href="#authentication">Authentication</a> for details.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.basic.accesskeyid</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS access key ID to use when setting credentials provider type to BASIC.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.basic.secretkey</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS secret key to use when setting credentials provider type to BASIC.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.profile.path</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Optional configuration for profile path if credential provider type is set to be PROFILE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.profile.name</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Optional configuration for profile name if credential provider type is set to be PROFILE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.arn</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The role ARN to use when credential provider type is set to ASSUME_ROLE or WEB_IDENTITY_TOKEN.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.sessionName</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The role session name to use when credential provider type is set to ASSUME_ROLE or WEB_IDENTITY_TOKEN.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.externalId</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The external ID to use when credential provider type is set to ASSUME_ROLE.</td>

+ </tr>

Review Comment:

STS endpoint is for Flink 1.17 only
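For reference, a minimal sketch of how the authentication options in the table above combine for the `ASSUME_ROLE` provider. The option keys are taken from the table. The `AssumeRoleOptions` helper, the region, the role ARN and the session name are placeholders invented for illustration, and collecting the options in a `java.util.Properties` object is an assumption about how they might be passed along, not a statement about the connector's API.

```java
import java.util.Properties;

// Hypothetical helper, for illustration only; not part of flink-connector-dynamodb.
final class AssumeRoleOptions {

    // Collects the options needed to authenticate by assuming an IAM role.
    // Keys come from the connector options table; values are supplied by the caller.
    static Properties build(String region, String roleArn, String sessionName) {
        Properties options = new Properties();
        options.setProperty("aws.region", region);
        options.setProperty("aws.credentials.provider", "ASSUME_ROLE");
        options.setProperty("aws.credentials.role.arn", roleArn);
        options.setProperty("aws.credentials.role.sessionName", sessionName);
        return options;
    }

    public static void main(String[] args) {
        // Placeholder values; replace with a real region, role ARN and session name.
        Properties options = build(
                "us-east-2",
                "arn:aws:iam::123456789012:role/example-role",
                "flink-dynamodb-sink");
        options.forEach((key, value) -> System.out.println(key + " = " + value));
    }
}
```

In a SQL job the same key/value pairs would go into the `WITH (...)` clause of the `CREATE TABLE` statement shown earlier.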


[GitHub] [flink-connector-aws] zentol commented on a diff in pull request #26: [FLINK-25859][Connectors/DynamoDB][docs] DynamoDB sink documentation

Posted by GitBox <gi...@apache.org>.

zentol commented on code in PR #26:

URL: https://github.com/apache/flink-connector-aws/pull/26#discussion_r1030155492

##########

docs/content.zh/docs/connectors/datastream/dynamodb.md:

##########

@@ -0,0 +1,170 @@

+---

+title: DynamoDB

+weight: 5

+type: docs

+---

+<!--

+Licensed to the Apache Software Foundation (ASF) under one

+or more contributor license agreements. See the NOTICE file

+distributed with this work for additional information

+regarding copyright ownership. The ASF licenses this file

+to you under the Apache License, Version 2.0 (the

+"License"); you may not use this file except in compliance

+with the License. You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+Unless required by applicable law or agreed to in writing,

+software distributed under the License is distributed on an

+"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+KIND, either express or implied. See the License for the

+specific language governing permissions and limitations

+under the License.

+-->

+

+# Amazon DynamoDB Sink

+

+The DynamoDB sink writes to [Amazon DynamoDB](https://aws.amazon.com/dynamodb) using the [AWS v2 SDK for Java](https://docs.aws.amazon.com/sdk-for-java/latest/developer-guide/home.html).

+

+Follow the instructions from the [Amazon DynamoDB Developer Guide](https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/getting-started-step-1.html)

+to set up a table.

+

+To use the connector, add the following Maven dependency to your project:

+

+{{< artifact flink-connector-dynamodb >}}

Review Comment:

use the `connector_artifact` shortcode and supply the version of the connector _without_ the Flink version suffix (`1.0.0`).

The Flink docs then integrate the docs of the connector version that is compatible with Flink, and the connector_artifact shortcode injects the corresponding version suffix.


[GitHub] [flink-connector-aws] dannycranmer commented on pull request #26: [FLINK-25859][Connectors/DynamoDB][docs] DynamoDB sink documentation

Posted by GitBox <gi...@apache.org>.

dannycranmer commented on PR #26:

URL: https://github.com/apache/flink-connector-aws/pull/26#issuecomment-1328899767

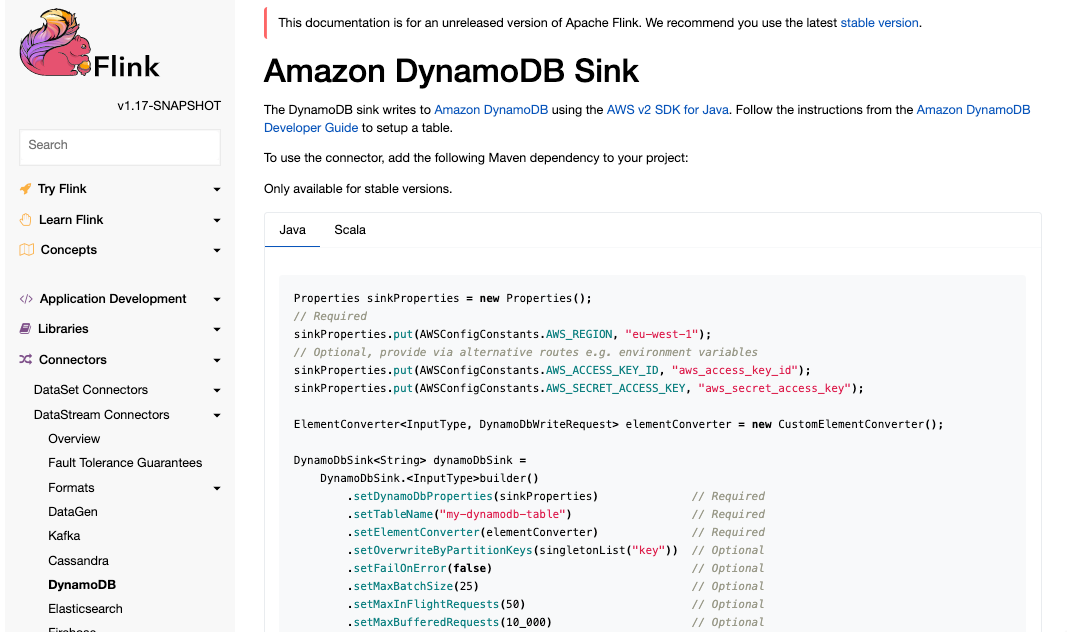

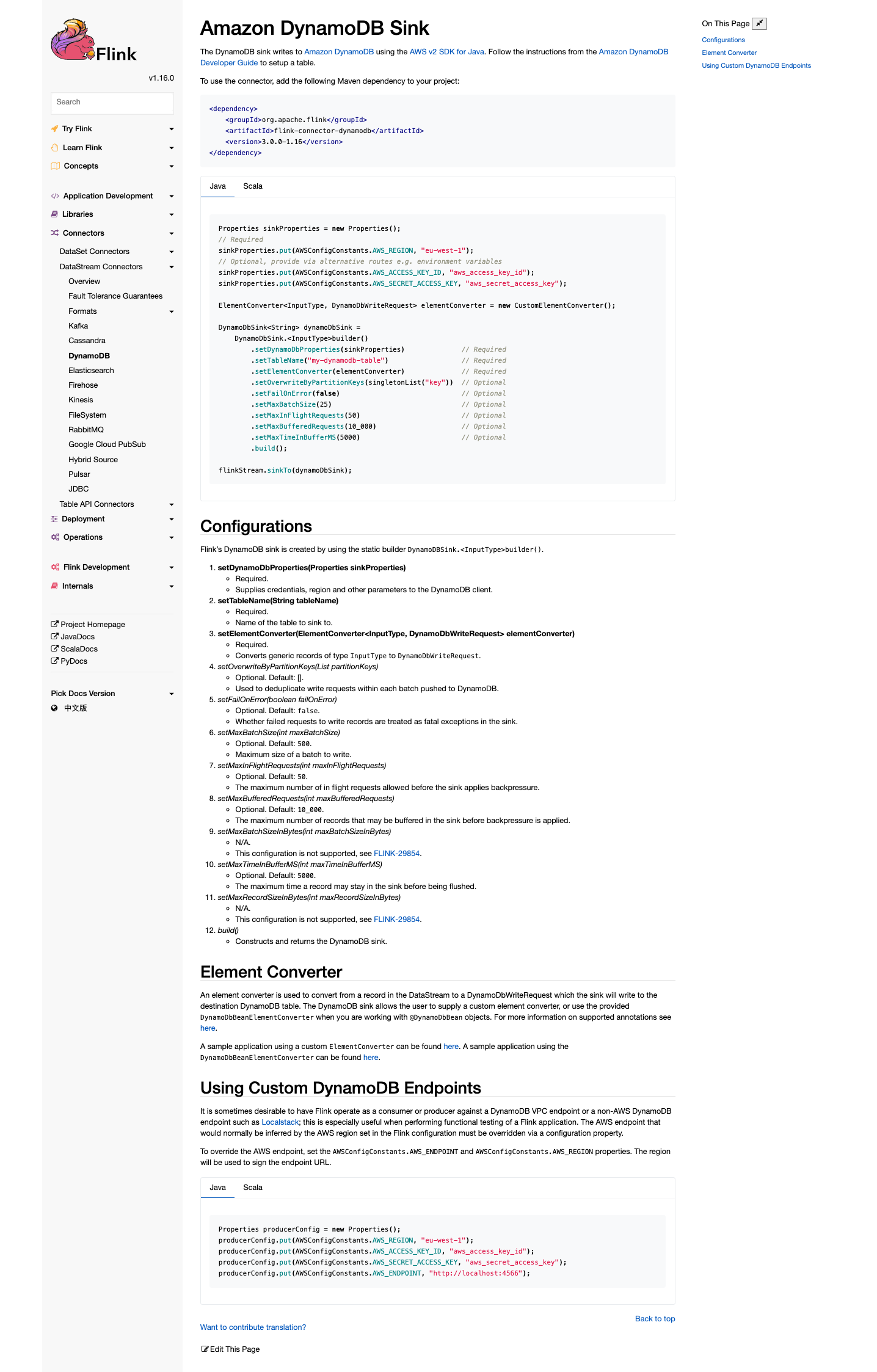

Datastream connector reference looks good

Flink 1.16

<img width="781" alt="DynamoDB___Apache_Flink" src="https://user-images.githubusercontent.com/4950503/204263527-655660e0-efeb-4405-ae30-030a94d58602.png">

Flink 1.17


[GitHub] [flink-connector-aws] dannycranmer commented on a diff in pull request #26: [FLINK-25859][Connectors/DynamoDB][docs] DynamoDB sink documentation

Posted by GitBox <gi...@apache.org>.

dannycranmer commented on code in PR #26:

URL: https://github.com/apache/flink-connector-aws/pull/26#discussion_r1030782976

##########

docs/content.zh/docs/connectors/table/dynamodb.md:

##########

@@ -0,0 +1,284 @@

+---

+title: DynamoDB

+weight: 5

+type: docs

+aliases:

+- /dev/table/connectors/dynamodb.html

+---

+

+<!--

+Licensed to the Apache Software Foundation (ASF) under one

+or more contributor license agreements. See the NOTICE file

+distributed with this work for additional information

+regarding copyright ownership. The ASF licenses this file

+to you under the Apache License, Version 2.0 (the

+"License"); you may not use this file except in compliance

+with the License. You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+Unless required by applicable law or agreed to in writing,

+software distributed under the License is distributed on an

+"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+KIND, either express or implied. See the License for the

+specific language governing permissions and limitations

+under the License.

+-->

+

+# Amazon DynamoDB SQL Connector

+

+{{< label "Sink: Streaming Append & Upsert Mode" >}}

+

+The DynamoDB connector allows for writing data into [Amazon DynamoDB](https://aws.amazon.com/dynamodb).

+

+Dependencies

+------------

+

+{{< sql_download_table "dynamodb" >}}

+

+How to create a DynamoDB table

+-----------------------------------------

+

+Follow the instructions from the [Amazon DynamoDB Developer Guide](https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/getting-started-step-1.html)

+to set up a DynamoDB table. The following example shows how to create a table backed by a DynamoDB table with minimum required options:

+

+```sql

+CREATE TABLE DynamoDbTable (

+ `user_id` BIGINT,

+ `item_id` BIGINT,

+ `category_id` BIGINT,

+ `behavior` STRING

+)

+WITH (

+ 'connector' = 'dynamodb',

+ 'table-name' = 'user_behavior',

+ 'aws.region' = 'us-east-2'

+);

+```

+

+Connector Options

+-----------------

+

+<table class="table table-bordered">

+ <thead>

+ <tr>

+ <th class="text-left" style="width: 25%">Option</th>

+ <th class="text-center" style="width: 8%">Required</th>

+ <th class="text-center" style="width: 7%">Default</th>

+ <th class="text-center" style="width: 10%">Type</th>

+ <th class="text-center" style="width: 50%">Description</th>

+ </tr>

+ <tr>

+ <th colspan="5" class="text-left" style="width: 100%">Common Options</th>

+ </tr>

+ </thead>

+ <tbody>

+ <tr>

+ <td><h5>connector</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Specify what connector to use. For DynamoDB use <code>'dynamodb'</code>.</td>

+ </tr>

+ <tr>

+ <td><h5>table-name</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Name of the DynamoDB table to use.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.region</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS region where the DynamoDB table is defined.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.endpoint</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS endpoint for DynamoDB.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.trust.all.certificates</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">false</td>

+ <td>Boolean</td>

+ <td>If true, accepts all SSL certificates.</td>

+ </tr>

+ </tbody>

+ <thead>

+ <tr>

+ <th colspan="5" class="text-left" style="width: 100%">Authentication Options</th>

+ </tr>

+ </thead>

+ <tbody>

+ <tr>

+ <td><h5>aws.credentials.provider</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">AUTO</td>

+ <td>String</td>

+ <td>A credentials provider to use when authenticating against the DynamoDB endpoint. See <a href="#authentication">Authentication</a> for details.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.basic.accesskeyid</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS access key ID to use when setting credentials provider type to BASIC.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.basic.secretkey</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS secret key to use when setting credentials provider type to BASIC.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.profile.path</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Optional configuration for profile path if credential provider type is set to be PROFILE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.profile.name</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Optional configuration for profile name if credential provider type is set to be PROFILE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.arn</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The role ARN to use when credential provider type is set to ASSUME_ROLE or WEB_IDENTITY_TOKEN.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.sessionName</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The role session name to use when credential provider type is set to ASSUME_ROLE or WEB_IDENTITY_TOKEN.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.externalId</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The external ID to use when credential provider type is set to ASSUME_ROLE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.provider</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The credentials provider that provides credentials for assuming the role when credential provider type is set to ASSUME_ROLE. Roles can be nested, so this value can again be set to ASSUME_ROLE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.webIdentityToken.file</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The absolute path to the web identity token file that should be used if provider type is set to WEB_IDENTITY_TOKEN.</td>

+ </tr>

+ </tbody>

+ <thead>

+ <tr>

+ <th colspan="5" class="text-left" style="width: 100%">Sink Options</th>

+ </tr>

+ </thead>

+ <tbody>

+ <tr>

+ <td><h5>sink.batch.max-size</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">25</td>

+ <td>Integer</td>

+ <td>Maximum batch size of elements to be written to DynamoDB.</td>

+ </tr>

+ <tr>

+ <td><h5>sink.requests.max-inflight</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">50</td>

+ <td>Integer</td>

+ <td>Maximum number of parallel batch requests to DynamoDB.</td>

+ </tr>

+ <tr>

Review Comment:

Missed on purpose because this config is not supported


[GitHub] [flink-connector-aws] dannycranmer commented on a diff in pull request #26: [FLINK-25859][Connectors/DynamoDB][docs] DynamoDB sink documentation

Posted by GitBox <gi...@apache.org>.

dannycranmer commented on code in PR #26:

URL: https://github.com/apache/flink-connector-aws/pull/26#discussion_r1030782399

##########

docs/content.zh/docs/connectors/table/dynamodb.md:

##########

@@ -0,0 +1,284 @@

+---

+title: DynamoDB

+weight: 5

+type: docs

+aliases:

+- /dev/table/connectors/dynamodb.html

+---

+

+<!--

+Licensed to the Apache Software Foundation (ASF) under one

+or more contributor license agreements. See the NOTICE file

+distributed with this work for additional information

+regarding copyright ownership. The ASF licenses this file

+to you under the Apache License, Version 2.0 (the

+"License"); you may not use this file except in compliance

+with the License. You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+Unless required by applicable law or agreed to in writing,

+software distributed under the License is distributed on an

+"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+KIND, either express or implied. See the License for the

+specific language governing permissions and limitations

+under the License.

+-->

+

+# Amazon DynamoDB SQL Connector

+

+{{< label "Sink: Streaming Append & Upsert Mode" >}}

+

+The DynamoDB connector allows for writing data into [Amazon DynamoDB](https://aws.amazon.com/dynamodb).

+

+Dependencies

+------------

+

+{{< sql_download_table "dynamodb" >}}

+

+How to create a DynamoDB table

+-----------------------------------------

+

+Follow the instructions from the [Amazon DynamoDB Developer Guide](https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/getting-started-step-1.html)

+to set up a DynamoDB table. The following example shows how to create a table backed by a DynamoDB table with minimum required options:

+

+```sql

+CREATE TABLE DynamoDbTable (

+ `user_id` BIGINT,

+ `item_id` BIGINT,

+ `category_id` BIGINT,

+ `behavior` STRING

+)

+WITH (

+ 'connector' = 'dynamodb',

+ 'table-name' = 'user_behavior',

+ 'aws.region' = 'us-east-2'

+);

+```

+

+Connector Options

+-----------------

+

+<table class="table table-bordered">

+ <thead>

+ <tr>

+ <th class="text-left" style="width: 25%">Option</th>

+ <th class="text-center" style="width: 8%">Required</th>

+ <th class="text-center" style="width: 7%">Default</th>

+ <th class="text-center" style="width: 10%">Type</th>

+ <th class="text-center" style="width: 50%">Description</th>

+ </tr>

+ <tr>

+ <th colspan="5" class="text-left" style="width: 100%">Common Options</th>

+ </tr>

+ </thead>

+ <tbody>

+ <tr>

+ <td><h5>connector</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Specify what connector to use. For DynamoDB use <code>'dynamodb'</code>.</td>

+ </tr>

+ <tr>

+ <td><h5>table-name</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Name of the DynamoDB table to use.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.region</h5></td>

+ <td>required</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS region where the DynamoDB table is defined.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.endpoint</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS endpoint for DynamoDB.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.trust.all.certificates</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">false</td>

+ <td>Boolean</td>

+ <td>If true, accepts all SSL certificates.</td>

+ </tr>

+ </tbody>

+ <thead>

+ <tr>

+ <th colspan="5" class="text-left" style="width: 100%">Authentication Options</th>

+ </tr>

+ </thead>

+ <tbody>

+ <tr>

+ <td><h5>aws.credentials.provider</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">AUTO</td>

+ <td>String</td>

+ <td>A credentials provider to use when authenticating against the DynamoDB endpoint. See <a href="#authentication">Authentication</a> for details.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.basic.accesskeyid</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS access key ID to use when setting credentials provider type to BASIC.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.basic.secretkey</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The AWS secret key to use when setting credentials provider type to BASIC.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.profile.path</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Optional configuration for profile path if credential provider type is set to be PROFILE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.profile.name</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>Optional configuration for profile name if credential provider type is set to be PROFILE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.arn</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The role ARN to use when credential provider type is set to ASSUME_ROLE or WEB_IDENTITY_TOKEN.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.sessionName</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The role session name to use when credential provider type is set to ASSUME_ROLE or WEB_IDENTITY_TOKEN.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.externalId</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The external ID to use when credential provider type is set to ASSUME_ROLE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.role.provider</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The credentials provider that provides credentials for assuming the role when credential provider type is set to ASSUME_ROLE. Roles can be nested, so this value can again be set to ASSUME_ROLE.</td>

+ </tr>

+ <tr>

+ <td><h5>aws.credentials.webIdentityToken.file</h5></td>

+ <td>optional</td>

+ <td style="word-wrap: break-word;">(none)</td>

+ <td>String</td>

+ <td>The absolute path to the web identity token file that should be used if provider type is set to WEB_IDENTITY_TOKEN.</td>

+ </tr>

+ </tbody>

+ <thead>

+ <tr>

+ <th colspan="5" class="text-left" style="width: 100%">Sink Options</th>

+ </tr>

+ </thead>

+ <tbody>

Review Comment:

Will add all but `sink.http-client.protocol.version`


[GitHub] [flink-connector-aws] dannycranmer commented on a diff in pull request #26: [FLINK-25859][Connectors/DynamoDB][docs] DataStream sink documentation

Posted by GitBox <gi...@apache.org>.

dannycranmer commented on code in PR #26:

URL: https://github.com/apache/flink-connector-aws/pull/26#discussion_r1029850320

##########

docs/content.zh/docs/connectors/datastream/dynamodb.md:

##########

@@ -0,0 +1,170 @@

+---

+title: DynamoDB

+weight: 5

+type: docs

+---

+<!--

+Licensed to the Apache Software Foundation (ASF) under one

+or more contributor license agreements. See the NOTICE file

+distributed with this work for additional information

+regarding copyright ownership. The ASF licenses this file

+to you under the Apache License, Version 2.0 (the

+"License"); you may not use this file except in compliance

+with the License. You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+Unless required by applicable law or agreed to in writing,

+software distributed under the License is distributed on an

+"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+KIND, either express or implied. See the License for the

+specific language governing permissions and limitations

+under the License.

+-->

+

+# Amazon DynamoDB Sink

+

+The DynamoDB sink writes to [Amazon DynamoDB](https://aws.amazon.com/dynamodb) using the [AWS v2 SDK for Java](https://docs.aws.amazon.com/sdk-for-java/latest/developer-guide/home.html).

+

+Follow the instructions from the [Amazon DynamoDB Developer Guide](https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/getting-started-step-1.html)

+to set up a table.

+

+To use the connector, add the following Maven dependency to your project:

+

+{{< artifact flink-connector-dynamodb >}}

+

+{{< tabs "ec24a4ae-6a47-11ed-a1eb-0242ac120002" >}}

+{{< tab "Java" >}}

+```java

+Properties sinkProperties = new Properties();

+// Required

+ sinkProperties.put(AWSConfigConstants.AWS_REGION, "eu-west-1");

+// Optional, provide via alternative routes e.g. environment variables

+ sinkProperties.put(AWSConfigConstants.AWS_ACCESS_KEY_ID, "aws_access_key_id");

+ sinkProperties.put(AWSConfigConstants.AWS_SECRET_ACCESS_KEY, "aws_secret_access_key");

+

+ ElementConverter<InputType, DynamoDbWriteRequest> elementConverter = new CustomElementConverter();

+

+ DynamoDbSink<InputType> dynamoDbSink =

+ DynamoDbSink.<InputType>builder()

+ .setDynamoDbProperties(sinkProperties) // Required

+ .setTableName("my-dynamodb-table") // Required

+ .setElementConverter(elementConverter) // Required

+ .setOverwriteByPartitionKeys(singletonList("key")) // Optional

+ .setFailOnError(false) // Optional

+ .setMaxBatchSize(25) // Optional

+ .setMaxInFlightRequests(50) // Optional

+ .setMaxBufferedRequests(10_000) // Optional

+ .setMaxTimeInBufferMS(5000) // Optional

+ .build();

+

+ flinkStream.sinkTo(dynamoDbSink);

+```

+{{< /tab >}}

+{{< tab "Scala" >}}

+```scala

+val sinkProperties = new Properties()

+// Required

+sinkProperties.put(AWSConfigConstants.AWS_REGION, "eu-west-1")

+// Optional, provide via alternative routes e.g. environment variables

+sinkProperties.put(AWSConfigConstants.AWS_ACCESS_KEY_ID, "aws_access_key_id")

+sinkProperties.put(AWSConfigConstants.AWS_SECRET_ACCESS_KEY, "aws_secret_access_key")

+

+val elementConverter = new CustomElementConverter()

+

+val dynamoDbSink =

+ DynamoDbSink.builder[InputType]()

+ .setDynamoDbProperties(sinkProperties) // Required

+ .setTableName("my-dynamodb-table") // Required

+ .setElementConverter(elementConverter) // Required

+ .setOverwriteByPartitionKeys(singletonList("key")) // Optional

+ .setFailOnError(false) // Optional

+ .setMaxBatchSize(25) // Optional

+ .setMaxInFlightRequests(50) // Optional

+ .setMaxBufferedRequests(10_000) // Optional

+ .setMaxTimeInBufferMS(5000) // Optional

+ .build()

+

+flinkStream.sinkTo(dynamoDbSink)

+```

+{{< /tab >}}

+{{< /tabs >}}

+

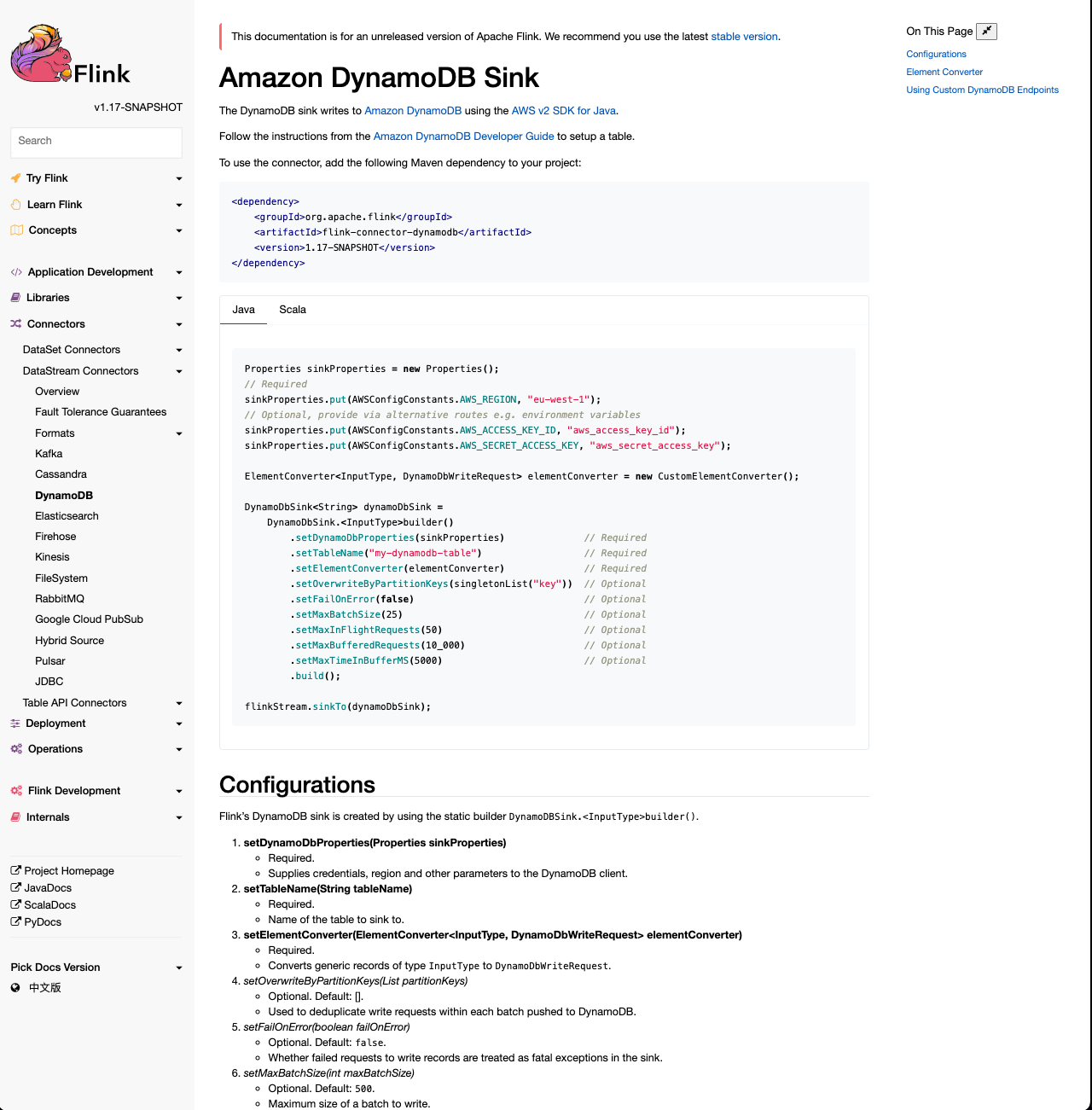

+## Configurations

+

+Flink's DynamoDB sink is created using the static builder `DynamoDbSink.<InputType>builder()`.

+

+1. __setDynamoDbProperties(Properties sinkProperties)__

+ * Required.

+ * Supplies credentials, region and other parameters to the DynamoDB client.

+2. __setTableName(String tableName)__

+ * Required.

+ * Name of the table to sink to.

+3. __setElementConverter(ElementConverter<InputType, DynamoDbWriteRequest> elementConverter)__

+ * Required.

+ * Converts generic records of type `InputType` to `DynamoDbWriteRequest`.

+4. _setOverwriteByPartitionKeys(List<String> partitionKeys)_

+ * Optional.

+ * Used to deduplicate write requests within each batch pushed to DynamoDB.

+5. _setFailOnError(boolean failOnError)_

+ * Optional. Default: `false`.

+ * Whether failed requests to write records are treated as fatal exceptions in the sink.

+6. _setMaxBatchSize(int maxBatchSize)_

+ * Optional. Default: `25`.

+ * Maximum size of a batch to write.

+7. _setMaxInFlightRequests(int maxInFlightRequests)_

+ * Optional. Default: `50`.

+ * The maximum number of in-flight requests allowed before the sink applies backpressure.

+8. _setMaxBufferedRequests(int maxBufferedRequests)_

+ * Optional. Default: `10_000`.

+ * The maximum number of records that may be buffered in the sink before backpressure is applied.

+9. _setMaxBatchSizeInBytes(int maxBatchSizeInBytes)_

+ * N/A.

+ * This configuration is not supported, see [FLINK-29854](https://issues.apache.org/jira/browse/FLINK-29854).

+10. _setMaxTimeInBufferMS(int maxTimeInBufferMS)_

+* Optional. Default: `5000`.

+* The maximum time a record may stay in the sink before being flushed.

+11. _setMaxRecordSizeInBytes(int maxRecordSizeInBytes)_

+* N/A.

+* This configuration is not supported, see [FLINK-29854](https://issues.apache.org/jira/browse/FLINK-29854).

+12. _build()_

+* Constructs and returns the DynamoDB sink.

Review Comment:

Sorry this was intellij trying to be helpful and formatting my copy and paste
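
For anyone reading item 4 of the list above, the deduplication that `setOverwriteByPartitionKeys` describes can be pictured roughly as below. This is only a standalone sketch under assumptions: `BatchDeduplicator` and `deduplicate` are hypothetical names, items are modelled as plain `Map<String, String>` rather than `DynamoDbWriteRequest`, and it is not taken from the connector's code. It keeps the last write seen for each combination of partition-key values within one batch.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration only; not the connector's implementation.
public class BatchDeduplicator {

    // Returns the batch with at most one item per combination of partition-key
    // values. When several items share the same key values, the last one wins.
    public static List<Map<String, String>> deduplicate(
            List<Map<String, String>> batch, List<String> partitionKeys) {
        // LinkedHashMap keeps the order in which each key was first seen.
        Map<List<String>, Map<String, String>> latest = new LinkedHashMap<>();
        for (Map<String, String> item : batch) {
            // Build the composite key from the configured partition-key attributes.
            List<String> key = new ArrayList<>();
            for (String name : partitionKeys) {
                key.add(item.get(name));
            }
            // A later item with the same key replaces the earlier one.
            latest.put(key, item);
        }
        return new ArrayList<>(latest.values());
    }
}
```

With `singletonList("key")` as in the example above, three writes whose `key` values are `a`, `b`, `a` would be reduced to two items, and the later `a` write replaces the earlier one.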

[GitHub] [flink-connector-aws] dannycranmer commented on pull request #26: [FLINK-25859][Connectors/DynamoDB][docs] DynamoDB sink documentation

Posted by GitBox <gi...@apache.org>.

dannycranmer commented on PR #26:

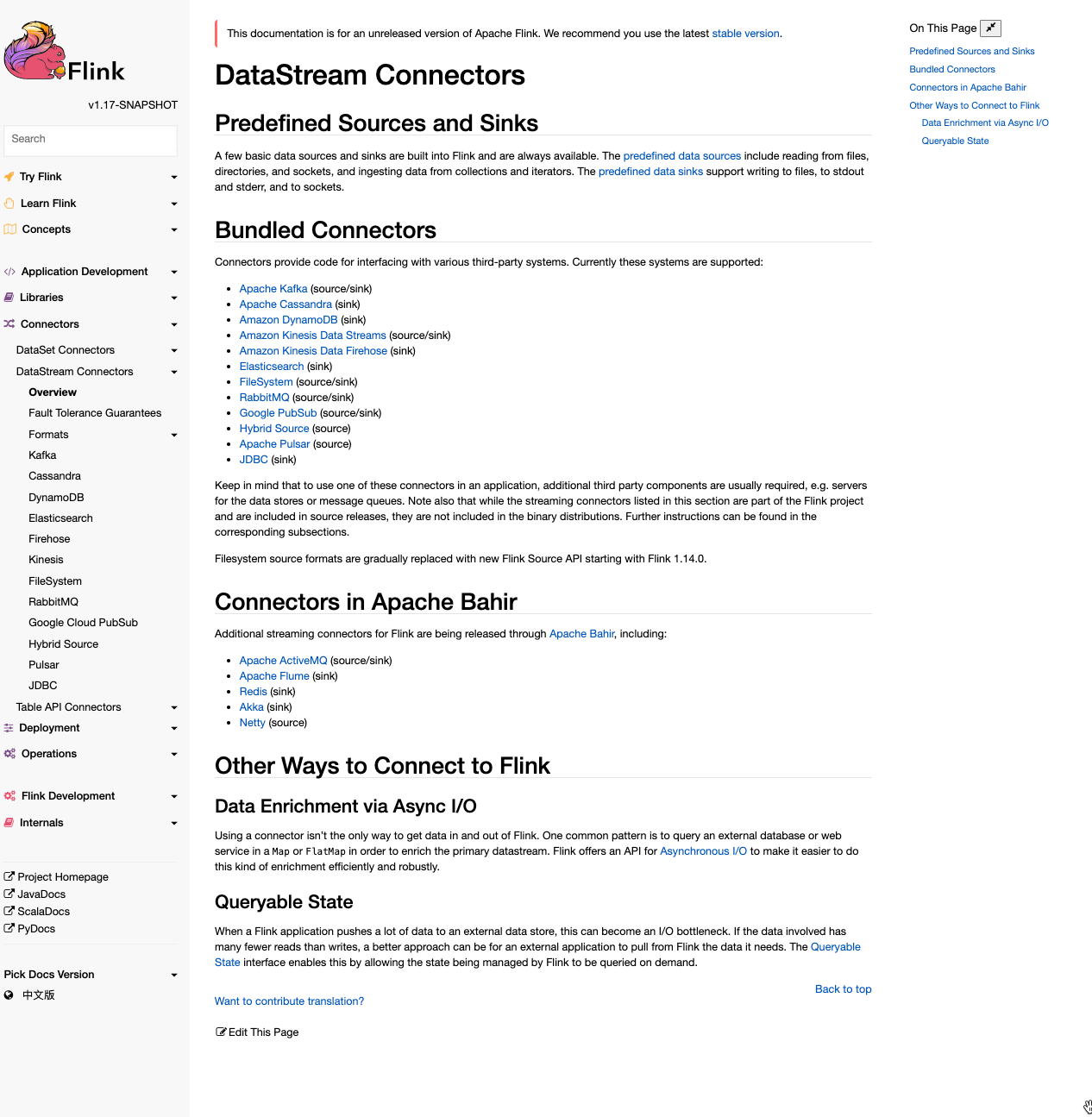

URL: https://github.com/apache/flink-connector-aws/pull/26#issuecomment-1328849223

Data Stream artifact renders as expected:

Flink 1.16

<img width="794" alt="DynamoDB___Apache_Flink" src="https://user-images.githubusercontent.com/4950503/204254587-f51783b0-5ab0-4ecc-8c30-08d60ba30e2f.png">

Flink 1.17

[GitHub] [flink-connector-aws] zentol commented on a diff in pull request #26: [FLINK-25859][Connectors/DynamoDB][docs] DynamoDB sink documentation

Posted by GitBox <gi...@apache.org>.

zentol commented on code in PR #26:

URL: https://github.com/apache/flink-connector-aws/pull/26#discussion_r1032447531

##########

docs/content.zh/docs/connectors/table/dynamodb.md:

##########

@@ -0,0 +1,306 @@

+---

+title: DynamoDB

+weight: 5

+type: docs

+aliases:

+- /zh/dev/table/connectors/dynamodb.html

+---

+

+<!--