Posted to commits@kyuubi.apache.org by ch...@apache.org on 2023/01/04 12:43:14 UTC

[kyuubi] branch master updated: [KYUUBI #3851][SPARK] Support auto set up `spark.master` when Kyuubi running inside Pod

This is an automated email from the ASF dual-hosted git repository.

chengpan pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/kyuubi.git

The following commit(s) were added to refs/heads/master by this push:

new 5fdd44fe6 [KYUUBI #3851][SPARK] Support auto set up `spark.master` when Kyuubi running inside Pod

5fdd44fe6 is described below

commit 5fdd44fe6b20ab1a4a85b80156bbb8d0bb14d214

Author: xuefeimiaoao <12...@qq.com>

AuthorDate: Wed Jan 4 20:42:28 2023 +0800

[KYUUBI #3851][SPARK] Support auto set up `spark.master` when Kyuubi running inside Pod

### _Why are the changes needed?_

Closes https://github.com/apache/incubator-kyuubi/issues/3851.

With this change, Spark can be deployed on Kubernetes without configuring `spark.master` explicitly when Kyuubi runs inside a Pod. This also helps when the API server URL is not exposed to us.
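
For illustration only, the derivation can be sketched as a pure function. `MasterUrlSketch` and `inferMaster` are hypothetical names that are not part of Kyuubi, and the environment is passed in as a plain `Map` rather than read from `sys.env`, which is an assumption made to keep the sketch testable. The patch itself sets the value on a `KyuubiConf` instead of returning it.

```scala
// Hypothetical helper, not part of the Kyuubi code base.
object MasterUrlSketch {

  /**
   * Returns the explicitly configured master if there is one. Otherwise,
   * builds a `k8s://https://host:port` URL when both Kubernetes service
   * variables are present in `env`, and returns None when either is missing.
   */
  def inferMaster(
      configuredMaster: Option[String],
      env: Map[String, String]): Option[String] = {
    configuredMaster.orElse {
      for {
        host <- env.get("KUBERNETES_SERVICE_HOST")
        port <- env.get("KUBERNETES_SERVICE_PORT")
      } yield s"k8s://https://$host:$port"
    }
  }
}
```

An explicitly configured master always wins in this sketch, which mirrors the patch only filling in `spark.master` when it is absent.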

### _How was this patch tested?_

- [ ] Add some test cases that check the changes thoroughly including negative and positive cases if possible

- [x] Add screenshots for manual tests if appropriate

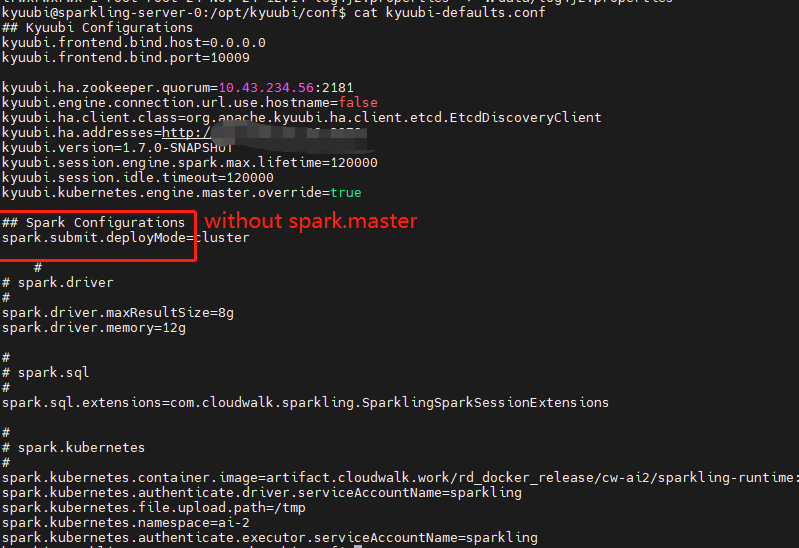

We do not set `spark.master`:

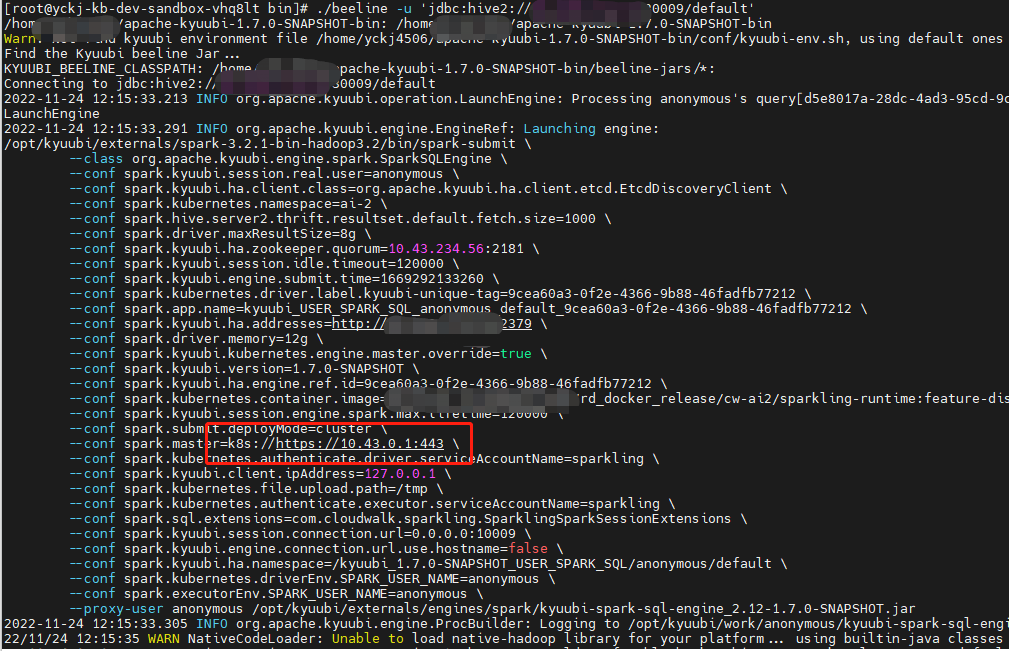

Kyuubi adds `spark.master` when `kyuubi.kubernetes.engine.master.override` is set to true.

- [ ] [Run test](https://kyuubi.apache.org/docs/latest/develop_tools/testing.html#running-tests) locally before making a pull request

Closes #3852 from xuefeimiaoao/feature/override_spark_master_with_pod_env.

Closes #3851

f3c126d8 [xuefeimiaoao] [KYUUBI #3851] [Improvement] Support AutoComplete of `spark.master` with kubernetes environment

a6395631 [xuefeimiaoao] [KYUUBI #4058] [IT][Test][K8S] Fix the missing of connectionConf of SparkQueryTests

Authored-by: xuefeimiaoao <12...@qq.com>

Signed-off-by: Cheng Pan <ch...@apache.org>

---

.../engine/KubernetesApplicationOperation.scala | 2 ++

.../org/apache/kyuubi/engine/ProcBuilder.scala | 8 ++++++

.../engine/spark/SparkBatchProcessBuilder.scala | 2 ++

.../kyuubi/engine/spark/SparkProcessBuilder.scala | 30 ++++++++++++++++++++++

4 files changed, 42 insertions(+)

diff --git a/kyuubi-server/src/main/scala/org/apache/kyuubi/engine/KubernetesApplicationOperation.scala b/kyuubi-server/src/main/scala/org/apache/kyuubi/engine/KubernetesApplicationOperation.scala

index 81e353b84..bee69b117 100644

--- a/kyuubi-server/src/main/scala/org/apache/kyuubi/engine/KubernetesApplicationOperation.scala

+++ b/kyuubi-server/src/main/scala/org/apache/kyuubi/engine/KubernetesApplicationOperation.scala

@@ -138,6 +138,8 @@ class KubernetesApplicationOperation extends ApplicationOperation with Logging {

object KubernetesApplicationOperation extends Logging {

val LABEL_KYUUBI_UNIQUE_KEY = "kyuubi-unique-tag"

val SPARK_APP_ID_LABEL = "spark-app-selector"

+ val KUBERNETES_SERVICE_HOST = "KUBERNETES_SERVICE_HOST"

+ val KUBERNETES_SERVICE_PORT = "KUBERNETES_SERVICE_PORT"

def toApplicationState(state: String): ApplicationState = state match {

// https://github.com/kubernetes/kubernetes/blob/master/pkg/apis/core/types.go#L2396

diff --git a/kyuubi-server/src/main/scala/org/apache/kyuubi/engine/ProcBuilder.scala b/kyuubi-server/src/main/scala/org/apache/kyuubi/engine/ProcBuilder.scala

index 7dc295ce5..5b69b02f5 100644

--- a/kyuubi-server/src/main/scala/org/apache/kyuubi/engine/ProcBuilder.scala

+++ b/kyuubi-server/src/main/scala/org/apache/kyuubi/engine/ProcBuilder.scala

@@ -106,6 +106,14 @@ trait ProcBuilder {

protected val extraEngineLog: Option[OperationLog]

+ /**

+ * Add `engine.master` if KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT

+ * are defined. So we can deploy engine on kubernetes without setting `engine.master`

+ * explicitly when kyuubi-servers are on kubernetes, which also helps in case that

+ * api-server is not exposed to us.

+ */

+ protected def completeMasterUrl(conf: KyuubiConf) = {}

+

protected val workingDir: Path = {

env.get("KYUUBI_WORK_DIR_ROOT").map { root =>

val workingRoot = Paths.get(root).toAbsolutePath

diff --git a/kyuubi-server/src/main/scala/org/apache/kyuubi/engine/spark/SparkBatchProcessBuilder.scala b/kyuubi-server/src/main/scala/org/apache/kyuubi/engine/spark/SparkBatchProcessBuilder.scala

index e7de6baa4..98f9ea5a3 100644

--- a/kyuubi-server/src/main/scala/org/apache/kyuubi/engine/spark/SparkBatchProcessBuilder.scala

+++ b/kyuubi-server/src/main/scala/org/apache/kyuubi/engine/spark/SparkBatchProcessBuilder.scala

@@ -45,6 +45,8 @@ class SparkBatchProcessBuilder(

}

val batchKyuubiConf = new KyuubiConf(false)

+ // complete `spark.master` if absent on kubernetes

+ completeMasterUrl(batchKyuubiConf)

batchConf.foreach(entry => { batchKyuubiConf.set(entry._1, entry._2) })

// tag batch application

KyuubiApplicationManager.tagApplication(batchId, "spark", clusterManager(), batchKyuubiConf)

diff --git a/kyuubi-server/src/main/scala/org/apache/kyuubi/engine/spark/SparkProcessBuilder.scala b/kyuubi-server/src/main/scala/org/apache/kyuubi/engine/spark/SparkProcessBuilder.scala

index 41fd9b2ad..874a36c00 100644

--- a/kyuubi-server/src/main/scala/org/apache/kyuubi/engine/spark/SparkProcessBuilder.scala

+++ b/kyuubi-server/src/main/scala/org/apache/kyuubi/engine/spark/SparkProcessBuilder.scala

@@ -28,6 +28,7 @@ import org.apache.hadoop.security.UserGroupInformation

import org.apache.kyuubi._

import org.apache.kyuubi.config.KyuubiConf

import org.apache.kyuubi.engine.{KyuubiApplicationManager, ProcBuilder}

+import org.apache.kyuubi.engine.KubernetesApplicationOperation.{KUBERNETES_SERVICE_HOST, KUBERNETES_SERVICE_PORT}

import org.apache.kyuubi.ha.HighAvailabilityConf

import org.apache.kyuubi.ha.client.AuthTypes

import org.apache.kyuubi.operation.log.OperationLog

@@ -55,6 +56,32 @@ class SparkProcessBuilder(

override def mainClass: String = "org.apache.kyuubi.engine.spark.SparkSQLEngine"

+ /**

+ * Add `spark.master` if KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT

+ * are defined. So we can deploy spark on kubernetes without setting `spark.master`

+ * explicitly when kyuubi-servers are on kubernetes, which also helps in case that

+ * api-server is not exposed to us.

+ */

+ override protected def completeMasterUrl(conf: KyuubiConf): Unit = {

+ try {

+ (

+ clusterManager(),

+ sys.env.get(KUBERNETES_SERVICE_HOST),

+ sys.env.get(KUBERNETES_SERVICE_PORT)) match {

+ case (None, Some(kubernetesServiceHost), Some(kubernetesServicePort)) =>

+ // According to "https://kubernetes.io/docs/concepts/architecture/control-plane-

+ // node-communication/#node-to-control-plane", the API server is configured to listen

+ // for remote connections on a secure HTTPS port (typically 443), so we set https here.

+ val masterURL = s"k8s://https://${kubernetesServiceHost}:${kubernetesServicePort}"

+ conf.set(MASTER_KEY, masterURL)

+ case _ =>

+ }

+ } catch {

+ case e: Exception =>

+ warn("Failed when setting up spark.master with kubernetes environment automatically.", e)

+ }

+ }

+

/**

* Converts kyuubi config key so that Spark could identify.

* - If the key is start with `spark.`, keep it AS IS as it is a Spark Conf

@@ -72,6 +99,9 @@ class SparkProcessBuilder(

}

override protected val commands: Array[String] = {

+ // complete `spark.master` if absent on kubernetes

+ completeMasterUrl(conf)

+

KyuubiApplicationManager.tagApplication(engineRefId, shortName, clusterManager(), conf)

val buffer = new ArrayBuffer[String]()

buffer += executable