You are viewing a plain text version of this content. The canonical link for it is here.

Posted to commits@spark.apache.org by sr...@apache.org on 2023/03/02 14:43:51 UTC

[spark] branch master updated: [SPARK-42622][CORE] Disable substitution in values

This is an automated email from the ASF dual-hosted git repository.

srowen pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 258746f7f89 [SPARK-42622][CORE] Disable substitution in values

258746f7f89 is described below

commit 258746f7f89352266e4ac367e29aac9fe542dd15

Author: Jelmer Kuperus <je...@miro.com>

AuthorDate: Thu Mar 2 08:43:32 2023 -0600

[SPARK-42622][CORE] Disable substitution in values

https://issues.apache.org/jira/browse/SPARK-42622

### What changes were proposed in this pull request?

Disable substitution in values for the `StringSubstitutor` used to resolve error messages

### Why are the changes needed?

when constructing an error message `ErrorClasssesJSONReader`

1. reads the error message from core/src/main/resources/error/error-classes.json

2. replaces `<fieldValue>` with `${fieldValue}` in the error message it read

3. uses `StringSubstitutor` to replace `${fieldValue}` with the actual value

If `fieldValue` is defined as `"${foo}"` then it will also try and resolve foo.

When foo is undefined it will throw an IllegalArgumentException

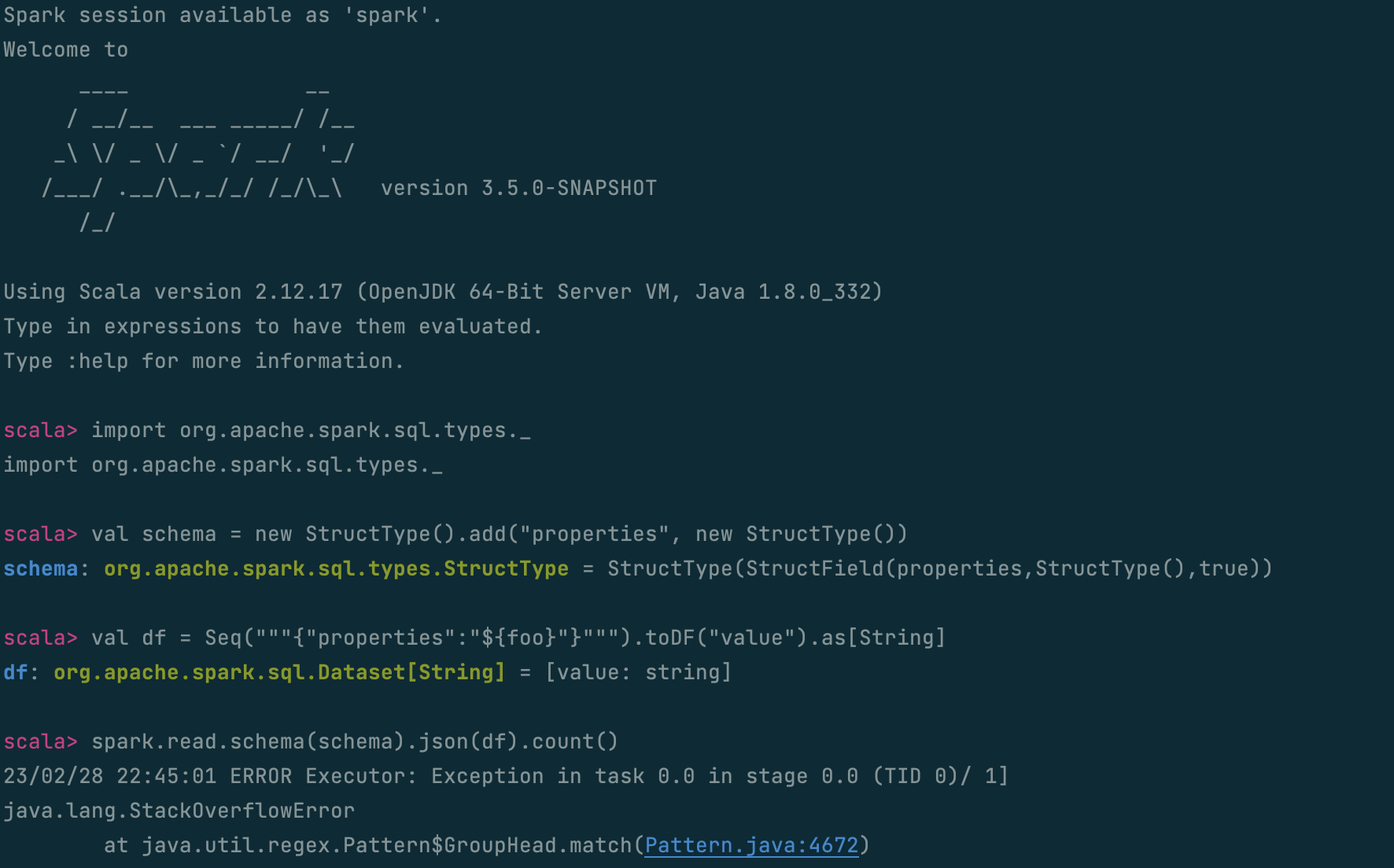

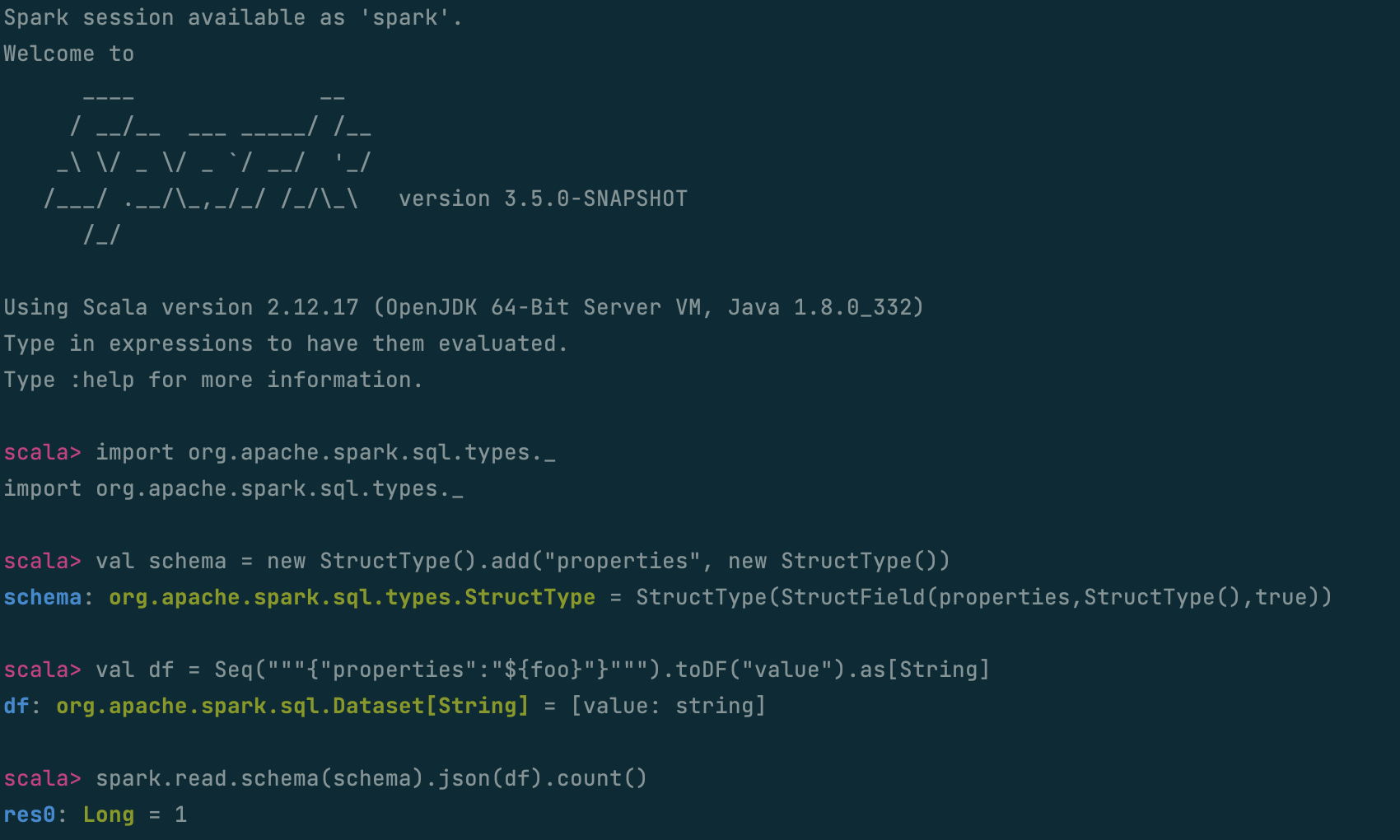

This is very problematic instance in this scenario. Where parsing json will fail if it does not adhere to the correct schema

Here the error message produced will be something like

`Cannot parse the field name properties and the value ${foo} of the JSON token type string to target Spark data type struct.`

And because foo is undefined another error will be triggered, and another, and another.. until you have a stackoverflow.

It could be employed as a denial of service attack on data pipelines

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Locally

Before

After

Closes #40219 from jelmerk/jelmer/no_string_substitutor.

Authored-by: Jelmer Kuperus <je...@miro.com>

Signed-off-by: Sean Owen <sr...@gmail.com>

---

.../main/scala/org/apache/spark/ErrorClassesJSONReader.scala | 1 +

.../src/test/scala/org/apache/spark/SparkThrowableSuite.scala | 11 +++++++++++

2 files changed, 12 insertions(+)

diff --git a/core/src/main/scala/org/apache/spark/ErrorClassesJSONReader.scala b/core/src/main/scala/org/apache/spark/ErrorClassesJSONReader.scala

index 34dd13addff..ca7b9cc1bd6 100644

--- a/core/src/main/scala/org/apache/spark/ErrorClassesJSONReader.scala

+++ b/core/src/main/scala/org/apache/spark/ErrorClassesJSONReader.scala

@@ -47,6 +47,7 @@ class ErrorClassesJsonReader(jsonFileURLs: Seq[URL]) {

val messageTemplate = getMessageTemplate(errorClass)

val sub = new StringSubstitutor(messageParameters.asJava)

sub.setEnableUndefinedVariableException(true)

+ sub.setDisableSubstitutionInValues(true)

try {

sub.replace(messageTemplate.replaceAll("<([a-zA-Z0-9_-]+)>", "\\$\\{$1\\}"))

} catch {

diff --git a/core/src/test/scala/org/apache/spark/SparkThrowableSuite.scala b/core/src/test/scala/org/apache/spark/SparkThrowableSuite.scala

index c20c287c564..a8c56cf1460 100644

--- a/core/src/test/scala/org/apache/spark/SparkThrowableSuite.scala

+++ b/core/src/test/scala/org/apache/spark/SparkThrowableSuite.scala

@@ -210,6 +210,17 @@ class SparkThrowableSuite extends SparkFunSuite {

)

}

+ test("Error message does not do substitution on values") {

+ assert(

+ getMessage(

+ "UNRESOLVED_COLUMN.WITH_SUGGESTION",

+ Map("objectName" -> "`foo`", "proposal" -> "`${bar}`, `baz`")

+ ) ==

+ "[UNRESOLVED_COLUMN.WITH_SUGGESTION] A column or function parameter with " +

+ "name `foo` cannot be resolved. Did you mean one of the following? [`${bar}`, `baz`]."

+ )

+ }

+

test("Try catching legacy SparkError") {

try {

throw new SparkException("Arbitrary legacy message")

---------------------------------------------------------------------

To unsubscribe, e-mail: commits-unsubscribe@spark.apache.org

For additional commands, e-mail: commits-help@spark.apache.org