Posted to commits@airflow.apache.org by GitBox <gi...@apache.org> on 2021/01/30 00:37:56 UTC

[GitHub] [airflow] RNHTTR opened a new pull request #13982: Add gdrive_to_gcs operator, drive sensor, additional functionality to…

RNHTTR opened a new pull request #13982:

URL: https://github.com/apache/airflow/pull/13982

… drive hook, and supporting tests

closes: #13966

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] RNHTTR commented on a change in pull request #13982: Add gdrive_to_gcs operator, drive sensor, additional functionality to…

Posted by GitBox <gi...@apache.org>.

RNHTTR commented on a change in pull request #13982:

URL: https://github.com/apache/airflow/pull/13982#discussion_r567499142

##########

File path: airflow/providers/google/cloud/transfers/gdrive_to_gcs.py

##########

@@ -0,0 +1,134 @@
+# Licensed to the Apache Software Foundation (ASF) under one
+# or more contributor license agreements. See the NOTICE file
+# distributed with this work for additional information
+# regarding copyright ownership. The ASF licenses this file
+# to you under the Apache License, Version 2.0 (the
+# "License"); you may not use this file except in compliance
+# with the License. You may obtain a copy of the License at
+#
+#   http://www.apache.org/licenses/LICENSE-2.0
+#
+# Unless required by applicable law or agreed to in writing,
+# software distributed under the License is distributed on an
+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
+# KIND, either express or implied. See the License for the
+# specific language governing permissions and limitations
+# under the License.
+
+from io import BytesIO
+from typing import Optional, Sequence, Union
+
+from airflow.exceptions import AirflowException
+from airflow.models import BaseOperator
+from airflow.providers.google.cloud.hooks.gcs import GCSHook
+from airflow.providers.google.suite.hooks.drive import GoogleDriveHook
+from airflow.utils.decorators import apply_defaults
+
+
+class GoogleDriveToGCSOperator(BaseOperator):
+    """
+    Writes a Google Drive file into Google Cloud Storage.
+
+    .. seealso::
+        For more information on how to use this operator, take a look at the guide:
+        :ref:`howto/operator:GoogleDriveToGCSOperator` FIXME
+
+    :param destination_bucket: The destination Google cloud storage bucket where the
+        file should be written to. (templated)
+    :type destination_bucket: str
+    :param destination_object: The Google Cloud Storage object name for the object created by the operator.
+        For example: ``path/to/my/file/file.txt``.
+    :type destination_object: str
+    :param folder_id: The folder id of the folder in which the Google Drive file resides
+    :type folder_id: str
+    :param file_name: The name of the file residing in Google Drive
+    :type file_name: str
+    :param gcp_conn_id: The GCP connection ID to use when fetching connection info.
+    :type gcp_conn_id: str
+    :param delegate_to: The account to impersonate using domain-wide delegation of authority,
+        if any. For this to work, the service account making the request must have
+        domain-wide delegation enabled.
+    :type delegate_to: str
+    :param impersonation_chain: Optional service account to impersonate using short-term
+        credentials, or chained list of accounts required to get the access_token
+        of the last account in the list, which will be impersonated in the request.
+        If set as a string, the account must grant the originating account
+        the Service Account Token Creator IAM role.
+        If set as a sequence, the identities from the list must grant
+        Service Account Token Creator IAM role to the directly preceding identity, with first
+        account from the list granting this role to the originating account (templated).
+    :type impersonation_chain: Union[str, Sequence[str]]
+    """
+
+    template_fields = [
+        "destination_bucket",
+        "destination_object",
+        "folder_id",
+        "file_name",
+        "impersonation_chain",
+    ]
+
+    @apply_defaults
+    def __init__(
+        self,
+        *,
+        destination_bucket: str,
+        destination_object: str,
+        folder_id: Optional[str] = None,
+        file_name: Optional[str] = None,
+        gcp_conn_id: str = "google_cloud_default",
+        delegate_to: Optional[str] = None,
+        impersonation_chain: Optional[Union[str, Sequence[str]]] = None,
+        **kwargs,
+    ) -> None:
+        if folder_id and not file_name:
+            raise AirflowException("Both folder_id and file_name must be set. Received only folder_id")

Review comment:

I might've overlooked the `driveId` param in the files.list method. I don't have a Google Workspace account, so I can't test this without access to a shared drive. Do you know how I can get access to one?

I think this would only slightly change the implementation -- ~`folder_id` would be optional, the files.list `query` param would be dynamic based on the presence or absence of `folder_id`, and~ `drive_id` would be added as an optional init param.

`folder_id` should remain required. Imagine a scenario where a user has an arbitrary number of folders, and each folder contains a file `abc.csv`. If `folder_id` is optional, the file that gets returned would be ambiguous (or at least confusing -- the documentation doesn't make clear how the list of files is ordered in the response to `files.list()`)

[GitHub] [airflow] RNHTTR commented on pull request #13982: Add gdrive_to_gcs operator, drive sensor, additional functionality to…

Posted by GitBox <gi...@apache.org>.

RNHTTR commented on pull request #13982:

URL: https://github.com/apache/airflow/pull/13982#issuecomment-772200828

```
#################### Docs build errors summary ####################
==================== apache-airflow-providers-google ====================
-------------------- Error 1 --------------------
/opt/airflow/docs/apache-airflow-providers-google/_api/drive/index.rst: WARNING: document isn't included in any toctree
-------------------- Error 2 --------------------
WARNING: undefined label: howto/operator:googledrivetogcsoperator

File path: apache-airflow-providers-google/_api/airflow/providers/google/cloud/transfers/gdrive_to_gcs/index.rst (17)

  12 | Bases: :class:`airflow.models.BaseOperator`
  13 |
  14 | Writes a Google Drive file into Google Cloud Storage.
  15 |
  16 | .. seealso::
> 17 |     For more information on how to use this operator, take a look at the guide:
  18 |     :ref:`howto/operator:GoogleDriveToGCSOperator`
  19 |
  20 | :param destination_bucket: The destination Google cloud storage bucket where the
  21 |     file should be written to
  22 | :type destination_bucket: str
##################################################

#################### Spelling errors summary ####################
==================== apache-airflow-providers-google ====================
-------------------- Error 1 --------------------
Sphinx spellcheck returned non-zero exit status: 2.
==================================================

If the spelling is correct, add the spelling to docs/spelling_wordlist.txt
or use the spelling directive.
Check https://sphinxcontrib-spelling.readthedocs.io/en/latest/customize.html#private-dictionaries
for more details.
##################################################
```

It seems the Google Drive sensor is being added to `/opt/airflow/docs/apache-airflow-providers-google/_api/drive/index.rst` instead of `/opt/airflow/docs/apache-airflow-providers-google/_api/airflow/providers/google/suite/sensors/index.rst` which doesn't seem to get created.

I have no idea what's happening with the spelling issue or the issue in the `GoogleDriveToGCSOperator` docstring. I copied it from the `GoogleSheetsToGCSOperator`, but I'm not really sure how reStructuredText works.
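For context on the `undefined label` warning: a `:ref:` role only resolves if some document in the build defines a matching `.. _label:` target, and Sphinx compares label names in lowercase (which is why the warning prints the name lowercased). The sketch below is a simplified model of that lookup, not Sphinx's actual implementation; the function names are made up for illustration.

```python
import re
from typing import Iterable, List, Set

# ``.. _some/label:`` on a line of its own defines an explicit target.
LABEL_RE = re.compile(r"^\.\. _(.+):[ \t]*$", re.MULTILINE)
# :ref:`target` or :ref:`Title <target>`
REF_RE = re.compile(r":ref:`([^`]+)`")


def defined_labels(sources: Iterable[str]) -> Set[str]:
    """Collect explicit label names (lowercased) from reST sources."""
    labels: Set[str] = set()
    for text in sources:
        labels.update(name.strip().lower() for name in LABEL_RE.findall(text))
    return labels


def referenced_labels(text: str) -> Set[str]:
    """Collect :ref: targets (lowercased) from a docstring or document."""
    targets: Set[str] = set()
    for body in REF_RE.findall(text):
        explicit = re.search(r"<([^>]+)>\s*$", body)
        targets.add((explicit.group(1) if explicit else body).strip().lower())
    return targets


def undefined_refs(text: str, sources: Iterable[str]) -> List[str]:
    """Return :ref: targets in ``text`` that no source defines."""
    return sorted(referenced_labels(text) - defined_labels(sources))
```

Under this model the docstring's `:ref:` stays undefined until a guide page contains a line such as `.. _howto/operator:GoogleDriveToGCSOperator:` above its heading, which is presumably what the `GoogleSheetsToGCSOperator` guide already does for its own label.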

[GitHub] [airflow] RNHTTR commented on pull request #13982: Add gdrive_to_gcs operator, drive sensor, additional functionality to…

Posted by GitBox <gi...@apache.org>.

RNHTTR commented on pull request #13982:

URL: https://github.com/apache/airflow/pull/13982#issuecomment-772172762

> @RNHTTR Where do you see this error? Do you build the documentation according to the instructions? https://github.com/apache/airflow/blob/master/docs/README.rst

I see it in every DAG documentation .rst file:

Thanks for the link to the documentation instructions. I tried `./breeze build-docs -- --package-filter apache-airflow-providers-google`, but this results in an error that I can't reconcile:

```
#################### Docs build errors summary ####################
==================== apache-airflow-providers-google ====================
-------------------- Error 1 --------------------
Sphinx returned non-zero exit status: -9.
##################################################

#################### Spelling errors summary ####################
==================== apache-airflow-providers-google ====================
-------------------- Error 1 --------------------
Sphinx spellcheck returned non-zero exit status: -9.
==================================================

If the spelling is correct, add the spelling to docs/spelling_wordlist.txt
or use the spelling directive.
Check https://sphinxcontrib-spelling.readthedocs.io/en/latest/customize.html#private-dictionaries
for more details.
##################################################

The documentation has errors.

#################### Packages errors summary ####################
Package name                      Count of doc build errors    Count of spelling errors
-------------------------------  ---------------------------  --------------------------
apache-airflow-providers-google                            1                           1
##################################################

If you need help, write to #documentation channel on Airflow's Slack.
Channel link: https://apache-airflow.slack.com/archives/CJ1LVREHX
Invitation link: https://s.apache.org/airflow-slack

###########################################################################################
EXIT CODE: 1 in container (See above for error message). Below is the output of the last action!
*** BEGINNING OF THE LAST COMMAND OUTPUT ***
*** END OF THE LAST COMMAND OUTPUT ***
EXIT CODE: 1 in container. The actual error might be above the output!
```
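A note on the `-9` status: assuming the docs builder reports child-process statuses the way Python's `subprocess` module does on POSIX, a negative value means the process was terminated by that signal number, so `-9` would be SIGKILL rather than an error raised by Sphinx itself. In a container this is often a sign of memory exhaustion, although nothing in the log above confirms that. A small sketch for decoding such statuses (the function name is hypothetical):

```python
import signal


def describe_exit_status(status: int) -> str:
    """Translate a subprocess-style return code into a readable message."""
    if status < 0:
        # Negative return codes mean "killed by signal -status" on POSIX.
        try:
            name = signal.Signals(-status).name
        except ValueError:
            name = f"signal {-status}"
        return f"terminated by {name}"
    if status == 0:
        return "exited successfully"
    return f"exited with error code {status}"
```

If the cause does turn out to be memory, giving the Docker engine more RAM before re-running the build would be the first thing to try.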

[GitHub] [airflow] boring-cyborg[bot] commented on pull request #13982: Add gdrive_to_gcs operator, drive sensor, additional functionality to…

Posted by GitBox <gi...@apache.org>.

boring-cyborg[bot] commented on pull request #13982:

URL: https://github.com/apache/airflow/pull/13982#issuecomment-774730636

Awesome work, congrats on your first merged pull request!

[GitHub] [airflow] RNHTTR commented on a change in pull request #13982: Add gdrive_to_gcs operator, drive sensor, additional functionality to…

Posted by GitBox <gi...@apache.org>.

RNHTTR commented on a change in pull request #13982:

URL: https://github.com/apache/airflow/pull/13982#discussion_r567499142

##########

File path: airflow/providers/google/cloud/transfers/gdrive_to_gcs.py

##########

@@ -0,0 +1,134 @@

+ if folder_id and not file_name:

+ raise AirflowException("Both folder_id and file_name must be set. Received only folder_id")

Review comment:

I overlooked the `driveId` param in the files.list method for searching shared Drives. I don't have a Google Workspace account, so I can't test this without access to a shared Drive. Do you know how I can get access to one?

I think this would only slightly change the implementation -- ~`folder_id` would be optional, the files.list `query` param would be dynamic based on the presence or absence of `folder_id`, and~ `drive_id` would be added as an optional init param.

`folder_id` should remain required. Imagine a scenario where a user has an arbitrary number of folders, and each folder contains a file `abc.csv`. If `folder_id` is optional, the file that gets returned would be ambiguous (or at least confusing -- the documentation doesn't make clear how the list of files is ordered in the response to `files.list()`)

[GitHub] [airflow] mik-laj edited a comment on pull request #13982: Add gdrive_to_gcs operator, drive sensor, additional functionality to…

Posted by GitBox <gi...@apache.org>.

mik-laj edited a comment on pull request #13982:

URL: https://github.com/apache/airflow/pull/13982#issuecomment-772174700

I'm in bed, but...can you run ``./breeze build-docs -- --package-filter apache-airflow-providers-google -v`` for more verbose logs?

[GitHub] [airflow] RNHTTR commented on a change in pull request #13982: Add gdrive_to_gcs operator, drive sensor, additional functionality to…

Posted by GitBox <gi...@apache.org>.

RNHTTR commented on a change in pull request #13982:

URL: https://github.com/apache/airflow/pull/13982#discussion_r567497681

##########

File path: airflow/providers/google/cloud/transfers/gdrive_to_gcs.py

##########

@@ -0,0 +1,134 @@

+ if folder_id and not file_name:

+ raise AirflowException("Both folder_id and file_name must be set. Received only folder_id")

Review comment:

I'll try to verify that service accounts can't access shared drives...

[GitHub] [airflow] potiuk merged pull request #13982: Add gdrive_to_gcs operator, drive sensor, additional functionality to…

Posted by GitBox <gi...@apache.org>.

potiuk merged pull request #13982:

URL: https://github.com/apache/airflow/pull/13982

[GitHub] [airflow] RNHTTR commented on a change in pull request #13982: Add gdrive_to_gcs operator, drive sensor, additional functionality to…

Posted by GitBox <gi...@apache.org>.

RNHTTR commented on a change in pull request #13982:

URL: https://github.com/apache/airflow/pull/13982#discussion_r567496492

##########

File path: airflow/providers/google/cloud/transfers/gdrive_to_gcs.py

##########

@@ -0,0 +1,134 @@

+ if folder_id and not file_name:

+ raise AirflowException("Both folder_id and file_name must be set. Received only folder_id")

+ if not folder_id and file_name:

+ raise AirflowException("Both folder_id and file_name must be set. Received only file_name")

+ if not folder_id and not file_name:

+ raise AirflowException("Both folder_id and file_name must be set. Received neither.")

Review comment:

Good point
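For reference, the three near-identical checks in the hunk above could be collapsed. The sketch below assumes both values are meant to be mandatory; it uses a plain stand-in class (`DriveFileSpec`, a hypothetical name) and `ValueError` instead of the operator and `AirflowException` so that it runs without Airflow installed.

```python
from typing import Optional


class DriveFileSpec:
    """Hypothetical stand-in for the operator's argument handling."""

    def __init__(
        self,
        *,
        folder_id: str,
        file_name: str,
        drive_id: Optional[str] = None,
    ) -> None:
        # Required keyword-only parameters already reject omitted
        # arguments (TypeError); this check covers empty values.
        missing = [
            name
            for name, value in (("folder_id", folder_id), ("file_name", file_name))
            if not value
        ]
        if missing:
            raise ValueError(
                "Both folder_id and file_name must be set. "
                f"Missing: {', '.join(missing)}"
            )
        self.folder_id = folder_id
        self.file_name = file_name
        self.drive_id = drive_id
```

Dropping the `Optional[...] = None` defaults lets Python report an omitted argument by itself, and a single loop handles the remaining "empty string" cases with one message.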

[GitHub] [airflow] RNHTTR commented on a change in pull request #13982: Add gdrive_to_gcs operator, drive sensor, additional functionality to…

Posted by GitBox <gi...@apache.org>.

RNHTTR commented on a change in pull request #13982:

URL: https://github.com/apache/airflow/pull/13982#discussion_r567499142

##########

File path: airflow/providers/google/cloud/transfers/gdrive_to_gcs.py

##########

@@ -0,0 +1,134 @@

+ if folder_id and not file_name:

+ raise AirflowException("Both folder_id and file_name must be set. Received only folder_id")

Review comment:

I overlooked the `driveId` param in the files.list method for searching shared Drives. ~I don't have a Google Workspace account, so I can't test this without access to a shared Drive. Do you know how I can get access to one?~ Resolved

I think this would only slightly change the implementation -- ~`folder_id` would be optional, the files.list `query` param would be dynamic based on the presence or absence of `folder_id`, and~ `drive_id` would be added as an optional init param.

`folder_id` should remain required. Imagine a scenario where a user has an arbitrary number of folders, and each folder contains a file `abc.csv`. If `folder_id` is optional, the file that gets returned would be ambiguous (or at least confusing -- the documentation doesn't make clear how the list of files is ordered in the response to `files.list()`)
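
To make the proposal above concrete, here is a minimal sketch of how the `files.list` arguments could be assembled when `folder_id` and `file_name` are required and `drive_id` is optional. The helper name `build_files_list_params` is hypothetical and is not part of this PR; the keyword names (`q`, `driveId`, `corpora`, `includeItemsFromAllDrives`, `supportsAllDrives`) are the Drive API v3 `files.list` parameters, and the escaping rule is my reading of the Drive query syntax, so treat it as an assumption.

```python
from typing import Any, Dict, Optional


def build_files_list_params(
    folder_id: str, file_name: str, drive_id: Optional[str] = None
) -> Dict[str, Any]:
    """Hypothetical helper: build keyword arguments for a Drive v3 ``files.list`` call."""

    def escape(value: str) -> str:
        # Assumption: backslashes and single quotes must be escaped inside quoted query terms.
        return value.replace("\\", "\\\\").replace("'", "\\'")

    # Restricting the search to one parent folder avoids the ambiguity of
    # several folders each containing a file with the same name.
    query = (
        f"'{escape(folder_id)}' in parents "
        f"and name = '{escape(file_name)}' "
        "and trashed = false"
    )
    params: Dict[str, Any] = {"q": query, "fields": "files(id, name, mimeType)"}

    if drive_id:
        # Searching a shared drive requires these flags alongside driveId.
        params.update(
            driveId=drive_id,
            corpora="drive",
            includeItemsFromAllDrives=True,
            supportsAllDrives=True,
        )
    return params
```

The resulting dict could then be passed as `service.files().list(**params)`; when `drive_id` is omitted, only the user's own Drive is searched.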


[GitHub] [airflow] RNHTTR commented on pull request #13982: Add gdrive_to_gcs operator, drive sensor, additional functionality to…

Posted by GitBox <gi...@apache.org>.

RNHTTR commented on pull request #13982:

URL: https://github.com/apache/airflow/pull/13982#issuecomment-771784101

@potiuk I've locally resolved the integration test issues, but I have a question on the failing docs tests.

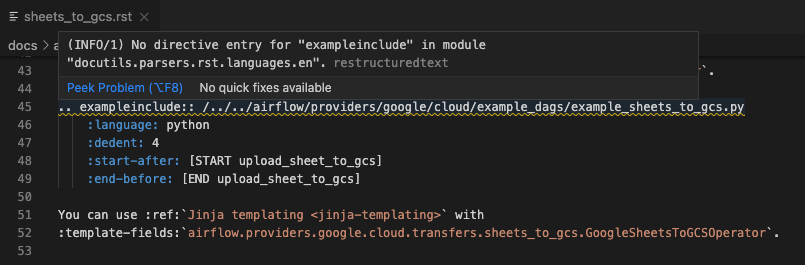

Every provider operator documentation page I've looked at shows the linting error `No directive entry for "exampleinclude" in module "docutils.parsers.rst.languages.en".restructuredtext`, even though I can see the directive defined in /docs/exts/exampleinclude.py. It might be something on my end (a wonky installation or otherwise), but the docs don't look how I imagine they're supposed to look:

<img width="1035" alt="image" src="https://user-images.githubusercontent.com/25823361/106633550-f0fd8b00-654c-11eb-91e4-9e7fa1989663.png">

This example was pulled from the [gcs_to_gcs documentation](https://github.com/apache/airflow/blob/master/docs/apache-airflow-providers-google/operators/transfer/gcs_to_gcs.rst). Isn't this supposed to show a snippet of the operator being used? All of the documentation examples show rst source code rather than actual sample implementations of plugins.

Thoughts?


[GitHub] [airflow] mik-laj commented on pull request #13982: Add gdrive_to_gcs operator, drive sensor, additional functionality to…

Posted by GitBox <gi...@apache.org>.

mik-laj commented on pull request #13982:

URL: https://github.com/apache/airflow/pull/13982#issuecomment-772069045

@RNHTTR Where do you see this error? Do you build the documentation according to the instructions? https://github.com/apache/airflow/blob/master/docs/README.rst


[GitHub] [airflow] potiuk commented on pull request #13982: Add gdrive_to_gcs operator, drive sensor, additional functionality to…

Posted by GitBox <gi...@apache.org>.

potiuk commented on pull request #13982:

URL: https://github.com/apache/airflow/pull/13982#issuecomment-774730800

Thanks @RNHTTR! Good job - sorry for the small delays with the review. What's next on your list :)?


[GitHub] [airflow] RNHTTR commented on a change in pull request #13982: Add gdrive_to_gcs operator, drive sensor, additional functionality to…

Posted by GitBox <gi...@apache.org>.

RNHTTR commented on a change in pull request #13982:

URL: https://github.com/apache/airflow/pull/13982#discussion_r568280170

##########

File path: airflow/providers/google/cloud/transfers/gdrive_to_gcs.py

##########

@@ -0,0 +1,134 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+from io import BytesIO

+from typing import Optional, Sequence, Union

+

+from airflow.exceptions import AirflowException

+from airflow.models import BaseOperator

+from airflow.providers.google.cloud.hooks.gcs import GCSHook

+from airflow.providers.google.suite.hooks.drive import GoogleDriveHook

+from airflow.utils.decorators import apply_defaults

+

+

+class GoogleDriveToGCSOperator(BaseOperator):

+ """

+ Writes a Google Drive file into Google Cloud Storage.

+

+ .. seealso::

+ For more information on how to use this operator, take a look at the guide:

+ :ref:`howto/operator:GoogleDriveToGCSOperator` FIXME

+

+ :param destination_bucket: The destination Google Cloud Storage bucket where the

+ file should be written. (templated)

+ :type destination_bucket: str

+ :param destination_object: The Google Cloud Storage object name for the object created by the operator.

+ For example: ``path/to/my/file/file.txt``.

+ :type destination_object: str

+ :param folder_id: The folder id of the folder in which the Google Drive file resides

+ :type folder_id: str

+ :param file_name: The name of the file residing in Google Drive

+ :type file_name: str

+ :param gcp_conn_id: The GCP connection ID to use when fetching connection info.

+ :type gcp_conn_id: str

+ :param delegate_to: The account to impersonate using domain-wide delegation of authority,

+ if any. For this to work, the service account making the request must have

+ domain-wide delegation enabled.

+ :type delegate_to: str

+ :param impersonation_chain: Optional service account to impersonate using short-term

+ credentials, or chained list of accounts required to get the access_token

+ of the last account in the list, which will be impersonated in the request.

+ If set as a string, the account must grant the originating account

+ the Service Account Token Creator IAM role.

+ If set as a sequence, the identities from the list must grant

+ Service Account Token Creator IAM role to the directly preceding identity, with first

+ account from the list granting this role to the originating account (templated).

+ :type impersonation_chain: Union[str, Sequence[str]]

+ """

+

+ template_fields = [

+ "destination_bucket",

+ "destination_object",

+ "folder_id",

+ "file_name",

+ "impersonation_chain",

+ ]

+

+ @apply_defaults

+ def __init__(

+ self,

+ *,

+ destination_bucket: str,

+ destination_object: str,

+ folder_id: Optional[str] = None,

+ file_name: Optional[str] = None,

+ gcp_conn_id: str = "google_cloud_default",

+ delegate_to: Optional[str] = None,

+ impersonation_chain: Optional[Union[str, Sequence[str]]] = None,

+ **kwargs,

+ ) -> None:

+ if folder_id and not file_name:

+ raise AirflowException("Both folder_id and file_name must be set. Received only folder_id")

Review comment:

I created a trial Google Workspace account to figure out the functionality. I plan to implement the strategy in my previous comment:

1. Retain `folder_id` as a required parameter to remove any ambiguity or confusion

2. Add `drive_id` as an optional parameter to allow users to search Shared Drives.

**NOTE:** It is possible to search for a file at the root of a shared drive. The user would just have to pass the shared drive's ID as both the `drive_id` and `folder_id` parameters. I'll be sure to call this out in the documentation.
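
As a rough illustration of the plan above, the sketch below shows how the three parameters might be validated and how the "root of a shared drive" case falls out of passing the same ID twice. The function name `resolve_search_scope` and the returned labels are hypothetical, chosen only for this example; they are not part of the operator.

```python
from typing import Optional


def resolve_search_scope(
    folder_id: str, file_name: str, drive_id: Optional[str] = None
) -> str:
    """Hypothetical check: classify where the file lookup would take place."""
    # folder_id and file_name stay required so the lookup is never ambiguous.
    if not folder_id or not file_name:
        raise ValueError("Both folder_id and file_name must be set.")

    # Without drive_id the search stays inside the user's own Drive.
    if drive_id is None:
        return "my_drive_folder"

    # A shared drive's root folder has the same ID as the shared drive itself.
    if folder_id == drive_id:
        return "shared_drive_root"

    return "shared_drive_folder"
```

For example, `resolve_search_scope("0AExampleDriveId", "abc.csv", drive_id="0AExampleDriveId")` would be classified as a lookup at the root of the shared drive (the ID shown is a made-up placeholder).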


[GitHub] [airflow] RNHTTR commented on a change in pull request #13982: Add gdrive_to_gcs operator, drive sensor, additional functionality to…

Posted by GitBox <gi...@apache.org>.

RNHTTR commented on a change in pull request #13982:

URL: https://github.com/apache/airflow/pull/13982#discussion_r567499142

##########

File path: airflow/providers/google/cloud/transfers/gdrive_to_gcs.py

##########

@@ -0,0 +1,134 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+from io import BytesIO

+from typing import Optional, Sequence, Union

+

+from airflow.exceptions import AirflowException

+from airflow.models import BaseOperator

+from airflow.providers.google.cloud.hooks.gcs import GCSHook

+from airflow.providers.google.suite.hooks.drive import GoogleDriveHook

+from airflow.utils.decorators import apply_defaults

+

+

+class GoogleDriveToGCSOperator(BaseOperator):

+ """

+ Writes a Google Drive file into Google Cloud Storage.

+

+ .. seealso::

+ For more information on how to use this operator, take a look at the guide:

+ :ref:`howto/operator:GoogleDriveToGCSOperator` FIXME

+

+ :param destination_bucket: The destination Google Cloud Storage bucket where the

+ file should be written. (templated)

+ :type destination_bucket: str

+ :param destination_object: The Google Cloud Storage object name for the object created by the operator.

+ For example: ``path/to/my/file/file.txt``.

+ :type destination_object: str

+ :param folder_id: The folder id of the folder in which the Google Drive file resides

+ :type folder_id: str

+ :param file_name: The name of the file residing in Google Drive

+ :type file_name: str

+ :param gcp_conn_id: The GCP connection ID to use when fetching connection info.

+ :type gcp_conn_id: str

+ :param delegate_to: The account to impersonate using domain-wide delegation of authority,

+ if any. For this to work, the service account making the request must have

+ domain-wide delegation enabled.

+ :type delegate_to: str

+ :param impersonation_chain: Optional service account to impersonate using short-term

+ credentials, or chained list of accounts required to get the access_token

+ of the last account in the list, which will be impersonated in the request.

+ If set as a string, the account must grant the originating account

+ the Service Account Token Creator IAM role.

+ If set as a sequence, the identities from the list must grant

+ Service Account Token Creator IAM role to the directly preceding identity, with first

+ account from the list granting this role to the originating account (templated).

+ :type impersonation_chain: Union[str, Sequence[str]]

+ """

+

+ template_fields = [

+ "destination_bucket",

+ "destination_object",

+ "folder_id",

+ "file_name",

+ "impersonation_chain",

+ ]

+

+ @apply_defaults

+ def __init__(

+ self,

+ *,

+ destination_bucket: str,

+ destination_object: str,

+ folder_id: Optional[str] = None,

+ file_name: Optional[str] = None,

+ gcp_conn_id: str = "google_cloud_default",

+ delegate_to: Optional[str] = None,

+ impersonation_chain: Optional[Union[str, Sequence[str]]] = None,

+ **kwargs,

+ ) -> None:

+ if folder_id and not file_name:

+ raise AirflowException("Both folder_id and file_name must be set. Received only folder_id")

Review comment:

I might've overlooked the `driveId` param in the files.list method. I don't have a Google Workspace account, so I can't test this without access to a shared drive. Do you know how I can get access to one?

I think this would only slightly change the implementation -- `folder_id` would be optional, the files.list `query` param would be dynamic based on the presence or absence of `folder_id`, and `drive_id` would be an optional init param.


[GitHub] [airflow] RNHTTR commented on a change in pull request #13982: Add gdrive_to_gcs operator, drive sensor, additional functionality to…

Posted by GitBox <gi...@apache.org>.

RNHTTR commented on a change in pull request #13982:

URL: https://github.com/apache/airflow/pull/13982#discussion_r567499142

##########

File path: airflow/providers/google/cloud/transfers/gdrive_to_gcs.py

##########

@@ -0,0 +1,134 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+from io import BytesIO

+from typing import Optional, Sequence, Union

+

+from airflow.exceptions import AirflowException

+from airflow.models import BaseOperator

+from airflow.providers.google.cloud.hooks.gcs import GCSHook

+from airflow.providers.google.suite.hooks.drive import GoogleDriveHook

+from airflow.utils.decorators import apply_defaults

+

+

+class GoogleDriveToGCSOperator(BaseOperator):

+ """

+ Writes a Google Drive file into Google Cloud Storage.

+

+ .. seealso::

+ For more information on how to use this operator, take a look at the guide:

+ :ref:`howto/operator:GoogleDriveToGCSOperator` FIXME

+

+ :param destination_bucket: The destination Google Cloud Storage bucket where the

+ file should be written. (templated)

+ :type destination_bucket: str

+ :param destination_object: The Google Cloud Storage object name for the object created by the operator.

+ For example: ``path/to/my/file/file.txt``.

+ :type destination_object: str

+ :param folder_id: The folder id of the folder in which the Google Drive file resides

+ :type folder_id: str

+ :param file_name: The name of the file residing in Google Drive

+ :type file_name: str

+ :param gcp_conn_id: The GCP connection ID to use when fetching connection info.

+ :type gcp_conn_id: str

+ :param delegate_to: The account to impersonate using domain-wide delegation of authority,

+ if any. For this to work, the service account making the request must have

+ domain-wide delegation enabled.

+ :type delegate_to: str

+ :param impersonation_chain: Optional service account to impersonate using short-term

+ credentials, or chained list of accounts required to get the access_token

+ of the last account in the list, which will be impersonated in the request.

+ If set as a string, the account must grant the originating account

+ the Service Account Token Creator IAM role.

+ If set as a sequence, the identities from the list must grant

+ Service Account Token Creator IAM role to the directly preceding identity, with first

+ account from the list granting this role to the originating account (templated).

+ :type impersonation_chain: Union[str, Sequence[str]]

+ """

+

+ template_fields = [

+ "destination_bucket",

+ "destination_object",

+ "folder_id",

+ "file_name",

+ "impersonation_chain",

+ ]

+

+ @apply_defaults

+ def __init__(

+ self,

+ *,

+ destination_bucket: str,

+ destination_object: str,

+ folder_id: Optional[str] = None,

+ file_name: Optional[str] = None,

+ gcp_conn_id: str = "google_cloud_default",

+ delegate_to: Optional[str] = None,

+ impersonation_chain: Optional[Union[str, Sequence[str]]] = None,

+ **kwargs,

+ ) -> None:

+ if folder_id and not file_name:

+ raise AirflowException("Both folder_id and file_name must be set. Received only folder_id")

Review comment:

I might've overlooked the `driveId` param in the files.list method. I don't have a Google Workspace account, so I can't test this without access to a shared drive. Do you know how I can get access to one?

I think this would only slightly change the implementation -- ~`folder_id` would be optional, the files.list `query` param would be dynamic based on the presence or absence of `folder_id`, and~ `drive_id` would be added as an optional init param.

`folder_id` should remain required. Imagine a scenario where a user has an arbitrary number of folders, and each folder contains a file `abc.csv`. If `folder_id` is optional, the file that gets returned would be ambiguous (or at least confusing -- the documentation doesn't make clear how the list of files is ordered in the response to `files.list()`)

##########

File path: airflow/providers/google/cloud/transfers/gdrive_to_gcs.py

##########

@@ -0,0 +1,134 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+from io import BytesIO

+from typing import Optional, Sequence, Union

+

+from airflow.exceptions import AirflowException

+from airflow.models import BaseOperator

+from airflow.providers.google.cloud.hooks.gcs import GCSHook

+from airflow.providers.google.suite.hooks.drive import GoogleDriveHook

+from airflow.utils.decorators import apply_defaults

+

+

+class GoogleDriveToGCSOperator(BaseOperator):

+ """

+ Writes a Google Drive file into Google Cloud Storage.

+

+ .. seealso::

+ For more information on how to use this operator, take a look at the guide:

+ :ref:`howto/operator:GoogleDriveToGCSOperator` FIXME

+

+ :param destination_bucket: The destination Google Cloud Storage bucket where the

+ file should be written. (templated)

+ :type destination_bucket: str

+ :param destination_object: The Google Cloud Storage object name for the object created by the operator.

+ For example: ``path/to/my/file/file.txt``.

+ :type destination_object: str

+ :param folder_id: The folder id of the folder in which the Google Drive file resides

+ :type folder_id: str

+ :param file_name: The name of the file residing in Google Drive

+ :type file_name: str

+ :param gcp_conn_id: The GCP connection ID to use when fetching connection info.

+ :type gcp_conn_id: str

+ :param delegate_to: The account to impersonate using domain-wide delegation of authority,

+ if any. For this to work, the service account making the request must have

+ domain-wide delegation enabled.

+ :type delegate_to: str

+ :param impersonation_chain: Optional service account to impersonate using short-term

+ credentials, or chained list of accounts required to get the access_token

+ of the last account in the list, which will be impersonated in the request.

+ If set as a string, the account must grant the originating account

+ the Service Account Token Creator IAM role.

+ If set as a sequence, the identities from the list must grant

+ Service Account Token Creator IAM role to the directly preceding identity, with first

+ account from the list granting this role to the originating account (templated).

+ :type impersonation_chain: Union[str, Sequence[str]]

+ """

+

+ template_fields = [

+ "destination_bucket",

+ "destination_object",

+ "folder_id",

+ "file_name",

+ "impersonation_chain",

+ ]

+

+ @apply_defaults

+ def __init__(

+ self,

+ *,

+ destination_bucket: str,

+ destination_object: str,

+ folder_id: Optional[str] = None,

+ file_name: Optional[str] = None,

+ gcp_conn_id: str = "google_cloud_default",

+ delegate_to: Optional[str] = None,

+ impersonation_chain: Optional[Union[str, Sequence[str]]] = None,

+ **kwargs,

+ ) -> None:

+ if folder_id and not file_name:

+ raise AirflowException("Both folder_id and file_name must be set. Received only folder_id")

Review comment:

I created a trial Google Workspace account to figure out the functionality. I plan to implement the strategy in my previous comment:

1. Retain `folder_id` as a required parameter to remove any ambiguity or confusion

2. Add `drive_id` as an optional parameter to allow users to search Shared Drives.

**NOTE:** It is possible to search for a file at the root of a shared drive. The user would just have to pass the shared drive's ID as both the `drive_id` and `folder_id` parameters. I'll be sure to call this out in the documentation.

##########

File path: airflow/providers/google/cloud/transfers/gdrive_to_gcs.py

##########

@@ -0,0 +1,134 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+from io import BytesIO

+from typing import Optional, Sequence, Union

+

+from airflow.exceptions import AirflowException

+from airflow.models import BaseOperator

+from airflow.providers.google.cloud.hooks.gcs import GCSHook

+from airflow.providers.google.suite.hooks.drive import GoogleDriveHook

+from airflow.utils.decorators import apply_defaults

+

+

+class GoogleDriveToGCSOperator(BaseOperator):

+ """

+ Writes a Google Drive file into Google Cloud Storage.

+

+ .. seealso::

+ For more information on how to use this operator, take a look at the guide:

+ :ref:`howto/operator:GoogleDriveToGCSOperator` FIXME

+

+ :param destination_bucket: The destination Google Cloud Storage bucket where the

+ file should be written. (templated)

+ :type destination_bucket: str

+ :param destination_object: The Google Cloud Storage object name for the object created by the operator.

+ For example: ``path/to/my/file/file.txt``.

+ :type destination_object: str

+ :param folder_id: The folder id of the folder in which the Google Drive file resides

+ :type folder_id: str

+ :param file_name: The name of the file residing in Google Drive

+ :type file_name: str

+ :param gcp_conn_id: The GCP connection ID to use when fetching connection info.

+ :type gcp_conn_id: str

+ :param delegate_to: The account to impersonate using domain-wide delegation of authority,

+ if any. For this to work, the service account making the request must have

+ domain-wide delegation enabled.

+ :type delegate_to: str

+ :param impersonation_chain: Optional service account to impersonate using short-term

+ credentials, or chained list of accounts required to get the access_token

+ of the last account in the list, which will be impersonated in the request.

+ If set as a string, the account must grant the originating account

+ the Service Account Token Creator IAM role.

+ If set as a sequence, the identities from the list must grant

+ Service Account Token Creator IAM role to the directly preceding identity, with first

+ account from the list granting this role to the originating account (templated).

+ :type impersonation_chain: Union[str, Sequence[str]]

+ """

+

+ template_fields = [

+ "destination_bucket",

+ "destination_object",

+ "folder_id",

+ "file_name",

+ "impersonation_chain",

+ ]

+

+ @apply_defaults

+ def __init__(

+ self,

+ *,

+ destination_bucket: str,

+ destination_object: str,

+ folder_id: Optional[str] = None,

+ file_name: Optional[str] = None,

+ gcp_conn_id: str = "google_cloud_default",

+ delegate_to: Optional[str] = None,

+ impersonation_chain: Optional[Union[str, Sequence[str]]] = None,

+ **kwargs,

+ ) -> None:

+ if folder_id and not file_name:

+ raise AirflowException("Both folder_id and file_name must be set. Received only folder_id")

Review comment:

I'll try to verify that service accounts can't access shared drives...

##########

File path: airflow/providers/google/cloud/transfers/gdrive_to_gcs.py

##########

@@ -0,0 +1,134 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+from io import BytesIO

+from typing import Optional, Sequence, Union

+

+from airflow.exceptions import AirflowException

+from airflow.models import BaseOperator

+from airflow.providers.google.cloud.hooks.gcs import GCSHook

+from airflow.providers.google.suite.hooks.drive import GoogleDriveHook

+from airflow.utils.decorators import apply_defaults

+

+

+class GoogleDriveToGCSOperator(BaseOperator):

+ """

+ Writes a Google Drive file into Google Cloud Storage.

+

+ .. seealso::

+ For more information on how to use this operator, take a look at the guide:

+ :ref:`howto/operator:GoogleDriveToGCSOperator` FIXME

+

+ :param destination_bucket: The destination Google Cloud Storage bucket where the

+ file should be written. (templated)

+ :type destination_bucket: str

+ :param destination_object: The Google Cloud Storage object name for the object created by the operator.

+ For example: ``path/to/my/file/file.txt``.

+ :type destination_object: str

+ :param folder_id: The folder id of the folder in which the Google Drive file resides

+ :type folder_id: str

+ :param file_name: The name of the file residing in Google Drive

+ :type file_name: str

+ :param gcp_conn_id: The GCP connection ID to use when fetching connection info.

+ :type gcp_conn_id: str

+ :param delegate_to: The account to impersonate using domain-wide delegation of authority,

+ if any. For this to work, the service account making the request must have

+ domain-wide delegation enabled.

+ :type delegate_to: str

+ :param impersonation_chain: Optional service account to impersonate using short-term

+ credentials, or chained list of accounts required to get the access_token

+ of the last account in the list, which will be impersonated in the request.

+ If set as a string, the account must grant the originating account

+ the Service Account Token Creator IAM role.

+ If set as a sequence, the identities from the list must grant

+ Service Account Token Creator IAM role to the directly preceding identity, with first

+ account from the list granting this role to the originating account (templated).

+ :type impersonation_chain: Union[str, Sequence[str]]

+ """

+

+ template_fields = [

+ "destination_bucket",

+ "destination_object",

+ "folder_id",

+ "file_name",

+ "impersonation_chain",

+ ]

+

+ @apply_defaults

+ def __init__(

+ self,

+ *,

+ destination_bucket: str,

+ destination_object: str,

+ folder_id: Optional[str] = None,

+ file_name: Optional[str] = None,

+ gcp_conn_id: str = "google_cloud_default",

+ delegate_to: Optional[str] = None,

+ impersonation_chain: Optional[Union[str, Sequence[str]]] = None,

+ **kwargs,

+ ) -> None:

+ if folder_id and not file_name:

+ raise AirflowException("Both folder_id and file_name must be set. Received only folder_id")

Review comment:

I overlooked the `driveId` param in the files.list method for searching shared Drives. I don't have a Google Workspace account, so I can't test this without access to a shared Drive. Do you know how I can get access to one?

I think this would only slightly change the implementation -- ~`folder_id` would be optional, the files.list `query` param would be dynamic based on the presence or absence of `folder_id`, and~ `drive_id` would be added as an optional init param.

`folder_id` should remain required. Imagine a scenario where a user has an arbitrary number of folders, and each folder contains a file `abc.csv`. If `folder_id` is optional, the file that gets returned would be ambiguous (or at least confusing -- the documentation doesn't make clear how the list of files is ordered in the response to `files.list()`)

##########

File path: airflow/providers/google/cloud/transfers/gdrive_to_gcs.py

##########

@@ -0,0 +1,134 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+from io import BytesIO

+from typing import Optional, Sequence, Union

+

+from airflow.exceptions import AirflowException

+from airflow.models import BaseOperator

+from airflow.providers.google.cloud.hooks.gcs import GCSHook

+from airflow.providers.google.suite.hooks.drive import GoogleDriveHook

+from airflow.utils.decorators import apply_defaults

+

+

+class GoogleDriveToGCSOperator(BaseOperator):

+ """

+ Writes a Google Drive file into Google Cloud Storage.

+

+ .. seealso::

+ For more information on how to use this operator, take a look at the guide:

+ :ref:`howto/operator:GoogleDriveToGCSOperator` FIXME

+

+ :param destination_bucket: The destination Google Cloud Storage bucket where the

+ file should be written. (templated)

+ :type destination_bucket: str

+ :param destination_object: The Google Cloud Storage object name for the object created by the operator.

+ For example: ``path/to/my/file/file.txt``.

+ :type destination_object: str

+ :param folder_id: The ID of the folder in which the Google Drive file resides

+ :type folder_id: str

+ :param file_name: The name of the file residing in Google Drive

+ :type file_name: str

+ :param gcp_conn_id: The GCP connection ID to use when fetching connection info.

+ :type gcp_conn_id: str

+ :param delegate_to: The account to impersonate using domain-wide delegation of authority,

+ if any. For this to work, the service account making the request must have

+ domain-wide delegation enabled.

+ :type delegate_to: str

+ :param impersonation_chain: Optional service account to impersonate using short-term

+ credentials, or chained list of accounts required to get the access_token

+ of the last account in the list, which will be impersonated in the request.

+ If set as a string, the account must grant the originating account

+ the Service Account Token Creator IAM role.

+ If set as a sequence, the identities from the list must grant

+ Service Account Token Creator IAM role to the directly preceding identity, with first

+ account from the list granting this role to the originating account (templated).

+ :type impersonation_chain: Union[str, Sequence[str]]

+ """

+

+ template_fields = [

+ "destination_bucket",

+ "destination_object",

+ "folder_id",

+ "file_name",

+ "impersonation_chain",

+ ]

+

+ @apply_defaults

+ def __init__(

+ self,

+ *,

+ destination_bucket: str,

+ destination_object: str,

+ folder_id: Optional[str] = None,

+ file_name: Optional[str] = None,

+ gcp_conn_id: str = "google_cloud_default",

+ delegate_to: Optional[str] = None,

+ impersonation_chain: Optional[Union[str, Sequence[str]]] = None,

+ **kwargs,

+ ) -> None:

+ if folder_id and not file_name:

+ raise AirflowException("Both folder_id and file_name must be set. Received only folder_id")

Review comment:

I overlooked the `driveId` param in the files.list method for searching shared Drives. ~I don't have a Google Workspace account, so I can't test this without access to a shared Drive. Do you know how I can get access to one?~ Resolved

I think this would only slightly change the implementation -- ~`folder_id` would be optional, the files.list `query` param would be dynamic based on the presence or absence of `folder_id`, and~ `drive_id` would be added as an optional init param.

`folder_id` should remain required. Imagine a scenario where a user has an arbitrary number of folders, and each folder contains a file `abc.csv`. If `folder_id` is optional, the file that gets returned would be ambiguous (or at least confusing -- the documentation doesn't make clear how the list of files is ordered in the response to `files.list()`)
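To make the proposal above concrete, here is a minimal sketch of how the `files.list` arguments could be assembled with a required `folder_id` and an optional `drive_id`. The helper name `build_files_list_kwargs` is hypothetical and is not part of this PR. The `q`, `fields`, `corpora`, `driveId`, `includeItemsFromAllDrives` and `supportsAllDrives` keys are Drive API v3 `files.list` parameters. How the hook would actually pass them is an assumption.

```python
from typing import Any, Dict, Optional


def build_files_list_kwargs(
    folder_id: str, file_name: str, drive_id: Optional[str] = None
) -> Dict[str, Any]:
    """Build keyword arguments for a Drive v3 files.list call (hypothetical helper)."""
    # Escape backslashes and single quotes so the file name cannot break the query string.
    safe_name = file_name.replace("\\", "\\\\").replace("'", "\\'")
    # Always restrict the search to one folder, so a name such as abc.csv
    # that exists in many folders still resolves to a single candidate set.
    query = f"'{folder_id}' in parents and name = '{safe_name}'"
    kwargs: Dict[str, Any] = {"q": query, "fields": "files(id, name)"}
    if drive_id:
        # Searching a shared Drive requires these parameters to be set together.
        kwargs.update(
            corpora="drive",
            driveId=drive_id,
            includeItemsFromAllDrives=True,
            supportsAllDrives=True,
        )
    return kwargs
```

With this shape, the shared-Drive parameters are added only when `drive_id` is passed, and the query itself does not change.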


[GitHub] [airflow] TobKed commented on a change in pull request #13982: Add gdrive_to_gcs operator, drive sensor, additional functionality to…

Posted by GitBox <gi...@apache.org>.

TobKed commented on a change in pull request #13982:

URL: https://github.com/apache/airflow/pull/13982#discussion_r567306694

##########

File path: tests/providers/google/cloud/transfers/test_gdrive_to_gcs.py

##########

@@ -0,0 +1,86 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+

+from unittest import mock

+

+from airflow.providers.google.cloud.transfers.gdrive_to_gcs import GoogleDriveToGCSOperator

+

+FILE_ID = "1NHDk661aHxwpnvfVI2FbiFGVOM89FJTI"

+BUCKET = "destination_bucket"

Review comment:

```suggestion

BUCKET = os.environ.get("GCP_GDRIVE_TO_GCS", "gdrive-to-gcs-bucket")

```

Since example DAGs are run in system tests, they should be written so that some variables (such as the bucket name) can be customized. This will allow other users to run them without modifying the code (the goal is to run system tests automatically some day).
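As a small sketch of the pattern suggested here: the suggested line needs `import os`, which the quoted part of the file does not show. The variable name `GCP_GDRIVE_TO_GCS` and the default value are taken from the suggestion. Whether other constants should follow the same pattern is left open.

```python
import os

# Read the bucket name from the environment so the example can be run
# against a different bucket without editing the code. The fallback is
# used when GCP_GDRIVE_TO_GCS is not set.
BUCKET = os.environ.get("GCP_GDRIVE_TO_GCS", "gdrive-to-gcs-bucket")
```

A user could then run the example with, for instance, `GCP_GDRIVE_TO_GCS=my-bucket` exported in the shell.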


[GitHub] [airflow] TobKed commented on a change in pull request #13982: Add gdrive_to_gcs operator, drive sensor, additional functionality to…

Posted by GitBox <gi...@apache.org>.

TobKed commented on a change in pull request #13982:

URL: https://github.com/apache/airflow/pull/13982#discussion_r567306848

##########

File path: tests/providers/google/cloud/transfers/test_gdrive_to_gcs.py

##########

@@ -0,0 +1,86 @@

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#