Posted to common-issues@hadoop.apache.org by GitBox <gi...@apache.org> on 2022/01/05 10:22:54 UTC

[GitHub] [hadoop] jianghuazhu opened a new pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

jianghuazhu opened a new pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861

### Description of PR

When blk_xxxx and blk_xxxx.meta are not regular files, they can adversely affect the cluster, for example by causing errors in space calculation and possible failures when reading data.

A block of this type should not be treated as a normal block file.

Details:

HDFS-16316
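
The check described above can be sketched with the JDK alone. This is a minimal illustration, not the patch itself: the class `RegularFileCheck` and its methods are hypothetical names, and it assumes that "regular" means a plain file that is reached without following symbolic links.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.LinkOption;
import java.nio.file.Path;

// Hypothetical helper, not part of Hadoop: illustrates the kind of check the PR describes.
class RegularFileCheck {

  // True only if the path exists and is a plain file; symbolic links are not followed.
  static boolean isRegular(Path p) {
    return p != null && Files.isRegularFile(p, LinkOption.NOFOLLOW_LINKS);
  }

  // Assumption: a replica is acceptable only when both the block file and its meta file are regular.
  static boolean replicaIsRegular(Path blockFile, Path metaFile) {
    return isRegular(blockFile) && isRegular(metaFile);
  }

  // Builds one good replica and one whose "block file" is actually a directory.
  static boolean[] demo() {
    try {
      Path dir = Files.createTempDirectory("replica-demo");
      Path goodBlock = Files.createFile(dir.resolve("blk_1"));
      Path goodMeta = Files.createFile(dir.resolve("blk_1.meta"));
      Path badBlock = Files.createDirectory(dir.resolve("blk_2"));
      Path badMeta = Files.createFile(dir.resolve("blk_2.meta"));
      return new boolean[] {
          replicaIsRegular(goodBlock, goodMeta),
          replicaIsRegular(badBlock, badMeta)
      };
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
  }

  public static void main(String[] args) {
    boolean[] result = demo();
    System.out.println("good replica is regular: " + result[0]);
    System.out.println("bad replica is regular:  " + result[1]);
  }
}
```

In this sketch a directory named like a block file is rejected, while the meta file next to it would pass on its own. Requiring both files to pass is an assumption that mirrors the "Block and meta must be regular file" comment in the diff.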

### How was this patch tested?

The tests need to verify whether a file is actually a regular file.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: common-issues-unsubscribe@hadoop.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: common-issues-unsubscribe@hadoop.apache.org

For additional commands, e-mail: common-issues-help@hadoop.apache.org

[GitHub] [hadoop] ferhui commented on a change in pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

ferhui commented on a change in pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#discussion_r809627226

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/datanode/DirectoryScanner.java

##########

@@ -540,21 +541,30 @@ private void scan() {

m++;

continue;

}

- // Block file and/or metadata file exists on the disk

- // Block exists in memory

- if (info.getBlockFile() == null) {

- // Block metadata file exits and block file is missing

- addDifference(diffRecord, statsRecord, info);

- } else if (info.getGenStamp() != memBlock.getGenerationStamp()

- || info.getBlockLength() != memBlock.getNumBytes()) {

- // Block metadata file is missing or has wrong generation stamp,

- // or block file length is different than expected

+

+ // Block and meta must be regular file

+ boolean isRegular = FileUtil.isRegularFile(info.getBlockFile(), false) &&

Review comment:

Thanks for your contribution. I have one question here.

As far as I know, many companies use fast copy, which means that some block files are links.

Does it affect them?
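
Whether links are affected depends on the kind of link. The thread does not say which kind fast copy creates, nor what the `false` argument to `FileUtil.isRegularFile` means, so the following is only a JDK-level sketch on a POSIX filesystem. The class name `LinkKinds` is hypothetical. It shows that a hard link is itself a regular file, while a symbolic link counts as regular only when the check follows links.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.LinkOption;
import java.nio.file.Path;

// Hypothetical demo class, not Hadoop code.
class LinkKinds {

  // Returns {hard link without following, symlink without following, symlink with following}.
  static boolean[] demo() {
    try {
      Path dir = Files.createTempDirectory("link-demo");
      Path original = Files.write(dir.resolve("blk_1"), new byte[] {1, 2, 3});

      // A hard link is a second directory entry for the same file data.
      Path hard = Files.createLink(dir.resolve("blk_1_hard"), original);
      // A symbolic link is a separate file that stores a path to the target.
      Path soft = Files.createSymbolicLink(dir.resolve("blk_1_soft"), original);

      return new boolean[] {
          Files.isRegularFile(hard, LinkOption.NOFOLLOW_LINKS),
          Files.isRegularFile(soft, LinkOption.NOFOLLOW_LINKS),
          Files.isRegularFile(soft)
      };
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }
  }

  public static void main(String[] args) {
    boolean[] r = demo();
    System.out.println("hard link, no follow: " + r[0]);
    System.out.println("symlink, no follow:   " + r[1]);
    System.out.println("symlink, follow:      " + r[2]);
  }
}
```

Under these assumptions, a deployment that relies on hard links would pass a no-follow check, whereas one that relies on symbolic links would not.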

[GitHub] [hadoop] tomscut commented on a change in pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

tomscut commented on a change in pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#discussion_r808658893

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/datanode/fsdataset/impl/FsDatasetImpl.java

##########

@@ -2812,6 +2816,9 @@ public void checkAndUpdate(String bpid, ScanInfo scanInfo)

+ memBlockInfo.getNumBytes() + " to "

+ memBlockInfo.getBlockDataLength());

memBlockInfo.setNumBytes(memBlockInfo.getBlockDataLength());

+ } else if (!isRegular) {

+ corruptBlock = new Block(memBlockInfo);

+ LOG.warn("Block:{} is not a regular file.", corruptBlock.getBlockId());

Review comment:

Maybe we should print the absolute path so that we can deal with these abnormal files?
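
One way the suggestion could look is sketched below. It is only an illustration: `NonRegularBlockMessage` and `format` are hypothetical names, and the sketch builds a plain string instead of calling the logger used in `FsDatasetImpl`.

```java
import java.io.File;

// Hypothetical formatter, not Hadoop code: adds the on-disk path to the warning text.
class NonRegularBlockMessage {

  static String format(long blockId, File blockFile) {
    return String.format("Block:%d is not a regular file. Path: %s",
        blockId, blockFile.getAbsolutePath());
  }

  public static void main(String[] args) {
    System.out.println(format(1073741828L, new File("blk_1073741828")));
  }
}
```

With the path in the message, an operator can locate the abnormal file directly from the DataNode log.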

[GitHub] [hadoop] ferhui merged pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

ferhui merged pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861

[GitHub] [hadoop] hadoop-yetus commented on pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

hadoop-yetus commented on pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#issuecomment-1039728159

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 36s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 1s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 2 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 12m 49s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 23m 3s | | trunk passed |
| +1 :green_heart: | compile | 24m 37s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | compile | 21m 51s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 4m 3s | | trunk passed |
| +1 :green_heart: | mvnsite | 3m 18s | | trunk passed |
| +1 :green_heart: | javadoc | 2m 24s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 3m 36s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 6m 12s | | trunk passed |
| +1 :green_heart: | shadedclient | 23m 10s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 27s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 16s | | the patch passed |
| +1 :green_heart: | compile | 24m 18s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javac | 24m 18s | | the patch passed |
| +1 :green_heart: | compile | 22m 1s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 22m 1s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 3m 52s | | the patch passed |
| +1 :green_heart: | mvnsite | 3m 18s | | the patch passed |
| +1 :green_heart: | javadoc | 2m 26s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 3m 34s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 6m 40s | | the patch passed |
| +1 :green_heart: | shadedclient | 23m 20s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 18m 3s | | hadoop-common in the patch passed. |
| -1 :x: | unit | 243m 20s | [/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/4/artifact/out/patch-unit-hadoop-hdfs-project_hadoop-hdfs.txt) | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 1m 7s | | The patch does not generate ASF License warnings. |
| | | 479m 3s | | |

| Reason | Tests |
|-------:|:------|
| Failed junit tests | hadoop.hdfs.TestRollingUpgrade |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/4/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3861 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux 63415c0a5ad9 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / adede8620292339919f23c457164b573e0c73aee |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/4/testReport/ |
| Max. process+thread count | 3587 (vs. ulimit of 5500) |
| modules | C: hadoop-common-project/hadoop-common hadoop-hdfs-project/hadoop-hdfs U: . |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/4/console |
| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.

[GitHub] [hadoop] ferhui commented on a change in pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

ferhui commented on a change in pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#discussion_r811525503

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/datanode/DirectoryScanner.java

##########

@@ -540,21 +541,30 @@ private void scan() {

m++;

continue;

}

- // Block file and/or metadata file exists on the disk

- // Block exists in memory

- if (info.getBlockFile() == null) {

- // Block metadata file exits and block file is missing

- addDifference(diffRecord, statsRecord, info);

- } else if (info.getGenStamp() != memBlock.getGenerationStamp()

- || info.getBlockLength() != memBlock.getNumBytes()) {

- // Block metadata file is missing or has wrong generation stamp,

- // or block file length is different than expected

+

+ // Block and meta must be regular file

+ boolean isRegular = FileUtil.isRegularFile(info.getBlockFile(), false) &&

Review comment:

Thanks for your explanation.

[GitHub] [hadoop] tomscut commented on a change in pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

tomscut commented on a change in pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#discussion_r808721730

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/datanode/fsdataset/impl/FsDatasetImpl.java

##########

@@ -2812,6 +2816,9 @@ public void checkAndUpdate(String bpid, ScanInfo scanInfo)

+ memBlockInfo.getNumBytes() + " to "

+ memBlockInfo.getBlockDataLength());

memBlockInfo.setNumBytes(memBlockInfo.getBlockDataLength());

+ } else if (!isRegular) {

+ corruptBlock = new Block(memBlockInfo);

+ LOG.warn("Block:{} is not a regular file.", corruptBlock.getBlockId());

Review comment:

This is good.

[GitHub] [hadoop] jianghuazhu commented on a change in pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

jianghuazhu commented on a change in pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#discussion_r808719728

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/datanode/fsdataset/impl/FsDatasetImpl.java

##########

@@ -2812,6 +2816,9 @@ public void checkAndUpdate(String bpid, ScanInfo scanInfo)

+ memBlockInfo.getNumBytes() + " to "

+ memBlockInfo.getBlockDataLength());

memBlockInfo.setNumBytes(memBlockInfo.getBlockDataLength());

+ } else if (!isRegular) {

+ corruptBlock = new Block(memBlockInfo);

+ LOG.warn("Block:{} is not a regular file.", corruptBlock.getBlockId());

Review comment:

Yes, these abnormal files are cleaned up.

When the NameNode learns of such abnormal files, it treats them as invalid blocks.

When the DataNode sends a heartbeat to the NameNode, the NameNode notifies the DataNode to clean up.

The specific cleaning action is performed by FsDatasetAsyncDiskServic
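
The sequence described in this comment can be sketched as a toy model. Everything below is hypothetical: `ToyNameNode` and `ToyDataNode` are not Hadoop classes, the method names are invented for illustration, and deletion happens synchronously here, whereas the comment says the real cleanup is carried out by a separate service.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Toy model of the report -> heartbeat -> delete sequence; not Hadoop code.
class CleanupFlowSketch {

  // Stand-in for the NameNode: remembers replicas that were reported as bad.
  static class ToyNameNode {
    private final Set<Long> pendingInvalidation = new LinkedHashSet<>();

    void reportBadBlock(long blockId) {
      pendingInvalidation.add(blockId);
    }

    // On heartbeat, hand back the blocks the DataNode should delete.
    List<Long> heartbeat() {
      List<Long> commands = new ArrayList<>(pendingInvalidation);
      pendingInvalidation.clear();
      return commands;
    }
  }

  // Stand-in for the DataNode: tracks which replicas it currently stores.
  static class ToyDataNode {
    private final Set<Long> replicas = new HashSet<>();
    private final ToyNameNode nameNode;

    ToyDataNode(ToyNameNode nameNode) {
      this.nameNode = nameNode;
    }

    void addReplica(long blockId) {
      replicas.add(blockId);
    }

    // The scanner found a non-regular replica: report it, but do not delete it yet.
    void scannerFoundNonRegular(long blockId) {
      nameNode.reportBadBlock(blockId);
    }

    // Heartbeat: receive delete commands and apply them immediately in this toy model.
    void sendHeartbeat() {
      for (long blockId : nameNode.heartbeat()) {
        replicas.remove(blockId);
      }
    }

    boolean hasReplica(long blockId) {
      return replicas.contains(blockId);
    }
  }

  // Returns {replica present before heartbeat, replica present after heartbeat}.
  static boolean[] demo() {
    ToyNameNode nn = new ToyNameNode();
    ToyDataNode dn = new ToyDataNode(nn);
    dn.addReplica(1073741828L);
    dn.scannerFoundNonRegular(1073741828L);
    boolean beforeHeartbeat = dn.hasReplica(1073741828L);
    dn.sendHeartbeat();
    boolean afterHeartbeat = dn.hasReplica(1073741828L);
    return new boolean[] {beforeHeartbeat, afterHeartbeat};
  }

  public static void main(String[] args) {
    boolean[] r = demo();
    System.out.println("replica present before heartbeat: " + r[0]);
    System.out.println("replica present after heartbeat:  " + r[1]);
  }
}
```

The point of the model is the ordering: the replica stays on disk after it is reported and disappears only once the delete command comes back on a later heartbeat.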

[GitHub] [hadoop] jianghuazhu commented on a change in pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

jianghuazhu commented on a change in pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#discussion_r802247836

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/datanode/fsdataset/impl/FsDatasetImpl.java

##########

@@ -2812,6 +2816,10 @@ public void checkAndUpdate(String bpid, ScanInfo scanInfo)

+ memBlockInfo.getNumBytes() + " to "

+ memBlockInfo.getBlockDataLength());

memBlockInfo.setNumBytes(memBlockInfo.getBlockDataLength());

+ } else if (!isRegular) {

Review comment:

Thanks for your comment and review, @jojochuang.

I'll add some unit tests later.

The checkAndUpdate() method is indeed a bit too long.

I'll refactor it later if I can, but will handle that in a new Jira.

[GitHub] [hadoop] hadoop-yetus commented on pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

hadoop-yetus commented on pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#issuecomment-1040835489

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |
|:----:|----------:|--------:|:--------:|:-------:|
| +0 :ok: | reexec | 0m 43s | | Docker mode activated. |
|||| _ Prechecks _ |
| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |
| +0 :ok: | codespell | 0m 0s | | codespell was not available. |
| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |
| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 2 new or modified test files. |
|||| _ trunk Compile Tests _ |
| +0 :ok: | mvndep | 12m 48s | | Maven dependency ordering for branch |
| +1 :green_heart: | mvninstall | 25m 22s | | trunk passed |
| +1 :green_heart: | compile | 26m 32s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | compile | 23m 0s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | checkstyle | 4m 8s | | trunk passed |
| +1 :green_heart: | mvnsite | 3m 21s | | trunk passed |
| +1 :green_heart: | javadoc | 2m 30s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 3m 36s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 5m 57s | | trunk passed |
| +1 :green_heart: | shadedclient | 24m 8s | | branch has no errors when building and testing our client artifacts. |
|||| _ Patch Compile Tests _ |
| +0 :ok: | mvndep | 0m 29s | | Maven dependency ordering for patch |
| +1 :green_heart: | mvninstall | 2m 23s | | the patch passed |
| +1 :green_heart: | compile | 23m 11s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javac | 23m 11s | | the patch passed |
| +1 :green_heart: | compile | 21m 53s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | javac | 21m 53s | | the patch passed |
| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |
| +1 :green_heart: | checkstyle | 3m 33s | | the patch passed |
| +1 :green_heart: | mvnsite | 3m 22s | | the patch passed |
| +1 :green_heart: | javadoc | 2m 18s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |
| +1 :green_heart: | javadoc | 3m 31s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| +1 :green_heart: | spotbugs | 6m 34s | | the patch passed |
| +1 :green_heart: | shadedclient | 24m 1s | | patch has no errors when building and testing our client artifacts. |
|||| _ Other Tests _ |
| +1 :green_heart: | unit | 17m 55s | | hadoop-common in the patch passed. |
| +1 :green_heart: | unit | 230m 38s | | hadoop-hdfs in the patch passed. |
| +1 :green_heart: | asflicense | 1m 7s | | The patch does not generate ASF License warnings. |
| | | 471m 50s | | |

| Subsystem | Report/Notes |
|----------:|:-------------|
| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/7/artifact/out/Dockerfile |
| GITHUB PR | https://github.com/apache/hadoop/pull/3861 |
| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |
| uname | Linux dbbf79d9ac98 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |
| Build tool | maven |
| Personality | dev-support/bin/hadoop.sh |
| git revision | trunk / f5e27e408d9aa8f1e563d139d35a001375e19f7f |
| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |
| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/7/testReport/ |
| Max. process+thread count | 3544 (vs. ulimit of 5500) |
| modules | C: hadoop-common-project/hadoop-common hadoop-hdfs-project/hadoop-hdfs U: . |
| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/7/console |
| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |
| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.

[GitHub] [hadoop] tomscut commented on a change in pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

tomscut commented on a change in pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#discussion_r808711821

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/datanode/fsdataset/impl/FsDatasetImpl.java

##########

@@ -2812,6 +2816,9 @@ public void checkAndUpdate(String bpid, ScanInfo scanInfo)

+ memBlockInfo.getNumBytes() + " to "

+ memBlockInfo.getBlockDataLength());

memBlockInfo.setNumBytes(memBlockInfo.getBlockDataLength());

+ } else if (!isRegular) {

+ corruptBlock = new Block(memBlockInfo);

+ LOG.warn("Block:{} is not a regular file.", corruptBlock.getBlockId());

Review comment:

Thank you for explaining this. It looks like a mount-related operation has been performed. Did HDFS automatically clean up the abnormal file on the online cluster you mentioned?

[GitHub] [hadoop] jianghuazhu commented on a change in pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

jianghuazhu commented on a change in pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#discussion_r808697481

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/datanode/fsdataset/impl/FsDatasetImpl.java

##########

@@ -2812,6 +2816,9 @@ public void checkAndUpdate(String bpid, ScanInfo scanInfo)

+ memBlockInfo.getNumBytes() + " to "

+ memBlockInfo.getBlockDataLength());

memBlockInfo.setNumBytes(memBlockInfo.getBlockDataLength());

+ } else if (!isRegular) {

+ corruptBlock = new Block(memBlockInfo);

+ LOG.warn("Block:{} is not a regular file.", corruptBlock.getBlockId());

Review comment:

Thanks @tomscut for the comment and review.

This happens occasionally. I've been monitoring it for a long time and still haven't found the root cause.

But I think this situation may be related to the Linux environment. When the normal data flow is working, no exception occurs. (I will continue to monitor this situation.)

The more rigorous checks added here are meant to keep things from getting worse on the cluster, which is good for clusters.

When the file is actually cleaned up, the specific path is printed. Here is an example from an online cluster:

`2022-02-15 11:24:12,856 INFO org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetAsyncDiskService: Deleted BP-xxxx blk_xxxx file /mnt/dfs/11/data/current/BP-xxxx.xxxx.xxxx/current/finalized/subdir0/subdir0/blk_xxxx`

[GitHub] [hadoop] jianghuazhu removed a comment on pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

jianghuazhu removed a comment on pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#issuecomment-1039826045

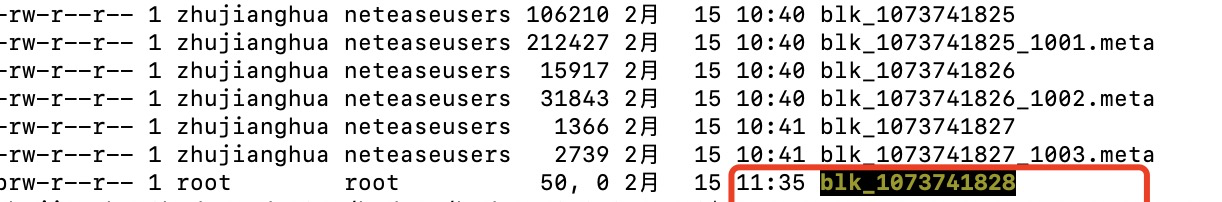

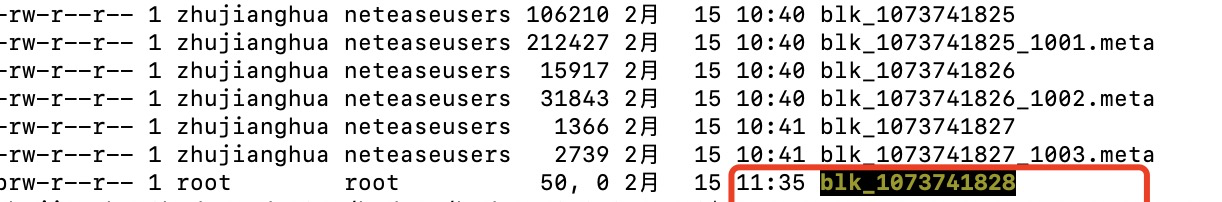

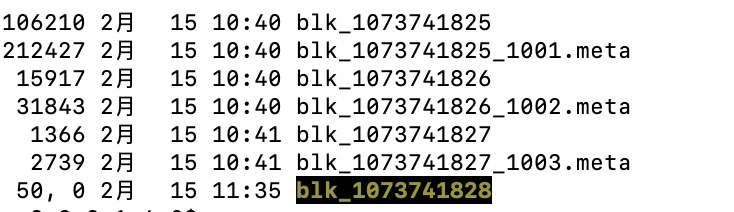

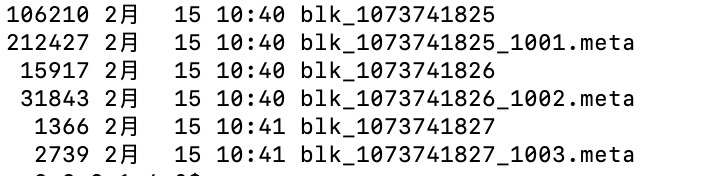

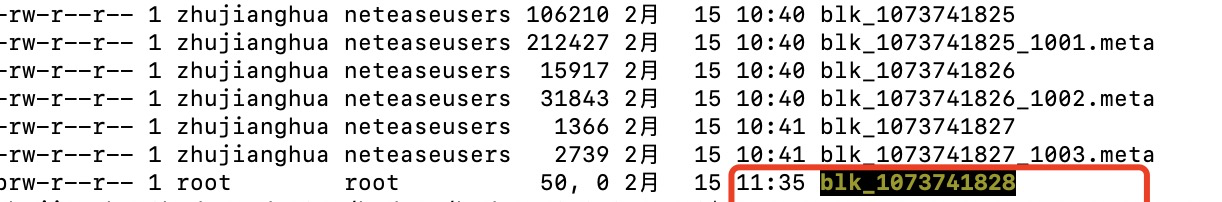

Here are some examples from online clusters.

We construct a block device file such as:

This file is non-standard.

This kind of file is found when DirectoryScanner is working.

log:

`2022-02-15 11:24:10,286 WARN org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: Block:1073741828 is not a regular file.`

`2022-02-15 11:24:10,286 WARN org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: Reporting the block blk_1073741828_0 as corrupt due to length mismatch`

The DataNode then tells the NameNode, through NameNodeRpcServer#reportBadBlocks(), that there are some unqualified blocks. After the NameNode gets the data, it processes it further.

After a period of time, the DataNode automatically cleans up the unqualified replica data.

Can you help review this PR again, @jojochuang?

Thank you so much.

[GitHub] [hadoop] jianghuazhu commented on pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

jianghuazhu commented on pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#issuecomment-1041040826

Thanks for the suggestion, @jojochuang .

I updated the unit tests again and also ran some tests.

When I remove the fix, the newly added unit test does not succeed, which is expected and does not affect the execution of other unit tests.

Here is an example of the test when removing the fix:

Here is an example during normal testing:

[GitHub] [hadoop] jojochuang commented on a change in pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

jojochuang commented on a change in pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#discussion_r802235094

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/datanode/fsdataset/impl/FsDatasetImpl.java

##########

@@ -2812,6 +2816,10 @@ public void checkAndUpdate(String bpid, ScanInfo scanInfo)

+ memBlockInfo.getNumBytes() + " to "

+ memBlockInfo.getBlockDataLength());

memBlockInfo.setNumBytes(memBlockInfo.getBlockDataLength());

+ } else if (!isRegular) {

Review comment:

Unrelated: checkAndUpdate() is way too long. We should refactor it in the future.

[GitHub] [hadoop] tomscut commented on a change in pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

tomscut commented on a change in pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#discussion_r808711821

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/datanode/fsdataset/impl/FsDatasetImpl.java

##########

@@ -2812,6 +2816,9 @@ public void checkAndUpdate(String bpid, ScanInfo scanInfo)

+ memBlockInfo.getNumBytes() + " to "

+ memBlockInfo.getBlockDataLength());

memBlockInfo.setNumBytes(memBlockInfo.getBlockDataLength());

+ } else if (!isRegular) {

+ corruptBlock = new Block(memBlockInfo);

+ LOG.warn("Block:{} is not a regular file.", corruptBlock.getBlockId());

Review comment:

Thank you for explaining this. It looks like a mount-related operation was performed. After this change, did HDFS successfully clean up the abnormal file on the online cluster you mentioned?

[GitHub] [hadoop] hadoop-yetus commented on pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

hadoop-yetus commented on pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#issuecomment-1006518967

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|:----:|----------:|--------:|:--------:|:-------:|

| +0 :ok: | reexec | 0m 42s | | Docker mode activated. |

|||| _ Prechecks _ |

| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |

| +0 :ok: | codespell | 0m 1s | | codespell was not available. |

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |

|||| _ trunk Compile Tests _ |

| +0 :ok: | mvndep | 12m 54s | | Maven dependency ordering for branch |

| +1 :green_heart: | mvninstall | 21m 49s | | trunk passed |

| +1 :green_heart: | compile | 22m 19s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | compile | 19m 31s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | checkstyle | 3m 43s | | trunk passed |

| +1 :green_heart: | mvnsite | 3m 24s | | trunk passed |

| +1 :green_heart: | javadoc | 2m 29s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | javadoc | 3m 34s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | spotbugs | 5m 53s | | trunk passed |

| +1 :green_heart: | shadedclient | 23m 4s | | branch has no errors when building and testing our client artifacts. |

|||| _ Patch Compile Tests _ |

| +0 :ok: | mvndep | 0m 29s | | Maven dependency ordering for patch |

| +1 :green_heart: | mvninstall | 2m 18s | | the patch passed |

| +1 :green_heart: | compile | 21m 39s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | javac | 21m 39s | | the patch passed |

| +1 :green_heart: | compile | 19m 45s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | javac | 19m 45s | | the patch passed |

| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |

| +1 :green_heart: | checkstyle | 3m 37s | | the patch passed |

| +1 :green_heart: | mvnsite | 3m 22s | | the patch passed |

| +1 :green_heart: | javadoc | 2m 26s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | javadoc | 3m 30s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | spotbugs | 6m 18s | | the patch passed |

| +1 :green_heart: | shadedclient | 23m 15s | | patch has no errors when building and testing our client artifacts. |

|||| _ Other Tests _ |

| -1 :x: | unit | 17m 53s | [/patch-unit-hadoop-common-project_hadoop-common.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/3/artifact/out/patch-unit-hadoop-common-project_hadoop-common.txt) | hadoop-common in the patch passed. |

| +1 :green_heart: | unit | 240m 24s | | hadoop-hdfs in the patch passed. |

| +1 :green_heart: | asflicense | 1m 8s | | The patch does not generate ASF License warnings. |

| | | 464m 47s | | |

| Reason | Tests |

|-------:|:------|

| Failed junit tests | hadoop.ipc.TestIPC |

| Subsystem | Report/Notes |

|----------:|:-------------|

| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/3/artifact/out/Dockerfile |

| GITHUB PR | https://github.com/apache/hadoop/pull/3861 |

| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |

| uname | Linux 30c0ae66e875 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |

| Build tool | maven |

| Personality | dev-support/bin/hadoop.sh |

| git revision | trunk / 292926186345309ba9a6c26396556b4c2aaf48f8 |

| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/3/testReport/ |

| Max. process+thread count | 3158 (vs. ulimit of 5500) |

| modules | C: hadoop-common-project/hadoop-common hadoop-hdfs-project/hadoop-hdfs U: . |

| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/3/console |

| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |

| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.

[GitHub] [hadoop] jianghuazhu commented on a change in pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

jianghuazhu commented on a change in pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#discussion_r808719728

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/datanode/fsdataset/impl/FsDatasetImpl.java

##########

@@ -2812,6 +2816,9 @@ public void checkAndUpdate(String bpid, ScanInfo scanInfo)

+ memBlockInfo.getNumBytes() + " to "

+ memBlockInfo.getBlockDataLength());

memBlockInfo.setNumBytes(memBlockInfo.getBlockDataLength());

+ } else if (!isRegular) {

+ corruptBlock = new Block(memBlockInfo);

+ LOG.warn("Block:{} is not a regular file.", corruptBlock.getBlockId());

Review comment:

Yes, the abnormal files are cleaned up.

When the NameNode learns of such abnormal files, it treats them as invalid blocks.

When the DataNode next sends a heartbeat to the NameNode, the NameNode tells the DataNode to clean them up.

The actual cleanup is performed by FsDatasetAsyncDiskService.
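
As a rough illustration of the flow described above (bad-block report, invalidation on the next heartbeat, deletion on the DataNode), here is a toy Java model. All class and method names are hypothetical and are not Hadoop APIs; the real deletion is done asynchronously by FsDatasetAsyncDiskService, which this sketch does not model.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Queue;
import java.util.Set;

/** Toy model only; hypothetical names, not the Hadoop NameNode. */
final class ToyNameNode {
  private final Queue<Long> pendingInvalidation = new ArrayDeque<>();

  /** A DataNode reports a bad replica; queue it for invalidation. */
  void reportBadBlock(long blockId) {
    pendingInvalidation.add(blockId);
  }

  /** Heartbeat response: the block IDs the DataNode should delete. */
  List<Long> heartbeat() {
    List<Long> commands = new ArrayList<>(pendingInvalidation);
    pendingInvalidation.clear();
    return commands;
  }
}

/** Toy model only; hypothetical names, not the Hadoop DataNode. */
final class ToyDataNode {
  private final Set<Long> replicas = new HashSet<>();

  void addReplica(long blockId) {
    replicas.add(blockId);
  }

  boolean hasReplica(long blockId) {
    return replicas.contains(blockId);
  }

  /** Sends a heartbeat and deletes whatever the NameNode asks for. */
  void heartbeat(ToyNameNode nn) {
    for (long id : nn.heartbeat()) {
      // In this toy model the deletion is immediate and synchronous.
      replicas.remove(id);
    }
  }
}
```

In this model, a replica reported through `reportBadBlock` disappears from the DataNode on its next `heartbeat`, while unreported replicas are left alone.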

[GitHub] [hadoop] ferhui commented on pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

ferhui commented on pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#issuecomment-1047361290

@jianghuazhu Thanks for your contribution. @jojochuang @tomscut Thanks for your reviews! Merged.

[GitHub] [hadoop] jojochuang commented on a change in pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

jojochuang commented on a change in pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#discussion_r806448723

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/server/datanode/TestDirectoryScanner.java

##########

@@ -507,6 +509,71 @@ public void testDeleteBlockOnTransientStorage() throws Exception {

}

}

+ @Test(timeout = 600000)

+ public void testRegularBlock() throws Exception {

+ // add a logger stream to check what has printed to log

+ ByteArrayOutputStream loggerStream = new ByteArrayOutputStream();

Review comment:

Can you use the Hadoop utility class LogCapturer https://github.com/apache/hadoop/blob/6342d5e523941622a140fd877f06e9b59f48c48b/hadoop-common-project/hadoop-common/src/test/java/org/apache/hadoop/test/GenericTestUtils.java#L533 for this purpose?
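
For context, the idea behind a log capturer in a test is to attach a collector to the logger, run the code under test, and assert on the collected text instead of redirecting a raw stream by hand. The sketch below illustrates that idea using only java.util.logging. `CapturingHandler` and `LogCaptureDemo` are hypothetical names, and this is not the Hadoop `LogCapturer` API, whose exact methods should be checked at the link above.

```java
import java.util.logging.Handler;
import java.util.logging.LogRecord;
import java.util.logging.Logger;

/** Hypothetical stand-in for a log capturer; not the Hadoop LogCapturer. */
final class CapturingHandler extends Handler {
  private final StringBuilder out = new StringBuilder();

  @Override
  public synchronized void publish(LogRecord record) {
    // Store "LEVEL message" per record so tests can search the text.
    out.append(record.getLevel()).append(' ')
        .append(record.getMessage()).append('\n');
  }

  @Override
  public void flush() {
    // Nothing is buffered outside the StringBuilder.
  }

  @Override
  public void close() {
    // No resources to release.
  }

  synchronized String getOutput() {
    return out.toString();
  }

  /** Attaches a new capturing handler to the logger and returns it. */
  static CapturingHandler capture(Logger logger) {
    CapturingHandler handler = new CapturingHandler();
    logger.addHandler(handler);
    return handler;
  }
}

final class LogCaptureDemo {
  public static void main(String[] args) {
    Logger log = Logger.getLogger("demo.scanner");
    log.setUseParentHandlers(false); // keep the demo output quiet
    CapturingHandler captured = CapturingHandler.capture(log);

    log.warning("Block:1073741828 is not a regular file.");

    System.out.println(captured.getOutput().contains("is not a regular file"));
    log.removeHandler(captured);
  }
}
```

A test built this way asserts on `getOutput()` and detaches the handler afterwards, so later tests do not see stale log text.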

[GitHub] [hadoop] jianghuazhu commented on a change in pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

jianghuazhu commented on a change in pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#discussion_r806465949

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/test/java/org/apache/hadoop/hdfs/server/datanode/TestDirectoryScanner.java

##########

@@ -507,6 +509,71 @@ public void testDeleteBlockOnTransientStorage() throws Exception {

}

}

+ @Test(timeout = 600000)

+ public void testRegularBlock() throws Exception {

+ // add a logger stream to check what has printed to log

+ ByteArrayOutputStream loggerStream = new ByteArrayOutputStream();

Review comment:

Yes, it was my mistake.

[GitHub] [hadoop] jojochuang commented on a change in pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

jojochuang commented on a change in pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#discussion_r802255124

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/datanode/fsdataset/impl/FsDatasetImpl.java

##########

@@ -2812,6 +2816,10 @@ public void checkAndUpdate(String bpid, ScanInfo scanInfo)

+ memBlockInfo.getNumBytes() + " to "

+ memBlockInfo.getBlockDataLength());

memBlockInfo.setNumBytes(memBlockInfo.getBlockDataLength());

+ } else if (!isRegular) {

Review comment:

Absolutely. Let's not worry about the refactor now. Thanks.

[GitHub] [hadoop] jianghuazhu commented on pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

jianghuazhu commented on pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#issuecomment-1039827275

Here is an example from an online cluster.

We constructed a block device file, such as:

This is not a regular file.

DirectoryScanner finds this kind of file when it runs.

log:

`2022-02-15 11:24:10,286 WARN org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: Block:1073741828 is not a regular file.`

`2022-02-15 11:24:10,286 WARN org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl: Reporting the block blk_1073741828_0 as corrupt due to length mismatch`

The DataNode then tells the NameNode about these invalid blocks through NameNodeRpcServer#reportBadBlocks(). Once the NameNode receives the report, it processes it further.

After a period of time, the DataNode automatically cleans up these invalid replicas.

Could you review this PR again, @jojochuang?

Thank you so much.
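
To make the check concrete, here is a minimal sketch in plain Java of telling a regular file apart from anything else on disk. It assumes `java.nio.file.Files.isRegularFile` with `NOFOLLOW_LINKS`; `RegularFileCheck` is a hypothetical helper, and how the patch itself computes `isRegular` is not shown in this thread. A directory stands in for the block device file here, because creating a device node requires root privileges.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.LinkOption;
import java.nio.file.Path;

/** Hypothetical helper, not part of Hadoop. */
final class RegularFileCheck {

  /** True only if the path exists and is a regular file; symlinks are not followed. */
  static boolean isRegular(Path path) {
    return Files.isRegularFile(path, LinkOption.NOFOLLOW_LINKS);
  }

  public static void main(String[] args) throws IOException {
    Path dir = Files.createTempDirectory("blocks");
    // A normal block file.
    Path blockFile = Files.createFile(dir.resolve("blk_1073741828"));
    // A directory with a block-like name, standing in for a device file.
    Path notAFile = Files.createDirectory(dir.resolve("blk_1073741829"));

    System.out.println(blockFile.getFileName() + " regular? " + isRegular(blockFile));
    System.out.println(notAFile.getFileName() + " regular? " + isRegular(notAFile));
  }
}
```

A scanner that applies a check like this can flag the second path as suspect instead of treating it as a normal block file.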

[GitHub] [hadoop] hadoop-yetus commented on pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

hadoop-yetus commented on pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#issuecomment-1040305852

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|:----:|----------:|--------:|:--------:|:-------:|

| +0 :ok: | reexec | 0m 45s | | Docker mode activated. |

|||| _ Prechecks _ |

| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |

| +0 :ok: | codespell | 0m 0s | | codespell was not available. |

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 2 new or modified test files. |

|||| _ trunk Compile Tests _ |

| +0 :ok: | mvndep | 12m 36s | | Maven dependency ordering for branch |

| +1 :green_heart: | mvninstall | 22m 44s | | trunk passed |

| +1 :green_heart: | compile | 24m 35s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | compile | 21m 11s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |

| +1 :green_heart: | checkstyle | 3m 45s | | trunk passed |

| +1 :green_heart: | mvnsite | 3m 13s | | trunk passed |

| +1 :green_heart: | javadoc | 2m 24s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javadoc | 3m 12s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |

| +1 :green_heart: | spotbugs | 6m 0s | | trunk passed |

| +1 :green_heart: | shadedclient | 23m 31s | | branch has no errors when building and testing our client artifacts. |

|||| _ Patch Compile Tests _ |

| +0 :ok: | mvndep | 0m 24s | | Maven dependency ordering for patch |

| +1 :green_heart: | mvninstall | 2m 25s | | the patch passed |

| +1 :green_heart: | compile | 24m 48s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javac | 24m 48s | | the patch passed |

| +1 :green_heart: | compile | 22m 16s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |

| +1 :green_heart: | javac | 22m 16s | | the patch passed |

| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |

| +1 :green_heart: | checkstyle | 3m 51s | | the patch passed |

| +1 :green_heart: | mvnsite | 3m 17s | | the patch passed |

| +1 :green_heart: | javadoc | 2m 21s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javadoc | 3m 28s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |

| +1 :green_heart: | spotbugs | 6m 36s | | the patch passed |

| +1 :green_heart: | shadedclient | 24m 22s | | patch has no errors when building and testing our client artifacts. |

|||| _ Other Tests _ |

| +1 :green_heart: | unit | 17m 45s | | hadoop-common in the patch passed. |

| +1 :green_heart: | unit | 238m 14s | | hadoop-hdfs in the patch passed. |

| +1 :green_heart: | asflicense | 1m 2s | | The patch does not generate ASF License warnings. |

| | | 473m 27s | | |

| Subsystem | Report/Notes |

|----------:|:-------------|

| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/6/artifact/out/Dockerfile |

| GITHUB PR | https://github.com/apache/hadoop/pull/3861 |

| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |

| uname | Linux 94871cc29013 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |

| Build tool | maven |

| Personality | dev-support/bin/hadoop.sh |

| git revision | trunk / ca61b8bee32722ede0c39562b39edeee90521ce0 |

| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |

| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |

| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/6/testReport/ |

| Max. process+thread count | 3234 (vs. ulimit of 5500) |

| modules | C: hadoop-common-project/hadoop-common hadoop-hdfs-project/hadoop-hdfs U: . |

| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/6/console |

| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |

| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.

[GitHub] [hadoop] tomscut commented on a change in pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

tomscut commented on a change in pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#discussion_r808711821

##########

File path: hadoop-hdfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/datanode/fsdataset/impl/FsDatasetImpl.java

##########

@@ -2812,6 +2816,9 @@ public void checkAndUpdate(String bpid, ScanInfo scanInfo)

+ memBlockInfo.getNumBytes() + " to "

+ memBlockInfo.getBlockDataLength());

memBlockInfo.setNumBytes(memBlockInfo.getBlockDataLength());

+ } else if (!isRegular) {

+ corruptBlock = new Block(memBlockInfo);

+ LOG.warn("Block:{} is not a regular file.", corruptBlock.getBlockId());

Review comment:

Thank you for explaining this. It looks like a mount-related operation was performed. After this change, did HDFS automatically clean up the abnormal file on the online cluster you mentioned?

[GitHub] [hadoop] jianghuazhu commented on pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

jianghuazhu commented on pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#issuecomment-1041830218

Would you like to help review this PR, @ferhui @tomscut .

Thank you very much.

[GitHub] [hadoop] tomscut commented on pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

tomscut commented on pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#issuecomment-1042553397

> Would you like to help review this PR, @ferhui @tomscut . Thank you very much.

Thanks @jianghuazhu for reminding me.

This check makes sense to me. What I'm curious about is what happened before the metaFile became a device file. Or, put differently, what is the root cause of this problem?

[GitHub] [hadoop] hadoop-yetus commented on pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

hadoop-yetus commented on pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#issuecomment-1039731742

:confetti_ball: **+1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|:----:|----------:|--------:|:--------:|:-------:|

| +0 :ok: | reexec | 0m 47s | | Docker mode activated. |

|||| _ Prechecks _ |

| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |

| +0 :ok: | codespell | 0m 0s | | codespell was not available. |

| +1 :green_heart: | @author | 0m 1s | | The patch does not contain any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 2 new or modified test files. |

|||| _ trunk Compile Tests _ |

| +0 :ok: | mvndep | 12m 38s | | Maven dependency ordering for branch |

| +1 :green_heart: | mvninstall | 22m 42s | | trunk passed |

| +1 :green_heart: | compile | 25m 0s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | compile | 21m 33s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |

| +1 :green_heart: | checkstyle | 3m 40s | | trunk passed |

| +1 :green_heart: | mvnsite | 3m 21s | | trunk passed |

| +1 :green_heart: | javadoc | 2m 30s | | trunk passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javadoc | 3m 25s | | trunk passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |

| +1 :green_heart: | spotbugs | 5m 54s | | trunk passed |

| +1 :green_heart: | shadedclient | 22m 58s | | branch has no errors when building and testing our client artifacts. |

|||| _ Patch Compile Tests _ |

| +0 :ok: | mvndep | 0m 27s | | Maven dependency ordering for patch |

| +1 :green_heart: | mvninstall | 2m 24s | | the patch passed |

| +1 :green_heart: | compile | 24m 30s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javac | 24m 30s | | the patch passed |

| +1 :green_heart: | compile | 21m 38s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |

| +1 :green_heart: | javac | 21m 38s | | the patch passed |

| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |

| +1 :green_heart: | checkstyle | 3m 59s | | the patch passed |

| +1 :green_heart: | mvnsite | 3m 32s | | the patch passed |

| +1 :green_heart: | javadoc | 2m 23s | | the patch passed with JDK Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 |

| +1 :green_heart: | javadoc | 3m 26s | | the patch passed with JDK Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |

| +1 :green_heart: | spotbugs | 6m 17s | | the patch passed |

| +1 :green_heart: | shadedclient | 23m 31s | | patch has no errors when building and testing our client artifacts. |

|||| _ Other Tests _ |

| +1 :green_heart: | unit | 18m 8s | | hadoop-common in the patch passed. |

| +1 :green_heart: | unit | 244m 31s | | hadoop-hdfs in the patch passed. |

| +1 :green_heart: | asflicense | 1m 11s | | The patch does not generate ASF License warnings. |

| | | 479m 39s | | |

| Subsystem | Report/Notes |

|----------:|:-------------|

| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/5/artifact/out/Dockerfile |

| GITHUB PR | https://github.com/apache/hadoop/pull/3861 |

| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |

| uname | Linux f5704bbd2c85 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |

| Build tool | maven |

| Personality | dev-support/bin/hadoop.sh |

| git revision | trunk / 39177f5f70296fc117b6f5e5bdf01a6acf52e040 |

| Default Java | Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |

| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.13+8-Ubuntu-0ubuntu1.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_312-8u312-b07-0ubuntu1~20.04-b07 |

| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/5/testReport/ |

| Max. process+thread count | 3458 (vs. ulimit of 5500) |

| modules | C: hadoop-common-project/hadoop-common hadoop-hdfs-project/hadoop-hdfs U: . |

| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/5/console |

| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |

| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.

[GitHub] [hadoop] hadoop-yetus commented on pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

hadoop-yetus commented on pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#issuecomment-1006241356

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|:----:|----------:|--------:|:--------:|:-------:|

| +0 :ok: | reexec | 0m 52s | | Docker mode activated. |

|||| _ Prechecks _ |

| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |

| +0 :ok: | codespell | 0m 1s | | codespell was not available. |

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |

|||| _ trunk Compile Tests _ |

| +0 :ok: | mvndep | 12m 56s | | Maven dependency ordering for branch |

| +1 :green_heart: | mvninstall | 22m 41s | | trunk passed |

| +1 :green_heart: | compile | 23m 33s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | compile | 20m 41s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | checkstyle | 4m 3s | | trunk passed |

| +1 :green_heart: | mvnsite | 3m 25s | | trunk passed |

| +1 :green_heart: | javadoc | 2m 24s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | javadoc | 3m 26s | | trunk passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | spotbugs | 6m 6s | | trunk passed |

| +1 :green_heart: | shadedclient | 24m 9s | | branch has no errors when building and testing our client artifacts. |

|||| _ Patch Compile Tests _ |

| +0 :ok: | mvndep | 0m 29s | | Maven dependency ordering for patch |

| +1 :green_heart: | mvninstall | 2m 17s | | the patch passed |

| +1 :green_heart: | compile | 22m 24s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| +1 :green_heart: | javac | 22m 24s | | the patch passed |

| +1 :green_heart: | compile | 20m 36s | | the patch passed with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| +1 :green_heart: | javac | 20m 36s | | the patch passed |

| +1 :green_heart: | blanks | 0m 0s | | The patch has no blanks issues. |

| +1 :green_heart: | checkstyle | 3m 53s | | the patch passed |

| +1 :green_heart: | mvnsite | 3m 19s | | the patch passed |

| +1 :green_heart: | javadoc | 2m 27s | | the patch passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |

| -1 :x: | javadoc | 1m 45s | [/results-javadoc-javadoc-hadoop-common-project_hadoop-common-jdkPrivateBuild-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/2/artifact/out/results-javadoc-javadoc-hadoop-common-project_hadoop-common-jdkPrivateBuild-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10.txt) | hadoop-common-project_hadoop-common-jdkPrivateBuild-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 with JDK Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 generated 1 new + 0 unchanged - 0 fixed = 1 total (was 0) |

| -1 :x: | spotbugs | 3m 50s | [/new-spotbugs-hadoop-hdfs-project_hadoop-hdfs.html](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/2/artifact/out/new-spotbugs-hadoop-hdfs-project_hadoop-hdfs.html) | hadoop-hdfs-project/hadoop-hdfs generated 1 new + 0 unchanged - 0 fixed = 1 total (was 0) |

| +1 :green_heart: | shadedclient | 23m 52s | | patch has no errors when building and testing our client artifacts. |

|||| _ Other Tests _ |

| -1 :x: | unit | 17m 44s | [/patch-unit-hadoop-common-project_hadoop-common.txt](https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/2/artifact/out/patch-unit-hadoop-common-project_hadoop-common.txt) | hadoop-common in the patch passed. |

| +1 :green_heart: | unit | 232m 43s | | hadoop-hdfs in the patch passed. |

| +1 :green_heart: | asflicense | 1m 13s | | The patch does not generate ASF License warnings. |

| | | 464m 32s | | |

| Reason | Tests |

|-------:|:------|

| SpotBugs | module:hadoop-hdfs-project/hadoop-hdfs |

| | Dead store to corruptBlock in org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl.checkAndUpdate(String, FsVolumeSpi$ScanInfo) At FsDatasetImpl.java:org.apache.hadoop.hdfs.server.datanode.fsdataset.impl.FsDatasetImpl.checkAndUpdate(String, FsVolumeSpi$ScanInfo) At FsDatasetImpl.java:[line 2814] |

| Failed junit tests | hadoop.ipc.TestIPC |
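For readers unfamiliar with the SpotBugs finding above: "dead store" wording normally corresponds to the `DLS_DEAD_LOCAL_STORE` pattern, where a local variable is assigned a value that is never read afterwards. The sketch below is not the code from `FsDatasetImpl.checkAndUpdate`; the class, method, and variable names are hypothetical and only illustrate the general shape of such a warning and one common way to resolve it.

```java
// Hypothetical illustration of a dead local store; NOT the actual Hadoop code.
public class DeadStoreSketch {

  // Pattern of the kind a dead-store check reports: 'mismatch' is assigned
  // inside the branch, but that value is never read before the method returns.
  static String describeWithDeadStore(long onDiskLength, long inMemoryLength) {
    boolean mismatch = onDiskLength != inMemoryLength;
    String state = "OK";
    if (mismatch) {
      state = "CORRUPT";
      mismatch = false; // dead store: nothing reads 'mismatch' after this line
    }
    return state;
  }

  // Same behavior with the unused assignment removed.
  static String describeFixed(long onDiskLength, long inMemoryLength) {
    boolean mismatch = onDiskLength != inMemoryLength;
    return mismatch ? "CORRUPT" : "OK";
  }

  public static void main(String[] args) {
    // Both variants return the same result; only the redundant store differs.
    System.out.println(describeWithDeadStore(10L, 12L));
    System.out.println(describeFixed(10L, 12L));
  }
}
```

Removing the unused assignment (or actually using the stored value, if that was the intent) is the usual way to clear this kind of warning; which of the two applies to the real patch depends on the code at the reported line.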

| Subsystem | Report/Notes |

|----------:|:-------------|

| Docker | ClientAPI=1.41 ServerAPI=1.41 base: https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/2/artifact/out/Dockerfile |

| GITHUB PR | https://github.com/apache/hadoop/pull/3861 |

| Optional Tests | dupname asflicense compile javac javadoc mvninstall mvnsite unit shadedclient spotbugs checkstyle codespell |

| uname | Linux e7380dcf7e87 4.15.0-58-generic #64-Ubuntu SMP Tue Aug 6 11:12:41 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux |

| Build tool | maven |

| Personality | dev-support/bin/hadoop.sh |

| git revision | trunk / 3a0edea82a15a207d2904cf2d2876dcdceeb3c7e |

| Default Java | Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| Multi-JDK versions | /usr/lib/jvm/java-11-openjdk-amd64:Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 /usr/lib/jvm/java-8-openjdk-amd64:Private Build-1.8.0_292-8u292-b10-0ubuntu1~20.04-b10 |

| Test Results | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/2/testReport/ |

| Max. process+thread count | 3367 (vs. ulimit of 5500) |

| modules | C: hadoop-common-project/hadoop-common hadoop-hdfs-project/hadoop-hdfs U: . |

| Console output | https://ci-hadoop.apache.org/job/hadoop-multibranch/job/PR-3861/2/console |

| versions | git=2.25.1 maven=3.6.3 spotbugs=4.2.2 |

| Powered by | Apache Yetus 0.14.0-SNAPSHOT https://yetus.apache.org |

This message was automatically generated.

[GitHub] [hadoop] jianghuazhu commented on pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

jianghuazhu commented on pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#issuecomment-1006528984

One unit test failed:

org.apache.hadoop.ipc.TestIPC

This failure appears to be unrelated to the changes I submitted.

Could you help review this PR, @aajisaka @virajjasani?

Thank you very much.

[GitHub] [hadoop] hadoop-yetus commented on pull request #3861: HDFS-16316.Improve DirectoryScanner: add regular file check related block.

Posted by GitBox <gi...@apache.org>.

hadoop-yetus commented on pull request #3861:

URL: https://github.com/apache/hadoop/pull/3861#issuecomment-1005940570

:broken_heart: **-1 overall**

| Vote | Subsystem | Runtime | Logfile | Comment |

|:----:|----------:|--------:|:--------:|:-------:|

| +0 :ok: | reexec | 0m 52s | | Docker mode activated. |

|||| _ Prechecks _ |

| +1 :green_heart: | dupname | 0m 0s | | No case conflicting files found. |

| +0 :ok: | codespell | 0m 1s | | codespell was not available. |

| +1 :green_heart: | @author | 0m 0s | | The patch does not contain any @author tags. |

| +1 :green_heart: | test4tests | 0m 0s | | The patch appears to include 1 new or modified test files. |

|||| _ trunk Compile Tests _ |

| +0 :ok: | mvndep | 11m 39s | | Maven dependency ordering for branch |

| +1 :green_heart: | mvninstall | 26m 0s | | trunk passed |

| +1 :green_heart: | compile | 28m 25s | | trunk passed with JDK Ubuntu-11.0.11+9-Ubuntu-0ubuntu2.20.04 |