Posted to commits@mxnet.apache.org by GitBox <gi...@apache.org> on 2018/12/04 19:16:13 UTC

[GitHub] indhub closed pull request #13488: Docs & website sphinx errors squished 🌦

URL: https://github.com/apache/incubator-mxnet/pull/13488

This is a PR merged from a forked repository.

As GitHub hides the original diff on merge, it is displayed below for the sake of provenance:

diff --git a/docs/api/scala/index.md b/docs/api/scala/index.md

index 8b32c9fe9e2..f7a15001987 100644

--- a/docs/api/scala/index.md

+++ b/docs/api/scala/index.md

@@ -19,6 +19,7 @@ See the [MXNet Scala API Documentation](docs/index.html#org.apache.mxnet.package

symbol.md

```

+

## Image Classification with the Scala Infer API

The Infer API can be used for single and batch image classification. More information can be found at the following locations:

@@ -32,20 +33,19 @@ The Infer API can be used for single and batch image classification. More inform

You can perform tensor or matrix computation in pure Scala:

```scala

- scala> import org.apache.mxnet._

import org.apache.mxnet._

- scala> val arr = NDArray.ones(2, 3)

- arr: org.apache.mxnet.NDArray = org.apache.mxnet.NDArray@f5e74790

+ val arr = NDArray.ones(2, 3)

+ // arr: org.apache.mxnet.NDArray = org.apache.mxnet.NDArray@f5e74790

- scala> arr.shape

- res0: org.apache.mxnet.Shape = (2,3)

+ arr.shape

+ // org.apache.mxnet.Shape = (2,3)

- scala> (arr * 2).toArray

- res2: Array[Float] = Array(2.0, 2.0, 2.0, 2.0, 2.0, 2.0)

+ (arr * 2).toArray

+ // Array[Float] = Array(2.0, 2.0, 2.0, 2.0, 2.0, 2.0)

- scala> (arr * 2).shape

- res3: org.apache.mxnet.Shape = (2,3)

+ (arr * 2).shape

+ // org.apache.mxnet.Shape = (2,3)

```

diff --git a/docs/api/scala/kvstore.md b/docs/api/scala/kvstore.md

index 2157176d23b..e195c4d7e72 100644

--- a/docs/api/scala/kvstore.md

+++ b/docs/api/scala/kvstore.md

@@ -16,13 +16,13 @@ Let's consider a simple example. It initializes

a (`int`, `NDArray`) pair into the store, and then pulls the value out.

```scala

- scala> val kv = KVStore.create("local") // create a local kv store.

- scala> val shape = Shape(2,3)

- scala> kv.init(3, NDArray.ones(shape)*2)

- scala> val a = NDArray.zeros(shape)

- scala> kv.pull(3, out = a)

- scala> a.toArray

- Array[Float] = Array(2.0, 2.0, 2.0, 2.0, 2.0, 2.0)

+val kv = KVStore.create("local") // create a local kv store.

+val shape = Shape(2,3)

+kv.init(3, NDArray.ones(shape)*2)

+val a = NDArray.zeros(shape)

+kv.pull(3, out = a)

+a.toArray

+// Array[Float] = Array(2.0, 2.0, 2.0, 2.0, 2.0, 2.0)

```

### Push, Aggregation, and Updater

@@ -30,10 +30,10 @@ a (`int`, `NDArray`) pair into the store, and then pulls the value out.

For any key that's been initialized, you can push a new value with the same shape to the key, as follows:

```scala

- scala> kv.push(3, NDArray.ones(shape)*8)

- scala> kv.pull(3, out = a) // pull out the value

- scala> a.toArray

- Array[Float] = Array(8.0, 8.0, 8.0, 8.0, 8.0, 8.0)

+kv.push(3, NDArray.ones(shape)*8)

+kv.pull(3, out = a) // pull out the value

+a.toArray

+// Array[Float] = Array(8.0, 8.0, 8.0, 8.0, 8.0, 8.0)

```

The data that you want to push can be stored on any device. Furthermore, you can push multiple

@@ -41,13 +41,13 @@ values into the same key, where KVStore first sums all of these

values, and then pushes the aggregated value, as follows:

```scala

- scala> val gpus = Array(Context.gpu(0), Context.gpu(1), Context.gpu(2), Context.gpu(3))

- scala> val b = Array(NDArray.ones(shape, gpus(0)), NDArray.ones(shape, gpus(1)), \

- scala> NDArray.ones(shape, gpus(2)), NDArray.ones(shape, gpus(3)))

- scala> kv.push(3, b)

- scala> kv.pull(3, out = a)

- scala> a.toArray

- Array[Float] = Array(4.0, 4.0, 4.0, 4.0, 4.0, 4.0)

+val gpus = Array(Context.gpu(0), Context.gpu(1), Context.gpu(2), Context.gpu(3))

+val b = Array(NDArray.ones(shape, gpus(0)), NDArray.ones(shape, gpus(1)), \

+NDArray.ones(shape, gpus(2)), NDArray.ones(shape, gpus(3)))

+kv.push(3, b)

+kv.pull(3, out = a)

+a.toArray

+// Array[Float] = Array(4.0, 4.0, 4.0, 4.0, 4.0, 4.0)

```

For each push command, KVStore applies the pushed value to the value stored by an

@@ -55,22 +55,22 @@ For each push command, KVStore applies the pushed value to the value stored by a

control how data is merged.

```scala

- scala> val updater = new MXKVStoreUpdater {

- override def update(key: Int, input: NDArray, stored: NDArray): Unit = {

- println(s"update on key $key")

- stored += input * 2

- }

- override def dispose(): Unit = {}

- }

- scala> kv.setUpdater(updater)

- scala> kv.pull(3, a)

- scala> a.toArray

- Array[Float] = Array(4.0, 4.0, 4.0, 4.0, 4.0, 4.0)

- scala> kv.push(3, NDArray.ones(shape))

- update on key 3

- scala> kv.pull(3, a)

- scala> a.toArray

- Array[Float] = Array(6.0, 6.0, 6.0, 6.0, 6.0, 6.0)

+val updater = new MXKVStoreUpdater {

+ override def update(key: Int, input: NDArray, stored: NDArray): Unit = {

+ println(s"update on key $key")

+ stored += input * 2

+ }

+ override def dispose(): Unit = {}

+ }

+kv.setUpdater(updater)

+kv.pull(3, a)

+a.toArray

+// Array[Float] = Array(4.0, 4.0, 4.0, 4.0, 4.0, 4.0)

+kv.push(3, NDArray.ones(shape))

+// update on key 3

+kv.pull(3, a)

+a.toArray

+// Array[Float] = Array(6.0, 6.0, 6.0, 6.0, 6.0, 6.0)

```

### Pull

@@ -79,11 +79,11 @@ You've already seen how to pull a single key-value pair. Similar to the way that

pull the value into several devices with a single call.

```scala

- scala> val b = Array(NDArray.ones(shape, gpus(0)), NDArray.ones(shape, gpus(1)),\

- scala> NDArray.ones(shape, gpus(2)), NDArray.ones(shape, gpus(3)))

- scala> kv.pull(3, outs = b)

- scala> b(1).toArray

- Array[Float] = Array(6.0, 6.0, 6.0, 6.0, 6.0, 6.0)

+val b = Array(NDArray.ones(shape, gpus(0)), NDArray.ones(shape, gpus(1)),\

+NDArray.ones(shape, gpus(2)), NDArray.ones(shape, gpus(3)))

+kv.pull(3, outs = b)

+b(1).toArray

+// Array[Float] = Array(6.0, 6.0, 6.0, 6.0, 6.0, 6.0)

```

## List Key-Value Pairs

@@ -92,14 +92,14 @@ All of the operations that we've discussed so far are performed on a single key.

the interface for generating a list of key-value pairs. For a single device, use the following:

```scala

- scala> val keys = Array(5, 7, 9)

- scala> kv.init(keys, Array.fill(keys.length)(NDArray.ones(shape)))

- scala> kv.push(keys, Array.fill(keys.length)(NDArray.ones(shape)))

- update on key: 5

- update on key: 7

- update on key: 9

- scala> val b = Array.fill(keys.length)(NDArray.zeros(shape))

- scala> kv.pull(keys, outs = b)

- scala> b(1).toArray

- Array[Float] = Array(3.0, 3.0, 3.0, 3.0, 3.0, 3.0)

+val keys = Array(5, 7, 9)

+kv.init(keys, Array.fill(keys.length)(NDArray.ones(shape)))

+kv.push(keys, Array.fill(keys.length)(NDArray.ones(shape)))

+// update on key: 5

+// update on key: 7

+// update on key: 9

+val b = Array.fill(keys.length)(NDArray.zeros(shape))

+kv.pull(keys, outs = b)

+b(1).toArray

+// Array[Float] = Array(3.0, 3.0, 3.0, 3.0, 3.0, 3.0)

```

diff --git a/docs/api/scala/ndarray.md b/docs/api/scala/ndarray.md

index 3d4bc37a19e..9e87d397c8b 100644

--- a/docs/api/scala/ndarray.md

+++ b/docs/api/scala/ndarray.md

@@ -14,13 +14,13 @@ Topics:

Create `mxnet.ndarray` as follows:

```scala

- scala> import org.apache.mxnet._

- scala> // all-zero array of dimension 100x50

- scala> val a = NDArray.zeros(100, 50)

- scala> // all-one array of dimension 256x32x128x1

- scala> val b = NDArray.ones(256, 32, 128, 1)

- scala> // initialize array with contents, you can specify dimensions of array using Shape parameter while creating array.

- scala> val c = NDArray.array(Array(1, 2, 3, 4, 5, 6), shape = Shape(2, 3))

+import org.apache.mxnet._

+// all-zero array of dimension 100x50

+val a = NDArray.zeros(100, 50)

+// all-one array of dimension 256x32x128x1

+val b = NDArray.ones(256, 32, 128, 1)

+// initialize array with contents, you can specify dimensions of array using Shape parameter while creating array.

+val c = NDArray.array(Array(1, 2, 3, 4, 5, 6), shape = Shape(2, 3))

```

This is similar to the way you use `numpy`.

## NDArray Operations

@@ -30,77 +30,77 @@ We provide some basic ndarray operations, like arithmetic and slice operations.

### Arithmetic Operations

```scala

- scala> import org.apache.mxnet._

- scala> val a = NDArray.zeros(100, 50)

- scala> a.shape

- org.apache.mxnet.Shape = (100,50)

- scala> val b = NDArray.ones(100, 50)

- scala> // c and d will be calculated in parallel here!

- scala> val c = a + b

- scala> val d = a - b

- scala> // inplace operation, b's contents will be modified, but c and d won't be affected.

- scala> b += d

+import org.apache.mxnet._

+val a = NDArray.zeros(100, 50)

+a.shape

+// org.apache.mxnet.Shape = (100,50)

+val b = NDArray.ones(100, 50)

+// c and d will be calculated in parallel here!

+val c = a + b

+val d = a - b

+// inplace operation, b's contents will be modified, but c and d won't be affected.

+b += d

```

### Multiplication/Division Operations

```scala

- scala> import org.apache.mxnet._

- //Multiplication

- scala> val ndones = NDArray.ones(2, 1)

- scala> val ndtwos = ndones * 2

- scala> ndtwos.toArray

- Array[Float] = Array(2.0, 2.0)

- scala> (ndones * ndones).toArray

- Array[Float] = Array(1.0, 1.0)

- scala> (ndtwos * ndtwos).toArray

- Array[Float] = Array(4.0, 4.0)

- scala> ndtwos *= ndtwos // inplace

- scala> ndtwos.toArray

- Array[Float] = Array(4.0, 4.0)

-

- //Division

- scala> val ndones = NDArray.ones(2, 1)

- scala> val ndzeros = ndones - 1f

- scala> val ndhalves = ndones / 2

- scala> ndhalves.toArray

- Array[Float] = Array(0.5, 0.5)

- scala> (ndhalves / ndhalves).toArray

- Array[Float] = Array(1.0, 1.0)

- scala> (ndones / ndones).toArray

- Array[Float] = Array(1.0, 1.0)

- scala> (ndzeros / ndones).toArray

- Array[Float] = Array(0.0, 0.0)

- scala> ndhalves /= ndhalves

- scala> ndhalves.toArray

- Array[Float] = Array(1.0, 1.0)

+import org.apache.mxnet._

+// Multiplication

+val ndones = NDArray.ones(2, 1)

+val ndtwos = ndones * 2

+ndtwos.toArray

+// Array[Float] = Array(2.0, 2.0)

+(ndones * ndones).toArray

+// Array[Float] = Array(1.0, 1.0)

+(ndtwos * ndtwos).toArray

+// Array[Float] = Array(4.0, 4.0)

+ndtwos *= ndtwos // inplace

+ndtwos.toArray

+// Array[Float] = Array(4.0, 4.0)

+

+//Division

+val ndones = NDArray.ones(2, 1)

+val ndzeros = ndones - 1f

+val ndhalves = ndones / 2

+ndhalves.toArray

+// Array[Float] = Array(0.5, 0.5)

+(ndhalves / ndhalves).toArray

+// Array[Float] = Array(1.0, 1.0)

+(ndones / ndones).toArray

+// Array[Float] = Array(1.0, 1.0)

+(ndzeros / ndones).toArray

+// Array[Float] = Array(0.0, 0.0)

+ndhalves /= ndhalves

+ndhalves.toArray

+// Array[Float] = Array(1.0, 1.0)

```

### Slice Operations

```scala

- scala> import org.apache.mxnet._

- scala> val a = NDArray.array(Array(1f, 2f, 3f, 4f, 5f, 6f), shape = Shape(3, 2))

- scala> val a1 = a.slice(1)

- scala> assert(a1.shape === Shape(1, 2))

- scala> assert(a1.toArray === Array(3f, 4f))

-

- scala> val a2 = arr.slice(1, 3)

- scala> assert(a2.shape === Shape(2, 2))

- scala> assert(a2.toArray === Array(3f, 4f, 5f, 6f))

+import org.apache.mxnet._

+val a = NDArray.array(Array(1f, 2f, 3f, 4f, 5f, 6f), shape = Shape(3, 2))

+val a1 = a.slice(1)

+assert(a1.shape === Shape(1, 2))

+assert(a1.toArray === Array(3f, 4f))

+

+val a2 = arr.slice(1, 3)

+assert(a2.shape === Shape(2, 2))

+assert(a2.toArray === Array(3f, 4f, 5f, 6f))

```

### Dot Product

```scala

- scala> import org.apache.mxnet._

- scala> val arr1 = NDArray.array(Array(1f, 2f), shape = Shape(1, 2))

- scala> val arr2 = NDArray.array(Array(3f, 4f), shape = Shape(2, 1))

- scala> val res = NDArray.dot(arr1, arr2)

- scala> res.shape

- org.apache.mxnet.Shape = (1,1)

- scala> res.toArray

- Array[Float] = Array(11.0)

+import org.apache.mxnet._

+val arr1 = NDArray.array(Array(1f, 2f), shape = Shape(1, 2))

+val arr2 = NDArray.array(Array(3f, 4f), shape = Shape(2, 1))

+val res = NDArray.dot(arr1, arr2)

+res.shape

+// org.apache.mxnet.Shape = (1,1)

+res.toArray

+// Array[Float] = Array(11.0)

```

### Save and Load NDArray

@@ -108,18 +108,18 @@ We provide some basic ndarray operations, like arithmetic and slice operations.

You can use MXNet functions to save and load a list or dictionary of NDArrays from file systems, as follows:

```scala

- scala> import org.apache.mxnet._

- scala> val a = NDArray.zeros(100, 200)

- scala> val b = NDArray.zeros(100, 200)

- scala> // save list of NDArrays

- scala> NDArray.save("/path/to/array/file", Array(a, b))

- scala> // save dictionary of NDArrays to AWS S3

- scala> NDArray.save("s3://path/to/s3/array", Map("A" -> a, "B" -> b))

- scala> // save list of NDArrays to hdfs.

- scala> NDArray.save("hdfs://path/to/hdfs/array", Array(a, b))

- scala> val from_file = NDArray.load("/path/to/array/file")

- scala> val from_s3 = NDArray.load("s3://path/to/s3/array")

- scala> val from_hdfs = NDArray.load("hdfs://path/to/hdfs/array")

+import org.apache.mxnet._

+val a = NDArray.zeros(100, 200)

+val b = NDArray.zeros(100, 200)

+// save list of NDArrays

+NDArray.save("/path/to/array/file", Array(a, b))

+// save dictionary of NDArrays to AWS S3

+NDArray.save("s3://path/to/s3/array", Map("A" -> a, "B" -> b))

+// save list of NDArrays to hdfs.

+NDArray.save("hdfs://path/to/hdfs/array", Array(a, b))

+val from_file = NDArray.load("/path/to/array/file")

+val from_s3 = NDArray.load("s3://path/to/s3/array")

+val from_hdfs = NDArray.load("hdfs://path/to/hdfs/array")

```

The good thing about using the `save` and `load` interface is that you can use the format across all `mxnet` language bindings. They also already support Amazon S3 and HDFS.

@@ -128,29 +128,29 @@ The good thing about using the `save` and `load` interface is that you can use t

Device information is stored in the `mxnet.Context` structure. When creating NDArray in MXNet, you can use the context argument (the default is the CPU context) to create arrays on specific devices as follows:

```scala

- scala> import org.apache.mxnet._

- scala> val cpu_a = NDArray.zeros(100, 200)

- scala> cpu_a.context

- org.apache.mxnet.Context = cpu(0)

- scala> val ctx = Context.gpu(0)

- scala> val gpu_b = NDArray.zeros(Shape(100, 200), ctx)

- scala> gpu_b.context

- org.apache.mxnet.Context = gpu(0)

+import org.apache.mxnet._

+val cpu_a = NDArray.zeros(100, 200)

+cpu_a.context

+// org.apache.mxnet.Context = cpu(0)

+val ctx = Context.gpu(0)

+val gpu_b = NDArray.zeros(Shape(100, 200), ctx)

+gpu_b.context

+// org.apache.mxnet.Context = gpu(0)

```

Currently, we *do not* allow operations among arrays from different contexts. To manually enable this, use the `copyto` member function to copy the content to different devices, and continue computation:

```scala

- scala> import org.apache.mxnet._

- scala> val x = NDArray.zeros(100, 200)

- scala> val ctx = Context.gpu(0)

- scala> val y = NDArray.zeros(Shape(100, 200), ctx)

- scala> val z = x + y

- mxnet.base.MXNetError: [13:29:12] src/ndarray/ndarray.cc:33:

- Check failed: lhs.ctx() == rhs.ctx() operands context mismatch

- scala> val cpu_y = NDArray.zeros(100, 200)

- scala> y.copyto(cpu_y)

- scala> val z = x + cpu_y

+import org.apache.mxnet._

+val x = NDArray.zeros(100, 200)

+val ctx = Context.gpu(0)

+val y = NDArray.zeros(Shape(100, 200), ctx)

+val z = x + y

+// mxnet.base.MXNetError: [13:29:12] src/ndarray/ndarray.cc:33:

+// Check failed: lhs.ctx() == rhs.ctx() operands context mismatch

+val cpu_y = NDArray.zeros(100, 200)

+y.copyto(cpu_y)

+val z = x + cpu_y

```

## Next Steps

diff --git a/docs/api/scala/symbol.md b/docs/api/scala/symbol.md

index 5b73ae5d600..c10d5fb60a2 100644

--- a/docs/api/scala/symbol.md

+++ b/docs/api/scala/symbol.md

@@ -20,14 +20,14 @@ You can configure the graphs either at the level of neural network layer operati

The following example configures a two-layer neural network.

```scala

- scala> import org.apache.mxnet._

- scala> val data = Symbol.Variable("data")

- scala> val fc1 = Symbol.api.FullyConnected(Some(data), num_hidden = 128, name = "fc1")

- scala> val act1 = Symbol.api.Activation(Some(fc1), "relu", "relu1")

- scala> val fc2 = Symbol.api.FullyConnected(some(act1), num_hidden = 64, name = "fc2")

- scala> val net = Symbol.api.SoftmaxOutput(Some(fc2), name = "out")

- scala> :type net

- org.apache.mxnet.Symbol

+ import org.apache.mxnet._

+ val data = Symbol.Variable("data")

+ val fc1 = Symbol.api.FullyConnected(Some(data), num_hidden = 128, name = "fc1")

+ val act1 = Symbol.api.Activation(Some(fc1), "relu", "relu1")

+ val fc2 = Symbol.api.FullyConnected(some(act1), num_hidden = 64, name = "fc2")

+ val net = Symbol.api.SoftmaxOutput(Some(fc2), name = "out")

+ :type net

+ // org.apache.mxnet.Symbol

```

The basic arithmetic operators (plus, minus, div, multiplication) are overloaded for

@@ -36,10 +36,10 @@ The basic arithmetic operators (plus, minus, div, multiplication) are overloaded

The following example creates a computation graph that adds two inputs together.

```scala

- scala> import org.apache.mxnet._

- scala> val a = Symbol.Variable("a")

- scala> val b = Symbol.Variable("b")

- scala> val c = a + b

+ import org.apache.mxnet._

+ val a = Symbol.Variable("a")

+ val b = Symbol.Variable("b")

+ val c = a + b

```

## Symbol Attributes

@@ -54,7 +54,7 @@ For proper communication with the C++ backend, both the key and values of the at

```

data.attr("mood")

- res6: Option[String] = Some(angry)

+ // Option[String] = Some(angry)

```

To attach attributes, you can use ```AttrScope```. ```AttrScope``` automatically adds the specified attributes to all of the symbols created within that scope. The user can also inherit this object to change naming behavior. For example:

@@ -71,7 +71,7 @@ To attach attributes, you can use ```AttrScope```. ```AttrScope``` automatically

val exceedScopeData = Symbol.Variable("data3")

assert(exceedScopeData.attr("group") === None, "No group attr in global attr scope")

-```

+```

## Serialization

@@ -83,14 +83,14 @@ Refer to [API documentation](http://mxnet.incubator.apache.org/api/scala/docs/in

The following example shows how to save a symbol to an S3 bucket, load it back, and compare two symbols using a JSON string.

```scala

- scala> import org.apache.mxnet._

- scala> val a = Symbol.Variable("a")

- scala> val b = Symbol.Variable("b")

- scala> val c = a + b

- scala> c.save("s3://my-bucket/symbol-c.json")

- scala> val c2 = Symbol.load("s3://my-bucket/symbol-c.json")

- scala> c.toJson == c2.toJson

- Boolean = true

+ import org.apache.mxnet._

+ val a = Symbol.Variable("a")

+ val b = Symbol.Variable("b")

+ val c = a + b

+ c.save("s3://my-bucket/symbol-c.json")

+ val c2 = Symbol.load("s3://my-bucket/symbol-c.json")

+ c.toJson == c2.toJson

+ // Boolean = true

```

## Executing Symbols

@@ -101,25 +101,25 @@ handled by the high-level [Model class](model.md) and the [`fit()`] function.

For neural networks used in "feed-forward", "prediction", or "inference" mode (all terms for the same

thing: running a trained network), the input arguments are the

-input data, and the weights of the neural network that were learned during training.

+input data, and the weights of the neural network that were learned during training.

To manually execute a set of symbols, you need to create an [`Executor`] object,

-which is typically constructed by calling the [`simpleBind(<parameters>)`] method on a symbol.

+which is typically constructed by calling the [`simpleBind(<parameters>)`] method on a symbol.

## Multiple Outputs

To group the symbols together, use the [mxnet.symbol.Group](#mxnet.symbol.Group) function.

```scala

- scala> import org.apache.mxnet._

- scala> val data = Symbol.Variable("data")

- scala> val fc1 = Symbol.api.FullyConnected(Some(data), num_hidden = 128, name = "fc1")

- scala> val act1 = Symbol.api.Activation(Some(fc1), "relu", "relu1")

- scala> val fc2 = Symbol.api.FullyConnected(Some(act1), num_hidden = 64, name = "fc2")

- scala> val net = Symbol.api.SoftmaxOutput(Some(fc2), name = "out")

- scala> val group = Symbol.Group(fc1, net)

- scala> group.listOutputs()

- IndexedSeq[String] = ArrayBuffer(fc1_output, out_output)

+ import org.apache.mxnet._

+ val data = Symbol.Variable("data")

+ val fc1 = Symbol.api.FullyConnected(Some(data), num_hidden = 128, name = "fc1")

+ val act1 = Symbol.api.Activation(Some(fc1), "relu", "relu1")

+ val fc2 = Symbol.api.FullyConnected(Some(act1), num_hidden = 64, name = "fc2")

+ val net = Symbol.api.SoftmaxOutput(Some(fc2), name = "out")

+ val group = Symbol.Group(fc1, net)

+ group.listOutputs()

+ // IndexedSeq[String] = ArrayBuffer(fc1_output, out_output)

```

After you get the ```group```, you can bind on ```group``` instead.

diff --git a/docs/gluon/index.md b/docs/gluon/index.md

index 4f6d3c10f38..c34ee9c2273 100644

--- a/docs/gluon/index.md

+++ b/docs/gluon/index.md

@@ -43,7 +43,7 @@ The community is also working on parallel effort to create a foundational resour

Use plug-and-play neural network building blocks, including predefined layers, optimizers, and initializers:

-```python

+```

net = gluon.nn.Sequential()

# When instantiated, Sequential stores a chain of neural network layers.

# Once presented with data, Sequential executes each layer in turn, using

@@ -59,7 +59,7 @@ with net.name_scope():

Prototype, build, and train neural networks in fully imperative manner using the MXNet autograd package and the Gluon trainer method:

-```python

+```

epochs = 10

for e in range(epochs):

@@ -76,7 +76,7 @@ for e in range(epochs):

Build neural networks on the fly for use cases where neural networks must change in size and shape during model training:

-```python

+```

def forward(self, F, inputs, tree):

children_outputs = [self.forward(F, inputs, child)

for child in tree.children]

@@ -89,7 +89,7 @@ def forward(self, F, inputs, tree):

Easily cache the neural network to achieve high performance by defining your neural network with ``HybridSequential`` and calling the ``hybridize`` method:

-```python

+```

net = nn.HybridSequential()

with net.name_scope():

net.add(nn.Dense(256, activation="relu"))

@@ -97,7 +97,7 @@ with net.name_scope():

net.add(nn.Dense(2))

```

-```python

+```

net.hybridize()

```

diff --git a/docs/install/ubuntu_setup.md b/docs/install/ubuntu_setup.md

index 9961c706af1..7d8da182b07 100644

--- a/docs/install/ubuntu_setup.md

+++ b/docs/install/ubuntu_setup.md

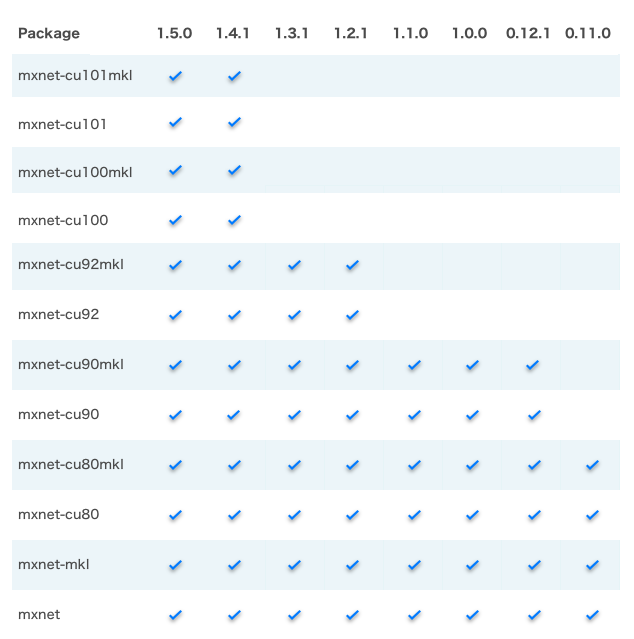

@@ -79,18 +79,6 @@ Alternatively, you can use the table below to select the package that suits your

#### pip Package Availability

The following table presents the pip packages that are recommended for each version of MXNet.

-<!-- Table does not render - using a picture alternative

-| Package / MXNet Version | 1.3.0 | 1.2.1 | 1.1.0 | 1.0.0 | 0.12.1 | 0.11.0 |

-|-|:-:|:-:|:-:|:-:|:-:|:-:|

-| mxnet-cu92mkl | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="far fa-times-circle"></i> | <i class="far fa-times-circle"></i> | <i class="far fa-times-circle"></i> | <i class="far fa-times-circle"></i> |

-| mxnet-cu92 | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="far fa-times-circle"></i> | <i class="far fa-times-circle"></i> | <i class="far fa-times-circle"></i> | <i class="far fa-times-circle"></i> |

-| mxnet-cu90mkl | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="far fa-times-circle"></i> |

-| mxnet-cu90 | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="far fa-times-circle"></i> |

-| mxnet-cu80mkl | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> |

-| mxnet-cu80 | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> |

-| mxnet-mkl | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> |

-| mxnet | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> | <i class="fas fa-check"></i> |

--->

diff --git a/docs/tutorials/r/fiveMinutesNeuralNetwork.md b/docs/tutorials/r/fiveMinutesNeuralNetwork.md

index a2ce5ecd376..6d79cd288d2 100644

--- a/docs/tutorials/r/fiveMinutesNeuralNetwork.md

+++ b/docs/tutorials/r/fiveMinutesNeuralNetwork.md

@@ -1,7 +1,7 @@

Develop a Neural Network with MXNet in Five Minutes

=============================================

-This tutorial is designed for new users of the `mxnet` package for R. It shows how to construct a neural network to do regression in 5 minutes. It shows how to perform classification and regression tasks, respectively. The data we use is in the `mlbench` package. Instructions to install R and MXNet's R package in different environments can be found [here](http://mxnet.incubator.apache.org/install/index.html?platform=Linux&language=R&processor=CPU).

+This tutorial is designed for new users of the `mxnet` package for R. It shows how to construct a neural network to do regression in 5 minutes. It shows how to perform classification and regression tasks, respectively. The data we use is in the `mlbench` package. Instructions to install R and MXNet's R package in different environments can be found [here](http://mxnet.incubator.apache.org/install/index.html?platform=Linux&language=R&processor=CPU).

## Classification

@@ -88,7 +88,7 @@ Note that `mx.set.seed` controls the random process in `mxnet`. You can see the

To get an idea of what is happening, view the computation graph from R:

- ```{r}

+ ```r

graph.viz(model$symbol)

```

diff --git a/python/mxnet/gluon/parameter.py b/python/mxnet/gluon/parameter.py

index b3d8f80318b..2e130d498c1 100644

--- a/python/mxnet/gluon/parameter.py

+++ b/python/mxnet/gluon/parameter.py

@@ -755,7 +755,7 @@ def get_constant(self, name, value=None):

Returns

-------

- Constant

+ :py:class:`.Constant`

The created or retrieved :py:class:`.Constant`.

"""

name = self.prefix + name

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

With regards,

Apache Git Services