Posted to commits@hudi.apache.org by GitBox <gi...@apache.org> on 2020/08/18 21:54:38 UTC

[GitHub] [hudi] jiegzhan opened a new issue #1980: [SUPPORT] Small files (423KB) generated after running delete operation

jiegzhan opened a new issue #1980:

URL: https://github.com/apache/hudi/issues/1980

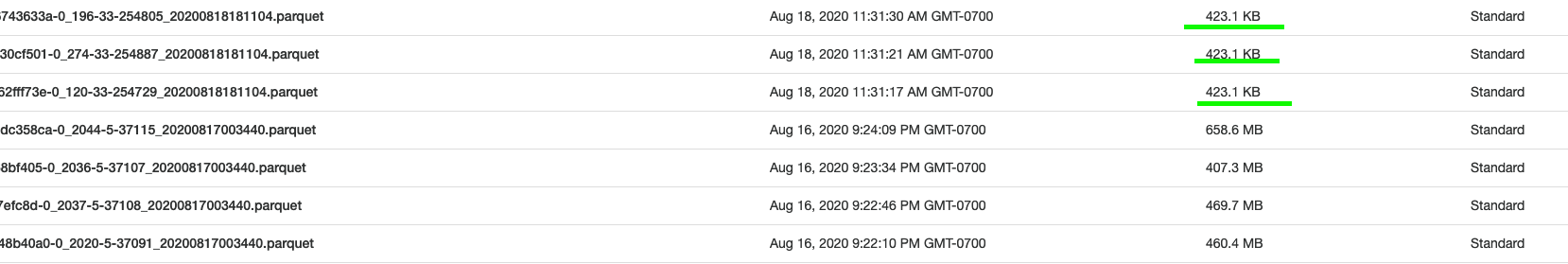

I ran a `delete` query on my Hudi table and found a lot of newly generated small files (423KB; see screenshot). Is there a way to control the size of these files when running a `delete` query? Basically, I am trying to avoid ending up with many small files after running a `delete` query.

This is my delete query:

```
// Imports assumed for a spark-shell session with the Hudi bundle on the classpath.
import org.apache.hudi.DataSourceWriteOptions._
import org.apache.hudi.config.HoodieWriteConfig._
import org.apache.hudi.hive.MultiPartKeysValueExtractor
import org.apache.spark.sql.SaveMode._
import org.apache.spark.sql.functions.col

// Select the records to delete: every row whose device_id starts with "D".
val deleteExistingRecords = spark.read.format("org.apache.hudi").
  load(basePath + "/*/*").
  where(col("device_id").startsWith("D"))

// Write the selected rows back with the "delete" operation.
deleteExistingRecords.
  write.format("org.apache.hudi").
  option("hoodie.datasource.write.operation", "delete").
  option("hoodie.parquet.max.file.size", 2000000000).
  option("hoodie.parquet.block.size", 2000000000).
  option("hoodie.parquet.small.file.limit", 512000000).
  option(TABLE_NAME, tableName).
  option(TABLE_TYPE_OPT_KEY, "COPY_ON_WRITE").
  option(RECORDKEY_FIELD_OPT_KEY, "device_id").
  option(PRECOMBINE_FIELD_OPT_KEY, "device_id").
  option(PARTITIONPATH_FIELD_OPT_KEY, "date_key").
  option(HIVE_SYNC_ENABLED_OPT_KEY, "true").
  option(HIVE_DATABASE_OPT_KEY, "default").
  option(HIVE_TABLE_OPT_KEY, "hudi_fact_device_logs").
  option(HIVE_USER_OPT_KEY, "hadoop").
  option(HIVE_PARTITION_FIELDS_OPT_KEY, "date_key").
  option(HIVE_PARTITION_EXTRACTOR_CLASS_OPT_KEY, classOf[MultiPartKeysValueExtractor].getName).
  mode(Append).
  save(basePath)
```

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] jiegzhan commented on issue #1980: [SUPPORT] Small files (423KB) generated after running delete query

Posted by GitBox <gi...@apache.org>.

jiegzhan commented on issue #1980:

URL: https://github.com/apache/hudi/issues/1980#issuecomment-676688579

@bvaradar that makes sense, thanks. After running many delete queries, I got a lot of small files in S3. Is there a way to merge these small files? Basically, I am trying to clean up the S3 folder after running many GDPR delete requests. Thanks.

[GitHub] [hudi] bvaradar commented on issue #1980: [SUPPORT] Small files (423KB) generated after running delete query

Posted by GitBox <gi...@apache.org>.

bvaradar commented on issue #1980:

URL: https://github.com/apache/hudi/issues/1980#issuecomment-676454679

[GitHub] [hudi] jiegzhan commented on issue #1980: [SUPPORT] Small files (423KB) generated after running delete query

Posted by GitBox <gi...@apache.org>.

jiegzhan commented on issue #1980:

URL: https://github.com/apache/hudi/issues/1980#issuecomment-676544068

@bvaradar What is the size of the new version of the same files after running the delete query? For me, they are 423KB.

Step 1: ran a bulk_insert query:

```
df.
  write.format("org.apache.hudi").
  option("hoodie.datasource.write.operation", "bulk_insert").
  option("hoodie.bulkinsert.shuffle.parallelism", 5120).
  option("hoodie.parquet.max.file.size", 2000000000).
  option("hoodie.parquet.block.size", 2000000000).
  option("hoodie.parquet.small.file.limit", 512000000).
  option("hoodie.combine.before.insert", "false").
  option("hoodie.combine.before.upsert", "false").
  option(TABLE_NAME, tableName).
  option(TABLE_TYPE_OPT_KEY, "COPY_ON_WRITE").
  option(RECORDKEY_FIELD_OPT_KEY, "device_id").
  option(PRECOMBINE_FIELD_OPT_KEY, "device_id").
  option(PARTITIONPATH_FIELD_OPT_KEY, "date_key").
  option(HIVE_SYNC_ENABLED_OPT_KEY, "true").
  option(HIVE_DATABASE_OPT_KEY, "default").
  option(HIVE_TABLE_OPT_KEY, "hudi_fact_device_logs").
  option(HIVE_USER_OPT_KEY, "hadoop").
  option(HIVE_PARTITION_FIELDS_OPT_KEY, "date_key").
  option(HIVE_PARTITION_EXTRACTOR_CLASS_OPT_KEY, classOf[MultiPartKeysValueExtractor].getName).
  mode(Append).
  save(basePath)
```

**Got 5120 parquet files in S3, each about 300MB - 700MB.**

Step 2: ran a delete query:

```
val deleteExistingRecords = spark.read.format("org.apache.hudi").
  load(basePath + "/*/*").
  where(col("device_id").startsWith("D"))

deleteExistingRecords.
  write.format("org.apache.hudi").
  option("hoodie.datasource.write.operation", "delete").
  option("hoodie.parquet.max.file.size", 2000000000).
  option("hoodie.parquet.block.size", 2000000000).
  option("hoodie.parquet.small.file.limit", 512000000).
  option(TABLE_NAME, tableName).
  option(TABLE_TYPE_OPT_KEY, "COPY_ON_WRITE").
  option(RECORDKEY_FIELD_OPT_KEY, "device_id").
  option(PRECOMBINE_FIELD_OPT_KEY, "device_id").
  option(PARTITIONPATH_FIELD_OPT_KEY, "date_key").
  option(HIVE_SYNC_ENABLED_OPT_KEY, "true").
  option(HIVE_DATABASE_OPT_KEY, "default").
  option(HIVE_TABLE_OPT_KEY, "hudi_fact_device_logs").
  option(HIVE_USER_OPT_KEY, "hadoop").
  option(HIVE_PARTITION_FIELDS_OPT_KEY, "date_key").
  option(HIVE_PARTITION_EXTRACTOR_CLASS_OPT_KEY, classOf[MultiPartKeysValueExtractor].getName).
  mode(Append).
  save(basePath)
```

**Got some newly generated small files (423KB) (see the screenshot at the top).**

[GitHub] [hudi] jiegzhan commented on issue #1980: [SUPPORT] Small files (423KB) generated after running delete query

Posted by GitBox <gi...@apache.org>.

jiegzhan commented on issue #1980:

URL: https://github.com/apache/hudi/issues/1980#issuecomment-679398095

@bvaradar, before re-clustering is available, I tested [hoodie.cleaner.commits.retained](https://hudi.apache.org/docs/configurations.html#retainCommits).

I set `option("hoodie.cleaner.commits.retained", 1)`, then issued a few delete queries. For each parquet file in S3, the latest version and 1 older version (sometimes, not always) were kept; all other versions were removed from S3. Is this how it works?

[GitHub] [hudi] bvaradar commented on issue #1980: [SUPPORT] Small files (423KB) generated after running delete query

Posted by GitBox <gi...@apache.org>.

bvaradar commented on issue #1980:

URL: https://github.com/apache/hudi/issues/1980#issuecomment-676626002

@jiegzhan : This can happen if you are deleting all or most of the records in your dataset. Even if all the records in a file are deleted, Hudi creates a new version of the file (an empty parquet file) to ensure that queries do not accidentally read deleted records.

The commit metadata should have information about the number of records you deleted. Let me know if this makes sense.
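
To make the explanation above concrete, here is a minimal toy model in plain Scala, with no Hudi or Spark dependency. The names `FileVersion`, `FileGroup`, and `applyDelete` are hypothetical and exist only for illustration. The sketch assumes the copy-on-write behaviour described above: every file that contains a deleted key gets a new version at the delete's commit time, even when that version ends up with no records, while files without matching keys are left alone. It is an illustration of the idea, not Hudi's implementation.

```scala
// Toy model of a copy-on-write delete. All names are hypothetical, not Hudi APIs.
final case class FileVersion(commitTime: String, keys: Set[String])

// Assumes every file group holds at least one version, oldest first.
final case class FileGroup(fileId: String, versions: List[FileVersion]) {
  def latest: FileVersion = versions.last
}

object CopyOnWriteDelete {
  /** Returns the file groups as they look after deleting `toDelete` at `commitTime`. */
  def applyDelete(groups: List[FileGroup],
                  toDelete: Set[String],
                  commitTime: String): List[FileGroup] =
    groups.map { group =>
      val current = group.latest.keys
      if ((current intersect toDelete).nonEmpty) {
        // The file is rewritten without the deleted keys. The new version may
        // be empty, but it is still written so readers stop seeing old records.
        group.copy(versions = group.versions :+ FileVersion(commitTime, current -- toDelete))
      } else {
        // No matching keys: the file is not rewritten.
        group
      }
    }
}

object CopyOnWriteDeleteDemo {
  def main(args: Array[String]): Unit = {
    val before = List(
      FileGroup("f1", List(FileVersion("001", Set("D1", "D2")))), // only D* keys
      FileGroup("f2", List(FileVersion("001", Set("A1", "D3")))), // mixed keys
      FileGroup("f3", List(FileVersion("001", Set("A2"))))        // no D* keys
    )
    val after = CopyOnWriteDelete.applyDelete(before, Set("D1", "D2", "D3"), "002")
    after.foreach { g =>
      println(s"${g.fileId}: ${g.versions.size} version(s), latest holds ${g.latest.keys.size} record(s)")
    }
  }
}
```

In this model, `f1` ends up with a second version that holds zero records, which corresponds to the tiny files seen after deleting most of a dataset; the older, larger version stays around until the cleaner removes it.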

[GitHub] [hudi] jiegzhan commented on issue #1980: [SUPPORT] Small files (423KB) generated after running delete query

Posted by GitBox <gi...@apache.org>.

jiegzhan commented on issue #1980:

URL: https://github.com/apache/hudi/issues/1980#issuecomment-680303027

Thanks for your explanation, @bvaradar. Closed this ticket.

[GitHub] [hudi] jiegzhan closed issue #1980: [SUPPORT] Small files (423KB) generated after running delete query

Posted by GitBox <gi...@apache.org>.

jiegzhan closed issue #1980:

URL: https://github.com/apache/hudi/issues/1980

[GitHub] [hudi] bvaradar commented on issue #1980: [SUPPORT] Small files (423KB) generated after running delete query

Posted by GitBox <gi...@apache.org>.

bvaradar commented on issue #1980:

URL: https://github.com/apache/hudi/issues/1980#issuecomment-678036142

@jiegzhan : This is an upcoming feature to re-cluster records in files (https://cwiki.apache.org/confluence/display/HUDI/RFC+-+19+Clustering+data+for+speed+and+query+performance). We are currently working on the 0.6.0 release (a release candidate is already out) and will be targeting this feature in the next major release.

[GitHub] [hudi] bvaradar commented on issue #1980: [SUPPORT] Small files (423KB) generated after running delete query

Posted by GitBox <gi...@apache.org>.

bvaradar commented on issue #1980:

URL: https://github.com/apache/hudi/issues/1980#issuecomment-679403428

Yes, this is expected. We retain the penultimate version of the file to prevent a running query from failing. In this case, you might see only one version of some files that did not see any deletes. That is expected.
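
One way to picture this retention rule is the small plain-Scala model below. The object `CleanerModel` and the function `retainedVersions` are hypothetical names used only for illustration, and the rule encoded here is a simplification that assumes the behaviour described in this thread: versions written at or after the earliest retained commit are kept, plus the single most recent version written before it. It is a sketch for reasoning about what remains in S3, not Hudi's cleaner code.

```scala
// Toy model of commit-based cleaning. Names are hypothetical, not Hudi APIs.
object CleanerModel {
  /**
   * @param allCommits      every commit time on the timeline, in ascending order
   * @param versionCommits  commit times at which one file got a new version, ascending
   * @param commitsRetained value given to hoodie.cleaner.commits.retained (at least 1)
   * @return commit times of the file versions that survive cleaning
   */
  def retainedVersions(allCommits: List[String],
                       versionCommits: List[String],
                       commitsRetained: Int): List[String] = {
    require(commitsRetained >= 1, "commitsRetained must be at least 1")
    require(allCommits.nonEmpty, "the timeline must contain at least one commit")
    // Commit times are assumed to be fixed-width strings, so they sort lexicographically.
    val earliestRetained = allCommits.takeRight(commitsRetained).head
    val (older, recent) = versionCommits.partition(_ < earliestRetained)
    // Keep the last version written before the earliest retained commit, so a
    // query that started before that commit can still read the file.
    older.lastOption.toList ++ recent
  }
}

object CleanerModelDemo {
  def main(args: Array[String]): Unit = {
    val timeline = List("001", "002", "003", "004")
    // A file rewritten by every delete, and one that no delete has touched since "002".
    println(CleanerModel.retainedVersions(timeline, List("001", "002", "003", "004"), 1))
    println(CleanerModel.retainedVersions(timeline, List("001", "002"), 1))
  }
}
```

With `commitsRetained = 1`, the file rewritten by the latest commit keeps two versions (`003` and `004`), while the file that the latest commit did not touch keeps only its newest version (`002`). That matches the "sometimes, not always" pattern reported above.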
