You are viewing a plain text version of this content. The canonical link for it is here.

Posted to commits@airflow.apache.org by "Oduig (via GitHub)" <gi...@apache.org> on 2023/02/09 16:41:25 UTC

[GitHub] [airflow] Oduig opened a new issue, #29442: Logging from inside on_failure_callback not propagated with Cloud Composer

Oduig opened a new issue, #29442:

URL: https://github.com/apache/airflow/issues/29442

### Apache Airflow version

Other Airflow 2 version (please specify below)

### What happened

When I run a DAG on Airflow, python log lines from the DAG are visible as expected in the Airflow logs. However, when I use logging inside an `on_failure_callback`, the logs are not visible anywhere. We are trying to use this logging to diagnose issues with our callbacks, and not having them makes this more difficult.

### What you think should happen instead

I think the log output from `logging.info` should end up somewhere in a log system or file.

### How to reproduce

The following DAG produces only 1 line of log output.

```

import pendulum

from datetime import timedelta

from airflow import DAG

from airflow.operators.bash import BashOperator

import logging

import requests

dag_settings = {

"dag_id": f"INT_tableau_others_recommendation_classifications",

"max_active_runs": 1,

"dagrun_timeout": timedelta(minutes=1),

"start_date": pendulum.today("UTC"),

"default_args": {

"owner": "airflow",

"catchup": False

},

"tags": ["env:johndoe"],

"on_failure_callback": (

lambda context: [

logging.info(f"Minimal example - failure callback with context: {context}"),

requests.post(

"https://<replace_this_with_your_requestbin_id>.m.pipedream.net",

json={"payload": f"Minimal example - failure callback with context: {context}"}

)

]

)

}

dag = DAG(**dag_settings)

logging.info(f"Minimal example - Created DAG {dag.dag_id}.")

BashOperator(task_id="sleep_a_while", bash_command="sleep 300", dag=dag)

```

To find the logs, I checked any and all log output produced by the Airflow scheduler, workers, DAG processor, and other services. Here is a screenshot of what this looks like, based on the above DAG.

### Operating System

composer-2.0.32

### Versions of Apache Airflow Providers

Here is the full `requirements.txt`

```

apache-airflow-providers-databricks==4.0.0

databricks-sql-connector==2.1.0

apache-beam~=2.43.0

sqlalchemy-bigquery==1.5.0

requests~=2.28.1

apache-airflow-providers-tableau==4.0.0

apache-airflow-providers-sendgrid==3.1.0

python-dotenv==0.21.0

urllib3~=1.26.8

tableauserverclient==0.23

apache-airflow-providers-http==4.1.0

# time library in airflow

pendulum==2.1.2

```

### Deployment

Composer

### Deployment details

We are running a Cloud Compose environment with image `composer-2.0.32-airflow-2.3.4`

### Anything else

In addition to checking the Airflow logs, I also used a remote shell to inspect individual `Airflow` containers to find log files on disk, but it seems they are all passed to Composer.

Logs produced by individual tasks (e.g. the output of the bash command above) end up in a bucket together with the DAG files - this bucket has a `/logs` directory, but it only contains task logs. The task logs for the `BashOperator` are empty because bash itself does not produce output, this makes sense to me.

Finally, I checked for a `scheduler` folder in the logs directory and cannot find any folders or files related to DAG-level callbacks or the scheduler.

### Are you willing to submit PR?

- [ ] Yes I am willing to submit a PR!

### Code of Conduct

- [X] I agree to follow this project's [Code of Conduct](https://github.com/apache/airflow/blob/main/CODE_OF_CONDUCT.md)

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@airflow.apache.org.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] Oduig commented on issue #29442: Logging from inside on_failure_callback not propagated with Cloud Composer

Posted by "Oduig (via GitHub)" <gi...@apache.org>.

Oduig commented on issue #29442:

URL: https://github.com/apache/airflow/issues/29442#issuecomment-1425430654

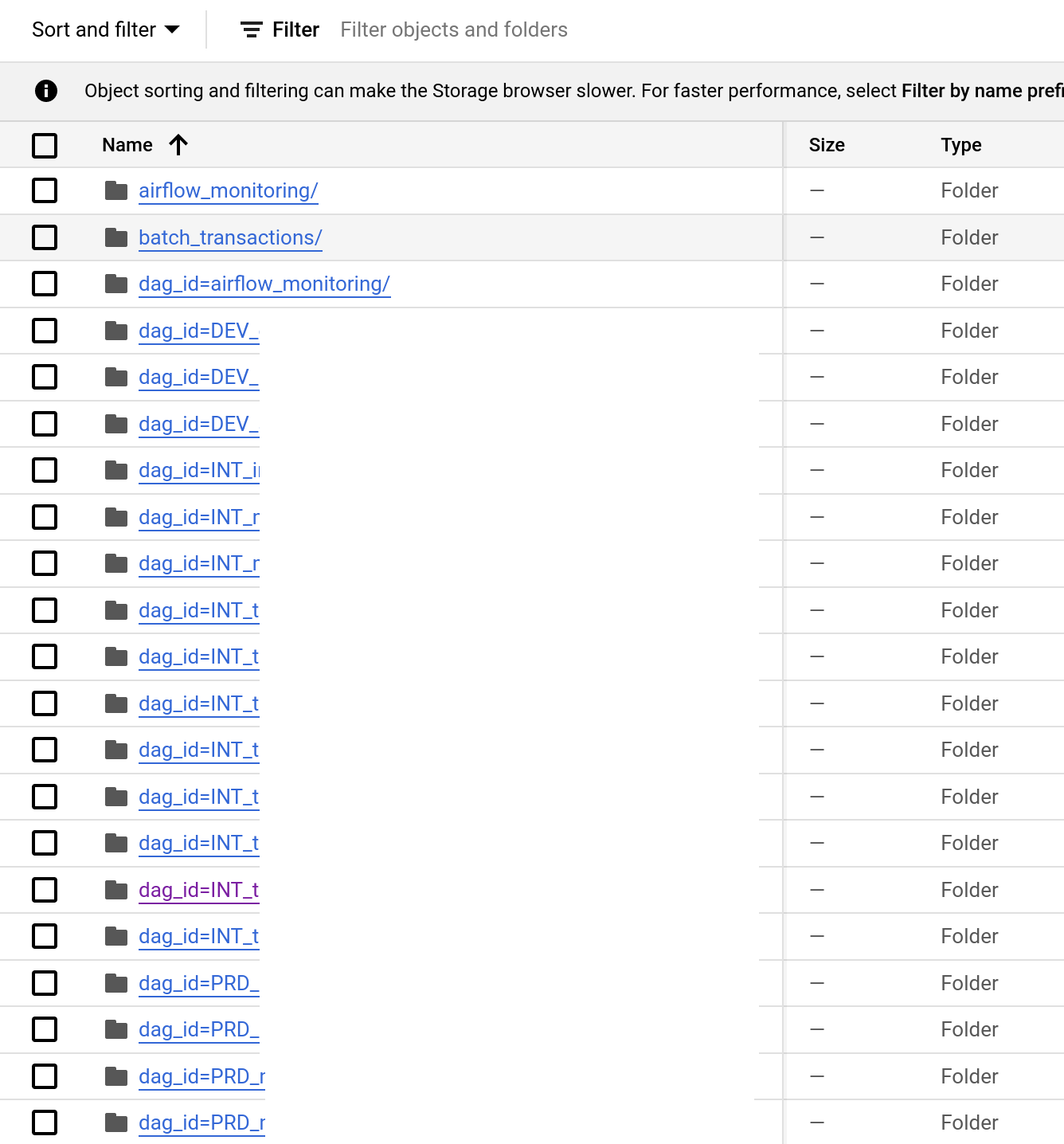

Here are the contents of our Google Storage bucket:

The logs for the individual DAGs are there though:

When I `kubectl exec` into the `airflow-scheduler` deployment, I see that the logs folder is mounted with GCS fuse as expected:

In the $AIRFLOW_HOME directory, I see a `logs/scheduler` folder with recent files in it, but all the files are empty:

Let me know if there is anything else I can check

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@airflow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] potiuk commented on issue #29442: Logging from inside on_failure_callback not propagated with Cloud Composer

Posted by "potiuk (via GitHub)" <gi...@apache.org>.

potiuk commented on issue #29442:

URL: https://github.com/apache/airflow/issues/29442#issuecomment-1426388643

> Please let me know if there is anything else I can check, happy to help diagnose

Why logs files are empty there is a question to Composer team, it's not an Airflow issue. We have no idea here what is the deployment. You can discuss it with the support team there.

Closing this one in the community.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@airflow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] boring-cyborg[bot] commented on issue #29442: Logging from inside on_failure_callback not propagated with Cloud Composer

Posted by "boring-cyborg[bot] (via GitHub)" <gi...@apache.org>.

boring-cyborg[bot] commented on issue #29442:

URL: https://github.com/apache/airflow/issues/29442#issuecomment-1424488855

Thanks for opening your first issue here! Be sure to follow the issue template!

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@airflow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] hussein-awala commented on issue #29442: Logging from inside on_failure_callback not propagated with Cloud Composer

Posted by "hussein-awala (via GitHub)" <gi...@apache.org>.

hussein-awala commented on issue #29442:

URL: https://github.com/apache/airflow/issues/29442#issuecomment-1425031828

The callback is executed when the task/run finishes, and it is executed by the scheduler, you will find this log in the scheduler log files:

```

[2023-02-10T00:01:08.696+0000] {logging_mixin.py:151} INFO - [2023-02-10T00:01:08.696+0000] {test_dag.py:19} INFO - Minimal example - failure callback with context: {'conf': <airflow.configuration.AirflowConfigParser object at 0x7f2c1c98edd0>, 'dag': <DAG: INT_tableau_others_recommendation_classifications>, 'dag_run': <DagRun INT_tableau_others_recommendation_classifications @ 2023-02-09 00:00:00+00:00: scheduled__2023-02-09T00:00:00+00:00, state:failed, queued_at: 2023-02-10 00:00:00.600499+00:00. externally triggered: False>, 'data_interval_end': DateTime(2023, 2, 10, 0, 0, 0, tzinfo=Timezone('UTC')), 'data_interval_start': DateTime(2023, 2, 9, 0, 0, 0, tzinfo=Timezone('UTC')), 'ds': '2023-02-09', 'ds_nodash': '20230209', 'execution_date': DateTime(2023, 2, 9, 0, 0, 0, tzinfo=Timezone('UTC')), 'expanded_ti_count': None, 'inlets': [], 'logical_date': DateTime(2023, 2, 9, 0, 0, 0, tzinfo=Timezone('UTC')), 'macros': <module 'airflow.macros' from '/opt/airflow/airflow/macros/__init_

_.py'>, 'next_ds': '2023-02-10', 'next_ds_nodash': '20230210', 'next_execution_date': DateTime(2023, 2, 10, 0, 0, 0, tzinfo=Timezone('UTC')), 'outlets': [], 'params': {}, 'prev_data_interval_start_success': None, 'prev_data_interval_end_success': None, 'prev_ds': '2023-02-08', 'prev_ds_nodash': '20230208', 'prev_execution_date': DateTime(2023, 2, 8, 0, 0, 0, tzinfo=Timezone('UTC')), 'prev_execution_date_success': None, 'prev_start_date_success': None, 'run_id': 'scheduled__2023-02-09T00:00:00+00:00', 'task': <Task(BashOperator): sleep_a_while>, 'task_instance': <TaskInstance: INT_tableau_others_recommendation_classifications.sleep_a_while scheduled__2023-02-09T00:00:00+00:00 [skipped]>, 'task_instance_key_str': 'INT_tableau_others_recommendation_classifications__sleep_a_while__20230209', 'test_mode': False, 'ti': <TaskInstance: INT_tableau_others_recommendation_classifications.sleep_a_while scheduled__2023-02-09T00:00:00+00:00 [skipped]>, 'tomorrow_ds': '2023-02-10', 'tomorrow_ds_no

dash': '20230210', 'triggering_dataset_events': <Proxy at 0x7f2bf9eea2d0 with factory <function TaskInstance.get_template_context.<locals>.get_triggering_events at 0x7f2c14fa0290>>, 'ts': '2023-02-09T00:00:00+00:00', 'ts_nodash': '20230209T000000', 'ts_nodash_with_tz': '20230209T000000+0000', 'var': {'json': None, 'value': None}, 'conn': None, 'yesterday_ds': '2023-02-08', 'yesterday_ds_nodash': '20230208', 'reason': 'timed_out'}

```

You can find this log in the path `$AIRFLOW_HOME/logs/scheduler`, and for cloud composer you will find it on gcs `gs://<BUCKET>/logs/scheduler`

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@airflow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] potiuk closed issue #29442: Logging from inside on_failure_callback not propagated with Cloud Composer

Posted by "potiuk (via GitHub)" <gi...@apache.org>.

potiuk closed issue #29442: Logging from inside on_failure_callback not propagated with Cloud Composer

URL: https://github.com/apache/airflow/issues/29442

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@airflow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org