Posted to commits@dolphinscheduler.apache.org by GitBox <gi...@apache.org> on 2021/01/21 07:08:40 UTC

[GitHub] [incubator-dolphinscheduler] zosimer opened a new issue #4517: Dolphinscheduler 1.3.4 uses shell script to execute waterdrop 1.5.1. The task is executed successfully, but the node shows that the execution failed

zosimer opened a new issue #4517:

URL: https://github.com/apache/incubator-dolphinscheduler/issues/4517

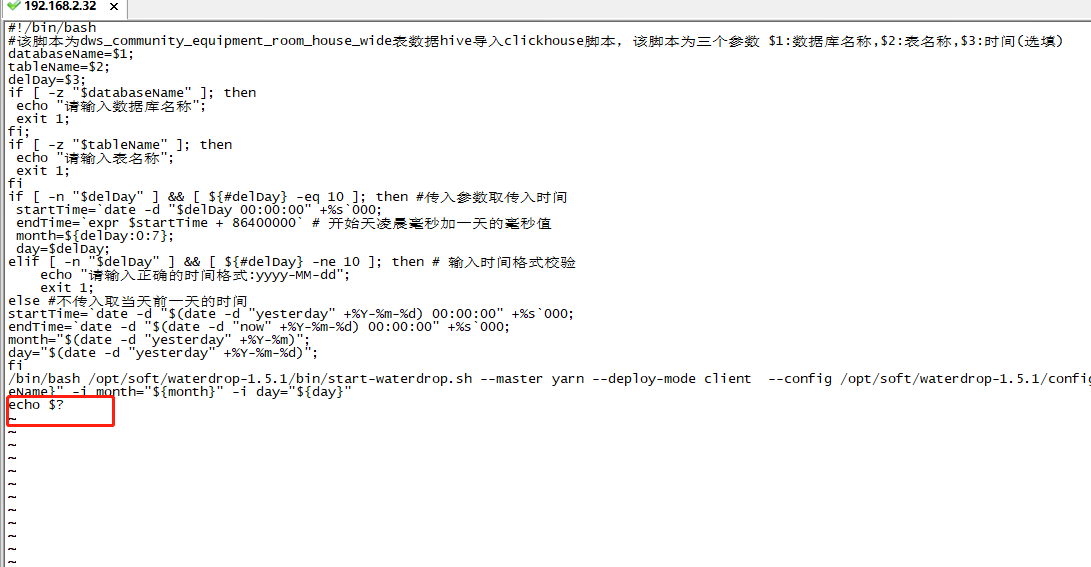

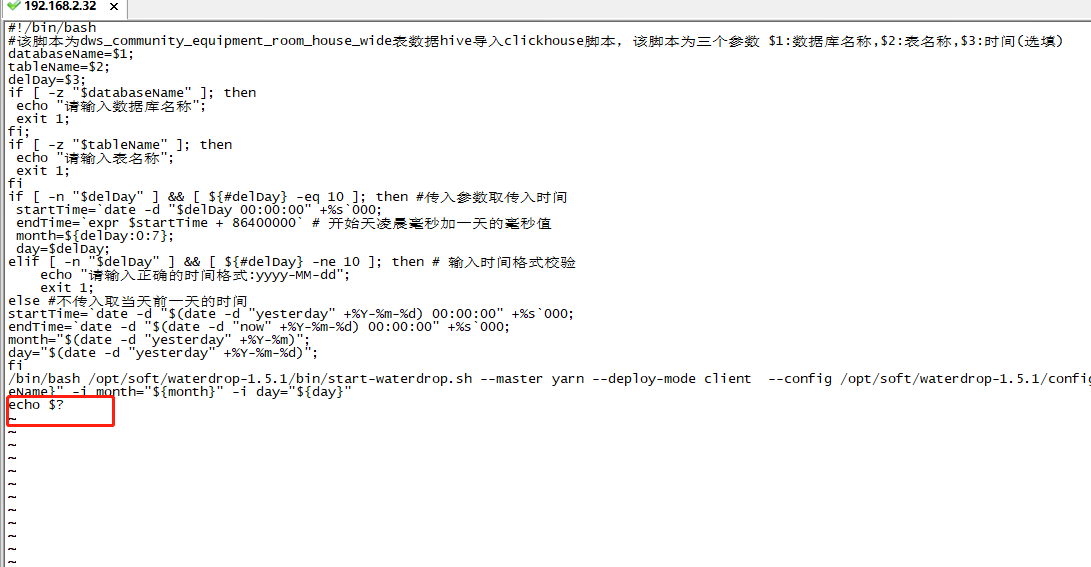

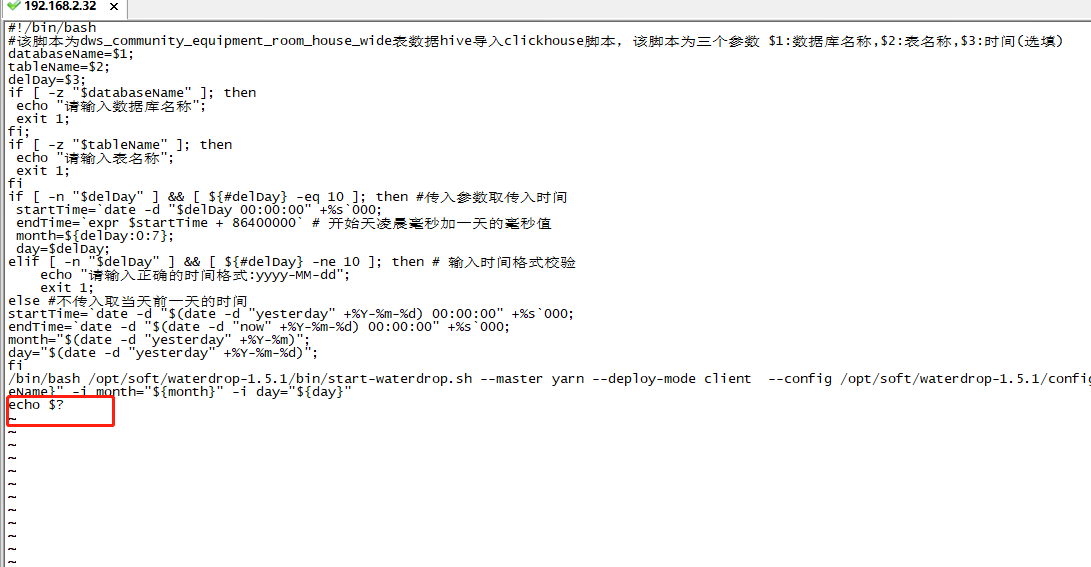

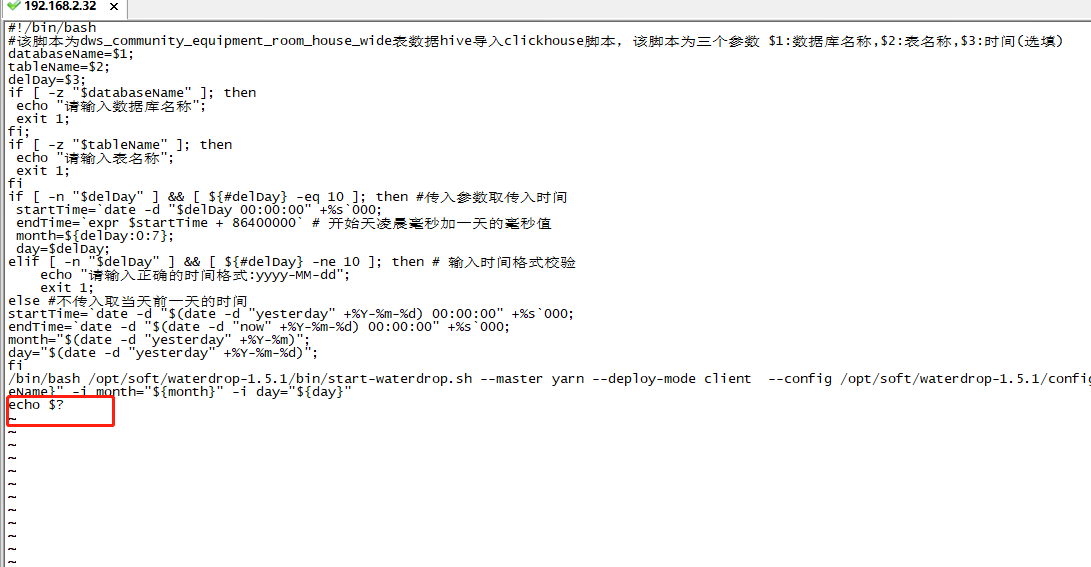

Here is the complete log of the task run:

[INFO] 2021-01-21 14:47:48.083 - [taskAppId=TASK-13-198-935]:[127] - ->

[INFO] spark conf: --conf "spark.sql.catalogImplementation=hive" --conf "spark.executor.memory=2g" --conf "spark.app.name=Waterdrop" --conf "spark.executor.cores=4" --conf "spark.executor.instances=5"

Warning: Ignoring non-spark config property: "spark.executor.memory=2g"

[INFO] 2021-01-21 14:47:49.659 - [taskAppId=TASK-13-198-935]:[127] - -> Warning: Ignoring non-spark config property: "spark.app.name=Waterdrop"

Warning: Ignoring non-spark config property: "spark.executor.instances=5"

Warning: Ignoring non-spark config property: "spark.executor.cores=4"

Warning: Ignoring non-spark config property: "spark.sql.catalogImplementation=hive"

[INFO] Loading config file: /opt/soft/waterdrop-1.5.1/config/batch.conf

[INFO] 2021-01-21 14:47:49.800 - [taskAppId=TASK-13-198-935]:[127] - -> [INFO] parsed config file: {

"spark" : {

"spark.sql.catalogImplementation" : "hive",

"spark.app.name" : "Waterdrop",

"spark.executor.instances" : 5,

"spark.executor.cores" : 4,

"spark.executor.memory" : "2g"

},

"input" : [

{

"result_table_name" : "dws_community_equipment_room_house_report_vo_wide_temp",

"pre_sql" : "select * from dws.dws_community_equipment_room_house_report_vo_wide where `month`='2021-01' and `day`='2021-01-20'",

"plugin_name" : "hive"

}

],

"filter" : [

{

"new_type" : "string",

"source_field" : "reporttimestamp",

"plugin_name" : "convert"

}

],

"output" : [

{

"retry_codes" : [

209,

210

],

"database" : "un_dws",

"password" : "MIywZnnr",

"host" : "clickhouse001:8123,clickhouse002:8123,clickhouse003:8123",

"bulk_size" : 100000,

"fields" : [

"h_id",

"h_name",

"h_mobile",

"h_account",

"h_password",

"h_address",

"h_regmethod",

"h_gender",

"h_mail",

"h_qq",

"h_headimage",

"h_identityno",

"h_birthday",

"h_nickname",

"h_creator",

"h_talkaccount",

"h_talkpassword",

"h_uhomepassword",

"h_identitytype",

"h_isaudited",

"h_rke_ischild",

"h_rke_fingerprint1",

"h_rke_callphone",

"h_rke_maxcardcount",

"h_accountsid",

"h_defaultroomid",

"h_rke_faceimg",

"h_logintype",

"h_create_time",

"h_update_time",

"r_id",

[INFO] 2021-01-21 14:47:49.801 - [taskAppId=TASK-13-198-935]:[127] - -> "r_co_id",

"r_cs_id",

"r_name",

"r_creator",

"r_accountsid",

"r_del_flag",

"r_type",

"r_create_time",

"r_update_time",

"rh_del_flag",

"co_id",

"co_name",

"co_address",

"co_stdaddress",

"co_tel",

"areaid",

"co_lat",

"co_lng",

"co_enable",

"co_logo",

"co_summary",

"co_remark",

"is_test",

"co_creator",

"co_accountsid",

"co_eqserial",

"co_latlng",

"deptid",

"co_funcontrol",

"co_create_time",

"co_update_time",

"cs_id",

"cs_co_id",

"cs_parentid",

"cs_name",

"cs_fullname",

"cs_lat",

"cs_lng",

"cs_islast",

"cs_orderindex",

"cs_creator",

"cs_accountsid",

"cs_del_flag",

"cs_create_time",

"cs_update_time",

"eq_autoid",

"eq_name",

"eq_communityid",

"eq_num",

"eq_username",

"eq_userpwd",

"eq_type",

"eq_ip",

"eq_cs_id",

"eq_addtime",

"eq_talkname",

"eq_talkpwd",

"eq_bell",

"eq_mediaday",

"eq_medianight",

"eq_nightstarthour",

"eq_nightstartminute",

"eq_nightendhour",

"eq_nightendminute",

[INFO] 2021-01-21 14:47:49.801 - [taskAppId=TASK-13-198-935]:[127] - -> "eq_isplayimage",

"eq_isplayvideo",

"eq_imageinterval",

"eq_callpriority",

"eq_starttime",

"eq_alarmtimedelay",

"eq_systempwd",

"eq_sharepwd",

"eq_volume",

"eq_modelnumber",

"eq_kernelversion",

"eq_softwareversion",

"eq_isclosed",

"eq_isonline",

"eq_isenable",

"eq_isneedpwd",

"eq_calibratetime",

"eq_policerecordid",

"eq_lastopentime",

"eq_openbyidcard",

"eq_openbybluetooth",

"eq_isdoorstatecheck",

"eq_mac",

"creater",

"eq_accountsid",

"eq_faceip",

"eq_facepwd",

"eq_hardcode",

"eq_localipvalue",

"eq_localip",

"eq_subnetmask",

"eq_gateway",

"eq_dns",

"eq_istomobile",

"eq_etype",

"eq_enum",

"eq_r_id",

"isnightdormant",

"eq_isbioassay",

"eq_facemode",

"eq_threshold",

"isthirdad",

"eq_brightness",

"is_new_video",

"eq_create_time",

"eq_update_time",

"proince_id",

"proincename",

"city_id",

"city_name",

"county_id",

"county_name",

"reportid",

"reporttype",

"reporttimestamp",

"reportdate",

"reportstatus",

"reporterrmsg",

"month",

"day"

],

"plugin_name" : "clickhouse",

"table" : "dws_community_equipment_room_house_report_vo_wide_ck_all",

"retry" : 3,

[INFO] 2021-01-21 14:47:50.401 - [taskAppId=TASK-13-198-935]:[127] - -> "username" : "default"

}

]

}

[INFO] loading SparkConf:

spark.history.kerberos.keytab => none

spark.eventLog.enabled => true

spark.jars => file:/opt/soft/waterdrop-1.5.1/lib/Waterdrop-1.5.1-2.11.8.jar

spark.history.ui.port => 18081

spark.driver.extraLibraryPath => /usr/hdp/current/hadoop-client/lib/native:/usr/hdp/current/hadoop-client/lib/native/Linux-amd64-64

spark.history.fs.cleaner.interval => 7d

spark.shuffle.io.serverThreads => 128

spark.executor.cores => 4

spark.sql.streaming.streamingQueryListeners =>

spark.executor.extraJavaOptions => -Dday=2021-01-20 -Dmonth=2021-01 -DtableName=dws_community_equipment_room_house_report_vo_wide -DdatabaseName=dws

spark.executor.extraLibraryPath => /usr/hdp/current/hadoop-client/lib/native:/usr/hdp/current/hadoop-client/lib/native/Linux-amd64-64

spark.sql.statistics.fallBackToHdfs => true

spark.shuffle.file.buffer => 1m

spark.history.provider => org.apache.spark.deploy.history.FsHistoryProvider

spark.driver.extraJavaOptions => -Dday=2021-01-20 -Dmonth=2021-01 -DtableName=dws_community_equipment_room_house_report_vo_wide -DdatabaseName=dws

spark.sql.hive.convertMetastoreOrc => true

spark.yarn.dist.files =>

spark.submit.deployMode => client

spark.sql.autoBroadcastJoinThreshold => 26214400

spark.driver.extraClassPath =>

spark.eventLog.dir => hdfs:///spark2-history/

spark.executor.memory => 2g

spark.app.name => Waterdrop

spark.sql.hive.metastore.jars => /usr/hdp/current/spark2-client/standalone-metastore/*

spark.yarn.historyServer.address => datanode005:18081

spark.yarn.queue => default

spark.sql.orc.impl => native

spark.history.fs.cleaner.enabled => true

spark.sql.queryExecutionListeners =>

spark.history.fs.logDirectory => hdfs:///spark2-history/

spark.master => yarn

spark.io.compression.lz4.blockSize => 128kb

spark.executor.instances => 5

spark.sql.catalogImplementation => hive

spark.history.kerberos.principal => none

spark.history.fs.cleaner.maxAge => 90d

spark.sql.orc.filterPushdown => true

spark.shuffle.io.backLog => 8192

spark.extraListeners =>

spark.unsafe.sorter.spill.reader.buffer.size => 1m

spark.shuffle.unsafe.file.output.buffer => 5m

spark.sql.warehouse.dir => /apps/spark/warehouse

spark.history.store.path => /var/lib/spark2/shs_db

spark.sql.hive.metastore.version => 3.0

21/01/21 14:47:49 INFO SparkContext: Running Spark version 2.3.2.3.1.4.0-315

21/01/21 14:47:49 INFO SparkContext: Submitted application: Waterdrop

21/01/21 14:47:49 INFO SecurityManager: Changing view acls to: hadoop

21/01/21 14:47:49 INFO SecurityManager: Changing modify acls to: hadoop

21/01/21 14:47:49 INFO SecurityManager: Changing view acls groups to:

21/01/21 14:47:49 INFO SecurityManager: Changing modify acls groups to:

21/01/21 14:47:49 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set()

21/01/21 14:47:50 INFO Utils: Successfully started service 'sparkDriver' on port 41209.

21/01/21 14:47:50 INFO SparkEnv: Registering MapOutputTracker

21/01/21 14:47:50 INFO SparkEnv: Registering BlockManagerMaster

21/01/21 14:47:50 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

21/01/21 14:47:50 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

21/01/21 14:47:50 INFO DiskBlockManager: Created local directory at /tmp/blockmgr-71de7789-dece-42fc-bc93-3d69ef1b02fc

21/01/21 14:47:50 INFO MemoryStore: MemoryStore started with capacity 366.3 MB

[INFO] 2021-01-21 14:47:51.696 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:47:50 INFO SparkEnv: Registering OutputCommitCoordinator

21/01/21 14:47:50 INFO log: Logging initialized @3824ms

21/01/21 14:47:50 INFO Server: jetty-9.3.z-SNAPSHOT, build timestamp: 2018-06-06T01:11:56+08:00, git hash: 84205aa28f11a4f31f2a3b86d1bba2cc8ab69827

21/01/21 14:47:50 INFO Server: Started @3935ms

21/01/21 14:47:50 INFO AbstractConnector: Started ServerConnector@4a67318f{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

21/01/21 14:47:50 INFO Utils: Successfully started service 'SparkUI' on port 4040.

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@26f3d90c{/jobs,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@232024b9{/jobs/json,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@55a8dc49{/jobs/job,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@71ea1fda{/jobs/job/json,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@62b3df3a{/stages,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@420745d7{/stages/json,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@7e11ab3d{/stages/stage,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@5b43e173{/stages/stage/json,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@28f8e165{/stages/pool,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@545f80bf{/stages/pool/json,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@66f66866{/storage,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@22fa55b2{/storage/json,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@4d666b41{/storage/rdd,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@6594402a{/storage/rdd/json,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@30f4b1a6{/environment,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@405325cf{/environment/json,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@3e1162e7{/executors,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@79c3f01f{/executors/json,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@6c2f1700{/executors/threadDump,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@350b3a17{/executors/threadDump/json,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@38600b{/static,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@17f460bb{/,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@64a1923a{/api,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@44d70181{/jobs/job/kill,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@6aa648b9{/stages/stage/kill,null,AVAILABLE,@Spark}

21/01/21 14:47:50 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://scheduler:4040

21/01/21 14:47:50 INFO SparkContext: Added JAR file:/opt/soft/waterdrop-1.5.1/lib/Waterdrop-1.5.1-2.11.8.jar at spark://scheduler:41209/jars/Waterdrop-1.5.1-2.11.8.jar with timestamp 1611211670807

21/01/21 14:47:51 INFO EsServiceCredentialProvider: Loaded EsServiceCredentialProvider

[INFO] 2021-01-21 14:47:53.599 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:47:51 INFO ConfiguredRMFailoverProxyProvider: Failing over to rm2

21/01/21 14:47:51 INFO Client: Requesting a new application from cluster with 5 NodeManagers

21/01/21 14:47:52 INFO Configuration: found resource resource-types.xml at file:/etc/hadoop/3.1.4.0-315/0/resource-types.xml

21/01/21 14:47:52 INFO Client: Verifying our application has not requested more than the maximum memory capability of the cluster (46080 MB per container)

21/01/21 14:47:52 INFO Client: Will allocate AM container, with 896 MB memory including 384 MB overhead

21/01/21 14:47:52 INFO Client: Setting up container launch context for our AM

21/01/21 14:47:52 INFO Client: Setting up the launch environment for our AM container

21/01/21 14:47:52 INFO Client: Preparing resources for our AM container

21/01/21 14:47:53 INFO EsServiceCredentialProvider: Hadoop Security Enabled = [false]

[INFO] 2021-01-21 14:47:54.771 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:47:53 INFO EsServiceCredentialProvider: ES Auth Method = [SIMPLE]

21/01/21 14:47:53 INFO EsServiceCredentialProvider: Are creds required = [false]

21/01/21 14:47:53 INFO Client: Use hdfs cache file as spark.yarn.archive for HDP, hdfsCacheFile:hdfs://ns1/hdp/apps/3.1.4.0-315/spark2/spark2-hdp-yarn-archive.tar.gz

21/01/21 14:47:53 INFO Client: Source and destination file systems are the same. Not copying hdfs://ns1/hdp/apps/3.1.4.0-315/spark2/spark2-hdp-yarn-archive.tar.gz

21/01/21 14:47:53 INFO Client: Distribute hdfs cache file as spark.sql.hive.metastore.jars for HDP, hdfsCacheFile:hdfs://ns1/hdp/apps/3.1.4.0-315/spark2/spark2-hdp-hive-archive.tar.gz

21/01/21 14:47:53 INFO Client: Source and destination file systems are the same. Not copying hdfs://ns1/hdp/apps/3.1.4.0-315/spark2/spark2-hdp-hive-archive.tar.gz

21/01/21 14:47:54 INFO Client: Uploading resource file:/tmp/spark-de10c9d5-7632-49cf-a0b4-431af9db9ad2/__spark_conf__7359149213266667811.zip -> hdfs://ns1/user/hadoop/.sparkStaging/application_1610616162512_0320/__spark_conf__.zip

21/01/21 14:47:54 INFO SecurityManager: Changing view acls to: hadoop

21/01/21 14:47:54 INFO SecurityManager: Changing modify acls to: hadoop

21/01/21 14:47:54 INFO SecurityManager: Changing view acls groups to:

21/01/21 14:47:54 INFO SecurityManager: Changing modify acls groups to:

21/01/21 14:47:54 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set()

21/01/21 14:47:54 INFO Client: Submitting application application_1610616162512_0320 to ResourceManager

21/01/21 14:47:54 INFO YarnClientImpl: Submitted application application_1610616162512_0320

[INFO] 2021-01-21 14:47:55.791 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:47:54 INFO SchedulerExtensionServices: Starting Yarn extension services with app application_1610616162512_0320 and attemptId None

21/01/21 14:47:55 INFO Client: Application report for application_1610616162512_0320 (state: ACCEPTED)

[INFO] 2021-01-21 14:47:56.804 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:47:55 INFO Client:

client token: N/A

diagnostics: AM container is launched, waiting for AM container to Register with RM

ApplicationMaster host: N/A

ApplicationMaster RPC port: -1

queue: default

start time: 1611211674542

final status: UNDEFINED

tracking URL: http://namenode002:8088/proxy/application_1610616162512_0320/

user: hadoop

21/01/21 14:47:56 INFO Client: Application report for application_1610616162512_0320 (state: ACCEPTED)

[INFO] 2021-01-21 14:47:57.810 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:47:57 INFO Client: Application report for application_1610616162512_0320 (state: ACCEPTED)

[INFO] 2021-01-21 14:47:58.814 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:47:58 INFO Client: Application report for application_1610616162512_0320 (state: ACCEPTED)

[INFO] 2021-01-21 14:47:59.818 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:47:59 INFO Client: Application report for application_1610616162512_0320 (state: ACCEPTED)

[INFO] 2021-01-21 14:48:00.822 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:47:59 INFO YarnClientSchedulerBackend: Add WebUI Filter. org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter, Map(PROXY_HOSTS -> namenode001,namenode002, PROXY_URI_BASES -> http://namenode001:8088/proxy/application_1610616162512_0320,http://namenode002:8088/proxy/application_1610616162512_0320, RM_HA_URLS -> namenode001:8088,namenode002:8088), /proxy/application_1610616162512_0320

21/01/21 14:47:59 INFO JettyUtils: Adding filter org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter to /jobs, /jobs/json, /jobs/job, /jobs/job/json, /stages, /stages/json, /stages/stage, /stages/stage/json, /stages/pool, /stages/pool/json, /storage, /storage/json, /storage/rdd, /storage/rdd/json, /environment, /environment/json, /executors, /executors/json, /executors/threadDump, /executors/threadDump/json, /static, /, /api, /jobs/job/kill, /stages/stage/kill.

21/01/21 14:48:00 INFO YarnSchedulerBackend$YarnSchedulerEndpoint: ApplicationMaster registered as NettyRpcEndpointRef(spark-client://YarnAM)

21/01/21 14:48:00 INFO Client: Application report for application_1610616162512_0320 (state: RUNNING)

[INFO] 2021-01-21 14:48:01.993 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:00 INFO Client:

client token: N/A

diagnostics: N/A

ApplicationMaster host: 192.168.2.24

ApplicationMaster RPC port: 0

queue: default

start time: 1611211674542

final status: UNDEFINED

tracking URL: http://namenode002:8088/proxy/application_1610616162512_0320/

user: hadoop

21/01/21 14:48:00 INFO YarnClientSchedulerBackend: Application application_1610616162512_0320 has started running.

21/01/21 14:48:00 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 38571.

21/01/21 14:48:00 INFO NettyBlockTransferService: Server created on scheduler:38571

21/01/21 14:48:00 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

21/01/21 14:48:00 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, scheduler, 38571, None)

21/01/21 14:48:00 INFO BlockManagerMasterEndpoint: Registering block manager scheduler:38571 with 366.3 MB RAM, BlockManagerId(driver, scheduler, 38571, None)

21/01/21 14:48:00 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, scheduler, 38571, None)

21/01/21 14:48:00 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, scheduler, 38571, None)

21/01/21 14:48:01 INFO JettyUtils: Adding filter org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter to /metrics/json.

21/01/21 14:48:01 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@34009349{/metrics/json,null,AVAILABLE,@Spark}

21/01/21 14:48:01 INFO EventLoggingListener: Logging events to hdfs:/spark2-history/application_1610616162512_0320

[INFO] 2021-01-21 14:48:06.205 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:06 INFO YarnSchedulerBackend$YarnDriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (192.168.2.23:53790) with ID 2

[INFO] 2021-01-21 14:48:07.441 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:06 INFO YarnSchedulerBackend$YarnDriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (192.168.2.27:41120) with ID 3

21/01/21 14:48:06 INFO YarnSchedulerBackend$YarnDriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (192.168.2.25:32962) with ID 1

21/01/21 14:48:06 INFO BlockManagerMasterEndpoint: Registering block manager datanode001:38988 with 912.3 MB RAM, BlockManagerId(2, datanode001, 38988, None)

21/01/21 14:48:06 INFO BlockManagerMasterEndpoint: Registering block manager datanode005:43427 with 912.3 MB RAM, BlockManagerId(3, datanode005, 43427, None)

21/01/21 14:48:06 INFO YarnSchedulerBackend$YarnDriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (192.168.2.24:46922) with ID 5

21/01/21 14:48:06 INFO YarnSchedulerBackend$YarnDriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (192.168.2.26:33798) with ID 4

21/01/21 14:48:06 INFO YarnClientSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.8

21/01/21 14:48:06 INFO BlockManagerMasterEndpoint: Registering block manager datanode003:34874 with 912.3 MB RAM, BlockManagerId(1, datanode003, 34874, None)

find and register UDFs & UDAFs

21/01/21 14:48:06 INFO BlockManagerMasterEndpoint: Registering block manager datanode002:36415 with 912.3 MB RAM, BlockManagerId(5, datanode002, 36415, None)

21/01/21 14:48:06 INFO BlockManagerMasterEndpoint: Registering block manager datanode004:34751 with 912.3 MB RAM, BlockManagerId(4, datanode004, 34751, None)

21/01/21 14:48:07 INFO SharedState: loading hive config file: file:/etc/spark2/3.1.4.0-315/0/hive-site.xml

[INFO] 2021-01-21 14:48:08.458 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:07 INFO SharedState: Setting hive.metastore.warehouse.dir ('null') to the value of spark.sql.warehouse.dir ('/apps/spark/warehouse').

21/01/21 14:48:07 INFO SharedState: Warehouse path is '/apps/spark/warehouse'.

21/01/21 14:48:07 INFO JettyUtils: Adding filter org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter to /SQL.

21/01/21 14:48:07 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@467af68c{/SQL,null,AVAILABLE,@Spark}

21/01/21 14:48:07 INFO JettyUtils: Adding filter org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter to /SQL/json.

21/01/21 14:48:07 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@1d6f77d7{/SQL/json,null,AVAILABLE,@Spark}

21/01/21 14:48:07 INFO JettyUtils: Adding filter org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter to /SQL/execution.

21/01/21 14:48:07 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@46702c26{/SQL/execution,null,AVAILABLE,@Spark}

21/01/21 14:48:07 INFO JettyUtils: Adding filter org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter to /SQL/execution/json.

21/01/21 14:48:07 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@e3ed455{/SQL/execution/json,null,AVAILABLE,@Spark}

21/01/21 14:48:07 INFO JettyUtils: Adding filter org.apache.hadoop.yarn.server.webproxy.amfilter.AmIpFilter to /static/sql.

21/01/21 14:48:07 INFO ContextHandler: Started o.s.j.s.ServletContextHandler@21b744ec{/static/sql,null,AVAILABLE,@Spark}

21/01/21 14:48:08 INFO StateStoreCoordinatorRef: Registered StateStoreCoordinator endpoint

found and registered UDFs count[2], UDAFs count[0]

21/01/21 14:48:08 INFO ClickHouseDriver: Driver registered

[INFO] 2021-01-21 14:48:09.602 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:09 INFO HiveUtils: Initializing HiveMetastoreConnection version 3.0 using file:/usr/hdp/current/spark2-client/standalone-metastore/standalone-metastore-1.21.2.3.1.4.0-315-hive3.jar:file:/usr/hdp/current/spark2-client/standalone-metastore/standalone-metastore-1.21.2.3.1.4.0-315-hive3.jar

[INFO] 2021-01-21 14:48:10.982 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:09 INFO HiveConf: Found configuration file file:/usr/hdp/3.1.4.0-315/hive/conf/hive-site.xml

21/01/21 14:48:09 WARN HiveConf: HiveConf of name hive.heapsize does not exist

21/01/21 14:48:09 WARN HiveConf: HiveConf of name hive.stats.fetch.partition.stats does not exist

Hive Session ID = 2fc8b3fd-5cf7-4220-8012-acb64a89c974

21/01/21 14:48:09 INFO SessionState: Hive Session ID = 2fc8b3fd-5cf7-4220-8012-acb64a89c974

21/01/21 14:48:09 INFO SessionState: Created HDFS directory: /tmp/spark/hadoop/2fc8b3fd-5cf7-4220-8012-acb64a89c974

21/01/21 14:48:09 INFO SessionState: Created local directory: /tmp/hadoop/2fc8b3fd-5cf7-4220-8012-acb64a89c974

21/01/21 14:48:10 INFO SessionState: Created HDFS directory: /tmp/spark/hadoop/2fc8b3fd-5cf7-4220-8012-acb64a89c974/_tmp_space.db

21/01/21 14:48:10 INFO HiveClientImpl: Warehouse location for Hive client (version 3.0.0) is /apps/spark/warehouse

21/01/21 14:48:10 INFO HiveMetaStoreClient: Trying to connect to metastore with URI thrift://namenode002:9083

[INFO] 2021-01-21 14:48:12.969 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:11 INFO HiveMetaStoreClient: Opened a connection to metastore, current connections: 1

21/01/21 14:48:11 INFO HiveMetaStoreClient: Connected to metastore.

21/01/21 14:48:11 INFO RetryingMetaStoreClient: RetryingMetaStoreClient proxy=class org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient ugi=hadoop (auth:SIMPLE) retries=24 delay=5 lifetime=0

21/01/21 14:48:12 WARN Utils: Truncated the string representation of a plan since it was too large. This behavior can be adjusted by setting 'spark.debug.maxToStringFields' in SparkEnv.conf.

[INFO] 2021-01-21 14:48:14.485 - [taskAppId=TASK-13-198-935]:[127] - -> *#* *##* *#* ##

*#* *##* *#* ## ##

*#* ****#* *#* ## ##

*#* *#**#* *#* ## ##

*#* *#**#* *#* ## ##

*#* *#**#* *#* ******** ######## ******** ## ***** ******* ## ## ***** ******** ## *******

*#* ******** *#* ########* ######## **#######* ##*####* **######*## ##*####* **######** ##*######**

*#* *#* *#* *#* **** ***#* ## **#*** ***** ###*** **#******### ###*** **#******#** ###******#**

*#* *#* *#* *#* *#* ## *#* *#* ##* *#** **## ##* *#** **#* ##** **#*

*#* *#* *#* *#* *#* ## *#* *#* ##* *#* *## ##* *#* *#* ##* *#*

*#* *#* *#* *#* *****####* ## *#******###* ##* *#* *## ##* *#* *#* ##* *#*

*#* *#* *#* *#* **######### ## *########### ## ## ## ## ## #* ## #*

**#**#* *#**#* *#**** ## ## *#* ## *#* *## ## *#* *#* ##* *#*

*#**#* *#**#* *#* *## ## *#* ## *#* *## ## *#* *#* ##* *#*

*#**#* *#**#* *#* *## *#* *#** ## *#** **## ## *#** **#* ##** **#*

*#*#* *#*#* *#** ***### *#** **#*** **** ## **#******### ## **#******#** ###******#**

**##* *##** **######*## *##### **######## ## **######*## ## **######** ##*######**

*##* *##* ******* ## ***## ****#**** ## ******* ## ## ******** ## *******

##

##

##

##

**

21/01/21 14:48:13 INFO Clickhouse: insert into dws_community_equipment_room_house_report_vo_wide_ck_all (`h_id`,`h_name`,`h_mobile`,`h_account`,`h_password`,`h_address`,`h_regmethod`,`h_gender`,`h_mail`,`h_qq`,`h_headimage`,`h_identityno`,`h_birthday`,`h_nickname`,`h_creator`,`h_talkaccount`,`h_talkpassword`,`h_uhomepassword`,`h_identitytype`,`h_isaudited`,`h_rke_ischild`,`h_rke_fingerprint1`,`h_rke_callphone`,`h_rke_maxcardcount`,`h_accountsid`,`h_defaultroomid`,`h_rke_faceimg`,`h_logintype`,`h_create_time`,`h_update_time`,`r_id`,`r_co_id`,`r_cs_id`,`r_name`,`r_creator`,`r_accountsid`,`r_del_flag`,`r_type`,`r_create_time`,`r_update_time`,`rh_del_flag`,`co_id`,`co_name`,`co_address`,`co_stdaddress`,`co_tel`,`areaid`,`co_lat`,`co_lng`,`co_enable`,`co_logo`,`co_summary`,`co_remark`,`is_test`,`co_creator`,`co_accountsid`,`co_eqserial`,`co_latlng`,`deptid`,`co_funcontrol`,`co_create_time`,`co_update_time`,`cs_id`,`cs_co_id`,`cs_parentid`,`cs_name`,`cs_fullname`,`cs_lat`,`cs_lng`,`cs_

islast`,`cs_orderindex`,`cs_creator`,`cs_accountsid`,`cs_del_flag`,`cs_create_time`,`cs_update_time`,`eq_autoid`,`eq_name`,`eq_communityid`,`eq_num`,`eq_username`,`eq_userpwd`,`eq_type`,`eq_ip`,`eq_cs_id`,`eq_addtime`,`eq_talkname`,`eq_talkpwd`,`eq_bell`,`eq_mediaday`,`eq_medianight`,`eq_nightstarthour`,`eq_nightstartminute`,`eq_nightendhour`,`eq_nightendminute`,`eq_isplayimage`,`eq_isplayvideo`,`eq_imageinterval`,`eq_callpriority`,`eq_starttime`,`eq_alarmtimedelay`,`eq_systempwd`,`eq_sharepwd`,`eq_volume`,`eq_modelnumber`,`eq_kernelversion`,`eq_softwareversion`,`eq_isclosed`,`eq_isonline`,`eq_isenable`,`eq_isneedpwd`,`eq_calibratetime`,`eq_policerecordid`,`eq_lastopentime`,`eq_openbyidcard`,`eq_openbybluetooth`,`eq_isdoorstatecheck`,`eq_mac`,`creater`,`eq_accountsid`,`eq_faceip`,`eq_facepwd`,`eq_hardcode`,`eq_localipvalue`,`eq_localip`,`eq_subnetmask`,`eq_gateway`,`eq_dns`,`eq_istomobile`,`eq_etype`,`eq_enum`,`eq_r_id`,`isnightdormant`,`eq_isbioassay`,`eq_facemode`,`eq_threshold`,`

isthirdad`,`eq_brightness`,`is_new_video`,`eq_create_time`,`eq_update_time`,`proince_id`,`proincename`,`city_id`,`city_name`,`county_id`,`county_name`,`reportid`,`reporttype`,`reporttimestamp`,`reportdate`,`reportstatus`,`reporterrmsg`,`month`,`day`) values (?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?)

21/01/21 14:48:13 INFO SQLStdHiveAccessController: Created SQLStdHiveAccessController for session context : HiveAuthzSessionContext [sessionString=2fc8b3fd-5cf7-4220-8012-acb64a89c974, clientType=HIVECLI]

21/01/21 14:48:13 WARN SessionState: METASTORE_FILTER_HOOK will be ignored, since hive.security.authorization.manager is set to instance of HiveAuthorizerFactory.

21/01/21 14:48:13 INFO HiveMetaStoreClient: Mestastore configuration metastore.filter.hook changed from org.apache.hadoop.hive.metastore.DefaultMetaStoreFilterHookImpl to org.apache.hadoop.hive.ql.security.authorization.plugin.AuthorizationMetaStoreFilterHook

21/01/21 14:48:13 INFO HiveMetaStoreClient: Closed a connection to metastore, current connections: 0

21/01/21 14:48:13 INFO HiveMetaStoreClient: Trying to connect to metastore with URI thrift://namenode002:9083

21/01/21 14:48:13 INFO HiveMetaStoreClient: Opened a connection to metastore, current connections: 1

21/01/21 14:48:13 INFO HiveMetaStoreClient: Connected to metastore.

21/01/21 14:48:13 INFO RetryingMetaStoreClient: RetryingMetaStoreClient proxy=class org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient ugi=hadoop (auth:SIMPLE) retries=24 delay=5 lifetime=0

21/01/21 14:48:13 INFO HiveMetaStoreClient: Trying to connect to metastore with URI thrift://namenode002:9083

21/01/21 14:48:13 INFO HiveMetaStoreClient: Opened a connection to metastore, current connections: 2

21/01/21 14:48:13 INFO HiveMetaStoreClient: Connected to metastore.

21/01/21 14:48:13 INFO RetryingMetaStoreClient: RetryingMetaStoreClient proxy=class org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient ugi=hadoop (auth:SIMPLE) retries=24 delay=5 lifetime=0

21/01/21 14:48:14 INFO FileSourceStrategy: Pruning directories with: isnotnull(month#153),isnotnull(day#154),(month#153 = 2021-01),(day#154 = 2021-01-20)

[INFO] 2021-01-21 14:48:15.589 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:14 INFO FileSourceStrategy: Post-Scan Filters:

21/01/21 14:48:14 INFO FileSourceStrategy: Output Data Schema: struct<h_id: string, h_name: string, h_mobile: string, h_account: string, h_password: string ... 151 more fields>

21/01/21 14:48:14 INFO FileSourceScanExec: Pushed Filters:

21/01/21 14:48:14 INFO PrunedInMemoryFileIndex: Selected 1 partitions out of 1, pruned 0.0% partitions.

21/01/21 14:48:15 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 396.7 KB, free 365.9 MB)

21/01/21 14:48:15 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 33.1 KB, free 365.9 MB)

21/01/21 14:48:15 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on scheduler:38571 (size: 33.1 KB, free: 366.3 MB)

21/01/21 14:48:15 INFO SparkContext: Created broadcast 0 from foreachPartition at Clickhouse.scala:162

21/01/21 14:48:15 INFO FileSourceScanExec: Planning scan with bin packing, max size: 16764703 bytes, open cost is considered as scanning 4194304 bytes.

21/01/21 14:48:15 INFO SparkContext: Starting job: foreachPartition at Clickhouse.scala:162

21/01/21 14:48:15 INFO DAGScheduler: Got job 0 (foreachPartition at Clickhouse.scala:162) with 20 output partitions

21/01/21 14:48:15 INFO DAGScheduler: Final stage: ResultStage 0 (foreachPartition at Clickhouse.scala:162)

21/01/21 14:48:15 INFO DAGScheduler: Parents of final stage: List()

21/01/21 14:48:15 INFO DAGScheduler: Missing parents: List()

21/01/21 14:48:15 INFO DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[4] at foreachPartition at Clickhouse.scala:162), which has no missing parents

21/01/21 14:48:15 INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 63.6 KB, free 365.8 MB)

[INFO] 2021-01-21 14:48:16.964 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:15 INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 22.3 KB, free 365.8 MB)

21/01/21 14:48:15 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on scheduler:38571 (size: 22.3 KB, free: 366.2 MB)

21/01/21 14:48:15 INFO SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:1039

21/01/21 14:48:15 INFO DAGScheduler: Submitting 20 missing tasks from ResultStage 0 (MapPartitionsRDD[4] at foreachPartition at Clickhouse.scala:162) (first 15 tasks are for partitions Vector(0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14))

21/01/21 14:48:15 INFO YarnScheduler: Adding task set 0.0 with 20 tasks

21/01/21 14:48:15 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, datanode005, executor 3, partition 0, NODE_LOCAL, 8509 bytes)

21/01/21 14:48:15 INFO TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, datanode003, executor 1, partition 1, NODE_LOCAL, 8509 bytes)

21/01/21 14:48:15 INFO TaskSetManager: Starting task 2.0 in stage 0.0 (TID 2, datanode002, executor 5, partition 2, NODE_LOCAL, 8509 bytes)

21/01/21 14:48:15 INFO TaskSetManager: Starting task 9.0 in stage 0.0 (TID 3, datanode004, executor 4, partition 9, NODE_LOCAL, 8509 bytes)

21/01/21 14:48:15 INFO TaskSetManager: Starting task 3.0 in stage 0.0 (TID 4, datanode005, executor 3, partition 3, NODE_LOCAL, 8509 bytes)

21/01/21 14:48:15 INFO TaskSetManager: Starting task 4.0 in stage 0.0 (TID 5, datanode003, executor 1, partition 4, NODE_LOCAL, 8509 bytes)

21/01/21 14:48:15 INFO TaskSetManager: Starting task 5.0 in stage 0.0 (TID 6, datanode002, executor 5, partition 5, NODE_LOCAL, 8509 bytes)

21/01/21 14:48:15 INFO TaskSetManager: Starting task 10.0 in stage 0.0 (TID 7, datanode004, executor 4, partition 10, NODE_LOCAL, 8509 bytes)

21/01/21 14:48:15 INFO TaskSetManager: Starting task 6.0 in stage 0.0 (TID 8, datanode005, executor 3, partition 6, NODE_LOCAL, 8509 bytes)

21/01/21 14:48:15 INFO TaskSetManager: Starting task 7.0 in stage 0.0 (TID 9, datanode003, executor 1, partition 7, NODE_LOCAL, 8509 bytes)

21/01/21 14:48:15 INFO TaskSetManager: Starting task 8.0 in stage 0.0 (TID 10, datanode002, executor 5, partition 8, NODE_LOCAL, 8509 bytes)

21/01/21 14:48:15 INFO TaskSetManager: Starting task 11.0 in stage 0.0 (TID 11, datanode004, executor 4, partition 11, NODE_LOCAL, 8509 bytes)

21/01/21 14:48:15 INFO TaskSetManager: Starting task 12.0 in stage 0.0 (TID 12, datanode005, executor 3, partition 12, NODE_LOCAL, 8509 bytes)

21/01/21 14:48:15 INFO TaskSetManager: Starting task 13.0 in stage 0.0 (TID 13, datanode003, executor 1, partition 13, NODE_LOCAL, 8509 bytes)

21/01/21 14:48:15 INFO TaskSetManager: Starting task 14.0 in stage 0.0 (TID 14, datanode004, executor 4, partition 14, NODE_LOCAL, 8509 bytes)

21/01/21 14:48:16 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on datanode004:34751 (size: 22.3 KB, free: 912.3 MB)

[INFO] 2021-01-21 14:48:18.005 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:17 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on datanode005:43427 (size: 22.3 KB, free: 912.3 MB)

21/01/21 14:48:18 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on datanode003:34874 (size: 22.3 KB, free: 912.3 MB)

[INFO] 2021-01-21 14:48:20.268 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:18 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on datanode002:36415 (size: 22.3 KB, free: 912.3 MB)

21/01/21 14:48:18 INFO TaskSetManager: Starting task 15.0 in stage 0.0 (TID 15, datanode002, executor 5, partition 15, RACK_LOCAL, 8509 bytes)

21/01/21 14:48:18 INFO TaskSetManager: Starting task 16.0 in stage 0.0 (TID 16, datanode001, executor 2, partition 16, RACK_LOCAL, 8509 bytes)

21/01/21 14:48:18 INFO TaskSetManager: Starting task 17.0 in stage 0.0 (TID 17, datanode001, executor 2, partition 17, RACK_LOCAL, 8509 bytes)

21/01/21 14:48:18 INFO TaskSetManager: Starting task 18.0 in stage 0.0 (TID 18, datanode001, executor 2, partition 18, RACK_LOCAL, 8509 bytes)

21/01/21 14:48:18 INFO TaskSetManager: Starting task 19.0 in stage 0.0 (TID 19, datanode001, executor 2, partition 19, RACK_LOCAL, 8509 bytes)

21/01/21 14:48:20 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on datanode001:38988 (size: 22.3 KB, free: 912.3 MB)

[INFO] 2021-01-21 14:48:22.369 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:22 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on datanode005:43427 (size: 33.1 KB, free: 912.2 MB)

[INFO] 2021-01-21 14:48:25.420 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:22 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on datanode004:34751 (size: 33.1 KB, free: 912.2 MB)

21/01/21 14:48:22 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on datanode002:36415 (size: 33.1 KB, free: 912.2 MB)

21/01/21 14:48:23 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on datanode003:34874 (size: 33.1 KB, free: 912.2 MB)

21/01/21 14:48:25 INFO TaskSetManager: Finished task 8.0 in stage 0.0 (TID 10) in 9742 ms on datanode002 (executor 5) (1/20)

[INFO] 2021-01-21 14:48:29.436 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:26 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on datanode001:38988 (size: 33.1 KB, free: 912.2 MB)

21/01/21 14:48:29 INFO TaskSetManager: Finished task 17.0 in stage 0.0 (TID 17) in 10634 ms on datanode001 (executor 2) (2/20)

[INFO] 2021-01-21 14:48:45.764 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:45 INFO TaskSetManager: Finished task 19.0 in stage 0.0 (TID 19) in 26958 ms on datanode001 (executor 2) (3/20)

[INFO] 2021-01-21 14:48:52.304 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:52 INFO TaskSetManager: Finished task 9.0 in stage 0.0 (TID 3) in 36635 ms on datanode004 (executor 4) (4/20)

[INFO] 2021-01-21 14:48:53.553 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:52 INFO TaskSetManager: Finished task 10.0 in stage 0.0 (TID 7) in 37013 ms on datanode004 (executor 4) (5/20)

21/01/21 14:48:53 INFO TaskSetManager: Finished task 7.0 in stage 0.0 (TID 9) in 37879 ms on datanode003 (executor 1) (6/20)

[INFO] 2021-01-21 14:48:54.741 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:54 INFO TaskSetManager: Finished task 2.0 in stage 0.0 (TID 2) in 38747 ms on datanode002 (executor 5) (7/20)

21/01/21 14:48:54 INFO TaskSetManager: Finished task 5.0 in stage 0.0 (TID 6) in 39070 ms on datanode002 (executor 5) (8/20)

[INFO] 2021-01-21 14:48:55.833 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:54 INFO TaskSetManager: Finished task 14.0 in stage 0.0 (TID 14) in 39165 ms on datanode004 (executor 4) (9/20)

21/01/21 14:48:55 INFO TaskSetManager: Finished task 11.0 in stage 0.0 (TID 11) in 39838 ms on datanode004 (executor 4) (10/20)

21/01/21 14:48:55 INFO TaskSetManager: Finished task 6.0 in stage 0.0 (TID 8) in 39948 ms on datanode005 (executor 3) (11/20)

21/01/21 14:48:55 INFO TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 40165 ms on datanode003 (executor 1) (12/20)

[INFO] 2021-01-21 14:48:57.128 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:55 INFO TaskSetManager: Finished task 13.0 in stage 0.0 (TID 13) in 40277 ms on datanode003 (executor 1) (13/20)

21/01/21 14:48:57 INFO TaskSetManager: Finished task 12.0 in stage 0.0 (TID 12) in 41454 ms on datanode005 (executor 3) (14/20)

[INFO] 2021-01-21 14:48:58.799 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:57 INFO TaskSetManager: Finished task 4.0 in stage 0.0 (TID 5) in 41984 ms on datanode003 (executor 1) (15/20)

21/01/21 14:48:57 INFO TaskSetManager: Finished task 18.0 in stage 0.0 (TID 18) in 39040 ms on datanode001 (executor 2) (16/20)

21/01/21 14:48:57 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 42307 ms on datanode005 (executor 3) (17/20)

21/01/21 14:48:58 INFO TaskSetManager: Finished task 3.0 in stage 0.0 (TID 4) in 43127 ms on datanode005 (executor 3) (18/20)

[INFO] 2021-01-21 14:49:00.214 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:48:58 INFO TaskSetManager: Finished task 15.0 in stage 0.0 (TID 15) in 40024 ms on datanode002 (executor 5) (19/20)

21/01/21 14:49:00 INFO TaskSetManager: Finished task 16.0 in stage 0.0 (TID 16) in 41413 ms on datanode001 (executor 2) (20/20)

[INFO] 2021-01-21 14:49:01.248 - [taskAppId=TASK-13-198-935]:[127] - -> 21/01/21 14:49:00 INFO YarnScheduler: Removed TaskSet 0.0, whose tasks have all completed, from pool

21/01/21 14:49:00 INFO DAGScheduler: ResultStage 0 (foreachPartition at Clickhouse.scala:162) finished in 44.742 s

21/01/21 14:49:00 INFO DAGScheduler: Job 0 finished: foreachPartition at Clickhouse.scala:162, took 44.842088 s

21/01/21 14:49:00 INFO AbstractConnector: Stopped Spark@4a67318f{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

21/01/21 14:49:00 INFO SparkUI: Stopped Spark web UI at http://scheduler:4040

21/01/21 14:49:00 INFO YarnClientSchedulerBackend: Interrupting monitor thread

21/01/21 14:49:00 INFO YarnClientSchedulerBackend: Shutting down all executors

21/01/21 14:49:00 INFO YarnSchedulerBackend$YarnDriverEndpoint: Asking each executor to shut down

21/01/21 14:49:00 INFO SchedulerExtensionServices: Stopping SchedulerExtensionServices

(serviceOption=None,

services=List(),

started=false)

21/01/21 14:49:00 INFO YarnClientSchedulerBackend: Stopped

21/01/21 14:49:00 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

21/01/21 14:49:00 INFO MemoryStore: MemoryStore cleared

21/01/21 14:49:00 INFO BlockManager: BlockManager stopped

21/01/21 14:49:00 INFO BlockManagerMaster: BlockManagerMaster stopped

21/01/21 14:49:00 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

21/01/21 14:49:00 INFO SparkContext: Successfully stopped SparkContext

21/01/21 14:49:00 INFO ShutdownHookManager: Shutdown hook called

21/01/21 14:49:00 INFO ShutdownHookManager: Deleting directory /tmp/spark-de10c9d5-7632-49cf-a0b4-431af9db9ad2

21/01/21 14:49:00 INFO ShutdownHookManager: Deleting directory /tmp/spark-f5d79fe8-b8fb-4b1e-82b8-ed8c52c45223
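
The log above shows stage 0 reaching (20/20) and Job 0 finishing before the SparkContext stops. As a rough sketch only, a saved copy of such a log could be checked for that with a small script. The helper name `summarize_stage` and the regular expression are assumptions made for illustration; they are not part of DolphinScheduler, Waterdrop, or Spark.

```python
import re

# Matches Spark TaskSetManager completion lines such as:
# "... Finished task 16.0 in stage 0.0 (TID 16) in 41413 ms on datanode001 (executor 2) (20/20)"
FINISHED = re.compile(
    r"Finished task (\d+)\.\d+ in stage (\d+)\.\d+ \(TID \d+\) "
    r"in (\d+) ms on (\S+) \(executor \d+\) \((\d+)/(\d+)\)"
)

def summarize_stage(log_text):
    """Return (finished_count, total_tasks, slowest_ms) from 'Finished task' lines."""
    finished, total, slowest = 0, 0, 0
    for m in FINISHED.finditer(log_text):
        finished += 1                            # one match per completed task
        total = int(m.group(6))                  # the N in "(k/N)"
        slowest = max(slowest, int(m.group(3)))  # longest task duration in ms
    return finished, total, slowest

# Two lines copied from the log above, used as sample input.
sample = """\
21/01/21 14:48:25 INFO TaskSetManager: Finished task 8.0 in stage 0.0 (TID 10) in 9742 ms on datanode002 (executor 5) (1/20)
21/01/21 14:49:00 INFO TaskSetManager: Finished task 16.0 in stage 0.0 (TID 16) in 41413 ms on datanode001 (executor 2) (20/20)
"""
print(summarize_stage(sample))
```

On the full log, a finished count equal to the total would agree with the "Job 0 finished" line, which suggests the Spark side completed and the failed node status comes from somewhere else.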

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [incubator-dolphinscheduler] dailidong commented on issue #4517: Dolphinscheduler 1.3.4 uses shell script to execute waterdrop 1.5.1. The task is executed successfully, but the node shows that the execution failed

Posted by GitBox <gi...@apache.org>.

dailidong commented on issue #4517:

URL: https://github.com/apache/incubator-dolphinscheduler/issues/4517#issuecomment-766303874

Did you see this task's exit code in the runtime log of the worker server?

If exitCode = 0, it means success.

[GitHub] [incubator-dolphinscheduler] dailidong edited a comment on issue #4517: Dolphinscheduler 1.3.4 uses shell script to execute waterdrop 1.5.1. The task is executed successfully, but the node shows that the execution failed

Posted by GitBox <gi...@apache.org>.

dailidong edited a comment on issue #4517:

URL: https://github.com/apache/incubator-dolphinscheduler/issues/4517#issuecomment-766303874

Did you see this task exit code in the runtime log of the worker server?

If exitCode = 0, it means success.
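
To illustrate the rule above (an exit code of 0 means success, anything else means failure), here is a minimal sketch. The wrapper `run_and_report` is hypothetical and is not how DolphinScheduler itself launches tasks; the two child commands are stand-ins for a real job command.

```python
import subprocess
import sys

def run_and_report(cmd):
    """Run cmd (a list of arguments), print its exit code, and return it."""
    result = subprocess.run(cmd)
    print(f"exitCode = {result.returncode}")
    return result.returncode

# Stand-ins for a real job: one child process exits with 0, the other with 3.
ok = run_and_report([sys.executable, "-c", "raise SystemExit(0)"])
failed = run_and_report([sys.executable, "-c", "raise SystemExit(3)"])
```

Printing the code explicitly at the end of a wrapper like this makes it easy to compare what the command returned with what the worker log records.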

[GitHub] [incubator-dolphinscheduler] zosimer edited a comment on issue #4517: Dolphinscheduler 1.3.4 uses shell script to execute waterdrop 1.5.1. The task is executed successfully, but the node shows that the execution failed

Posted by GitBox <gi...@apache.org>.

zosimer edited a comment on issue #4517:

URL: https://github.com/apache/incubator-dolphinscheduler/issues/4517#issuecomment-766505343

> Did you see this task exit code in the runtime log of the worker server?

> If exitCode = 0, it means success

The log shows that exitCode is 0.

[GitHub] [incubator-dolphinscheduler] zosimer commented on issue #4517: Dolphinscheduler 1.3.4 uses shell script to execute waterdrop 1.5.1. The task is executed successfully, but the node shows that the execution failed

Posted by GitBox <gi...@apache.org>.

zosimer commented on issue #4517:

URL: https://github.com/apache/incubator-dolphinscheduler/issues/4517#issuecomment-766505343

> Did you see this task exit code in the runtime log of the worker server?

> If exitCode = 0, it means success

The log shows that exitCode is 0.
