Posted to reviews@spark.apache.org by "beliefer (via GitHub)" <gi...@apache.org> on 2023/03/06 03:24:44 UTC

[GitHub] [spark] beliefer opened a new pull request, #40291: [WIP][SPARK-42578][CONNECT] Add JDBC to DataFrameWriter

beliefer opened a new pull request, #40291:

URL: https://github.com/apache/spark/pull/40291

### What changes were proposed in this pull request?

Currently, the Connect project has a new `DataFrameWriter` API that corresponds to Spark's `DataFrameWriter` API, but Connect's `DataFrameWriter` is missing the `jdbc` API.

### Why are the changes needed?

This PR tries to add JDBC to `DataFrameWriter`.

### Does this PR introduce _any_ user-facing change?

'No'.

New feature.

### How was this patch tested?

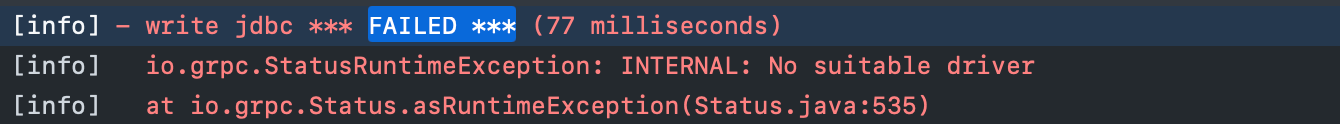

@hvanhovell It seems there is no way to add test cases.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] beliefer commented on pull request #40291: [WIP][SPARK-42578][CONNECT] Add JDBC to DataFrameWriter

Posted by "beliefer (via GitHub)" <gi...@apache.org>.

beliefer commented on PR #40291:

URL: https://github.com/apache/spark/pull/40291#issuecomment-1489556667

> Is that #40415?

It is https://github.com/apache/spark/pull/40358

[GitHub] [spark] hvanhovell commented on pull request #40291: [WIP][SPARK-42578][CONNECT] Add JDBC to DataFrameWriter

Posted by "hvanhovell (via GitHub)" <gi...@apache.org>.

hvanhovell commented on PR #40291:

URL: https://github.com/apache/spark/pull/40291#issuecomment-1455425240

hmmm - let me think about it.

[GitHub] [spark] beliefer commented on pull request #40291: [WIP][SPARK-42578][CONNECT] Add JDBC to DataFrameWriter

Posted by "beliefer (via GitHub)" <gi...@apache.org>.

beliefer commented on PR #40291:

URL: https://github.com/apache/spark/pull/40291#issuecomment-1455384866

@hvanhovell It seems there is no way to add test cases.

[GitHub] [spark] hvanhovell commented on a diff in pull request #40291: [WIP][SPARK-42578][CONNECT] Add JDBC to DataFrameWriter

Posted by "hvanhovell (via GitHub)" <gi...@apache.org>.

hvanhovell commented on code in PR #40291:

URL: https://github.com/apache/spark/pull/40291#discussion_r1128061371

##########

connector/connect/client/jvm/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala:

##########

@@ -345,6 +345,48 @@ final class DataFrameWriter[T] private[sql] (ds: Dataset[T]) {

})

}

+ /**

+ * Saves the content of the `DataFrame` to an external database table via JDBC. In the case the

+ * table already exists in the external database, behavior of this function depends on the save

+ * mode, specified by the `mode` function (default to throwing an exception).

+ *

+ * Don't create too many partitions in parallel on a large cluster; otherwise Spark might crash

+ * your external database systems.

+ *

+ * JDBC-specific option and parameter documentation for storing tables via JDBC in <a

+ * href="https://spark.apache.org/docs/latest/sql-data-sources-jdbc.html#data-source-option">

+ * Data Source Option</a> in the version you use.

+ *

+ * @param table

+ * Name of the table in the external database.

+ * @param connectionProperties

+ * JDBC database connection arguments, a list of arbitrary string tag/value. Normally at least

+ * a "user" and "password" property should be included. "batchsize" can be used to control the

+ * number of rows per insert. "isolationLevel" can be one of "NONE", "READ_COMMITTED",

+ * "READ_UNCOMMITTED", "REPEATABLE_READ", or "SERIALIZABLE", corresponding to standard

+ * transaction isolation levels defined by JDBC's Connection object, with default of

+ * "READ_UNCOMMITTED".

+ * @since 3.4.0

+ */

+ def jdbc(url: String, table: String, connectionProperties: Properties): Unit = {

+ // connectionProperties should override settings in extraOptions.

+ this.extraOptions ++= connectionProperties.asScala

+ // explicit url and dbtable should override all

+ this.extraOptions ++= Seq("url" -> url, "dbtable" -> table)

+ format("jdbc").saveAsJdbcTable(table)

+ }

+

+ private def saveAsJdbcTable(tableName: String): Unit = {

Review Comment:

Why do we need a separate method? There is only one method using it.
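
To make the override order in the quoted `jdbc` body concrete, here is a minimal sketch that uses plain Scala collections instead of the real `DataFrameWriter`. The object `JdbcOptionMerge` and its `merge` function are hypothetical names introduced only for illustration, and the sketch assumes `extraOptions` behaves like a key/value map in which later writes win. It also assumes Scala 2.13 or newer for `scala.jdk.CollectionConverters`.

```scala
import java.util.Properties
import scala.jdk.CollectionConverters._

// Hypothetical helper (not part of Spark): mimics the merge order in the quoted diff.
object JdbcOptionMerge {
  def merge(
      extraOptions: Map[String, String],
      url: String,
      table: String,
      connectionProperties: Properties): Map[String, String] = {
    // Copy the string-valued properties into Scala key/value pairs.
    val props = connectionProperties.stringPropertyNames().asScala
      .map(key => key -> connectionProperties.getProperty(key))
    // connectionProperties override anything already set in extraOptions.
    val withProps = extraOptions ++ props
    // The explicit url and dbtable arguments override everything else.
    withProps ++ Seq("url" -> url, "dbtable" -> table)
  }
}
```

Under these assumptions, a `user` entry in `connectionProperties` replaces one set earlier through `option(...)`, while a `url` entry in `connectionProperties` is itself replaced by the explicit `url` argument.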

[GitHub] [spark] LuciferYang commented on a diff in pull request #40291: [WIP][SPARK-42578][CONNECT] Add JDBC to DataFrameWriter

Posted by "LuciferYang (via GitHub)" <gi...@apache.org>.

LuciferYang commented on code in PR #40291:

URL: https://github.com/apache/spark/pull/40291#discussion_r1129191369

##########

connector/connect/client/jvm/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala:

##########

@@ -345,6 +345,44 @@ final class DataFrameWriter[T] private[sql] (ds: Dataset[T]) {

})

}

+ /**

+ * Saves the content of the `DataFrame` to an external database table via JDBC. In the case the

+ * table already exists in the external database, behavior of this function depends on the save

+ * mode, specified by the `mode` function (default to throwing an exception).

+ *

+ * Don't create too many partitions in parallel on a large cluster; otherwise Spark might crash

+ * your external database systems.

+ *

+ * JDBC-specific option and parameter documentation for storing tables via JDBC in <a

+ * href="https://spark.apache.org/docs/latest/sql-data-sources-jdbc.html#data-source-option">

+ * Data Source Option</a> in the version you use.

+ *

+ * @param table

+ * Name of the table in the external database.

+ * @param connectionProperties

+ * JDBC database connection arguments, a list of arbitrary string tag/value. Normally at least

+ * a "user" and "password" property should be included. "batchsize" can be used to control the

+ * number of rows per insert. "isolationLevel" can be one of "NONE", "READ_COMMITTED",

+ * "READ_UNCOMMITTED", "REPEATABLE_READ", or "SERIALIZABLE", corresponding to standard

+ * transaction isolation levels defined by JDBC's Connection object, with default of

+ * "READ_UNCOMMITTED".

+ * @since 3.4.0

+ */

+ def jdbc(url: String, table: String, connectionProperties: Properties): Unit = {

Review Comment:

I think we can remove `ProblemFilters.exclude[Problem]("org.apache.spark.sql.DataFrameWriter.jdbc")` from `CheckConnectJvmClientCompatibility` in this pr

[GitHub] [spark] hvanhovell commented on pull request #40291: [WIP][SPARK-42578][CONNECT] Add JDBC to DataFrameWriter

Posted by "hvanhovell (via GitHub)" <gi...@apache.org>.

hvanhovell commented on PR #40291:

URL: https://github.com/apache/spark/pull/40291#issuecomment-1456060688

@beliefer we should be able to create an in-memory table and append a couple of rows to that right?

[GitHub] [spark] hvanhovell commented on a diff in pull request #40291: [WIP][SPARK-42578][CONNECT] Add JDBC to DataFrameWriter

Posted by "hvanhovell (via GitHub)" <gi...@apache.org>.

hvanhovell commented on code in PR #40291:

URL: https://github.com/apache/spark/pull/40291#discussion_r1128152776

##########

connector/connect/common/src/main/protobuf/spark/connect/commands.proto:

##########

@@ -116,6 +116,7 @@ message WriteOperation {

TABLE_SAVE_METHOD_UNSPECIFIED = 0;

TABLE_SAVE_METHOD_SAVE_AS_TABLE = 1;

TABLE_SAVE_METHOD_INSERT_INTO = 2;

+ TABLE_SAVE_METHOD_SAVE_AS_JDBC_TABLE = 3;

Review Comment:

It is calling save() right? Why not call it `TABLE_SAVE_METHOD_SAVE`?

[GitHub] [spark] beliefer commented on a diff in pull request #40291: [WIP][SPARK-42578][CONNECT] Add JDBC to DataFrameWriter

Posted by "beliefer (via GitHub)" <gi...@apache.org>.

beliefer commented on code in PR #40291:

URL: https://github.com/apache/spark/pull/40291#discussion_r1130258611

##########

connector/connect/server/pom.xml:

##########

@@ -199,6 +199,12 @@

<version>${tomcat.annotations.api.version}</version>

<scope>provided</scope>

</dependency>

+ <dependency>

Review Comment:

It seems the server can't find the jar on the classpath.

[GitHub] [spark] hvanhovell commented on a diff in pull request #40291: [WIP][SPARK-42578][CONNECT] Add JDBC to DataFrameWriter

Posted by "hvanhovell (via GitHub)" <gi...@apache.org>.

hvanhovell commented on code in PR #40291:

URL: https://github.com/apache/spark/pull/40291#discussion_r1130251210

##########

connector/connect/server/pom.xml:

##########

@@ -199,6 +199,12 @@

<version>${tomcat.annotations.api.version}</version>

<scope>provided</scope>

</dependency>

+ <dependency>

Review Comment:

Why the move?

[GitHub] [spark] beliefer commented on a diff in pull request #40291: [WIP][SPARK-42578][CONNECT] Add JDBC to DataFrameWriter

Posted by "beliefer (via GitHub)" <gi...@apache.org>.

beliefer commented on code in PR #40291:

URL: https://github.com/apache/spark/pull/40291#discussion_r1126114514

##########

connector/connect/client/jvm/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala:

##########

@@ -345,6 +345,37 @@ final class DataFrameWriter[T] private[sql] (ds: Dataset[T]) {

})

}

+ /**

+ * Saves the content of the `DataFrame` to an external database table via JDBC. In the case the

+ * table already exists in the external database, behavior of this function depends on the save

+ * mode, specified by the `mode` function (default to throwing an exception).

+ *

+ * Don't create too many partitions in parallel on a large cluster; otherwise Spark might crash

+ * your external database systems.

+ *

+ * JDBC-specific option and parameter documentation for storing tables via JDBC in <a

+ * href="https://spark.apache.org/docs/latest/sql-data-sources-jdbc.html#data-source-option">

+ * Data Source Option</a> in the version you use.

+ *

+ * @param table

+ * Name of the table in the external database.

+ * @param connectionProperties

+ * JDBC database connection arguments, a list of arbitrary string tag/value. Normally at least

+ * a "user" and "password" property should be included. "batchsize" can be used to control the

+ * number of rows per insert. "isolationLevel" can be one of "NONE", "READ_COMMITTED",

+ * "READ_UNCOMMITTED", "REPEATABLE_READ", or "SERIALIZABLE", corresponding to standard

+ * transaction isolation levels defined by JDBC's Connection object, with default of

+ * "READ_UNCOMMITTED".

+ * @since 3.4.0

+ */

+ def jdbc(url: String, table: String, connectionProperties: Properties): Unit = {

+ // connectionProperties should override settings in extraOptions.

Review Comment:

I think verifying parameters on the server side is the more robust approach. Admittedly, doing the work on the client side would reduce the pressure on the server side.

[GitHub] [spark] LuciferYang commented on a diff in pull request #40291: [WIP][SPARK-42578][CONNECT] Add JDBC to DataFrameWriter

Posted by "LuciferYang (via GitHub)" <gi...@apache.org>.

LuciferYang commented on code in PR #40291:

URL: https://github.com/apache/spark/pull/40291#discussion_r1125957292

##########

connector/connect/client/jvm/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala:

##########

@@ -345,6 +345,37 @@ final class DataFrameWriter[T] private[sql] (ds: Dataset[T]) {

})

}

+ /**

+ * Saves the content of the `DataFrame` to an external database table via JDBC. In the case the

+ * table already exists in the external database, behavior of this function depends on the save

+ * mode, specified by the `mode` function (default to throwing an exception).

+ *

+ * Don't create too many partitions in parallel on a large cluster; otherwise Spark might crash

+ * your external database systems.

+ *

+ * JDBC-specific option and parameter documentation for storing tables via JDBC in <a

+ * href="https://spark.apache.org/docs/latest/sql-data-sources-jdbc.html#data-source-option">

+ * Data Source Option</a> in the version you use.

+ *

+ * @param table

+ * Name of the table in the external database.

+ * @param connectionProperties

+ * JDBC database connection arguments, a list of arbitrary string tag/value. Normally at least

+ * a "user" and "password" property should be included. "batchsize" can be used to control the

+ * number of rows per insert. "isolationLevel" can be one of "NONE", "READ_COMMITTED",

+ * "READ_UNCOMMITTED", "REPEATABLE_READ", or "SERIALIZABLE", corresponding to standard

+ * transaction isolation levels defined by JDBC's Connection object, with default of

+ * "READ_UNCOMMITTED".

+ * @since 3.4.0

+ */

+ def jdbc(url: String, table: String, connectionProperties: Properties): Unit = {

+ // connectionProperties should override settings in extraOptions.

Review Comment:

I have a question @hvanhovell @beliefer . For the connect-client api, should we verify the parameters on the client side or on the server side?

[GitHub] [spark] beliefer closed pull request #40291: [WIP][SPARK-42578][CONNECT] Add JDBC to DataFrameWriter

Posted by "beliefer (via GitHub)" <gi...@apache.org>.

beliefer closed pull request #40291: [WIP][SPARK-42578][CONNECT] Add JDBC to DataFrameWriter

URL: https://github.com/apache/spark/pull/40291

[GitHub] [spark] hvanhovell commented on pull request #40291: [WIP][SPARK-42578][CONNECT] Add JDBC to DataFrameWriter

Posted by "hvanhovell (via GitHub)" <gi...@apache.org>.

hvanhovell commented on PR #40291:

URL: https://github.com/apache/spark/pull/40291#issuecomment-1489025120

Is that https://github.com/apache/spark/pull/40415?

[GitHub] [spark] hvanhovell commented on pull request #40291: [WIP][SPARK-42578][CONNECT] Add JDBC to DataFrameWriter

Posted by "hvanhovell (via GitHub)" <gi...@apache.org>.

hvanhovell commented on PR #40291:

URL: https://github.com/apache/spark/pull/40291#issuecomment-1487890609

@beliefer are you abandoning this one?

[GitHub] [spark] hvanhovell commented on a diff in pull request #40291: [WIP][SPARK-42578][CONNECT] Add JDBC to DataFrameWriter

Posted by "hvanhovell (via GitHub)" <gi...@apache.org>.

hvanhovell commented on code in PR #40291:

URL: https://github.com/apache/spark/pull/40291#discussion_r1126358047

##########

connector/connect/client/jvm/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala:

##########

@@ -345,6 +345,37 @@ final class DataFrameWriter[T] private[sql] (ds: Dataset[T]) {

})

}

+ /**

+ * Saves the content of the `DataFrame` to an external database table via JDBC. In the case the

+ * table already exists in the external database, behavior of this function depends on the save

+ * mode, specified by the `mode` function (default to throwing an exception).

+ *

+ * Don't create too many partitions in parallel on a large cluster; otherwise Spark might crash

+ * your external database systems.

+ *

+ * JDBC-specific option and parameter documentation for storing tables via JDBC in <a

+ * href="https://spark.apache.org/docs/latest/sql-data-sources-jdbc.html#data-source-option">

+ * Data Source Option</a> in the version you use.

+ *

+ * @param table

+ * Name of the table in the external database.

+ * @param connectionProperties

+ * JDBC database connection arguments, a list of arbitrary string tag/value. Normally at least

+ * a "user" and "password" property should be included. "batchsize" can be used to control the

+ * number of rows per insert. "isolationLevel" can be one of "NONE", "READ_COMMITTED",

+ * "READ_UNCOMMITTED", "REPEATABLE_READ", or "SERIALIZABLE", corresponding to standard

+ * transaction isolation levels defined by JDBC's Connection object, with default of

+ * "READ_UNCOMMITTED".

+ * @since 3.4.0

+ */

+ def jdbc(url: String, table: String, connectionProperties: Properties): Unit = {

+ // connectionProperties should override settings in extraOptions.

Review Comment:

Server side. There are a couple of reasons for this:

- The server cannot trust the client to implement the verification properly. I am sure we will get it right for Scala and Python, but there are potentially a plethora of other frontends that need to do the same.

- Keeping the client simple and reducing duplication. If we need to do this for every client, we'll end up with a lot of duplication and increased client complexity.
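
As a rough illustration of the server-side approach argued for above, the sketch below checks the merged write options once, in one place, regardless of which client sent them. `JdbcWriteValidator` and `validate` are hypothetical names and do not reflect Spark's actual implementation. The set of isolation levels is taken from the Scaladoc quoted in this thread, and the positive-integer rule for `batchsize` is an assumption made for the example.

```scala
import scala.util.Try

// Hypothetical server-side check (not Spark's actual implementation).
object JdbcWriteValidator {
  // Isolation levels listed in the Scaladoc quoted in this thread.
  private val isolationLevels =
    Set("NONE", "READ_COMMITTED", "READ_UNCOMMITTED", "REPEATABLE_READ", "SERIALIZABLE")

  // Returns Right(options) when all checks pass, otherwise Left(error message).
  def validate(options: Map[String, String]): Either[String, Map[String, String]] = {
    val missing = Seq("url", "dbtable").filterNot(options.contains)
    val badIsolation = options.get("isolationLevel").filterNot(isolationLevels.contains)
    // Assumption for this sketch: batchsize must parse as a positive integer.
    val badBatchSize =
      options.get("batchsize").filterNot(s => Try(s.toInt).toOption.exists(_ > 0))

    if (missing.nonEmpty) Left(s"Missing required option(s): ${missing.mkString(", ")}")
    else if (badIsolation.nonEmpty) Left(s"Invalid isolationLevel: ${badIsolation.get}")
    else if (badBatchSize.nonEmpty) Left(s"Invalid batchsize: ${badBatchSize.get}")
    else Right(options)
  }
}
```

With a check like this on the server, a Scala, Python, or third-party client only has to forward the options it was given, and every frontend gets the same error behaviour.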

[GitHub] [spark] beliefer commented on a diff in pull request #40291: [WIP][SPARK-42578][CONNECT] Add JDBC to DataFrameWriter

Posted by "beliefer (via GitHub)" <gi...@apache.org>.

beliefer commented on code in PR #40291:

URL: https://github.com/apache/spark/pull/40291#discussion_r1129275882

##########

connector/connect/client/jvm/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala:

##########

@@ -345,6 +345,44 @@ final class DataFrameWriter[T] private[sql] (ds: Dataset[T]) {

})

}

+ /**

+ * Saves the content of the `DataFrame` to an external database table via JDBC. In the case the

+ * table already exists in the external database, behavior of this function depends on the save

+ * mode, specified by the `mode` function (default to throwing an exception).

+ *

+ * Don't create too many partitions in parallel on a large cluster; otherwise Spark might crash

+ * your external database systems.

+ *

+ * JDBC-specific option and parameter documentation for storing tables via JDBC in <a

+ * href="https://spark.apache.org/docs/latest/sql-data-sources-jdbc.html#data-source-option">

+ * Data Source Option</a> in the version you use.

+ *

+ * @param table

+ * Name of the table in the external database.

+ * @param connectionProperties

+ * JDBC database connection arguments, a list of arbitrary string tag/value. Normally at least

+ * a "user" and "password" property should be included. "batchsize" can be used to control the

+ * number of rows per insert. "isolationLevel" can be one of "NONE", "READ_COMMITTED",

+ * "READ_UNCOMMITTED", "REPEATABLE_READ", or "SERIALIZABLE", corresponding to standard

+ * transaction isolation levels defined by JDBC's Connection object, with default of

+ * "READ_UNCOMMITTED".

+ * @since 3.4.0

+ */

+ def jdbc(url: String, table: String, connectionProperties: Properties): Unit = {

Review Comment:

Thank you for the reminder.

[GitHub] [spark] beliefer commented on pull request #40291: [WIP][SPARK-42578][CONNECT] Add JDBC to DataFrameWriter

Posted by "beliefer (via GitHub)" <gi...@apache.org>.

beliefer commented on PR #40291:

URL: https://github.com/apache/spark/pull/40291#issuecomment-1487935419

> @beliefer are you abandoning this one?

Because another PR implements this function.
