
Posted to commits@airflow.apache.org by GitBox <gi...@apache.org> on 2020/06/05 09:54:00 UTC

[GitHub] [airflow] ephraimbuddy opened a new pull request #9153: [WIP] add readonly endpoints for dagruns

ephraimbuddy opened a new pull request #9153:

URL: https://github.com/apache/airflow/pull/9153

---

Closes: #8129

Make sure to mark the boxes below before creating PR: [x]

- [ ] Description above provides context of the change

- [ ] Unit tests coverage for changes (not needed for documentation changes)

- [ ] Target Github ISSUE in description if exists

- [ ] Commits follow "[How to write a good git commit message](http://chris.beams.io/posts/git-commit/)"

- [ ] Relevant documentation is updated including usage instructions.

- [ ] I will engage committers as explained in [Contribution Workflow Example](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#contribution-workflow-example).

---

In case of a fundamental code change, an Airflow Improvement Proposal ([AIP](https://cwiki.apache.org/confluence/display/AIRFLOW/Airflow+Improvements+Proposals)) is needed.

In case of a new dependency, check compliance with the [ASF 3rd Party License Policy](https://www.apache.org/legal/resolved.html#category-x).

In case of backwards-incompatible changes, please leave a note in [UPDATING.md](https://github.com/apache/airflow/blob/master/UPDATING.md).

Read the [Pull Request Guidelines](https://github.com/apache/airflow/blob/master/CONTRIBUTING.rst#pull-request-guidelines) for more information.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at: users@infra.apache.org

[GitHub] [airflow] mik-laj commented on a change in pull request #9153: [WIP] add readonly endpoints for dagruns

Posted by GitBox <gi...@apache.org>.

mik-laj commented on a change in pull request #9153:

URL: https://github.com/apache/airflow/pull/9153#discussion_r436576266

##########

File path: tests/api_connexion/endpoints/test_dag_run_endpoint.py

##########

@@ -29,26 +35,471 @@ def setUpClass(cls) -> None:

def setUp(self) -> None:

self.client = self.app.test_client() # type:ignore

+ self.now = timezone.utcnow()

+ clear_db_runs()

+

+ def tearDown(self) -> None:

+ clear_db_runs()

class TestDeleteDagRun(TestDagRunEndpoint):

@pytest.mark.skip(reason="Not implemented yet")

def test_should_response_200(self):

- response = self.client.delete("/dags/TEST_DAG_ID}/dagRuns/TEST_DAG_RUN_ID")

+ response = self.client.delete("api/v1/dags/TEST_DAG_ID}/dagRuns/TEST_DAG_RUN_ID")

assert response.status_code == 204

class TestGetDagRun(TestDagRunEndpoint):

- @pytest.mark.skip(reason="Not implemented yet")

- def test_should_response_200(self):

- response = self.client.get("/dags/TEST_DAG_ID/dagRuns/TEST_DAG_RUN_ID")

+

+ @provide_session

+ def test_should_response_200(self, session):

+ dagrun_model = DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID',

+ run_type=DagRunType.MANUAL.value,

+ execution_date=self.now,

+ start_date=self.now,

+ external_trigger=True,

+ )

+ session.add(dagrun_model)

+ session.commit()

+ result = session.query(DagRun).all()

+ assert len(result) == 1

+ response = self.client.get("api/v1/dags/TEST_DAG_ID/dagRuns/TEST_DAG_RUN_ID")

assert response.status_code == 200

+ self.assertEqual(

+ response.json,

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': self.now.isoformat(),

+ 'external_trigger': True,

+ 'start_date': self.now.isoformat(),

+ 'conf': {},

+ }

+ )

+

+ def test_should_response_404(self):

+ response = self.client.get("api/v1/dags/invalid-id/dagRuns/invalid-id")

+ assert response.status_code == 404

+ self.assertEqual(

+ {

+ 'detail': None,

+ 'status': 404,

+ 'title': 'DAGRun not found',

+ 'type': 'about:blank'

+ },

+ response.json

+ )

class TestGetDagRuns(TestDagRunEndpoint):

- @pytest.mark.skip(reason="Not implemented yet")

+

+ @provide_session

+ def test_should_response_200(self, session):

+ dagrun_model_1 = DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID_1',

+ run_type=DagRunType.MANUAL.value,

+ execution_date=self.now,

+ start_date=self.now,

+ external_trigger=True,

+ )

+ dagrun_model_2 = DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID_2',

+ run_type=DagRunType.MANUAL.value,

+ execution_date=self.now + timedelta(minutes=1),

+ start_date=self.now,

+ external_trigger=True,

+ )

+ session.add_all([dagrun_model_1, dagrun_model_2])

+ session.commit()

+ result = session.query(DagRun).all()

+ assert len(result) == 2

+ response = self.client.get("api/v1/dags/TEST_DAG_ID/dagRuns")

+ assert response.status_code == 200

+ self.assertEqual(

+ response.json,

+ {

+ "dag_runs": [

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID_1',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': self.now.isoformat(),

+ 'external_trigger': True,

+ 'start_date': self.now.isoformat(),

+ 'conf': {},

+ },

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID_2',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': (self.now + timedelta(minutes=1)).isoformat(),

+ 'external_trigger': True,

+ 'start_date': self.now.isoformat(),

+ 'conf': {},

+ }

+ ],

+ "total_entries": 2

+ }

+ )

+

+ @provide_session

+ def test_should_return_all_with_tilde_as_dag_id(self, session):

+ dagrun_model_1 = DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID_1',

+ run_type=DagRunType.MANUAL.value,

+ execution_date=self.now,

+ start_date=self.now,

+ external_trigger=True,

+ )

+ dagrun_model_2 = DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID_2',

+ run_type=DagRunType.MANUAL.value,

+ execution_date=self.now + timedelta(minutes=1),

+ start_date=self.now,

+ external_trigger=True,

+ )

+ session.add_all([dagrun_model_1, dagrun_model_2])

+ session.commit()

+ result = session.query(DagRun).all()

+ assert len(result) == 2

+ response = self.client.get("api/v1/dags/~/dagRuns")

+ assert response.status_code == 200

+ self.assertEqual(

+ response.json,

+ {

+ "dag_runs": [

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID_1',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': self.now.isoformat(),

+ 'external_trigger': True,

+ 'start_date': self.now.isoformat(),

+ 'conf': {},

+ },

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID_2',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': (self.now + timedelta(minutes=1)).isoformat(),

+ 'external_trigger': True,

+ 'start_date': self.now.isoformat(),

+ 'conf': {},

+ }

+ ],

+ "total_entries": 2

+ }

+ )

+

+ @provide_session

+ def test_handle_limit_in_query(self, session):

+ dagrun_models = [DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID' + str(i),

+ run_type=DagRunType.MANUAL.value,

+ execution_date=self.now + timedelta(minutes=i),

+ start_date=self.now,

+ external_trigger=True,

+ ) for i in range(100)]

+

+ session.add_all(dagrun_models)

+ session.commit()

+ result = session.query(DagRun).all()

+ assert len(result) == 100

+

+ response = self.client.get(

+ "api/v1/dags/TEST_DAG_ID/dagRuns?limit=1"

+ )

+ assert response.status_code == 200

+ self.assertEqual(response.json.get('total_entries'), 1)

+ self.assertEqual(

+ response.json,

+ {

+ "dag_runs": [

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID0',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': (self.now + timedelta(minutes=0)).isoformat(),

+ 'external_trigger': True,

+ 'start_date': self.now.isoformat(),

+ 'conf': {},

+ }

+ ],

+ "total_entries": 1

+ }

+ )

+

+ @provide_session

+ def test_handle_offset_in_query(self, session):

+ dagrun_models = [DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID' + str(i),

+ run_type=DagRunType.MANUAL.value,

+ execution_date=self.now + timedelta(minutes=i),

+ start_date=self.now,

+ external_trigger=True,

+ ) for i in range(4)]

+

+ session.add_all(dagrun_models)

+ session.commit()

+ result = session.query(DagRun).all()

+ assert len(result) == 4

+

+ response = self.client.get(

+ "api/v1/dags/TEST_DAG_ID/dagRuns?offset=1"

+ )

+ assert response.status_code == 200

+ self.assertEqual(response.json.get('total_entries'), 3)

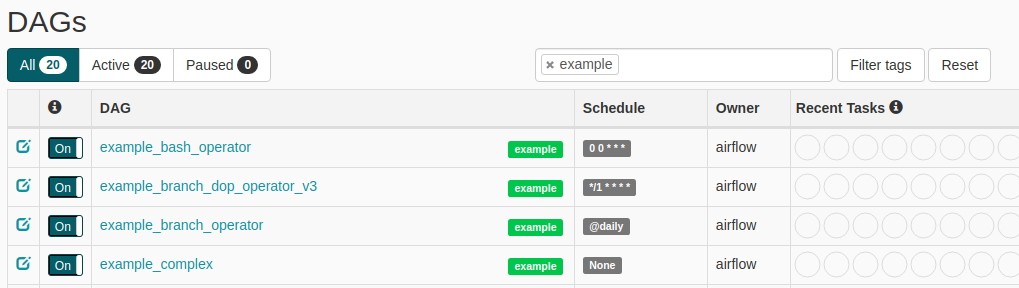

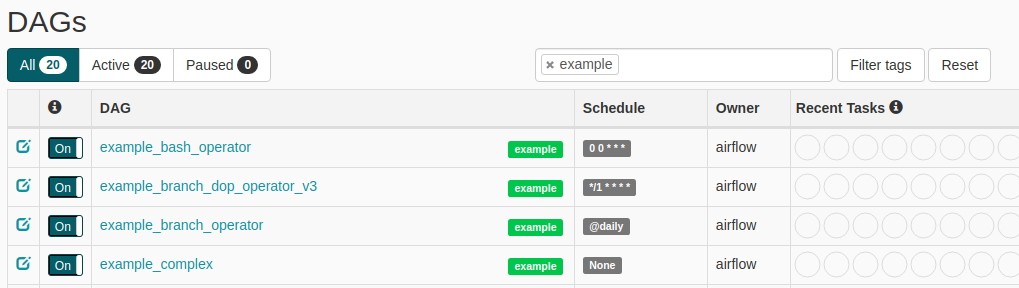

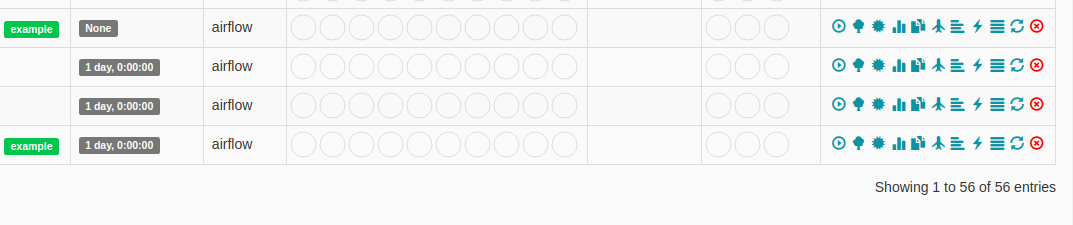

Review comment:

```suggestion

        self.assertEqual(response.json.get('total_entries'), 4)

```

This should always return the total number of items, not just the number on the current page.

<img width="497" alt="Screenshot 2020-06-08 at 11 44 19" src="https://user-images.githubusercontent.com/12058428/84016326-69d82300-a97d-11ea-952c-37c472d3e16f.png">
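A minimal sketch of the paging semantics described above, assuming an in-memory list stands in for the database query: `total_entries` counts the whole (filtered) collection, while `offset` and `limit` only slice the returned page. `paginate` is a hypothetical helper, not Airflow code, and the cap of 100 is assumed to match the endpoint's maximum page size.

```python
from typing import Any, Dict, List, Optional

MAX_PAGE_LIMIT = 100  # assumed maximum page size of the endpoint


def paginate(items: List[Any], offset: int = 0, limit: Optional[int] = None) -> Dict[str, Any]:
    """Return one page of `items` plus the size of the whole collection."""
    if limit is None:
        limit = MAX_PAGE_LIMIT
    limit = min(limit, MAX_PAGE_LIMIT)  # clamp oversized page requests
    page = items[offset:offset + limit]
    # total_entries is computed before offset/limit are applied
    return {"dag_runs": page, "total_entries": len(items)}
```

With four runs and `offset=1`, the page holds three runs but `total_entries` is still 4, which is what the suggested assertion expects.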


[GitHub] [airflow] ephraimbuddy commented on a change in pull request #9153: [WIP] add readonly endpoints for dagruns

Posted by GitBox <gi...@apache.org>.

ephraimbuddy commented on a change in pull request #9153:

URL: https://github.com/apache/airflow/pull/9153#discussion_r437881981

##########

File path: airflow/api_connexion/endpoints/dag_run_endpoint.py

##########

@@ -26,18 +34,100 @@ def delete_dag_run():
     raise NotImplementedError("Not implemented yet.")
-def get_dag_run():
+@provide_session
+def get_dag_run(dag_id, dag_run_id, session):
     """
     Get a DAG Run.
     """
-    raise NotImplementedError("Not implemented yet.")
+    query = session.query(DagRun)
+    query = query.filter(DagRun.dag_id == dag_id)
+    query = query.filter(DagRun.run_id == dag_run_id)
+    dag_run = query.one_or_none()
+    if dag_run is None:
+        raise NotFound("DAGRun not found")
+    return dagrun_schema.dump(dag_run)
-def get_dag_runs():
+@provide_session
+def get_dag_runs(dag_id, session):
     """
     Get all DAG Runs.
     """
-    raise NotImplementedError("Not implemented yet.")
+    offset = request.args.get(parameters.page_offset, 0)
+    limit = min(int(request.args.get(parameters.page_limit, 100)), 100)
+
+    start_date_gte = parse_datetime_in_query(

Review comment:

I will be using webargs. Connexion no longer validates the `date-time` format because of the license of one of its libraries.

https://github.com/zalando/connexion/issues/476
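Until a library such as webargs handles it, date-time query parameters have to be validated by hand. A minimal standard-library sketch of what a helper like `parse_datetime_in_query` (named in the diff above; its implementation is not shown) might do. `parse_datetime_param` and `BadRequest` are hypothetical stand-ins, and `datetime.fromisoformat` is swapped in for the `timezone.parse` call the endpoint uses.

```python
from datetime import datetime
from typing import Mapping, Optional


class BadRequest(Exception):
    """Hypothetical stand-in for an HTTP 400 error, not Airflow's exception class."""


def parse_datetime_param(args: Mapping[str, str], name: str) -> Optional[datetime]:
    """Parse an optional ISO 8601 query parameter, rejecting malformed or naive values."""
    raw = args.get(name)
    if raw is None:
        return None  # parameter not supplied, so no filter is applied
    try:
        value = datetime.fromisoformat(raw)
    except ValueError:
        raise BadRequest(f"{name}: {raw!r} is not an ISO 8601 date-time") from None
    if value.tzinfo is None:
        # require an explicit UTC offset so comparisons are unambiguous
        raise BadRequest(f"{name}: a UTC offset is required")
    return value
```

Raising a dedicated error lets the endpoint turn bad input into a 400 response instead of a 500.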


[GitHub] [airflow] mik-laj commented on a change in pull request #9153: add readonly endpoints for dagruns

Posted by GitBox <gi...@apache.org>.

mik-laj commented on a change in pull request #9153:

URL: https://github.com/apache/airflow/pull/9153#discussion_r439078448

##########

File path: tests/api_connexion/endpoints/test_dag_run_endpoint.py

##########

@@ -15,41 +15,335 @@

# specific language governing permissions and limitations

# under the License.

import unittest

+from datetime import timedelta

import pytest

+from parameterized import parameterized

+from airflow.models import DagRun

+from airflow.utils import timezone

+from airflow.utils.session import provide_session

+from airflow.utils.types import DagRunType

from airflow.www import app

+from tests.test_utils.db import clear_db_runs

class TestDagRunEndpoint(unittest.TestCase):

@classmethod

def setUpClass(cls) -> None:

super().setUpClass()

+

cls.app = app.create_app(testing=True) # type:ignore

def setUp(self) -> None:

self.client = self.app.test_client() # type:ignore

+ self.default_time = '2020-06-11T18:00:00+00:00'

+ self.default_time_2 = '2020-06-12T18:00:00+00:00'

+ clear_db_runs()

+

+ def tearDown(self) -> None:

+ clear_db_runs()

+

+ def _create_test_dag_run(self, state='running', extra_dag=False):

+ dagrun_model_1 = DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID_1',

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.default_time),

+ start_date=timezone.parse(self.default_time),

+ external_trigger=True,

+ state=state,

+ )

+ dagrun_model_2 = DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID_2',

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.default_time_2),

+ start_date=timezone.parse(self.default_time),

+ external_trigger=True,

+ )

+ if extra_dag:

+ dagrun_extra = [DagRun(

+ dag_id='TEST_DAG_ID_' + str(i),

+ run_id='TEST_DAG_RUN_ID_' + str(i),

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.default_time_2),

+ start_date=timezone.parse(self.default_time),

+ external_trigger=True,

+ ) for i in range(3, 5)]

+ return [dagrun_model_1, dagrun_model_2] + dagrun_extra

+ return [dagrun_model_1, dagrun_model_2]

class TestDeleteDagRun(TestDagRunEndpoint):

@pytest.mark.skip(reason="Not implemented yet")

def test_should_response_200(self):

- response = self.client.delete("/dags/TEST_DAG_ID}/dagRuns/TEST_DAG_RUN_ID")

+ response = self.client.delete("api/v1/dags/TEST_DAG_ID}/dagRuns/TEST_DAG_RUN_ID")

assert response.status_code == 204

class TestGetDagRun(TestDagRunEndpoint):

- @pytest.mark.skip(reason="Not implemented yet")

- def test_should_response_200(self):

- response = self.client.get("/dags/TEST_DAG_ID/dagRuns/TEST_DAG_RUN_ID")

+ @provide_session

+ def test_should_response_200(self, session):

+ dagrun_model = DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID',

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.default_time),

+ start_date=timezone.parse(self.default_time),

+ external_trigger=True,

+ )

+ session.add(dagrun_model)

+ session.commit()

+ result = session.query(DagRun).all()

+ assert len(result) == 1

+ response = self.client.get("api/v1/dags/TEST_DAG_ID/dagRuns/TEST_DAG_RUN_ID")

assert response.status_code == 200

+ self.assertEqual(

+ response.json,

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': self.default_time,

+ 'external_trigger': True,

+ 'start_date': self.default_time,

+ 'conf': {},

+ },

+ )

+

+ def test_should_response_404(self):

+ response = self.client.get("api/v1/dags/invalid-id/dagRuns/invalid-id")

+ assert response.status_code == 404

+ self.assertEqual(

+ {'detail': None, 'status': 404, 'title': 'DAGRun not found', 'type': 'about:blank'}, response.json

+ )

class TestGetDagRuns(TestDagRunEndpoint):

- @pytest.mark.skip(reason="Not implemented yet")

- def test_should_response_200(self):

- response = self.client.get("/dags/TEST_DAG_ID/dagRuns/")

+ @provide_session

+ def test_should_response_200(self, session):

+ dagruns = self._create_test_dag_run()

+ session.add_all(dagruns)

+ session.commit()

+ result = session.query(DagRun).all()

+ assert len(result) == 2

+ response = self.client.get("api/v1/dags/TEST_DAG_ID/dagRuns")

+ assert response.status_code == 200

+ self.assertEqual(

+ response.json,

+ {

+ "dag_runs": [

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID_1',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': self.default_time,

+ 'external_trigger': True,

+ 'start_date': self.default_time,

+ 'conf': {},

+ },

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID_2',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': self.default_time_2,

+ 'external_trigger': True,

+ 'start_date': self.default_time,

+ 'conf': {},

+ },

+ ],

+ "total_entries": 2,

+ },

+ )

+

+ @provide_session

+ def test_should_return_all_with_tilde_as_dag_id(self, session):

+ dagruns = self._create_test_dag_run(extra_dag=True)

+ expected_dag_run_ids = ['TEST_DAG_ID', 'TEST_DAG_ID',

+ "TEST_DAG_ID_3", "TEST_DAG_ID_4"]

+ session.add_all(dagruns)

+ session.commit()

+ result = session.query(DagRun).all()

+ assert len(result) == 4

+ response = self.client.get("api/v1/dags/~/dagRuns")

+ assert response.status_code == 200

+ dag_run_ids = [dag_run["dag_id"] for dag_run in response.json["dag_runs"]]

+ self.assertEqual(dag_run_ids, expected_dag_run_ids)

+

+

+class TestGetDagRunsPagination(TestDagRunEndpoint):

+ @parameterized.expand(

+ [

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=1", ["TEST_DAG_RUN_ID1"]),

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=2", ["TEST_DAG_RUN_ID1", "TEST_DAG_RUN_ID2"]),

+ (

+ "api/v1/dags/TEST_DAG_ID/dagRuns?offset=5",

+ [

+ "TEST_DAG_RUN_ID6",

+ "TEST_DAG_RUN_ID7",

+ "TEST_DAG_RUN_ID8",

+ "TEST_DAG_RUN_ID9",

+ "TEST_DAG_RUN_ID10",

+ ],

+ ),

+ (

+ "api/v1/dags/TEST_DAG_ID/dagRuns?offset=0",

+ [

+ "TEST_DAG_RUN_ID1",

+ "TEST_DAG_RUN_ID2",

+ "TEST_DAG_RUN_ID3",

+ "TEST_DAG_RUN_ID4",

+ "TEST_DAG_RUN_ID5",

+ "TEST_DAG_RUN_ID6",

+ "TEST_DAG_RUN_ID7",

+ "TEST_DAG_RUN_ID8",

+ "TEST_DAG_RUN_ID9",

+ "TEST_DAG_RUN_ID10",

+ ],

+ ),

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=1&offset=5", ["TEST_DAG_RUN_ID6"]),

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=1&offset=1", ["TEST_DAG_RUN_ID2"]),

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=2&offset=2", ["TEST_DAG_RUN_ID3", "TEST_DAG_RUN_ID4"],),

+ ]

+ )

+ @provide_session

+ def test_handle_limit_and_offset(self, url, expected_dag_run_ids, session):

+ dagrun_models = self._create_dag_runs(10)

+ session.add_all(dagrun_models)

+ session.commit()

+

+ response = self.client.get(url)

+ assert response.status_code == 200

+

+ self.assertEqual(response.json["total_entries"], 10)

+ dag_run_ids = [dag_run["dag_run_id"] for dag_run in response.json["dag_runs"]]

+ self.assertEqual(dag_run_ids, expected_dag_run_ids)

+

+ @provide_session

+ def test_should_respect_page_size_limit(self, session):

+ dagrun_models = self._create_dag_runs(200)

+ session.add_all(dagrun_models)

+ session.commit()

+

+ response = self.client.get("api/v1/dags/TEST_DAG_ID/dagRuns?limit=150")

+ assert response.status_code == 200

+

+ self.assertEqual(response.json["total_entries"], 200)

+ self.assertEqual(len(response.json["dag_runs"]), 100)

+

+ def _create_dag_runs(self, count):

+ return [

+ DagRun(

+ dag_id="TEST_DAG_ID",

+ run_id="TEST_DAG_RUN_ID" + str(i),

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.default_time) + timedelta(minutes=i),

+ start_date=timezone.parse(self.default_time),

+ external_trigger=True,

+ )

+ for i in range(1, count + 1)

+ ]

+

+

+class TestGetDagRunsPaginationFilters(TestDagRunEndpoint):

+ @parameterized.expand(

+ [

+ (

+ "api/v1/dags/TEST_DAG_ID/dagRuns?start_date_gte=2020-06-18T18:00:00+00:00",

+ ["TEST_START_EXEC_DAY_18", "TEST_START_EXEC_DAY_19"],

+ ),

+ (

+ "api/v1/dags/TEST_DAG_ID/dagRuns?start_date_lte=2020-06-11T18:00:00+00:00",

+ ["TEST_START_EXEC_DAY_10"],

Review comment:

I have a day off today, so I can't check it thoroughly, but this may help you:

https://github.com/apache/airflow/blob/master/TESTING.rst#tracking-sql-statements

If something can be done at the SQL level, then SQL can do it too.
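Traced SQL is easiest to reason about when filtering, counting and paging all happen inside the query. A minimal sketch using the standard-library `sqlite3` module; the `dag_run` table here is a simplified hypothetical schema, and timestamps are assumed to be UTC ISO 8601 strings in one fixed format so that string comparison matches chronological order (the real endpoint uses SQLAlchemy and timestamp columns).

```python
import sqlite3
from typing import List, Tuple


def make_demo_db() -> sqlite3.Connection:
    """Build a hypothetical in-memory table with five runs on consecutive days."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE dag_run (dag_id TEXT, run_id TEXT, execution_date TEXT, start_date TEXT)")
    rows = [
        ("TEST_DAG_ID", f"run_{day}", f"2020-06-{day}T18:00:00+00:00", f"2020-06-{day}T18:00:00+00:00")
        for day in range(11, 16)
    ]
    conn.executemany("INSERT INTO dag_run VALUES (?, ?, ?, ?)", rows)
    return conn


def query_dag_runs(conn: sqlite3.Connection, dag_id: str, start_date_lte: str,
                   offset: int, limit: int) -> Tuple[List[str], int]:
    """Filter, count and page in SQL instead of loading every row into Python."""
    where = "WHERE dag_id = ? AND start_date <= ?"  # constant text; values are bound
    params = (dag_id, start_date_lte)
    # COUNT(*) sees the filtered set before LIMIT/OFFSET are applied
    (total,) = conn.execute(f"SELECT COUNT(*) FROM dag_run {where}", params).fetchone()
    rows = conn.execute(
        f"SELECT run_id FROM dag_run {where} ORDER BY execution_date LIMIT ? OFFSET ?",
        params + (limit, offset),
    ).fetchall()
    return [run_id for (run_id,) in rows], total
```

For example, `query_dag_runs(make_demo_db(), "TEST_DAG_ID", "2020-06-13T18:00:00+00:00", 1, 1)` matches three runs and returns the second one, with a total of 3.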


[GitHub] [airflow] ephraimbuddy commented on a change in pull request #9153: [WIP] add readonly endpoints for dagruns

Posted by GitBox <gi...@apache.org>.

ephraimbuddy commented on a change in pull request #9153:

URL: https://github.com/apache/airflow/pull/9153#discussion_r438468200

##########

File path: airflow/api_connexion/endpoints/dag_run_endpoint.py

##########

@@ -26,18 +34,66 @@ def delete_dag_run():
     raise NotImplementedError("Not implemented yet.")
-def get_dag_run():
+@provide_session
+def get_dag_run(dag_id, dag_run_id, session):
     """
     Get a DAG Run.
     """
-    raise NotImplementedError("Not implemented yet.")
+    query = session.query(DagRun)
+    query = query.filter(DagRun.dag_id == dag_id)
+    query = query.filter(DagRun.run_id == dag_run_id)
+    dag_run = query.one_or_none()
+    if dag_run is None:
+        raise NotFound("DAGRun not found")
+    return dagrun_schema.dump(dag_run)
-def get_dag_runs():
+@provide_session
+def get_dag_runs(dag_id, session):
     """
     Get all DAG Runs.
     """
-    raise NotImplementedError("Not implemented yet.")
+    offset = request.args.get(parameters.page_offset, 0)
+    limit = min(int(request.args.get(parameters.page_limit, 100)), 100)
+    start_date_gte = request.args.get(parameters.filter_start_date_gte, None)
+    start_date_lte = request.args.get(parameters.filter_start_date_lte, None)
+    execution_date_gte = request.args.get(parameters.filter_execution_date_gte, None)
+    execution_date_lte = request.args.get(parameters.filter_execution_date_lte, None)
+    end_date_gte = request.args.get(parameters.filter_end_date_gte, None)
+    end_date_lte = request.args.get(parameters.filter_end_date_lte, None)
+    query = session.query(DagRun)
+
+    # This endpoint allows specifying ~ as the dag_id to retrieve DAG Runs for all DAGs.
+    if dag_id != '~':
+        query = query.filter(DagRun.dag_id == dag_id)
+
+    # filter start date
+    if start_date_gte:
+        query = query.filter(DagRun.start_date >= timezone.parse(start_date_gte))
+
+    if start_date_lte:
+        query = query.filter(DagRun.start_date <= timezone.parse(start_date_lte))
+
+    # filter execution date
+    if execution_date_gte:
+        query = query.filter(DagRun.execution_date >= timezone.parse(execution_date_gte))
+
+    if execution_date_lte:
+        query = query.filter(DagRun.execution_date <= timezone.parse(execution_date_lte))
+
+    # filter end date
+    if end_date_gte and not end_date_lte:

Review comment:

```suggestion

    if end_date_gte:

```


[GitHub] [airflow] mik-laj commented on a change in pull request #9153: [WIP] add readonly endpoints for dagruns

Posted by GitBox <gi...@apache.org>.

mik-laj commented on a change in pull request #9153:

URL: https://github.com/apache/airflow/pull/9153#discussion_r438012011

##########

File path: airflow/api_connexion/endpoints/dag_run_endpoint.py

##########

@@ -26,18 +34,100 @@ def delete_dag_run():
     raise NotImplementedError("Not implemented yet.")
-def get_dag_run():
+@provide_session
+def get_dag_run(dag_id, dag_run_id, session):
     """
     Get a DAG Run.
     """
-    raise NotImplementedError("Not implemented yet.")
+    query = session.query(DagRun)
+    query = query.filter(DagRun.dag_id == dag_id)
+    query = query.filter(DagRun.run_id == dag_run_id)
+    dag_run = query.one_or_none()
+    if dag_run is None:
+        raise NotFound("DAGRun not found")
+    return dagrun_schema.dump(dag_run)
-def get_dag_runs():
+@provide_session
+def get_dag_runs(dag_id, session):
     """
     Get all DAG Runs.
     """
-    raise NotImplementedError("Not implemented yet.")
+    offset = request.args.get(parameters.page_offset, 0)
+    limit = min(int(request.args.get(parameters.page_limit, 100)), 100)
+
+    start_date_gte = parse_datetime_in_query(

Review comment:

I'm glad you did the research. We can change it in a separate PR. It works now, which is great. In follow-up changes, we can refactor and remove the repetitive code fragments.
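One way the repetitive `*_gte` / `*_lte` blocks could be collapsed is a table-driven loop. A minimal sketch, assuming plain Python objects stand in for the query: `Run`, `RANGE_FILTERS` and `apply_range_filters` are hypothetical names. Because SQLAlchemy columns overload comparison operators, the same loop body should translate to `query = query.filter(op(getattr(DagRun, attr), bound))`, but that variant is not tested here.

```python
import operator
from dataclasses import dataclass
from datetime import datetime
from typing import Callable, Dict, List, Mapping, Optional, Tuple


@dataclass
class Run:
    run_id: str
    start_date: Optional[datetime]
    execution_date: Optional[datetime]
    end_date: Optional[datetime]


# Query parameter -> (attribute to compare, comparison operator)
RANGE_FILTERS: Dict[str, Tuple[str, Callable]] = {
    f"{field}_{suffix}": (field, op)
    for field in ("start_date", "execution_date", "end_date")
    for suffix, op in (("gte", operator.ge), ("lte", operator.le))
}


def apply_range_filters(runs: List[Run], args: Mapping[str, str]) -> List[Run]:
    """Apply every supplied range bound; each bound is independent of the others."""
    for param, (attr, op) in RANGE_FILTERS.items():
        raw = args.get(param)
        if raw is None:
            continue
        bound = datetime.fromisoformat(raw)
        # like SQL, a missing (NULL) value never satisfies a comparison
        runs = [r for r in runs if getattr(r, attr) is not None and op(getattr(r, attr), bound)]
    return runs
```

Treating each bound independently also covers the `end_date_gte` plus `end_date_lte` combination without a special case.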


[GitHub] [airflow] ephraimbuddy commented on a change in pull request #9153: add readonly endpoints for dagruns

Posted by GitBox <gi...@apache.org>.

ephraimbuddy commented on a change in pull request #9153:

URL: https://github.com/apache/airflow/pull/9153#discussion_r439341152

##########

File path: tests/api_connexion/endpoints/test_dag_run_endpoint.py

##########

@@ -15,41 +15,335 @@

# specific language governing permissions and limitations

# under the License.

import unittest

+from datetime import timedelta

import pytest

+from parameterized import parameterized

+from airflow.models import DagRun

+from airflow.utils import timezone

+from airflow.utils.session import provide_session

+from airflow.utils.types import DagRunType

from airflow.www import app

+from tests.test_utils.db import clear_db_runs

class TestDagRunEndpoint(unittest.TestCase):

@classmethod

def setUpClass(cls) -> None:

super().setUpClass()

+

cls.app = app.create_app(testing=True) # type:ignore

def setUp(self) -> None:

self.client = self.app.test_client() # type:ignore

+ self.default_time = '2020-06-11T18:00:00+00:00'

+ self.default_time_2 = '2020-06-12T18:00:00+00:00'

+ clear_db_runs()

+

+ def tearDown(self) -> None:

+ clear_db_runs()

+

+ def _create_test_dag_run(self, state='running', extra_dag=False):

+ dagrun_model_1 = DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID_1',

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.default_time),

+ start_date=timezone.parse(self.default_time),

+ external_trigger=True,

+ state=state,

+ )

+ dagrun_model_2 = DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID_2',

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.default_time_2),

+ start_date=timezone.parse(self.default_time),

+ external_trigger=True,

+ )

+ if extra_dag:

+ dagrun_extra = [DagRun(

+ dag_id='TEST_DAG_ID_' + str(i),

+ run_id='TEST_DAG_RUN_ID_' + str(i),

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.default_time_2),

+ start_date=timezone.parse(self.default_time),

+ external_trigger=True,

+ ) for i in range(3, 5)]

+ return [dagrun_model_1, dagrun_model_2] + dagrun_extra

+ return [dagrun_model_1, dagrun_model_2]

class TestDeleteDagRun(TestDagRunEndpoint):

@pytest.mark.skip(reason="Not implemented yet")

def test_should_response_200(self):

- response = self.client.delete("/dags/TEST_DAG_ID}/dagRuns/TEST_DAG_RUN_ID")

+ response = self.client.delete("api/v1/dags/TEST_DAG_ID}/dagRuns/TEST_DAG_RUN_ID")

assert response.status_code == 204

class TestGetDagRun(TestDagRunEndpoint):

- @pytest.mark.skip(reason="Not implemented yet")

- def test_should_response_200(self):

- response = self.client.get("/dags/TEST_DAG_ID/dagRuns/TEST_DAG_RUN_ID")

+ @provide_session

+ def test_should_response_200(self, session):

+ dagrun_model = DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID',

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.default_time),

+ start_date=timezone.parse(self.default_time),

+ external_trigger=True,

+ )

+ session.add(dagrun_model)

+ session.commit()

+ result = session.query(DagRun).all()

+ assert len(result) == 1

+ response = self.client.get("api/v1/dags/TEST_DAG_ID/dagRuns/TEST_DAG_RUN_ID")

assert response.status_code == 200

+ self.assertEqual(

+ response.json,

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': self.default_time,

+ 'external_trigger': True,

+ 'start_date': self.default_time,

+ 'conf': {},

+ },

+ )

+

+ def test_should_response_404(self):

+ response = self.client.get("api/v1/dags/invalid-id/dagRuns/invalid-id")

+ assert response.status_code == 404

+ self.assertEqual(

+ {'detail': None, 'status': 404, 'title': 'DAGRun not found', 'type': 'about:blank'}, response.json

+ )

class TestGetDagRuns(TestDagRunEndpoint):

- @pytest.mark.skip(reason="Not implemented yet")

- def test_should_response_200(self):

- response = self.client.get("/dags/TEST_DAG_ID/dagRuns/")

+ @provide_session

+ def test_should_response_200(self, session):

+ dagruns = self._create_test_dag_run()

+ session.add_all(dagruns)

+ session.commit()

+ result = session.query(DagRun).all()

+ assert len(result) == 2

+ response = self.client.get("api/v1/dags/TEST_DAG_ID/dagRuns")

+ assert response.status_code == 200

+ self.assertEqual(

+ response.json,

+ {

+ "dag_runs": [

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID_1',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': self.default_time,

+ 'external_trigger': True,

+ 'start_date': self.default_time,

+ 'conf': {},

+ },

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID_2',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': self.default_time_2,

+ 'external_trigger': True,

+ 'start_date': self.default_time,

+ 'conf': {},

+ },

+ ],

+ "total_entries": 2,

+ },

+ )

+

+ @provide_session

+ def test_should_return_all_with_tilde_as_dag_id(self, session):

+ dagruns = self._create_test_dag_run(extra_dag=True)

+ expected_dag_run_ids = ['TEST_DAG_ID', 'TEST_DAG_ID',

+ "TEST_DAG_ID_3", "TEST_DAG_ID_4"]

+ session.add_all(dagruns)

+ session.commit()

+ result = session.query(DagRun).all()

+ assert len(result) == 4

+ response = self.client.get("api/v1/dags/~/dagRuns")

+ assert response.status_code == 200

+ dag_run_ids = [dag_run["dag_id"] for dag_run in response.json["dag_runs"]]

+ self.assertEqual(dag_run_ids, expected_dag_run_ids)

+

+

+class TestGetDagRunsPagination(TestDagRunEndpoint):

+ @parameterized.expand(

+ [

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=1", ["TEST_DAG_RUN_ID1"]),

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=2", ["TEST_DAG_RUN_ID1", "TEST_DAG_RUN_ID2"]),

+ (

+ "api/v1/dags/TEST_DAG_ID/dagRuns?offset=5",

+ [

+ "TEST_DAG_RUN_ID6",

+ "TEST_DAG_RUN_ID7",

+ "TEST_DAG_RUN_ID8",

+ "TEST_DAG_RUN_ID9",

+ "TEST_DAG_RUN_ID10",

+ ],

+ ),

+ (

+ "api/v1/dags/TEST_DAG_ID/dagRuns?offset=0",

+ [

+ "TEST_DAG_RUN_ID1",

+ "TEST_DAG_RUN_ID2",

+ "TEST_DAG_RUN_ID3",

+ "TEST_DAG_RUN_ID4",

+ "TEST_DAG_RUN_ID5",

+ "TEST_DAG_RUN_ID6",

+ "TEST_DAG_RUN_ID7",

+ "TEST_DAG_RUN_ID8",

+ "TEST_DAG_RUN_ID9",

+ "TEST_DAG_RUN_ID10",

+ ],

+ ),

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=1&offset=5", ["TEST_DAG_RUN_ID6"]),

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=1&offset=1", ["TEST_DAG_RUN_ID2"]),

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=2&offset=2", ["TEST_DAG_RUN_ID3", "TEST_DAG_RUN_ID4"],),

+ ]

+ )

+ @provide_session

+ def test_handle_limit_and_offset(self, url, expected_dag_run_ids, session):

+ dagrun_models = self._create_dag_runs(10)

+ session.add_all(dagrun_models)

+ session.commit()

+

+ response = self.client.get(url)

+ assert response.status_code == 200

+

+ self.assertEqual(response.json["total_entries"], 10)

+ dag_run_ids = [dag_run["dag_run_id"] for dag_run in response.json["dag_runs"]]

+ self.assertEqual(dag_run_ids, expected_dag_run_ids)

+

+ @provide_session

+ def test_should_respect_page_size_limit(self, session):

+ dagrun_models = self._create_dag_runs(200)

+ session.add_all(dagrun_models)

+ session.commit()

+

+ response = self.client.get("api/v1/dags/TEST_DAG_ID/dagRuns?limit=150")

+ assert response.status_code == 200

+

+ self.assertEqual(response.json["total_entries"], 200)

+ self.assertEqual(len(response.json["dag_runs"]), 100)

+

+ def _create_dag_runs(self, count):

+ return [

+ DagRun(

+ dag_id="TEST_DAG_ID",

+ run_id="TEST_DAG_RUN_ID" + str(i),

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.default_time) + timedelta(minutes=i),

+ start_date=timezone.parse(self.default_time),

+ external_trigger=True,

+ )

+ for i in range(1, count + 1)

+ ]

+

+

+class TestGetDagRunsPaginationFilters(TestDagRunEndpoint):

+ @parameterized.expand(

+ [

+ (

+ "api/v1/dags/TEST_DAG_ID/dagRuns?start_date_gte=2020-06-18T18:00:00+00:00",

+ ["TEST_START_EXEC_DAY_18", "TEST_START_EXEC_DAY_19"],

+ ),

+ (

+ "api/v1/dags/TEST_DAG_ID/dagRuns?start_date_lte=2020-06-11T18:00:00+00:00",

+ ["TEST_START_EXEC_DAY_10"],

Review comment:

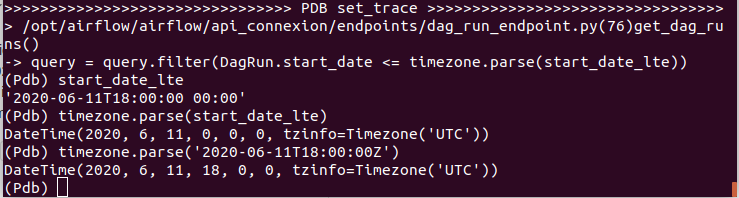

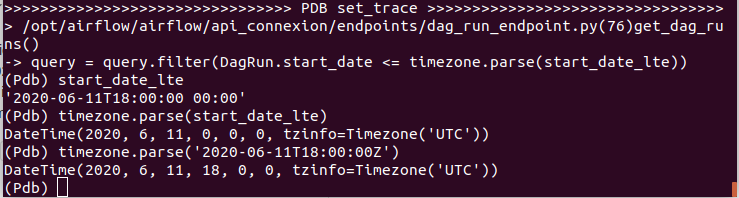

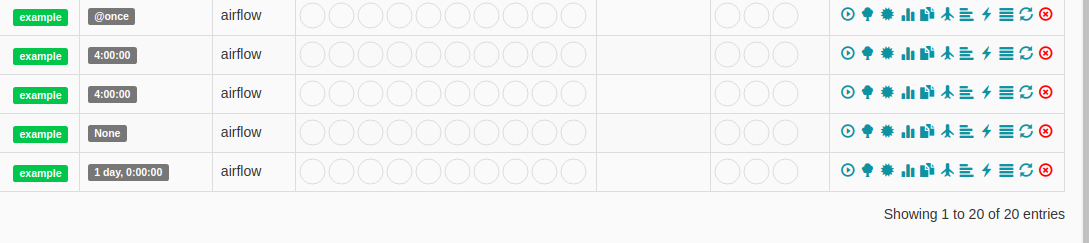

This is the command I used to investigate:

`pytest --trace-sql=num,sql,parameters --capture=no tests/api_connexion/endpoints/test_dag_run_endpoint.py -k test_date_filters_gte_and_lte_1_api_v1_dags_TEST_DAG_ID_dagRuns_start_date_lte_2020_06_11T18_00_00_00_00`

I set pdb debuger at this point in dag_run_endpoint:

` if start_date_lte:

import pdb; pdb.set_trace()

query = query.filter(DagRun.start_date <= timezone.parse(start_date_lte))`

Check image below:
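The test URLs pass `+00:00` unescaped in the query string. Form-style query decoding treats `+` as an encoded space, so this is one thing worth ruling out when a date filter such as `start_date_lte` behaves unexpectedly. The thread does not say whether this was the cause here. The sketch below only demonstrates the decoding with Python's standard `urllib.parse`, and assumes Werkzeug decodes query strings the same way.

```python
from urllib.parse import parse_qs, urlencode

RAW_QUERY = "start_date_lte=2020-06-11T18:00:00+00:00"


def decoded_value(query: str, key: str) -> str:
    """Return the first value for `key` after standard form-style decoding."""
    return parse_qs(query)[key][0]


# Unescaped: '+' is decoded as a space, so the UTC offset is mangled.
broken = decoded_value(RAW_QUERY, "start_date_lte")

# Escaped with urlencode: '+' becomes %2B and survives the round trip.
safe_query = urlencode({"start_date_lte": "2020-06-11T18:00:00+00:00"})
fixed = decoded_value(safe_query, "start_date_lte")

print(repr(broken))   # '2020-06-11T18:00:00 00:00'
print(safe_query)     # start_date_lte=2020-06-11T18%3A00%3A00%2B00%3A00
print(repr(fixed))    # '2020-06-11T18:00:00+00:00'
```

If this turns out to matter, writing the offset as `%2B00:00` in the test URL, or passing the parameters as a dict through the Flask test client's `query_string=` argument, should keep the `+` intact.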

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [airflow] mik-laj commented on a change in pull request #9153: [WIP] add readonly endpoints for dagruns

Posted by GitBox <gi...@apache.org>.

mik-laj commented on a change in pull request #9153:

URL: https://github.com/apache/airflow/pull/9153#discussion_r438010702

##########

File path: tests/api_connexion/endpoints/test_dag_run_endpoint.py

##########

@@ -15,41 +15,347 @@

# specific language governing permissions and limitations

# under the License.

import unittest

+from datetime import timedelta

import pytest

+from parameterized import parameterized

+from airflow.models import DagRun

+from airflow.utils import timezone

+from airflow.utils.session import provide_session

+from airflow.utils.types import DagRunType

from airflow.www import app

+from tests.test_utils.db import clear_db_runs

class TestDagRunEndpoint(unittest.TestCase):

@classmethod

def setUpClass(cls) -> None:

super().setUpClass()

+

cls.app = app.create_app(testing=True) # type:ignore

def setUp(self) -> None:

self.client = self.app.test_client() # type:ignore

+ self.now = '2020-06-11T18:00:00+00:00'

+ self.now2 = '2020-06-12T18:00:00+00:00'

+ clear_db_runs()

+

+ def tearDown(self) -> None:

+ clear_db_runs()

+

+ def _create_test_dag_run(self, state='running'):

+ dagrun_model_1 = DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID_1',

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.now),

+ start_date=timezone.parse(self.now),

+ external_trigger=True,

+ state=state,

+ )

+ dagrun_model_2 = DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID_2',

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.now2),

+ start_date=timezone.parse(self.now),

+ external_trigger=True,

+ )

+ return [dagrun_model_1, dagrun_model_2]

class TestDeleteDagRun(TestDagRunEndpoint):

@pytest.mark.skip(reason="Not implemented yet")

def test_should_response_200(self):

- response = self.client.delete("/dags/TEST_DAG_ID}/dagRuns/TEST_DAG_RUN_ID")

+ response = self.client.delete("api/v1/dags/TEST_DAG_ID}/dagRuns/TEST_DAG_RUN_ID")

assert response.status_code == 204

class TestGetDagRun(TestDagRunEndpoint):

- @pytest.mark.skip(reason="Not implemented yet")

- def test_should_response_200(self):

- response = self.client.get("/dags/TEST_DAG_ID/dagRuns/TEST_DAG_RUN_ID")

+ @provide_session

+ def test_should_response_200(self, session):

+ dagrun_model = DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID',

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.now),

+ start_date=timezone.parse(self.now),

+ external_trigger=True,

+ )

+ session.add(dagrun_model)

+ session.commit()

+ result = session.query(DagRun).all()

+ assert len(result) == 1

+ response = self.client.get("api/v1/dags/TEST_DAG_ID/dagRuns/TEST_DAG_RUN_ID")

assert response.status_code == 200

+ self.assertEqual(

+ response.json,

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': self.now,

+ 'external_trigger': True,

+ 'start_date': self.now,

+ 'conf': {},

+ },

+ )

+

+ def test_should_response_404(self):

+ response = self.client.get("api/v1/dags/invalid-id/dagRuns/invalid-id")

+ assert response.status_code == 404

+ self.assertEqual(

+ {'detail': None, 'status': 404, 'title': 'DAGRun not found', 'type': 'about:blank'}, response.json

+ )

class TestGetDagRuns(TestDagRunEndpoint):

- @pytest.mark.skip(reason="Not implemented yet")

- def test_should_response_200(self):

- response = self.client.get("/dags/TEST_DAG_ID/dagRuns/")

+ @provide_session

+ def test_should_response_200(self, session):

+ dagruns = self._create_test_dag_run()

+ session.add_all(dagruns)

+ session.commit()

+ result = session.query(DagRun).all()

+ assert len(result) == 2

+ response = self.client.get("api/v1/dags/TEST_DAG_ID/dagRuns")

+ assert response.status_code == 200

+ self.assertEqual(

+ response.json,

+ {

+ "dag_runs": [

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID_1',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': self.now,

+ 'external_trigger': True,

+ 'start_date': self.now,

+ 'conf': {},

+ },

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID_2',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': self.now2,

+ 'external_trigger': True,

+ 'start_date': self.now,

+ 'conf': {},

+ },

+ ],

+ "total_entries": 2,

+ },

+ )

+

+ @provide_session

+ def test_should_return_all_with_tilde_as_dag_id(self, session):

+ dagruns = self._create_test_dag_run()

+ session.add_all(dagruns)

+ session.commit()

+ result = session.query(DagRun).all()

+ assert len(result) == 2

+ assert result[0].dag_id == result[1].dag_id == 'TEST_DAG_ID'

+ response = self.client.get("api/v1/dags/~/dagRuns")

+ assert response.status_code == 200

+ self.assertEqual(

+ response.json,

+ {

+ "dag_runs": [

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID_1',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': self.now,

+ 'external_trigger': True,

+ 'start_date': self.now,

+ 'conf': {},

+ },

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID_2',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': self.now2,

+ 'external_trigger': True,

+ 'start_date': self.now,

+ 'conf': {},

+ },

+ ],

+ "total_entries": 2,

+ },

+ )

+

+

+class TestGetDagRunsPagination(TestDagRunEndpoint):

+ @parameterized.expand(

+ [

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=1", ["TEST_DAG_RUN_ID1"]),

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=2", ["TEST_DAG_RUN_ID1", "TEST_DAG_RUN_ID2"]),

+ (

+ "api/v1/dags/TEST_DAG_ID/dagRuns?offset=5",

+ [

+ "TEST_DAG_RUN_ID6",

+ "TEST_DAG_RUN_ID7",

+ "TEST_DAG_RUN_ID8",

+ "TEST_DAG_RUN_ID9",

+ "TEST_DAG_RUN_ID10",

+ ],

+ ),

+ (

+ "api/v1/dags/TEST_DAG_ID/dagRuns?offset=0",

+ [

+ "TEST_DAG_RUN_ID1",

+ "TEST_DAG_RUN_ID2",

+ "TEST_DAG_RUN_ID3",

+ "TEST_DAG_RUN_ID4",

+ "TEST_DAG_RUN_ID5",

+ "TEST_DAG_RUN_ID6",

+ "TEST_DAG_RUN_ID7",

+ "TEST_DAG_RUN_ID8",

+ "TEST_DAG_RUN_ID9",

+ "TEST_DAG_RUN_ID10",

+ ],

+ ),

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=1&offset=5", ["TEST_DAG_RUN_ID6"]),

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=1&offset=1", ["TEST_DAG_RUN_ID2"]),

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=2&offset=2", ["TEST_DAG_RUN_ID3", "TEST_DAG_RUN_ID4"],),

+ ]

+ )

+ @provide_session

+ def test_handle_limit_and_offset(self, url, expected_dag_run_ids, session):

+ dagrun_models = self._create_dag_runs(10)

+ session.add_all(dagrun_models)

+ session.commit()

+

+ response = self.client.get(url)

+ assert response.status_code == 200

+

+ self.assertEqual(response.json["total_entries"], 10)

+ dag_run_ids = [dag_run["dag_run_id"] for dag_run in response.json["dag_runs"]]

+ self.assertEqual(dag_run_ids, expected_dag_run_ids)

+

+ @provide_session

+ def test_should_respect_page_size_limit(self, session):

+ dagrun_models = self._create_dag_runs(200)

+ session.add_all(dagrun_models)

+ session.commit()

+

+ response = self.client.get("api/v1/dags/TEST_DAG_ID/dagRuns?limit=150")

+ assert response.status_code == 200

+

+ self.assertEqual(response.json["total_entries"], 200)

+ self.assertEqual(len(response.json["dag_runs"]), 100)

+

+ def _create_dag_runs(self, count):

+ return [

+ DagRun(

+ dag_id="TEST_DAG_ID",

+ run_id="TEST_DAG_RUN_ID" + str(i),

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.now) + timedelta(minutes=i),

+ start_date=timezone.parse(self.now),

+ external_trigger=True,

+ )

+ for i in range(1, count + 1)

+ ]

+

+

+class TestGetDagRunsPaginationFilters(TestDagRunEndpoint):

+ @parameterized.expand(

+ [

+ (

+ "api/v1/dags/TEST_DAG_ID/dagRuns?start_date_gte=2020-06-18T18:00:00+00:00",

+ ["TEST_DAG_RUN_ID8", "TEST_DAG_RUN_ID9"],

Review comment:

What do you think about checking the start/execution dates here instead? The identifiers don't describe these assertions well.
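A sketch of this suggestion in plain Python: instead of comparing run IDs, the assertion checks the returned `start_date` values against the bound in the URL. `make_runs`, `filter_start_date` and `assert_start_dates_within` are hypothetical helpers, and the fixture dates are assumptions. In the real test the dates would come from `response.json["dag_runs"]`.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Optional


def make_runs(first_day: datetime, count: int) -> List[dict]:
    """Hypothetical fixture: one run per day, shaped like the serialized dag_runs items."""
    return [
        {
            "dag_run_id": f"TEST_START_EXEC_DAY_{first_day.day + i}",
            "start_date": (first_day + timedelta(days=i)).isoformat(),
        }
        for i in range(count)
    ]


def filter_start_date(runs: List[dict], gte: Optional[datetime] = None,
                      lte: Optional[datetime] = None) -> List[dict]:
    """Hypothetical stand-in for the endpoint's start_date_gte / start_date_lte filters."""
    kept = []
    for run in runs:
        start = datetime.fromisoformat(run["start_date"])
        if gte is not None and start < gte:
            continue
        if lte is not None and start > lte:
            continue
        kept.append(run)
    return kept


def assert_start_dates_within(runs: List[dict], gte: Optional[datetime] = None,
                              lte: Optional[datetime] = None) -> List[datetime]:
    """Check the returned dates against the bounds, so a failure names the offending date."""
    starts = [datetime.fromisoformat(run["start_date"]) for run in runs]
    assert starts, "expected at least one run"
    if gte is not None:
        assert min(starts) >= gte, f"{min(starts)} is earlier than {gte}"
    if lte is not None:
        assert max(starts) <= lte, f"{max(starts)} is later than {lte}"
    return starts


runs = make_runs(datetime(2020, 6, 10, 18, tzinfo=timezone.utc), 10)
bound = datetime(2020, 6, 18, 18, tzinfo=timezone.utc)
returned = filter_start_date(runs, gte=bound)
print(assert_start_dates_within(returned, gte=bound))
```

A failing assertion then reports which date crossed the bound, which a mismatch between two lists of identifiers does not.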


[GitHub] [airflow] ephraimbuddy commented on a change in pull request #9153: add readonly endpoints for dagruns

Posted by GitBox <gi...@apache.org>.

ephraimbuddy commented on a change in pull request #9153:

URL: https://github.com/apache/airflow/pull/9153#discussion_r439122700

##########

File path: tests/api_connexion/endpoints/test_dag_run_endpoint.py

##########

@@ -15,41 +15,335 @@

# specific language governing permissions and limitations

# under the License.

import unittest

+from datetime import timedelta

import pytest

+from parameterized import parameterized

+from airflow.models import DagRun

+from airflow.utils import timezone

+from airflow.utils.session import provide_session

+from airflow.utils.types import DagRunType

from airflow.www import app

+from tests.test_utils.db import clear_db_runs

class TestDagRunEndpoint(unittest.TestCase):

@classmethod

def setUpClass(cls) -> None:

super().setUpClass()

+

cls.app = app.create_app(testing=True) # type:ignore

def setUp(self) -> None:

self.client = self.app.test_client() # type:ignore

+ self.default_time = '2020-06-11T18:00:00+00:00'

+ self.default_time_2 = '2020-06-12T18:00:00+00:00'

+ clear_db_runs()

+

+ def tearDown(self) -> None:

+ clear_db_runs()

+

+ def _create_test_dag_run(self, state='running', extra_dag=False):

+ dagrun_model_1 = DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID_1',

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.default_time),

+ start_date=timezone.parse(self.default_time),

+ external_trigger=True,

+ state=state,

+ )

+ dagrun_model_2 = DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID_2',

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.default_time_2),

+ start_date=timezone.parse(self.default_time),

+ external_trigger=True,

+ )

+ if extra_dag:

+ dagrun_extra = [DagRun(

+ dag_id='TEST_DAG_ID_' + str(i),

+ run_id='TEST_DAG_RUN_ID_' + str(i),

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.default_time_2),

+ start_date=timezone.parse(self.default_time),

+ external_trigger=True,

+ ) for i in range(3, 5)]

+ return [dagrun_model_1, dagrun_model_2] + dagrun_extra

+ return [dagrun_model_1, dagrun_model_2]

class TestDeleteDagRun(TestDagRunEndpoint):

@pytest.mark.skip(reason="Not implemented yet")

def test_should_response_200(self):

- response = self.client.delete("/dags/TEST_DAG_ID}/dagRuns/TEST_DAG_RUN_ID")

+ response = self.client.delete("api/v1/dags/TEST_DAG_ID}/dagRuns/TEST_DAG_RUN_ID")

assert response.status_code == 204

class TestGetDagRun(TestDagRunEndpoint):

- @pytest.mark.skip(reason="Not implemented yet")

- def test_should_response_200(self):

- response = self.client.get("/dags/TEST_DAG_ID/dagRuns/TEST_DAG_RUN_ID")

+ @provide_session

+ def test_should_response_200(self, session):

+ dagrun_model = DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID',

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.default_time),

+ start_date=timezone.parse(self.default_time),

+ external_trigger=True,

+ )

+ session.add(dagrun_model)

+ session.commit()

+ result = session.query(DagRun).all()

+ assert len(result) == 1

+ response = self.client.get("api/v1/dags/TEST_DAG_ID/dagRuns/TEST_DAG_RUN_ID")

assert response.status_code == 200

+ self.assertEqual(

+ response.json,

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': self.default_time,

+ 'external_trigger': True,

+ 'start_date': self.default_time,

+ 'conf': {},

+ },

+ )

+

+ def test_should_response_404(self):

+ response = self.client.get("api/v1/dags/invalid-id/dagRuns/invalid-id")

+ assert response.status_code == 404

+ self.assertEqual(

+ {'detail': None, 'status': 404, 'title': 'DAGRun not found', 'type': 'about:blank'}, response.json

+ )

class TestGetDagRuns(TestDagRunEndpoint):

- @pytest.mark.skip(reason="Not implemented yet")

- def test_should_response_200(self):

- response = self.client.get("/dags/TEST_DAG_ID/dagRuns/")

+ @provide_session

+ def test_should_response_200(self, session):

+ dagruns = self._create_test_dag_run()

+ session.add_all(dagruns)

+ session.commit()

+ result = session.query(DagRun).all()

+ assert len(result) == 2

+ response = self.client.get("api/v1/dags/TEST_DAG_ID/dagRuns")

+ assert response.status_code == 200

+ self.assertEqual(

+ response.json,

+ {

+ "dag_runs": [

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID_1',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': self.default_time,

+ 'external_trigger': True,

+ 'start_date': self.default_time,

+ 'conf': {},

+ },

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID_2',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': self.default_time_2,

+ 'external_trigger': True,

+ 'start_date': self.default_time,

+ 'conf': {},

+ },

+ ],

+ "total_entries": 2,

+ },

+ )

+

+ @provide_session

+ def test_should_return_all_with_tilde_as_dag_id(self, session):

+ dagruns = self._create_test_dag_run(extra_dag=True)

+ expected_dag_run_ids = ['TEST_DAG_ID', 'TEST_DAG_ID',

+ "TEST_DAG_ID_3", "TEST_DAG_ID_4"]

+ session.add_all(dagruns)

+ session.commit()

+ result = session.query(DagRun).all()

+ assert len(result) == 4

+ response = self.client.get("api/v1/dags/~/dagRuns")

+ assert response.status_code == 200

+ dag_run_ids = [dag_run["dag_id"] for dag_run in response.json["dag_runs"]]

+ self.assertEqual(dag_run_ids, expected_dag_run_ids)

+

+

+class TestGetDagRunsPagination(TestDagRunEndpoint):

+ @parameterized.expand(

+ [

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=1", ["TEST_DAG_RUN_ID1"]),

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=2", ["TEST_DAG_RUN_ID1", "TEST_DAG_RUN_ID2"]),

+ (

+ "api/v1/dags/TEST_DAG_ID/dagRuns?offset=5",

+ [

+ "TEST_DAG_RUN_ID6",

+ "TEST_DAG_RUN_ID7",

+ "TEST_DAG_RUN_ID8",

+ "TEST_DAG_RUN_ID9",

+ "TEST_DAG_RUN_ID10",

+ ],

+ ),

+ (

+ "api/v1/dags/TEST_DAG_ID/dagRuns?offset=0",

+ [

+ "TEST_DAG_RUN_ID1",

+ "TEST_DAG_RUN_ID2",

+ "TEST_DAG_RUN_ID3",

+ "TEST_DAG_RUN_ID4",

+ "TEST_DAG_RUN_ID5",

+ "TEST_DAG_RUN_ID6",

+ "TEST_DAG_RUN_ID7",

+ "TEST_DAG_RUN_ID8",

+ "TEST_DAG_RUN_ID9",

+ "TEST_DAG_RUN_ID10",

+ ],

+ ),

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=1&offset=5", ["TEST_DAG_RUN_ID6"]),

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=1&offset=1", ["TEST_DAG_RUN_ID2"]),

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=2&offset=2", ["TEST_DAG_RUN_ID3", "TEST_DAG_RUN_ID4"],),

+ ]

+ )

+ @provide_session

+ def test_handle_limit_and_offset(self, url, expected_dag_run_ids, session):

+ dagrun_models = self._create_dag_runs(10)

+ session.add_all(dagrun_models)

+ session.commit()

+

+ response = self.client.get(url)

+ assert response.status_code == 200

+

+ self.assertEqual(response.json["total_entries"], 10)

+ dag_run_ids = [dag_run["dag_run_id"] for dag_run in response.json["dag_runs"]]

+ self.assertEqual(dag_run_ids, expected_dag_run_ids)

+

+ @provide_session

+ def test_should_respect_page_size_limit(self, session):

+ dagrun_models = self._create_dag_runs(200)

+ session.add_all(dagrun_models)

+ session.commit()

+

+ response = self.client.get("api/v1/dags/TEST_DAG_ID/dagRuns?limit=150")

+ assert response.status_code == 200

+

+ self.assertEqual(response.json["total_entries"], 200)

+ self.assertEqual(len(response.json["dag_runs"]), 100)

+

+ def _create_dag_runs(self, count):

+ return [

+ DagRun(

+ dag_id="TEST_DAG_ID",

+ run_id="TEST_DAG_RUN_ID" + str(i),

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.default_time) + timedelta(minutes=i),

+ start_date=timezone.parse(self.default_time),

+ external_trigger=True,

+ )

+ for i in range(1, count + 1)

+ ]

+

+

+class TestGetDagRunsPaginationFilters(TestDagRunEndpoint):

+ @parameterized.expand(

+ [

+ (

+ "api/v1/dags/TEST_DAG_ID/dagRuns?start_date_gte=2020-06-18T18:00:00+00:00",

+ ["TEST_START_EXEC_DAY_18", "TEST_START_EXEC_DAY_19"],

+ ),

+ (

+ "api/v1/dags/TEST_DAG_ID/dagRuns?start_date_lte=2020-06-11T18:00:00+00:00",

+ ["TEST_START_EXEC_DAY_10"],

Review comment:

Here is the fix: https://github.com/apache/airflow/pull/9153/commits/7a72935b63bdb6c5622f8c00d4a3d2f996eaa2ae


[GitHub] [airflow] ephraimbuddy commented on a change in pull request #9153: [WIP] add readonly endpoints for dagruns

Posted by GitBox <gi...@apache.org>.

ephraimbuddy commented on a change in pull request #9153:

URL: https://github.com/apache/airflow/pull/9153#discussion_r438468343

##########

File path: airflow/api_connexion/endpoints/dag_run_endpoint.py

##########

@@ -26,18 +34,66 @@ def delete_dag_run():
     raise NotImplementedError("Not implemented yet.")
-def get_dag_run():
+@provide_session
+def get_dag_run(dag_id, dag_run_id, session):
     """
     Get a DAG Run.
     """
-    raise NotImplementedError("Not implemented yet.")
+    query = session.query(DagRun)
+    query = query.filter(DagRun.dag_id == dag_id)
+    query = query.filter(DagRun.run_id == dag_run_id)
+    dag_run = query.one_or_none()
+    if dag_run is None:
+        raise NotFound("DAGRun not found")
+    return dagrun_schema.dump(dag_run)
-def get_dag_runs():
+@provide_session
+def get_dag_runs(dag_id, session):
     """
     Get all DAG Runs.
     """
-    raise NotImplementedError("Not implemented yet.")
+    offset = request.args.get(parameters.page_offset, 0)
+    limit = min(int(request.args.get(parameters.page_limit, 100)), 100)
+    start_date_gte = request.args.get(parameters.filter_start_date_gte, None)
+    start_date_lte = request.args.get(parameters.filter_start_date_lte, None)
+    execution_date_gte = request.args.get(parameters.filter_execution_date_gte, None)
+    execution_date_lte = request.args.get(parameters.filter_execution_date_lte, None)
+    end_date_gte = request.args.get(parameters.filter_end_date_gte, None)
+    end_date_lte = request.args.get(parameters.filter_end_date_lte, None)
+    query = session.query(DagRun)
+
+    # This endpoint allows specifying ~ as the dag_id to retrieve DAG Runs for all DAGs.
+    if dag_id != '~':
+        query = query.filter(DagRun.dag_id == dag_id)
+
+    # filter start date
+    if start_date_gte:
+        query = query.filter(DagRun.start_date >= timezone.parse(start_date_gte))
+
+    if start_date_lte:
+        query = query.filter(DagRun.start_date <= timezone.parse(start_date_lte))
+
+    # filter execution date
+    if execution_date_gte:
+        query = query.filter(DagRun.execution_date >= timezone.parse(execution_date_gte))
+
+    if execution_date_lte:
+        query = query.filter(DagRun.execution_date <= timezone.parse(execution_date_lte))
+
+    # filter end date
+    if end_date_gte and not end_date_lte:
+        query = query.filter(DagRun.end_date >= timezone.parse(end_date_gte))
+
+    if end_date_lte and not end_date_gte:

Review comment:

```suggestion
    if end_date_lte:
```
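This suggestion matters when both `end_date_gte` and `end_date_lte` are supplied: with the `and not ...` guards neither branch runs, so the end-date range is silently ignored. The sketch below models that logic, plus the `~` wildcard and the capped `limit` from the hunk, over plain Python tuples. `Run`, `paginate` and the other helpers are hypothetical stand-ins for the SQLAlchemy query, not Airflow code. The sketch converts `offset` with `int()` explicitly, whereas the hunk passes the raw query-string value through.

```python
from datetime import datetime, timezone
from typing import List, NamedTuple, Optional, Tuple

MAX_PAGE_SIZE = 100  # get_dag_runs caps `limit` at 100


class Run(NamedTuple):
    """Hypothetical stand-in for a DagRun row."""
    dag_id: str
    run_id: str
    end_date: Optional[datetime]


def _gte(runs: List[Run], bound: datetime) -> List[Run]:
    # A NULL end_date never satisfies a SQL comparison, so it is dropped here too.
    return [r for r in runs if r.end_date is not None and r.end_date >= bound]


def _lte(runs: List[Run], bound: datetime) -> List[Run]:
    return [r for r in runs if r.end_date is not None and r.end_date <= bound]


def filter_end_date_original(runs, end_date_gte=None, end_date_lte=None):
    """Guards as written in the PR: with both bounds set, neither branch runs."""
    if end_date_gte and not end_date_lte:
        runs = _gte(runs, end_date_gte)
    if end_date_lte and not end_date_gte:
        runs = _lte(runs, end_date_lte)
    return runs


def filter_end_date_suggested(runs, end_date_gte=None, end_date_lte=None):
    """Independent guards: both bounds apply, giving a closed [gte, lte] range."""
    if end_date_gte:
        runs = _gte(runs, end_date_gte)
    if end_date_lte:
        runs = _lte(runs, end_date_lte)
    return runs


def paginate(runs, dag_id, offset=0, limit=MAX_PAGE_SIZE) -> Tuple[int, List[Run]]:
    """'~' matches every DAG; total_entries is counted before offset/limit are applied."""
    if dag_id != "~":
        runs = [r for r in runs if r.dag_id == dag_id]
    total_entries = len(runs)
    offset = int(offset)
    limit = min(int(limit), MAX_PAGE_SIZE)
    return total_entries, runs[offset:offset + limit]


def utc(day: int) -> datetime:
    return datetime(2020, 6, day, 18, tzinfo=timezone.utc)


runs = [Run("TEST_DAG_ID", f"RUN_{d}", utc(d)) for d in range(10, 20)]
old = filter_end_date_original(runs, end_date_gte=utc(12), end_date_lte=utc(14))
new = filter_end_date_suggested(runs, end_date_gte=utc(12), end_date_lte=utc(14))
print(len(old))                  # 10 (no filtering happened)
print([r.run_id for r in new])   # ['RUN_12', 'RUN_13', 'RUN_14']

many = [Run("TEST_DAG_ID", f"TEST_DAG_RUN_ID{i}", None) for i in range(1, 201)]
total, page = paginate(many, "TEST_DAG_ID", limit=150)
print(total, len(page))          # 200 100
```

The last two lines mirror what `test_should_respect_page_size_limit` expects: `total_entries` reports all 200 rows while the page holds at most 100.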


[GitHub] [airflow] ephraimbuddy commented on a change in pull request #9153: add readonly endpoints for dagruns

Posted by GitBox <gi...@apache.org>.

ephraimbuddy commented on a change in pull request #9153:

URL: https://github.com/apache/airflow/pull/9153#discussion_r439341152

##########

File path: tests/api_connexion/endpoints/test_dag_run_endpoint.py

##########

@@ -15,41 +15,335 @@

# specific language governing permissions and limitations

# under the License.

import unittest

+from datetime import timedelta

import pytest

+from parameterized import parameterized

+from airflow.models import DagRun

+from airflow.utils import timezone

+from airflow.utils.session import provide_session

+from airflow.utils.types import DagRunType

from airflow.www import app

+from tests.test_utils.db import clear_db_runs

class TestDagRunEndpoint(unittest.TestCase):

@classmethod

def setUpClass(cls) -> None:

super().setUpClass()

+

cls.app = app.create_app(testing=True) # type:ignore

def setUp(self) -> None:

self.client = self.app.test_client() # type:ignore

+ self.default_time = '2020-06-11T18:00:00+00:00'

+ self.default_time_2 = '2020-06-12T18:00:00+00:00'

+ clear_db_runs()

+

+ def tearDown(self) -> None:

+ clear_db_runs()

+

+ def _create_test_dag_run(self, state='running', extra_dag=False):

+ dagrun_model_1 = DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID_1',

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.default_time),

+ start_date=timezone.parse(self.default_time),

+ external_trigger=True,

+ state=state,

+ )

+ dagrun_model_2 = DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID_2',

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.default_time_2),

+ start_date=timezone.parse(self.default_time),

+ external_trigger=True,

+ )

+ if extra_dag:

+ dagrun_extra = [DagRun(

+ dag_id='TEST_DAG_ID_' + str(i),

+ run_id='TEST_DAG_RUN_ID_' + str(i),

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.default_time_2),

+ start_date=timezone.parse(self.default_time),

+ external_trigger=True,

+ ) for i in range(3, 5)]

+ return [dagrun_model_1, dagrun_model_2] + dagrun_extra

+ return [dagrun_model_1, dagrun_model_2]

class TestDeleteDagRun(TestDagRunEndpoint):

@pytest.mark.skip(reason="Not implemented yet")

def test_should_response_200(self):

- response = self.client.delete("/dags/TEST_DAG_ID}/dagRuns/TEST_DAG_RUN_ID")

+ response = self.client.delete("api/v1/dags/TEST_DAG_ID}/dagRuns/TEST_DAG_RUN_ID")

assert response.status_code == 204

class TestGetDagRun(TestDagRunEndpoint):

- @pytest.mark.skip(reason="Not implemented yet")

- def test_should_response_200(self):

- response = self.client.get("/dags/TEST_DAG_ID/dagRuns/TEST_DAG_RUN_ID")

+ @provide_session

+ def test_should_response_200(self, session):

+ dagrun_model = DagRun(

+ dag_id='TEST_DAG_ID',

+ run_id='TEST_DAG_RUN_ID',

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.default_time),

+ start_date=timezone.parse(self.default_time),

+ external_trigger=True,

+ )

+ session.add(dagrun_model)

+ session.commit()

+ result = session.query(DagRun).all()

+ assert len(result) == 1

+ response = self.client.get("api/v1/dags/TEST_DAG_ID/dagRuns/TEST_DAG_RUN_ID")

assert response.status_code == 200

+ self.assertEqual(

+ response.json,

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': self.default_time,

+ 'external_trigger': True,

+ 'start_date': self.default_time,

+ 'conf': {},

+ },

+ )

+

+ def test_should_response_404(self):

+ response = self.client.get("api/v1/dags/invalid-id/dagRuns/invalid-id")

+ assert response.status_code == 404

+ self.assertEqual(

+ {'detail': None, 'status': 404, 'title': 'DAGRun not found', 'type': 'about:blank'}, response.json

+ )

class TestGetDagRuns(TestDagRunEndpoint):

- @pytest.mark.skip(reason="Not implemented yet")

- def test_should_response_200(self):

- response = self.client.get("/dags/TEST_DAG_ID/dagRuns/")

+ @provide_session

+ def test_should_response_200(self, session):

+ dagruns = self._create_test_dag_run()

+ session.add_all(dagruns)

+ session.commit()

+ result = session.query(DagRun).all()

+ assert len(result) == 2

+ response = self.client.get("api/v1/dags/TEST_DAG_ID/dagRuns")

+ assert response.status_code == 200

+ self.assertEqual(

+ response.json,

+ {

+ "dag_runs": [

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID_1',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': self.default_time,

+ 'external_trigger': True,

+ 'start_date': self.default_time,

+ 'conf': {},

+ },

+ {

+ 'dag_id': 'TEST_DAG_ID',

+ 'dag_run_id': 'TEST_DAG_RUN_ID_2',

+ 'end_date': None,

+ 'state': 'running',

+ 'execution_date': self.default_time_2,

+ 'external_trigger': True,

+ 'start_date': self.default_time,

+ 'conf': {},

+ },

+ ],

+ "total_entries": 2,

+ },

+ )

+

+ @provide_session

+ def test_should_return_all_with_tilde_as_dag_id(self, session):

+ dagruns = self._create_test_dag_run(extra_dag=True)

+ expected_dag_run_ids = ['TEST_DAG_ID', 'TEST_DAG_ID',

+ "TEST_DAG_ID_3", "TEST_DAG_ID_4"]

+ session.add_all(dagruns)

+ session.commit()

+ result = session.query(DagRun).all()

+ assert len(result) == 4

+ response = self.client.get("api/v1/dags/~/dagRuns")

+ assert response.status_code == 200

+ dag_run_ids = [dag_run["dag_id"] for dag_run in response.json["dag_runs"]]

+ self.assertEqual(dag_run_ids, expected_dag_run_ids)

+

+

+class TestGetDagRunsPagination(TestDagRunEndpoint):

+ @parameterized.expand(

+ [

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=1", ["TEST_DAG_RUN_ID1"]),

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=2", ["TEST_DAG_RUN_ID1", "TEST_DAG_RUN_ID2"]),

+ (

+ "api/v1/dags/TEST_DAG_ID/dagRuns?offset=5",

+ [

+ "TEST_DAG_RUN_ID6",

+ "TEST_DAG_RUN_ID7",

+ "TEST_DAG_RUN_ID8",

+ "TEST_DAG_RUN_ID9",

+ "TEST_DAG_RUN_ID10",

+ ],

+ ),

+ (

+ "api/v1/dags/TEST_DAG_ID/dagRuns?offset=0",

+ [

+ "TEST_DAG_RUN_ID1",

+ "TEST_DAG_RUN_ID2",

+ "TEST_DAG_RUN_ID3",

+ "TEST_DAG_RUN_ID4",

+ "TEST_DAG_RUN_ID5",

+ "TEST_DAG_RUN_ID6",

+ "TEST_DAG_RUN_ID7",

+ "TEST_DAG_RUN_ID8",

+ "TEST_DAG_RUN_ID9",

+ "TEST_DAG_RUN_ID10",

+ ],

+ ),

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=1&offset=5", ["TEST_DAG_RUN_ID6"]),

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=1&offset=1", ["TEST_DAG_RUN_ID2"]),

+ ("api/v1/dags/TEST_DAG_ID/dagRuns?limit=2&offset=2", ["TEST_DAG_RUN_ID3", "TEST_DAG_RUN_ID4"],),

+ ]

+ )

+ @provide_session

+ def test_handle_limit_and_offset(self, url, expected_dag_run_ids, session):

+ dagrun_models = self._create_dag_runs(10)

+ session.add_all(dagrun_models)

+ session.commit()

+

+ response = self.client.get(url)

+ assert response.status_code == 200

+

+ self.assertEqual(response.json["total_entries"], 10)

+ dag_run_ids = [dag_run["dag_run_id"] for dag_run in response.json["dag_runs"]]

+ self.assertEqual(dag_run_ids, expected_dag_run_ids)

+

+ @provide_session

+ def test_should_respect_page_size_limit(self, session):

+ dagrun_models = self._create_dag_runs(200)

+ session.add_all(dagrun_models)

+ session.commit()

+

+ response = self.client.get("api/v1/dags/TEST_DAG_ID/dagRuns?limit=150")

+ assert response.status_code == 200

+

+ self.assertEqual(response.json["total_entries"], 200)

+ self.assertEqual(len(response.json["dag_runs"]), 100)

+

+ def _create_dag_runs(self, count):

+ return [

+ DagRun(

+ dag_id="TEST_DAG_ID",

+ run_id="TEST_DAG_RUN_ID" + str(i),

+ run_type=DagRunType.MANUAL.value,

+ execution_date=timezone.parse(self.default_time) + timedelta(minutes=i),

+ start_date=timezone.parse(self.default_time),

+ external_trigger=True,

+ )

+ for i in range(1, count + 1)

+ ]

+

+

+class TestGetDagRunsPaginationFilters(TestDagRunEndpoint):

+ @parameterized.expand(

+ [

+ (

+ "api/v1/dags/TEST_DAG_ID/dagRuns?start_date_gte=2020-06-18T18:00:00+00:00",

+ ["TEST_START_EXEC_DAY_18", "TEST_START_EXEC_DAY_19"],

+ ),

+ (

+ "api/v1/dags/TEST_DAG_ID/dagRuns?start_date_lte=2020-06-11T18:00:00+00:00",

+ ["TEST_START_EXEC_DAY_10"],

Review comment:

This is the command I used to investigate:

`pytest --trace-sql=num,sql,parameters --capture=no tests/api_connexion/endpoints/test_dag_run_endpoint.py -k test_date_filters_gte_and_lte_1_api_v1_dags_TEST_DAG_ID_dagRuns_start_date_lte_2020_06_11T18_00_00_00_00`

I set a pdb breakpoint at this point in dag_run_endpoint:

```python
if start_date_lte:
    import pdb; pdb.set_trace()
    query = query.filter(DagRun.start_date <= timezone.parse(start_date_lte))
```

Check the image below:


[GitHub] [airflow] mik-laj merged pull request #9153: add readonly endpoints for dagruns

Posted by GitBox <gi...@apache.org>.

mik-laj merged pull request #9153:

URL: https://github.com/apache/airflow/pull/9153


[GitHub] [airflow] ephraimbuddy commented on a change in pull request #9153: [WIP] add readonly endpoints for dagruns

Posted by GitBox <gi...@apache.org>.

ephraimbuddy commented on a change in pull request #9153:

URL: https://github.com/apache/airflow/pull/9153#discussion_r438048590

##########

File path: tests/api_connexion/endpoints/test_dag_run_endpoint.py

##########

```diff
@@ -15,41 +15,347 @@
 # specific language governing permissions and limitations
 # under the License.
 import unittest
+from datetime import timedelta
 import pytest
+from parameterized import parameterized
+from airflow.models import DagRun
+from airflow.utils import timezone
+from airflow.utils.session import provide_session
+from airflow.utils.types import DagRunType
 from airflow.www import app
+from tests.test_utils.db import clear_db_runs
 class TestDagRunEndpoint(unittest.TestCase):
     @classmethod
     def setUpClass(cls) -> None:
         super().setUpClass()
+
         cls.app = app.create_app(testing=True) # type:ignore
     def setUp(self) -> None:
         self.client = self.app.test_client() # type:ignore
+        self.now = '2020-06-11T18:12:50.773601+00:00'
+        self.now2 = '2020-06-12T18:12:50.773601+00:00'
+        clear_db_runs()
+
+    def tearDown(self) -> None:
+        clear_db_runs()
+
+    def _create_test_dag_run(self, state='running'):
+        dagrun_model_1 = DagRun(
+            dag_id='TEST_DAG_ID',
+            run_id='TEST_DAG_RUN_ID_1',
+            run_type=DagRunType.MANUAL.value,
+            execution_date=timezone.parse(self.now),
+            start_date=timezone.parse(self.now),
+            external_trigger=True,
+            state=state
+        )
+        dagrun_model_2 = DagRun(
+            dag_id='TEST_DAG_ID',
+            run_id='TEST_DAG_RUN_ID_2',
+            run_type=DagRunType.MANUAL.value,
+            execution_date=timezone.parse(self.now2),
+            start_date=timezone.parse(self.now),
+            external_trigger=True,
+        )
+        return [dagrun_model_1, dagrun_model_2]
 class TestDeleteDagRun(TestDagRunEndpoint):
     @pytest.mark.skip(reason="Not implemented yet")
     def test_should_response_200(self):
-        response = self.client.delete("/dags/TEST_DAG_ID}/dagRuns/TEST_DAG_RUN_ID")
+        response = self.client.delete("api/v1/dags/TEST_DAG_ID/dagRuns/TEST_DAG_RUN_ID")
         assert response.status_code == 204
 class TestGetDagRun(TestDagRunEndpoint):
-    @pytest.mark.skip(reason="Not implemented yet")
-    def test_should_response_200(self):
-        response = self.client.get("/dags/TEST_DAG_ID/dagRuns/TEST_DAG_RUN_ID")
+
+    @provide_session
+    def test_should_response_200(self, session):
+        dagrun_model = DagRun(
+            dag_id='TEST_DAG_ID',
+            run_id='TEST_DAG_RUN_ID',
+            run_type=DagRunType.MANUAL.value,
+            execution_date=timezone.parse(self.now),
+            start_date=timezone.parse(self.now),
+            external_trigger=True,
+        )
+        session.add(dagrun_model)
+        session.commit()
+        result = session.query(DagRun).all()
+        assert len(result) == 1
+        response = self.client.get("api/v1/dags/TEST_DAG_ID/dagRuns/TEST_DAG_RUN_ID")
         assert response.status_code == 200
+        self.assertEqual(
+            response.json,
+            {
+                'dag_id': 'TEST_DAG_ID',
+                'dag_run_id': 'TEST_DAG_RUN_ID',
+                'end_date': None,
+                'state': 'running',
+                'execution_date': self.now,
+                'external_trigger': True,
+                'start_date': self.now,
+                'conf': {},
+            }
+        )
+
+    def test_should_response_404(self):
+        response = self.client.get("api/v1/dags/invalid-id/dagRuns/invalid-id")
+        assert response.status_code == 404
+        self.assertEqual(
+            {
+                'detail': None,
+                'status': 404,
+                'title': 'DAGRun not found',
+                'type': 'about:blank'
+            },
+            response.json
+        )
 class TestGetDagRuns(TestDagRunEndpoint):
-    @pytest.mark.skip(reason="Not implemented yet")
-    def test_should_response_200(self):
-        response = self.client.get("/dags/TEST_DAG_ID/dagRuns/")
+
+    @provide_session
+    def test_should_response_200(self, session):
+        dagruns = self._create_test_dag_run()
+        session.add_all(dagruns)
+        session.commit()
+        result = session.query(DagRun).all()
+        assert len(result) == 2
+        response = self.client.get("api/v1/dags/TEST_DAG_ID/dagRuns")
+        assert response.status_code == 200
+        self.assertEqual(
+            response.json,
+            {
+                "dag_runs": [
+                    {
+                        'dag_id': 'TEST_DAG_ID',
+                        'dag_run_id': 'TEST_DAG_RUN_ID_1',
+                        'end_date': None,
+                        'state': 'running',
+                        'execution_date': self.now,
+                        'external_trigger': True,
+                        'start_date': self.now,
+                        'conf': {},
+                    },
+                    {
+                        'dag_id': 'TEST_DAG_ID',
+                        'dag_run_id': 'TEST_DAG_RUN_ID_2',
+                        'end_date': None,
+                        'state': 'running',
+                        'execution_date': self.now2,
+                        'external_trigger': True,
+                        'start_date': self.now,
+                        'conf': {},
+                    }
+                ],
+                "total_entries": 2
+            }
+        )
+
+    @provide_session
+    def test_should_return_all_with_tilde_as_dag_id(self, session):
+        dagruns = self._create_test_dag_run()
+        session.add_all(dagruns)
```

Review comment:

Check this https://github.com/apache/airflow/pull/9153/commits/c41c7713ef628ab30736ebeaea40fc0a4d4ba310
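The tests in this hunk fix the response shapes of the read-only endpoints. The sketch below reproduces those shapes without a framework or database. It uses a plain dataclass in place of the `DagRun` model. The names `DagRunRecord`, `serialize_dag_run`, `list_dag_runs` and `dag_run_not_found` are hypothetical and are not Airflow's API. The sketch serializes a run the way `test_should_response_200` expects. Going by the name `test_should_return_all_with_tilde_as_dag_id`, it treats `~` as "all DAGs", which is an assumption. It wraps lists in the `dag_runs`/`total_entries` envelope and builds the 404 body from `test_should_response_404`:

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Any, Dict, List, Optional


@dataclass
class DagRunRecord:
    """Hypothetical stand-in for the DagRun model (only the serialized fields)."""

    dag_id: str
    run_id: str
    execution_date: datetime
    start_date: Optional[datetime] = None
    end_date: Optional[datetime] = None
    state: str = "running"
    external_trigger: bool = True
    conf: Optional[Dict[str, Any]] = None


def _iso(value: Optional[datetime]) -> Optional[str]:
    # Aware UTC datetimes render as e.g. '2020-06-11T18:12:50.773601+00:00'.
    return value.isoformat() if value is not None else None


def serialize_dag_run(run: DagRunRecord) -> Dict[str, Any]:
    # Keys mirror the JSON expected in TestGetDagRun.test_should_response_200.
    return {
        "dag_id": run.dag_id,
        "dag_run_id": run.run_id,
        "end_date": _iso(run.end_date),
        "state": run.state,
        "execution_date": _iso(run.execution_date),
        "external_trigger": run.external_trigger,
        "start_date": _iso(run.start_date),
        "conf": run.conf or {},  # a missing conf is returned as an empty object
    }


def list_dag_runs(runs: List[DagRunRecord], dag_id: str) -> Dict[str, Any]:
    # Assumption: "~" acts as a wildcard meaning "runs of every DAG".
    selected = runs if dag_id == "~" else [r for r in runs if r.dag_id == dag_id]
    return {
        "dag_runs": [serialize_dag_run(r) for r in selected],
        # No pagination in this sketch, so the count equals the runs returned.
        "total_entries": len(selected),
    }


def dag_run_not_found() -> Dict[str, Any]:
    # Problem-details body matching TestGetDagRun.test_should_response_404.
    return {"detail": None, "status": 404, "title": "DAGRun not found", "type": "about:blank"}
```

The endpoints under review do their filtering in the database query. This sketch shows only the shape of the data that the tests assert on.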


[GitHub] [airflow] ephraimbuddy commented on a change in pull request #9153: [WIP] add readonly endpoints for dagruns

Posted by GitBox <gi...@apache.org>.

ephraimbuddy commented on a change in pull request #9153:

URL: https://github.com/apache/airflow/pull/9153#discussion_r438048272

##########

File path: tests/api_connexion/endpoints/test_dag_run_endpoint.py

##########

@@ -15,41 +15,347 @@

# specific language governing permissions and limitations

# under the License.

import unittest

+from datetime import timedelta

import pytest

+from parameterized import parameterized

+from airflow.models import DagRun

+from airflow.utils import timezone

+from airflow.utils.session import provide_session

+from airflow.utils.types import DagRunType

from airflow.www import app

+from tests.test_utils.db import clear_db_runs

class TestDagRunEndpoint(unittest.TestCase):

@classmethod

def setUpClass(cls) -> None:

super().setUpClass()

+

cls.app = app.create_app(testing=True) # type:ignore

def setUp(self) -> None: