Posted to dev@zeppelin.apache.org by GitBox <gi...@apache.org> on 2021/06/22 14:10:50 UTC

[GitHub] [zeppelin] zjffdu opened a new pull request #4147: [ZEPPELIN-5417] Unable to set conda env in pyspark

zjffdu opened a new pull request #4147:

URL: https://github.com/apache/zeppelin/pull/4147

### What is this PR for?

This PR makes two changes:

* Use `SparkConf` instead of `properties`, because the Spark configuration may be set on the Spark side (e.g. in spark-defaults.conf) rather than on the Zeppelin side. `properties` holds only the Zeppelin-side configuration, while `SparkConf` is the complete Spark configuration.

* Improve the logic that detects whether IPython is available.
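
To illustrate the first point, here is a minimal sketch, not Zeppelin's actual code. It uses plain maps instead of a real `SparkConf`, and the class `EffectiveConfSketch`, the method `merge`, and the example path are all hypothetical. It also assumes that Zeppelin-side `spark.*` properties override values from spark-defaults.conf. The point is only that a key set on the Spark side is invisible when looking at the Zeppelin-side `properties` alone.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Properties;

/** Hypothetical illustration; not part of Zeppelin or Spark. */
final class EffectiveConfSketch {

  /**
   * Combines Spark-side defaults with Zeppelin-side properties.
   * Assumption: Zeppelin-side "spark.*" entries win over Spark-side defaults.
   */
  static Map<String, String> merge(Map<String, String> sparkDefaults, Properties zeppelinProps) {
    Map<String, String> merged = new HashMap<>(sparkDefaults);
    for (String name : zeppelinProps.stringPropertyNames()) {
      if (name.startsWith("spark.")) {
        merged.put(name, zeppelinProps.getProperty(name));
      }
    }
    return merged;
  }

  public static void main(String[] args) {
    // Value set only on the Spark side (stand-in for spark-defaults.conf).
    Map<String, String> sparkDefaults = new HashMap<>();
    sparkDefaults.put("spark.pyspark.python", "/opt/conda/envs/demo/bin/python");

    // Zeppelin-side properties do not mention the key at all.
    Properties zeppelinProps = new Properties();

    Map<String, String> effective = merge(sparkDefaults, zeppelinProps);
    System.out.println("Zeppelin side only: " + zeppelinProps.getProperty("spark.pyspark.python"));
    System.out.println("Complete config:    " + effective.get("spark.pyspark.python"));
  }
}
```

Reading the key from the Zeppelin-side properties alone yields `null` here, while the merged view returns the configured interpreter path.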

### What type of PR is it?

[Bug Fix | Improvement]

### Todos

* [ ] - Task

### What is the Jira issue?

* https://issues.apache.org/jira/browse/ZEPPELIN-5417

### How should this be tested?

* Manually tested

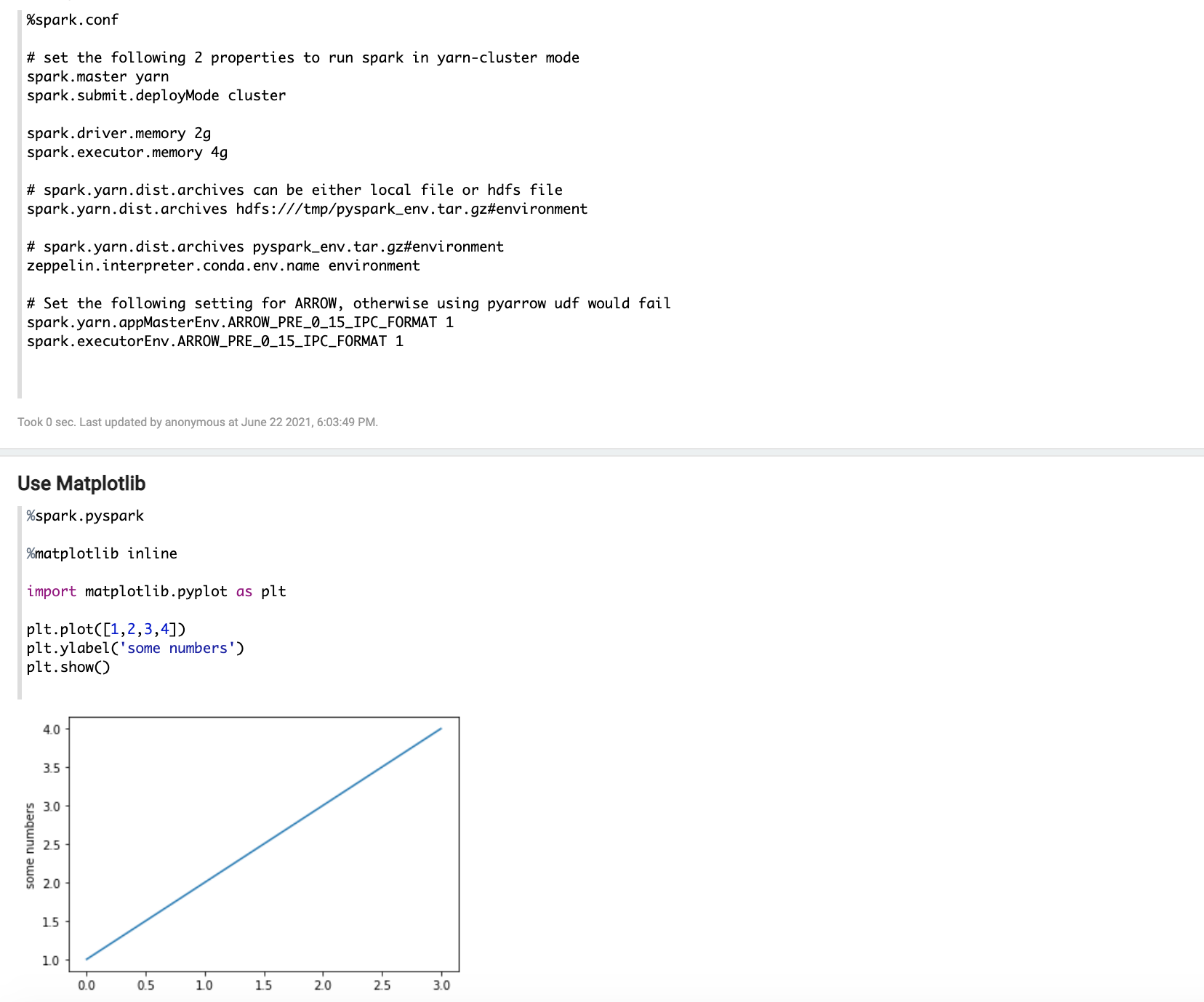

### Screenshots (if appropriate)

### Questions:

* Do the license files need an update? No

* Are there breaking changes for older versions? No

* Does this need documentation? No

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [zeppelin] zjffdu merged pull request #4147: [ZEPPELIN-5417] Unable to set conda env in pyspark

Posted by GitBox <gi...@apache.org>.

zjffdu merged pull request #4147:

URL: https://github.com/apache/zeppelin/pull/4147


[GitHub] [zeppelin] zjffdu commented on pull request #4147: [ZEPPELIN-5417] Unable to set conda env in pyspark

Posted by GitBox <gi...@apache.org>.

zjffdu commented on pull request #4147:

URL: https://github.com/apache/zeppelin/pull/4147#issuecomment-871908444

Will merge if there are no more comments.


[GitHub] [zeppelin] Reamer commented on pull request #4147: [ZEPPELIN-5417] Unable to set conda env in pyspark

Posted by GitBox <gi...@apache.org>.

Reamer commented on pull request #4147:

URL: https://github.com/apache/zeppelin/pull/4147#issuecomment-871960458

Two CI jobs are failing. Please rebase your branch onto the current master, which should fix the `jdbcIntegrationTest`.


[GitHub] [zeppelin] zjffdu commented on a change in pull request #4147: [ZEPPELIN-5417] Unable to set conda env in pyspark

Posted by GitBox <gi...@apache.org>.

zjffdu commented on a change in pull request #4147:

URL: https://github.com/apache/zeppelin/pull/4147#discussion_r660261687

##########

File path: spark/interpreter/src/main/java/org/apache/zeppelin/spark/PySparkInterpreter.java

##########

@@ -171,11 +171,12 @@ public void setInterpreterContextInPython() {
   // spark.pyspark.driver.python > spark.pyspark.python > PYSPARK_DRIVER_PYTHON > PYSPARK_PYTHON
   @Override
   protected String getPythonExec() {
-    if (!StringUtils.isBlank(getProperty("spark.pyspark.driver.python", ""))) {
-      return properties.getProperty("spark.pyspark.driver.python");
+    SparkConf sparkConf = getSparkConf();
+    if (StringUtils.isNotBlank(sparkConf.get("spark.pyspark.driver.python", ""))) {
+      return sparkConf.get("spark.pyspark.driver.python");
     }
-    if (!StringUtils.isBlank(getProperty("spark.pyspark.python", ""))) {
-      return properties.getProperty("spark.pyspark.python");
+    if (StringUtils.isNotBlank(sparkConf.get("spark.pyspark.python", ""))) {
+      return sparkConf.get("spark.pyspark.python");
     }
     if (System.getenv("PYSPARK_PYTHON") != null) {
       return System.getenv("PYSPARK_PYTHON");

Review comment:

Thanks for the careful review. This is a bug, and it is fixed now.


[GitHub] [zeppelin] cuspymd commented on a change in pull request #4147: [ZEPPELIN-5417] Unable to set conda env in pyspark

Posted by GitBox <gi...@apache.org>.

cuspymd commented on a change in pull request #4147:

URL: https://github.com/apache/zeppelin/pull/4147#discussion_r659469629

##########

File path: spark/interpreter/src/main/java/org/apache/zeppelin/spark/PySparkInterpreter.java

##########

@@ -171,11 +171,12 @@ public void setInterpreterContextInPython() {
   // spark.pyspark.driver.python > spark.pyspark.python > PYSPARK_DRIVER_PYTHON > PYSPARK_PYTHON
   @Override
   protected String getPythonExec() {
-    if (!StringUtils.isBlank(getProperty("spark.pyspark.driver.python", ""))) {
-      return properties.getProperty("spark.pyspark.driver.python");
+    SparkConf sparkConf = getSparkConf();
+    if (StringUtils.isNotBlank(sparkConf.get("spark.pyspark.driver.python", ""))) {
+      return sparkConf.get("spark.pyspark.driver.python");
     }
-    if (!StringUtils.isBlank(getProperty("spark.pyspark.python", ""))) {
-      return properties.getProperty("spark.pyspark.python");
+    if (StringUtils.isNotBlank(sparkConf.get("spark.pyspark.python", ""))) {
+      return sparkConf.get("spark.pyspark.python");
     }
     if (System.getenv("PYSPARK_PYTHON") != null) {
       return System.getenv("PYSPARK_PYTHON");

Review comment:

On lines 175~179, "spark.pyspark.driver.python" takes precedence over "spark.pyspark.python".

But on lines 181~185, "PYSPARK_PYTHON" takes precedence over "PYSPARK_DRIVER_PYTHON".

Is this intentional?
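
For reference, the order stated in the code comment of the hunk above (`spark.pyspark.driver.python > spark.pyspark.python > PYSPARK_DRIVER_PYTHON > PYSPARK_PYTHON`) could be sketched as follows. This is only an illustration under stated assumptions, not the code in the PR: `PythonExecResolver` and `resolve` are hypothetical names, plain maps stand in for `SparkConf` and the process environment, and the final `"python"` fallback is an assumption.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical helper; not part of Zeppelin. */
final class PythonExecResolver {

  /** Returns the first match in the order given by the code comment in the diff. */
  static String resolve(Map<String, String> sparkConf, Map<String, String> env) {
    // Spark configuration keys: the driver-specific key is checked first.
    String[] confKeys = {"spark.pyspark.driver.python", "spark.pyspark.python"};
    for (String key : confKeys) {
      String value = sparkConf.get(key);
      if (value != null && !value.trim().isEmpty()) {
        return value;
      }
    }
    // Environment variables: the driver-specific variable is checked first.
    // Like the diff, this only checks for null, not for blank values.
    String[] envKeys = {"PYSPARK_DRIVER_PYTHON", "PYSPARK_PYTHON"};
    for (String key : envKeys) {
      String value = env.get(key);
      if (value != null) {
        return value;
      }
    }
    return "python"; // assumed fallback, not shown in the diff
  }

  public static void main(String[] args) {
    Map<String, String> conf = new HashMap<>();
    Map<String, String> env = new HashMap<>();
    env.put("PYSPARK_PYTHON", "/usr/bin/python3");
    env.put("PYSPARK_DRIVER_PYTHON", "/opt/conda/envs/demo/bin/python");
    System.out.println(resolve(conf, env));
  }
}
```

With both environment variables set and no Spark configuration keys, this sketch returns the `PYSPARK_DRIVER_PYTHON` value, which matches the documented order rather than the order in the quoted lines.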


[GitHub] [zeppelin] zjffdu commented on pull request #4147: [ZEPPELIN-5417] Unable to set conda env in pyspark

Posted by GitBox <gi...@apache.org>.

zjffdu commented on pull request #4147:

URL: https://github.com/apache/zeppelin/pull/4147#issuecomment-873392465

CI passes except for the Selenium test.
