You are viewing a plain text version of this content. The canonical link for it is here.

Posted to jira@kafka.apache.org by GitBox <gi...@apache.org> on 2021/03/16 02:34:43 UTC

[GitHub] [kafka] lizthegrey opened a new pull request #10326: Add RackAwareInSyncReplicaSelector

lizthegrey opened a new pull request #10326:

URL: https://github.com/apache/kafka/pull/10326

*More detailed description of your change,

if necessary. The PR title and PR message become

the squashed commit message, so use a separate

comment to ping reviewers.*

cross rack boundaries if there is no isr in my rack. otherwise, if we are behind on replication, it may take a while to catch up and we may become bottlenecked/blocked.

*Summary of testing strategy (including rationale)

for the feature or bug fix. Unit and/or integration

tests are expected for any behaviour change and

system tests should be considered for larger changes.*

testing strategy to be determined after discussion.

### Committer Checklist (excluded from commit message)

- [ ] Verify design and implementation

- [ ] Verify test coverage and CI build status

- [ ] Verify documentation (including upgrade notes)

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] dajac commented on pull request #10326: Avoid newly replicating brokers in RackAwareReplicaSelector

Posted by GitBox <gi...@apache.org>.

dajac commented on pull request #10326:

URL: https://github.com/apache/kafka/pull/10326#issuecomment-803270024

Thanks for the explanation.

When you say that the consumer was stuck. Are you saying that the consumer was trying to fetch an offset that the newly added replica did not have yet? Say that consumer's fetch offset was 100 and newly added replica's log end offset was 10 because it was still catching up.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] dajac commented on pull request #10326: Avoid newly replicating brokers in RackAwareReplicaSelector

Posted by GitBox <gi...@apache.org>.

dajac commented on pull request #10326:

URL: https://github.com/apache/kafka/pull/10326#issuecomment-802819428

@lizthegrey Thanks for the patch. I will take a look at it shortly. This looks like a bug to me so it might be worth filling a Jira for it.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] lizthegrey closed pull request #10326: Avoid newly replicating brokers in RackAwareReplicaSelector

Posted by GitBox <gi...@apache.org>.

lizthegrey closed pull request #10326:

URL: https://github.com/apache/kafka/pull/10326

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] dajac commented on pull request #10326: Avoid newly replicating brokers in RackAwareReplicaSelector

Posted by GitBox <gi...@apache.org>.

dajac commented on pull request #10326:

URL: https://github.com/apache/kafka/pull/10326#issuecomment-843754057

@lizthegrey Please, excuse me for the delay on this one. I did not have the time to really look into it.

At the moment, it is not clear to me that the PR actually solves the problem that you hit. The part which bugs me a bit is the following one.

If you look at the [code](https://github.com/apache/kafka/blob/trunk/core/src/main/scala/kafka/server/ReplicaManager.scala#L1260) which selects the read replica, it only selects the follower replicas which have the requested fetch offset. My understanding is that the consumer was requesting an offset that the newly added replica did not have yet. Therefore, when the consumer was restarted, the broker should NOT have selected the newly added replica. If it did, I wonder how that could have happened.

Do you see any incidences in the logs that the consumer got a preferred read replica from the leader?

I wonder if the unclean leader election played a role into this. Would you have logs (controller, state changes, etc.) for that partition during that time?

We need to reconstruct the sequence of events to better understand the case.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] lizthegrey commented on a change in pull request #10326: Avoid newly replicating brokers in RackAwareReplicaSelector

Posted by GitBox <gi...@apache.org>.

lizthegrey commented on a change in pull request #10326:

URL: https://github.com/apache/kafka/pull/10326#discussion_r598012870

##########

File path: core/src/main/scala/kafka/server/ReplicaManager.scala

##########

@@ -1263,7 +1263,7 @@ class ReplicaManager(val config: KafkaConfig,

.map(replica => new DefaultReplicaView(

replicaEndpoints.getOrElse(replica.brokerId, Node.noNode()),

replica.logEndOffset,

- currentTimeMs - replica.lastCaughtUpTimeMs))

+ if (replica.lastCaughtUpTimeMs == 0) Long.MaxValue else currentTimeMs - replica.lastCaughtUpTimeMs))

Review comment:

the consideration here is: (1) that the caught up time should reflect a real value, rather than currentTimeMs which is clearly the wrong value for "time since last caught up), and (2) there may be other third-party ReplicaSelectors we don't know about floating out there, that are depending upon the time since last caught up (or want to have the option to access replicas that never caught up).

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] maplebed commented on pull request #10326: Avoid newly replicating brokers in RackAwareReplicaSelector

Posted by GitBox <gi...@apache.org>.

maplebed commented on pull request #10326:

URL: https://github.com/apache/kafka/pull/10326#issuecomment-844554345

I'm sorry but the cluster has been rolled since this event so the controller and state change logs are gone.

We are not using a Java consumer - our consumer is the [go Sarama package version v1.28.0](https://github.com/Shopify/sarama/releases/tag/v1.28.0)

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] lizthegrey commented on pull request #10326: Avoid newly replicating brokers in RackAwareReplicaSelector

Posted by GitBox <gi...@apache.org>.

lizthegrey commented on pull request #10326:

URL: https://github.com/apache/kafka/pull/10326#issuecomment-845210871

As per private email thread, there is now a reproduction case for this and it is not present in open source Kafka, so closing this PR!

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] lizthegrey commented on pull request #10326: Avoid newly replicating brokers in RackAwareReplicaSelector

Posted by GitBox <gi...@apache.org>.

lizthegrey commented on pull request #10326:

URL: https://github.com/apache/kafka/pull/10326#issuecomment-803178364

> @lizthegrey Could you describe the case that you've hit with a bit more details? I suppose that only consumers which are lagging could hit this condition, right? We filter out replicas which do not contain the requested fetch offset.

Correct, consumers which are lagging (or requesting older offsets in the hope of catching up later) would hit this condition, where they stay stuck on brokers that do not have the offsets they need. The behavior we observed was: we had a hiccup in our Kafka quorum and lost the necessary ISR, did an unclean election, started up a new broker to replace ISR, and only half of our consumers that were configured with rack awareness caught up (because they shared the same rack parameter as brokers that were still in ISR); the other half shared a rack with brokers that were new to the replica set, and thus hung waiting for that offset to become available on that broker before they could begin to catch up. Restarting the consumer process didn't help, they still attempted to fetch from the newly replicating brokers at the checkpointed offset and failed to make progress.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] dajac commented on pull request #10326: Avoid newly replicating brokers in RackAwareReplicaSelector

Posted by GitBox <gi...@apache.org>.

dajac commented on pull request #10326:

URL: https://github.com/apache/kafka/pull/10326#issuecomment-802925914

@lizthegrey Could you describe the case that you've hit with a bit more details? I suppose that only consumers which are lagging could hit this condition, right? We filter out replicas which do not contain the requested fetch offset.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] dajac commented on a change in pull request #10326: Avoid newly replicating brokers in RackAwareReplicaSelector

Posted by GitBox <gi...@apache.org>.

dajac commented on a change in pull request #10326:

URL: https://github.com/apache/kafka/pull/10326#discussion_r597662883

##########

File path: core/src/main/scala/kafka/server/ReplicaManager.scala

##########

@@ -1263,7 +1263,7 @@ class ReplicaManager(val config: KafkaConfig,

.map(replica => new DefaultReplicaView(

replicaEndpoints.getOrElse(replica.brokerId, Node.noNode()),

replica.logEndOffset,

- currentTimeMs - replica.lastCaughtUpTimeMs))

+ if (replica.lastCaughtUpTimeMs == 0) Long.MaxValue else currentTimeMs - replica.lastCaughtUpTimeMs))

Review comment:

Did you consider filtering out replicas which never caught up? It feels a bit weird to do this here and to filter them out later on in the `RackAwareReplicaSelector` anyway. Are there any other considerations?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] dajac commented on pull request #10326: Avoid newly replicating brokers in RackAwareReplicaSelector

Posted by GitBox <gi...@apache.org>.

dajac commented on pull request #10326:

URL: https://github.com/apache/kafka/pull/10326#issuecomment-843841610

Are you using the Java consumer or another client?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] lizthegrey edited a comment on pull request #10326: Avoid newly replicating brokers in RackAwareReplicaSelector

Posted by GitBox <gi...@apache.org>.

lizthegrey edited a comment on pull request #10326:

URL: https://github.com/apache/kafka/pull/10326#issuecomment-804461064

> When you say that the consumer was stuck. Are you saying that the consumer was trying to fetch an offset that the newly added replica did not have yet? Say that consumer's fetch offset was 100 and newly added replica's log end offset was 10 because it was still catching up.

I'll see what I can grab from the logs.

Consumer:

```

time="2021-03-16T01:38:56.753761544Z" level=info msg="Started consumer" offset=473596222274 partition=2 topic=honeycomb-prod.retriever-mutation

```

Replica:

```

[2021-03-16 01:34:21,532] INFO [Log partition=honeycomb-prod.retriever-mutation-2, dir=/var/lib/kafka/data] Incrementing log start offset to 472751048791 due to leader offset increment (kafka.log.Log)

...

[2021-03-16 01:38:40,961] INFO [ProducerStateManager partition=honeycomb-prod.retriever-mutation-2] Writing producer snapshot at offset 473636529289 (kafka.log.ProducerStateManager)

[2021-03-16 01:38:40,961] INFO [Log partition=honeycomb-prod.retriever-mutation-2, dir=/var/lib/kafka/data] Rolled new log segment at offset 473636529289 in 1 ms. (kafka.log.Log)

[2021-03-16 01:39:10,038] INFO [ProducerStateManager partition=honeycomb-prod.retriever-mutation-2] Writing producer snapshot at offset 473636847643 (kafka.log.ProducerStateManager)

```

Leader:

```

[2021-03-16 01:38:16,101] INFO [ProducerStateManager partition=honeycomb-prod.retriever-mutation-2] Writing producer snapshot at offset 473636209826 (kafka.log.ProducerStateManager)

[2021-03-16 01:38:16,102] INFO [Log partition=honeycomb-prod.retriever-mutation-2, dir=/var/lib/kafka/data] Rolled new log segment at offset 473636209826 in 0 ms. (kafka.log.Log)

[2021-03-16 01:38:16,906] INFO Completed TierSegmentUploadInitiate(version=1, topicIdPartition=Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2, tierEpoch=438, objectIdAsBase64=-X3rsakGS1ecPQpGUwtPWQ, baseOffset=473635891179, endOffset=473636209825, maxTimestamp=1615858696093, size=104840863, hasEpochState=true, hasAbortedTxns=false, hasProducerState=true, stateOffsetAndEpoch=OffsetAndEpoch(offset=104953, epoch=Optional[52])) for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 9ms (kafka.tier.tasks.archive.ArchiveTask)

[2021-03-16 01:38:19,847] INFO Uploaded segment for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 1900ms (kafka.tier.tasks.archive.ArchiveTask)

[2021-03-16 01:38:20,865] INFO Finalized TierSegmentUploadComplete(version=1, topicIdPartition=Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2, tierEpoch=438, objectIdAsBase64=-X3rsakGS1ecPQpGUwtPWQ, stateOffsetAndEpoch=OffsetAndEpoch(offset=104953, epoch=Optional[52])) for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 10 ms (kafka.tier.tasks.archive.ArchiveTask)

[2021-03-16 01:38:40,958] INFO [ProducerStateManager partition=honeycomb-prod.retriever-mutation-2] Writing producer snapshot at offset 473636529289 (kafka.log.ProducerStateManager)

[2021-03-16 01:38:40,959] INFO [Log partition=honeycomb-prod.retriever-mutation-2, dir=/var/lib/kafka/data] Rolled new log segment at offset 473636529289 in 0 ms. (kafka.log.Log)

[2021-03-16 01:38:41,561] INFO Completed TierSegmentUploadInitiate(version=1, topicIdPartition=Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2, tierEpoch=438, objectIdAsBase64=oagv1U9hRhSL9WhYe5wmrg, baseOffset=473636209826, endOffset=473636529288, maxTimestamp=1615858720963, size=104848877, hasEpochState=true, hasAbortedTxns=false, hasProducerState=true, stateOffsetAndEpoch=OffsetAndEpoch(offset=104955, epoch=Optional[52])) for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 9ms (kafka.tier.tasks.archive.ArchiveTask)

[2021-03-16 01:38:44,823] INFO Uploaded segment for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 2221ms (kafka.tier.tasks.archive.ArchiveTask)

[2021-03-16 01:38:45,866] INFO Finalized TierSegmentUploadComplete(version=1, topicIdPartition=Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2, tierEpoch=438, objectIdAsBase64=oagv1U9hRhSL9WhYe5wmrg, stateOffsetAndEpoch=OffsetAndEpoch(offset=104955, epoch=Optional[52])) for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 7 ms (kafka.tier.tasks.archive.ArchiveTask)

```

Full logs at: https://gist.github.com/lizthegrey/58272cb6f52ab3c6b4a20035dbbdffff

You'll note that 473596222274 is between low water mark 472751048791 and high water mark 473636529289.

About 20 minutes later, the consumer in us-east-1b, which was still happily reading off a leader in us-east-1d (since it had no local replica in us-east-1b), successfully managed to read offsets 473652309773 and beyond according to its logs. The consumer in us-east-1a was still stuck.

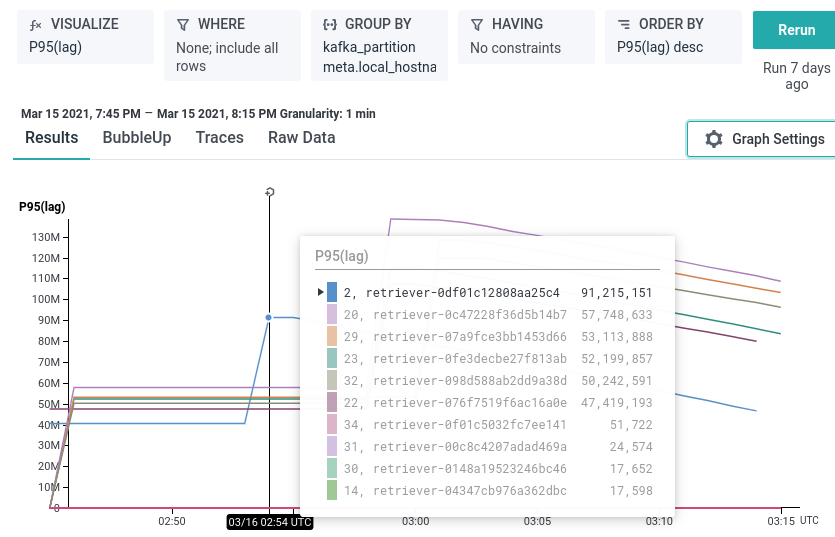

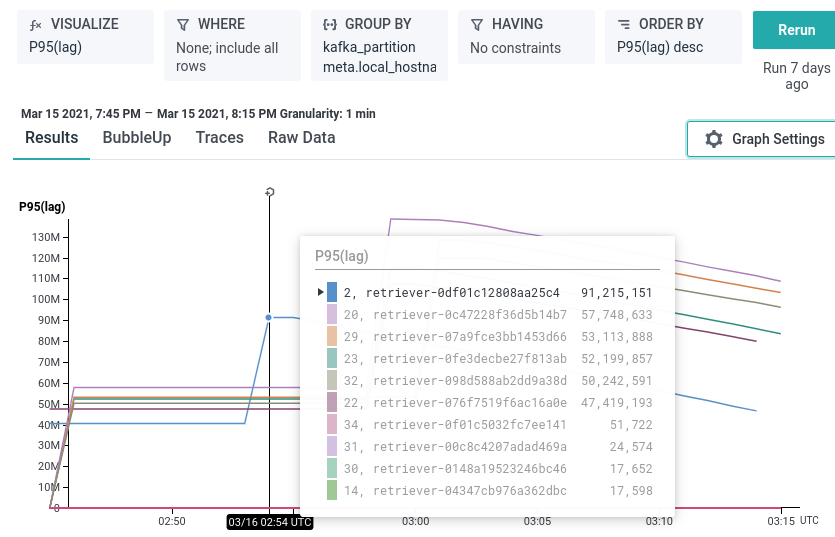

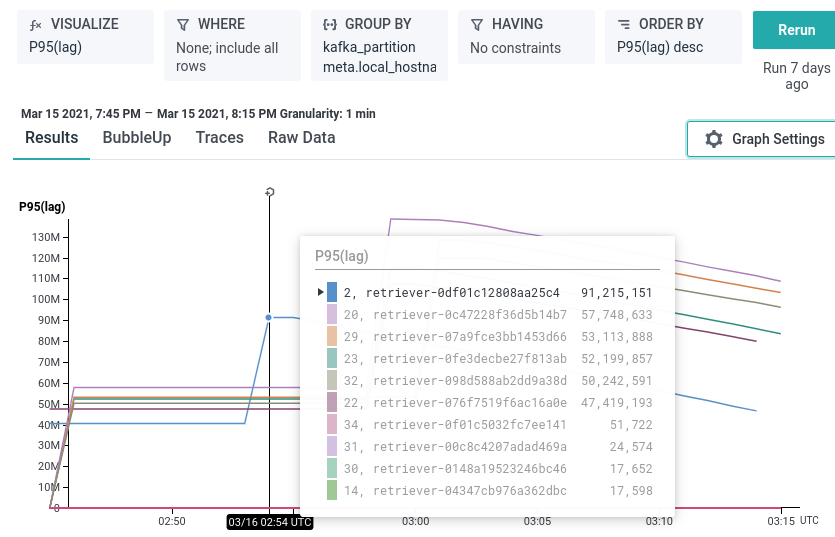

Not until 0254 UTC when the replica in us-east-1b reached a total lag of 0 and there was a new leader election, perhaps, did the consumer in us-east-1a begin being able to consume from the follower in us-east-1a.

Leader (1219):

```

[2021-03-16 02:54:28,091] INFO [ReplicaFetcherManager on broker 1212] Removed fetcher for partitions Set(honeycomb-prod.retriever-mutation-2) (kafka.server.ReplicaFetcherManager)

[2021-03-16 02:54:28,094] INFO retrying DeletionTask(Ljl8q6qISdGMmgLgfP3pZQ:hone

ycomb-prod.retriever-mutation-2, state=State(currentState: kafka.tier.tasks.dele

te.DeletionTask$CollectDeletableSegments metadata: DeleteAsLeaderMetadata(leader

Epoch: 439)), cancelled=false) after 1000ms (kafka.tier.tasks.delete.DeletionTas

k)

kafka.tier.exceptions.TierMetadataRetriableException: Leadership not established

for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2. Backing off.

[2021-03-16 02:54:28,110] INFO established leadership for Ljl8q6qISdGMmgLgfP3pZQ

:honeycomb-prod.retriever-mutation-2 (kafka.tier.tasks.archive.ArchiveTask)

```

Follower (1212):

```

[2021-03-16 02:54:28,091] INFO [ReplicaFetcherManager on broker 1219] Removed fe

tcher for partitions HashSet(honeycomb-prod.retriever-mutation-2) (kafka.server.

ReplicaFetcherManager)

[2021-03-16 02:54:28,091] INFO [ReplicaFetcherManager on broker 1219] Added fetc

her to broker 1212 for partitions Map(honeycomb-prod.retriever-mutation-2 -> (of

fset=473687483041, leaderEpoch=439)) (kafka.server.ReplicaFetcherManager)

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] lizthegrey edited a comment on pull request #10326: Avoid newly replicating brokers in RackAwareReplicaSelector

Posted by GitBox <gi...@apache.org>.

lizthegrey edited a comment on pull request #10326:

URL: https://github.com/apache/kafka/pull/10326#issuecomment-804461064

> When you say that the consumer was stuck. Are you saying that the consumer was trying to fetch an offset that the newly added replica did not have yet? Say that consumer's fetch offset was 100 and newly added replica's log end offset was 10 because it was still catching up.

I'll see what I can grab from the logs.

Consumer:

```

time="2021-03-16T01:38:56.753761544Z" level=info msg="Started consumer" offset=473596222274 partition=2 topic=honeycomb-prod.retriever-mutation

```

Replica:

```

[2021-03-16 01:34:21,532] INFO [Log partition=honeycomb-prod.retriever-mutation-2, dir=/var/lib/kafka/data] Incrementing log start offset to 472751048791 due to leader offset increment (kafka.log.Log)

...

[2021-03-16 01:38:40,961] INFO [ProducerStateManager partition=honeycomb-prod.retriever-mutation-2] Writing producer snapshot at offset 473636529289 (kafka.log.ProducerStateManager)

[2021-03-16 01:38:40,961] INFO [Log partition=honeycomb-prod.retriever-mutation-2, dir=/var/lib/kafka/data] Rolled new log segment at offset 473636529289 in 1 ms. (kafka.log.Log)

[2021-03-16 01:39:10,038] INFO [ProducerStateManager partition=honeycomb-prod.retriever-mutation-2] Writing producer snapshot at offset 473636847643 (kafka.log.ProducerStateManager)

```

Leader:

```

[2021-03-16 01:38:16,101] INFO [ProducerStateManager partition=honeycomb-prod.retriever-mutation-2] Writing producer snapshot at offset 473636209826 (kafka.log.ProducerStateManager)

[2021-03-16 01:38:16,102] INFO [Log partition=honeycomb-prod.retriever-mutation-2, dir=/var/lib/kafka/data] Rolled new log segment at offset 473636209826 in 0 ms. (kafka.log.Log)

[2021-03-16 01:38:16,906] INFO Completed TierSegmentUploadInitiate(version=1, topicIdPartition=Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2, tierEpoch=438, objectIdAsBase64=-X3rsakGS1ecPQpGUwtPWQ, baseOffset=473635891179, endOffset=473636209825, maxTimestamp=1615858696093, size=104840863, hasEpochState=true, hasAbortedTxns=false, hasProducerState=true, stateOffsetAndEpoch=OffsetAndEpoch(offset=104953, epoch=Optional[52])) for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 9ms (kafka.tier.tasks.archive.ArchiveTask)

[2021-03-16 01:38:19,847] INFO Uploaded segment for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 1900ms (kafka.tier.tasks.archive.ArchiveTask)

[2021-03-16 01:38:20,865] INFO Finalized TierSegmentUploadComplete(version=1, topicIdPartition=Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2, tierEpoch=438, objectIdAsBase64=-X3rsakGS1ecPQpGUwtPWQ, stateOffsetAndEpoch=OffsetAndEpoch(offset=104953, epoch=Optional[52])) for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 10 ms (kafka.tier.tasks.archive.ArchiveTask)

[2021-03-16 01:38:40,958] INFO [ProducerStateManager partition=honeycomb-prod.retriever-mutation-2] Writing producer snapshot at offset 473636529289 (kafka.log.ProducerStateManager)

[2021-03-16 01:38:40,959] INFO [Log partition=honeycomb-prod.retriever-mutation-2, dir=/var/lib/kafka/data] Rolled new log segment at offset 473636529289 in 0 ms. (kafka.log.Log)

[2021-03-16 01:38:41,561] INFO Completed TierSegmentUploadInitiate(version=1, topicIdPartition=Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2, tierEpoch=438, objectIdAsBase64=oagv1U9hRhSL9WhYe5wmrg, baseOffset=473636209826, endOffset=473636529288, maxTimestamp=1615858720963, size=104848877, hasEpochState=true, hasAbortedTxns=false, hasProducerState=true, stateOffsetAndEpoch=OffsetAndEpoch(offset=104955, epoch=Optional[52])) for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 9ms (kafka.tier.tasks.archive.ArchiveTask)

[2021-03-16 01:38:44,823] INFO Uploaded segment for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 2221ms (kafka.tier.tasks.archive.ArchiveTask)

[2021-03-16 01:38:45,866] INFO Finalized TierSegmentUploadComplete(version=1, topicIdPartition=Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2, tierEpoch=438, objectIdAsBase64=oagv1U9hRhSL9WhYe5wmrg, stateOffsetAndEpoch=OffsetAndEpoch(offset=104955, epoch=Optional[52])) for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 7 ms (kafka.tier.tasks.archive.ArchiveTask)

```

Full logs at: https://gist.github.com/lizthegrey/58272cb6f52ab3c6b4a20035dbbdffff

You'll note that 473596222274 is between low water mark 472751048791 and high water mark 473636529289.

About 20 minutes later, the consumer in us-east-1b, which was reading off a leader in us-east-1d (since it had no local replica), successfully managed to read offsets 473652309773 and beyond. The consumer in us-east-1a was still stuck.

Not until 0254 UTC when the replica in us-east-1b reached a total lag of 0 and there was a new leader election, perhaps, did the consumer in us-east-1a begin being able to consume from the follower in us-east-1a.

Leader (1219):

```

[2021-03-16 02:54:28,091] INFO [ReplicaFetcherManager on broker 1212] Removed fetcher for partitions Set(honeycomb-prod.retriever-mutation-2) (kafka.server.ReplicaFetcherManager)

[2021-03-16 02:54:28,094] INFO retrying DeletionTask(Ljl8q6qISdGMmgLgfP3pZQ:hone

ycomb-prod.retriever-mutation-2, state=State(currentState: kafka.tier.tasks.dele

te.DeletionTask$CollectDeletableSegments metadata: DeleteAsLeaderMetadata(leader

Epoch: 439)), cancelled=false) after 1000ms (kafka.tier.tasks.delete.DeletionTas

k)

kafka.tier.exceptions.TierMetadataRetriableException: Leadership not established

for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2. Backing off.

[2021-03-16 02:54:28,110] INFO established leadership for Ljl8q6qISdGMmgLgfP3pZQ

:honeycomb-prod.retriever-mutation-2 (kafka.tier.tasks.archive.ArchiveTask)

```

Follower (1212):

```

[2021-03-16 02:54:28,091] INFO [ReplicaFetcherManager on broker 1219] Removed fe

tcher for partitions HashSet(honeycomb-prod.retriever-mutation-2) (kafka.server.

ReplicaFetcherManager)

[2021-03-16 02:54:28,091] INFO [ReplicaFetcherManager on broker 1219] Added fetc

her to broker 1212 for partitions Map(honeycomb-prod.retriever-mutation-2 -> (of

fset=473687483041, leaderEpoch=439)) (kafka.server.ReplicaFetcherManager)

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] dajac commented on a change in pull request #10326: Avoid newly replicating brokers in RackAwareReplicaSelector

Posted by GitBox <gi...@apache.org>.

dajac commented on a change in pull request #10326:

URL: https://github.com/apache/kafka/pull/10326#discussion_r597662883

##########

File path: core/src/main/scala/kafka/server/ReplicaManager.scala

##########

@@ -1263,7 +1263,7 @@ class ReplicaManager(val config: KafkaConfig,

.map(replica => new DefaultReplicaView(

replicaEndpoints.getOrElse(replica.brokerId, Node.noNode()),

replica.logEndOffset,

- currentTimeMs - replica.lastCaughtUpTimeMs))

+ if (replica.lastCaughtUpTimeMs == 0) Long.MaxValue else currentTimeMs - replica.lastCaughtUpTimeMs))

Review comment:

Did you consider filtering out replicas which never caught up? It feels a bit weird to do this here to filter them out later on in the `RackAwareReplicaSelector` anyway. Are there any other considerations?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] cmccabe commented on pull request #10326: Avoid newly replicating brokers in RackAwareReplicaSelector

Posted by GitBox <gi...@apache.org>.

cmccabe commented on pull request #10326:

URL: https://github.com/apache/kafka/pull/10326#issuecomment-840123466

Does this need a KIP? cc @ijuma and @hachikuji

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kafka] lizthegrey commented on pull request #10326: Avoid newly replicating brokers in RackAwareReplicaSelector

Posted by GitBox <gi...@apache.org>.

lizthegrey commented on pull request #10326:

URL: https://github.com/apache/kafka/pull/10326#issuecomment-804461064

> When you say that the consumer was stuck. Are you saying that the consumer was trying to fetch an offset that the newly added replica did not have yet? Say that consumer's fetch offset was 100 and newly added replica's log end offset was 10 because it was still catching up.

I'll see what I can grab from the logs.

Consumer:

```

time="2021-03-16T01:38:56.753761544Z" level=info msg="Started consumer" offset=473596222274 partition=2 topic=honeycomb-prod.retriever-mutation

```

Replica:

```

[2021-03-16 01:34:21,532] INFO [Log partition=honeycomb-prod.retriever-mutation-2, dir=/var/lib/kafka/data] Incrementing log start offset to 472751048791 due to leader offset increment (kafka.log.Log)

...

[2021-03-16 01:38:40,961] INFO [ProducerStateManager partition=honeycomb-prod.retriever-mutation-2] Writing producer snapshot at offset 473636529289 (kafka.log.ProducerStateManager)

[2021-03-16 01:38:40,961] INFO [Log partition=honeycomb-prod.retriever-mutation-2, dir=/var/lib/kafka/data] Rolled new log segment at offset 473636529289 in 1 ms. (kafka.log.Log)

[2021-03-16 01:39:10,038] INFO [ProducerStateManager partition=honeycomb-prod.retriever-mutation-2] Writing producer snapshot at offset 473636847643 (kafka.log.ProducerStateManager)

```

Leader:

```

[2021-03-16 01:38:16,101] INFO [ProducerStateManager partition=honeycomb-prod.retriever-mutation-2] Writing producer snapshot at offset 473636209826 (kafka.log.ProducerStateManager)

[2021-03-16 01:38:16,102] INFO [Log partition=honeycomb-prod.retriever-mutation-2, dir=/var/lib/kafka/data] Rolled new log segment at offset 473636209826 in 0 ms. (kafka.log.Log)

[2021-03-16 01:38:16,906] INFO Completed TierSegmentUploadInitiate(version=1, topicIdPartition=Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2, tierEpoch=438, objectIdAsBase64=-X3rsakGS1ecPQpGUwtPWQ, baseOffset=473635891179, endOffset=473636209825, maxTimestamp=1615858696093, size=104840863, hasEpochState=true, hasAbortedTxns=false, hasProducerState=true, stateOffsetAndEpoch=OffsetAndEpoch(offset=104953, epoch=Optional[52])) for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 9ms (kafka.tier.tasks.archive.ArchiveTask)

[2021-03-16 01:38:19,847] INFO Uploaded segment for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 1900ms (kafka.tier.tasks.archive.ArchiveTask)

[2021-03-16 01:38:20,865] INFO Finalized TierSegmentUploadComplete(version=1, topicIdPartition=Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2, tierEpoch=438, objectIdAsBase64=-X3rsakGS1ecPQpGUwtPWQ, stateOffsetAndEpoch=OffsetAndEpoch(offset=104953, epoch=Optional[52])) for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 10 ms (kafka.tier.tasks.archive.ArchiveTask)

[2021-03-16 01:38:40,958] INFO [ProducerStateManager partition=honeycomb-prod.retriever-mutation-2] Writing producer snapshot at offset 473636529289 (kafka.log.ProducerStateManager)

[2021-03-16 01:38:40,959] INFO [Log partition=honeycomb-prod.retriever-mutation-2, dir=/var/lib/kafka/data] Rolled new log segment at offset 473636529289 in 0 ms. (kafka.log.Log)

[2021-03-16 01:38:41,561] INFO Completed TierSegmentUploadInitiate(version=1, topicIdPartition=Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2, tierEpoch=438, objectIdAsBase64=oagv1U9hRhSL9WhYe5wmrg, baseOffset=473636209826, endOffset=473636529288, maxTimestamp=1615858720963, size=104848877, hasEpochState=true, hasAbortedTxns=false, hasProducerState=true, stateOffsetAndEpoch=OffsetAndEpoch(offset=104955, epoch=Optional[52])) for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 9ms (kafka.tier.tasks.archive.ArchiveTask)

[2021-03-16 01:38:44,823] INFO Uploaded segment for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 2221ms (kafka.tier.tasks.archive.ArchiveTask)

[2021-03-16 01:38:45,866] INFO Finalized TierSegmentUploadComplete(version=1, topicIdPartition=Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2, tierEpoch=438, objectIdAsBase64=oagv1U9hRhSL9WhYe5wmrg, stateOffsetAndEpoch=OffsetAndEpoch(offset=104955, epoch=Optional[52])) for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2 in 7 ms (kafka.tier.tasks.archive.ArchiveTask)

```

Full logs at: https://gist.github.com/lizthegrey/58272cb6f52ab3c6b4a20035dbbdffff

You'll note that 473596222274 is between low water mark 472751048791 and high water mark 473636529289.

About 20 minutes later, the consumer in us-east-1b, which was reading off a leader in us-east-1d (since it had no local replica), successfully managed to read offsets 473652309773 and beyond. The consumer in us-east-1a was still stuck.

Not until 0254 UTC when the replica in us-east-1b reached a total lag of 0 and there was a new leader election, perhaps, did the consumer in us-east-1a begin being able to consume from the follower in us-east-1a.

Leader (1219):

```

[2021-03-16 02:54:28,091] INFO [ReplicaFetcherManager on broker 1212] Removed fetcher for partitions Set(honeycomb-prod.retriever-mutation-2) (kafka.server.ReplicaFetcherManager)

[2021-03-16 02:54:28,094] INFO retrying DeletionTask(Ljl8q6qISdGMmgLgfP3pZQ:hone

ycomb-prod.retriever-mutation-2, state=State(currentState: kafka.tier.tasks.dele

te.DeletionTask$CollectDeletableSegments metadata: DeleteAsLeaderMetadata(leader

Epoch: 439)), cancelled=false) after 1000ms (kafka.tier.tasks.delete.DeletionTas

k)

kafka.tier.exceptions.TierMetadataRetriableException: Leadership not established

for Ljl8q6qISdGMmgLgfP3pZQ:honeycomb-prod.retriever-mutation-2. Backing off.

[2021-03-16 02:54:28,110] INFO established leadership for Ljl8q6qISdGMmgLgfP3pZQ

:honeycomb-prod.retriever-mutation-2 (kafka.tier.tasks.archive.ArchiveTask)

```

Follower (1212):

```

[2021-03-16 02:54:28,091] INFO [ReplicaFetcherManager on broker 1219] Removed fe

tcher for partitions HashSet(honeycomb-prod.retriever-mutation-2) (kafka.server.

ReplicaFetcherManager)

[2021-03-16 02:54:28,091] INFO [ReplicaFetcherManager on broker 1219] Added fetc

her to broker 1212 for partitions Map(honeycomb-prod.retriever-mutation-2 -> (of

fset=473687483041, leaderEpoch=439)) (kafka.server.ReplicaFetcherManager)

```

https://share.getcloudapp.com/WnuYRoWG

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org