You are viewing a plain text version of this content. The canonical link for it is here.

Posted to reviews@spark.apache.org by GitBox <gi...@apache.org> on 2021/02/20 03:25:42 UTC

[GitHub] [spark] AngersZhuuuu opened a new pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

AngersZhuuuu opened a new pull request #31598:

URL: https://github.com/apache/spark/pull/31598

### What changes were proposed in this pull request?

Some user who not so clear about spark when build SparkSession

```

SparkSession.builder().config()

```

In this method user may config `spark.driver.memory` then he will think when submit job, spark driver's memory is change. But when we run this code, JVM has been started, so this configuration won't work at all. And in Spark UI, it will show `spark.driver.memory` as this configuration set by `SparkSession.builder().config()`. This makes user and administer confuse.

So we should ignore such as wrong way and logwarn.

### Why are the changes needed?

Make usage more clear

### Does this PR introduce _any_ user-facing change?

No, this pr only handle wrong use case.

### How was this patch tested?

WIP

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

AmplabJenkins removed a comment on pull request #31598:

URL: https://github.com/apache/spark/pull/31598#issuecomment-787729731

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder-K8s/40158/

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA commented on pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

SparkQA commented on pull request #31598:

URL: https://github.com/apache/spark/pull/31598#issuecomment-787632493

Kubernetes integration test starting

URL: https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder-K8s/40156/

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA commented on pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

SparkQA commented on pull request #31598:

URL: https://github.com/apache/spark/pull/31598#issuecomment-788093819

**[Test build #135597 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/135597/testReport)** for PR 31598 at commit [`7cee11e`](https://github.com/apache/spark/commit/7cee11e564cc2fb794ca77b664e8c9bf0673514f).

* This patch passes all tests.

* This patch merges cleanly.

* This patch adds no public classes.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a change in pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

HyukjinKwon commented on a change in pull request #31598:

URL: https://github.com/apache/spark/pull/31598#discussion_r581643234

##########

File path: sql/core/src/main/scala/org/apache/spark/sql/SparkSession.scala

##########

@@ -897,6 +898,24 @@ object SparkSession extends Logging {

this

}

+ // These configurations related to driver when deploy like `spark.master`,

+ // `spark.driver.memory`, this kind of properties may not be affected when

+ // setting programmatically through SparkConf in runtime, or the behavior is

+ // depending on which cluster manager and deploy mode you choose, so it would

+ // be suggested to set through configuration file or spark-submit command line options.

Review comment:

We can document first, and then link the documentation URL in the warning message.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

AmplabJenkins commented on pull request #31598:

URL: https://github.com/apache/spark/pull/31598#issuecomment-785622024

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder-K8s/40031/

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

AngersZhuuuu commented on a change in pull request #31598:

URL: https://github.com/apache/spark/pull/31598#discussion_r584236782

##########

File path: docs/configuration.md

##########

@@ -114,12 +114,22 @@ in the `spark-defaults.conf` file. A few configuration keys have been renamed si

versions of Spark; in such cases, the older key names are still accepted, but take lower

precedence than any instance of the newer key.

-Spark properties mainly can be divided into two kinds: one is related to deploy, like

-"spark.driver.memory", "spark.executor.instances", this kind of properties may not be affected when

-setting programmatically through `SparkConf` in runtime, or the behavior is depending on which

-cluster manager and deploy mode you choose, so it would be suggested to set through configuration

-file or `spark-submit` command line options; another is mainly related to Spark runtime control,

-like "spark.task.maxFailures", this kind of properties can be set in either way.

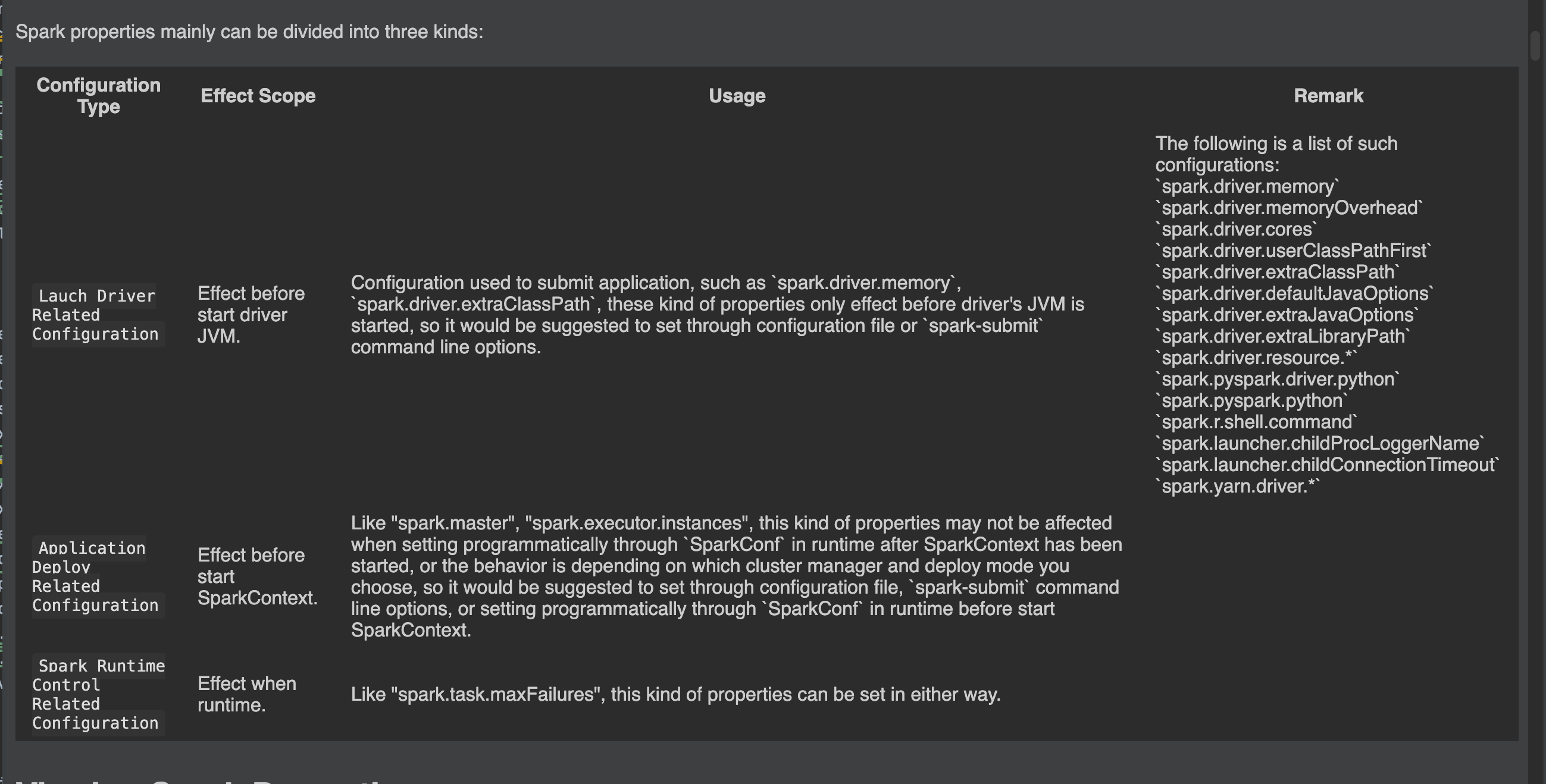

+Spark properties mainly can be divided into three kinds:

+

+ 1. configuration used to submit application, such as "spark.driver.memory", "spark.driver.extraclassPath",

Review comment:

> This text needs a fair bit of cleanup - capitalization, punctuation, syntax. Break lists of configs into a list and code quote, and so on

How about current? Make it as a list I think it will be more clear.

Also cc @dongjoon-hyun @maropu @HyukjinKwon , hope for your suggest to make it more clear and accurate.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA commented on pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

SparkQA commented on pull request #31598:

URL: https://github.com/apache/spark/pull/31598#issuecomment-787661428

**[Test build #135574 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/135574/testReport)** for PR 31598 at commit [`c1d9aa7`](https://github.com/apache/spark/commit/c1d9aa736a592b883abebd285ad981ec787f78c6).

* This patch passes all tests.

* This patch merges cleanly.

* This patch adds no public classes.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

AmplabJenkins removed a comment on pull request #31598:

URL: https://github.com/apache/spark/pull/31598#issuecomment-787011403

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/135529/

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA commented on pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

SparkQA commented on pull request #31598:

URL: https://github.com/apache/spark/pull/31598#issuecomment-787988407

Kubernetes integration test starting

URL: https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder-K8s/40178/

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] srowen commented on pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

srowen commented on pull request #31598:

URL: https://github.com/apache/spark/pull/31598#issuecomment-787605028

I don't see what that column adds that the description cannot? it also squishes the horizontal space for the description.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] maropu commented on a change in pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

maropu commented on a change in pull request #31598:

URL: https://github.com/apache/spark/pull/31598#discussion_r584393540

##########

File path: docs/configuration.md

##########

@@ -114,12 +114,61 @@ in the `spark-defaults.conf` file. A few configuration keys have been renamed si

versions of Spark; in such cases, the older key names are still accepted, but take lower

precedence than any instance of the newer key.

-Spark properties mainly can be divided into two kinds: one is related to deploy, like

-"spark.driver.memory", "spark.executor.instances", this kind of properties may not be affected when

-setting programmatically through `SparkConf` in runtime, or the behavior is depending on which

-cluster manager and deploy mode you choose, so it would be suggested to set through configuration

-file or `spark-submit` command line options; another is mainly related to Spark runtime control,

-like "spark.task.maxFailures", this kind of properties can be set in either way.

+Spark properties mainly can be divided into three kinds:

+<table class="table">

+<tr><th>Configuration Type</th><th>Effect Scope</th><th>Usage</th><th>Remark</th></tr>

Review comment:

`Remark` -> `Examples`?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] cloud-fan commented on a change in pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

cloud-fan commented on a change in pull request #31598:

URL: https://github.com/apache/spark/pull/31598#discussion_r586110532

##########

File path: sql/core/src/main/scala/org/apache/spark/sql/SparkSession.scala

##########

@@ -897,6 +898,18 @@ object SparkSession extends Logging {

this

}

+ // These configurations related to driver when deploy like `spark.master`,

+ // `spark.driver.memory`, this kind of properties may not be affected when

+ // setting programmatically through SparkConf in runtime, or the behavior is

+ // depending on which cluster manager and deploy mode you choose, so it would

+ // be suggested to set through configuration file or spark-submit command line options.

+ private val DRIVER_RELATED_LAUNCHER_CONFIG = Seq(DRIVER_MEMORY, DRIVER_CORES.key,

+ DRIVER_MEMORY_OVERHEAD.key, DRIVER_EXTRA_CLASSPATH,

+ DRIVER_DEFAULT_JAVA_OPTIONS, DRIVER_EXTRA_JAVA_OPTIONS, DRIVER_EXTRA_LIBRARY_PATH,

+ "spark.driver.resource", PYSPARK_DRIVER_PYTHON, PYSPARK_PYTHON, SPARKR_R_SHELL,

+ CHILD_PROCESS_LOGGER_NAME, CHILD_CONNECTION_TIMEOUT, DRIVER_USER_CLASS_PATH_FIRST.key,

+ "spark.yarn.*")

Review comment:

yea, something like `.scope`.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA commented on pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

SparkQA commented on pull request #31598:

URL: https://github.com/apache/spark/pull/31598#issuecomment-785643448

Kubernetes integration test starting

URL: https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder-K8s/40035/

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] maropu commented on a change in pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

maropu commented on a change in pull request #31598:

URL: https://github.com/apache/spark/pull/31598#discussion_r582541755

##########

File path: core/src/main/scala/org/apache/spark/SparkContext.scala

##########

@@ -2690,7 +2690,10 @@ object SparkContext extends Logging {

setActiveContext(new SparkContext(config))

} else {

if (config.getAll.nonEmpty) {

- logWarning("Using an existing SparkContext; some configuration may not take effect.")

+ logWarning("Using an existing SparkContext; some configuration may not take effect." +

+ " For how to set these configuration correctly, you can refer to" +

+ " https://spark.apache.org/docs/latest/configuration.html" +

Review comment:

Is it okay to always refer to the `latest` page?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

AmplabJenkins removed a comment on pull request #31598:

URL: https://github.com/apache/spark/pull/31598#issuecomment-788095929

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/135597/

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a change in pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

HyukjinKwon commented on a change in pull request #31598:

URL: https://github.com/apache/spark/pull/31598#discussion_r581642779

##########

File path: sql/core/src/main/scala/org/apache/spark/sql/SparkSession.scala

##########

@@ -897,6 +898,24 @@ object SparkSession extends Logging {

this

}

+ // These configurations related to driver when deploy like `spark.master`,

+ // `spark.driver.memory`, this kind of properties may not be affected when

+ // setting programmatically through SparkConf in runtime, or the behavior is

+ // depending on which cluster manager and deploy mode you choose, so it would

+ // be suggested to set through configuration file or spark-submit command line options.

Review comment:

@AngersZhuuuu, I would first document this explicitly and what happen for each configuration before taking an action to show a warning. Also, shouldn't we do this in `SparkContext`?

##########

File path: sql/core/src/main/scala/org/apache/spark/sql/SparkSession.scala

##########

@@ -897,6 +898,24 @@ object SparkSession extends Logging {

this

}

+ // These configurations related to driver when deploy like `spark.master`,

Review comment:

`spark.master` seems not here?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a change in pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

HyukjinKwon commented on a change in pull request #31598:

URL: https://github.com/apache/spark/pull/31598#discussion_r581642779

##########

File path: sql/core/src/main/scala/org/apache/spark/sql/SparkSession.scala

##########

@@ -897,6 +898,24 @@ object SparkSession extends Logging {

this

}

+ // These configurations related to driver when deploy like `spark.master`,

+ // `spark.driver.memory`, this kind of properties may not be affected when

+ // setting programmatically through SparkConf in runtime, or the behavior is

+ // depending on which cluster manager and deploy mode you choose, so it would

+ // be suggested to set through configuration file or spark-submit command line options.

Review comment:

@AngersZhuuuu, I would first document this explicitly and what happen for each configuration before taking an action to show a warning. Also, shouldn't we do this in `SparkContext` too?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] maropu commented on a change in pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

maropu commented on a change in pull request #31598:

URL: https://github.com/apache/spark/pull/31598#discussion_r584394455

##########

File path: docs/configuration.md

##########

@@ -114,12 +114,61 @@ in the `spark-defaults.conf` file. A few configuration keys have been renamed si

versions of Spark; in such cases, the older key names are still accepted, but take lower

precedence than any instance of the newer key.

-Spark properties mainly can be divided into two kinds: one is related to deploy, like

-"spark.driver.memory", "spark.executor.instances", this kind of properties may not be affected when

-setting programmatically through `SparkConf` in runtime, or the behavior is depending on which

-cluster manager and deploy mode you choose, so it would be suggested to set through configuration

-file or `spark-submit` command line options; another is mainly related to Spark runtime control,

-like "spark.task.maxFailures", this kind of properties can be set in either way.

+Spark properties mainly can be divided into three kinds:

+<table class="table">

+<tr><th>Configuration Type</th><th>Effect Scope</th><th>Usage</th><th>Remark</th></tr>

Review comment:

`Scope` is a correct word here? `Effective Timing` instead?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

AmplabJenkins removed a comment on pull request #31598:

URL: https://github.com/apache/spark/pull/31598#issuecomment-782591823

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder-K8s/39880/

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA commented on pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

SparkQA commented on pull request #31598:

URL: https://github.com/apache/spark/pull/31598#issuecomment-782578304

**[Test build #135299 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/135299/testReport)** for PR 31598 at commit [`4db4c05`](https://github.com/apache/spark/commit/4db4c0599f1db93bbf87969f449ffe69f8dda4c1).

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] srowen commented on a change in pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

srowen commented on a change in pull request #31598:

URL: https://github.com/apache/spark/pull/31598#discussion_r584313063

##########

File path: docs/configuration.md

##########

@@ -114,12 +114,61 @@ in the `spark-defaults.conf` file. A few configuration keys have been renamed si

versions of Spark; in such cases, the older key names are still accepted, but take lower

precedence than any instance of the newer key.

-Spark properties mainly can be divided into two kinds: one is related to deploy, like

-"spark.driver.memory", "spark.executor.instances", this kind of properties may not be affected when

-setting programmatically through `SparkConf` in runtime, or the behavior is depending on which

-cluster manager and deploy mode you choose, so it would be suggested to set through configuration

-file or `spark-submit` command line options; another is mainly related to Spark runtime control,

-like "spark.task.maxFailures", this kind of properties can be set in either way.

+Spark properties mainly can be divided into three kinds:

+<table class="table">

+<tr><th>Configuration Type</th><th>Effect Scope</th><th>Usage</th><th>Remark</th></tr>

+<tr>

+ <td><code>Lauch Driver Related Configuration</code></td>

+ <td>Effect before start driver JVM.</td>

+ <td>

+ Configuration used to submit application, such as `spark.driver.memory`, `spark.driver.extraClassPath`,

Review comment:

_an_ application

##########

File path: docs/configuration.md

##########

@@ -114,12 +114,61 @@ in the `spark-defaults.conf` file. A few configuration keys have been renamed si

versions of Spark; in such cases, the older key names are still accepted, but take lower

precedence than any instance of the newer key.

-Spark properties mainly can be divided into two kinds: one is related to deploy, like

-"spark.driver.memory", "spark.executor.instances", this kind of properties may not be affected when

-setting programmatically through `SparkConf` in runtime, or the behavior is depending on which

-cluster manager and deploy mode you choose, so it would be suggested to set through configuration

-file or `spark-submit` command line options; another is mainly related to Spark runtime control,

-like "spark.task.maxFailures", this kind of properties can be set in either way.

+Spark properties mainly can be divided into three kinds:

+<table class="table">

+<tr><th>Configuration Type</th><th>Effect Scope</th><th>Usage</th><th>Remark</th></tr>

+<tr>

+ <td><code>Lauch Driver Related Configuration</code></td>

Review comment:

Lauch -> Launch

I think this is better as "Configurations needed at driver launch"?

##########

File path: docs/configuration.md

##########

@@ -114,12 +114,61 @@ in the `spark-defaults.conf` file. A few configuration keys have been renamed si

versions of Spark; in such cases, the older key names are still accepted, but take lower

precedence than any instance of the newer key.

-Spark properties mainly can be divided into two kinds: one is related to deploy, like

-"spark.driver.memory", "spark.executor.instances", this kind of properties may not be affected when

-setting programmatically through `SparkConf` in runtime, or the behavior is depending on which

-cluster manager and deploy mode you choose, so it would be suggested to set through configuration

-file or `spark-submit` command line options; another is mainly related to Spark runtime control,

-like "spark.task.maxFailures", this kind of properties can be set in either way.

+Spark properties mainly can be divided into three kinds:

+<table class="table">

+<tr><th>Configuration Type</th><th>Effect Scope</th><th>Usage</th><th>Remark</th></tr>

+<tr>

+ <td><code>Lauch Driver Related Configuration</code></td>

+ <td>Effect before start driver JVM.</td>

+ <td>

+ Configuration used to submit application, such as `spark.driver.memory`, `spark.driver.extraClassPath`,

+ these kind of properties only effect before driver's JVM is started, so it would be suggested to set through

+ configuration file or `spark-submit` command line options.

+ </td>

+ <td>

+ The following is a list of such configurations:<br/>

+ `spark.driver.memory`<br/>

+ `spark.driver.memoryOverhead`<br/>

+ `spark.driver.cores`<br/>

+ `spark.driver.userClassPathFirst`<br/>

+ `spark.driver.extraClassPath`<br/>

+ `spark.driver.defaultJavaOptions`<br/>

+ `spark.driver.extraJavaOptions`<br/>

+ `spark.driver.extraLibraryPath`<br/>

+ `spark.driver.resource.*`<br/>

+ `spark.pyspark.driver.python`<br/>

+ `spark.pyspark.python`<br/>

+ `spark.r.shell.command`<br/>

+ `spark.launcher.childProcLoggerName`<br/>

+ `spark.launcher.childConnectionTimeout`<br/>

+ `spark.yarn.driver.*`

+ </td>

+</tr>

+<tr>

+ <td><code>Application Deploy Related Configuration</code></td>

+ <td>Effect before start SparkContext.</td>

+ <td>

+ Like "spark.master", "spark.executor.instances", this kind of properties may not

+ be affected when setting programmatically through `SparkConf` in runtime after SparkContext has been started,

+ or the behavior is depending on which cluster manager and deploy mode you choose, so it would be suggested to

+ set through configuration file, `spark-submit` command line options, or setting programmatically through `SparkConf`

+ in runtime before start SparkContext.

+ </td>

+ <td>

+

+ </td>

+</tr>

+<tr>

+ <td><code>Spark Runtime Control Related Configuration</code></td>

+ <td>Effect when runtime.</td>

Review comment:

I am not sure this extra column helps. Move this into the description.

##########

File path: docs/configuration.md

##########

@@ -114,12 +114,61 @@ in the `spark-defaults.conf` file. A few configuration keys have been renamed si

versions of Spark; in such cases, the older key names are still accepted, but take lower

precedence than any instance of the newer key.

-Spark properties mainly can be divided into two kinds: one is related to deploy, like

-"spark.driver.memory", "spark.executor.instances", this kind of properties may not be affected when

-setting programmatically through `SparkConf` in runtime, or the behavior is depending on which

-cluster manager and deploy mode you choose, so it would be suggested to set through configuration

-file or `spark-submit` command line options; another is mainly related to Spark runtime control,

-like "spark.task.maxFailures", this kind of properties can be set in either way.

+Spark properties mainly can be divided into three kinds:

+<table class="table">

+<tr><th>Configuration Type</th><th>Effect Scope</th><th>Usage</th><th>Remark</th></tr>

+<tr>

+ <td><code>Lauch Driver Related Configuration</code></td>

+ <td>Effect before start driver JVM.</td>

+ <td>

+ Configuration used to submit application, such as `spark.driver.memory`, `spark.driver.extraClassPath`,

+ these kind of properties only effect before driver's JVM is started, so it would be suggested to set through

+ configuration file or `spark-submit` command line options.

+ </td>

+ <td>

+ The following is a list of such configurations:<br/>

+ `spark.driver.memory`<br/>

Review comment:

Can you use a `<ul>` here?

##########

File path: docs/configuration.md

##########

@@ -114,12 +114,61 @@ in the `spark-defaults.conf` file. A few configuration keys have been renamed si

versions of Spark; in such cases, the older key names are still accepted, but take lower

precedence than any instance of the newer key.

-Spark properties mainly can be divided into two kinds: one is related to deploy, like

-"spark.driver.memory", "spark.executor.instances", this kind of properties may not be affected when

-setting programmatically through `SparkConf` in runtime, or the behavior is depending on which

-cluster manager and deploy mode you choose, so it would be suggested to set through configuration

-file or `spark-submit` command line options; another is mainly related to Spark runtime control,

-like "spark.task.maxFailures", this kind of properties can be set in either way.

+Spark properties mainly can be divided into three kinds:

+<table class="table">

+<tr><th>Configuration Type</th><th>Effect Scope</th><th>Usage</th><th>Remark</th></tr>

+<tr>

+ <td><code>Lauch Driver Related Configuration</code></td>

+ <td>Effect before start driver JVM.</td>

+ <td>

+ Configuration used to submit application, such as `spark.driver.memory`, `spark.driver.extraClassPath`,

+ these kind of properties only effect before driver's JVM is started, so it would be suggested to set through

Review comment:

Start a new sentence.

It's not suggested, it's required, right?

##########

File path: docs/configuration.md

##########

@@ -114,12 +114,61 @@ in the `spark-defaults.conf` file. A few configuration keys have been renamed si

versions of Spark; in such cases, the older key names are still accepted, but take lower

precedence than any instance of the newer key.

-Spark properties mainly can be divided into two kinds: one is related to deploy, like

-"spark.driver.memory", "spark.executor.instances", this kind of properties may not be affected when

-setting programmatically through `SparkConf` in runtime, or the behavior is depending on which

-cluster manager and deploy mode you choose, so it would be suggested to set through configuration

-file or `spark-submit` command line options; another is mainly related to Spark runtime control,

-like "spark.task.maxFailures", this kind of properties can be set in either way.

+Spark properties mainly can be divided into three kinds:

+<table class="table">

+<tr><th>Configuration Type</th><th>Effect Scope</th><th>Usage</th><th>Remark</th></tr>

+<tr>

+ <td><code>Lauch Driver Related Configuration</code></td>

+ <td>Effect before start driver JVM.</td>

+ <td>

+ Configuration used to submit application, such as `spark.driver.memory`, `spark.driver.extraClassPath`,

+ these kind of properties only effect before driver's JVM is started, so it would be suggested to set through

+ configuration file or `spark-submit` command line options.

+ </td>

+ <td>

+ The following is a list of such configurations:<br/>

+ `spark.driver.memory`<br/>

+ `spark.driver.memoryOverhead`<br/>

+ `spark.driver.cores`<br/>

+ `spark.driver.userClassPathFirst`<br/>

+ `spark.driver.extraClassPath`<br/>

+ `spark.driver.defaultJavaOptions`<br/>

+ `spark.driver.extraJavaOptions`<br/>

+ `spark.driver.extraLibraryPath`<br/>

+ `spark.driver.resource.*`<br/>

+ `spark.pyspark.driver.python`<br/>

+ `spark.pyspark.python`<br/>

+ `spark.r.shell.command`<br/>

+ `spark.launcher.childProcLoggerName`<br/>

+ `spark.launcher.childConnectionTimeout`<br/>

+ `spark.yarn.driver.*`

+ </td>

+</tr>

+<tr>

+ <td><code>Application Deploy Related Configuration</code></td>

+ <td>Effect before start SparkContext.</td>

+ <td>

+ Like "spark.master", "spark.executor.instances", this kind of properties may not

Review comment:

Back-tick quote for consistency

##########

File path: docs/configuration.md

##########

@@ -114,12 +114,61 @@ in the `spark-defaults.conf` file. A few configuration keys have been renamed si

versions of Spark; in such cases, the older key names are still accepted, but take lower

precedence than any instance of the newer key.

-Spark properties mainly can be divided into two kinds: one is related to deploy, like

-"spark.driver.memory", "spark.executor.instances", this kind of properties may not be affected when

-setting programmatically through `SparkConf` in runtime, or the behavior is depending on which

-cluster manager and deploy mode you choose, so it would be suggested to set through configuration

-file or `spark-submit` command line options; another is mainly related to Spark runtime control,

-like "spark.task.maxFailures", this kind of properties can be set in either way.

+Spark properties mainly can be divided into three kinds:

+<table class="table">

+<tr><th>Configuration Type</th><th>Effect Scope</th><th>Usage</th><th>Remark</th></tr>

+<tr>

+ <td><code>Lauch Driver Related Configuration</code></td>

+ <td>Effect before start driver JVM.</td>

+ <td>

+ Configuration used to submit application, such as `spark.driver.memory`, `spark.driver.extraClassPath`,

+ these kind of properties only effect before driver's JVM is started, so it would be suggested to set through

+ configuration file or `spark-submit` command line options.

+ </td>

+ <td>

+ The following is a list of such configurations:<br/>

+ `spark.driver.memory`<br/>

+ `spark.driver.memoryOverhead`<br/>

+ `spark.driver.cores`<br/>

+ `spark.driver.userClassPathFirst`<br/>

+ `spark.driver.extraClassPath`<br/>

+ `spark.driver.defaultJavaOptions`<br/>

+ `spark.driver.extraJavaOptions`<br/>

+ `spark.driver.extraLibraryPath`<br/>

+ `spark.driver.resource.*`<br/>

+ `spark.pyspark.driver.python`<br/>

+ `spark.pyspark.python`<br/>

+ `spark.r.shell.command`<br/>

+ `spark.launcher.childProcLoggerName`<br/>

+ `spark.launcher.childConnectionTimeout`<br/>

+ `spark.yarn.driver.*`

+ </td>

+</tr>

+<tr>

+ <td><code>Application Deploy Related Configuration</code></td>

+ <td>Effect before start SparkContext.</td>

+ <td>

+ Like "spark.master", "spark.executor.instances", this kind of properties may not

+ be affected when setting programmatically through `SparkConf` in runtime after SparkContext has been started,

+ or the behavior is depending on which cluster manager and deploy mode you choose, so it would be suggested to

+ set through configuration file, `spark-submit` command line options, or setting programmatically through `SparkConf`

+ in runtime before start SparkContext.

+ </td>

+ <td>

+

+ </td>

+</tr>

+<tr>

+ <td><code>Spark Runtime Control Related Configuration</code></td>

+ <td>Effect when runtime.</td>

+ <td>

+ Like "spark.task.maxFailures", this kind of properties can be set in either way.

Review comment:

Just say "all other properties can be set either way"

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

AngersZhuuuu commented on a change in pull request #31598:

URL: https://github.com/apache/spark/pull/31598#discussion_r584417267

##########

File path: docs/configuration.md

##########

@@ -114,12 +114,61 @@ in the `spark-defaults.conf` file. A few configuration keys have been renamed si

versions of Spark; in such cases, the older key names are still accepted, but take lower

precedence than any instance of the newer key.

-Spark properties mainly can be divided into two kinds: one is related to deploy, like

-"spark.driver.memory", "spark.executor.instances", this kind of properties may not be affected when

-setting programmatically through `SparkConf` in runtime, or the behavior is depending on which

-cluster manager and deploy mode you choose, so it would be suggested to set through configuration

-file or `spark-submit` command line options; another is mainly related to Spark runtime control,

-like "spark.task.maxFailures", this kind of properties can be set in either way.

+Spark properties mainly can be divided into three kinds:

+<table class="table">

+<tr><th>Configuration Type</th><th>Effect Scope</th><th>Usage</th><th>Remark</th></tr>

+<tr>

+ <td><code>Lauch Driver Related Configuration</code></td>

+ <td>Effect before start driver JVM.</td>

+ <td>

+ Configuration used to submit application, such as `spark.driver.memory`, `spark.driver.extraClassPath`,

Review comment:

Done

##########

File path: docs/configuration.md

##########

@@ -114,12 +114,61 @@ in the `spark-defaults.conf` file. A few configuration keys have been renamed si

versions of Spark; in such cases, the older key names are still accepted, but take lower

precedence than any instance of the newer key.

-Spark properties mainly can be divided into two kinds: one is related to deploy, like

-"spark.driver.memory", "spark.executor.instances", this kind of properties may not be affected when

-setting programmatically through `SparkConf` in runtime, or the behavior is depending on which

-cluster manager and deploy mode you choose, so it would be suggested to set through configuration

-file or `spark-submit` command line options; another is mainly related to Spark runtime control,

-like "spark.task.maxFailures", this kind of properties can be set in either way.

+Spark properties mainly can be divided into three kinds:

+<table class="table">

+<tr><th>Configuration Type</th><th>Effect Scope</th><th>Usage</th><th>Remark</th></tr>

+<tr>

+ <td><code>Lauch Driver Related Configuration</code></td>

Review comment:

Done

##########

File path: docs/configuration.md

##########

@@ -114,12 +114,61 @@ in the `spark-defaults.conf` file. A few configuration keys have been renamed si

versions of Spark; in such cases, the older key names are still accepted, but take lower

precedence than any instance of the newer key.

-Spark properties mainly can be divided into two kinds: one is related to deploy, like

-"spark.driver.memory", "spark.executor.instances", this kind of properties may not be affected when

-setting programmatically through `SparkConf` in runtime, or the behavior is depending on which

-cluster manager and deploy mode you choose, so it would be suggested to set through configuration

-file or `spark-submit` command line options; another is mainly related to Spark runtime control,

-like "spark.task.maxFailures", this kind of properties can be set in either way.

+Spark properties mainly can be divided into three kinds:

+<table class="table">

+<tr><th>Configuration Type</th><th>Effect Scope</th><th>Usage</th><th>Remark</th></tr>

+<tr>

+ <td><code>Lauch Driver Related Configuration</code></td>

+ <td>Effect before start driver JVM.</td>

+ <td>

+ Configuration used to submit application, such as `spark.driver.memory`, `spark.driver.extraClassPath`,

+ these kind of properties only effect before driver's JVM is started, so it would be suggested to set through

+ configuration file or `spark-submit` command line options.

+ </td>

+ <td>

+ The following is a list of such configurations:<br/>

+ `spark.driver.memory`<br/>

+ `spark.driver.memoryOverhead`<br/>

+ `spark.driver.cores`<br/>

+ `spark.driver.userClassPathFirst`<br/>

+ `spark.driver.extraClassPath`<br/>

+ `spark.driver.defaultJavaOptions`<br/>

+ `spark.driver.extraJavaOptions`<br/>

+ `spark.driver.extraLibraryPath`<br/>

+ `spark.driver.resource.*`<br/>

+ `spark.pyspark.driver.python`<br/>

+ `spark.pyspark.python`<br/>

+ `spark.r.shell.command`<br/>

+ `spark.launcher.childProcLoggerName`<br/>

+ `spark.launcher.childConnectionTimeout`<br/>

+ `spark.yarn.driver.*`

+ </td>

+</tr>

+<tr>

+ <td><code>Application Deploy Related Configuration</code></td>

+ <td>Effect before start SparkContext.</td>

+ <td>

+ Like "spark.master", "spark.executor.instances", this kind of properties may not

Review comment:

Dne

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

AngersZhuuuu commented on a change in pull request #31598:

URL: https://github.com/apache/spark/pull/31598#discussion_r584450921

##########

File path: docs/configuration.md

##########

@@ -114,12 +114,67 @@ in the `spark-defaults.conf` file. A few configuration keys have been renamed si

versions of Spark; in such cases, the older key names are still accepted, but take lower

precedence than any instance of the newer key.

-Spark properties mainly can be divided into two kinds: one is related to deploy, like

-"spark.driver.memory", "spark.executor.instances", this kind of properties may not be affected when

-setting programmatically through `SparkConf` in runtime, or the behavior is depending on which

-cluster manager and deploy mode you choose, so it would be suggested to set through configuration

-file or `spark-submit` command line options; another is mainly related to Spark runtime control,

-like "spark.task.maxFailures", this kind of properties can be set in either way.

+Note that Spark properties have different effective timing and they can be divided into three kinds:

+<table class="table">

+<tr><th>Configuration Type</th><th>Meaning</th><th>Examples</th></tr>

+<tr>

+ <td><code>Configurations needed at driver launch</code></td>

+ <td>

+ Configuration used to submit an application, such as <code>spark.driver.memory</code>, <code>spark.driver.extraClassPath</code>, these kind of properties only effect before driver's JVM is started, so it would be suggested to set through configuration file or <code>spark-submit</code> command line options.

+ </td>

+ <td>

+ The following is a list of such configurations:

+ <ul>

+ <li><code>spark.driver.memory</code></li>

+ <li><code>spark.driver.memoryOverhead</code></li>

+ <li><code>spark.driver.cores</code></li>

+ <li><code>spark.driver.userClassPathFirst</code></li>

+ <li><code>spark.driver.extraClassPath</code></li>

+ <li><code>spark.driver.defaultJavaOptions</code></li>

+ <li><code>spark.driver.extraJavaOptions</code></li>

+ <li><code>spark.driver.extraLibraryPath</code></li>

+ <li><code>spark.driver.resource.*</code></li>

+ <li><code>spark.pyspark.driver.python</code></li>

+ <li><code>spark.pyspark.python</code></li>

+ <li><code>spark.r.shell.command</code></li>

+ <li><code>spark.launcher.childProcLoggerName</code></li>

+ <li><code>spark.launcher.childConnectionTimeout</code></li>

+ <li><code>spark.yarn.driver.*</code></li>

+ </ul>

+ </td>

+</tr>

+<tr>

+ <td><code>Application Deploy Related Configuration</code></td>

+ <td>

+ Like <code>spark.master</code>, <code>spark.executor.instances</code>, this kind of properties may not be affected when setting programmatically through <code>SparkConf</code> in runtime after SparkContext has been started, or the behavior is depending on which cluster manager and deploy mode you choose, so it would be suggested to set through configuration file, <code>spark-submit</code> command line options, or setting programmatically through <code>SparkConf</code> in runtime before start SparkContext.

+ </td>

+ <td>

+ The following is a example such configurations:

+ <ul>

+ <li><code>spark.master</code></li>

+ <li><code>spark.app.name</code></li>

+ <li><code>spark.executor.memory</code></li>

+ <li><code>spark.submit.deployMode</code></li>

+ <li><code>spark.eventLog.enabled</code></li>

+ <li><code>etc...</code></li>

+ </ul>

+ </td>

+</tr>

+<tr>

+ <td><code>Spark Runtime Control Related Configuration</code></td>

Review comment:

> Or even we can just remove this section.

Remove LCTM, since this part may make user confused.

##########

File path: docs/configuration.md

##########

@@ -114,12 +114,67 @@ in the `spark-defaults.conf` file. A few configuration keys have been renamed si

versions of Spark; in such cases, the older key names are still accepted, but take lower

precedence than any instance of the newer key.

-Spark properties mainly can be divided into two kinds: one is related to deploy, like

-"spark.driver.memory", "spark.executor.instances", this kind of properties may not be affected when

-setting programmatically through `SparkConf` in runtime, or the behavior is depending on which

-cluster manager and deploy mode you choose, so it would be suggested to set through configuration

-file or `spark-submit` command line options; another is mainly related to Spark runtime control,

-like "spark.task.maxFailures", this kind of properties can be set in either way.

+Note that Spark properties have different effective timing and they can be divided into three kinds:

+<table class="table">

+<tr><th>Configuration Type</th><th>Meaning</th><th>Examples</th></tr>

+<tr>

+ <td><code>Configurations needed at driver launch</code></td>

+ <td>

+ Configuration used to submit an application, such as <code>spark.driver.memory</code>, <code>spark.driver.extraClassPath</code>, these kind of properties only effect before driver's JVM is started, so it would be suggested to set through configuration file or <code>spark-submit</code> command line options.

+ </td>

+ <td>

+ The following is a list of such configurations:

+ <ul>

+ <li><code>spark.driver.memory</code></li>

+ <li><code>spark.driver.memoryOverhead</code></li>

+ <li><code>spark.driver.cores</code></li>

+ <li><code>spark.driver.userClassPathFirst</code></li>

+ <li><code>spark.driver.extraClassPath</code></li>

+ <li><code>spark.driver.defaultJavaOptions</code></li>

+ <li><code>spark.driver.extraJavaOptions</code></li>

+ <li><code>spark.driver.extraLibraryPath</code></li>

+ <li><code>spark.driver.resource.*</code></li>

+ <li><code>spark.pyspark.driver.python</code></li>

+ <li><code>spark.pyspark.python</code></li>

+ <li><code>spark.r.shell.command</code></li>

+ <li><code>spark.launcher.childProcLoggerName</code></li>

+ <li><code>spark.launcher.childConnectionTimeout</code></li>

+ <li><code>spark.yarn.driver.*</code></li>

+ </ul>

+ </td>

+</tr>

+<tr>

+ <td><code>Application Deploy Related Configuration</code></td>

+ <td>

+ Like <code>spark.master</code>, <code>spark.executor.instances</code>, this kind of properties may not be affected when setting programmatically through <code>SparkConf</code> in runtime after SparkContext has been started, or the behavior is depending on which cluster manager and deploy mode you choose, so it would be suggested to set through configuration file, <code>spark-submit</code> command line options, or setting programmatically through <code>SparkConf</code> in runtime before start SparkContext.

+ </td>

+ <td>

+ The following is a example such configurations:

+ <ul>

+ <li><code>spark.master</code></li>

+ <li><code>spark.app.name</code></li>

+ <li><code>spark.executor.memory</code></li>

+ <li><code>spark.submit.deployMode</code></li>

+ <li><code>spark.eventLog.enabled</code></li>

+ <li><code>etc...</code></li>

+ </ul>

+ </td>

+</tr>

+<tr>

+ <td><code>Spark Runtime Control Related Configuration</code></td>

Review comment:

> Or even we can just remove this section.

Remove LGTM, since this part may make user confused.

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA removed a comment on pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

SparkQA removed a comment on pull request #31598:

URL: https://github.com/apache/spark/pull/31598#issuecomment-787608522

**[Test build #135574 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/135574/testReport)** for PR 31598 at commit [`c1d9aa7`](https://github.com/apache/spark/commit/c1d9aa736a592b883abebd285ad981ec787f78c6).

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

AngersZhuuuu commented on a change in pull request #31598:

URL: https://github.com/apache/spark/pull/31598#discussion_r586056526

##########

File path: sql/core/src/main/scala/org/apache/spark/sql/SparkSession.scala

##########

@@ -897,6 +898,18 @@ object SparkSession extends Logging {

this

}

+ // These configurations related to driver when deploy like `spark.master`,

+ // `spark.driver.memory`, this kind of properties may not be affected when

+ // setting programmatically through SparkConf in runtime, or the behavior is

+ // depending on which cluster manager and deploy mode you choose, so it would

+ // be suggested to set through configuration file or spark-submit command line options.

+ private val DRIVER_RELATED_LAUNCHER_CONFIG = Seq(DRIVER_MEMORY, DRIVER_CORES.key,

+ DRIVER_MEMORY_OVERHEAD.key, DRIVER_EXTRA_CLASSPATH,

+ DRIVER_DEFAULT_JAVA_OPTIONS, DRIVER_EXTRA_JAVA_OPTIONS, DRIVER_EXTRA_LIBRARY_PATH,

+ "spark.driver.resource", PYSPARK_DRIVER_PYTHON, PYSPARK_PYTHON, SPARKR_R_SHELL,

+ CHILD_PROCESS_LOGGER_NAME, CHILD_CONNECTION_TIMEOUT, DRIVER_USER_CLASS_PATH_FIRST.key,

+ "spark.yarn.*")

Review comment:

> It seems like something we should build into our config library. It's hard to maintain this list here.

You mean like what I have said in https://github.com/apache/spark/pull/31598#discussion_r581689593.

We should add a new tag in ConfigEntry? like `.version()` we add `.type()` or `.scope()` ?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

AmplabJenkins removed a comment on pull request #31598:

URL: https://github.com/apache/spark/pull/31598#issuecomment-787411244

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder-K8s/40131/

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

AmplabJenkins removed a comment on pull request #31598:

URL: https://github.com/apache/spark/pull/31598#issuecomment-782599593

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder-K8s/39878/

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] maropu commented on a change in pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

maropu commented on a change in pull request #31598:

URL: https://github.com/apache/spark/pull/31598#discussion_r584021940

##########

File path: core/src/main/scala/org/apache/spark/SparkContext.scala

##########

@@ -2690,7 +2690,10 @@ object SparkContext extends Logging {

setActiveContext(new SparkContext(config))

} else {

if (config.getAll.nonEmpty) {

- logWarning("Using an existing SparkContext; some configuration may not take effect.")

+ logWarning("Using an existing SparkContext; some configuration may not take effect." +

+ " For how to set these configuration correctly, you can refer to" +

+ " https://spark.apache.org/docs/latest/configuration.html" +

Review comment:

It seems you need to drop the suffix: `3.2.0-SNAPSHOT` -> `3.2.0`

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA commented on pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

SparkQA commented on pull request #31598:

URL: https://github.com/apache/spark/pull/31598#issuecomment-785625515

Kubernetes integration test starting

URL: https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder-K8s/40033/

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

AngersZhuuuu commented on pull request #31598:

URL: https://github.com/apache/spark/pull/31598#issuecomment-799926468

Any more suggestion @cloud-fan ?

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

AmplabJenkins removed a comment on pull request #31598:

URL: https://github.com/apache/spark/pull/31598#issuecomment-799942236

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

AngersZhuuuu commented on a change in pull request #31598:

URL: https://github.com/apache/spark/pull/31598#discussion_r584417333

##########

File path: docs/configuration.md

##########

@@ -114,12 +114,61 @@ in the `spark-defaults.conf` file. A few configuration keys have been renamed si

versions of Spark; in such cases, the older key names are still accepted, but take lower

precedence than any instance of the newer key.

-Spark properties mainly can be divided into two kinds: one is related to deploy, like

-"spark.driver.memory", "spark.executor.instances", this kind of properties may not be affected when

-setting programmatically through `SparkConf` in runtime, or the behavior is depending on which

-cluster manager and deploy mode you choose, so it would be suggested to set through configuration

-file or `spark-submit` command line options; another is mainly related to Spark runtime control,

-like "spark.task.maxFailures", this kind of properties can be set in either way.

+Spark properties mainly can be divided into three kinds:

+<table class="table">

+<tr><th>Configuration Type</th><th>Effect Scope</th><th>Usage</th><th>Remark</th></tr>

+<tr>

+ <td><code>Lauch Driver Related Configuration</code></td>

+ <td>Effect before start driver JVM.</td>

+ <td>

+ Configuration used to submit application, such as `spark.driver.memory`, `spark.driver.extraClassPath`,

+ these kind of properties only effect before driver's JVM is started, so it would be suggested to set through

+ configuration file or `spark-submit` command line options.

+ </td>

+ <td>

+ The following is a list of such configurations:<br/>

+ `spark.driver.memory`<br/>

+ `spark.driver.memoryOverhead`<br/>

+ `spark.driver.cores`<br/>

+ `spark.driver.userClassPathFirst`<br/>

+ `spark.driver.extraClassPath`<br/>

+ `spark.driver.defaultJavaOptions`<br/>

+ `spark.driver.extraJavaOptions`<br/>

+ `spark.driver.extraLibraryPath`<br/>

+ `spark.driver.resource.*`<br/>

+ `spark.pyspark.driver.python`<br/>

+ `spark.pyspark.python`<br/>

+ `spark.r.shell.command`<br/>

+ `spark.launcher.childProcLoggerName`<br/>

+ `spark.launcher.childConnectionTimeout`<br/>

+ `spark.yarn.driver.*`

+ </td>

+</tr>

+<tr>

+ <td><code>Application Deploy Related Configuration</code></td>

+ <td>Effect before start SparkContext.</td>

+ <td>

+ Like "spark.master", "spark.executor.instances", this kind of properties may not

+ be affected when setting programmatically through `SparkConf` in runtime after SparkContext has been started,

+ or the behavior is depending on which cluster manager and deploy mode you choose, so it would be suggested to

+ set through configuration file, `spark-submit` command line options, or setting programmatically through `SparkConf`

+ in runtime before start SparkContext.

+ </td>

+ <td>

+

+ </td>

+</tr>

+<tr>

+ <td><code>Spark Runtime Control Related Configuration</code></td>

+ <td>Effect when runtime.</td>

+ <td>

+ Like "spark.task.maxFailures", this kind of properties can be set in either way.

Review comment:

Done

##########

File path: docs/configuration.md

##########

@@ -114,12 +114,61 @@ in the `spark-defaults.conf` file. A few configuration keys have been renamed si

versions of Spark; in such cases, the older key names are still accepted, but take lower

precedence than any instance of the newer key.

-Spark properties mainly can be divided into two kinds: one is related to deploy, like

-"spark.driver.memory", "spark.executor.instances", this kind of properties may not be affected when

-setting programmatically through `SparkConf` in runtime, or the behavior is depending on which

-cluster manager and deploy mode you choose, so it would be suggested to set through configuration

-file or `spark-submit` command line options; another is mainly related to Spark runtime control,

-like "spark.task.maxFailures", this kind of properties can be set in either way.

+Spark properties mainly can be divided into three kinds:

+<table class="table">

+<tr><th>Configuration Type</th><th>Effect Scope</th><th>Usage</th><th>Remark</th></tr>

+<tr>

+ <td><code>Lauch Driver Related Configuration</code></td>

+ <td>Effect before start driver JVM.</td>

+ <td>

+ Configuration used to submit application, such as `spark.driver.memory`, `spark.driver.extraClassPath`,

+ these kind of properties only effect before driver's JVM is started, so it would be suggested to set through

+ configuration file or `spark-submit` command line options.

+ </td>

+ <td>

+ The following is a list of such configurations:<br/>

+ `spark.driver.memory`<br/>

+ `spark.driver.memoryOverhead`<br/>

+ `spark.driver.cores`<br/>

+ `spark.driver.userClassPathFirst`<br/>

+ `spark.driver.extraClassPath`<br/>

+ `spark.driver.defaultJavaOptions`<br/>

+ `spark.driver.extraJavaOptions`<br/>

Review comment:

Done

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AngersZhuuuu commented on a change in pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

AngersZhuuuu commented on a change in pull request #31598:

URL: https://github.com/apache/spark/pull/31598#discussion_r586168932

##########

File path: docs/configuration.md

##########

@@ -114,12 +114,52 @@ in the `spark-defaults.conf` file. A few configuration keys have been renamed si

versions of Spark; in such cases, the older key names are still accepted, but take lower

precedence than any instance of the newer key.

-Spark properties mainly can be divided into two kinds: one is related to deploy, like

-"spark.driver.memory", "spark.executor.instances", this kind of properties may not be affected when

-setting programmatically through `SparkConf` in runtime, or the behavior is depending on which

-cluster manager and deploy mode you choose, so it would be suggested to set through configuration

-file or `spark-submit` command line options; another is mainly related to Spark runtime control,

-like "spark.task.maxFailures", this kind of properties can be set in either way.

+Note that Spark properties have different effective timing and they can be divided into two kinds:

+<table class="table">

+<tr><th>Configuration Type</th><th>Meaning</th><th>Examples</th></tr>

Review comment:

> I'm not good at naming. Basically there are 3 effective timing:

>

> 1. when launching the JVM

> 2. when building the spark context

> 3. completely dynamic (sql session configs)

So here should I add back the third type configuration? Since I have remove that section https://github.com/apache/spark/pull/31598#discussion_r584449917

----------------------------------------------------------------

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on pull request #31598: [SPARK-34478][SQL] When build SparkSession, we should check config keys

Posted by GitBox <gi...@apache.org>.

AmplabJenkins removed a comment on pull request #31598:

URL: https://github.com/apache/spark/pull/31598#issuecomment-787658567