Posted to commits@zeppelin.apache.org by zj...@apache.org on 2020/06/28 08:49:35 UTC

[zeppelin] branch master updated: [ZEPPELIN-4868]. Display hive job execution info when running hive sql in jdbc interpreter

This is an automated email from the ASF dual-hosted git repository.

zjffdu pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/zeppelin.git

The following commit(s) were added to refs/heads/master by this push:

new e331981 [ZEPPELIN-4868]. Display hive job execution info when running hive sql in jdbc interpreter

e331981 is described below

commit e331981446b1de32d2d89c332f33f5429ba67d50

Author: Jeff Zhang <zj...@apache.org>

AuthorDate: Tue Jun 9 13:46:51 2020 +0800

[ZEPPELIN-4868]. Display hive job execution info when running hive sql in jdbc interpreter

### What is this PR for?

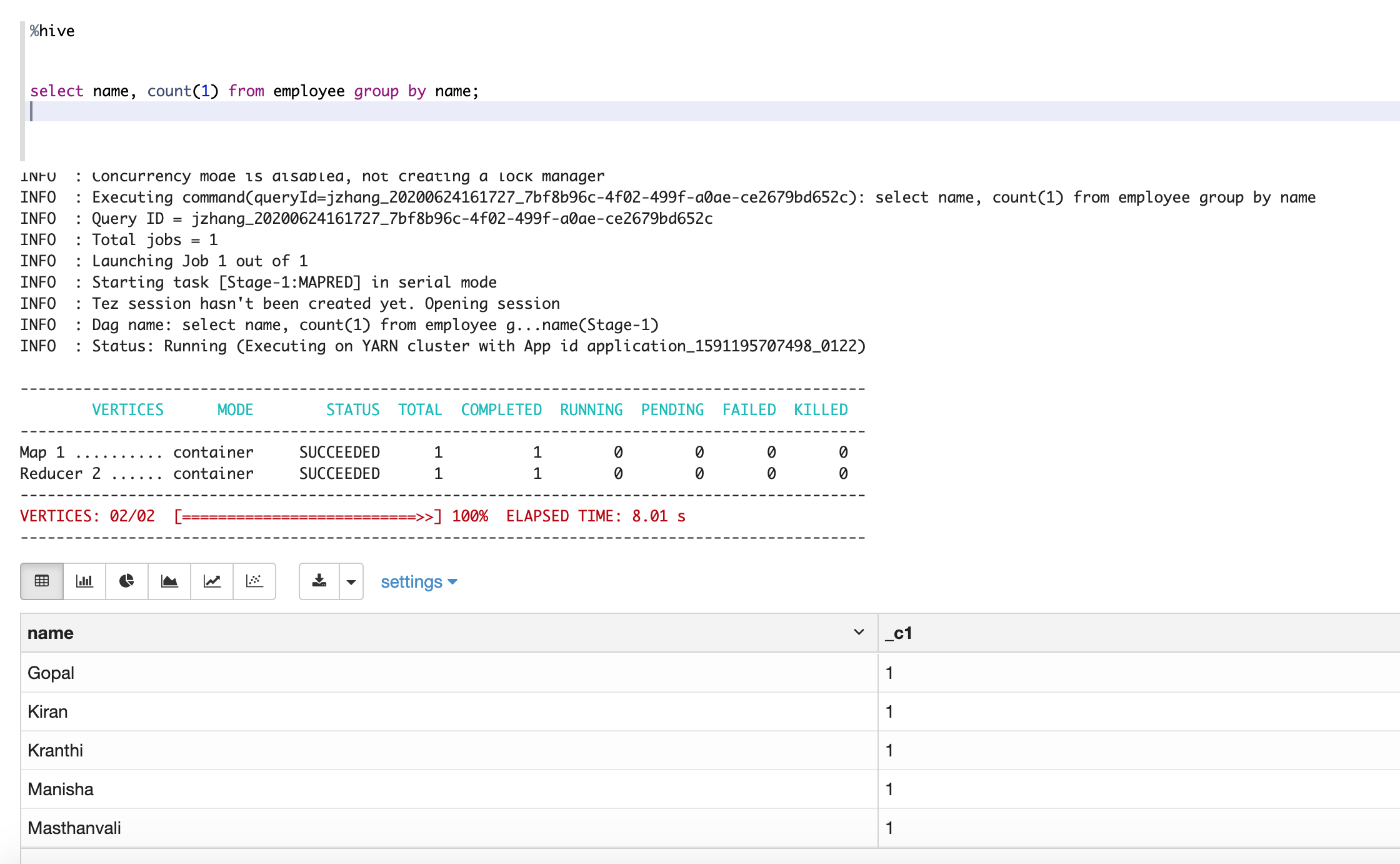

This PR adds support for displaying Hive job execution info when Hive queries run in the JDBC interpreter. Hive jobs usually take a long time, and running them without any progress info is confusing for users. This PR prints the execution log and shows a progress bar if Hive uses Tez.
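The log display relies on a background thread that polls the running statement for new log lines. The following is a minimal, self-contained sketch of that polling pattern only, not the code from this commit. `QueryLogSource`, `LogMonitorSketch`, `fixedSource` and `collect` are hypothetical names introduced for illustration; the interface mirrors the two `HiveStatement` calls the commit uses (`hasMoreLogs()` and `getQueryLog()`), so the sketch runs without a Hive dependency.

```java
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;

// Hypothetical stand-in for the two HiveStatement calls used by the monitor thread.
interface QueryLogSource {
  boolean hasMoreLogs();
  List<String> getQueryLog() throws Exception;
}

class LogMonitorSketch {

  // Polls the source on a daemon thread and appends each non-blank batch to sink.
  static Thread startMonitor(QueryLogSource source, StringBuilder sink, long intervalMillis) {
    Thread thread = new Thread(() -> {
      while (source.hasMoreLogs() && !Thread.currentThread().isInterrupted()) {
        try {
          String batch = String.join(System.lineSeparator(), source.getQueryLog());
          if (!batch.trim().isEmpty()) {
            synchronized (sink) {
              sink.append(batch).append('\n');
            }
          }
          Thread.sleep(intervalMillis);
        } catch (InterruptedException e) {
          // Restore the interrupt flag so the loop condition ends the thread.
          Thread.currentThread().interrupt();
        } catch (Exception e) {
          System.err.println("Failed to fetch logs: " + e);
        }
      }
    });
    thread.setName("LogMonitor-Sketch");
    thread.setDaemon(true);
    thread.start();
    return thread;
  }

  // Fake source that serves a fixed list of log batches, one batch per poll.
  static QueryLogSource fixedSource(List<List<String>> batches) {
    Iterator<List<String>> it = batches.iterator();
    return new QueryLogSource() {
      public boolean hasMoreLogs() {
        return it.hasNext();
      }

      public List<String> getQueryLog() {
        return it.next();
      }
    };
  }

  // Runs the monitor until the source is drained and returns everything it wrote.
  static String collect(QueryLogSource source, long intervalMillis) {
    StringBuilder sink = new StringBuilder();
    Thread thread = startMonitor(source, sink, intervalMillis);
    try {
      thread.join();
    } catch (InterruptedException e) {
      Thread.currentThread().interrupt();
      throw new IllegalStateException("Interrupted while waiting for the monitor", e);
    }
    synchronized (sink) {
      return sink.toString();
    }
  }

  public static void main(String[] args) {
    String output = collect(fixedSource(Arrays.asList(
        Arrays.asList("INFO : Compiling command"),
        Arrays.asList("INFO : Starting Job"))), 10L);
    System.out.print(output);
  }
}
```

One design choice in this sketch differs from the commit: `InterruptedException` is caught separately and the interrupt flag is restored, so an interrupt that arrives during the sleep stops the loop instead of being logged and ignored.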

### What type of PR is it?

[ Feature ]

### Todos

* [ ] - Task

### What is the Jira issue?

* https://issues.apache.org/jira/browse/ZEPPELIN-4868

### How should this be tested?

* A unit test is added and the change was also tested manually; see the screenshot.
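For a quick check outside the Zeppelin build, the tracking-URL extraction can be exercised on its own. The sketch below copies the regular expression from `HiveUtils` in this commit into a hypothetical standalone class, `JobUrlSketch`; the sample log lines are shortened, made-up values. It also covers the case where a log batch contains no tracking URL.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class JobUrlSketch {
  // Same pattern as in the diff: DOTALL lets ".*" span the newlines of a log batch.
  private static final Pattern JOBURL_PATTERN =
      Pattern.compile(".*Tracking URL = (\\S*).*", Pattern.DOTALL);

  // Returns the URL that follows "Tracking URL = ", or empty if the batch has none.
  static Optional<String> extractJobURL(String log) {
    Matcher matcher = JOBURL_PATTERN.matcher(log);
    return matcher.matches() ? Optional.of(matcher.group(1)) : Optional.empty();
  }

  public static void main(String[] args) {
    String withUrl =
        "INFO : Starting Job = job_1, Tracking URL = http://localhost:8088/proxy/application_1/\n"
        + "INFO : Kill Command = hadoop job -kill job_1";
    System.out.println(extractJobURL(withUrl).orElse("<none>"));
    System.out.println(extractJobURL("INFO : Compiling command").orElse("<none>"));
  }
}
```

Because the leading `.*` is greedy, a batch that contains several `Tracking URL = ` entries yields the last one.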

### Screenshots (if appropriate)

### Questions:

* Do the license files need an update? No

* Are there breaking changes for older versions? No

* Does this need documentation? No

Author: Jeff Zhang <zj...@apache.org>

Closes #3823 from zjffdu/ZEPPELIN-4868 and squashes the following commits:

c9a512c8a [Jeff Zhang] [ZEPPELIN-4868]. Display hive job execution info when running hive sql in jdbc interpreter

---

jdbc/pom.xml | 90 +++++++-------

.../org/apache/zeppelin/jdbc/JDBCInterpreter.java | 7 ++

.../jdbc/hive/BeelineInPlaceUpdateStream.java | 113 ++++++++++++++++++

.../org/apache/zeppelin/jdbc/hive/HiveUtils.java | 129 +++++++++++++++++++++

.../org/apache/zeppelin/jdbc/hive/ProgressBar.java | 44 +++++++

.../apache/zeppelin/jdbc/JDBCInterpreterTest.java | 62 +++++-----

.../apache/zeppelin/jdbc/hive/HiveUtilsTest.java | 41 +++++++

.../zeppelin/interpreter/InterpreterContext.java | 4 +

8 files changed, 406 insertions(+), 84 deletions(-)

diff --git a/jdbc/pom.xml b/jdbc/pom.xml

index 8e7ab85..58e2ebd 100644

--- a/jdbc/pom.xml

+++ b/jdbc/pom.xml

@@ -40,6 +40,7 @@

<hadoop.common.version>${hadoop2.7.version}</hadoop.common.version>

<h2.version>1.4.190</h2.version>

<commons.dbcp2.version>2.0.1</commons.dbcp2.version>

+ <hive2.version>2.3.4</hive2.version>

<!--test library versions-->

<mockrunner.jdbc.version>1.0.8</mockrunner.jdbc.version>

@@ -137,7 +138,44 @@

</exclusion>

</exclusions>

</dependency>

-

+

+ <dependency>

+ <groupId>org.apache.hive</groupId>

+ <artifactId>hive-jdbc</artifactId>

+ <version>${hive2.version}</version>

+ <scope>provided</scope>

+ <exclusions>

+ <exclusion>

+ <groupId>org.apache.hadoop</groupId>

+ <artifactId>hadoop-common</artifactId>

+ </exclusion>

+ <exclusion>

+ <groupId>org.apache.hadoop</groupId>

+ <artifactId>hadoop-auth</artifactId>

+ </exclusion>

+ <exclusion>

+ <groupId>org.apache.httpcomponents</groupId>

+ <artifactId>httpcore</artifactId>

+ </exclusion>

+ <exclusion>

+ <groupId>org.apache.httpcomponents</groupId>

+ <artifactId>httpclient</artifactId>

+ </exclusion>

+ </exclusions>

+ </dependency>

+

+ <dependency>

+ <groupId>org.apache.httpcomponents</groupId>

+ <artifactId>httpcore</artifactId>

+ <version>4.4.1</version>

+ <scope>provided</scope>

+ </dependency>

+ <dependency>

+ <groupId>org.apache.httpcomponents</groupId>

+ <artifactId>httpclient</artifactId>

+ <version>4.4.1</version>

+ </dependency>

+

<dependency>

<groupId>net.jodah</groupId>

<artifactId>concurrentunit</artifactId>

@@ -172,56 +210,6 @@

</build>

<profiles>

- <profile>

- <id>jdbc-hive</id>

- <properties>

- <hive.version>1.2.1</hive.version>

- <hive2.version>2.1.0</hive2.version>

- </properties>

-

- <dependencies>

- <dependency>

- <groupId>org.apache.hive</groupId>

- <artifactId>hive-jdbc</artifactId>

- <version>${hive.version}</version>

- <exclusions>

- <exclusion>

- <groupId>org.apache.hadoop</groupId>

- <artifactId>hadoop-common</artifactId>

- </exclusion>

- <exclusion>

- <groupId>org.apache.hadoop</groupId>

- <artifactId>hadoop-auth</artifactId>

- </exclusion>

- <exclusion>

- <groupId>org.apache.httpcomponents</groupId>

- <artifactId>httpcore</artifactId>

- </exclusion>

- <exclusion>

- <groupId>org.apache.httpcomponents</groupId>

- <artifactId>httpclient</artifactId>

- </exclusion>

- </exclusions>

- </dependency>

-

- <dependency>

- <groupId>org.apache.httpcomponents</groupId>

- <artifactId>httpcore</artifactId>

- <version>4.4.1</version>

- </dependency>

- <dependency>

- <groupId>org.apache.httpcomponents</groupId>

- <artifactId>httpclient</artifactId>

- <version>4.4.1</version>

- </dependency>

-

- <dependency>

- <groupId>org.apache.hive.shims</groupId>

- <artifactId>hive-shims-0.23</artifactId>

- <version>${hive2.version}</version>

- </dependency>

- </dependencies>

- </profile>

<profile>

<id>jdbc-phoenix</id>

diff --git a/jdbc/src/main/java/org/apache/zeppelin/jdbc/JDBCInterpreter.java b/jdbc/src/main/java/org/apache/zeppelin/jdbc/JDBCInterpreter.java

index 8605d8b..bdda696 100644

--- a/jdbc/src/main/java/org/apache/zeppelin/jdbc/JDBCInterpreter.java

+++ b/jdbc/src/main/java/org/apache/zeppelin/jdbc/JDBCInterpreter.java

@@ -34,6 +34,7 @@ import org.apache.hadoop.security.alias.CredentialProviderFactory;

import org.apache.zeppelin.interpreter.SingleRowInterpreterResult;

import org.apache.zeppelin.interpreter.ZeppelinContext;

import org.apache.zeppelin.interpreter.util.SqlSplitter;

+import org.apache.zeppelin.jdbc.hive.HiveUtils;

import org.apache.zeppelin.tabledata.TableDataUtils;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

@@ -711,6 +712,12 @@ public class JDBCInterpreter extends KerberosInterpreter {

statement.execute(statementPrecode);

}

+ // start hive monitor thread if it is hive jdbc

+ if (getJDBCConfiguration(user).getPropertyMap(propertyKey).getProperty(URL_KEY)

+ .startsWith("jdbc:hive2://")) {

+ HiveUtils.startHiveMonitorThread(statement, context,

+ Boolean.parseBoolean(getProperty("hive.log.display", "true")));

+ }

boolean isResultSetAvailable = statement.execute(sqlToExecute);

getJDBCConfiguration(user).setConnectionInDBDriverPoolSuccessful(propertyKey);

if (isResultSetAvailable) {

diff --git a/jdbc/src/main/java/org/apache/zeppelin/jdbc/hive/BeelineInPlaceUpdateStream.java b/jdbc/src/main/java/org/apache/zeppelin/jdbc/hive/BeelineInPlaceUpdateStream.java

new file mode 100644

index 0000000..cd7cbd1

--- /dev/null

+++ b/jdbc/src/main/java/org/apache/zeppelin/jdbc/hive/BeelineInPlaceUpdateStream.java

@@ -0,0 +1,113 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more contributor license

+ * agreements. See the NOTICE file distributed with this work for additional information regarding

+ * copyright ownership. The ASF licenses this file to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance with the License. You may obtain a

+ * copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software distributed under the License

+ * is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express

+ * or implied. See the License for the specific language governing permissions and limitations under

+ * the License.

+ */

+

+package org.apache.zeppelin.jdbc.hive;

+

+

+import org.apache.hadoop.hive.common.log.InPlaceUpdate;

+import org.apache.hadoop.hive.common.log.ProgressMonitor;

+import org.apache.hive.jdbc.logs.InPlaceUpdateStream;

+import org.apache.hive.service.rpc.thrift.TJobExecutionStatus;

+import org.apache.hive.service.rpc.thrift.TProgressUpdateResp;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.io.PrintStream;

+import java.util.List;

+

+/**

+ * This class is copied from hive project. It is for the purpose of print job progress.

+ */

+public class BeelineInPlaceUpdateStream implements InPlaceUpdateStream {

+ private static final Logger LOGGER = LoggerFactory.getLogger(BeelineInPlaceUpdateStream.class);

+

+ private InPlaceUpdate inPlaceUpdate;

+ private EventNotifier notifier;

+

+ public BeelineInPlaceUpdateStream(PrintStream out,

+ InPlaceUpdateStream.EventNotifier notifier) {

+ this.inPlaceUpdate = new InPlaceUpdate(out);

+ this.notifier = notifier;

+ }

+

+ @Override

+ public void update(TProgressUpdateResp response) {

+ if (response == null || response.getStatus().equals(TJobExecutionStatus.NOT_AVAILABLE)) {

+ /*

+ we set it to completed if there is nothing the server has to report

+ for example, DDL statements

+ */

+ notifier.progressBarCompleted();

+ } else if (notifier.isOperationLogUpdatedAtLeastOnce()) {

+ /*

+ try to render in place update progress bar only if the operations logs is update at

+ least once

+ as this will hopefully allow printing the metadata information like query id,

+ application id

+ etc. have to remove these notifiers when the operation logs get merged into

+ GetOperationStatus

+ */

+ LOGGER.info("update progress: " + response.getProgressedPercentage());

+ inPlaceUpdate.render(new ProgressMonitorWrapper(response));

+ }

+ }

+

+ @Override

+ public EventNotifier getEventNotifier() {

+ return notifier;

+ }

+

+ static class ProgressMonitorWrapper implements ProgressMonitor {

+ private TProgressUpdateResp response;

+

+ ProgressMonitorWrapper(TProgressUpdateResp response) {

+ this.response = response;

+ }

+

+ @Override

+ public List<String> headers() {

+ return response.getHeaderNames();

+ }

+

+ @Override

+ public List<List<String>> rows() {

+ return response.getRows();

+ }

+

+ @Override

+ public String footerSummary() {

+ return response.getFooterSummary();

+ }

+

+ @Override

+ public long startTime() {

+ return response.getStartTime();

+ }

+

+ @Override

+ public String executionStatus() {

+ throw new UnsupportedOperationException(

+ "This should never be used for anything. All the required data is " +

+ "available via other methods"

+ );

+ }

+

+ @Override

+ public double progressedPercentage() {

+ return response.getProgressedPercentage();

+ }

+ }

+}

+

diff --git a/jdbc/src/main/java/org/apache/zeppelin/jdbc/hive/HiveUtils.java b/jdbc/src/main/java/org/apache/zeppelin/jdbc/hive/HiveUtils.java

new file mode 100644

index 0000000..43844b7

--- /dev/null

+++ b/jdbc/src/main/java/org/apache/zeppelin/jdbc/hive/HiveUtils.java

@@ -0,0 +1,129 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more contributor license

+ * agreements. See the NOTICE file distributed with this work for additional information regarding

+ * copyright ownership. The ASF licenses this file to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance with the License. You may obtain a

+ * copy of the License at

+ * <p>

+ * http://www.apache.org/licenses/LICENSE-2.0

+ * <p>

+ * Unless required by applicable law or agreed to in writing, software distributed under the License

+ * is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express

+ * or implied. See the License for the specific language governing permissions and limitations under

+ * the License.

+ */

+

+package org.apache.zeppelin.jdbc.hive;

+

+import org.apache.commons.dbcp2.DelegatingStatement;

+import org.apache.commons.lang3.StringUtils;

+import org.apache.hive.common.util.HiveVersionInfo;

+import org.apache.hive.jdbc.HiveStatement;

+import org.apache.zeppelin.interpreter.InterpreterContext;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.sql.Statement;

+import java.util.HashMap;

+import java.util.List;

+import java.util.Map;

+import java.util.Optional;

+import java.util.regex.Matcher;

+import java.util.regex.Pattern;

+

+/**

+ * This class include hive specific stuff.

+ * e.g. Display hive job execution info.

+ *

+ */

+public class HiveUtils {

+

+ private static final Logger LOGGER = LoggerFactory.getLogger(HiveUtils.class);

+ private static final int DEFAULT_QUERY_PROGRESS_INTERVAL = 1000;

+

+ private static final Pattern JOBURL_PATTERN =

+ Pattern.compile(".*Tracking URL = (\\S*).*", Pattern.DOTALL);

+

+ /**

+ * Display hive job execution info, and progress info for hive >= 2.3

+ *

+ * @param stmt

+ * @param context

+ * @param displayLog

+ */

+ public static void startHiveMonitorThread(Statement stmt,

+ InterpreterContext context,

+ boolean displayLog) {

+ HiveStatement hiveStmt = (HiveStatement)

+ ((DelegatingStatement) ((DelegatingStatement) stmt).getDelegate()).getDelegate();

+ String hiveVersion = HiveVersionInfo.getVersion();

+ ProgressBar progressBarTemp = null;

+ if (isProgressBarSupported(hiveVersion)) {

+ LOGGER.debug("ProgressBar is supported for hive version: " + hiveVersion);

+ progressBarTemp = new ProgressBar();

+ } else {

+ LOGGER.debug("ProgressBar is not supported for hive version: " + hiveVersion);

+ }

+ // need to use final variable progressBar in thread, so need progressBarTemp here.

+ final ProgressBar progressBar = progressBarTemp;

+

+ Thread thread = new Thread(() -> {

+ while (hiveStmt.hasMoreLogs() && !Thread.interrupted()) {

+ try {

+ List<String> logs = hiveStmt.getQueryLog();

+ String logsOutput = StringUtils.join(logs, System.lineSeparator());

+ LOGGER.debug("Hive job output: " + logsOutput);

+ boolean displayLogProperty = context.getBooleanLocalProperty("displayLog", displayLog);

+ if (!StringUtils.isBlank(logsOutput) && displayLogProperty) {

+ context.out.write(logsOutput + "\n");

+ context.out.flush();

+ }

+ if (!StringUtils.isBlank(logsOutput) && progressBar != null) {

+ progressBar.operationLogShowedToUser();

+ }

+ Optional<String> jobURL = extractJobURL(logsOutput);

+ if (jobURL.isPresent()) {

+ Map<String, String> infos = new HashMap<>();

+ infos.put("jobUrl", jobURL.get());

+ infos.put("label", "HIVE JOB");

+ infos.put("tooltip", "View in YARN WEB UI");

+ infos.put("noteId", context.getNoteId());

+ infos.put("paraId", context.getParagraphId());

+ context.getIntpEventClient().onParaInfosReceived(infos);

+ }

+ // refresh logs every 1 second.

+ Thread.sleep(DEFAULT_QUERY_PROGRESS_INTERVAL);

+ } catch (Exception e) {

+ LOGGER.warn("Fail to write output", e);

+ }

+ }

+ LOGGER.debug("Hive monitor thread is finished");

+ });

+ thread.setName("HiveMonitor-Thread");

+ thread.setDaemon(true);

+ thread.start();

+ LOGGER.info("Start HiveMonitor-Thread for sql: " + stmt);

+

+ if (progressBar != null) {

+ hiveStmt.setInPlaceUpdateStream(progressBar.getInPlaceUpdateStream(context.out));

+ }

+ }

+

+ // Hive progress bar is supported from hive 2.3 (HIVE-16045)

+ private static boolean isProgressBarSupported(String hiveVersion) {

+ String[] tokens = hiveVersion.split("\\.");

+ int majorVersion = Integer.parseInt(tokens[0]);

+ int minorVersion = Integer.parseInt(tokens[1]);

+ return majorVersion > 2 || ((majorVersion == 2) && minorVersion >= 3);

+ }

+

+ // extract hive job url from logs, it only works for MR engine.

+ static Optional<String> extractJobURL(String log) {

+ Matcher matcher = JOBURL_PATTERN.matcher(log);

+ if (matcher.matches()) {

+ String jobURL = matcher.group(1);

+ return Optional.of(jobURL);

+ }

+ return Optional.empty();

+ }

+}

diff --git a/jdbc/src/main/java/org/apache/zeppelin/jdbc/hive/ProgressBar.java b/jdbc/src/main/java/org/apache/zeppelin/jdbc/hive/ProgressBar.java

new file mode 100644

index 0000000..0cd28be

--- /dev/null

+++ b/jdbc/src/main/java/org/apache/zeppelin/jdbc/hive/ProgressBar.java

@@ -0,0 +1,44 @@

+/**

+ * Licensed to the Apache Software Foundation (ASF) under one or more contributor license

+ * agreements. See the NOTICE file distributed with this work for additional information regarding

+ * copyright ownership. The ASF licenses this file to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance with the License. You may obtain a

+ * copy of the License at

+ * <p>

+ * http://www.apache.org/licenses/LICENSE-2.0

+ * <p>

+ * Unless required by applicable law or agreed to in writing, software distributed under the License

+ * is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express

+ * or implied. See the License for the specific language governing permissions and limitations under

+ * the License.

+ */

+

+

+package org.apache.zeppelin.jdbc.hive;

+

+import org.apache.hive.jdbc.logs.InPlaceUpdateStream;

+

+import java.io.OutputStream;

+import java.io.PrintStream;

+

+/**

+ * This class is only created for hive >= 2.3 where progress bar is supported.

+ */

+public class ProgressBar {

+ private InPlaceUpdateStream.EventNotifier eventNotifier;

+

+ public ProgressBar() {

+ this.eventNotifier = new InPlaceUpdateStream.EventNotifier();

+ }

+

+ public void operationLogShowedToUser() {

+ this.eventNotifier.operationLogShowedToUser();

+ }

+

+ public BeelineInPlaceUpdateStream getInPlaceUpdateStream(OutputStream out) {

+ return new BeelineInPlaceUpdateStream(

+ new PrintStream(out),

+ eventNotifier

+ );

+ }

+}

diff --git a/jdbc/src/test/java/org/apache/zeppelin/jdbc/JDBCInterpreterTest.java b/jdbc/src/test/java/org/apache/zeppelin/jdbc/JDBCInterpreterTest.java

index 125ef9c..f6fe108 100644

--- a/jdbc/src/test/java/org/apache/zeppelin/jdbc/JDBCInterpreterTest.java

+++ b/jdbc/src/test/java/org/apache/zeppelin/jdbc/JDBCInterpreterTest.java

@@ -14,30 +14,23 @@

*/

package org.apache.zeppelin.jdbc;

-import static org.junit.Assert.assertEquals;

-import static org.junit.Assert.assertFalse;

-import static org.junit.Assert.assertNull;

-import static org.junit.Assert.assertTrue;

-

-import static java.lang.String.format;

-

-import static org.apache.zeppelin.jdbc.JDBCInterpreter.COMMON_MAX_LINE;

-import static org.apache.zeppelin.jdbc.JDBCInterpreter.DEFAULT_DRIVER;

-import static org.apache.zeppelin.jdbc.JDBCInterpreter.DEFAULT_PASSWORD;

-import static org.apache.zeppelin.jdbc.JDBCInterpreter.DEFAULT_STATEMENT_PRECODE;

-import static org.apache.zeppelin.jdbc.JDBCInterpreter.DEFAULT_USER;

-import static org.apache.zeppelin.jdbc.JDBCInterpreter.DEFAULT_URL;

-import static org.apache.zeppelin.jdbc.JDBCInterpreter.DEFAULT_PRECODE;

-import static org.apache.zeppelin.jdbc.JDBCInterpreter.PRECODE_KEY_TEMPLATE;

-

+import com.mockrunner.jdbc.BasicJDBCTestCaseAdapter;

import net.jodah.concurrentunit.Waiter;

+import org.apache.zeppelin.completer.CompletionType;

+import org.apache.zeppelin.interpreter.InterpreterContext;

+import org.apache.zeppelin.interpreter.InterpreterException;

import org.apache.zeppelin.interpreter.InterpreterOutput;

+import org.apache.zeppelin.interpreter.InterpreterResult;

import org.apache.zeppelin.interpreter.InterpreterResultMessage;

+import org.apache.zeppelin.interpreter.thrift.InterpreterCompletion;

+import org.apache.zeppelin.scheduler.FIFOScheduler;

+import org.apache.zeppelin.scheduler.ParallelScheduler;

+import org.apache.zeppelin.scheduler.Scheduler;

+import org.apache.zeppelin.user.AuthenticationInfo;

+import org.apache.zeppelin.user.UserCredentials;

+import org.apache.zeppelin.user.UsernamePassword;

import org.junit.Before;

import org.junit.Test;

-import static org.apache.zeppelin.jdbc.JDBCInterpreter.STATEMENT_PRECODE_KEY_TEMPLATE;

-import static org.junit.Assert.fail;

-

import java.io.IOException;

import java.nio.file.Files;

@@ -52,19 +45,21 @@ import java.util.Map;

import java.util.Properties;

import java.util.concurrent.TimeoutException;

-import com.mockrunner.jdbc.BasicJDBCTestCaseAdapter;

-

-import org.apache.zeppelin.completer.CompletionType;

-import org.apache.zeppelin.interpreter.InterpreterContext;

-import org.apache.zeppelin.interpreter.InterpreterException;

-import org.apache.zeppelin.interpreter.InterpreterResult;

-import org.apache.zeppelin.interpreter.thrift.InterpreterCompletion;

-import org.apache.zeppelin.scheduler.FIFOScheduler;

-import org.apache.zeppelin.scheduler.ParallelScheduler;

-import org.apache.zeppelin.scheduler.Scheduler;

-import org.apache.zeppelin.user.AuthenticationInfo;

-import org.apache.zeppelin.user.UserCredentials;

-import org.apache.zeppelin.user.UsernamePassword;

+import static java.lang.String.format;

+import static org.apache.zeppelin.jdbc.JDBCInterpreter.COMMON_MAX_LINE;

+import static org.apache.zeppelin.jdbc.JDBCInterpreter.DEFAULT_DRIVER;

+import static org.apache.zeppelin.jdbc.JDBCInterpreter.DEFAULT_PASSWORD;

+import static org.apache.zeppelin.jdbc.JDBCInterpreter.DEFAULT_PRECODE;

+import static org.apache.zeppelin.jdbc.JDBCInterpreter.DEFAULT_STATEMENT_PRECODE;

+import static org.apache.zeppelin.jdbc.JDBCInterpreter.DEFAULT_URL;

+import static org.apache.zeppelin.jdbc.JDBCInterpreter.DEFAULT_USER;

+import static org.apache.zeppelin.jdbc.JDBCInterpreter.PRECODE_KEY_TEMPLATE;

+import static org.apache.zeppelin.jdbc.JDBCInterpreter.STATEMENT_PRECODE_KEY_TEMPLATE;

+import static org.junit.Assert.assertEquals;

+import static org.junit.Assert.assertFalse;

+import static org.junit.Assert.assertNull;

+import static org.junit.Assert.assertTrue;

+import static org.junit.Assert.fail;

/**

* JDBC interpreter unit tests.

@@ -496,7 +491,8 @@ public class JDBCInterpreterTest extends BasicJDBCTestCaseAdapter {

assertEquals(true, completionList.contains(correctCompletionKeyword));

}

- private Properties getDBProperty(String dbUser, String dbPassowrd) throws IOException {

+ private Properties getDBProperty(String dbUser,

+ String dbPassowrd) throws IOException {

Properties properties = new Properties();

properties.setProperty("common.max_count", "1000");

properties.setProperty("common.max_retry", "3");

diff --git a/jdbc/src/test/java/org/apache/zeppelin/jdbc/hive/HiveUtilsTest.java b/jdbc/src/test/java/org/apache/zeppelin/jdbc/hive/HiveUtilsTest.java

new file mode 100644

index 0000000..f0f6269

--- /dev/null

+++ b/jdbc/src/test/java/org/apache/zeppelin/jdbc/hive/HiveUtilsTest.java

@@ -0,0 +1,41 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.zeppelin.jdbc.hive;

+

+import org.junit.Test;

+

+import java.util.Optional;

+

+import static org.junit.Assert.assertEquals;

+import static org.junit.Assert.assertTrue;

+

+public class HiveUtilsTest {

+

+ @Test

+ public void testJobURL() {

+ Optional<String> jobURL = HiveUtils.extractJobURL(

+ "INFO : The url to track the job: " +

+ "http://localhost:8088/proxy/application_1591195707498_0064/\n" +

+ "INFO : Starting Job = job_1591195707498_0064, " +

+ "Tracking URL = http://localhost:8088/proxy/application_1591195707498_0064/\n" +

+ "INFO : Kill Command = /Users/abc/Java/lib/hadoop-2.7.7/bin/hadoop job " +

+ " -kill job_1591195707498_0064");

+ assertTrue(jobURL.isPresent());

+ assertEquals("http://localhost:8088/proxy/application_1591195707498_0064/", jobURL.get());

+ }

+}

diff --git a/zeppelin-interpreter/src/main/java/org/apache/zeppelin/interpreter/InterpreterContext.java b/zeppelin-interpreter/src/main/java/org/apache/zeppelin/interpreter/InterpreterContext.java

index 7a215ef..2c20806 100644

--- a/zeppelin-interpreter/src/main/java/org/apache/zeppelin/interpreter/InterpreterContext.java

+++ b/zeppelin-interpreter/src/main/java/org/apache/zeppelin/interpreter/InterpreterContext.java

@@ -229,6 +229,10 @@ public class InterpreterContext {

return Double.parseDouble(localProperties.getOrDefault(key, defaultValue + ""));

}

+ public boolean getBooleanLocalProperty(String key, boolean defaultValue) {

+ return Boolean.parseBoolean(localProperties.getOrDefault(key, defaultValue + ""));

+ }

+

public AuthenticationInfo getAuthenticationInfo() {

return authenticationInfo;

}
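
The `isProgressBarSupported` check added in `HiveUtils` splits the version string on dots and parses the first two tokens, so it assumes a plain `major.minor...` string. As a hedged illustration only, here is a more defensive variant that treats an unparsable version as "not supported" instead of throwing. `VersionCheckSketch` is a hypothetical name, and this variant is not part of the commit.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class VersionCheckSketch {
  // Leading "major.minor" of a version string; whatever follows is ignored.
  private static final Pattern MAJOR_MINOR = Pattern.compile("^(\\d{1,4})\\.(\\d{1,4})");

  // Returns true for Hive 2.3 and later, false for older or unparsable versions.
  static boolean isProgressBarSupported(String hiveVersion) {
    if (hiveVersion == null) {
      return false;
    }
    Matcher m = MAJOR_MINOR.matcher(hiveVersion);
    if (!m.find()) {
      return false;
    }
    int major = Integer.parseInt(m.group(1));
    int minor = Integer.parseInt(m.group(2));
    return major > 2 || (major == 2 && minor >= 3);
  }

  public static void main(String[] args) {
    for (String v : new String[] {"1.2.1", "2.1.0", "2.3.4", "2.3-SNAPSHOT", "3.1.2", "Unknown"}) {
      System.out.println(v + " -> " + isProgressBarSupported(v));
    }
  }
}
```

The comparison itself is the same as in the commit (major greater than 2, or major equal to 2 with minor at least 3); only the parsing is more lenient.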