Posted to reviews@spark.apache.org by GitBox <gi...@apache.org> on 2022/09/28 01:54:07 UTC

[GitHub] [spark] yaooqinn opened a new pull request, #38024: [SPARK-40591][SQL] Fix data loss caused by ignoreCorruptFiles

yaooqinn opened a new pull request, #38024:

URL: https://github.com/apache/spark/pull/38024

### What changes were proposed in this pull request?

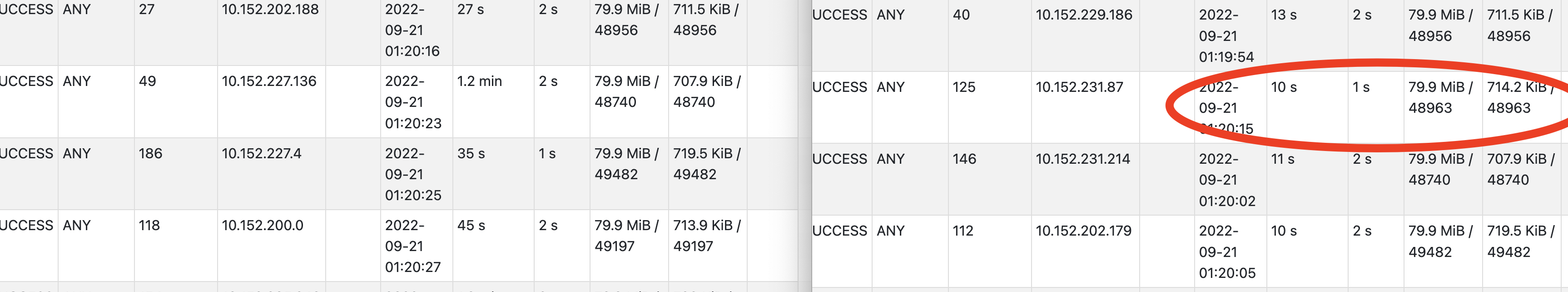

Consider the case in the screenshot: the left and right sides read the same table and the same partitions, both with ignoreCorruptFiles=true. On the right, a task skips part of the data it reads because it encounters 'corrupt data', while on the left the same file is read correctly. Because ignoreCorruptFiles coarsely treats any RuntimeException or IOException as corruption, a skipped file does not always indicate real data corruption.

What's worse, such tasks are always marked as successful on the web UI, so the same query over the same snapshot of data can silently return inconsistent results.
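
To illustrate why the exception type alone is a coarse signal, here is a minimal sketch modelled on the `FileScanRDD` match quoted later in this thread. `ReadDecision`, `classify`, and the exception messages are hypothetical names used only for illustration; they are not Spark APIs.

```scala
import java.io.{FileNotFoundException, IOException}
import java.net.SocketTimeoutException

object CoarseMatchSketch {
  // Hypothetical result type, for illustration only.
  sealed trait ReadDecision
  case object SkipFile extends ReadDecision
  case object Rethrow extends ReadDecision

  def classify(
      e: Throwable,
      ignoreMissingFiles: Boolean,
      ignoreCorruptFiles: Boolean): ReadDecision = e match {
    // Missing files are governed by their own flag, even if ignoreCorruptFiles is true.
    case _: FileNotFoundException =>
      if (ignoreMissingFiles) SkipFile else Rethrow
    // Any other RuntimeException or IOException is treated as "corruption".
    case _: RuntimeException | _: IOException if ignoreCorruptFiles => SkipFile
    case _ => Rethrow
  }

  // A network timeout (likely transient) and a made-up checksum error
  // (likely permanent) are both IOExceptions, so the match cannot tell them apart.
  val transientError: Throwable = new SocketTimeoutException("read timed out")
  val permanentError: Throwable = new IOException("checksum mismatch")
}
```

With `ignoreCorruptFiles = true`, both `transientError` and `permanentError` map to `SkipFile`, which is the situation the description above calls silent data loss.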

In this PR, ignoreCorruptFiles works together with taskAttemptNumber: only the last attempt ignores the possibly corrupt file. Users may want fewer retries to avoid performance regressions, so ignoreCorruptFilesAfterRetries is introduced, which can be set to less than `spark.task.maxFailures`.
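
The attempt-aware check can be sketched roughly as follows. This is not the code from the PR: `shouldSkipCorruptFiles` is written here as a standalone function, its parameters are hypothetical, and the exact comparison against a zero-based attempt number (as returned by `TaskContext.attemptNumber()`) is an assumption.

```scala
object AttemptAwareSkipSketch {
  // attemptNumber: zero-based attempt index of the running task.
  // retriesBeforeIgnore: stands in for the proposed ignoreCorruptFilesAfterRetries
  // value (assumed semantics: failed attempts to allow before a file may be skipped).
  def shouldSkipCorruptFiles(
      ignoreCorruptFiles: Boolean,
      attemptNumber: Int,
      retriesBeforeIgnore: Int): Boolean =
    ignoreCorruptFiles && attemptNumber >= retriesBeforeIgnore
}
```

Under these assumptions, with `spark.task.maxFailures` set to 4 and `retriesBeforeIgnore = 3`, attempts 0 to 2 rethrow the error and fail visibly, and only attempt 3 skips the file. A smaller value such as 1 gives up after a single failed attempt.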

### Why are the changes needed?

Fix data loss.

Also, the UI now shows failed tasks for both genuine and spurious data corruption, which helps with bug hunting.

### Does this PR introduce _any_ user-facing change?

No, it's a bug fix (apart from the possible UI change mentioned above).

### How was this patch tested?

Tested locally and with the existing tests for ignoreCorruptFiles.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] srowen commented on a diff in pull request #38024: [SPARK-40591][CORE][SQL] Fix data loss caused by ignoreCorruptFiles

Posted by GitBox <gi...@apache.org>.

srowen commented on code in PR #38024:

URL: https://github.com/apache/spark/pull/38024#discussion_r982356825

##########

core/src/main/scala/org/apache/spark/internal/config/package.scala:

##########

@@ -1078,6 +1078,13 @@ package object config {

.booleanConf

.createWithDefault(false)

+ private[spark] val IGNORE_CORRUPT_FILES_AFTER_RETIES =

Review Comment:

Do we really need another config?

I'm sorta confused, isn't the file effectively 'corrupt' in this case, and you have asked to ignore that?

[GitHub] [spark] yaooqinn commented on a diff in pull request #38024: [SPARK-40591][CORE][SQL] Fix data loss caused by ignoreCorruptFiles

Posted by GitBox <gi...@apache.org>.

yaooqinn commented on code in PR #38024:

URL: https://github.com/apache/spark/pull/38024#discussion_r983005641

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/v2/FilePartitionReader.scala:

##########

@@ -36,8 +36,15 @@ class FilePartitionReader[T](

private def ignoreMissingFiles = options.ignoreMissingFiles

private def ignoreCorruptFiles = options.ignoreCorruptFiles

+ private def ignoreCorruptFilesAfterRetries = options.ignoreCorruptFilesAfterRetries

override def next(): Boolean = {

+

+ def shouldSkipCorruptFiles(): Boolean = {

Review Comment:

In the PR description, I showed a case where the same query reads the same copy of the data at the same time; one run hits 'corrupt' data and the other succeeds. This means that some of the errors we see as IOE are not genuine data/file corruption. If we ignore them, IMHO, it is a correctness issue. Also, the config ignoreCorruptFiles says that it ignores corrupt files, not errors. For the case where the data contains many genuinely corrupt files, i.e. the error is unrecoverable, I added the RETIES conf, separate from `spark.task.maxFailures`, to limit the number of retries.

BTW, I have checked the ORC read path; it seems to wrap all errors in IOE.

[GitHub] [spark] mridulm commented on pull request #38024: [SPARK-40591][CORE][SQL] Fix data loss caused by ignoreCorruptFiles

Posted by GitBox <gi...@apache.org>.

mridulm commented on PR #38024:

URL: https://github.com/apache/spark/pull/38024#issuecomment-1260859431

I am a bit confused about what this PR is trying to do.

If we want to ignore corrupt files, then by definition failures will be ignored and tasks will be marked successful, because that is what the config is for.

[GitHub] [spark] wayneguow commented on a diff in pull request #38024: [SPARK-40591][SQL] Fix data loss caused by ignoreCorruptFiles

Posted by GitBox <gi...@apache.org>.

wayneguow commented on code in PR #38024:

URL: https://github.com/apache/spark/pull/38024#discussion_r981991786

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/v2/FilePartitionReader.scala:

##########

@@ -36,8 +36,15 @@ class FilePartitionReader[T](

private def ignoreMissingFiles = options.ignoreMissingFiles

private def ignoreCorruptFiles = options.ignoreCorruptFiles

+ private def ignoreCorruptFilesAfterRetries = options.ignoreCorruptFilesAfterRetries

override def next(): Boolean = {

+

+ def shouldSkipCorruptFiles(): Boolean = {

Review Comment:

If the network is unreachable or the file storage is temporarily down for a period of time, a task that has been retried beyond TASK_MAX_FAILURES would still cause data loss.

[GitHub] [spark] github-actions[bot] closed pull request #38024: [SPARK-40591][CORE][SQL] Fix data loss caused by ignoreCorruptFiles

Posted by GitBox <gi...@apache.org>.

github-actions[bot] closed pull request #38024: [SPARK-40591][CORE][SQL] Fix data loss caused by ignoreCorruptFiles

URL: https://github.com/apache/spark/pull/38024

[GitHub] [spark] yaooqinn commented on a diff in pull request #38024: [SPARK-40591][CORE][SQL] Fix data loss caused by ignoreCorruptFiles

Posted by GitBox <gi...@apache.org>.

yaooqinn commented on code in PR #38024:

URL: https://github.com/apache/spark/pull/38024#discussion_r982991812

##########

core/src/main/scala/org/apache/spark/internal/config/package.scala:

##########

@@ -1078,6 +1078,13 @@ package object config {

.booleanConf

.createWithDefault(false)

+ private[spark] val IGNORE_CORRUPT_FILES_AFTER_RETIES =

Review Comment:

> isn't the file effectively 'corrupt' in this case.

It isn't. An IOE could be a temporary failure.

[GitHub] [spark] yaooqinn commented on pull request #38024: [SPARK-40591][SQL] Fix data loss caused by ignoreCorruptFiles

Posted by GitBox <gi...@apache.org>.

yaooqinn commented on PR #38024:

URL: https://github.com/apache/spark/pull/38024#issuecomment-1260291484

cc @cloud-fan @dongjoon-hyun @HyukjinKwon @wangyum thanks.

[GitHub] [spark] srowen commented on a diff in pull request #38024: [SPARK-40591][CORE][SQL] Fix data loss caused by ignoreCorruptFiles

Posted by GitBox <gi...@apache.org>.

srowen commented on code in PR #38024:

URL: https://github.com/apache/spark/pull/38024#discussion_r983008731

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/v2/FilePartitionReader.scala:

##########

@@ -36,8 +36,15 @@ class FilePartitionReader[T](

private def ignoreMissingFiles = options.ignoreMissingFiles

private def ignoreCorruptFiles = options.ignoreCorruptFiles

+ private def ignoreCorruptFilesAfterRetries = options.ignoreCorruptFilesAfterRetries

override def next(): Boolean = {

+

+ def shouldSkipCorruptFiles(): Boolean = {

Review Comment:

Well, some kind of file corruption is just one reason for an error, and surely the config is meant to denote any inability to correctly read data. You're really distinguishing between transient and permanent failures, but, IOException doesn't tell you that.

It's not a correctness issue, as you've already said you're willing to ignore data that can't be read (otherwise, don't specify this, right?). Now that's being replaced with "data that can't be read if I try N times" which isn't fundamentally different.

Or I could spin a different argument: if the data can't be read 2 times, and is read a 3rd time, are you OK with that being correct?

[GitHub] [spark] yaooqinn commented on a diff in pull request #38024: [SPARK-40591][SQL] Fix data loss caused by ignoreCorruptFiles

Posted by GitBox <gi...@apache.org>.

yaooqinn commented on code in PR #38024:

URL: https://github.com/apache/spark/pull/38024#discussion_r982003775

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/v2/FilePartitionReader.scala:

##########

@@ -36,8 +36,15 @@ class FilePartitionReader[T](

private def ignoreMissingFiles = options.ignoreMissingFiles

private def ignoreCorruptFiles = options.ignoreCorruptFiles

+ private def ignoreCorruptFilesAfterRetries = options.ignoreCorruptFilesAfterRetries

override def next(): Boolean = {

+

+ def shouldSkipCorruptFiles(): Boolean = {

Review Comment:

Yes, but compared to the behavior without this PR, this case is exposed to users instead of tasks being silently marked as successful.

[GitHub] [spark] github-actions[bot] commented on pull request #38024: [SPARK-40591][CORE][SQL] Fix data loss caused by ignoreCorruptFiles

Posted by GitBox <gi...@apache.org>.

github-actions[bot] commented on PR #38024:

URL: https://github.com/apache/spark/pull/38024#issuecomment-1382601668

We're closing this PR because it hasn't been updated in a while. This isn't a judgement on the merit of the PR in any way. It's just a way of keeping the PR queue manageable.

If you'd like to revive this PR, please reopen it and ask a committer to remove the Stale tag!

[GitHub] [spark] LuciferYang commented on a diff in pull request #38024: [SPARK-40591][SQL] Fix data loss caused by ignoreCorruptFiles

Posted by GitBox <gi...@apache.org>.

LuciferYang commented on code in PR #38024:

URL: https://github.com/apache/spark/pull/38024#discussion_r981889549

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileScanRDD.scala:

##########

@@ -253,7 +259,7 @@ class FileScanRDD(

null

// Throw FileNotFoundException even if `ignoreCorruptFiles` is true

case e: FileNotFoundException if !ignoreMissingFiles => throw e

- case e @ (_: RuntimeException | _: IOException) if ignoreCorruptFiles =>

+ case e @ (_: RuntimeException | _: IOException) if shouldSkipCorruptFiles() =>

Review Comment:

`HadoopRDD` and `NewHadoopRDD` also use `ignoreCorruptFiles` although they are not sql apis, do they need similar changes?

[GitHub] [spark] yaooqinn commented on a diff in pull request #38024: [SPARK-40591][SQL] Fix data loss caused by ignoreCorruptFiles

Posted by GitBox <gi...@apache.org>.

yaooqinn commented on code in PR #38024:

URL: https://github.com/apache/spark/pull/38024#discussion_r981911877

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileScanRDD.scala:

##########

@@ -253,7 +259,7 @@ class FileScanRDD(

null

// Throw FileNotFoundException even if `ignoreCorruptFiles` is true

case e: FileNotFoundException if !ignoreMissingFiles => throw e

- case e @ (_: RuntimeException | _: IOException) if ignoreCorruptFiles =>

+ case e @ (_: RuntimeException | _: IOException) if shouldSkipCorruptFiles() =>

Review Comment:

Yes, I planned to fix them in another PR because they are in different modules, but I am OK with fixing them together.

[GitHub] [spark] srowen commented on a diff in pull request #38024: [SPARK-40591][CORE][SQL] Fix data loss caused by ignoreCorruptFiles

Posted by GitBox <gi...@apache.org>.

srowen commented on code in PR #38024:

URL: https://github.com/apache/spark/pull/38024#discussion_r982994356

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/v2/FilePartitionReader.scala:

##########

@@ -36,8 +36,15 @@ class FilePartitionReader[T](

private def ignoreMissingFiles = options.ignoreMissingFiles

private def ignoreCorruptFiles = options.ignoreCorruptFiles

+ private def ignoreCorruptFilesAfterRetries = options.ignoreCorruptFilesAfterRetries

override def next(): Boolean = {

+

+ def shouldSkipCorruptFiles(): Boolean = {

Review Comment:

Are you effectively trying to get it to fail and retry on an IOE, rather than not retry because it says, well, I'm ignoring errors anyway? OK. I'm just wondering how many cases that hurts too, where you retry an unrecoverable error longer.

[GitHub] [spark] yaooqinn commented on a diff in pull request #38024: [SPARK-40591][CORE][SQL] Fix data loss caused by ignoreCorruptFiles

Posted by GitBox <gi...@apache.org>.

yaooqinn commented on code in PR #38024:

URL: https://github.com/apache/spark/pull/38024#discussion_r983019509

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/v2/FilePartitionReader.scala:

##########

@@ -36,8 +36,15 @@ class FilePartitionReader[T](

private def ignoreMissingFiles = options.ignoreMissingFiles

private def ignoreCorruptFiles = options.ignoreCorruptFiles

+ private def ignoreCorruptFilesAfterRetries = options.ignoreCorruptFilesAfterRetries

override def next(): Boolean = {

+

+ def shouldSkipCorruptFiles(): Boolean = {

Review Comment:

> [DOCS] Whether to ignore corrupt files. If true, the Spark jobs will continue to run when encountering corrupted files and the contents that have been read will still be returned. This configuration is effective only when using file-based sources such as Parquet, JSON and ORC.

FYI, the doc says that it ignores corrupt files, so it is corrupt files that we are willing to ignore, right? For transient failures such as a network issue, we want to retry, because the file is not corrupt. Anyway, as you said, we cannot distinguish whether a failure is transient or permanent, so in this PR I retry both, with a limit.

> Or I could spin a different argument: if the data can't be read 2 times, and is read a 3rd time, are you OK with that being correct?

For this case, in this PR, we can set ignoreCorruptFilesAfterRetries greater than the maximum task failures and let the task `fail` after retrying enough times. Or maybe we should rename it to `determineFileCorruptAfterRetries`.
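
The trade-offs discussed in this thread can be made concrete with a small simulation. It is a simplified model, not Spark's scheduler: it assumes one file per task, zero-based attempt numbers, and a read error that persists for a fixed number of consecutive attempts. `run`, `Outcome`, and all parameter names are hypothetical.

```scala
import scala.annotation.tailrec

object RetrySimulationSketch {
  sealed trait Outcome
  case object ReadAllData extends Outcome // some attempt read the file successfully
  case object SkippedFile extends Outcome // task "succeeded" but dropped the file
  case object JobFailed extends Outcome   // every allowed attempt failed visibly

  // failingAttempts: number of consecutive attempts (from attempt 0) that hit a read error.
  // maxFailures: stands in for spark.task.maxFailures.
  // retriesBeforeIgnore: stands in for the proposed ignoreCorruptFilesAfterRetries.
  def run(failingAttempts: Int, maxFailures: Int, retriesBeforeIgnore: Int): Outcome = {
    @tailrec
    def loop(attempt: Int): Outcome =
      if (attempt >= maxFailures) JobFailed
      else if (attempt >= failingAttempts) ReadAllData
      else if (attempt >= retriesBeforeIgnore) SkippedFile
      else loop(attempt + 1) // attempt fails and is retried
    loop(0)
  }
}
```

In this model, `retriesBeforeIgnore = 0` corresponds to the behavior without the PR: a transient error on the first attempt already yields `SkippedFile`. With a threshold of 3 and `maxFailures = 4`, an error that clears after two attempts yields `ReadAllData`, an error that never clears yields `SkippedFile` on the last attempt, and a threshold above `maxFailures` turns that last case into `JobFailed`.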
