Posted to issues@kylin.apache.org by GitBox <gi...@apache.org> on 2021/04/15 09:23:44 UTC

[GitHub] [kylin] zhangayqian opened a new pull request #1636: KYLIN-4966 Refresh the existing segment according to the new cuboid list in kylin4

zhangayqian opened a new pull request #1636:

URL: https://github.com/apache/kylin/pull/1636

## Proposed changes

Describe the big picture of your changes here to communicate to the maintainers why we should accept this pull request. If it fixes a bug or resolves a feature request, be sure to link to that issue.

## Types of changes

What types of changes does your code introduce to Kylin?

_Put an `x` in the boxes that apply_

- [ ] Bugfix (non-breaking change which fixes an issue)

- [ ] New feature (non-breaking change which adds functionality)

- [ ] Breaking change (fix or feature that would cause existing functionality to not work as expected)

- [ ] Documentation Update (if none of the other choices apply)

## Checklist

_Put an `x` in the boxes that apply. You can also fill these out after creating the PR. If you're unsure about any of them, don't hesitate to ask. We're here to help! This is simply a reminder of what we are going to look for before merging your code._

- [ ] I have created an issue on [Kylin's jira](https://issues.apache.org/jira/browse/KYLIN), and have described the bug/feature there in detail

- [ ] Commit messages in my PR start with the related jira ID, like "KYLIN-0000 Make Kylin project open-source"

- [ ] Compiling and unit tests pass locally with my changes

- [ ] I have added tests that prove my fix is effective or that my feature works

- [ ] If this change needs a documentation change, I will prepare another PR against the `document` branch

- [ ] Any dependent changes have been merged

## Further comments

If this is a relatively large or complex change, kick off the discussion at user@kylin or dev@kylin by explaining why you chose the solution you did and what alternatives you considered, etc...

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [kylin] hit-lacus commented on a change in pull request #1636: KYLIN-4966 Refresh the existing segment according to the new cuboid list in kylin4

Posted by GitBox <gi...@apache.org>.

hit-lacus commented on a change in pull request #1636:

URL: https://github.com/apache/kylin/pull/1636#discussion_r638437722

##########

File path: kylin-spark-project/kylin-spark-engine/src/main/java/org/apache/kylin/engine/spark/job/FilterRecommendCuboidJob.java

##########

@@ -0,0 +1,104 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.kylin.engine.spark.job;

+

+import org.apache.hadoop.conf.Configuration;

+import org.apache.hadoop.fs.FileSystem;

+import org.apache.hadoop.fs.FileStatus;

+import org.apache.hadoop.fs.FileUtil;

+import org.apache.hadoop.fs.Path;

+import org.apache.kylin.common.util.HadoopUtil;

+import org.apache.kylin.cube.CubeInstance;

+import org.apache.kylin.cube.CubeManager;

+import org.apache.kylin.cube.CubeSegment;

+import org.apache.kylin.cube.cuboid.CuboidModeEnum;

+import org.apache.kylin.engine.mr.steps.CubingExecutableUtil;

+import org.apache.kylin.engine.spark.application.SparkApplication;

+import org.apache.kylin.engine.spark.metadata.cube.PathManager;

+import org.apache.kylin.shaded.com.google.common.base.Preconditions;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.io.IOException;

+import java.util.Collections;

+import java.util.Set;

+

+public class FilterRecommendCuboidJob extends SparkApplication {

Review comment:

The class name could be more meaningful.

[GitHub] [kylin] hit-lacus commented on a change in pull request #1636: KYLIN-4966 Refresh the existing segment according to the new cuboid list in kylin4

Posted by GitBox <gi...@apache.org>.

hit-lacus commented on a change in pull request #1636:

URL: https://github.com/apache/kylin/pull/1636#discussion_r639436054

##########

File path: core-job/src/main/java/org/apache/kylin/job/constant/ExecutableConstants.java

##########

@@ -94,5 +94,6 @@ private ExecutableConstants() {

public static final String FLINK_SPECIFIC_CONFIG_NAME_MERGE_DICTIONARY = "mergedict";

//kylin on parquetv2

public static final String STEP_NAME_DETECT_RESOURCE = "Detect Resource";

+ public static final String STEP_NAME_BUILD_CUBOID_FROM_PARENT_CUBOID = "Build recommend cuboid from parent cuboid";

Review comment:

Should this say `exist` or `parent`?

[GitHub] [kylin] zhangayqian edited a comment on pull request #1636: KYLIN-4966 Refresh the existing segment according to the new cuboid list in kylin4

Posted by GitBox <gi...@apache.org>.

zhangayqian edited a comment on pull request #1636:

URL: https://github.com/apache/kylin/pull/1636#issuecomment-822135049

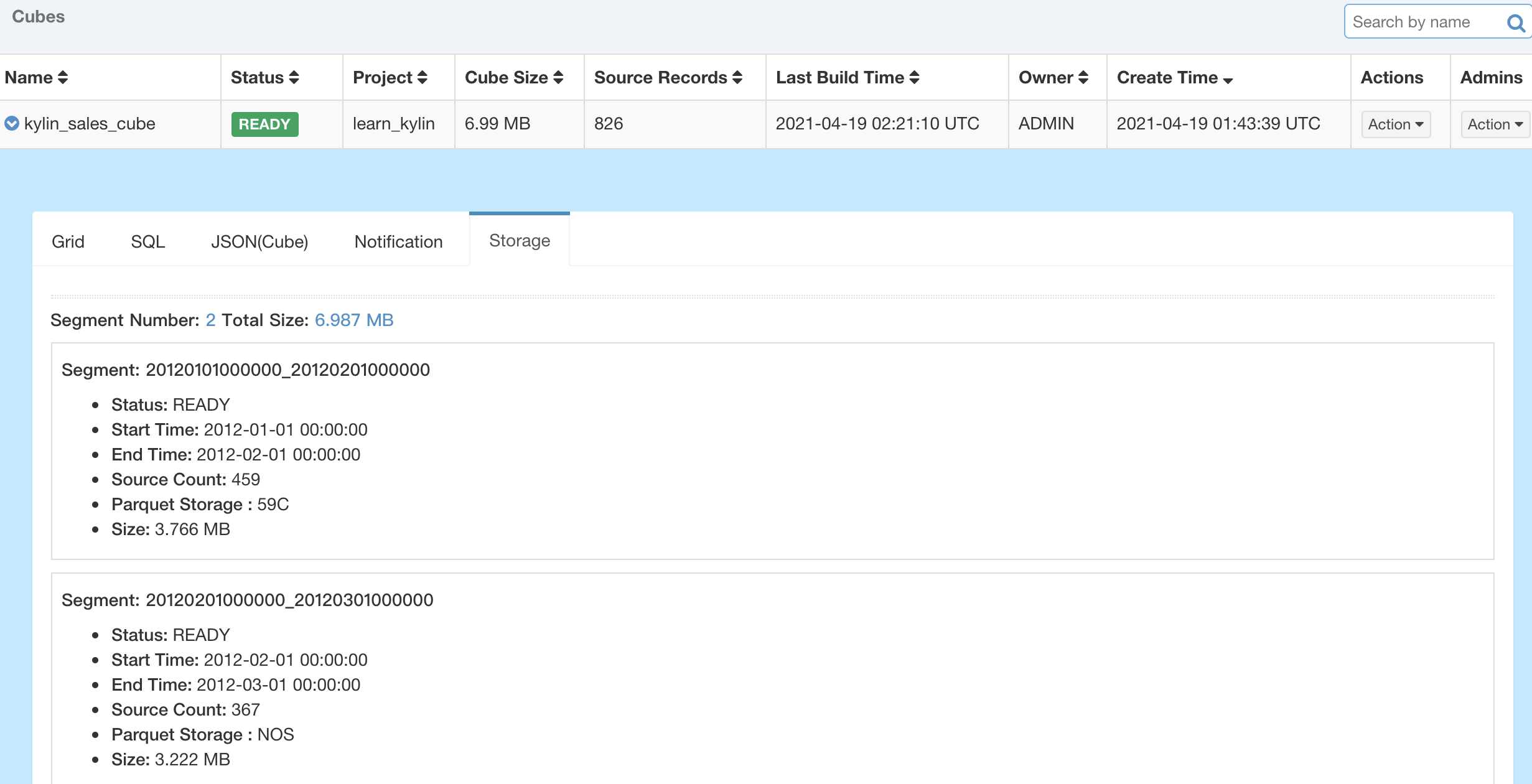

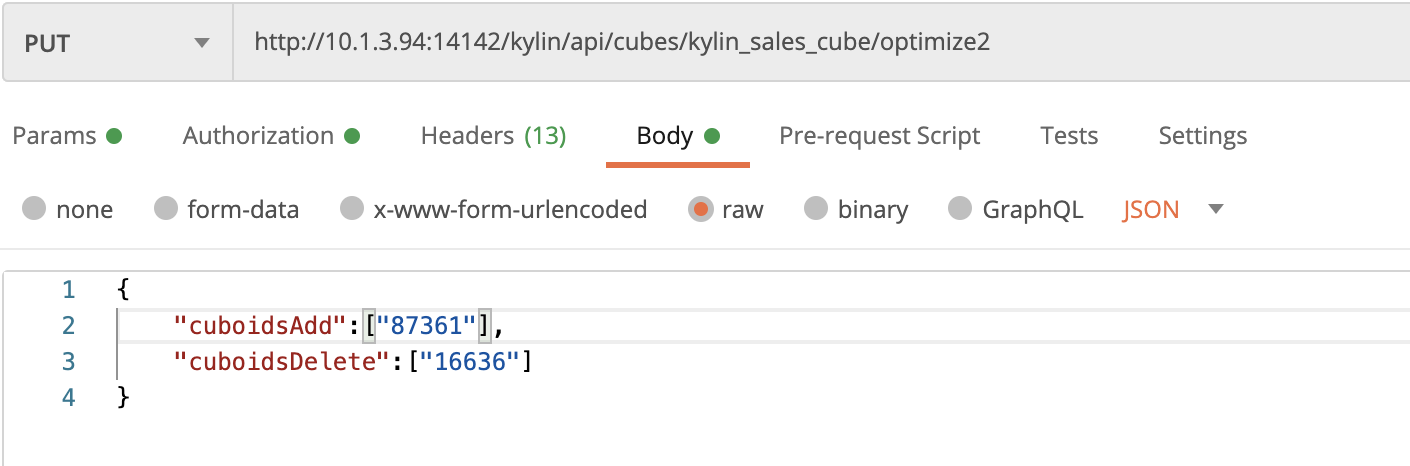

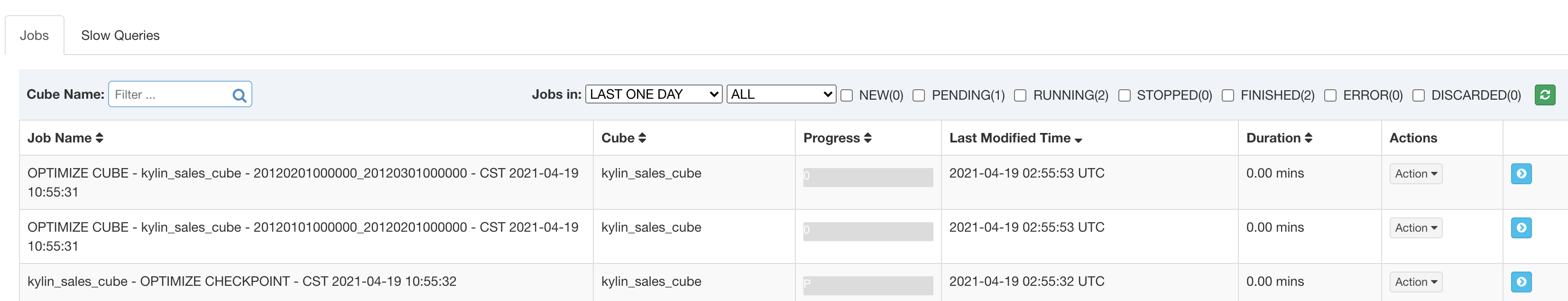

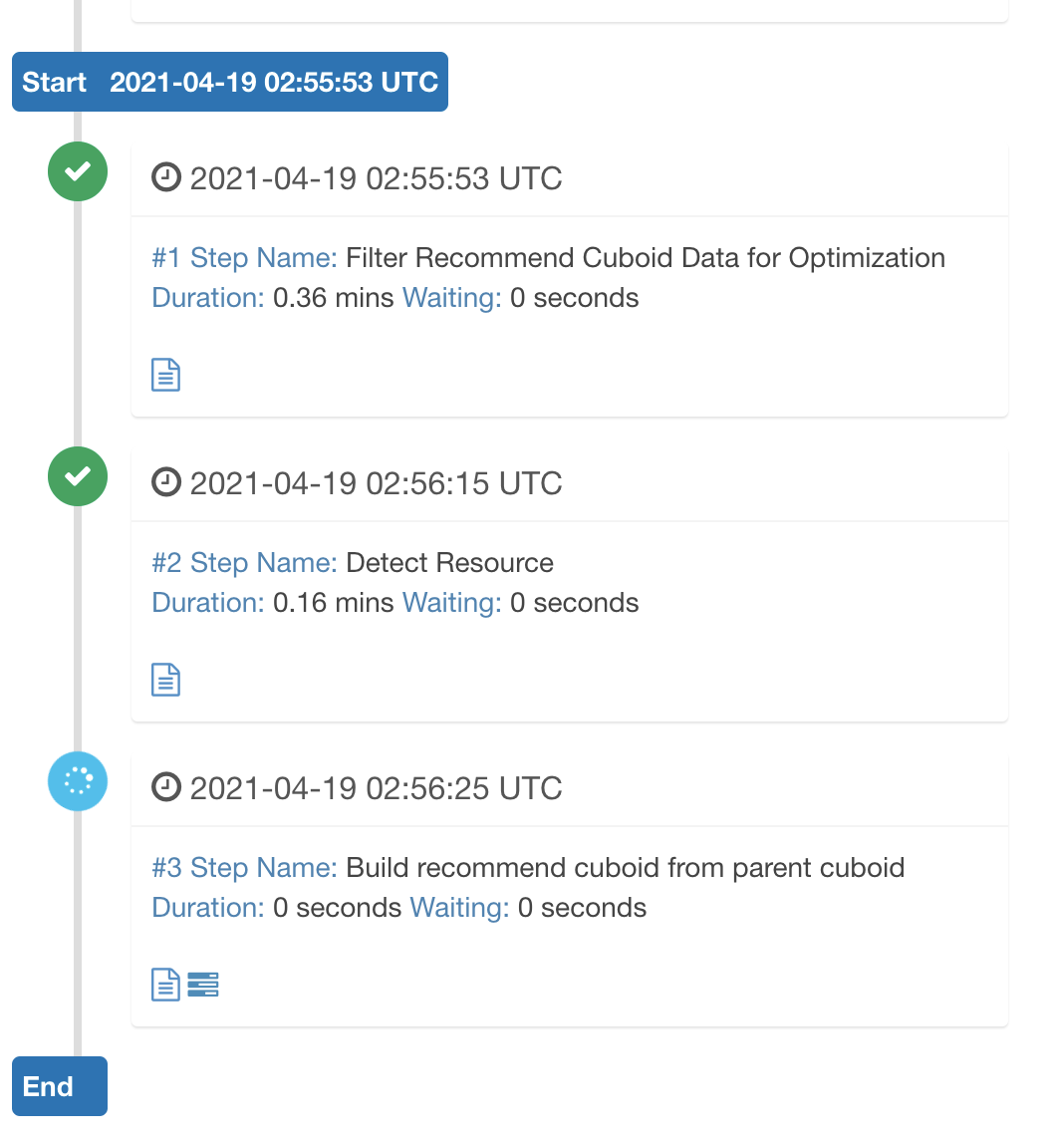

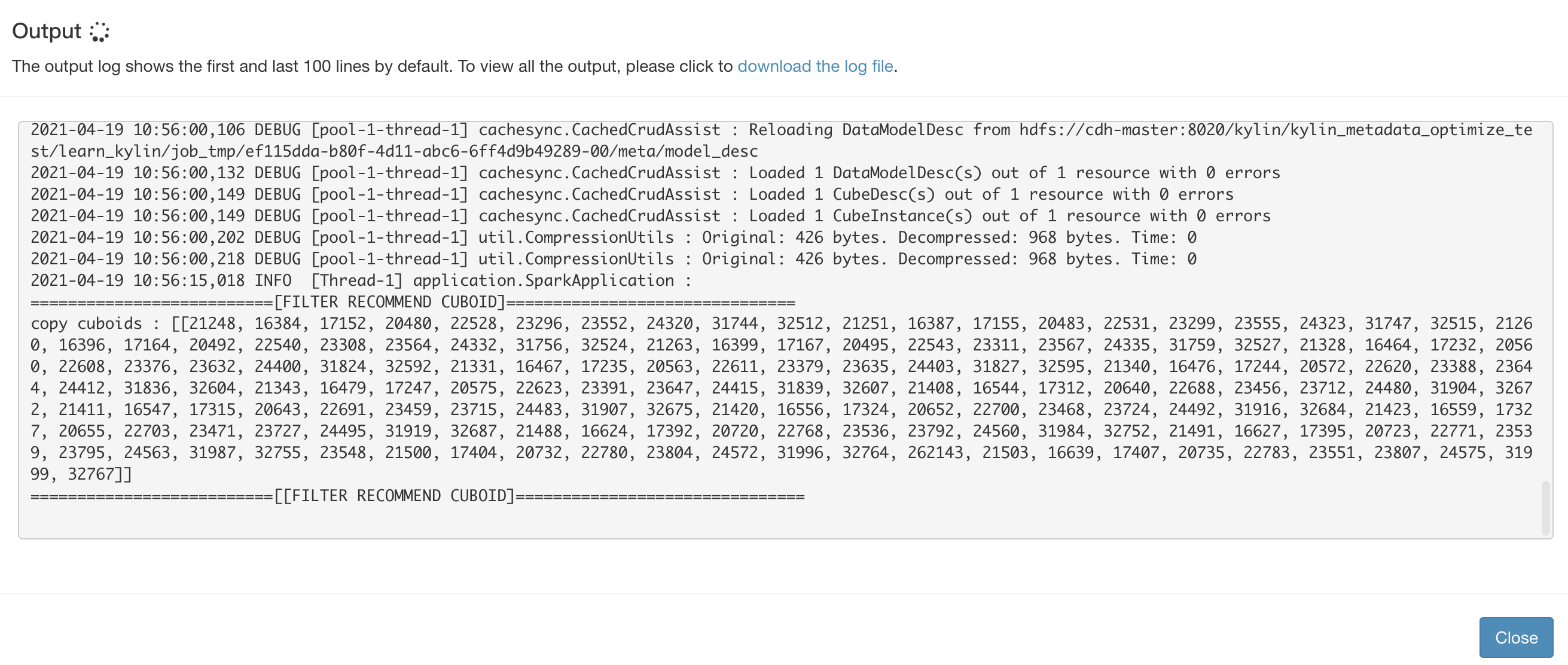

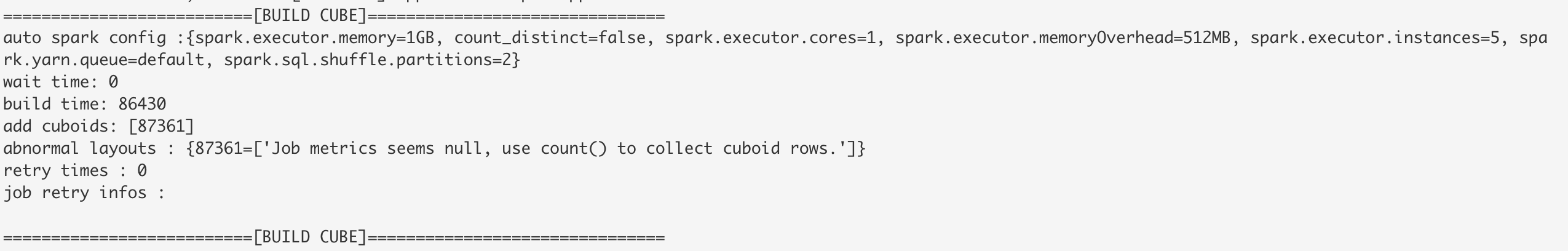

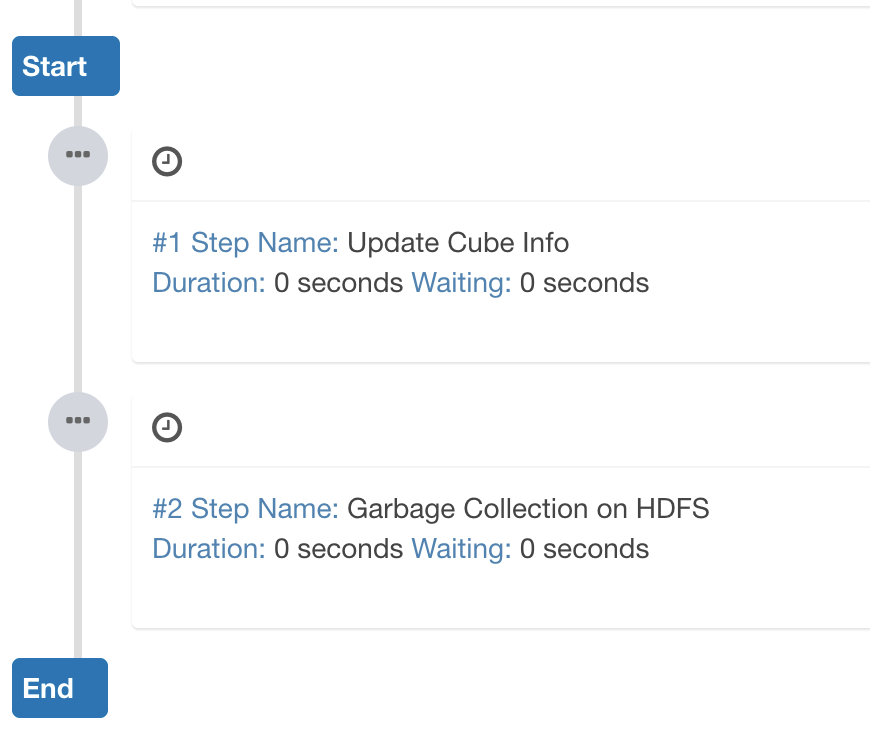

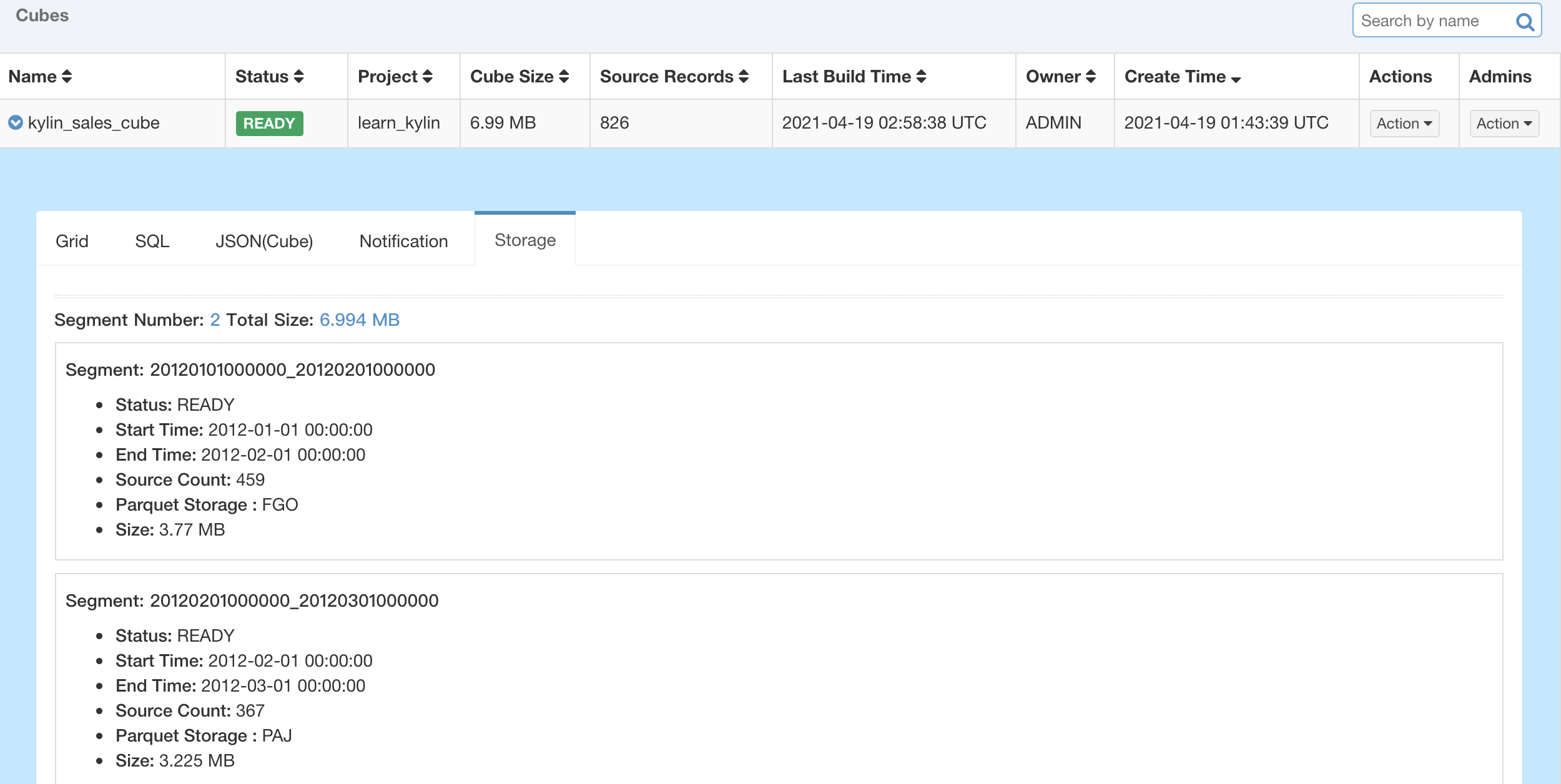

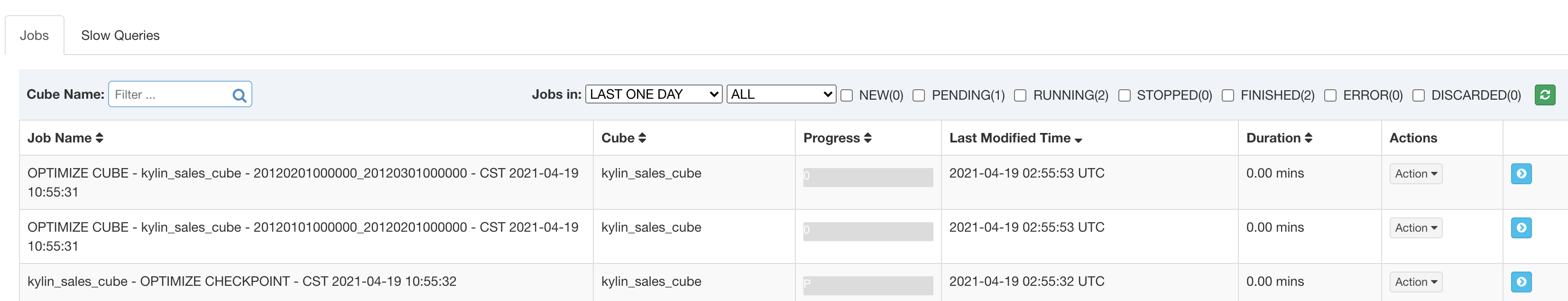

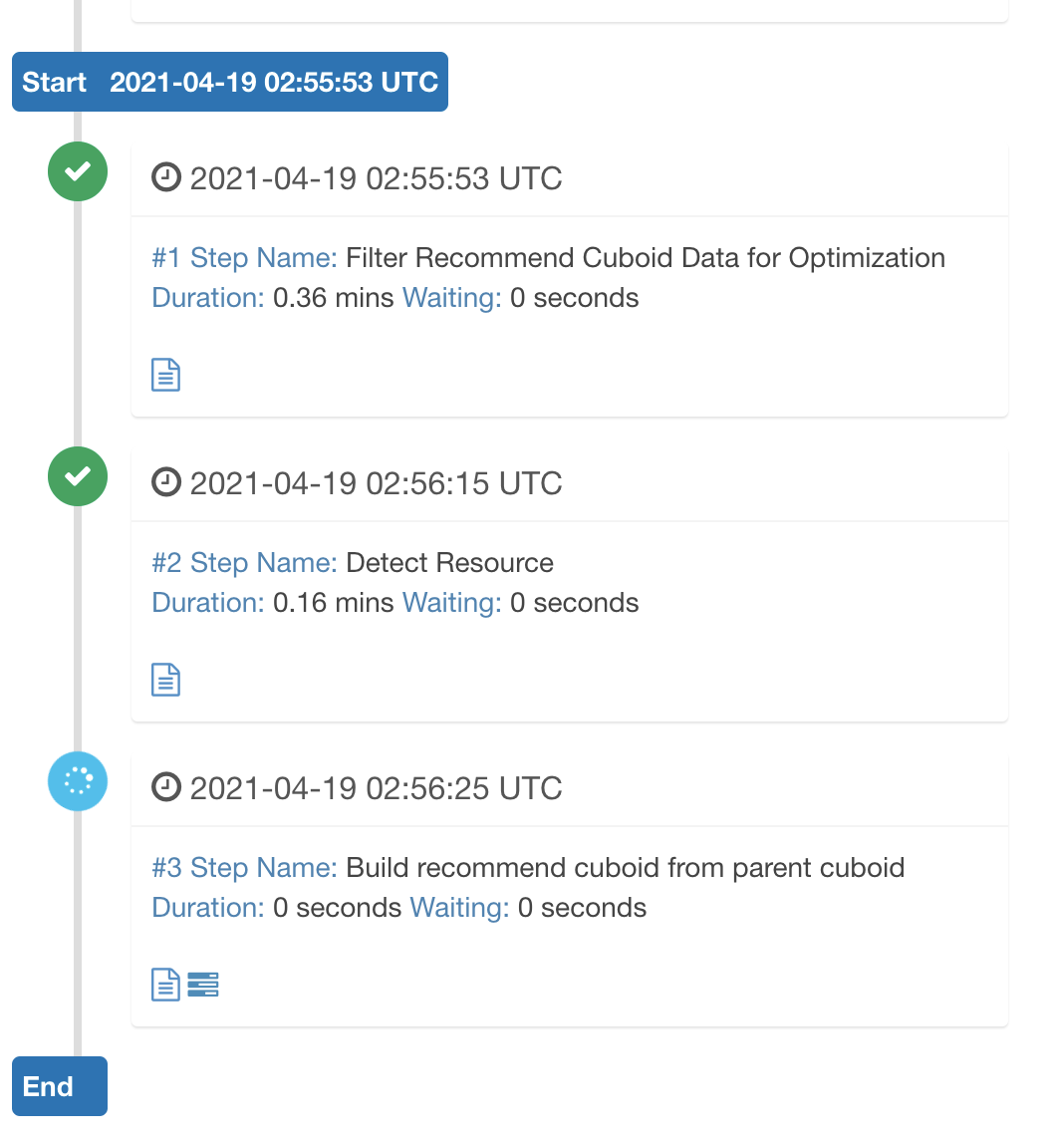

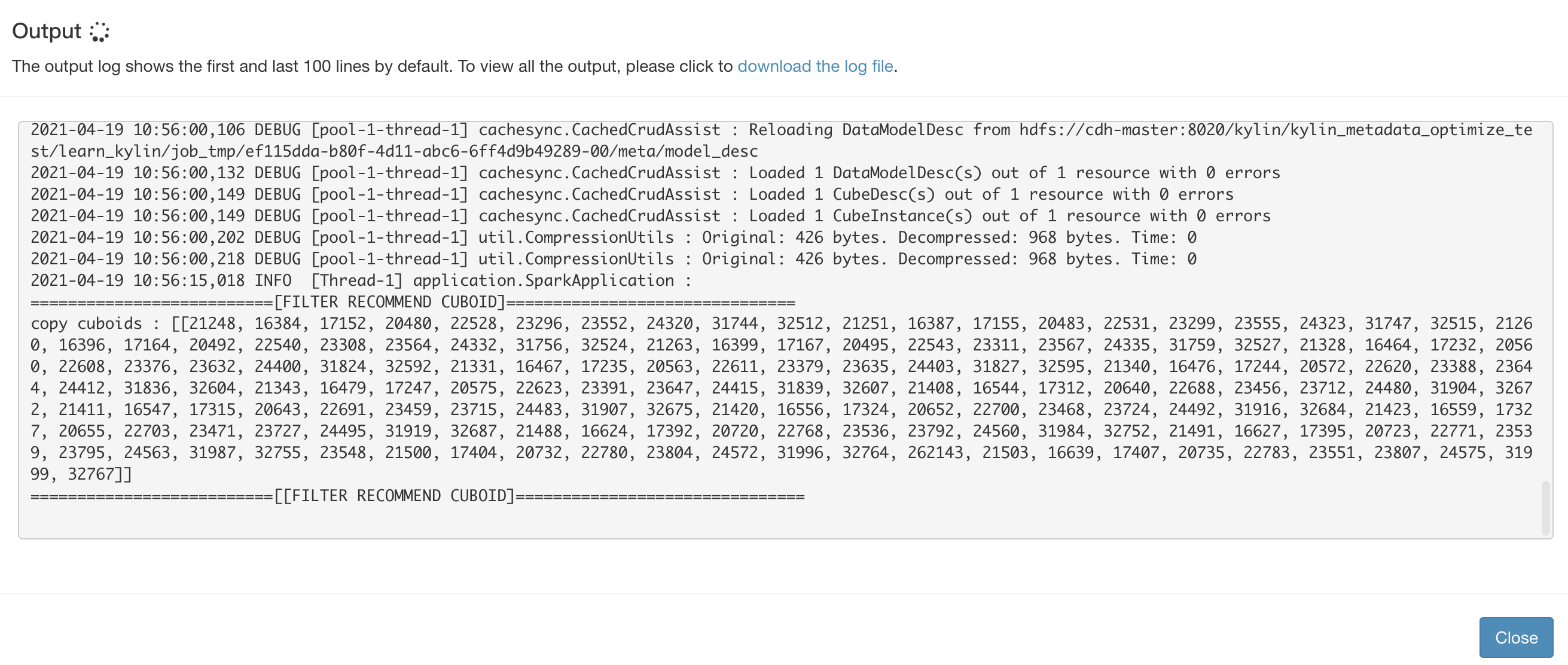

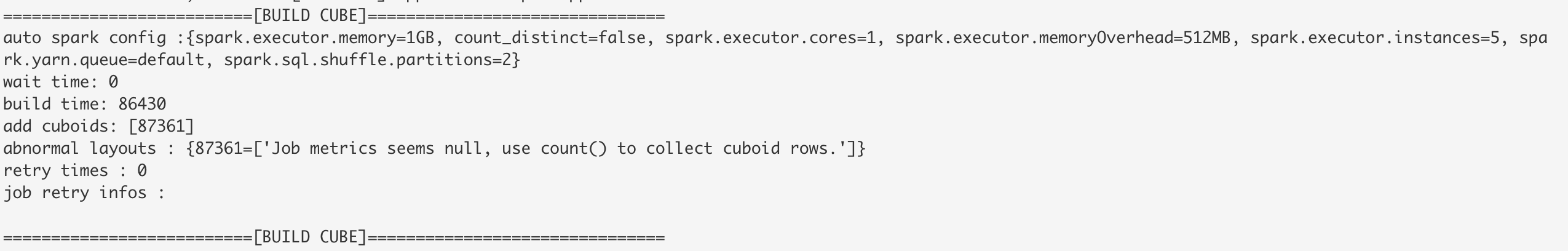

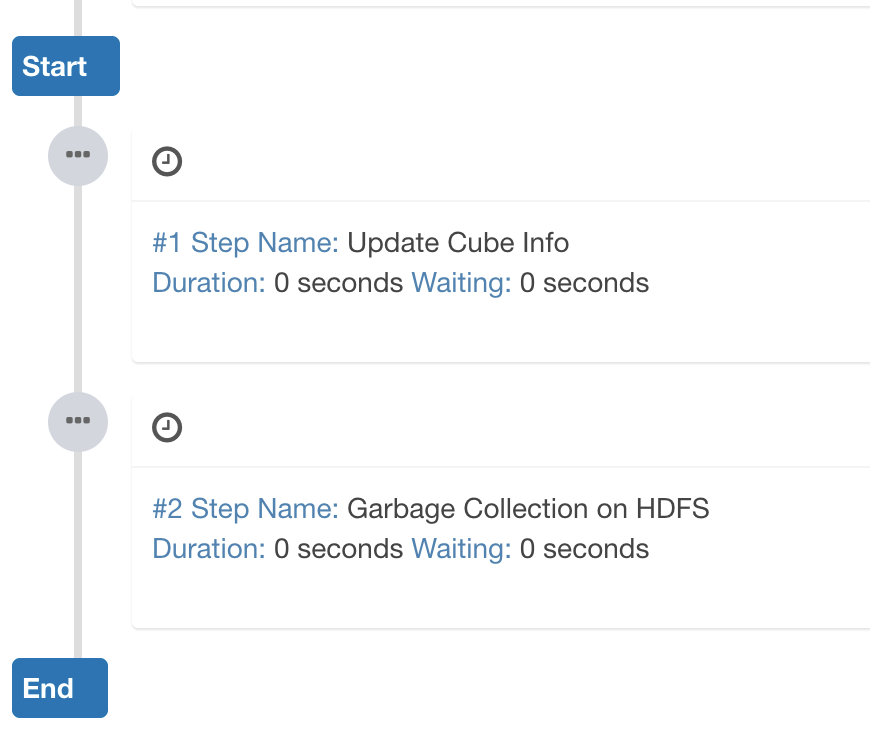

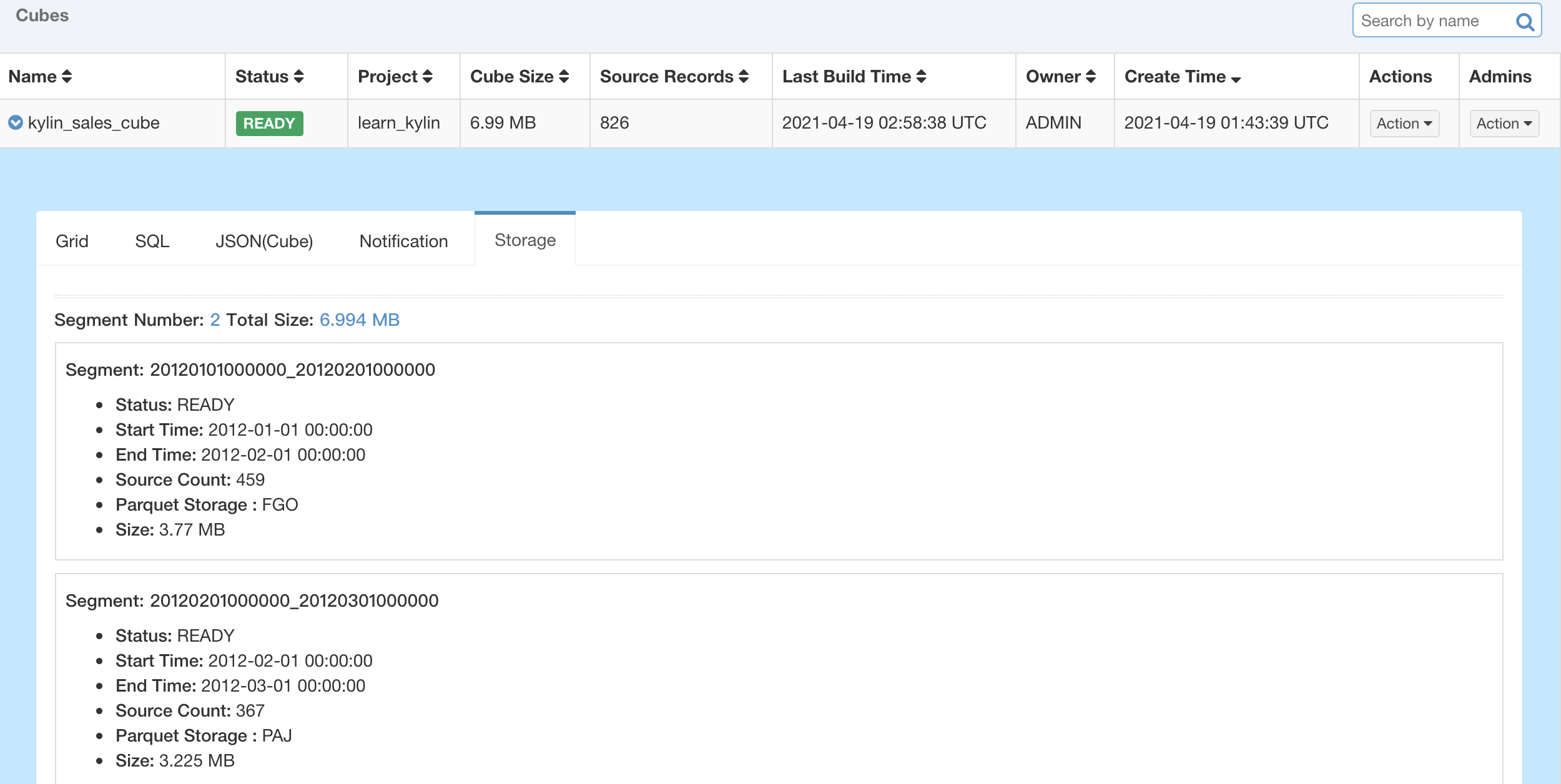

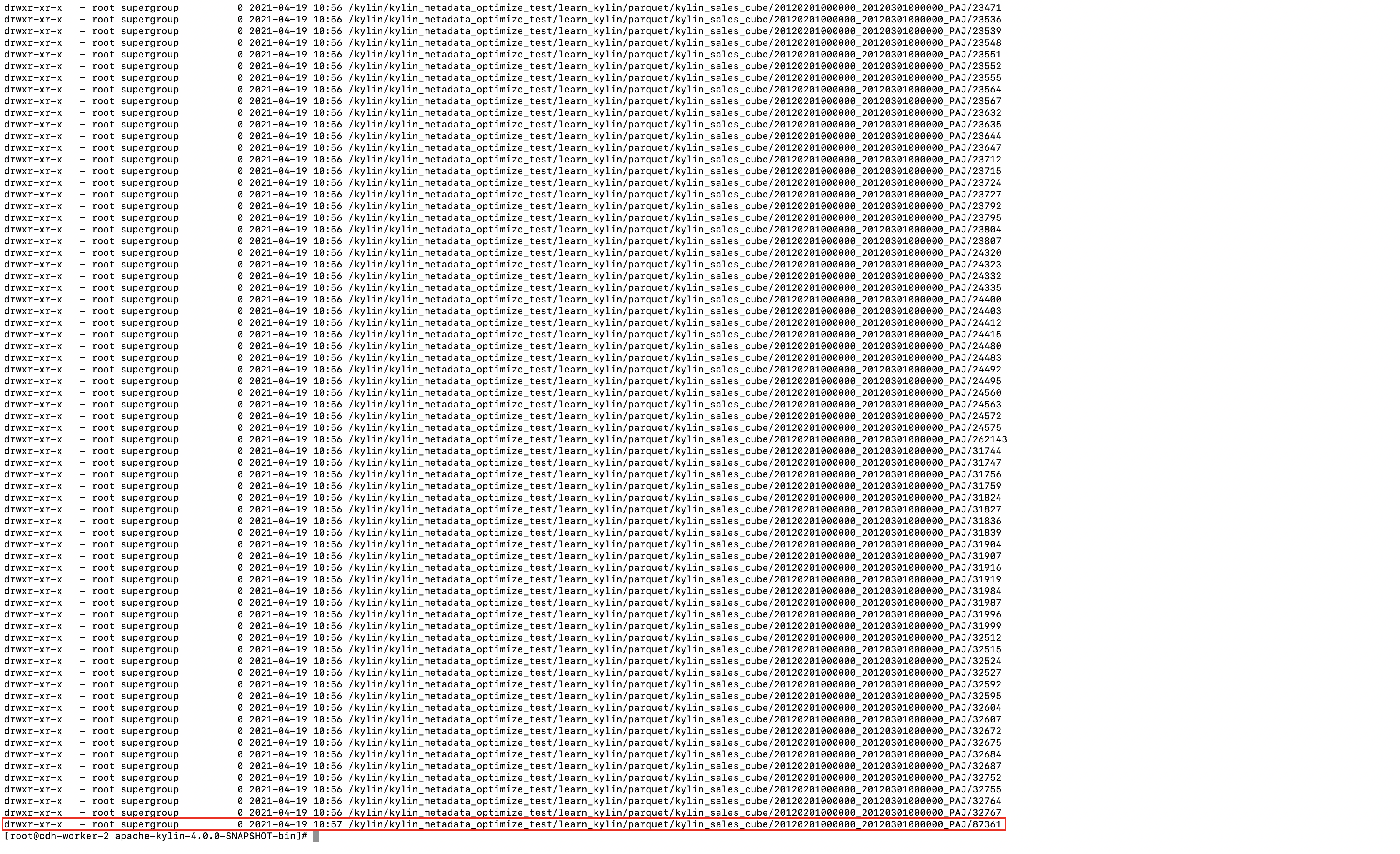

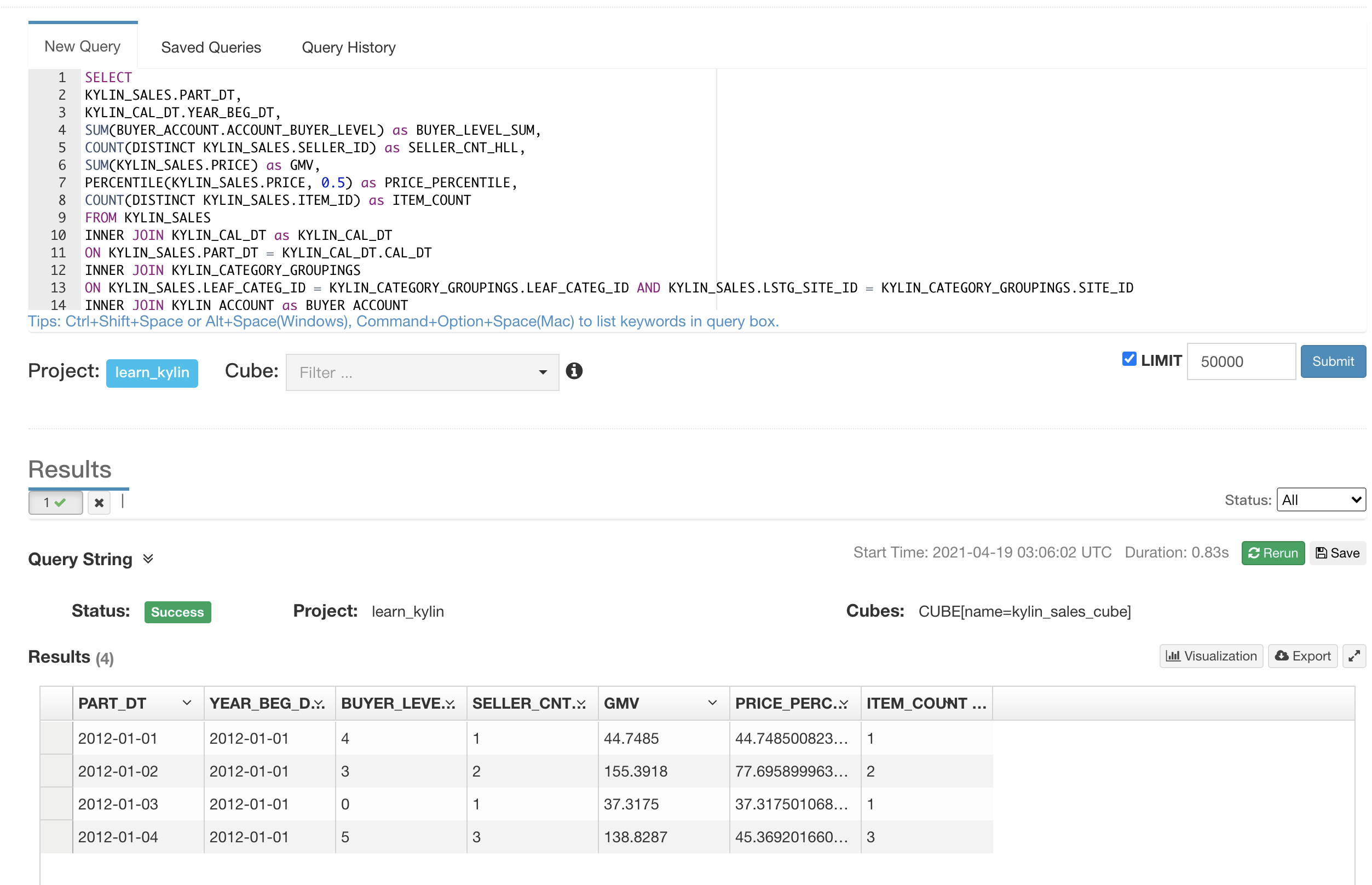

## Test Evidence

- Set `kylin.engine.segment-statistics-enabled=true`

- Build two segments

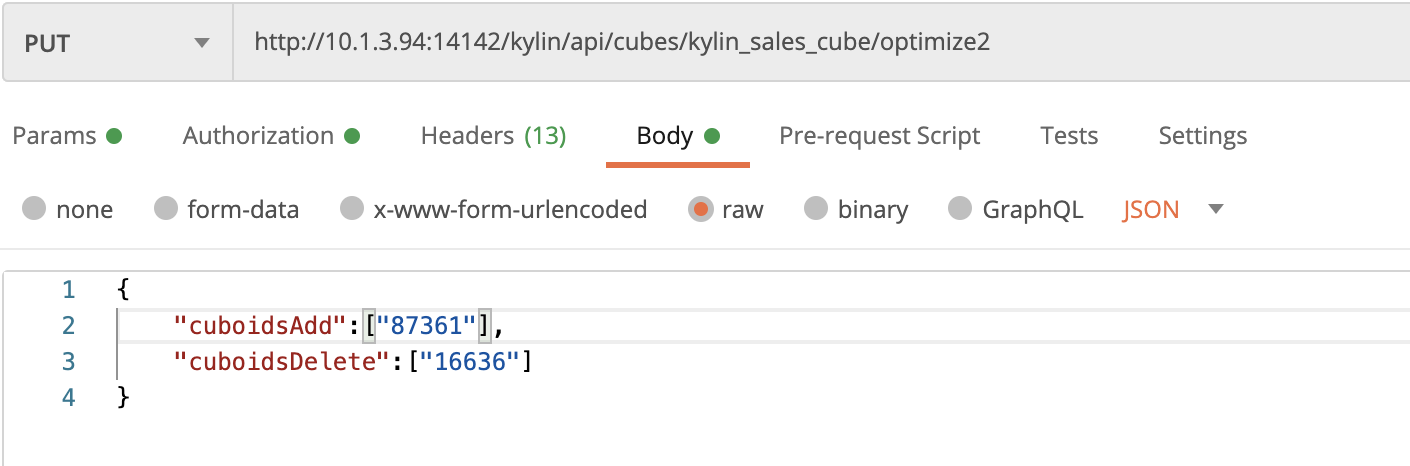

- Call the API to modify cuboid list and submit optimizing job

- Optimize Job

- Checkpoint Job

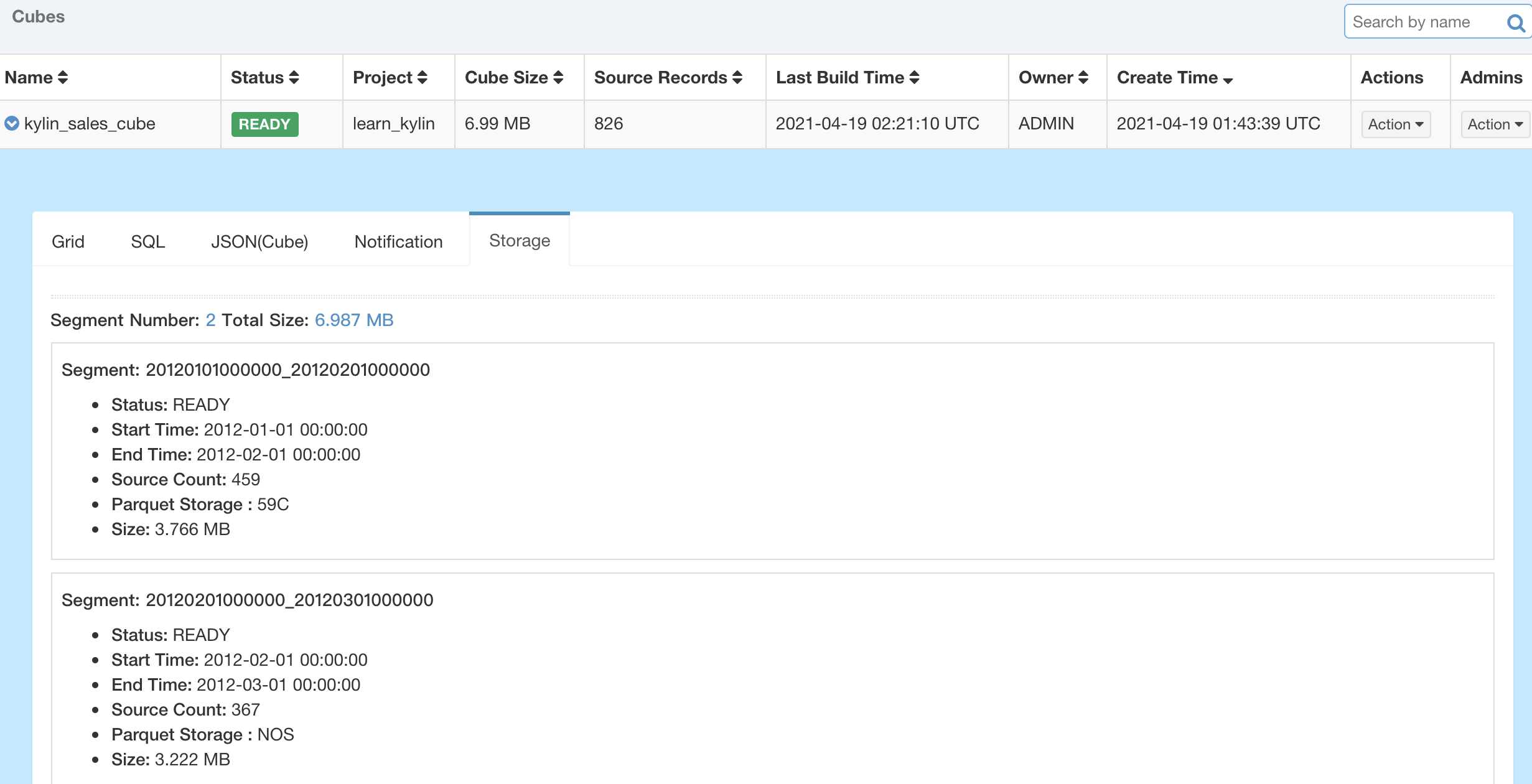

- After all jobs complete, the cube segment metadata has been updated

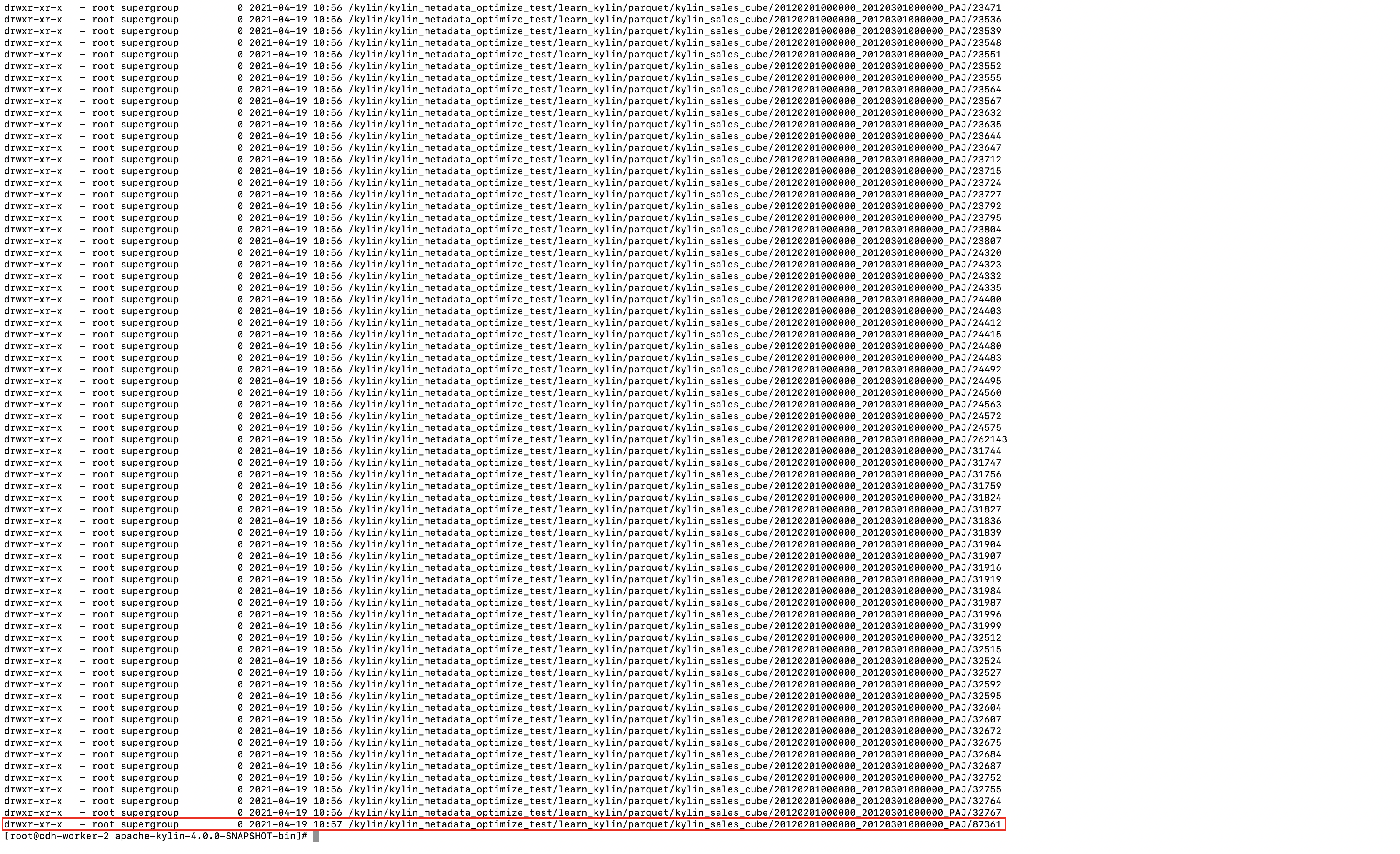

- Cuboid files in HDFS

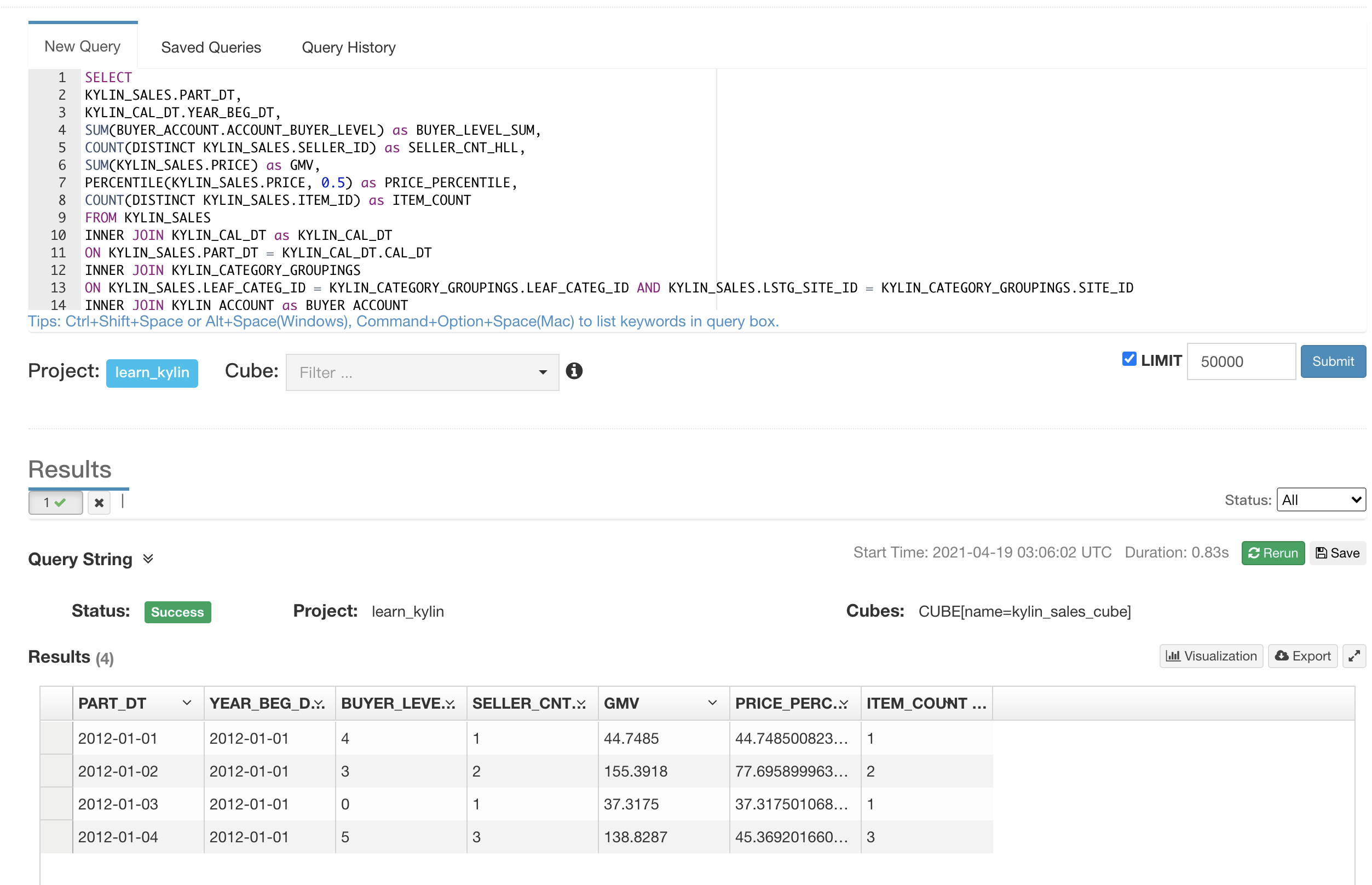

- Query

[GitHub] [kylin] hit-lacus commented on a change in pull request #1636: KYLIN-4966 Refresh the existing segment according to the new cuboid list in kylin4

Posted by GitBox <gi...@apache.org>.

hit-lacus commented on a change in pull request #1636:

URL: https://github.com/apache/kylin/pull/1636#discussion_r638436850

##########

File path: kylin-spark-project/kylin-spark-engine/src/main/java/org/apache/kylin/engine/spark/job/FilterRecommendCuboidJob.java

##########

@@ -0,0 +1,104 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.kylin.engine.spark.job;

+

+import org.apache.hadoop.conf.Configuration;

+import org.apache.hadoop.fs.FileSystem;

+import org.apache.hadoop.fs.FileStatus;

+import org.apache.hadoop.fs.FileUtil;

+import org.apache.hadoop.fs.Path;

+import org.apache.kylin.common.util.HadoopUtil;

+import org.apache.kylin.cube.CubeInstance;

+import org.apache.kylin.cube.CubeManager;

+import org.apache.kylin.cube.CubeSegment;

+import org.apache.kylin.cube.cuboid.CuboidModeEnum;

+import org.apache.kylin.engine.mr.steps.CubingExecutableUtil;

+import org.apache.kylin.engine.spark.application.SparkApplication;

+import org.apache.kylin.engine.spark.metadata.cube.PathManager;

+import org.apache.kylin.shaded.com.google.common.base.Preconditions;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.io.IOException;

+import java.util.Collections;

+import java.util.Set;

+

+public class FilterRecommendCuboidJob extends SparkApplication {

+ protected static final Logger logger = LoggerFactory.getLogger(FilterRecommendCuboidJob.class);

+

+ private long baseCuboid;

+ private Set<Long> recommendCuboids;

+

+ private FileSystem fs = HadoopUtil.getWorkingFileSystem();

+ private Configuration conf = HadoopUtil.getCurrentConfiguration();

+

+ public FilterRecommendCuboidJob() {

+

+ }

+

+ public String getCuboidRootPath(CubeSegment segment) {

+ return PathManager.getSegmentParquetStoragePath(segment.getCubeInstance(), segment.getName(),

+ segment.getStorageLocationIdentifier());

+ }

+

+ @Override

+ protected void doExecute() throws Exception {

+ infos.clearReusedCuboids();

+ final CubeManager mgr = CubeManager.getInstance(config);

+ final CubeInstance cube = mgr.getCube(CubingExecutableUtil.getCubeName(this.getParams())).latestCopyForWrite();

+ final CubeSegment optimizeSegment = cube.getSegmentById(CubingExecutableUtil.getSegmentId(this.getParams()));

+

+ CubeSegment oldSegment = optimizeSegment.getCubeInstance().getOriginalSegmentToOptimize(optimizeSegment);

+ Preconditions.checkNotNull(oldSegment,

+ "cannot find the original segment to be optimized by " + optimizeSegment);

+

+ infos.recordReusedCuboids(Collections.singleton(cube.getCuboidsByMode(CuboidModeEnum.RECOMMEND_EXISTING)));

+

+ baseCuboid = cube.getCuboidScheduler().getBaseCuboidId();

+ recommendCuboids = cube.getCuboidsRecommend();

+

+ Preconditions.checkNotNull(recommendCuboids, "The recommend cuboid map could not be null");

+

+ Path originalCuboidPath = new Path(getCuboidRootPath(oldSegment));

+

+ try {

+ for (FileStatus cuboid : fs.listStatus(originalCuboidPath)) {

+ String cuboidId = cuboid.getPath().getName();

+ if (cuboidId.equals(String.valueOf(baseCuboid)) || recommendCuboids.contains(Long.valueOf(cuboidId))) {

+ Path optimizeCuboidPath = new Path(getCuboidRootPath(optimizeSegment) + "/" + cuboidId);

+ FileUtil.copy(fs, cuboid.getPath(), fs, optimizeCuboidPath, false, true, conf);

+ logger.info("Copy cuboid {} storage from original segment to optimized segment", cuboidId);

+ }

+ }

+ } catch (IOException e) {

+ logger.error("Failed to filter cuboid", e);

Review comment:

Could you please add a cleanup step in the catch clause? Maybe remove the files under `optimizeCuboidPath`? Or add a cleanup task to `StorageCleanupJob`?
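One way to read the first suggestion is sketched below. It is only an illustration under stated assumptions: it uses `java.nio.file` as a stand-in for the Hadoop `FileSystem` and `FileUtil` calls in the diff so that it runs without a cluster, and the class and method names (`CopyWithCleanupSketch`, `copyAllOrCleanUp`) are hypothetical and not part of the pull request. The idea is to remember every target directory the loop has started writing and, if any copy fails, delete those directories before rethrowing.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical sketch: local-filesystem stand-in for the HDFS copy loop in the diff.
public class CopyWithCleanupSketch {

    /**
     * Copies each source directory to targetRoot/<directory name>.
     * If any copy fails, every target directory started so far is removed
     * and the original exception is rethrown.
     */
    public static void copyAllOrCleanUp(List<Path> sourceDirs, Path targetRoot) throws IOException {
        List<Path> created = new ArrayList<>();
        try {
            for (Path source : sourceDirs) {
                Path dest = targetRoot.resolve(source.getFileName());
                created.add(dest); // record before copying so partial copies are cleaned too
                copyTree(source, dest);
            }
        } catch (IOException | UncheckedIOException e) {
            for (Path dir : created) {
                try {
                    deleteTree(dir);
                } catch (IOException cleanupError) {
                    e.addSuppressed(cleanupError); // keep the original failure as the primary error
                }
            }
            throw e;
        }
    }

    // Recursively copies a directory tree, overwriting existing files.
    static void copyTree(Path source, Path target) throws IOException {
        List<Path> entries;
        try (Stream<Path> walk = Files.walk(source)) {
            entries = walk.collect(Collectors.toList()); // parents are listed before children
        }
        for (Path entry : entries) {
            Path dest = target.resolve(source.relativize(entry));
            if (Files.isDirectory(entry)) {
                Files.createDirectories(dest);
            } else {
                Files.copy(entry, dest, StandardCopyOption.REPLACE_EXISTING);
            }
        }
    }

    // Deletes a directory tree if it exists, children first.
    static void deleteTree(Path root) throws IOException {
        if (!Files.exists(root)) {
            return;
        }
        List<Path> entries;
        try (Stream<Path> walk = Files.walk(root)) {
            entries = walk.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
        }
        for (Path entry : entries) {
            Files.delete(entry);
        }
    }
}
```

The sketch deletes only the directories it created itself rather than the whole target root, on the assumption that other content may already live there. Deferring the cleanup to a separate storage cleanup job, the second suggestion in the comment, would avoid doing delete work inside the failing step.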

[GitHub] [kylin] zhangayqian commented on pull request #1636: KYLIN-4966 Refresh the existing segment according to the new cuboid list in kylin4

Posted by GitBox <gi...@apache.org>.

zhangayqian commented on pull request #1636:

URL: https://github.com/apache/kylin/pull/1636#issuecomment-822135049

## Test Evidence

- Set `kylin.engine.segment-statistics-enabled=true`

- Build two segments

- Call the API to modify cuboid list and submit optimizing job

- Optimize Job

- Checkpoint Job

- After all jobs complete, the cube segment metadata has been updated

- Cuboid files in HDFS

- Query

[GitHub] [kylin] hit-lacus merged pull request #1636: KYLIN-4966 Refresh the existing segment according to the new cuboid list in kylin4

Posted by GitBox <gi...@apache.org>.

hit-lacus merged pull request #1636:

URL: https://github.com/apache/kylin/pull/1636

[GitHub] [kylin] hit-lacus commented on a change in pull request #1636: KYLIN-4966 Refresh the existing segment according to the new cuboid list in kylin4

Posted by GitBox <gi...@apache.org>.

hit-lacus commented on a change in pull request #1636:

URL: https://github.com/apache/kylin/pull/1636#discussion_r639458670

##########

File path: kylin-spark-project/kylin-spark-engine/src/main/java/org/apache/kylin/engine/spark/job/NSparkBatchOptimizeJobCheckpointBuilder.java

##########

@@ -0,0 +1,88 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.kylin.engine.spark.job;

+

+import com.google.common.base.Preconditions;

+import org.apache.kylin.common.KylinConfig;

+import org.apache.kylin.cube.CubeInstance;

+import org.apache.kylin.engine.mr.steps.CubingExecutableUtil;

+import org.apache.kylin.job.constant.ExecutableConstants;

+import org.apache.kylin.job.execution.CheckpointExecutable;

+import org.apache.kylin.metadata.project.ProjectInstance;

+import org.apache.kylin.metadata.project.ProjectManager;

+

+import java.text.SimpleDateFormat;

+import java.util.Date;

+import java.util.List;

+import java.util.Locale;

+

+public class NSparkBatchOptimizeJobCheckpointBuilder {

+ protected SimpleDateFormat format = new SimpleDateFormat("z yyyy-MM-dd HH:mm:ss", Locale.ROOT);

+

+ final protected CubeInstance cube;

+ final protected String submitter;

+

+ public NSparkBatchOptimizeJobCheckpointBuilder(CubeInstance cube, String submitter) {

+ this.cube = cube;

+ this.submitter = submitter;

+

+ Preconditions.checkNotNull(cube.getFirstSegment(), "Cube " + cube + " is empty!!!");

+ }

+

+ public CheckpointExecutable build() {

+ KylinConfig kylinConfig = cube.getConfig();

+ List<ProjectInstance> projList = ProjectManager.getInstance(kylinConfig).findProjects(cube.getType(),

+ cube.getName());

+ if (projList == null || projList.size() == 0) {

+ throw new RuntimeException("Cannot find the project containing the cube " + cube.getName() + "!!!");

+ } else if (projList.size() >= 2) {

+ throw new RuntimeException("Find more than one project containing the cube " + cube.getName()

+ + ". It does't meet the uniqueness requirement!!! ");

+ }

+

+ CheckpointExecutable checkpointJob = new CheckpointExecutable();

+ checkpointJob.setSubmitter(submitter);

+ CubingExecutableUtil.setCubeName(cube.getName(), checkpointJob.getParams());

+ checkpointJob.setName(

+ cube.getName() + " - OPTIMIZE CHECKPOINT - " + format.format(new Date(System.currentTimeMillis())));

+ checkpointJob.setProjectName(projList.get(0).getName());

+

+ // Phase 1: Update cube information

+ checkpointJob.addTask(createUpdateCubeInfoAfterCheckpointStep());

+

+ // Phase 2: Cleanup hdfs storage

+ checkpointJob.addTask(createCleanupHdfsStorageStep());

+

+ return checkpointJob;

+ }

+

+ private NSparkUpdateCubeInfoAfterOptimizeStep createUpdateCubeInfoAfterCheckpointStep() {

Review comment:

`AfterCheckpointStep` or `AfterOptimizeJob`?

[GitHub] [kylin] hit-lacus commented on a change in pull request #1636: KYLIN-4966 Refresh the existing segment according to the new cuboid list in kylin4

Posted by GitBox <gi...@apache.org>.

hit-lacus commented on a change in pull request #1636:

URL: https://github.com/apache/kylin/pull/1636#discussion_r639457120

##########

File path: kylin-spark-project/kylin-spark-engine/src/main/java/org/apache/kylin/engine/spark/job/NSparkBatchOptimizeJobCheckpointBuilder.java

##########

@@ -0,0 +1,88 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.kylin.engine.spark.job;

+

+import com.google.common.base.Preconditions;

+import org.apache.kylin.common.KylinConfig;

+import org.apache.kylin.cube.CubeInstance;

+import org.apache.kylin.engine.mr.steps.CubingExecutableUtil;

+import org.apache.kylin.job.constant.ExecutableConstants;

+import org.apache.kylin.job.execution.CheckpointExecutable;

+import org.apache.kylin.metadata.project.ProjectInstance;

+import org.apache.kylin.metadata.project.ProjectManager;

+

+import java.text.SimpleDateFormat;

+import java.util.Date;

+import java.util.List;

+import java.util.Locale;

+

+public class NSparkBatchOptimizeJobCheckpointBuilder {

+ protected SimpleDateFormat format = new SimpleDateFormat("z yyyy-MM-dd HH:mm:ss", Locale.ROOT);

+

+ final protected CubeInstance cube;

+ final protected String submitter;

+

+ public NSparkBatchOptimizeJobCheckpointBuilder(CubeInstance cube, String submitter) {

+ this.cube = cube;

+ this.submitter = submitter;

+

+ Preconditions.checkNotNull(cube.getFirstSegment(), "Cube " + cube + " is empty!!!");

+ }

+

+ public CheckpointExecutable build() {

+ KylinConfig kylinConfig = cube.getConfig();

+ List<ProjectInstance> projList = ProjectManager.getInstance(kylinConfig).findProjects(cube.getType(),

+ cube.getName());

+ if (projList == null || projList.size() == 0) {

+ throw new RuntimeException("Cannot find the project containing the cube " + cube.getName() + "!!!");

+ } else if (projList.size() >= 2) {

Review comment:

In which case could `projList.size() >= 2` happen?

[GitHub] [kylin] hit-lacus commented on a change in pull request #1636: KYLIN-4966 Refresh the existing segment according to the new cuboid list in kylin4

Posted by GitBox <gi...@apache.org>.

hit-lacus commented on a change in pull request #1636:

URL: https://github.com/apache/kylin/pull/1636#discussion_r639466830

##########

File path: kylin-spark-project/kylin-spark-engine/src/main/java/org/apache/kylin/engine/spark/job/NSparkOptimizingStep.java

##########

@@ -0,0 +1,94 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.kylin.engine.spark.job;

+

+import org.apache.kylin.common.KylinConfig;

+import org.apache.kylin.cube.CubeInstance;

+import org.apache.kylin.cube.CubeManager;

+import org.apache.kylin.engine.mr.CubingJob;

+import org.apache.kylin.engine.spark.metadata.cube.PathManager;

+import org.apache.kylin.engine.spark.utils.MetaDumpUtil;

+import org.apache.kylin.engine.spark.utils.UpdateMetadataUtil;

+import org.apache.kylin.job.constant.ExecutableConstants;

+import org.apache.kylin.job.exception.ExecuteException;

+import org.apache.kylin.job.execution.ExecuteResult;

+import org.apache.kylin.metadata.MetadataConstants;

+import org.apache.kylin.shaded.com.google.common.collect.Maps;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.io.IOException;

+import java.util.Arrays;

+import java.util.Map;

+import java.util.Set;

+

+public class NSparkOptimizingStep extends NSparkExecutable {

+ private static final Logger logger = LoggerFactory.getLogger(NSparkOptimizingStep.class);

+

+ // called by reflection

+ public NSparkOptimizingStep() {

+ }

+

+ public NSparkOptimizingStep(String sparkSubmitClassName) {

+ this.setSparkSubmitClassName(sparkSubmitClassName);

+ this.setName(ExecutableConstants.STEP_NAME_BUILD_CUBOID_FROM_PARENT_CUBOID);

+ }

+

+ @Override

+ protected Set<String> getMetadataDumpList(KylinConfig config) {

+ String cubeId = getParam(MetadataConstants.P_CUBE_ID);

+ CubeInstance cubeInstance = CubeManager.getInstance(config).getCubeByUuid(cubeId);

+ return MetaDumpUtil.collectCubeMetadata(cubeInstance);

+ }

+

+ public static class Mockup {

+ public static void main(String[] args) {

+ logger.info(NSparkCubingStep.Mockup.class + ".main() invoked, args: " + Arrays.toString(args));

+ }

+ }

+

+ @Override

+ public boolean needMergeMetadata() {

Review comment:

Why override this method?

[GitHub] [kylin] hit-lacus commented on a change in pull request #1636: KYLIN-4966 Refresh the existing segment according to the new cuboid list in kylin4

Posted by GitBox <gi...@apache.org>.

hit-lacus commented on a change in pull request #1636:

URL: https://github.com/apache/kylin/pull/1636#discussion_r639446171

##########

File path: kylin-spark-project/kylin-spark-engine/src/main/java/org/apache/kylin/engine/spark/job/FilterRecommendCuboidJob.java

##########

@@ -0,0 +1,104 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.kylin.engine.spark.job;

+

+import org.apache.hadoop.conf.Configuration;

+import org.apache.hadoop.fs.FileSystem;

+import org.apache.hadoop.fs.FileStatus;

+import org.apache.hadoop.fs.FileUtil;

+import org.apache.hadoop.fs.Path;

+import org.apache.kylin.common.util.HadoopUtil;

+import org.apache.kylin.cube.CubeInstance;

+import org.apache.kylin.cube.CubeManager;

+import org.apache.kylin.cube.CubeSegment;

+import org.apache.kylin.cube.cuboid.CuboidModeEnum;

+import org.apache.kylin.engine.mr.steps.CubingExecutableUtil;

+import org.apache.kylin.engine.spark.application.SparkApplication;

+import org.apache.kylin.engine.spark.metadata.cube.PathManager;

+import org.apache.kylin.shaded.com.google.common.base.Preconditions;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.io.IOException;

+import java.util.Collections;

+import java.util.Set;

+

+public class FilterRecommendCuboidJob extends SparkApplication {

+ protected static final Logger logger = LoggerFactory.getLogger(FilterRecommendCuboidJob.class);

+

+ private long baseCuboid;

+ private Set<Long> recommendCuboids;

+

+ private FileSystem fs = HadoopUtil.getWorkingFileSystem();

+ private Configuration conf = HadoopUtil.getCurrentConfiguration();

+

+ public FilterRecommendCuboidJob() {

+

+ }

+

+ public String getCuboidRootPath(CubeSegment segment) {

+ return PathManager.getSegmentParquetStoragePath(segment.getCubeInstance(), segment.getName(),

+ segment.getStorageLocationIdentifier());

+ }

+

+ @Override

+ protected void doExecute() throws Exception {

+ infos.clearReusedCuboids();

+ final CubeManager mgr = CubeManager.getInstance(config);

+ final CubeInstance cube = mgr.getCube(CubingExecutableUtil.getCubeName(this.getParams())).latestCopyForWrite();

+ final CubeSegment optimizeSegment = cube.getSegmentById(CubingExecutableUtil.getSegmentId(this.getParams()));

+

+ CubeSegment oldSegment = optimizeSegment.getCubeInstance().getOriginalSegmentToOptimize(optimizeSegment);

+ Preconditions.checkNotNull(oldSegment,

+ "cannot find the original segment to be optimized by " + optimizeSegment);

+

+ infos.recordReusedCuboids(Collections.singleton(cube.getCuboidsByMode(CuboidModeEnum.RECOMMEND_EXISTING)));

+

+ baseCuboid = cube.getCuboidScheduler().getBaseCuboidId();

+ recommendCuboids = cube.getCuboidsRecommend();

+

+ Preconditions.checkNotNull(recommendCuboids, "The recommend cuboid map could not be null");

Review comment:

`map` or `set`?

[GitHub] [kylin] hit-lacus commented on a change in pull request #1636: KYLIN-4966 Refresh the existing segment according to the new cuboid list in kylin4

Posted by GitBox <gi...@apache.org>.

hit-lacus commented on a change in pull request #1636:

URL: https://github.com/apache/kylin/pull/1636#discussion_r638441206

##########

File path: kylin-spark-project/kylin-spark-engine/src/main/java/org/apache/kylin/engine/spark/job/FilterRecommendCuboidJob.java

##########

@@ -0,0 +1,104 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.kylin.engine.spark.job;

+

+import org.apache.hadoop.conf.Configuration;

+import org.apache.hadoop.fs.FileSystem;

+import org.apache.hadoop.fs.FileStatus;

+import org.apache.hadoop.fs.FileUtil;

+import org.apache.hadoop.fs.Path;

+import org.apache.kylin.common.util.HadoopUtil;

+import org.apache.kylin.cube.CubeInstance;

+import org.apache.kylin.cube.CubeManager;

+import org.apache.kylin.cube.CubeSegment;

+import org.apache.kylin.cube.cuboid.CuboidModeEnum;

+import org.apache.kylin.engine.mr.steps.CubingExecutableUtil;

+import org.apache.kylin.engine.spark.application.SparkApplication;

+import org.apache.kylin.engine.spark.metadata.cube.PathManager;

+import org.apache.kylin.shaded.com.google.common.base.Preconditions;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import java.io.IOException;

+import java.util.Collections;

+import java.util.Set;

+

+public class FilterRecommendCuboidJob extends SparkApplication {

Review comment:

Maybe `CopyRemainingCuboidJob`?
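The suggested name matches what the job's loop does: it carries over the cuboids of the old segment that remain in the new cuboid list. A minimal sketch of that selection rule follows, assuming directory names are decimal cuboid IDs. The class and method names are hypothetical, and unlike the `Long.valueOf(cuboidId)` call in the diff, this version skips non-numeric names (for example a marker file) instead of throwing.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Hypothetical sketch of the "which cuboids remain" rule; not the code from the pull request.
public class CuboidSelectionSketch {

    /** Returns true when a directory name is the base cuboid or one of the recommended cuboids. */
    public static boolean shouldKeep(String dirName, long baseCuboid, Set<Long> recommendCuboids) {
        long cuboidId;
        try {
            cuboidId = Long.parseLong(dirName);
        } catch (NumberFormatException e) {
            return false; // assumption: anything that is not a numeric ID is not a cuboid directory
        }
        return cuboidId == baseCuboid || recommendCuboids.contains(cuboidId);
    }

    /** Filters a listing of directory names down to the cuboids to copy, preserving order. */
    public static List<String> selectCuboids(List<String> dirNames, long baseCuboid, Set<Long> recommendCuboids) {
        List<String> kept = new ArrayList<>();
        for (String name : dirNames) {
            if (shouldKeep(name, baseCuboid, recommendCuboids)) {
                kept.add(name);
            }
        }
        return kept;
    }
}
```

With a base cuboid of `7` and a recommended set of `{3, 5}`, a listing of `7`, `6`, `5`, `_SUCCESS`, `3` would be reduced to `7`, `5`, `3`.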

--
