
Posted to commits@zeppelin.apache.org by zj...@apache.org on 2019/04/10 02:56:08 UTC

[zeppelin] branch master updated: ZEPPELIN-4038. Deprecate spark 2.2 and earlier

This is an automated email from the ASF dual-hosted git repository.

zjffdu pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/zeppelin.git

The following commit(s) were added to refs/heads/master by this push:

new 8e6974f ZEPPELIN-4038. Deprecate spark 2.2 and earlier

8e6974f is described below

commit 8e6974fdc33e834bc01a5ee594e2cfca4ff3045f

Author: Jeff Zhang <zj...@apache.org>

AuthorDate: Mon Mar 25 17:56:52 2019 +0800

ZEPPELIN-4038. Deprecate spark 2.2 and earlier

### What is this PR for?

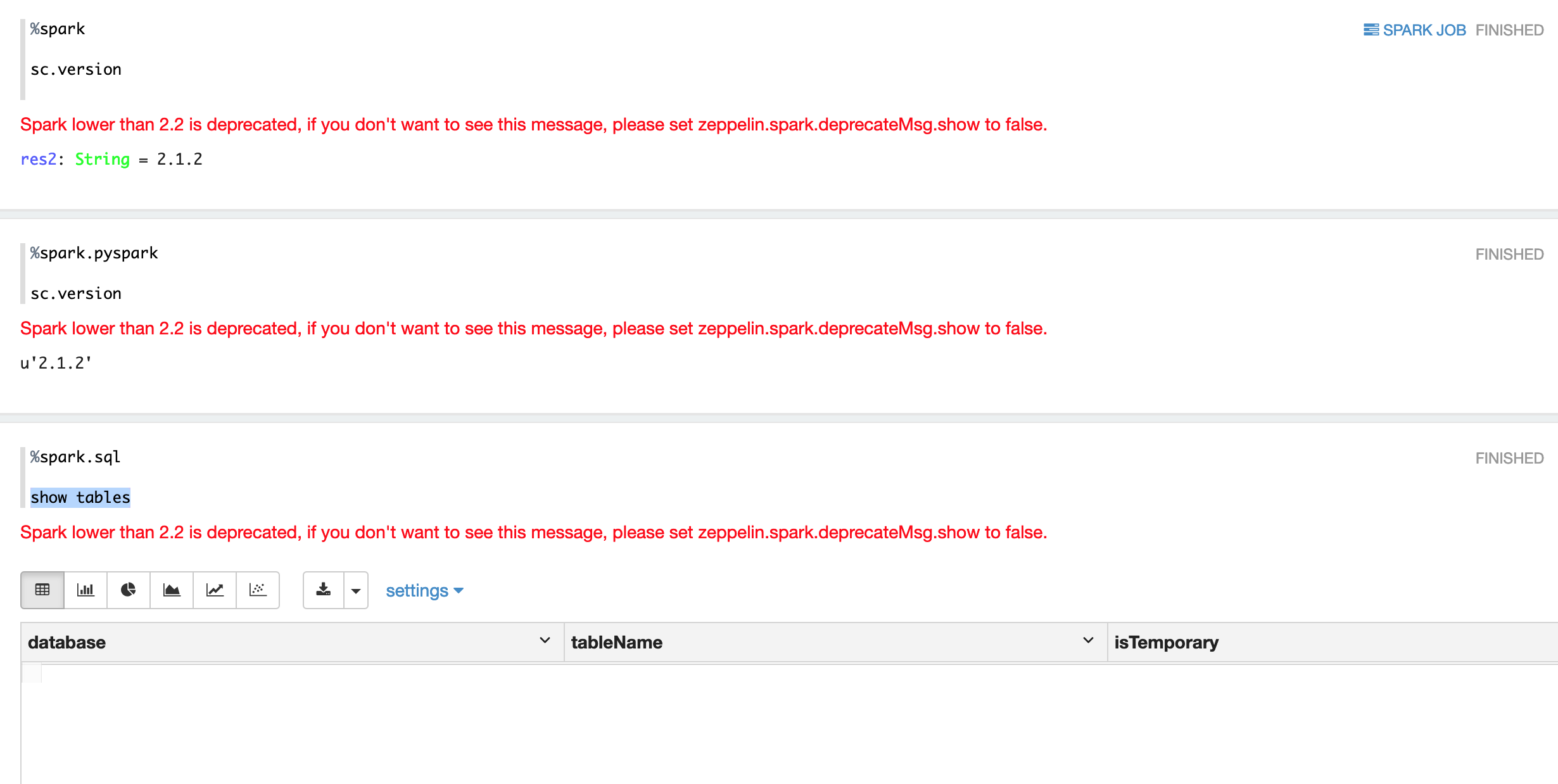

This PR deprecates Spark 2.2 and earlier. It displays a warning message in the frontend. Users can disable this message by setting `zeppelin.spark.deprecatedMsg.show` to `false`. The default is `true`.
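The check described above can be illustrated with a minimal standalone Java sketch. It is not Zeppelin's code. The hypothetical `DeprecationCheck` class and its `compareVersions` helper stand in for `SparkVersion.olderThan` and `Utils.printDeprecateMessage`. Only the property name and its `"true"` default come from this commit. The diff calls `olderThan(SparkVersion.SPARK_2_2_0)`, which is assumed here to be a strict comparison. Under that assumption, Spark 2.2.x itself would not trigger the warning.

```java
import java.util.Properties;

// Hypothetical standalone sketch; this is NOT Zeppelin's SparkVersion/Utils code.
class DeprecationCheck {
    // Property name and "true" default are taken from the commit.
    static final String SHOW_KEY = "zeppelin.spark.deprecatedMsg.show";

    // Compares "major.minor.patch" strings numerically; missing parts count as 0.
    static int compareVersions(String a, String b) {
        String[] pa = a.split("\\.");
        String[] pb = b.split("\\.");
        for (int i = 0; i < 3; i++) {
            int x = i < pa.length ? parsePart(pa[i]) : 0;
            int y = i < pb.length ? parsePart(pb[i]) : 0;
            if (x != y) {
                return Integer.compare(x, y);
            }
        }
        return 0;
    }

    // Keeps the leading digits of a part, e.g. "0-SNAPSHOT" -> 0.
    static int parsePart(String part) {
        String digits = part.replaceAll("[^0-9].*$", "");
        return digits.isEmpty() ? 0 : Integer.parseInt(digits);
    }

    // Warn only for versions strictly older than 2.2.0 (assumed olderThan semantics)
    // and only if the property is not set to "false".
    static boolean shouldShowWarning(String sparkVersion, Properties props) {
        boolean older = compareVersions(sparkVersion, "2.2.0") < 0;
        boolean enabled = Boolean.parseBoolean(props.getProperty(SHOW_KEY, "true"));
        return older && enabled;
    }

    public static void main(String[] args) {
        Properties defaults = new Properties();
        Properties disabled = new Properties();
        disabled.setProperty(SHOW_KEY, "false");
        System.out.println(shouldShowWarning("2.1.3", defaults)); // true
        System.out.println(shouldShowWarning("2.1.3", disabled)); // false
        System.out.println(shouldShowWarning("2.2.0", defaults)); // false
        System.out.println(shouldShowWarning("2.4.0", defaults)); // false
    }
}
```

Reading the flag with a `"true"` fallback means an interpreter setting that predates this property still shows the warning. Only an explicit `false` hides it.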

### What type of PR is it?

[Bug Fix]

### Todos

* [ ] - Task

### What is the Jira issue?

* https://jira.apache.org/jira/browse/ZEPPELIN-4038

### How should this be tested?

* CI pass

### Screenshots (if appropriate)

### Questions:

* Do the license files need updating?

* Are there breaking changes for older versions?

* Does this need documentation?

Author: Jeff Zhang <zj...@apache.org>

Closes #3341 from zjffdu/ZEPPELIN-4038 and squashes the following commits:

a66445678 [Jeff Zhang] ZEPPELIN-4038. Deprecate spark 2.2 and earlier

---

.../zeppelin/img/docs-img/spark_deprecate.png | Bin 0 -> 176802 bytes

docs/interpreter/spark.md | 6 ++++++

.../apache/zeppelin/spark/IPySparkInterpreter.java | 1 +

.../apache/zeppelin/spark/PySparkInterpreter.java | 1 +

.../apache/zeppelin/spark/SparkInterpreter.java | 2 ++

.../apache/zeppelin/spark/SparkRInterpreter.java | 3 ++-

.../apache/zeppelin/spark/SparkSqlInterpreter.java | 2 +-

.../org/apache/zeppelin/spark/SparkVersion.java | 1 +

.../main/java/org/apache/zeppelin/spark/Utils.java | 22 +++++++++++++++++++++

.../src/main/resources/interpreter-setting.json | 7 +++++++

.../zeppelin/spark/IPySparkInterpreterTest.java | 1 +

.../zeppelin/spark/NewSparkInterpreterTest.java | 7 +++++++

.../zeppelin/spark/NewSparkSqlInterpreterTest.java | 1 +

.../zeppelin/spark/OldSparkInterpreterTest.java | 1 +

.../zeppelin/spark/OldSparkSqlInterpreterTest.java | 1 +

.../spark/PySparkInterpreterMatplotlibTest.java | 1 +

.../zeppelin/spark/PySparkInterpreterTest.java | 1 +

.../zeppelin/spark/SparkRInterpreterTest.java | 3 ++-

.../zeppelin/spark/BaseSparkScalaInterpreter.scala | 2 +-

.../integration/ZeppelinSparkClusterTest.java | 2 ++

20 files changed, 61 insertions(+), 4 deletions(-)

diff --git a/docs/assets/themes/zeppelin/img/docs-img/spark_deprecate.png b/docs/assets/themes/zeppelin/img/docs-img/spark_deprecate.png

new file mode 100644

index 0000000..8a867cc

Binary files /dev/null and b/docs/assets/themes/zeppelin/img/docs-img/spark_deprecate.png differ

diff --git a/docs/interpreter/spark.md b/docs/interpreter/spark.md

index 9140825..c3c9fd7 100644

--- a/docs/interpreter/spark.md

+++ b/docs/interpreter/spark.md

@@ -374,6 +374,12 @@ Logical setup with Zeppelin, Kerberos Key Distribution Center (KDC), and Spark o

<img src="{{BASE_PATH}}/assets/themes/zeppelin/img/docs-img/kdc_zeppelin.png">

+## Deprecate Spark 2.2 and earlier versions

+Starting from 0.9, Zeppelin deprecates Spark 2.2 and earlier versions, so you will see a warning message when you use one of these versions.

+You can suppress this message by setting `zeppelin.spark.deprecatedMsg.show` to `false`.

+

+<img src="{{BASE_PATH}}/assets/themes/zeppelin/img/docs-img/spark_deprecate.png">

+

### Configuration Setup

1. On the server that Zeppelin is installed, install Kerberos client modules and configuration, krb5.conf.

diff --git a/spark/interpreter/src/main/java/org/apache/zeppelin/spark/IPySparkInterpreter.java b/spark/interpreter/src/main/java/org/apache/zeppelin/spark/IPySparkInterpreter.java

index 594c171..c69e6fd 100644

--- a/spark/interpreter/src/main/java/org/apache/zeppelin/spark/IPySparkInterpreter.java

+++ b/spark/interpreter/src/main/java/org/apache/zeppelin/spark/IPySparkInterpreter.java

@@ -85,6 +85,7 @@ public class IPySparkInterpreter extends IPythonInterpreter {

@Override

public InterpreterResult interpret(String st,

InterpreterContext context) throws InterpreterException {

+ Utils.printDeprecateMessage(sparkInterpreter.getSparkVersion(), context, properties);

InterpreterContext.set(context);

String jobGroupId = Utils.buildJobGroupId(context);

String jobDesc = Utils.buildJobDesc(context);

diff --git a/spark/interpreter/src/main/java/org/apache/zeppelin/spark/PySparkInterpreter.java b/spark/interpreter/src/main/java/org/apache/zeppelin/spark/PySparkInterpreter.java

index 486eca0..c365345 100644

--- a/spark/interpreter/src/main/java/org/apache/zeppelin/spark/PySparkInterpreter.java

+++ b/spark/interpreter/src/main/java/org/apache/zeppelin/spark/PySparkInterpreter.java

@@ -147,6 +147,7 @@ public class PySparkInterpreter extends PythonInterpreter {

@Override

public InterpreterResult interpret(String st, InterpreterContext context)

throws InterpreterException {

+ Utils.printDeprecateMessage(sparkInterpreter.getSparkVersion(), context, properties);

return super.interpret(st, context);

}

diff --git a/spark/interpreter/src/main/java/org/apache/zeppelin/spark/SparkInterpreter.java b/spark/interpreter/src/main/java/org/apache/zeppelin/spark/SparkInterpreter.java

index 43b9e76..ec0be5f 100644

--- a/spark/interpreter/src/main/java/org/apache/zeppelin/spark/SparkInterpreter.java

+++ b/spark/interpreter/src/main/java/org/apache/zeppelin/spark/SparkInterpreter.java

@@ -28,6 +28,7 @@ import org.apache.zeppelin.interpreter.thrift.InterpreterCompletion;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

+import java.io.IOException;

import java.util.List;

import java.util.Properties;

@@ -74,6 +75,7 @@ public class SparkInterpreter extends AbstractSparkInterpreter {

@Override

public InterpreterResult internalInterpret(String st, InterpreterContext context)

throws InterpreterException {

+ Utils.printDeprecateMessage(delegation.getSparkVersion(), context, properties);

return delegation.interpret(st, context);

}

diff --git a/spark/interpreter/src/main/java/org/apache/zeppelin/spark/SparkRInterpreter.java b/spark/interpreter/src/main/java/org/apache/zeppelin/spark/SparkRInterpreter.java

index 7265ae4..3b14eed 100644

--- a/spark/interpreter/src/main/java/org/apache/zeppelin/spark/SparkRInterpreter.java

+++ b/spark/interpreter/src/main/java/org/apache/zeppelin/spark/SparkRInterpreter.java

@@ -121,7 +121,8 @@ public class SparkRInterpreter extends Interpreter {

@Override

public InterpreterResult interpret(String lines, InterpreterContext interpreterContext)

throws InterpreterException {

-

+ Utils.printDeprecateMessage(sparkInterpreter.getSparkVersion(),

+ interpreterContext, properties);

String jobGroup = Utils.buildJobGroupId(interpreterContext);

String jobDesc = Utils.buildJobDesc(interpreterContext);

sparkInterpreter.getSparkContext().setJobGroup(jobGroup, jobDesc, false);

diff --git a/spark/interpreter/src/main/java/org/apache/zeppelin/spark/SparkSqlInterpreter.java b/spark/interpreter/src/main/java/org/apache/zeppelin/spark/SparkSqlInterpreter.java

index 040556b..4e63760 100644

--- a/spark/interpreter/src/main/java/org/apache/zeppelin/spark/SparkSqlInterpreter.java

+++ b/spark/interpreter/src/main/java/org/apache/zeppelin/spark/SparkSqlInterpreter.java

@@ -78,7 +78,7 @@ public class SparkSqlInterpreter extends AbstractInterpreter {

return new InterpreterResult(Code.ERROR, "Spark "

+ sparkInterpreter.getSparkVersion().toString() + " is not supported");

}

-

+ Utils.printDeprecateMessage(sparkInterpreter.getSparkVersion(), context, properties);

sparkInterpreter.getZeppelinContext().setInterpreterContext(context);

SQLContext sqlc = sparkInterpreter.getSQLContext();

SparkContext sc = sqlc.sparkContext();

diff --git a/spark/interpreter/src/main/java/org/apache/zeppelin/spark/SparkVersion.java b/spark/interpreter/src/main/java/org/apache/zeppelin/spark/SparkVersion.java

index de65105..88b10ef 100644

--- a/spark/interpreter/src/main/java/org/apache/zeppelin/spark/SparkVersion.java

+++ b/spark/interpreter/src/main/java/org/apache/zeppelin/spark/SparkVersion.java

@@ -28,6 +28,7 @@ public class SparkVersion {

public static final SparkVersion SPARK_1_6_0 = SparkVersion.fromVersionString("1.6.0");

public static final SparkVersion SPARK_2_0_0 = SparkVersion.fromVersionString("2.0.0");

+ public static final SparkVersion SPARK_2_2_0 = SparkVersion.fromVersionString("2.2.0");

public static final SparkVersion SPARK_2_3_0 = SparkVersion.fromVersionString("2.3.0");

public static final SparkVersion SPARK_2_3_1 = SparkVersion.fromVersionString("2.3.1");

public static final SparkVersion SPARK_2_4_0 = SparkVersion.fromVersionString("2.4.0");

diff --git a/spark/interpreter/src/main/java/org/apache/zeppelin/spark/Utils.java b/spark/interpreter/src/main/java/org/apache/zeppelin/spark/Utils.java

index cd6c607..1220bd7 100644

--- a/spark/interpreter/src/main/java/org/apache/zeppelin/spark/Utils.java

+++ b/spark/interpreter/src/main/java/org/apache/zeppelin/spark/Utils.java

@@ -18,10 +18,12 @@

package org.apache.zeppelin.spark;

import org.apache.zeppelin.interpreter.InterpreterContext;

+import org.apache.zeppelin.interpreter.InterpreterException;

import org.apache.zeppelin.user.AuthenticationInfo;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

+import java.io.IOException;

import java.lang.reflect.Constructor;

import java.lang.reflect.InvocationTargetException;

import java.util.Properties;

@@ -33,6 +35,10 @@ import java.util.regex.Pattern;

*/

class Utils {

public static Logger logger = LoggerFactory.getLogger(Utils.class);

+ public static String DEPRRECATED_MESSAGE =

+ "%html <font color=\"red\">Spark versions lower than 2.2 are deprecated. " +

+ "If you don't want to see this message, please set " +

+ "zeppelin.spark.deprecatedMsg.show to false.</font>";

private static final String SCALA_COMPILER_VERSION = evaluateScalaCompilerVersion();

static Object invokeMethod(Object o, String name) {

@@ -178,4 +184,20 @@ class Utils {

}

return uName;

}

+

+ public static void printDeprecateMessage(SparkVersion sparkVersion,

+ InterpreterContext context,

+ Properties properties) throws InterpreterException {

+ context.out.clear();

+ if (sparkVersion.olderThan(SparkVersion.SPARK_2_2_0)

+ && Boolean.parseBoolean(

+ properties.getProperty("zeppelin.spark.deprecatedMsg.show", "true"))) {

+ try {

+ context.out.write(DEPRRECATED_MESSAGE);

+ context.out.write("%text ");

+ } catch (IOException e) {

+ throw new InterpreterException(e);

+ }

+ }

+ }

}

diff --git a/spark/interpreter/src/main/resources/interpreter-setting.json b/spark/interpreter/src/main/resources/interpreter-setting.json

index 5ff5cfe..341beda 100644

--- a/spark/interpreter/src/main/resources/interpreter-setting.json

+++ b/spark/interpreter/src/main/resources/interpreter-setting.json

@@ -95,6 +95,13 @@

"defaultValue": true,

"description": "Whether to enable color output of spark scala interpreter",

"type": "checkbox"

+ },

+ "zeppelin.spark.deprecatedMsg.show": {

+ "envName": null,

+ "propertyName": "zeppelin.spark.deprecatedMsg.show",

+ "defaultValue": true,

+ "description": "Whether to show the Spark deprecation message",

+ "type": "checkbox"

}

},

"editor": {

diff --git a/spark/interpreter/src/test/java/org/apache/zeppelin/spark/IPySparkInterpreterTest.java b/spark/interpreter/src/test/java/org/apache/zeppelin/spark/IPySparkInterpreterTest.java

index 20c336d..b8870e6 100644

--- a/spark/interpreter/src/test/java/org/apache/zeppelin/spark/IPySparkInterpreterTest.java

+++ b/spark/interpreter/src/test/java/org/apache/zeppelin/spark/IPySparkInterpreterTest.java

@@ -64,6 +64,7 @@ public class IPySparkInterpreterTest extends IPythonInterpreterTest {

p.setProperty("zeppelin.pyspark.python", "python");

p.setProperty("zeppelin.dep.localrepo", Files.createTempDir().getAbsolutePath());

p.setProperty("zeppelin.python.gatewayserver_address", "127.0.0.1");

+ p.setProperty("zeppelin.spark.deprecatedMsg.show", "false");

return p;

}

diff --git a/spark/interpreter/src/test/java/org/apache/zeppelin/spark/NewSparkInterpreterTest.java b/spark/interpreter/src/test/java/org/apache/zeppelin/spark/NewSparkInterpreterTest.java

index 7c7dad9..773deae 100644

--- a/spark/interpreter/src/test/java/org/apache/zeppelin/spark/NewSparkInterpreterTest.java

+++ b/spark/interpreter/src/test/java/org/apache/zeppelin/spark/NewSparkInterpreterTest.java

@@ -86,6 +86,7 @@ public class NewSparkInterpreterTest {

properties.setProperty("zeppelin.spark.uiWebUrl", "fake_spark_weburl");

// disable color output for easy testing

properties.setProperty("zeppelin.spark.scala.color", "false");

+ properties.setProperty("zeppelin.spark.deprecatedMsg.show", "false");

InterpreterContext context = InterpreterContext.builder()

.setInterpreterOut(new InterpreterOutput(null))

@@ -382,6 +383,7 @@ public class NewSparkInterpreterTest {

properties.setProperty("zeppelin.dep.localrepo", Files.createTempDir().getAbsolutePath());

// disable color output for easy testing

properties.setProperty("zeppelin.spark.scala.color", "false");

+ properties.setProperty("zeppelin.spark.deprecatedMsg.show", "false");

InterpreterGroup intpGroup = new InterpreterGroup();

interpreter = new SparkInterpreter(properties);

@@ -413,6 +415,7 @@ public class NewSparkInterpreterTest {

properties.setProperty("zeppelin.spark.printREPLOutput", "false");

// disable color output for easy testing

properties.setProperty("zeppelin.spark.scala.color", "false");

+ properties.setProperty("zeppelin.spark.deprecatedMsg.show", "false");

InterpreterContext.set(getInterpreterContext());

interpreter = new SparkInterpreter(properties);

@@ -442,6 +445,7 @@ public class NewSparkInterpreterTest {

properties.setProperty("spark.scheduler.mode", "FAIR");

// disable color output for easy testing

properties.setProperty("zeppelin.spark.scala.color", "false");

+ properties.setProperty("zeppelin.spark.deprecatedMsg.show", "false");

interpreter = new SparkInterpreter(properties);

assertTrue(interpreter.getDelegation() instanceof NewSparkInterpreter);

@@ -473,6 +477,7 @@ public class NewSparkInterpreterTest {

properties.setProperty("spark.ui.enabled", "false");

// disable color output for easy testing

properties.setProperty("zeppelin.spark.scala.color", "false");

+ properties.setProperty("zeppelin.spark.deprecatedMsg.show", "false");

interpreter = new SparkInterpreter(properties);

assertTrue(interpreter.getDelegation() instanceof NewSparkInterpreter);

@@ -500,6 +505,7 @@ public class NewSparkInterpreterTest {

properties.setProperty("zeppelin.spark.ui.hidden", "true");

// disable color output for easy testing

properties.setProperty("zeppelin.spark.scala.color", "false");

+ properties.setProperty("zeppelin.spark.deprecatedMsg.show", "false");

interpreter = new SparkInterpreter(properties);

assertTrue(interpreter.getDelegation() instanceof NewSparkInterpreter);

@@ -525,6 +531,7 @@ public class NewSparkInterpreterTest {

properties.setProperty("zeppelin.spark.useNew", "true");

// disable color output for easy testing

properties.setProperty("zeppelin.spark.scala.color", "false");

+ properties.setProperty("zeppelin.spark.deprecatedMsg.show", "false");

SparkInterpreter interpreter1 = new SparkInterpreter(properties);

SparkInterpreter interpreter2 = new SparkInterpreter(properties);

diff --git a/spark/interpreter/src/test/java/org/apache/zeppelin/spark/NewSparkSqlInterpreterTest.java b/spark/interpreter/src/test/java/org/apache/zeppelin/spark/NewSparkSqlInterpreterTest.java

index 573ce51..ac5c9e1 100644

--- a/spark/interpreter/src/test/java/org/apache/zeppelin/spark/NewSparkSqlInterpreterTest.java

+++ b/spark/interpreter/src/test/java/org/apache/zeppelin/spark/NewSparkSqlInterpreterTest.java

@@ -55,6 +55,7 @@ public class NewSparkSqlInterpreterTest {

p.setProperty("zeppelin.spark.sql.stacktrace", "true");

p.setProperty("zeppelin.spark.useNew", "true");

p.setProperty("zeppelin.spark.useHiveContext", "true");

+ p.setProperty("zeppelin.spark.deprecatedMsg.show", "false");

intpGroup = new InterpreterGroup();

sparkInterpreter = new SparkInterpreter(p);

diff --git a/spark/interpreter/src/test/java/org/apache/zeppelin/spark/OldSparkInterpreterTest.java b/spark/interpreter/src/test/java/org/apache/zeppelin/spark/OldSparkInterpreterTest.java

index a435272..c2a1bb0 100644

--- a/spark/interpreter/src/test/java/org/apache/zeppelin/spark/OldSparkInterpreterTest.java

+++ b/spark/interpreter/src/test/java/org/apache/zeppelin/spark/OldSparkInterpreterTest.java

@@ -87,6 +87,7 @@ public class OldSparkInterpreterTest {

p.setProperty("zeppelin.spark.property_1", "value_1");

// disable color output for easy testing

p.setProperty("zeppelin.spark.scala.color", "false");

+ p.setProperty("zeppelin.spark.deprecatedMsg.show", "false");

return p;

}

diff --git a/spark/interpreter/src/test/java/org/apache/zeppelin/spark/OldSparkSqlInterpreterTest.java b/spark/interpreter/src/test/java/org/apache/zeppelin/spark/OldSparkSqlInterpreterTest.java

index fa1e257..425651c 100644

--- a/spark/interpreter/src/test/java/org/apache/zeppelin/spark/OldSparkSqlInterpreterTest.java

+++ b/spark/interpreter/src/test/java/org/apache/zeppelin/spark/OldSparkSqlInterpreterTest.java

@@ -57,6 +57,7 @@ public class OldSparkSqlInterpreterTest {

p.setProperty("zeppelin.spark.maxResult", "10");

p.setProperty("zeppelin.spark.concurrentSQL", "false");

p.setProperty("zeppelin.spark.sql.stacktrace", "false");

+ p.setProperty("zeppelin.spark.deprecatedMsg.show", "false");

repl = new SparkInterpreter(p);

intpGroup = new InterpreterGroup();

diff --git a/spark/interpreter/src/test/java/org/apache/zeppelin/spark/PySparkInterpreterMatplotlibTest.java b/spark/interpreter/src/test/java/org/apache/zeppelin/spark/PySparkInterpreterMatplotlibTest.java

index 5a05ad5..305839a 100644

--- a/spark/interpreter/src/test/java/org/apache/zeppelin/spark/PySparkInterpreterMatplotlibTest.java

+++ b/spark/interpreter/src/test/java/org/apache/zeppelin/spark/PySparkInterpreterMatplotlibTest.java

@@ -102,6 +102,7 @@ public class PySparkInterpreterMatplotlibTest {

p.setProperty("zeppelin.dep.localrepo", tmpDir.newFolder().getAbsolutePath());

p.setProperty("zeppelin.pyspark.useIPython", "false");

p.setProperty("zeppelin.python.gatewayserver_address", "127.0.0.1");

+ p.setProperty("zeppelin.spark.deprecatedMsg.show", "false");

return p;

}

diff --git a/spark/interpreter/src/test/java/org/apache/zeppelin/spark/PySparkInterpreterTest.java b/spark/interpreter/src/test/java/org/apache/zeppelin/spark/PySparkInterpreterTest.java

index 64f1ff5..5681c18 100644

--- a/spark/interpreter/src/test/java/org/apache/zeppelin/spark/PySparkInterpreterTest.java

+++ b/spark/interpreter/src/test/java/org/apache/zeppelin/spark/PySparkInterpreterTest.java

@@ -54,6 +54,7 @@ public class PySparkInterpreterTest extends PythonInterpreterTest {

properties.setProperty("zeppelin.spark.useNew", "true");

properties.setProperty("zeppelin.spark.test", "true");

properties.setProperty("zeppelin.python.gatewayserver_address", "127.0.0.1");

+ properties.setProperty("zeppelin.spark.deprecatedMsg.show", "false");

// create interpreter group

intpGroup = new InterpreterGroup();

diff --git a/spark/interpreter/src/test/java/org/apache/zeppelin/spark/SparkRInterpreterTest.java b/spark/interpreter/src/test/java/org/apache/zeppelin/spark/SparkRInterpreterTest.java

index fb9ad62..ae48cbb 100644

--- a/spark/interpreter/src/test/java/org/apache/zeppelin/spark/SparkRInterpreterTest.java

+++ b/spark/interpreter/src/test/java/org/apache/zeppelin/spark/SparkRInterpreterTest.java

@@ -56,7 +56,8 @@ public class SparkRInterpreterTest {

properties.setProperty("zeppelin.spark.useNew", "true");

properties.setProperty("zeppelin.R.knitr", "true");

properties.setProperty("spark.r.backendConnectionTimeout", "10");

-

+ properties.setProperty("zeppelin.spark.deprecatedMsg.show", "false");

+

InterpreterContext context = getInterpreterContext();

InterpreterContext.set(context);

sparkRInterpreter = new SparkRInterpreter(properties);

diff --git a/spark/spark-scala-parent/src/main/scala/org/apache/zeppelin/spark/BaseSparkScalaInterpreter.scala b/spark/spark-scala-parent/src/main/scala/org/apache/zeppelin/spark/BaseSparkScalaInterpreter.scala

index cd241a8..3a2cd0b 100644

--- a/spark/spark-scala-parent/src/main/scala/org/apache/zeppelin/spark/BaseSparkScalaInterpreter.scala

+++ b/spark/spark-scala-parent/src/main/scala/org/apache/zeppelin/spark/BaseSparkScalaInterpreter.scala

@@ -95,7 +95,6 @@ abstract class BaseSparkScalaInterpreter(val conf: SparkConf,

System.setOut(Console.out)

interpreterOutput.setInterpreterOutput(context.out)

interpreterOutput.ignoreLeadingNewLinesFromScalaReporter()

- context.out.clear()

val status = scalaInterpret(code) match {

case success@scala.tools.nsc.interpreter.IR.Success =>

@@ -122,6 +121,7 @@ abstract class BaseSparkScalaInterpreter(val conf: SparkConf,

// reset the java stdout

System.setOut(originalOut)

+ context.out.write("")

val lastStatus = _interpret(code) match {

case scala.tools.nsc.interpreter.IR.Success =>

InterpreterResult.Code.SUCCESS

diff --git a/zeppelin-interpreter-integration/src/test/java/org/apache/zeppelin/integration/ZeppelinSparkClusterTest.java b/zeppelin-interpreter-integration/src/test/java/org/apache/zeppelin/integration/ZeppelinSparkClusterTest.java

index 78ff006..8d29163 100644

--- a/zeppelin-interpreter-integration/src/test/java/org/apache/zeppelin/integration/ZeppelinSparkClusterTest.java

+++ b/zeppelin-interpreter-integration/src/test/java/org/apache/zeppelin/integration/ZeppelinSparkClusterTest.java

@@ -112,6 +112,8 @@ public abstract class ZeppelinSparkClusterTest extends AbstractTestRestApi {

new InterpreterProperty("spark.serializer", "org.apache.spark.serializer.KryoSerializer"));

sparkProperties.put("zeppelin.spark.scala.color",

new InterpreterProperty("zeppelin.spark.scala.color", "false"));

+ sparkProperties.put("zeppelin.spark.deprecatedMsg.show",

+ new InterpreterProperty("zeppelin.spark.deprecatedMsg.show", "false"));

TestUtils.getInstance(Notebook.class).getInterpreterSettingManager().restart(sparkIntpSetting.getId());

}
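`Utils.printDeprecateMessage` writes the warning as an `%html` result and then writes `%text `. The likely intent is that anything the interpreter prints afterwards is rendered as plain text rather than inside the HTML block. The sketch below is a deliberately simplified, hypothetical model of that idea. It splits one output string into typed segments wherever a `%html ` or `%text ` marker appears. The `OutputSegments` class is illustrative only. Zeppelin's actual `InterpreterOutput` parsing rules may differ, for example where a marker may appear and which display types exist.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified model of "%type " display markers; Zeppelin's
// InterpreterOutput has its own (more involved) parsing rules.
class OutputSegments {
    private static final String[] TYPES = {"html", "text"};

    // Returns the type name if a "%<type> " marker starts at index i, else null.
    static String markerAt(String s, int i) {
        for (String t : TYPES) {
            if (s.startsWith("%" + t + " ", i)) {
                return t;
            }
        }
        return null;
    }

    // Splits output into {type, body} pairs; text before any marker is "text".
    // Segments with an empty body are dropped.
    static List<String[]> split(String out) {
        List<String[]> segments = new ArrayList<>();
        String type = "text";
        StringBuilder body = new StringBuilder();
        int i = 0;
        while (i < out.length()) {
            String marker = markerAt(out, i);
            if (marker != null) {
                if (body.length() > 0) {
                    segments.add(new String[] {type, body.toString()});
                }
                type = marker;
                body.setLength(0);
                i += marker.length() + 2; // skip '%', the type name and the space
            } else {
                body.append(out.charAt(i));
                i++;
            }
        }
        if (body.length() > 0) {
            segments.add(new String[] {type, body.toString()});
        }
        return segments;
    }

    public static void main(String[] args) {
        // Warning written first, then "%text ", then the interpreter's own output.
        String out = "%html <font color=\"red\">deprecated</font>%text res0: Int = 1";
        for (String[] seg : split(out)) {
            System.out.println(seg[0] + " -> " + seg[1]);
        }
        // prints:
        // html -> <font color="red">deprecated</font>
        // text -> res0: Int = 1
    }
}
```

Under this model, setting `zeppelin.spark.deprecatedMsg.show` to `false` means nothing is written before the interpreter output. Only a single text segment remains. This is likely why the tests in this commit disable the flag before asserting on interpreter output.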