Posted to commits@hudi.apache.org by GitBox <gi...@apache.org> on 2021/06/21 09:54:14 UTC

[GitHub] [hudi] mauropelucchi commented on issue #2564: Hoodie clean is not deleting old files

mauropelucchi commented on issue #2564:

URL: https://github.com/apache/hudi/issues/2564#issuecomment-864899684

Hello @vinothchandar @n3nash

We continue to have this type of issue.

Let me share our situation. The configuration is the same for all the tables in our environment.

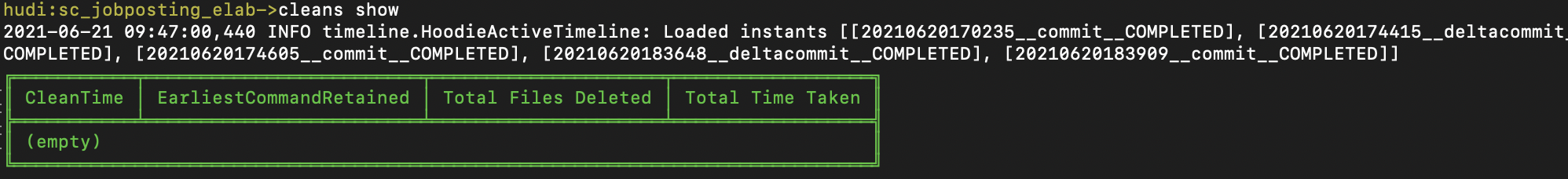

Second table:

This is our current configuration:

```
def _get_hudi_options(self, table_name: str, parallelism: int):
    return {
        'hoodie.table.name': table_name,
        'hoodie.datasource.write.recordkey.field': 'posting_key',
        'hoodie.datasource.write.partitionpath.field': 'range_partition',
        'hoodie.datasource.write.table.name': table_name,
        'hoodie.datasource.write.precombine.field': 'update_date',
        'hoodie.datasource.write.table.type': 'MERGE_ON_READ',
        'hoodie.cleaner.policy': 'KEEP_LATEST_COMMITS',
        'hoodie.consistency.check.enabled': True,
        'hoodie.bloom.index.filter.type': 'dynamic_v0',
        'hoodie.bloom.index.bucketized.checking': False,
        'hoodie.memory.merge.max.size': '2004857600000',
        'hoodie.upsert.shuffle.parallelism': parallelism,
        'hoodie.insert.shuffle.parallelism': parallelism,
        'hoodie.bulkinsert.shuffle.parallelism': parallelism,
        'hoodie.parquet.small.file.limit': '204857600',
        'hoodie.parquet.max.file.size': str(self.__parquet_max_file_size_byte),
        'hoodie.memory.compaction.fraction': '384402653184',
        'hoodie.write.buffer.limit.bytes': str(128 * 1024 * 1024),
        'hoodie.compact.inline': True,
        'hoodie.compact.inline.max.delta.commits': 1,
        'hoodie.datasource.compaction.async.enable': False,
        'hoodie.parquet.compression.ratio': '0.35',
        'hoodie.logfile.max.size': '268435456',
        'hoodie.logfile.to.parquet.compression.ratio': '0.5',
        'hoodie.datasource.write.hive_style_partitioning': True,
        'hoodie.keep.min.commits': 5,
        'hoodie.keep.max.commits': 6,
        'hoodie.copyonwrite.record.size.estimate': 32,
        'hoodie.cleaner.commits.retained': 4,
        'hoodie.clean.automatic': True
    }
```
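For reference, a minimal sketch of how the retention-related values in this configuration could be sanity-checked before a write. It assumes the ordering `hoodie.cleaner.commits.retained` < `hoodie.keep.min.commits` < `hoodie.keep.max.commits` is what Hudi expects; the helper name `check_retention_options` is hypothetical and not part of Hudi.

```python
def check_retention_options(options: dict) -> list:
    """Return a list of problems found; an empty list means the ordering holds.

    Hypothetical helper, not a Hudi API. Assumed ordering:
    cleaner.commits.retained < keep.min.commits < keep.max.commits.
    """
    retained = int(options['hoodie.cleaner.commits.retained'])
    keep_min = int(options['hoodie.keep.min.commits'])
    keep_max = int(options['hoodie.keep.max.commits'])

    problems = []
    if not retained < keep_min:
        problems.append(
            f'hoodie.cleaner.commits.retained ({retained}) should be lower '
            f'than hoodie.keep.min.commits ({keep_min})'
        )
    if not keep_min < keep_max:
        problems.append(
            f'hoodie.keep.min.commits ({keep_min}) should be lower '
            f'than hoodie.keep.max.commits ({keep_max})'
        )
    return problems


# Values copied from the configuration above.
current = {
    'hoodie.cleaner.commits.retained': 4,
    'hoodie.keep.min.commits': 5,
    'hoodie.keep.max.commits': 6,
}
print(check_retention_options(current) or 'retention ordering looks consistent')
```

With the values above (4, 5, 6) the check reports no problems, so under that assumption the ordering of these three settings alone would not explain the files that are left behind.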

Any ideas?
