You are viewing a plain text version of this content. The canonical link for it is here.

Posted to issues@iceberg.apache.org by GitBox <gi...@apache.org> on 2022/05/30 16:24:55 UTC

[GitHub] [iceberg] hililiwei opened a new pull request, #4904: Flink: new sink base on the unified sink API

hililiwei opened a new pull request, #4904:

URL: https://github.com/apache/iceberg/pull/4904

## What is the purpose of the change

The following is the background and basis for this PR:

- [FLIP-143: Unified Sink API](https://cwiki.apache.org/confluence/display/FLINK/FLIP-143%3A+Unified+Sink+API)

- [FLIP-191: Extend unified Sink interface to support small file compaction](https://cwiki.apache.org/confluence/display/FLINK/FLIP-191%3A+Extend+unified+Sink+interface+to+support+small+file+compaction)

1. FLIP-143: Unified Sink API

The Unified Sink API was introduced by FLink in version 1.12, and its motivation is mainly as follows:

> As discussed in FLIP-131, Flink will deprecate the DataSet API in favor of DataStream API and Table API. Users should be able to use DataStream API to write jobs that support both bounded and unbounded execution modes. However Flink does not provide a sink API to guarantee the exactly once semantics in both bounded and unbounded scenarios, which blocks the unification.

>

> So we want to introduce a new unified sink API which could let the user develop sink once and run it everywhere. Specifically Flink allows the user to

>

> Choose the different SDK(SQL/Table/DataStream)

Choose the different execution mode(Batch/Stream) according to the scenarios(bounded/unbounded)

We hope these things(SDK/Execution mode) are transparent to the sink API.

This is the main change point of this PR. It retains most of the underlying logic, provides a V2 version of FlinkSink externally, and tries to minimize the impact of this change on users' experience.

After this PR is completed, we can try to contact Flink community to see if it is possible to directly include this new Sink into Flink repo and exist as a separate Connector.

For the above two FLIP and some other reasons, I think we should implement our Sink based on the latest API, which is clearer in structure, and this helps keep us in line with Flink..

2. FLIP-191: Extend unified Sink interface to support small file compaction

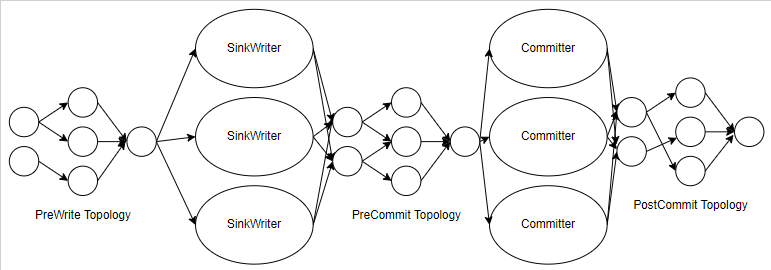

I think this illustration is very clear.

I'm going to do this in a separate PR because it's a bit of a performance bottleneck when we're dealing with a lot of data in production. If possible, I would like to raise PR after those issues are resolved.

## Brief change log

1. Add new flink sink base on [FLIP-143: Unified Sink API](https://cwiki.apache.org/confluence/display/FLINK/FLIP-143%3A+Unified+Sink+API) and [FLIP-191: Extend unified Sink interface to support small file compaction](https://cwiki.apache.org/confluence/display/FLINK/FLIP-191%3A+Extend+unified+Sink+interface+to+support+small+file+compaction)

2. `Iceberg Table Sink` uses the new sink instead of the old one.

## Verifying this change

1. Use existing unit tests.

2. Add some new

## To do

1. Unit tests to cover more case

2. Small file merge

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For additional commands, e-mail: issues-help@iceberg.apache.org

[GitHub] [iceberg] hililiwei commented on a diff in pull request #4904: Flink: new sink base on the unified sink API

Posted by GitBox <gi...@apache.org>.

hililiwei commented on code in PR #4904:

URL: https://github.com/apache/iceberg/pull/4904#discussion_r925805888

##########

flink/v1.15/flink/src/main/java/org/apache/iceberg/flink/sink/v2/FlinkSink.java:

##########

@@ -0,0 +1,603 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+

+package org.apache.iceberg.flink.sink.v2;

+

+import java.io.IOException;

+import java.io.UncheckedIOException;

+import java.util.Collection;

+import java.util.List;

+import java.util.Map;

+import java.util.Set;

+import java.util.function.Function;

+import javax.annotation.Nullable;

+import org.apache.flink.api.common.functions.MapFunction;

+import org.apache.flink.api.common.functions.RichMapFunction;

+import org.apache.flink.api.common.typeinfo.TypeInformation;

+import org.apache.flink.api.connector.sink2.Committer;

+import org.apache.flink.api.connector.sink2.StatefulSink;

+import org.apache.flink.configuration.Configuration;

+import org.apache.flink.configuration.ReadableConfig;

+import org.apache.flink.core.io.SimpleVersionedSerializer;

+import org.apache.flink.streaming.api.connector.sink2.CommittableMessage;

+import org.apache.flink.streaming.api.connector.sink2.CommittableWithLineage;

+import org.apache.flink.streaming.api.connector.sink2.WithPostCommitTopology;

+import org.apache.flink.streaming.api.connector.sink2.WithPreCommitTopology;

+import org.apache.flink.streaming.api.connector.sink2.WithPreWriteTopology;

+import org.apache.flink.streaming.api.datastream.DataStream;

+import org.apache.flink.streaming.api.datastream.DataStreamSink;

+import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

+import org.apache.flink.streaming.api.operators.StreamingRuntimeContext;

+import org.apache.flink.table.api.TableSchema;

+import org.apache.flink.table.data.RowData;

+import org.apache.flink.table.data.util.DataFormatConverters;

+import org.apache.flink.table.types.DataType;

+import org.apache.flink.table.types.logical.RowType;

+import org.apache.flink.types.Row;

+import org.apache.iceberg.DataFile;

+import org.apache.iceberg.DistributionMode;

+import org.apache.iceberg.FileFormat;

+import org.apache.iceberg.PartitionField;

+import org.apache.iceberg.PartitionSpec;

+import org.apache.iceberg.Schema;

+import org.apache.iceberg.SerializableTable;

+import org.apache.iceberg.Table;

+import org.apache.iceberg.common.DynFields;

+import org.apache.iceberg.flink.FlinkSchemaUtil;

+import org.apache.iceberg.flink.FlinkWriteConf;

+import org.apache.iceberg.flink.FlinkWriteOptions;

+import org.apache.iceberg.flink.TableLoader;

+import org.apache.iceberg.flink.sink.EqualityFieldKeySelector;

+import org.apache.iceberg.flink.sink.PartitionKeySelector;

+import org.apache.iceberg.flink.sink.RowDataTaskWriterFactory;

+import org.apache.iceberg.flink.util.FlinkCompatibilityUtil;

+import org.apache.iceberg.relocated.com.google.common.base.Preconditions;

+import org.apache.iceberg.relocated.com.google.common.collect.Lists;

+import org.apache.iceberg.relocated.com.google.common.collect.Maps;

+import org.apache.iceberg.relocated.com.google.common.collect.Sets;

+import org.apache.iceberg.types.TypeUtil;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import static org.apache.iceberg.TableProperties.WRITE_DISTRIBUTION_MODE;

+

+public class FlinkSink implements StatefulSink<RowData, IcebergStreamWriterState>,

+ WithPreWriteTopology<RowData>,

+ WithPreCommitTopology<RowData, IcebergFlinkCommittable>,

+ WithPostCommitTopology<RowData, IcebergFlinkCommittable> {

+ private static final Logger LOG = LoggerFactory.getLogger(FlinkSink.class);

+

+ private final TableLoader tableLoader;

+ private final Table table;

+ private final boolean overwrite;

+ private final boolean upsertMode;

+ private final DistributionMode distributionMode;

+ private final List<String> equalityFieldColumns;

+ private final List<Integer> equalityFieldIds;

+ private final FileFormat dataFileFormat;

+ private final int workerPoolSize;

+ private final long targetDataFileSize;

+ private final String uidPrefix;

+ private final Map<String, String> snapshotProperties;

+ private final RowType flinkRowType;

+

+ public FlinkSink(TableLoader tableLoader,

+ @Nullable Table newTable,

+ TableSchema tableSchema,

+ List<String> equalityFieldColumns,

+ @Nullable String uidPrefix,

+ ReadableConfig readableConfig,

+ Map<String, String> writeOptions,

+ Map<String, String> snapshotProperties) {

+ this.tableLoader = tableLoader;

+ this.equalityFieldColumns = equalityFieldColumns;

+ this.uidPrefix = uidPrefix == null ? "" : uidPrefix;

+ this.snapshotProperties = snapshotProperties;

+

+ Preconditions.checkNotNull(tableLoader, "Table loader shouldn't be null");

+

+ if (newTable == null) {

+ tableLoader.open();

+ try (TableLoader loader = tableLoader) {

+ this.table = loader.loadTable();

+ } catch (IOException e) {

+ throw new UncheckedIOException("Failed to load iceberg table from table loader: " + tableLoader, e);

+ }

+ } else {

+ this.table = newTable;

+ }

+ FlinkWriteConf flinkWriteConf = new FlinkWriteConf(table, writeOptions, readableConfig);

+

+ this.equalityFieldIds = checkAndGetEqualityFieldIds(table, equalityFieldColumns);

+

+ this.flinkRowType = toFlinkRowType(table.schema(), tableSchema);

+

+ // Validate the equality fields and partition fields if we enable the upsert mode.

+ if (flinkWriteConf.upsertMode()) {

+ Preconditions.checkState(

+ !flinkWriteConf.overwriteMode(),

+ "OVERWRITE mode shouldn't be enable when configuring to use UPSERT data stream.");

+ Preconditions.checkState(

+ !equalityFieldIds.isEmpty(),

+ "Equality field columns shouldn't be empty when configuring to use UPSERT data stream.");

+ if (!table.spec().isUnpartitioned()) {

+ for (PartitionField partitionField : table.spec().fields()) {

+ Preconditions.checkState(equalityFieldIds.contains(partitionField.sourceId()),

+ "In UPSERT mode, partition field '%s' should be included in equality fields: '%s'",

+ partitionField, equalityFieldColumns);

+ }

+ }

+ }

+

+ this.upsertMode = flinkWriteConf.upsertMode();

+ this.overwrite = flinkWriteConf.overwriteMode();

+ this.distributionMode = flinkWriteConf.distributionMode();

+ this.workerPoolSize = flinkWriteConf.workerPoolSize();

+ this.targetDataFileSize = flinkWriteConf.targetDataFileSize();

+ this.dataFileFormat = flinkWriteConf.dataFileFormat();

+ }

+

+ @Override

+ public DataStream<RowData> addPreWriteTopology(DataStream<RowData> inputDataStream) {

+ return distributeDataStream(inputDataStream, equalityFieldIds, table.spec(),

+ table.schema(), flinkRowType, distributionMode, equalityFieldColumns);

+ }

+

+ @Override

+ public IcebergStreamWriter createWriter(InitContext context) {

+ return restoreWriter(context, null);

+ }

+

+ @Override

+ public IcebergStreamWriter restoreWriter(InitContext context,

+ Collection<IcebergStreamWriterState> recoveredState) {

+ StreamingRuntimeContext runtimeContext = runtimeContextHidden(context);

+ int attemptNumber = runtimeContext.getAttemptNumber();

+ String jobId = runtimeContext.getJobId().toString();

+ IcebergStreamWriter streamWriter = createStreamWriter(table, targetDataFileSize, dataFileFormat, upsertMode,

+ flinkRowType, equalityFieldIds, jobId, context.getSubtaskId(), attemptNumber);

+ if (recoveredState == null) {

+ return streamWriter;

+ }

+

+ return streamWriter.restoreWriter(recoveredState);

+ }

+

+ @Override

+ public SimpleVersionedSerializer<IcebergStreamWriterState> getWriterStateSerializer() {

+ return new IcebergStreamWriterStateSerializer<>();

+ }

+

+ static IcebergStreamWriter createStreamWriter(Table table,

+ long targetDataFileSize,

+ FileFormat dataFileFormat,

+ boolean upsertMode,

+ RowType flinkRowType,

+ List<Integer> equalityFieldIds,

+ String jobId,

+ int subTaskId,

+ long attemptId) {

+ RowDataTaskWriterFactory taskWriterFactory = new RowDataTaskWriterFactory(SerializableTable.copyOf(table),

+ flinkRowType, targetDataFileSize, dataFileFormat, equalityFieldIds, upsertMode);

+

+ return new IcebergStreamWriter(table.name(), taskWriterFactory, jobId, subTaskId, attemptId);

+ }

+

+ @Override

+ public DataStream<CommittableMessage<IcebergFlinkCommittable>> addPreCommitTopology(

+ DataStream<CommittableMessage<IcebergFlinkCommittable>> writeResults) {

+ return writeResults.map(new RichMapFunction<CommittableMessage<IcebergFlinkCommittable>,

+ CommittableMessage<IcebergFlinkCommittable>>() {

+ @Override

+ public CommittableMessage<IcebergFlinkCommittable> map(CommittableMessage<IcebergFlinkCommittable> message) {

+ if (message instanceof CommittableWithLineage) {

+ CommittableWithLineage<IcebergFlinkCommittable> committableWithLineage =

+ (CommittableWithLineage<IcebergFlinkCommittable>) message;

+ IcebergFlinkCommittable committable = committableWithLineage.getCommittable();

+ committable.checkpointId(committableWithLineage.getCheckpointId().orElse(0));

+ committable.subtaskId(committableWithLineage.getSubtaskId());

+ committable.jobID(getRuntimeContext().getJobId().toString());

+ }

+ return message;

+ }

+ }).uid(uidPrefix + "pre-commit-topology").global();

+ }

+

+ @Override

+ public Committer<IcebergFlinkCommittable> createCommitter() {

+ return new IcebergFilesCommitter(tableLoader, overwrite, snapshotProperties, workerPoolSize);

+ }

+

+ @Override

+ public SimpleVersionedSerializer<IcebergFlinkCommittable> getCommittableSerializer() {

+ return new IcebergFlinkCommittableSerializer();

+ }

+

+ @Override

+ public void addPostCommitTopology(DataStream<CommittableMessage<IcebergFlinkCommittable>> committables) {

+ // TODO Support small file compaction

+ }

+

+ private StreamingRuntimeContext runtimeContextHidden(InitContext context) {

+ DynFields.BoundField<StreamingRuntimeContext> runtimeContextBoundField =

+ DynFields.builder().hiddenImpl(context.getClass(), "runtimeContext").build(context);

+ return runtimeContextBoundField.get();

+ }

+

+ /**

+ * Initialize a {@link Builder} to export the data from generic input data stream into iceberg table. We use

+ * {@link RowData} inside the sink connector, so users need to provide a mapper function and a

+ * {@link TypeInformation} to convert those generic records to a RowData DataStream.

+ *

+ * @param input the generic source input data stream.

+ * @param mapper function to convert the generic data to {@link RowData}

+ * @param outputType to define the {@link TypeInformation} for the input data.

+ * @param <T> the data type of records.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public static <T> Builder builderFor(DataStream<T> input,

+ MapFunction<T, RowData> mapper,

+ TypeInformation<RowData> outputType) {

+ return new Builder().forMapperOutputType(input, mapper, outputType);

+ }

+

+ /**

+ * Initialize a {@link Builder} to export the data from input data stream with {@link Row}s into iceberg table. We use

+ * {@link RowData} inside the sink connector, so users need to provide a {@link TableSchema} for builder to convert

+ * those {@link Row}s to a {@link RowData} DataStream.

+ *

+ * @param input the source input data stream with {@link Row}s.

+ * @param tableSchema defines the {@link TypeInformation} for input data.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public static Builder forRow(DataStream<Row> input, TableSchema tableSchema) {

+ RowType rowType = (RowType) tableSchema.toRowDataType().getLogicalType();

+ DataType[] fieldDataTypes = tableSchema.getFieldDataTypes();

+

+ DataFormatConverters.RowConverter rowConverter = new DataFormatConverters.RowConverter(fieldDataTypes);

+ return builderFor(input, rowConverter::toInternal, FlinkCompatibilityUtil.toTypeInfo(rowType))

+ .tableSchema(tableSchema);

+ }

+

+ /**

+ * Initialize a {@link Builder} to export the data from input data stream with {@link RowData}s into iceberg table.

+ *

+ * @param input the source input data stream with {@link RowData}s.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public static Builder forRowData(DataStream<RowData> input) {

+ return new Builder().forRowData(input);

+ }

+

+ /**

+ * Initialize a {@link Builder} to export the data from input data stream with {@link RowData}s into iceberg table.

+ *

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public static Builder builder() {

+ return new Builder();

+ }

+

+ public static class Builder {

+ private Function<String, DataStream<RowData>> inputCreator = null;

+ private TableLoader tableLoader;

+ private Table table;

+ private TableSchema tableSchema;

+ private Integer writeParallelism = null;

+ private List<String> equalityFieldColumns = null;

+ private String uidPrefix = "iceberg-flink-job";

+ private final Map<String, String> snapshotProperties = Maps.newHashMap();

+ private ReadableConfig readableConfig = new Configuration();

+ private final Map<String, String> writeOptions = Maps.newHashMap();

+

+ private Builder() {

+ }

+

+ private Builder forRowData(DataStream<RowData> newRowDataInput) {

+ this.inputCreator = ignored -> newRowDataInput;

+ return this;

+ }

+

+ private <T> Builder forMapperOutputType(DataStream<T> input,

+ MapFunction<T, RowData> mapper,

+ TypeInformation<RowData> outputType) {

+ this.inputCreator = newUidPrefix -> {

+ // Input stream order is crucial for some situation(e.g. in cdc case).

+ // Therefore, we need to set the parallelismof map operator same as its input to keep map operator

+ // chaining its input, and avoid rebalanced by default.

+ SingleOutputStreamOperator<RowData> inputStream = input.map(mapper, outputType)

+ .setParallelism(input.getParallelism());

+ if (newUidPrefix != null) {

+ inputStream.name(Builder.this.operatorName(newUidPrefix)).uid(newUidPrefix + "-mapper");

+ }

+ return inputStream;

+ };

+ return this;

+ }

+

+ /**

+ * This iceberg {@link Table} instance is used for initializing {@link IcebergStreamWriter} which will write all

+ * the records into {@link DataFile}s and emit them to downstream operator. Providing a table would avoid so many

+ * table loading from each separate task.

+ *

+ * @param newTable the loaded iceberg table instance.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public Builder table(Table newTable) {

+ this.table = newTable;

+ return this;

+ }

+

+ /**

+ * The table loader is used for loading tables in {@link IcebergFilesCommitter} lazily, we need this loader because

+ * {@link Table} is not serializable and could not just use the loaded table from Builder#table in the remote task

+ * manager.

+ *

+ * @param newTableLoader to load iceberg table inside tasks.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public Builder tableLoader(TableLoader newTableLoader) {

+ this.tableLoader = newTableLoader;

+ return this;

+ }

+

+ /**

+ * Set the write properties for Flink sink.

+ * View the supported properties in {@link FlinkWriteOptions}

+ */

+ public Builder set(String property, String value) {

+ writeOptions.put(property, value);

+ return this;

+ }

+

+ /**

+ * Set the write properties for Flink sink.

+ * View the supported properties in {@link FlinkWriteOptions}

+ */

+ public Builder setAll(Map<String, String> properties) {

+ writeOptions.putAll(properties);

+ return this;

+ }

+

+ public Builder tableSchema(TableSchema newTableSchema) {

+ this.tableSchema = newTableSchema;

+ return this;

+ }

+

+ public Builder overwrite(boolean newOverwrite) {

+ writeOptions.put(FlinkWriteOptions.OVERWRITE_MODE.key(), Boolean.toString(newOverwrite));

+ return this;

+ }

+

+ public Builder flinkConf(ReadableConfig config) {

+ this.readableConfig = config;

+ return this;

+ }

+

+ /**

+ * Configure the write {@link DistributionMode} that the flink sink will use. Currently, flink support

+ * {@link DistributionMode#NONE} and {@link DistributionMode#HASH}.

+ *

+ * @param mode to specify the write distribution mode.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public Builder distributionMode(DistributionMode mode) {

+ Preconditions.checkArgument(

+ !DistributionMode.RANGE.equals(mode),

+ "Flink does not support 'range' write distribution mode now.");

+ if (mode != null) {

+ writeOptions.put(FlinkWriteOptions.DISTRIBUTION_MODE.key(), mode.modeName());

+ }

+ return this;

+ }

+

+ /**

+ * Configuring the write parallel number for iceberg stream writer.

+ *

+ * @param newWriteParallelism the number of parallel iceberg stream writer.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public Builder writeParallelism(int newWriteParallelism) {

+ this.writeParallelism = newWriteParallelism;

+ return this;

+ }

+

+ /**

+ * All INSERT/UPDATE_AFTER events from input stream will be transformed to UPSERT events, which means it will

+ * DELETE the old records and then INSERT the new records. In partitioned table, the partition fields should be

+ * a subset of equality fields, otherwise the old row that located in partition-A could not be deleted by the

+ * new row that located in partition-B.

+ *

+ * @param enabled indicate whether it should transform all INSERT/UPDATE_AFTER events to UPSERT.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public Builder upsert(boolean enabled) {

+ writeOptions.put(FlinkWriteOptions.WRITE_UPSERT_ENABLED.key(), Boolean.toString(enabled));

+ return this;

+ }

+

+ /**

+ * Configuring the equality field columns for iceberg table that accept CDC or UPSERT events.

+ *

+ * @param columns defines the iceberg table's key.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public Builder equalityFieldColumns(List<String> columns) {

+ this.equalityFieldColumns = columns;

+ return this;

+ }

+

+ /**

+ * Set the uid prefix for FlinkSink operators. Note that FlinkSink internally consists of multiple operators (like

+ * writer, committer, dummy sink etc.) Actually operator uid will be appended with a suffix like "uidPrefix-writer".

Review Comment:

I think we can add some topology diagrams on top?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For additional commands, e-mail: issues-help@iceberg.apache.org

[GitHub] [iceberg] hililiwei commented on a diff in pull request #4904: Flink: new sink base on the unified sink API

Posted by GitBox <gi...@apache.org>.

hililiwei commented on code in PR #4904:

URL: https://github.com/apache/iceberg/pull/4904#discussion_r925812879

##########

flink/v1.15/flink/src/main/java/org/apache/iceberg/flink/sink/v2/IcebergFilesCommitter.java:

##########

@@ -0,0 +1,261 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+

+package org.apache.iceberg.flink.sink.v2;

+

+import java.io.IOException;

+import java.io.Serializable;

+import java.util.Arrays;

+import java.util.Collection;

+import java.util.Map;

+import java.util.concurrent.ExecutorService;

+import org.apache.flink.api.connector.sink2.Committer;

+import org.apache.iceberg.AppendFiles;

+import org.apache.iceberg.ReplacePartitions;

+import org.apache.iceberg.RowDelta;

+import org.apache.iceberg.SnapshotUpdate;

+import org.apache.iceberg.Table;

+import org.apache.iceberg.flink.TableLoader;

+import org.apache.iceberg.io.WriteResult;

+import org.apache.iceberg.relocated.com.google.common.base.Preconditions;

+import org.apache.iceberg.relocated.com.google.common.collect.Lists;

+import org.apache.iceberg.util.PropertyUtil;

+import org.apache.iceberg.util.ThreadPools;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+public class IcebergFilesCommitter implements Committer<IcebergFlinkCommittable>, Serializable {

+

+ private static final long serialVersionUID = 1L;

+ private static final long INITIAL_CHECKPOINT_ID = -1L;

+

+ private static final Logger LOG = LoggerFactory.getLogger(IcebergFilesCommitter.class);

+ private static final String FLINK_JOB_ID = "flink.job-id";

+

+ // The max checkpoint id we've committed to iceberg table. As the flink's checkpoint is always increasing, so we could

+ // correctly commit all the data files whose checkpoint id is greater than the max committed one to iceberg table, for

+ // avoiding committing the same data files twice. This id will be attached to iceberg's meta when committing the

+ // iceberg transaction.

+ private static final String MAX_COMMITTED_CHECKPOINT_ID = "flink.max-committed-checkpoint-id";

+ static final String MAX_CONTINUOUS_EMPTY_COMMITS = "flink.max-continuous-empty-commits";

+ static final int MAX_CONTINUOUS_EMPTY_COMMITS_DEFAULT = 10;

+

+ // TableLoader to load iceberg table lazily.

+ private final TableLoader tableLoader;

+ private final boolean replacePartitions;

+ private final Map<String, String> snapshotProperties;

+

+ // It will have an unique identifier for one job.

+ private final Table table;

+ private transient long maxCommittedCheckpointId;

+ private transient int continuousEmptyCheckpoints;

+ private transient int maxContinuousEmptyCommits;

+

+ private transient ExecutorService workerPool;

+

+ public IcebergFilesCommitter(TableLoader tableLoader,

+ boolean replacePartitions,

+ Map<String, String> snapshotProperties,

+ Integer workerPoolSize) {

+

+ this.tableLoader = tableLoader;

+ this.replacePartitions = replacePartitions;

+ this.snapshotProperties = snapshotProperties;

+

+ this.workerPool =

+ ThreadPools.newWorkerPool("iceberg-worker-pool-" + Thread.currentThread().getName(), workerPoolSize);

+

+ // Open the table loader and load the table.

+ this.tableLoader.open();

+ this.table = tableLoader.loadTable();

+

+ maxContinuousEmptyCommits = PropertyUtil.propertyAsInt(table.properties(),

+ MAX_CONTINUOUS_EMPTY_COMMITS,

+ MAX_CONTINUOUS_EMPTY_COMMITS_DEFAULT);

+ Preconditions.checkArgument(

+ maxContinuousEmptyCommits > 0,

+ MAX_CONTINUOUS_EMPTY_COMMITS + " must be positive");

+ this.maxCommittedCheckpointId = INITIAL_CHECKPOINT_ID;

+ }

+

+ @Override

+ public void commit(Collection<CommitRequest<IcebergFlinkCommittable>> requests)

+ throws IOException, InterruptedException {

+ int dataFilesNumRestored = 0;

+ int deleteFilesNumRestored = 0;

+

+ int dataFilesNumStored = 0;

+ int deleteFilesNumStored = 0;

+

+ long checkpointId = 0;

+ Collection<CommitRequest<IcebergFlinkCommittable>> restored = Lists.newArrayList();

+ Collection<CommitRequest<IcebergFlinkCommittable>> store = Lists.newArrayList();

+

+ for (CommitRequest<IcebergFlinkCommittable> request : requests) {

+ checkpointId = request.getCommittable().checkpointId();

+ WriteResult committable = request.getCommittable().committable();

+ if (request.getNumberOfRetries() > 0) {

+ if (request.getCommittable().checkpointId() > maxCommittedCheckpointId) {

+ restored.add(request);

+ dataFilesNumRestored = dataFilesNumRestored + committable.dataFiles().length;

+ deleteFilesNumRestored = deleteFilesNumRestored + committable.deleteFiles().length;

+ }

+ } else {

+ if (request.getCommittable().checkpointId() > maxCommittedCheckpointId) {

+ store.add(request);

+ dataFilesNumStored = dataFilesNumStored + committable.dataFiles().length;

+ deleteFilesNumStored = deleteFilesNumStored + committable.deleteFiles().length;

+ }

+ }

+ }

+

+ if (restored.size() > 0) {

+ commitResult(dataFilesNumRestored, deleteFilesNumRestored, restored);

+ }

+ commitResult(dataFilesNumStored, deleteFilesNumStored, store);

Review Comment:

Not necessarily. When we have no data to commit, we increase the number of empty commits by one. When it is greater than the threshold, we also make a commit.

```java

private void commitResult(int dataFilesNum, int deleteFilesNum,

Collection<CommitRequest<FilesCommittable>> committableCollection) {

int totalFiles = dataFilesNum + deleteFilesNum;

continuousEmptyCheckpoints = totalFiles == 0 ? continuousEmptyCheckpoints + 1 : 0;

if (totalFiles != 0 || continuousEmptyCheckpoints % maxContinuousEmptyCommits == 0) {

if (replacePartitions) {

replacePartitions(committableCollection, deleteFilesNum);

} else {

commitDeltaTxn(committableCollection, dataFilesNum, deleteFilesNum);

}

continuousEmptyCheckpoints = 0;

}

}

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For additional commands, e-mail: issues-help@iceberg.apache.org

[GitHub] [iceberg] pvary commented on a diff in pull request #4904: Flink: new sink base on the unified sink API

Posted by GitBox <gi...@apache.org>.

pvary commented on code in PR #4904:

URL: https://github.com/apache/iceberg/pull/4904#discussion_r925860958

##########

flink/v1.15/flink/src/main/java/org/apache/iceberg/flink/sink/v2/FlinkSink.java:

##########

@@ -0,0 +1,603 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+

+package org.apache.iceberg.flink.sink.v2;

+

+import java.io.IOException;

+import java.io.UncheckedIOException;

+import java.util.Collection;

+import java.util.List;

+import java.util.Map;

+import java.util.Set;

+import java.util.function.Function;

+import javax.annotation.Nullable;

+import org.apache.flink.api.common.functions.MapFunction;

+import org.apache.flink.api.common.functions.RichMapFunction;

+import org.apache.flink.api.common.typeinfo.TypeInformation;

+import org.apache.flink.api.connector.sink2.Committer;

+import org.apache.flink.api.connector.sink2.StatefulSink;

+import org.apache.flink.configuration.Configuration;

+import org.apache.flink.configuration.ReadableConfig;

+import org.apache.flink.core.io.SimpleVersionedSerializer;

+import org.apache.flink.streaming.api.connector.sink2.CommittableMessage;

+import org.apache.flink.streaming.api.connector.sink2.CommittableWithLineage;

+import org.apache.flink.streaming.api.connector.sink2.WithPostCommitTopology;

+import org.apache.flink.streaming.api.connector.sink2.WithPreCommitTopology;

+import org.apache.flink.streaming.api.connector.sink2.WithPreWriteTopology;

+import org.apache.flink.streaming.api.datastream.DataStream;

+import org.apache.flink.streaming.api.datastream.DataStreamSink;

+import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

+import org.apache.flink.streaming.api.operators.StreamingRuntimeContext;

+import org.apache.flink.table.api.TableSchema;

+import org.apache.flink.table.data.RowData;

+import org.apache.flink.table.data.util.DataFormatConverters;

+import org.apache.flink.table.types.DataType;

+import org.apache.flink.table.types.logical.RowType;

+import org.apache.flink.types.Row;

+import org.apache.iceberg.DataFile;

+import org.apache.iceberg.DistributionMode;

+import org.apache.iceberg.FileFormat;

+import org.apache.iceberg.PartitionField;

+import org.apache.iceberg.PartitionSpec;

+import org.apache.iceberg.Schema;

+import org.apache.iceberg.SerializableTable;

+import org.apache.iceberg.Table;

+import org.apache.iceberg.common.DynFields;

+import org.apache.iceberg.flink.FlinkSchemaUtil;

+import org.apache.iceberg.flink.FlinkWriteConf;

+import org.apache.iceberg.flink.FlinkWriteOptions;

+import org.apache.iceberg.flink.TableLoader;

+import org.apache.iceberg.flink.sink.EqualityFieldKeySelector;

+import org.apache.iceberg.flink.sink.PartitionKeySelector;

+import org.apache.iceberg.flink.sink.RowDataTaskWriterFactory;

+import org.apache.iceberg.flink.util.FlinkCompatibilityUtil;

+import org.apache.iceberg.relocated.com.google.common.base.Preconditions;

+import org.apache.iceberg.relocated.com.google.common.collect.Lists;

+import org.apache.iceberg.relocated.com.google.common.collect.Maps;

+import org.apache.iceberg.relocated.com.google.common.collect.Sets;

+import org.apache.iceberg.types.TypeUtil;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import static org.apache.iceberg.TableProperties.WRITE_DISTRIBUTION_MODE;

+

+public class FlinkSink implements StatefulSink<RowData, IcebergStreamWriterState>,

+ WithPreWriteTopology<RowData>,

+ WithPreCommitTopology<RowData, IcebergFlinkCommittable>,

+ WithPostCommitTopology<RowData, IcebergFlinkCommittable> {

+ private static final Logger LOG = LoggerFactory.getLogger(FlinkSink.class);

+

+ private final TableLoader tableLoader;

+ private final Table table;

+ private final boolean overwrite;

+ private final boolean upsertMode;

+ private final DistributionMode distributionMode;

+ private final List<String> equalityFieldColumns;

+ private final List<Integer> equalityFieldIds;

+ private final FileFormat dataFileFormat;

+ private final int workerPoolSize;

+ private final long targetDataFileSize;

+ private final String uidPrefix;

+ private final Map<String, String> snapshotProperties;

+ private final RowType flinkRowType;

+

+ public FlinkSink(TableLoader tableLoader,

+ @Nullable Table newTable,

+ TableSchema tableSchema,

+ List<String> equalityFieldColumns,

+ @Nullable String uidPrefix,

+ ReadableConfig readableConfig,

+ Map<String, String> writeOptions,

+ Map<String, String> snapshotProperties) {

+ this.tableLoader = tableLoader;

+ this.equalityFieldColumns = equalityFieldColumns;

+ this.uidPrefix = uidPrefix == null ? "" : uidPrefix;

+ this.snapshotProperties = snapshotProperties;

+

+ Preconditions.checkNotNull(tableLoader, "Table loader shouldn't be null");

+

+ if (newTable == null) {

+ tableLoader.open();

+ try (TableLoader loader = tableLoader) {

+ this.table = loader.loadTable();

+ } catch (IOException e) {

+ throw new UncheckedIOException("Failed to load iceberg table from table loader: " + tableLoader, e);

+ }

+ } else {

+ this.table = newTable;

+ }

+ FlinkWriteConf flinkWriteConf = new FlinkWriteConf(table, writeOptions, readableConfig);

+

+ this.equalityFieldIds = checkAndGetEqualityFieldIds(table, equalityFieldColumns);

+

+ this.flinkRowType = toFlinkRowType(table.schema(), tableSchema);

+

+ // Validate the equality fields and partition fields if we enable the upsert mode.

+ if (flinkWriteConf.upsertMode()) {

+ Preconditions.checkState(

+ !flinkWriteConf.overwriteMode(),

+ "OVERWRITE mode shouldn't be enable when configuring to use UPSERT data stream.");

+ Preconditions.checkState(

+ !equalityFieldIds.isEmpty(),

+ "Equality field columns shouldn't be empty when configuring to use UPSERT data stream.");

+ if (!table.spec().isUnpartitioned()) {

+ for (PartitionField partitionField : table.spec().fields()) {

+ Preconditions.checkState(equalityFieldIds.contains(partitionField.sourceId()),

+ "In UPSERT mode, partition field '%s' should be included in equality fields: '%s'",

+ partitionField, equalityFieldColumns);

+ }

+ }

+ }

+

+ this.upsertMode = flinkWriteConf.upsertMode();

+ this.overwrite = flinkWriteConf.overwriteMode();

+ this.distributionMode = flinkWriteConf.distributionMode();

+ this.workerPoolSize = flinkWriteConf.workerPoolSize();

+ this.targetDataFileSize = flinkWriteConf.targetDataFileSize();

+ this.dataFileFormat = flinkWriteConf.dataFileFormat();

+ }

+

+ @Override

+ public DataStream<RowData> addPreWriteTopology(DataStream<RowData> inputDataStream) {

+ return distributeDataStream(inputDataStream, equalityFieldIds, table.spec(),

+ table.schema(), flinkRowType, distributionMode, equalityFieldColumns);

+ }

+

+ @Override

+ public IcebergStreamWriter createWriter(InitContext context) {

+ return restoreWriter(context, null);

+ }

+

+ @Override

+ public IcebergStreamWriter restoreWriter(InitContext context,

+ Collection<IcebergStreamWriterState> recoveredState) {

+ StreamingRuntimeContext runtimeContext = runtimeContextHidden(context);

+ int attemptNumber = runtimeContext.getAttemptNumber();

+ String jobId = runtimeContext.getJobId().toString();

+ IcebergStreamWriter streamWriter = createStreamWriter(table, targetDataFileSize, dataFileFormat, upsertMode,

+ flinkRowType, equalityFieldIds, jobId, context.getSubtaskId(), attemptNumber);

+ if (recoveredState == null) {

+ return streamWriter;

+ }

+

+ return streamWriter.restoreWriter(recoveredState);

+ }

+

+ @Override

+ public SimpleVersionedSerializer<IcebergStreamWriterState> getWriterStateSerializer() {

+ return new IcebergStreamWriterStateSerializer<>();

+ }

+

+ static IcebergStreamWriter createStreamWriter(Table table,

+ long targetDataFileSize,

+ FileFormat dataFileFormat,

+ boolean upsertMode,

+ RowType flinkRowType,

+ List<Integer> equalityFieldIds,

+ String jobId,

+ int subTaskId,

+ long attemptId) {

+ RowDataTaskWriterFactory taskWriterFactory = new RowDataTaskWriterFactory(SerializableTable.copyOf(table),

+ flinkRowType, targetDataFileSize, dataFileFormat, equalityFieldIds, upsertMode);

+

+ return new IcebergStreamWriter(table.name(), taskWriterFactory, jobId, subTaskId, attemptId);

+ }

+

+ @Override

+ public DataStream<CommittableMessage<IcebergFlinkCommittable>> addPreCommitTopology(

+ DataStream<CommittableMessage<IcebergFlinkCommittable>> writeResults) {

+ return writeResults.map(new RichMapFunction<CommittableMessage<IcebergFlinkCommittable>,

+ CommittableMessage<IcebergFlinkCommittable>>() {

+ @Override

+ public CommittableMessage<IcebergFlinkCommittable> map(CommittableMessage<IcebergFlinkCommittable> message) {

+ if (message instanceof CommittableWithLineage) {

+ CommittableWithLineage<IcebergFlinkCommittable> committableWithLineage =

+ (CommittableWithLineage<IcebergFlinkCommittable>) message;

+ IcebergFlinkCommittable committable = committableWithLineage.getCommittable();

+ committable.checkpointId(committableWithLineage.getCheckpointId().orElse(0));

+ committable.subtaskId(committableWithLineage.getSubtaskId());

+ committable.jobID(getRuntimeContext().getJobId().toString());

+ }

+ return message;

+ }

+ }).uid(uidPrefix + "pre-commit-topology").global();

+ }

+

+ @Override

+ public Committer<IcebergFlinkCommittable> createCommitter() {

+ return new IcebergFilesCommitter(tableLoader, overwrite, snapshotProperties, workerPoolSize);

+ }

+

+ @Override

+ public SimpleVersionedSerializer<IcebergFlinkCommittable> getCommittableSerializer() {

+ return new IcebergFlinkCommittableSerializer();

+ }

+

+ @Override

+ public void addPostCommitTopology(DataStream<CommittableMessage<IcebergFlinkCommittable>> committables) {

+ // TODO Support small file compaction

+ }

+

+ private StreamingRuntimeContext runtimeContextHidden(InitContext context) {

+ DynFields.BoundField<StreamingRuntimeContext> runtimeContextBoundField =

+ DynFields.builder().hiddenImpl(context.getClass(), "runtimeContext").build(context);

+ return runtimeContextBoundField.get();

+ }

+

+ /**

+ * Initialize a {@link Builder} to export the data from generic input data stream into iceberg table. We use

+ * {@link RowData} inside the sink connector, so users need to provide a mapper function and a

+ * {@link TypeInformation} to convert those generic records to a RowData DataStream.

+ *

+ * @param input the generic source input data stream.

+ * @param mapper function to convert the generic data to {@link RowData}

+ * @param outputType to define the {@link TypeInformation} for the input data.

+ * @param <T> the data type of records.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public static <T> Builder builderFor(DataStream<T> input,

+ MapFunction<T, RowData> mapper,

+ TypeInformation<RowData> outputType) {

+ return new Builder().forMapperOutputType(input, mapper, outputType);

+ }

+

+ /**

+ * Initialize a {@link Builder} to export the data from input data stream with {@link Row}s into iceberg table. We use

+ * {@link RowData} inside the sink connector, so users need to provide a {@link TableSchema} for builder to convert

+ * those {@link Row}s to a {@link RowData} DataStream.

+ *

+ * @param input the source input data stream with {@link Row}s.

+ * @param tableSchema defines the {@link TypeInformation} for input data.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public static Builder forRow(DataStream<Row> input, TableSchema tableSchema) {

+ RowType rowType = (RowType) tableSchema.toRowDataType().getLogicalType();

+ DataType[] fieldDataTypes = tableSchema.getFieldDataTypes();

+

+ DataFormatConverters.RowConverter rowConverter = new DataFormatConverters.RowConverter(fieldDataTypes);

+ return builderFor(input, rowConverter::toInternal, FlinkCompatibilityUtil.toTypeInfo(rowType))

+ .tableSchema(tableSchema);

+ }

+

+ /**

+ * Initialize a {@link Builder} to export the data from input data stream with {@link RowData}s into iceberg table.

+ *

+ * @param input the source input data stream with {@link RowData}s.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public static Builder forRowData(DataStream<RowData> input) {

+ return new Builder().forRowData(input);

+ }

+

+ /**

+ * Initialize a {@link Builder} to export the data from input data stream with {@link RowData}s into iceberg table.

+ *

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public static Builder builder() {

+ return new Builder();

+ }

+

+ public static class Builder {

+ private Function<String, DataStream<RowData>> inputCreator = null;

+ private TableLoader tableLoader;

+ private Table table;

+ private TableSchema tableSchema;

+ private Integer writeParallelism = null;

+ private List<String> equalityFieldColumns = null;

+ private String uidPrefix = "iceberg-flink-job";

+ private final Map<String, String> snapshotProperties = Maps.newHashMap();

+ private ReadableConfig readableConfig = new Configuration();

+ private final Map<String, String> writeOptions = Maps.newHashMap();

+

+ private Builder() {

+ }

+

+ private Builder forRowData(DataStream<RowData> newRowDataInput) {

+ this.inputCreator = ignored -> newRowDataInput;

+ return this;

+ }

+

+ private <T> Builder forMapperOutputType(DataStream<T> input,

+ MapFunction<T, RowData> mapper,

+ TypeInformation<RowData> outputType) {

+ this.inputCreator = newUidPrefix -> {

+ // Input stream order is crucial for some situation(e.g. in cdc case).

+ // Therefore, we need to set the parallelismof map operator same as its input to keep map operator

+ // chaining its input, and avoid rebalanced by default.

+ SingleOutputStreamOperator<RowData> inputStream = input.map(mapper, outputType)

+ .setParallelism(input.getParallelism());

+ if (newUidPrefix != null) {

+ inputStream.name(Builder.this.operatorName(newUidPrefix)).uid(newUidPrefix + "-mapper");

+ }

+ return inputStream;

+ };

+ return this;

+ }

+

+ /**

+ * This iceberg {@link Table} instance is used for initializing {@link IcebergStreamWriter} which will write all

+ * the records into {@link DataFile}s and emit them to downstream operator. Providing a table would avoid so many

+ * table loading from each separate task.

+ *

+ * @param newTable the loaded iceberg table instance.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public Builder table(Table newTable) {

+ this.table = newTable;

+ return this;

+ }

+

+ /**

+ * The table loader is used for loading tables in {@link IcebergFilesCommitter} lazily, we need this loader because

+ * {@link Table} is not serializable and could not just use the loaded table from Builder#table in the remote task

+ * manager.

+ *

+ * @param newTableLoader to load iceberg table inside tasks.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public Builder tableLoader(TableLoader newTableLoader) {

+ this.tableLoader = newTableLoader;

+ return this;

+ }

+

+ /**

+ * Set the write properties for Flink sink.

+ * View the supported properties in {@link FlinkWriteOptions}

+ */

+ public Builder set(String property, String value) {

+ writeOptions.put(property, value);

+ return this;

+ }

+

+ /**

+ * Set the write properties for Flink sink.

+ * View the supported properties in {@link FlinkWriteOptions}

+ */

+ public Builder setAll(Map<String, String> properties) {

+ writeOptions.putAll(properties);

+ return this;

+ }

+

+ public Builder tableSchema(TableSchema newTableSchema) {

+ this.tableSchema = newTableSchema;

+ return this;

+ }

+

+ public Builder overwrite(boolean newOverwrite) {

+ writeOptions.put(FlinkWriteOptions.OVERWRITE_MODE.key(), Boolean.toString(newOverwrite));

+ return this;

+ }

+

+ public Builder flinkConf(ReadableConfig config) {

+ this.readableConfig = config;

+ return this;

+ }

+

+ /**

+ * Configure the write {@link DistributionMode} that the flink sink will use. Currently, flink support

+ * {@link DistributionMode#NONE} and {@link DistributionMode#HASH}.

+ *

+ * @param mode to specify the write distribution mode.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public Builder distributionMode(DistributionMode mode) {

+ Preconditions.checkArgument(

+ !DistributionMode.RANGE.equals(mode),

+ "Flink does not support 'range' write distribution mode now.");

+ if (mode != null) {

+ writeOptions.put(FlinkWriteOptions.DISTRIBUTION_MODE.key(), mode.modeName());

+ }

+ return this;

+ }

+

+ /**

+ * Configuring the write parallel number for iceberg stream writer.

+ *

+ * @param newWriteParallelism the number of parallel iceberg stream writer.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public Builder writeParallelism(int newWriteParallelism) {

+ this.writeParallelism = newWriteParallelism;

+ return this;

+ }

+

+ /**

+ * All INSERT/UPDATE_AFTER events from input stream will be transformed to UPSERT events, which means it will

+ * DELETE the old records and then INSERT the new records. In partitioned table, the partition fields should be

+ * a subset of equality fields, otherwise the old row that located in partition-A could not be deleted by the

+ * new row that located in partition-B.

+ *

+ * @param enabled indicate whether it should transform all INSERT/UPDATE_AFTER events to UPSERT.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public Builder upsert(boolean enabled) {

+ writeOptions.put(FlinkWriteOptions.WRITE_UPSERT_ENABLED.key(), Boolean.toString(enabled));

+ return this;

+ }

+

+ /**

+ * Configuring the equality field columns for iceberg table that accept CDC or UPSERT events.

+ *

+ * @param columns defines the iceberg table's key.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public Builder equalityFieldColumns(List<String> columns) {

+ this.equalityFieldColumns = columns;

+ return this;

+ }

+

+ /**

+ * Set the uid prefix for FlinkSink operators. Note that FlinkSink internally consists of multiple operators (like

+ * writer, committer, dummy sink etc.) Actually operator uid will be appended with a suffix like "uidPrefix-writer".

Review Comment:

That would be nice! 😄

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For additional commands, e-mail: issues-help@iceberg.apache.org

[GitHub] [iceberg] pvary commented on a diff in pull request #4904: Flink: new sink base on the unified sink API

Posted by GitBox <gi...@apache.org>.

pvary commented on code in PR #4904:

URL: https://github.com/apache/iceberg/pull/4904#discussion_r925864835

##########

flink/v1.15/flink/src/test/java/org/apache/iceberg/flink/sink/v2/TestStreamWriter.java:

##########

@@ -0,0 +1,338 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+

+package org.apache.iceberg.flink.sink.v2;

+

+import java.io.File;

+import java.io.IOException;

+import java.util.Arrays;

+import java.util.Collection;

+import java.util.List;

+import java.util.Locale;

+import java.util.Map;

+import org.apache.flink.api.connector.sink2.SinkWriter;

+import org.apache.flink.configuration.Configuration;

+import org.apache.flink.metrics.testutils.MetricListener;

+import org.apache.flink.table.api.DataTypes;

+import org.apache.flink.table.api.TableSchema;

+import org.apache.flink.table.data.GenericRowData;

+import org.apache.flink.table.data.RowData;

+import org.apache.flink.table.types.logical.RowType;

+import org.apache.iceberg.AppendFiles;

+import org.apache.iceberg.DataFile;

+import org.apache.iceberg.FileFormat;

+import org.apache.iceberg.PartitionSpec;

+import org.apache.iceberg.Schema;

+import org.apache.iceberg.SerializableTable;

+import org.apache.iceberg.Table;

+import org.apache.iceberg.TableProperties;

+import org.apache.iceberg.data.GenericRecord;

+import org.apache.iceberg.data.Record;

+import org.apache.iceberg.flink.FlinkWriteConf;

+import org.apache.iceberg.flink.FlinkWriteOptions;

+import org.apache.iceberg.flink.SimpleDataUtil;

+import org.apache.iceberg.flink.sink.RowDataTaskWriterFactory;

+import org.apache.iceberg.hadoop.HadoopTables;

+import org.apache.iceberg.io.WriteResult;

+import org.apache.iceberg.relocated.com.google.common.collect.ImmutableMap;

+import org.apache.iceberg.relocated.com.google.common.collect.Lists;

+import org.apache.iceberg.relocated.com.google.common.collect.Maps;

+import org.apache.iceberg.types.Types;

+import org.junit.Assert;

+import org.junit.Before;

+import org.junit.Rule;

+import org.junit.Test;

+import org.junit.rules.TemporaryFolder;

+import org.junit.runner.RunWith;

+import org.junit.runners.Parameterized;

+

+@RunWith(Parameterized.class)

+public class TestStreamWriter {

+ @Rule

+ public TemporaryFolder tempFolder = new TemporaryFolder();

+ private MetricListener metricListener;

+ private Table table;

+

+ private final FileFormat format;

+ private final boolean partitioned;

+

+ @Parameterized.Parameters(name = "format = {0}, partitioned = {1}")

+ public static Object[][] parameters() {

+ return new Object[][] {

+ {"avro", true},

+ {"avro", false},

+ {"orc", true},

+ {"orc", false},

+ {"parquet", true},

+ {"parquet", false}

+ };

+ }

+

+ public TestStreamWriter(String format, boolean partitioned) {

+ this.format = FileFormat.valueOf(format.toUpperCase(Locale.ENGLISH));

+ this.partitioned = partitioned;

+ }

+

+ @Before

+ public void before() throws IOException {

+ metricListener = new MetricListener();

+ File folder = tempFolder.newFolder();

+ // Construct the iceberg table.

+ Map<String, String> props = ImmutableMap.of(TableProperties.DEFAULT_FILE_FORMAT, format.name());

+ table = SimpleDataUtil.createTable(folder.getAbsolutePath(), props, partitioned);

+ }

+

+ @Test

+ public void testPreCommit() throws Exception {

+ StreamWriter streamWriter = createIcebergStreamWriter();

+ streamWriter.write(SimpleDataUtil.createRowData(1, "hello"), new ContextImpl());

+ streamWriter.write(SimpleDataUtil.createRowData(2, "hello"), new ContextImpl());

+ streamWriter.write(SimpleDataUtil.createRowData(3, "hello"), new ContextImpl());

+ streamWriter.write(SimpleDataUtil.createRowData(4, "hello"), new ContextImpl());

+ streamWriter.write(SimpleDataUtil.createRowData(5, "hello"), new ContextImpl());

+

+ Collection<FilesCommittable> filesCommittables = streamWriter.prepareCommit();

+ Assert.assertEquals(filesCommittables.size(), 1);

+ }

+

+ @Test

+ public void testWritingTable() throws Exception {

+ StreamWriter streamWriter = createIcebergStreamWriter();

+ // The first checkpoint

+ streamWriter.write(SimpleDataUtil.createRowData(1, "hello"), new ContextImpl());

+ streamWriter.write(SimpleDataUtil.createRowData(2, "world"), new ContextImpl());

+ streamWriter.write(SimpleDataUtil.createRowData(3, "hello"), new ContextImpl());

+

+ Collection<FilesCommittable> filesCommittables = streamWriter.prepareCommit();

+ Assert.assertEquals(filesCommittables.size(), 1);

+

+ AppendFiles appendFiles = table.newAppend();

+

+ WriteResult result = null;

+ for (FilesCommittable filesCommittable : filesCommittables) {

+ result = filesCommittable.committable();

+ }

+ long expectedDataFiles = partitioned ? 2 : 1;

Review Comment:

nit: newline?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: issues-unsubscribe@iceberg.apache.org

For additional commands, e-mail: issues-help@iceberg.apache.org

[GitHub] [iceberg] hililiwei commented on a diff in pull request #4904: Flink: new sink base on the unified sink API

Posted by GitBox <gi...@apache.org>.

hililiwei commented on code in PR #4904:

URL: https://github.com/apache/iceberg/pull/4904#discussion_r910557828

##########

flink/v1.15/flink/src/main/java/org/apache/iceberg/flink/sink/v2/FlinkSink.java:

##########

@@ -0,0 +1,635 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one

+ * or more contributor license agreements. See the NOTICE file

+ * distributed with this work for additional information

+ * regarding copyright ownership. The ASF licenses this file

+ * to you under the Apache License, Version 2.0 (the

+ * "License"); you may not use this file except in compliance

+ * with the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing,

+ * software distributed under the License is distributed on an

+ * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+ * KIND, either express or implied. See the License for the

+ * specific language governing permissions and limitations

+ * under the License.

+ */

+

+package org.apache.iceberg.flink.sink.v2;

+

+import java.io.IOException;

+import java.io.UncheckedIOException;

+import java.util.Collection;

+import java.util.List;

+import java.util.Locale;

+import java.util.Map;

+import java.util.Set;

+import java.util.function.Function;

+import javax.annotation.Nullable;

+import org.apache.flink.api.common.functions.MapFunction;

+import org.apache.flink.api.common.functions.RichMapFunction;

+import org.apache.flink.api.common.typeinfo.TypeInformation;

+import org.apache.flink.api.connector.sink2.Committer;

+import org.apache.flink.api.connector.sink2.StatefulSink;

+import org.apache.flink.configuration.Configuration;

+import org.apache.flink.configuration.ReadableConfig;

+import org.apache.flink.core.io.SimpleVersionedSerializer;

+import org.apache.flink.streaming.api.connector.sink2.CommittableMessage;

+import org.apache.flink.streaming.api.connector.sink2.CommittableWithLineage;

+import org.apache.flink.streaming.api.connector.sink2.WithPostCommitTopology;

+import org.apache.flink.streaming.api.connector.sink2.WithPreCommitTopology;

+import org.apache.flink.streaming.api.connector.sink2.WithPreWriteTopology;

+import org.apache.flink.streaming.api.datastream.DataStream;

+import org.apache.flink.streaming.api.datastream.DataStreamSink;

+import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

+import org.apache.flink.streaming.api.operators.StreamingRuntimeContext;

+import org.apache.flink.table.api.TableSchema;

+import org.apache.flink.table.data.RowData;

+import org.apache.flink.table.data.util.DataFormatConverters;

+import org.apache.flink.table.types.DataType;

+import org.apache.flink.table.types.logical.RowType;

+import org.apache.flink.types.Row;

+import org.apache.iceberg.DataFile;

+import org.apache.iceberg.DistributionMode;

+import org.apache.iceberg.FileFormat;

+import org.apache.iceberg.PartitionField;

+import org.apache.iceberg.PartitionSpec;

+import org.apache.iceberg.Schema;

+import org.apache.iceberg.SerializableTable;

+import org.apache.iceberg.Table;

+import org.apache.iceberg.common.DynFields;

+import org.apache.iceberg.flink.FlinkConfigOptions;

+import org.apache.iceberg.flink.FlinkSchemaUtil;

+import org.apache.iceberg.flink.TableLoader;

+import org.apache.iceberg.flink.sink.EqualityFieldKeySelector;

+import org.apache.iceberg.flink.sink.PartitionKeySelector;

+import org.apache.iceberg.flink.sink.RowDataTaskWriterFactory;

+import org.apache.iceberg.flink.util.FlinkCompatibilityUtil;

+import org.apache.iceberg.relocated.com.google.common.annotations.VisibleForTesting;

+import org.apache.iceberg.relocated.com.google.common.base.Preconditions;

+import org.apache.iceberg.relocated.com.google.common.collect.Lists;

+import org.apache.iceberg.relocated.com.google.common.collect.Maps;

+import org.apache.iceberg.relocated.com.google.common.collect.Sets;

+import org.apache.iceberg.types.TypeUtil;

+import org.apache.iceberg.util.PropertyUtil;

+import org.slf4j.Logger;

+import org.slf4j.LoggerFactory;

+

+import static org.apache.iceberg.TableProperties.DEFAULT_FILE_FORMAT;

+import static org.apache.iceberg.TableProperties.DEFAULT_FILE_FORMAT_DEFAULT;

+import static org.apache.iceberg.TableProperties.UPSERT_ENABLED;

+import static org.apache.iceberg.TableProperties.UPSERT_ENABLED_DEFAULT;

+import static org.apache.iceberg.TableProperties.WRITE_DISTRIBUTION_MODE;

+import static org.apache.iceberg.TableProperties.WRITE_DISTRIBUTION_MODE_NONE;

+import static org.apache.iceberg.TableProperties.WRITE_TARGET_FILE_SIZE_BYTES;

+import static org.apache.iceberg.TableProperties.WRITE_TARGET_FILE_SIZE_BYTES_DEFAULT;

+

+public class FlinkSink implements StatefulSink<RowData, IcebergStreamWriterState>,

+ WithPreWriteTopology<RowData>,

+ WithPreCommitTopology<RowData, IcebergFlinkCommittable>,

+ WithPostCommitTopology<RowData, IcebergFlinkCommittable> {

+ private static final Logger LOG = LoggerFactory.getLogger(FlinkSink.class);

+

+ private final TableLoader tableLoader;

+ private final Table table;

+ private final boolean overwrite;

+ private final boolean upsertMode;

+ private final DistributionMode distributionMode;

+ private final List<String> equalityFieldColumns;

+ private final List<Integer> equalityFieldIds;

+ private final int workerPoolSize;

+ private final String uidPrefix;

+ private final Map<String, String> snapshotProperties;

+ private final RowType flinkRowType;

+

+ public FlinkSink(TableLoader tableLoader,

+ @Nullable Table newTable,

+ TableSchema tableSchema,

+ boolean overwrite,

+ DistributionMode distributionMode,

+ boolean upsert,

+ List<String> equalityFieldColumns,

+ @Nullable String uidPrefix,

+ ReadableConfig readableConfig,

+ Map<String, String> snapshotProperties) {

+ this.tableLoader = tableLoader;

+ this.overwrite = overwrite;

+ this.distributionMode = distributionMode;

+ this.equalityFieldColumns = equalityFieldColumns;

+ this.uidPrefix = uidPrefix == null ? "" : uidPrefix;

+ this.snapshotProperties = snapshotProperties;

+

+ Preconditions.checkNotNull(tableLoader, "Table loader shouldn't be null");

+

+ if (newTable == null) {

+ tableLoader.open();

+ try (TableLoader loader = tableLoader) {

+ this.table = loader.loadTable();

+ } catch (IOException e) {

+ throw new UncheckedIOException("Failed to load iceberg table from table loader: " + tableLoader, e);

+ }

+ } else {

+ this.table = newTable;

+ }

+

+ this.workerPoolSize = readableConfig.get(FlinkConfigOptions.TABLE_EXEC_ICEBERG_WORKER_POOL_SIZE);

+ this.equalityFieldIds = checkAndGetEqualityFieldIds(table, equalityFieldColumns);

+

+ this.flinkRowType = toFlinkRowType(table.schema(), tableSchema);

+

+ // Fallback to use upsert mode parsed from table properties if don't specify in job level.

+ this.upsertMode = upsert || PropertyUtil.propertyAsBoolean(table.properties(),

+ UPSERT_ENABLED, UPSERT_ENABLED_DEFAULT);

+

+ // Validate the equality fields and partition fields if we enable the upsert mode.

+ if (upsertMode) {

+ Preconditions.checkState(

+ !overwrite,

+ "OVERWRITE mode shouldn't be enable when configuring to use UPSERT data stream.");

+ Preconditions.checkState(

+ !equalityFieldIds.isEmpty(),

+ "Equality field columns shouldn't be empty when configuring to use UPSERT data stream.");

+ if (!table.spec().isUnpartitioned()) {

+ for (PartitionField partitionField : table.spec().fields()) {

+ Preconditions.checkState(equalityFieldIds.contains(partitionField.sourceId()),

+ "In UPSERT mode, partition field '%s' should be included in equality fields: '%s'",

+ partitionField, equalityFieldColumns);

+ }

+ }

+ }

+ }

+

+ @Override

+ public DataStream<RowData> addPreWriteTopology(DataStream<RowData> inputDataStream) {

+ return distributeDataStream(inputDataStream, table.properties(), equalityFieldIds, table.spec(),

+ table.schema(), flinkRowType, distributionMode, equalityFieldColumns);

+ }

+

+ @Override

+ public IcebergStreamWriter createWriter(InitContext context) {

+ return restoreWriter(context, null);

+ }

+

+ @Override

+ public IcebergStreamWriter restoreWriter(InitContext context,

+ Collection<IcebergStreamWriterState> recoveredState) {

+ StreamingRuntimeContext runtimeContext = runtimeContextHidden(context);

+ int attemptNumber = runtimeContext.getAttemptNumber();

+ String jobId = runtimeContext.getJobId().toString();

+ IcebergStreamWriter streamWriter = createStreamWriter(table, flinkRowType, equalityFieldIds,

+ upsertMode, jobId, context.getSubtaskId(), attemptNumber);

+ if (recoveredState == null) {

+ return streamWriter;

+ }

+ return streamWriter.restoreWriter(recoveredState);

+ }

+

+ @Override

+ public SimpleVersionedSerializer<IcebergStreamWriterState> getWriterStateSerializer() {

+ return new IcebergStreamWriterStateSerializer<>();

+ }

+

+ static IcebergStreamWriter createStreamWriter(Table table,

+ RowType flinkRowType,

+ List<Integer> equalityFieldIds,

+ boolean upsert,

+ String jobId,

+ int subTaskId,

+ long attemptId) {

+ Map<String, String> props = table.properties();

+ long targetFileSize = PropertyUtil.propertyAsLong(props, WRITE_TARGET_FILE_SIZE_BYTES,

+ WRITE_TARGET_FILE_SIZE_BYTES_DEFAULT);

+

+ RowDataTaskWriterFactory taskWriterFactory = new RowDataTaskWriterFactory(SerializableTable.copyOf(table),

+ flinkRowType, targetFileSize, getFileFormat(props), equalityFieldIds, upsert);

+

+ return new IcebergStreamWriter(table.name(), taskWriterFactory, jobId, subTaskId, attemptId);

+ }

+

+ @Override

+ public DataStream<CommittableMessage<IcebergFlinkCommittable>> addPreCommitTopology(

+ DataStream<CommittableMessage<IcebergFlinkCommittable>> writeResults) {

+ return writeResults.map(new RichMapFunction<CommittableMessage<IcebergFlinkCommittable>,

+ CommittableMessage<IcebergFlinkCommittable>>() {

+ @Override

+ public CommittableMessage<IcebergFlinkCommittable> map(CommittableMessage<IcebergFlinkCommittable> message) {

+ if (message instanceof CommittableWithLineage) {

+ CommittableWithLineage<IcebergFlinkCommittable> committableWithLineage =

+ (CommittableWithLineage<IcebergFlinkCommittable>) message;

+ IcebergFlinkCommittable committable = committableWithLineage.getCommittable();

+ committable.checkpointId(committableWithLineage.getCheckpointId().orElse(0));

+ committable.subtaskId(committableWithLineage.getSubtaskId());

+ committable.jobID(getRuntimeContext().getJobId().toString());

+ }

+ return message;

+ }

+ }).uid(uidPrefix + "pre-commit-topology").global();

+ }

+

+ @Override

+ public Committer<IcebergFlinkCommittable> createCommitter() {

+ return new IcebergFilesCommitter(tableLoader, overwrite, snapshotProperties, workerPoolSize);

+ }

+

+ @Override

+ public SimpleVersionedSerializer<IcebergFlinkCommittable> getCommittableSerializer() {

+ return new IcebergFlinkCommittableSerializer();

+ }

+

+ @Override

+ public void addPostCommitTopology(DataStream<CommittableMessage<IcebergFlinkCommittable>> committables) {

+ // TODO Support small file compaction

+ }

+

+ private StreamingRuntimeContext runtimeContextHidden(InitContext context) {

+ DynFields.BoundField<StreamingRuntimeContext> runtimeContextBoundField =

+ DynFields.builder().hiddenImpl(context.getClass(), "runtimeContext").build(context);

+ return runtimeContextBoundField.get();

+ }

+

+ private static FileFormat getFileFormat(Map<String, String> properties) {

+ String formatString = properties.getOrDefault(DEFAULT_FILE_FORMAT, DEFAULT_FILE_FORMAT_DEFAULT);

+ return FileFormat.valueOf(formatString.toUpperCase(Locale.ENGLISH));

+ }

+

+ /**

+ * Initialize a {@link Builder} to export the data from generic input data stream into iceberg table. We use

+ * {@link RowData} inside the sink connector, so users need to provide a mapper function and a

+ * {@link TypeInformation} to convert those generic records to a RowData DataStream.

+ *

+ * @param input the generic source input data stream.

+ * @param mapper function to convert the generic data to {@link RowData}

+ * @param outputType to define the {@link TypeInformation} for the input data.

+ * @param <T> the data type of records.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public static <T> Builder builderFor(DataStream<T> input,

+ MapFunction<T, RowData> mapper,

+ TypeInformation<RowData> outputType) {

+ return new Builder().forMapperOutputType(input, mapper, outputType);

+ }

+

+ /**

+ * Initialize a {@link Builder} to export the data from input data stream with {@link Row}s into iceberg table. We use

+ * {@link RowData} inside the sink connector, so users need to provide a {@link TableSchema} for builder to convert

+ * those {@link Row}s to a {@link RowData} DataStream.

+ *

+ * @param input the source input data stream with {@link Row}s.

+ * @param tableSchema defines the {@link TypeInformation} for input data.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public static Builder forRow(DataStream<Row> input, TableSchema tableSchema) {

+ RowType rowType = (RowType) tableSchema.toRowDataType().getLogicalType();

+ DataType[] fieldDataTypes = tableSchema.getFieldDataTypes();

+

+ DataFormatConverters.RowConverter rowConverter = new DataFormatConverters.RowConverter(fieldDataTypes);

+ return builderFor(input, rowConverter::toInternal, FlinkCompatibilityUtil.toTypeInfo(rowType))

+ .tableSchema(tableSchema);

+ }

+

+ /**

+ * Initialize a {@link Builder} to export the data from input data stream with {@link RowData}s into iceberg table.

+ *

+ * @param input the source input data stream with {@link RowData}s.

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public static Builder forRowData(DataStream<RowData> input) {

+ return new Builder().forRowData(input);

+ }

+

+ /**

+ * Initialize a {@link Builder} to export the data from input data stream with {@link RowData}s into iceberg table.

+ *

+ * @return {@link Builder} to connect the iceberg table.

+ */

+ public static Builder builder() {

+ return new Builder();

+ }

+

+ public static class Builder {

+ private Function<String, DataStream<RowData>> inputCreator = null;

+ private TableLoader tableLoader;

+ private Table table;

+ private TableSchema tableSchema;

+ private boolean overwrite = false;

+ private DistributionMode distributionMode = null;

+ private Integer writeParallelism = null;

+ private boolean upsert = false;