You are viewing a plain text version of this content. The canonical link for it is here.

Posted to reviews@spark.apache.org by "zhenlineo (via GitHub)" <gi...@apache.org> on 2023/03/10 04:23:49 UTC

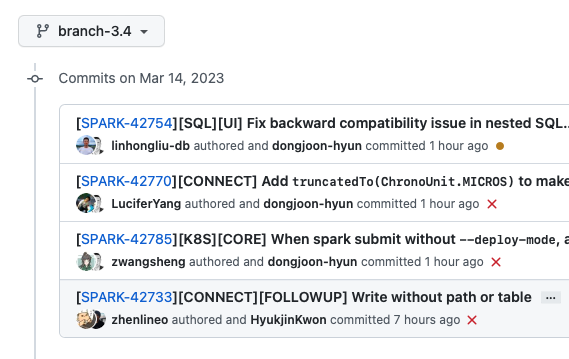

[GitHub] [spark] zhenlineo opened a new pull request, #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

zhenlineo opened a new pull request, #40358:

URL: https://github.com/apache/spark/pull/40358

### What changes were proposed in this pull request?

Fixes `DataFrameWriter.save` to work without path or table parameter.

Added support of jdbc method in the writer as it is one of the impl that does not contains a path or table.

### Why are the changes needed?

DataFrameWriter.save should work without path parameter because some data sources, such as jdbc, noop, works without those parameters.

The follow up fix for scala client of https://github.com/apache/spark/pull/40356

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Unit and E2E test

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] ueshin commented on a diff in pull request #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

Posted by "ueshin (via GitHub)" <gi...@apache.org>.

ueshin commented on code in PR #40358:

URL: https://github.com/apache/spark/pull/40358#discussion_r1132736845

##########

connector/connect/client/jvm/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala:

##########

@@ -228,7 +228,9 @@ final class DataFrameWriter[T] private[sql] (ds: Dataset[T]) {

// Set path or table

f(builder)

- require(builder.hasPath != builder.hasTable) // Only one can be set

+

+ // Cannot both be set, hasPath or hasTable or nothing

+ require(builder.hasPath || builder.hasTable || (!builder.hasPath && !builder.hasTable))

Review Comment:

It seems to pass for both set case, too?

`require(!(builder.hasPath && builder.hasTable))`?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on a diff in pull request #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

Posted by "dongjoon-hyun (via GitHub)" <gi...@apache.org>.

dongjoon-hyun commented on code in PR #40358:

URL: https://github.com/apache/spark/pull/40358#discussion_r1136022984

##########

connector/connect/client/jvm/src/test/scala/org/apache/spark/sql/ClientE2ETestSuite.scala:

##########

@@ -175,6 +176,26 @@ class ClientE2ETestSuite extends RemoteSparkSession with SQLHelper {

}

}

+ test("write without table or path") {

+ // Should receive no error to write noop

+ spark.range(10).write.format("noop").mode("append").save()

+ }

+

+ test("write jdbc") {

Review Comment:

Thank you, @zhenlineo . Let me check more.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] HyukjinKwon closed pull request #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

Posted by "HyukjinKwon (via GitHub)" <gi...@apache.org>.

HyukjinKwon closed pull request #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

URL: https://github.com/apache/spark/pull/40358

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on a diff in pull request #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

Posted by "dongjoon-hyun (via GitHub)" <gi...@apache.org>.

dongjoon-hyun commented on code in PR #40358:

URL: https://github.com/apache/spark/pull/40358#discussion_r1135896883

##########

connector/connect/client/jvm/src/test/scala/org/apache/spark/sql/ClientE2ETestSuite.scala:

##########

@@ -175,6 +176,26 @@ class ClientE2ETestSuite extends RemoteSparkSession with SQLHelper {

}

}

+ test("write without table or path") {

+ // Should receive no error to write noop

+ spark.range(10).write.format("noop").mode("append").save()

+ }

+

+ test("write jdbc") {

Review Comment:

This seems to break `branch-3.4` somehow. I'm checking it now.

- https://github.com/apache/spark/actions/runs/4414215325/jobs/7742911619

```

[info] - write jdbc *** FAILED *** (527 milliseconds)

[info] io.grpc.StatusRuntimeException: INTERNAL: No suitable driver

[info] at io.grpc.Status.asRuntimeException(Status.java:535)

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] ueshin commented on a diff in pull request #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

Posted by "ueshin (via GitHub)" <gi...@apache.org>.

ueshin commented on code in PR #40358:

URL: https://github.com/apache/spark/pull/40358#discussion_r1132736845

##########

connector/connect/client/jvm/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala:

##########

@@ -228,7 +228,9 @@ final class DataFrameWriter[T] private[sql] (ds: Dataset[T]) {

// Set path or table

f(builder)

- require(builder.hasPath != builder.hasTable) // Only one can be set

+

+ // Cannot both be set, hasPath or hasTable or nothing

+ require(builder.hasPath || builder.hasTable || (!builder.hasPath && !builder.hasTable))

Review Comment:

It seems to pass for both set case?

`require(!(builder.hasPath && builder.hasTable))`?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on pull request #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

Posted by "HyukjinKwon (via GitHub)" <gi...@apache.org>.

HyukjinKwon commented on PR #40358:

URL: https://github.com/apache/spark/pull/40358#issuecomment-1467729639

Merged to master and branch-3.4.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on a diff in pull request #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

Posted by "dongjoon-hyun (via GitHub)" <gi...@apache.org>.

dongjoon-hyun commented on code in PR #40358:

URL: https://github.com/apache/spark/pull/40358#discussion_r1135947502

##########

connector/connect/client/jvm/src/test/scala/org/apache/spark/sql/ClientE2ETestSuite.scala:

##########

@@ -175,6 +176,26 @@ class ClientE2ETestSuite extends RemoteSparkSession with SQLHelper {

}

}

+ test("write without table or path") {

+ // Should receive no error to write noop

+ spark.range(10).write.format("noop").mode("append").save()

+ }

+

+ test("write jdbc") {

Review Comment:

In addition, please see the CI. It's broken consistently.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] zhenlineo commented on a diff in pull request #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

Posted by "zhenlineo (via GitHub)" <gi...@apache.org>.

zhenlineo commented on code in PR #40358:

URL: https://github.com/apache/spark/pull/40358#discussion_r1136713977

##########

connector/connect/client/jvm/src/test/scala/org/apache/spark/sql/ClientE2ETestSuite.scala:

##########

@@ -175,6 +176,26 @@ class ClientE2ETestSuite extends RemoteSparkSession with SQLHelper {

}

}

+ test("write without table or path") {

+ // Should receive no error to write noop

+ spark.range(10).write.format("noop").mode("append").save()

+ }

+

+ test("write jdbc") {

Review Comment:

@dongjoon-hyun I saw the 3.4 build went back to green. Is this https://github.com/apache/spark/commit/bf9c4b99cfc9fd987b0ccbf77f01f8a6ec965acf the fix? Is there still a problem that I shall fix?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on a diff in pull request #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

Posted by "dongjoon-hyun (via GitHub)" <gi...@apache.org>.

dongjoon-hyun commented on code in PR #40358:

URL: https://github.com/apache/spark/pull/40358#discussion_r1137339742

##########

connector/connect/client/jvm/src/test/scala/org/apache/spark/sql/ClientE2ETestSuite.scala:

##########

@@ -175,6 +176,26 @@ class ClientE2ETestSuite extends RemoteSparkSession with SQLHelper {

}

}

+ test("write without table or path") {

+ // Should receive no error to write noop

+ spark.range(10).write.format("noop").mode("append").save()

+ }

+

+ test("write jdbc") {

Review Comment:

Yes, it's resolved for now. Thank you for checking, @zhenlineo !

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] zhenlineo commented on a diff in pull request #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

Posted by "zhenlineo (via GitHub)" <gi...@apache.org>.

zhenlineo commented on code in PR #40358:

URL: https://github.com/apache/spark/pull/40358#discussion_r1135964882

##########

connector/connect/client/jvm/src/test/scala/org/apache/spark/sql/ClientE2ETestSuite.scala:

##########

@@ -175,6 +176,26 @@ class ClientE2ETestSuite extends RemoteSparkSession with SQLHelper {

}

}

+ test("write without table or path") {

+ // Should receive no error to write noop

+ spark.range(10).write.format("noop").mode("append").save()

+ }

+

+ test("write jdbc") {

Review Comment:

@dongjoon-hyun Be free to revert it in 3.4 and I will take a better look late today or tomorrow. Thanks. I can send a PR target at 3.4 directly. Or whatever is the easiest.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on a diff in pull request #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

Posted by "dongjoon-hyun (via GitHub)" <gi...@apache.org>.

dongjoon-hyun commented on code in PR #40358:

URL: https://github.com/apache/spark/pull/40358#discussion_r1136030130

##########

connector/connect/client/jvm/src/test/scala/org/apache/spark/sql/ClientE2ETestSuite.scala:

##########

@@ -175,6 +176,26 @@ class ClientE2ETestSuite extends RemoteSparkSession with SQLHelper {

}

}

+ test("write without table or path") {

+ // Should receive no error to write noop

+ spark.range(10).write.format("noop").mode("append").save()

+ }

+

+ test("write jdbc") {

Review Comment:

From my side, https://github.com/apache/spark/pull/40358#discussion_r1135938056 also failed.

```

$ build/sbt -Phive -Pconnect package

$ build/sbt "connect-client-jvm/test"

...

[info] ClientE2ETestSuite:

[info] - spark result schema (290 milliseconds)

[info] - spark result array (290 milliseconds)

[info] - eager execution of sql (15 seconds, 819 milliseconds)

[info] - simple dataset (1 second, 28 milliseconds)

[info] - SPARK-42665: Ignore simple udf test until the udf is fully implemented. !!! IGNORED !!!

[info] - read and write (929 milliseconds)

[info] - read path collision (31 milliseconds)

[info] - write table (4 seconds, 540 milliseconds)

[info] - write without table or path (348 milliseconds)

[info] - write jdbc *** FAILED *** (365 milliseconds)

[info] io.grpc.StatusRuntimeException: INTERNAL: No suitable driver

...

```

~In this case, the usual suspect is Java. GitHub Action CI and I'm using Java 8.~

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on a diff in pull request #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

Posted by "dongjoon-hyun (via GitHub)" <gi...@apache.org>.

dongjoon-hyun commented on code in PR #40358:

URL: https://github.com/apache/spark/pull/40358#discussion_r1136030130

##########

connector/connect/client/jvm/src/test/scala/org/apache/spark/sql/ClientE2ETestSuite.scala:

##########

@@ -175,6 +176,26 @@ class ClientE2ETestSuite extends RemoteSparkSession with SQLHelper {

}

}

+ test("write without table or path") {

+ // Should receive no error to write noop

+ spark.range(10).write.format("noop").mode("append").save()

+ }

+

+ test("write jdbc") {

Review Comment:

From my side, https://github.com/apache/spark/pull/40358#discussion_r1135938056 also failed.

```

$ build/sbt -Phive -Pconnect package

$ build/sbt "connect-client-jvm/test"

...

[info] ClientE2ETestSuite:

[info] - spark result schema (290 milliseconds)

[info] - spark result array (290 milliseconds)

[info] - eager execution of sql (15 seconds, 819 milliseconds)

[info] - simple dataset (1 second, 28 milliseconds)

[info] - SPARK-42665: Ignore simple udf test until the udf is fully implemented. !!! IGNORED !!!

[info] - read and write (929 milliseconds)

[info] - read path collision (31 milliseconds)

[info] - write table (4 seconds, 540 milliseconds)

[info] - write without table or path (348 milliseconds)

[info] - write jdbc *** FAILED *** (365 milliseconds)

[info] io.grpc.StatusRuntimeException: INTERNAL: No suitable driver

...

```

In this case, the usual suspect is Java. GitHub Action CI and I'm using Java 8.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on a diff in pull request #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

Posted by "dongjoon-hyun (via GitHub)" <gi...@apache.org>.

dongjoon-hyun commented on code in PR #40358:

URL: https://github.com/apache/spark/pull/40358#discussion_r1135946160

##########

connector/connect/client/jvm/src/test/scala/org/apache/spark/sql/ClientE2ETestSuite.scala:

##########

@@ -175,6 +176,26 @@ class ClientE2ETestSuite extends RemoteSparkSession with SQLHelper {

}

}

+ test("write without table or path") {

+ // Should receive no error to write noop

+ spark.range(10).write.format("noop").mode("append").save()

+ }

+

+ test("write jdbc") {

Review Comment:

To @zhenlineo , I reproduced the error locally in this way on `branch-3.4` while the same command works in `master`.

```

$ build/sbt -Phive -Phadoop-3 assembly/package "protobuf/test" "connect-common/test" "connect/test" "connect-client-jvm/test"

...

[info] ClientE2ETestSuite:

[info] - spark result schema (319 milliseconds)

[info] - spark result array (350 milliseconds)

[info] - eager execution of sql (18 seconds, 3 milliseconds)

[info] - simple dataset (1 second, 194 milliseconds)

[info] - SPARK-42665: Ignore simple udf test until the udf is fully implemented. !!! IGNORED !!!

[info] - read and write (1 second, 32 milliseconds)

[info] - read path collision (32 milliseconds)

[info] - write table (5 seconds, 349 milliseconds)

[info] - write without table or path (170 milliseconds)

[info] - write jdbc *** FAILED *** (325 milliseconds)

[info] io.grpc.StatusRuntimeException: INTERNAL: No suitable driver

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] zhenlineo commented on a diff in pull request #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

Posted by "zhenlineo (via GitHub)" <gi...@apache.org>.

zhenlineo commented on code in PR #40358:

URL: https://github.com/apache/spark/pull/40358#discussion_r1135938056

##########

connector/connect/client/jvm/src/test/scala/org/apache/spark/sql/ClientE2ETestSuite.scala:

##########

@@ -175,6 +176,26 @@ class ClientE2ETestSuite extends RemoteSparkSession with SQLHelper {

}

}

+ test("write without table or path") {

+ // Should receive no error to write noop

+ spark.range(10).write.format("noop").mode("append").save()

+ }

+

+ test("write jdbc") {

Review Comment:

@dongjoon-hyun I checked `branch-3.4` locally and I can run the following without error:

```

build/sbt -Phive -Pconnect package

build/sbt "connect-client-jvm/test"

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] amaliujia commented on a diff in pull request #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

Posted by "amaliujia (via GitHub)" <gi...@apache.org>.

amaliujia commented on code in PR #40358:

URL: https://github.com/apache/spark/pull/40358#discussion_r1131967870

##########

connector/connect/client/jvm/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala:

##########

@@ -345,6 +347,37 @@ final class DataFrameWriter[T] private[sql] (ds: Dataset[T]) {

})

}

+ /**

+ * Saves the content of the `DataFrame` to an external database table via JDBC. In the case the

+ * table already exists in the external database, behavior of this function depends on the save

+ * mode, specified by the `mode` function (default to throwing an exception).

+ *

+ * Don't create too many partitions in parallel on a large cluster; otherwise Spark might crash

+ * your external database systems.

+ *

+ * JDBC-specific option and parameter documentation for storing tables via JDBC in <a

+ * href="https://spark.apache.org/docs/latest/sql-data-sources-jdbc.html#data-source-option">

+ * Data Source Option</a> in the version you use.

+ *

+ * @param table

+ * Name of the table in the external database.

+ * @param connectionProperties

+ * JDBC database connection arguments, a list of arbitrary string tag/value. Normally at least

+ * a "user" and "password" property should be included. "batchsize" can be used to control the

+ * number of rows per insert. "isolationLevel" can be one of "NONE", "READ_COMMITTED",

+ * "READ_UNCOMMITTED", "REPEATABLE_READ", or "SERIALIZABLE", corresponding to standard

+ * transaction isolation levels defined by JDBC's Connection object, with default of

+ * "READ_UNCOMMITTED".

+ * @since 1.4.0

Review Comment:

since 3.4.0

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] zhenlineo commented on a diff in pull request #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

Posted by "zhenlineo (via GitHub)" <gi...@apache.org>.

zhenlineo commented on code in PR #40358:

URL: https://github.com/apache/spark/pull/40358#discussion_r1135969290

##########

connector/connect/client/jvm/src/test/scala/org/apache/spark/sql/ClientE2ETestSuite.scala:

##########

@@ -175,6 +176,26 @@ class ClientE2ETestSuite extends RemoteSparkSession with SQLHelper {

}

}

+ test("write without table or path") {

+ // Should receive no error to write noop

+ spark.range(10).write.format("noop").mode("append").save()

+ }

+

+ test("write jdbc") {

Review Comment:

@dongjoon-hyun A quick hint before I run. This commands worked locally for 3.4 branch:

```

build/sbt -Phive -Phadoop-3 -Pconnect assembly/package "protobuf/test" "connect-common/test" "connect/test" "connect-client-jvm/test"

```

Do you know if we can just add `Pconnect` to the exec command?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] zhenlineo commented on a diff in pull request #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

Posted by "zhenlineo (via GitHub)" <gi...@apache.org>.

zhenlineo commented on code in PR #40358:

URL: https://github.com/apache/spark/pull/40358#discussion_r1136720132

##########

connector/connect/client/jvm/src/test/scala/org/apache/spark/sql/ClientE2ETestSuite.scala:

##########

@@ -175,6 +176,26 @@ class ClientE2ETestSuite extends RemoteSparkSession with SQLHelper {

}

}

+ test("write without table or path") {

+ // Should receive no error to write noop

+ spark.range(10).write.format("noop").mode("append").save()

+ }

+

+ test("write jdbc") {

Review Comment:

@dongjoon-hyun Aha, I saw the fix https://github.com/apache/spark/commit/ab7c4f8d0392b7e2f5c7ff6d8f6375cad8e874f3. Thanks a lot!

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on a diff in pull request #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

Posted by "dongjoon-hyun (via GitHub)" <gi...@apache.org>.

dongjoon-hyun commented on code in PR #40358:

URL: https://github.com/apache/spark/pull/40358#discussion_r1137339742

##########

connector/connect/client/jvm/src/test/scala/org/apache/spark/sql/ClientE2ETestSuite.scala:

##########

@@ -175,6 +176,26 @@ class ClientE2ETestSuite extends RemoteSparkSession with SQLHelper {

}

}

+ test("write without table or path") {

+ // Should receive no error to write noop

+ spark.range(10).write.format("noop").mode("append").save()

+ }

+

+ test("write jdbc") {

Review Comment:

Yes, it's triaged for now. Thank you for checking, @zhenlineo !

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] dongjoon-hyun commented on a diff in pull request #40358: [SPARK-42733][CONNECT][Followup] Write without path or table

Posted by "dongjoon-hyun (via GitHub)" <gi...@apache.org>.

dongjoon-hyun commented on code in PR #40358:

URL: https://github.com/apache/spark/pull/40358#discussion_r1135893272

##########

connector/connect/client/jvm/src/test/scala/org/apache/spark/sql/DatasetSuite.scala:

##########

@@ -100,6 +101,33 @@ class DatasetSuite extends ConnectFunSuite with BeforeAndAfterEach {

assert(actualPlan.equals(expectedPlan))

}

+ test("write jdbc") {

Review Comment:

This seems to break `branch-3.4` somehow. I'm checking it now.

- https://github.com/apache/spark/actions/runs/4414215325/jobs/7742911619

```

[info] - write jdbc *** FAILED *** (527 milliseconds)

[info] io.grpc.StatusRuntimeException: INTERNAL: No suitable driver

[info] at io.grpc.Status.asRuntimeException(Status.java:535)

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org