Posted to reviews@spark.apache.org by yucai <gi...@git.apache.org> on 2018/08/12 05:32:04 UTC

[GitHub] spark issue #19788: [SPARK-9853][Core] Optimize shuffle fetch of contiguous ...

Github user yucai commented on the issue:

https://github.com/apache/spark/pull/19788

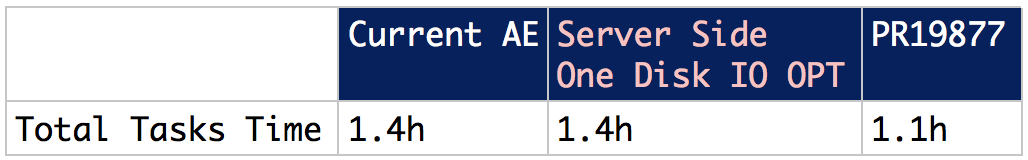

**Summary**

The one-disk-IO solution does not seem to perform as well as the current implementation in PR19788.

**Benchmark**

```scala
// max comes from org.apache.spark.sql.functions (already imported in spark-shell)
spark.range(1, 5120000000L, 1, 1280).selectExpr("id as key", "id as value").groupBy("key").agg(max("value")).foreach(identity(_))
```

All branches are based on the same recent master.

Current AE: https://github.com/yucai/spark/tree/current_ae

Server Side One Disk IO OPT: https://github.com/yucai/spark/tree/one_disk

Rebased PR19788: https://github.com/yucai/spark/commits/pr19788_merge

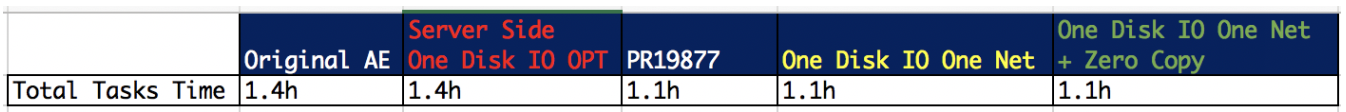

**Deep Dive**

The one-disk-IO OPT solution still has two disadvantages:

1. The server needs to send the shuffle blocks one by one, and the client side needs to process them one by one.

PR19788 instead sends all of them at once (logically), and the client side processes them at once.

2. It does not use Netty's zero copy.
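
To make the "one by one" versus "at once" distinction concrete, here is a minimal sketch of treating contiguous shuffle blocks as a single logical range. It is an illustration only, not the code from any of the branches above: `BlockRange`, `ContiguousBlocks`, and `mergeContiguous` are hypothetical names, and the input is assumed to be sorted by offset within one shuffle data file.

```scala
// Hypothetical model of where one shuffle block lives inside a data file.
final case class BlockRange(offset: Long, length: Long) {
  def end: Long = offset + length
}

object ContiguousBlocks {
  // Merge adjacent ranges so that each run of contiguous blocks becomes
  // a single range. Assumes `blocks` is sorted by offset (an assumption
  // of this sketch, not something stated in the PR).
  def mergeContiguous(blocks: Seq[BlockRange]): Vector[BlockRange] =
    blocks.foldLeft(Vector.empty[BlockRange]) { (merged, next) =>
      merged.lastOption match {
        // The previous range ends exactly where the next one starts: extend it.
        case Some(prev) if prev.end == next.offset =>
          merged.init :+ BlockRange(prev.offset, prev.length + next.length)
        // Gap (or first element): start a new range.
        case _ =>
          merged :+ next
      }
    }
}
```

With this model, three blocks at offsets 0, 10, and 20 where only the first two touch would collapse into two ranges, so the sender issues two responses instead of three and the receiver handles two chunks instead of three.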

So I ran two more experiments to verify my guess.

1. One Disk IO One Net (I hacked some client-side code):

https://github.com/yucai/spark/commits/one_disk_one_net_1

2. One Disk IO One Net + Zero Copy (also needs a client-side hack):

https://github.com/yucai/spark/commits/one_disk_one_net_2
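
For readers unfamiliar with zero copy, the following is a minimal sketch of the underlying JDK mechanism, `FileChannel.transferTo`, which lets the OS move file bytes to a channel without copying them through a user-space buffer when the platform and target channel support it. This is not the code in the branch above; `ZeroCopySketch` and `transferRange` are hypothetical names, and whether a real zero-copy path is taken depends on the target channel (for example, a socket channel).

```scala
import java.nio.channels.{FileChannel, WritableByteChannel}
import java.nio.file.{Path, StandardOpenOption}

object ZeroCopySketch {
  // Send `length` bytes of `file`, starting at `offset`, to `target`.
  // Returns the number of bytes actually transferred.
  def transferRange(file: Path, offset: Long, length: Long,
                    target: WritableByteChannel): Long = {
    val source = FileChannel.open(file, StandardOpenOption.READ)
    try {
      var sent = 0L
      var stalled = false
      // transferTo may move fewer bytes than requested, so loop until done.
      while (sent < length && !stalled) {
        val n = source.transferTo(offset + sent, length - sent, target)
        // Zero bytes moved (e.g. past end of file): stop instead of spinning.
        if (n <= 0) stalled = true else sent += n
      }
      sent
    } finally source.close()
  }
}
```

Combined with a single contiguous range per request, one such call can cover a whole run of blocks, which is the intuition behind pairing "one net" with zero copy.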

After optimizing to "one net", we got performance similar to PR19788.

It looks like "one net" is also important, but it requires changes on the client side.

@cloud-fan, I understand you may be very busy with 2.4; feel free to ping me if you have any suggestions.
