You are viewing a plain text version of this content. The canonical link for it is here.

Posted to notifications@shardingsphere.apache.org by GitBox <gi...@apache.org> on 2022/01/11 07:41:03 UTC

[GitHub] [shardingsphere-elasticjob] RedRed228 opened a new issue #2038: OneOffJob cannot be triggered on some instances occasionally

RedRed228 opened a new issue #2038:

URL: https://github.com/apache/shardingsphere-elasticjob/issues/2038

Version: 3.0.1

Project: ElasticJob-Lite

Problem: All instances that have sharding items should start running after calling instanceService.triggerAllInstances(), but some instances cannot be triggered.

The problem occured occasionally, and it disappear if we restart the instance or any other instance join in, which will cause the resharding nessary flag being setted.

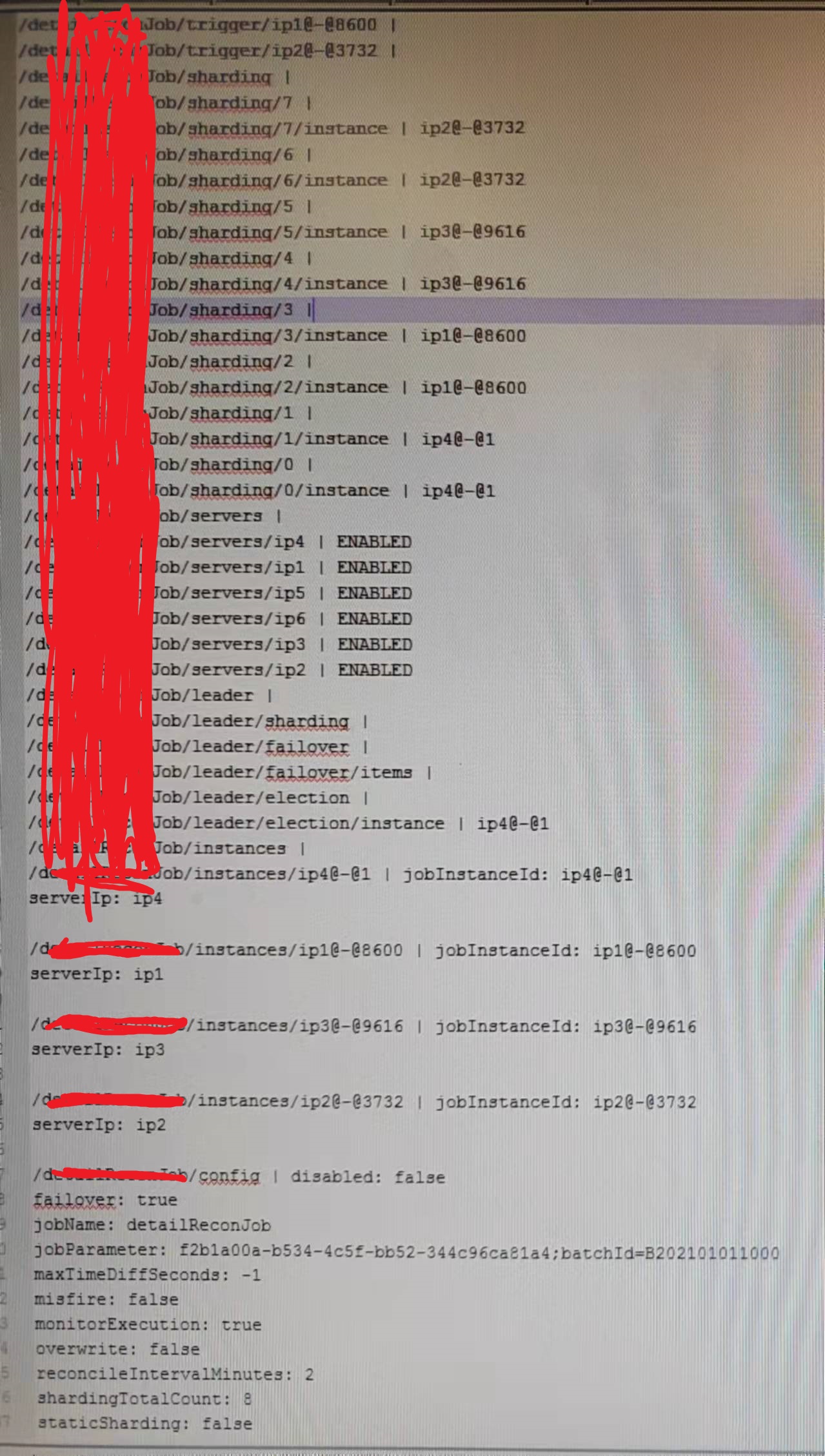

The dump when the problem occured:

From the dump we can see that trigger node have instances nodes that didn't be triggered, the instances ip1@-8600 and ip2@-@3732 are running normally but their shards cannot be triggered.

Any relay will be appreaciated!

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: notifications-unsubscribe@shardingsphere.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [shardingsphere-elasticjob] RedRed228 edited a comment on issue #2038: OneOffJob cannot be triggered on some instances occasionally

Posted by GitBox <gi...@apache.org>.

RedRed228 edited a comment on issue #2038:

URL: https://github.com/apache/shardingsphere-elasticjob/issues/2038#issuecomment-1013025677

The issue sometimes looks like: when we triggered the job, no shard was triggered at all. The dump file:

From the zookeeper data we can see that the resharding necessary flag was set and the leader was elected, but the leader didn’t do resharding.

The leader instance has many debug logs:

The ip server node is a server that have been deleted by us. We deleted the node and this is the cause of the issue, I will discuss of the reason why we delete the server node later.

The follower instances have many debug logs:

With the debug logs, we can infer that the leader didn’t do resharding. So I dumped the leader’s stack:

I found that the "Curator-SafeNotifyService-0" thread always stuck here, falling into endless loop at ServerService.isEnableServer():

public boolean isEnableServer(final String ip) {

String serverStatus = jobNodeStorage.getJobNodeData(serverNode.getServerNode(ip));

**//Endless loop happend!

while (Strings.isNullOrEmpty(serverStatus)) {

BlockUtils.waitingShortTime();

serverStatus = jobNodeStorage.getJobNodeData(serverNode.getServerNode(ip));

}**

return !ServerStatus.DISABLED.name().equals(serverStatus);

}

Reason found:

It's because our application was runing on cloud-native architechture, and we found that the servers node's children become more and more with the container(pod) being destroyed and created again and again. So I registered a shutdownHook when the container is created, which would remove the instance's server node under servers node from zookeeper when the container is destroyed by calling JobOperateAPI's remove method.

It caused this issue because when container was destroyed, the instance gone down and JobCrashedJobListener will be triggered, and the listener will fall into the endless loop as mentioned above if the instance’s server node was deleted.

All shards would not be triggered if the leader node‘s "Curator-SafeNotifyService-0" thread fall into the endless loop because only the leader node can do resharding, and all the listeners are triggered by the same thread(This maybe another issue.). But if the leader node didn’t fall into the endless loop, resharding will success and we would see that some shards are trigged successfully but those whose instance fell into the endless loop are not, just like the issue.

But we do need to solve the problem of server nodes becoming more and more under servers node, so before delete the server node, we sleep some time longer than sessionTimeout to ensure that all the alive instances have finished JobCrashedJobListener to avoid "Curator-SafeNotifyService-0" thread falling into the endless loop.

And I’m not sure if we can call addListener(listener, executor) instead of addListener(listener) to register listeners in JobNodeStorage’s addDataListener method:

public void addDataListener(final CuratorCacheListener listener) {

CuratorCache cache = (CuratorCache) regCenter.getRawCache("/" + jobName);

**//Maybe we can call addListener(listener, executor) instead of addListener(listener) at here?

cache.listenable().addListener(listener);**

}

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: notifications-unsubscribe@shardingsphere.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [shardingsphere-elasticjob] RedRed228 edited a comment on issue #2038: OneOffJob cannot be triggered or can only be triggerd on a part of instances occasionally

Posted by GitBox <gi...@apache.org>.

RedRed228 edited a comment on issue #2038:

URL: https://github.com/apache/shardingsphere-elasticjob/issues/2038#issuecomment-1013025677

The issue sometimes looks like: when we triggered the job, no shard was triggered at all. The dump file:

From the zookeeper data we can see that the resharding necessary flag was set and the leader was elected, but the leader didn’t do resharding.

The leader instance has many debug logs:

The ip server node is a server that have been deleted by us. We deleted the node and this is the cause of the issue, I will discuss of the reason why we delete the server node later.

The follower instances have many debug logs:

With the debug logs, we can infer that the leader didn’t do resharding. So I dumped the leader’s stack:

I found that the "Curator-SafeNotifyService-0" thread always stuck here, falling into endless loop at ServerService.isEnableServer():

public boolean isEnableServer(final String ip) {

String serverStatus = jobNodeStorage.getJobNodeData(serverNode.getServerNode(ip));

**//Endless loop happend!

while (Strings.isNullOrEmpty(serverStatus)) {

BlockUtils.waitingShortTime();

serverStatus = jobNodeStorage.getJobNodeData(serverNode.getServerNode(ip));

}**

return !ServerStatus.DISABLED.name().equals(serverStatus);

}

Reason found:

It's because our application was runing on cloud-native architechture, and we found that the servers node's children become more and more with the container(pod) being destroyed and created again and again. So I registered a shutdownHook when the container is created, which would remove the instance's server node under servers node from zookeeper when the container is destroyed by calling JobOperateAPI's remove method.

It caused this issue because when container was destroyed, the instance gone down and JobCrashedJobListener will be triggered, and the listener will fall into the endless loop as mentioned above if the instance’s server node was deleted.

All shards would not be triggered if the leader node‘s "Curator-SafeNotifyService-0" thread fall into the endless loop because only the leader node can do resharding, and all the listeners are triggered by the same thread(**This maybe another issue.**). But if the leader node didn’t fall into the endless loop, resharding will success and we would see that some shards are trigged successfully but those whose instance fell into the endless loop are not, just like the issue.

But we do need to solve the problem of server nodes becoming more and more under servers node, so before delete the server node, we sleep some time longer than sessionTimeout to ensure that all the alive instances have finished JobCrashedJobListener to avoid "Curator-SafeNotifyService-0" thread falling into the endless loop.

And I’m not sure if we can call addListener(listener, executor) instead of addListener(listener) to register listeners in JobNodeStorage’s addDataListener method to solve "**may be another issue**" metioned above.

public void addDataListener(final CuratorCacheListener listener) {

CuratorCache cache = (CuratorCache) regCenter.getRawCache("/" + jobName);

**//Maybe we can call addListener(listener, executor) instead of addListener(listener) at here?

cache.listenable().addListener(listener);**

}

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: notifications-unsubscribe@shardingsphere.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [shardingsphere-elasticjob] TeslaCN commented on issue #2038: OneOffJob cannot be triggered on some instances occasionally

Posted by GitBox <gi...@apache.org>.

TeslaCN commented on issue #2038:

URL: https://github.com/apache/shardingsphere-elasticjob/issues/2038#issuecomment-1009769413

Thanks for your feedback. We will do some investigation.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: notifications-unsubscribe@shardingsphere.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [shardingsphere-elasticjob] RedRed228 edited a comment on issue #2038: OneOffJob cannot be triggered on some instances occasionally

Posted by GitBox <gi...@apache.org>.

RedRed228 edited a comment on issue #2038:

URL: https://github.com/apache/shardingsphere-elasticjob/issues/2038#issuecomment-1013025677

The issue sometimes looks like: when we triggered the job, no shard was triggered at all.

I didn’t get the dump file but checked the zookeeper node directly when the problem occurred. From the zookeeper data I saw the resharding necessary flag was set and the leader was elected, but the leader didn’t do resharding.

The leader instance has many debug logs:

The ip server node is a server that have been deleted by us. We deleted the node and this is the cause of the issue, I will discuss of the reason why we delete the server node later.

The follower instances have many debug logs:

With the debug logs, we can infer that the leader didn’t do resharding. So I dumped the leader’s stack:

I found that the "Curator-SafeNotifyService-0" thread always stuck here, falling into endless loop at ServerService.isEnableServer():

public boolean isEnableServer(final String ip) {

String serverStatus = jobNodeStorage.getJobNodeData(serverNode.getServerNode(ip));

**//Endless loop happend!

while (Strings.isNullOrEmpty(serverStatus)) {

BlockUtils.waitingShortTime();

serverStatus = jobNodeStorage.getJobNodeData(serverNode.getServerNode(ip));

}**

return !ServerStatus.DISABLED.name().equals(serverStatus);

}

Reason found:

It's because our application was runing on cloud-native architechture, and we found that the servers node's children become more and more with the container(pod) being destroyed and created again and again. So I registered a shutdownHook when the container is created, which would remove the instance's server node under servers node from zookeeper when the container is destroyed by calling JobOperateAPI's remove method.

It caused this issue because when container was destroyed, the instance gone down and JobCrashedJobListener will be triggered, and the listener will fall into the endless loop as mentioned above if the instance’s server node was deleted.

All shards would not be triggered if the leader node‘s "Curator-SafeNotifyService-0" thread fall into the endless loop because only the leader node can do resharding, and all the listeners are triggered by the same thread(This maybe another issue.). But if the leader node didn’t fall into the endless loop, resharding will success and we would see that some shards are trigged successfully but those whose instance fell into the endless loop are not, just like the issue.

But we do need to solve the problem of server nodes becoming more and more under servers node, so before delete the server node, we sleep some time longer than sessionTimeout to ensure that all the alive instances have finished JobCrashedJobListener to avoid "Curator-SafeNotifyService-0" thread falling into the endless loop.

And I’m not sure if we can call addListener(listener, executor) instead of addListener(listener) to register listeners in JobNodeStorage’s addDataListener method:

public void addDataListener(final CuratorCacheListener listener) {

CuratorCache cache = (CuratorCache) regCenter.getRawCache("/" + jobName);

**//Maybe we can call addListener(listener, executor) instead of addListener(listener) at here?

cache.listenable().addListener(listener);**

}

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: notifications-unsubscribe@shardingsphere.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [shardingsphere-elasticjob] RedRed228 commented on issue #2038: OneOffJob cannot be triggered on some instances occasionally

Posted by GitBox <gi...@apache.org>.

RedRed228 commented on issue #2038:

URL: https://github.com/apache/shardingsphere-elasticjob/issues/2038#issuecomment-1013025677

The issue sometimes looks like: when we triggered the job, no shard was triggered at all.

I didn’t get the dump file but checked the zookeeper node directly when the problem occurred. From the zookeeper data I saw the resharding necessary flag was set and the leader was elected, but the leader didn’t do resharding.

The leader instance has many debug logs:

The ip server node is a server that have been deleted by us. We deleted the node and this is the cause of the issue, I will discuss of the reason why we delete the server node later.

The follower instances have many debug logs:

With the debug logs, we can infer that the leader didn’t do resharding. So I dumped the leader’s stack:

I found that the "Curator-SafeNotifyService-0" thread always stuck here, falling into endless loop at ServerService.isEnableServer():

public boolean isEnableServer(final String ip) {

String serverStatus = jobNodeStorage.getJobNodeData(serverNode.getServerNode(ip));

while (Strings.isNullOrEmpty(serverStatus)) {

BlockUtils.waitingShortTime();

serverStatus = jobNodeStorage.getJobNodeData(serverNode.getServerNode(ip));

}

return !ServerStatus.DISABLED.name().equals(serverStatus);

}

Reason found:

It's because our application was runing on cloud-native architechture, and we found that the servers node's children become more and more with the container(pod) being destroyed and created again and again. So I registered a shutdownHook when the container is created, which would remove the instance's server node under servers node from zookeeper when the container is destroyed by calling JobOperateAPI's remove method.

It caused this issue because when container was destroyed, the instance gone down and JobCrashedJobListener will be triggered, and the listener will fall into the endless loop as mentioned above if the instance’s server node was deleted.

All shards would not be triggered if the leader node‘s "Curator-SafeNotifyService-0" thread fall into the endless loop because only the leader node can do resharding, and all the listeners are triggered by the same thread(This maybe another issue.). But if the leader node didn’t fall into the endless loop, resharding will success and we would see that some shards are trigged successfully but those whose instance fell into the endless loop are not, just like the issue.

But we do need to solve the problem of server nodes becoming more and more under servers node, so before delete the server node, we sleep some time longer than sessionTimeout to ensure that all the alive instances have finished JobCrashedJobListener to avoid "Curator-SafeNotifyService-0" thread falling into the endless loop.

And I’m not sure if we can call addListener(listener, executor) instead of addListener(listener) to register listeners in JobNodeStorage’s addDataListener method:

public void addDataListener(final CuratorCacheListener listener) {

CuratorCache cache = (CuratorCache) regCenter.getRawCache("/" + jobName);

cache.listenable().addListener(listener);

}

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: notifications-unsubscribe@shardingsphere.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [shardingsphere-elasticjob] RedRed228 edited a comment on issue #2038: OneOffJob cannot be triggered on some instances occasionally

Posted by GitBox <gi...@apache.org>.

RedRed228 edited a comment on issue #2038:

URL: https://github.com/apache/shardingsphere-elasticjob/issues/2038#issuecomment-1013025677

The issue sometimes looks like: when we triggered the job, no shard was triggered at all.

I didn’t get the dump file but checked the zookeeper node directly when the problem occurred. From the zookeeper data I saw the resharding necessary flag was set and the leader was elected, but the leader didn’t do resharding.

The leader instance has many debug logs:

The ip server node is a server that have been deleted by us. We deleted the node and this is the cause of the issue, I will discuss of the reason why we delete the server node later.

The follower instances have many debug logs:

With the debug logs, we can infer that the leader didn’t do resharding. So I dumped the leader’s stack:

I found that the "Curator-SafeNotifyService-0" thread always stuck here, falling into endless loop at ServerService.isEnableServer():

public boolean isEnableServer(final String ip) {

String serverStatus = jobNodeStorage.getJobNodeData(serverNode.getServerNode(ip));

//Endless loop happend!

**while (Strings.isNullOrEmpty(serverStatus)) {

BlockUtils.waitingShortTime();

serverStatus = jobNodeStorage.getJobNodeData(serverNode.getServerNode(ip));

}**

return !ServerStatus.DISABLED.name().equals(serverStatus);

}

Reason found:

It's because our application was runing on cloud-native architechture, and we found that the servers node's children become more and more with the container(pod) being destroyed and created again and again. So I registered a shutdownHook when the container is created, which would remove the instance's server node under servers node from zookeeper when the container is destroyed by calling JobOperateAPI's remove method.

It caused this issue because when container was destroyed, the instance gone down and JobCrashedJobListener will be triggered, and the listener will fall into the endless loop as mentioned above if the instance’s server node was deleted.

All shards would not be triggered if the leader node‘s "Curator-SafeNotifyService-0" thread fall into the endless loop because only the leader node can do resharding, and all the listeners are triggered by the same thread(This maybe another issue.). But if the leader node didn’t fall into the endless loop, resharding will success and we would see that some shards are trigged successfully but those whose instance fell into the endless loop are not, just like the issue.

But we do need to solve the problem of server nodes becoming more and more under servers node, so before delete the server node, we sleep some time longer than sessionTimeout to ensure that all the alive instances have finished JobCrashedJobListener to avoid "Curator-SafeNotifyService-0" thread falling into the endless loop.

And I’m not sure if we can call addListener(listener, executor) instead of addListener(listener) to register listeners in JobNodeStorage’s addDataListener method:

public void addDataListener(final CuratorCacheListener listener) {

CuratorCache cache = (CuratorCache) regCenter.getRawCache("/" + jobName);

**//Maybe we can call addListener(listener, executor) instead of addListener(listener) at here?

cache.listenable().addListener(listener);**

}

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: notifications-unsubscribe@shardingsphere.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [shardingsphere-elasticjob] RedRed228 edited a comment on issue #2038: OneOffJob cannot be triggered on some instances occasionally

Posted by GitBox <gi...@apache.org>.

RedRed228 edited a comment on issue #2038:

URL: https://github.com/apache/shardingsphere-elasticjob/issues/2038#issuecomment-1013025677

The issue sometimes looks like: when we triggered the job, no shard was triggered at all.

I didn’t get the dump file but checked the zookeeper node directly when the problem occurred. From the zookeeper data I saw the resharding necessary flag was set and the leader was elected, but the leader didn’t do resharding.

The leader instance has many debug logs:

The ip server node is a server that have been deleted by us. We deleted the node and this is the cause of the issue, I will discuss of the reason why we delete the server node later.

The follower instances have many debug logs:

With the debug logs, we can infer that the leader didn’t do resharding. So I dumped the leader’s stack:

I found that the "Curator-SafeNotifyService-0" thread always stuck here, falling into endless loop at ServerService.isEnableServer():

public boolean isEnableServer(final String ip) {

String serverStatus = jobNodeStorage.getJobNodeData(serverNode.getServerNode(ip));

** //Endless loop happend!

while (Strings.isNullOrEmpty(serverStatus)) {

BlockUtils.waitingShortTime();

serverStatus = jobNodeStorage.getJobNodeData(serverNode.getServerNode(ip));

}**

return !ServerStatus.DISABLED.name().equals(serverStatus);

}

Reason found:

It's because our application was runing on cloud-native architechture, and we found that the servers node's children become more and more with the container(pod) being destroyed and created again and again. So I registered a shutdownHook when the container is created, which would remove the instance's server node under servers node from zookeeper when the container is destroyed by calling JobOperateAPI's remove method.

It caused this issue because when container was destroyed, the instance gone down and JobCrashedJobListener will be triggered, and the listener will fall into the endless loop as mentioned above if the instance’s server node was deleted.

All shards would not be triggered if the leader node‘s "Curator-SafeNotifyService-0" thread fall into the endless loop because only the leader node can do resharding, and all the listeners are triggered by the same thread(This maybe another issue.). But if the leader node didn’t fall into the endless loop, resharding will success and we would see that some shards are trigged successfully but those whose instance fell into the endless loop are not, just like the issue.

But we do need to solve the problem of server nodes becoming more and more under servers node, so before delete the server node, we sleep some time longer than sessionTimeout to ensure that all the alive instances have finished JobCrashedJobListener to avoid "Curator-SafeNotifyService-0" thread falling into the endless loop.

And I’m not sure if we can call addListener(listener, executor) instead of addListener(listener) to register listeners in JobNodeStorage’s addDataListener method:

public void addDataListener(final CuratorCacheListener listener) {

CuratorCache cache = (CuratorCache) regCenter.getRawCache("/" + jobName);

**//Maybe we can call addListener(listener, executor) instead of addListener(listener) at here?

cache.listenable().addListener(listener);**

}

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: notifications-unsubscribe@shardingsphere.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org