You are viewing a plain text version of this content. The canonical link for it is here.

Posted to reviews@spark.apache.org by phegstrom <gi...@git.apache.org> on 2018/08/24 20:55:12 UTC

[GitHub] spark pull request #22227: [SPARK-25202] [Core] Implements split with limit ...

GitHub user phegstrom opened a pull request:

https://github.com/apache/spark/pull/22227

[SPARK-25202] [Core] Implements split with limit sql function

## What changes were proposed in this pull request?

Adds support for the setting limit in the sql split function

## How was this patch tested?

1. Updated unit tests

2. Tested using Scala spark shell

Please review http://spark.apache.org/contributing.html before opening a pull request.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/phegstrom/spark master

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/22227.patch

To close this pull request, make a commit to your master/trunk branch

with (at least) the following in the commit message:

This closes #22227

----

commit 15362be4764d2b33757488efe38667cc762246ad

Author: Parker Hegstrom <ph...@...>

Date: 2018-08-24T19:19:58Z

implement split with limit

commit ceb3f41238c8731606164cea5c45a0b87bb5d6f2

Author: Parker Hegstrom <ph...@...>

Date: 2018-08-24T20:26:42Z

linting

----

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r214561691

--- Diff: sql/core/src/test/resources/sql-tests/inputs/string-functions.sql ---

@@ -46,4 +46,10 @@ FROM (

encode(string(id + 2), 'utf-8') col3,

encode(string(id + 3), 'utf-8') col4

FROM range(10)

-)

+);

+

+-- split function

+select split('aa1cc2ee3', '[1-9]+');

--- End diff --

I would use upper cases here for keywords.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/22227

**[Test build #96112 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/96112/testReport)** for PR 22227 at commit [`5c8f487`](https://github.com/apache/spark/commit/5c8f48715748bdeda703761fba6a4d1828a19985).

* This patch passes all tests.

* This patch merges cleanly.

* This patch adds no public classes.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22227

Test FAILed.

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/95476/

Test FAILed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/22227

**[Test build #96015 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/96015/testReport)** for PR 22227 at commit [`69d2190`](https://github.com/apache/spark/commit/69d219018c9b2a7f3fb7dd716619f067aec0d2dc).

* This patch **fails Spark unit tests**.

* This patch merges cleanly.

* This patch adds no public classes.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22227

Test PASSed.

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/96826/

Test PASSed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by phegstrom <gi...@git.apache.org>.

Github user phegstrom commented on the issue:

https://github.com/apache/spark/pull/22227

hmm @HyukjinKwon any ideas what's happening?

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/22227

**[Test build #95563 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/95563/testReport)** for PR 22227 at commit [`d80b1a1`](https://github.com/apache/spark/commit/d80b1a15ed8941bad78df2c5f7168a4196d27be4).

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r217563366

--- Diff: python/pyspark/sql/functions.py ---

@@ -1671,18 +1671,32 @@ def repeat(col, n):

@since(1.5)

@ignore_unicode_prefix

-def split(str, pattern):

+def split(str, pattern, limit=-1):

"""

- Splits str around pattern (pattern is a regular expression).

+ Splits str around matches of the given pattern.

- .. note:: pattern is a string represent the regular expression.

+ :param str: a string expression to split

+ :param pattern: a string representing a regular expression. The regex string should be

+ a Java regular expression.

--- End diff --

Shall we make it four-spaced.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by maropu <gi...@git.apache.org>.

Github user maropu commented on the issue:

https://github.com/apache/spark/pull/22227

LGTM except for the R/python parts (I'm not familiar with these parts and I'll leave them to @felixcheung and @HyukjinKwon).

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on the issue:

https://github.com/apache/spark/pull/22227

Seems fine otherwise.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by phegstrom <gi...@git.apache.org>.

Github user phegstrom commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r213063991

--- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/regexpExpressions.scala ---

@@ -232,30 +232,41 @@ case class RLike(left: Expression, right: Expression) extends StringRegexExpress

* Splits str around pat (pattern is a regular expression).

*/

@ExpressionDescription(

- usage = "_FUNC_(str, regex) - Splits `str` around occurrences that match `regex`.",

+ usage = "_FUNC_(str, regex, limit) - Splits `str` around occurrences that match `regex`." +

+ "The `limit` parameter controls the number of times the pattern is applied and " +

+ "therefore affects the length of the resulting array. If the limit n is " +

+ "greater than zero then the pattern will be applied at most n - 1 times, " +

+ "the array's length will be no greater than n, and the array's last entry " +

+ "will contain all input beyond the last matched delimiter. If n is " +

+ "non-positive then the pattern will be applied as many times as " +

+ "possible and the array can have any length. If n is zero then the " +

+ "pattern will be applied as many times as possible, the array can " +

+ "have any length, and trailing empty strings will be discarded.",

examples = """

Examples:

- > SELECT _FUNC_('oneAtwoBthreeC', '[ABC]');

+ > SELECT _FUNC_('oneAtwoBthreeC', '[ABC]', -1);

["one","two","three",""]

+| > SELECT _FUNC_('oneAtwoBthreeC', '[ABC]', 2);

+ | ["one","twoBthreeC"]

""")

-case class StringSplit(str: Expression, pattern: Expression)

- extends BinaryExpression with ImplicitCastInputTypes {

+case class StringSplit(str: Expression, pattern: Expression, limit: Expression)

--- End diff --

^ ignore this! found it @maropu

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by maropu <gi...@git.apache.org>.

Github user maropu commented on the issue:

https://github.com/apache/spark/pull/22227

You need to handle that in both codegen(doGenCode) and interpreter(nullSafeEval) path. Also, can you add tests to check if they have the same behaviour in the limit=0/limit=-1 cases? You'd be better to explicitly put comments in a proper position about the decision.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22227

Merged build finished. Test FAILed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22227

Merged build finished. Test FAILed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by ueshin <gi...@git.apache.org>.

Github user ueshin commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r214497013

--- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/regexpExpressions.scala ---

@@ -229,36 +229,74 @@ case class RLike(left: Expression, right: Expression) extends StringRegexExpress

/**

- * Splits str around pat (pattern is a regular expression).

+ * Splits str around matches of the given regex.

*/

@ExpressionDescription(

- usage = "_FUNC_(str, regex) - Splits `str` around occurrences that match `regex`.",

+ usage = "_FUNC_(str, regex, limit) - Splits `str` around occurrences that match `regex`" +

+ " and returns an array of at most `limit`",

+ arguments = """

+ Arguments:

+ * str - a string expression to split.

+ * regex - a string representing a regular expression. The regex string should be a

+ Java regular expression.

+ * limit - an integer expression which controls the number of times the regex is applied.

+

+ limit > 0: The resulting array's length will not be more than `limit`,

+ and the resulting array's last entry will contain all input

+ beyond the last matched regex.

+ limit <= 0: `regex` will be applied as many times as possible, and

+ the resulting array can be of any size.

+ """,

examples = """

Examples:

> SELECT _FUNC_('oneAtwoBthreeC', '[ABC]');

["one","two","three",""]

+ > SELECT _FUNC_('oneAtwoBthreeC', '[ABC]', -1);

+ ["one","two","three",""]

+ > SELECT _FUNC_('oneAtwoBthreeC', '[ABC]', 2);

+ ["one","twoBthreeC"]

""")

-case class StringSplit(str: Expression, pattern: Expression)

- extends BinaryExpression with ImplicitCastInputTypes {

+case class StringSplit(str: Expression, regex: Expression, limit: Expression)

+ extends TernaryExpression with ImplicitCastInputTypes {

- override def left: Expression = str

- override def right: Expression = pattern

override def dataType: DataType = ArrayType(StringType)

- override def inputTypes: Seq[DataType] = Seq(StringType, StringType)

+ override def inputTypes: Seq[DataType] = Seq(StringType, StringType, IntegerType)

+ override def children: Seq[Expression] = str :: regex :: limit :: Nil

+

+ def this(exp: Expression, regex: Expression) = this(exp, regex, Literal(-1));

- override def nullSafeEval(string: Any, regex: Any): Any = {

- val strings = string.asInstanceOf[UTF8String].split(regex.asInstanceOf[UTF8String], -1)

+ override def nullSafeEval(string: Any, regex: Any, limit: Any): Any = {

+ val strings = string.asInstanceOf[UTF8String].split(

+ regex.asInstanceOf[UTF8String], maybeFallbackLimitValue(limit.asInstanceOf[Int]))

new GenericArrayData(strings.asInstanceOf[Array[Any]])

}

override def doGenCode(ctx: CodegenContext, ev: ExprCode): ExprCode = {

val arrayClass = classOf[GenericArrayData].getName

- nullSafeCodeGen(ctx, ev, (str, pattern) =>

+ nullSafeCodeGen(ctx, ev, (str, regex, limit) => {

// Array in java is covariant, so we don't need to cast UTF8String[] to Object[].

- s"""${ev.value} = new $arrayClass($str.split($pattern, -1));""")

+ s"""${ev.value} = new $arrayClass($str.split(

+ $regex,${handleCodeGenLimitFallback(limit)}));""".stripMargin

+ })

}

override def prettyName: String = "split"

+

+ /**

+ * Java String's split method supports "ignore empty string" behavior when the limit is 0.

+ * To avoid this, we fall back to -1 when the limit is 0. Otherwise, this is a noop.

+ */

+ def maybeFallbackLimitValue(limit: Int): Int = {

--- End diff --

+1, and please add `limit = 0` case in `UTF8StringSuite`.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22227

Test FAILed.

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/95494/

Test FAILed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r216941249

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/functions.scala ---

@@ -2546,15 +2546,51 @@ object functions {

def soundex(e: Column): Column = withExpr { SoundEx(e.expr) }

/**

- * Splits str around pattern (pattern is a regular expression).

+ * Splits str around matches of the given regex.

*

- * @note Pattern is a string representation of the regular expression.

+ * @param str a string expression to split

+ * @param regex a string representing a regular expression. The regex string should be

+ * a Java regular expression.

*

* @group string_funcs

* @since 1.5.0

*/

- def split(str: Column, pattern: String): Column = withExpr {

- StringSplit(str.expr, lit(pattern).expr)

+ def split(str: Column, regex: String): Column = withExpr {

+ StringSplit(str.expr, Literal(regex), Literal(-1))

+ }

+

+ /**

+ * Splits str around matches of the given regex.

+ *

+ * @param str a string expression to split

+ * @param regex a string representing a regular expression. The regex string should be

+ * a Java regular expression.

+ * @param limit an integer expression which controls the number of times the regex is applied.

+ * <ul>

+ * <li>limit greater than 0

+ * <ul>

+ * <li>

+ * The resulting array's length will not be more than limit,

+ * and the resulting array's last entry will contain all input

+ * beyond the last matched regex.

+ * </li>

+ * </ul>

+ * </li>

+ * <li>limit less than or equal to 0

+ * <ul>

+ * <li>

+ * `regex` will be applied as many times as possible,

+ * and the resulting array can be of any size.

+ * </li>

+ * </ul>

+ * </li>

+ * </ul>

--- End diff --

I think you can just:

```

* <ul>

* <li>limit greater than 0: The resulting array's length will not be more than limit,

* and the resulting array's last entry will contain all input

* beyond the last matched regex.</li>

* <li>limit less than or equal to 0: `regex` will be applied as many times as possible,

* and the resulting array can be of any size.</li>

* </ul>

```

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on the issue:

https://github.com/apache/spark/pull/22227

retest this please

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r214245829

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/functions.scala ---

@@ -2546,15 +2546,37 @@ object functions {

def soundex(e: Column): Column = withExpr { SoundEx(e.expr) }

/**

- * Splits str around pattern (pattern is a regular expression).

+ * Splits str around matches of the given regex.

*

- * @note Pattern is a string representation of the regular expression.

+ * @param str a string expression to split

+ * @param regex a string representing a regular expression. The regex string should be

+ * a Java regular expression.

*

* @group string_funcs

* @since 1.5.0

*/

- def split(str: Column, pattern: String): Column = withExpr {

- StringSplit(str.expr, lit(pattern).expr)

+ def split(str: Column, regex: String): Column = withExpr {

+ StringSplit(str.expr, Literal(regex), Literal(-1))

+ }

+

+ /**

+ * Splits str around matches of the given regex.

+ *

+ * @param str a string expression to split

+ * @param regex a string representing a regular expression. The regex string should be

+ * a Java regular expression.

+ * @param limit an integer expression which controls the number of times the regex is applied.

+ * limit greater than 0: The resulting array's length will not be more than `limit`,

+ * and the resulting array's last entry will contain all input beyond

+ * the last matched regex.

+ * limit less than or equal to 0: `regex` will be applied as many times as possible, and

+ * the resulting array can be of any size.

--- End diff --

Indentation here looks a bit odd and looks inconsistent at least. Can you double check Scaladoc and format this correctly?

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r217563726

--- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/regexpExpressions.scala ---

@@ -229,33 +229,53 @@ case class RLike(left: Expression, right: Expression) extends StringRegexExpress

/**

- * Splits str around pat (pattern is a regular expression).

+ * Splits str around matches of the given regex.

*/

@ExpressionDescription(

- usage = "_FUNC_(str, regex) - Splits `str` around occurrences that match `regex`.",

+ usage = "_FUNC_(str, regex, limit) - Splits `str` around occurrences that match `regex`" +

+ " and returns an array with a length of at most `limit`",

+ arguments = """

+ Arguments:

+ * str - a string expression to split.

+ * regex - a string representing a regular expression. The regex string should be a

+ Java regular expression.

+ * limit - an integer expression which controls the number of times the regex is applied.

+ * limit > 0: The resulting array's length will not be more than `limit`,

+ and the resulting array's last entry will contain all input

+ beyond the last matched regex.

--- End diff --

indentation:

```

* limit > 0: The resulting array's length will not be more than `limit`,

and the resulting array's last entry will contain all input

beyond the last matched regex.

```

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22227

Merged build finished. Test PASSed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/22227

**[Test build #96966 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/96966/testReport)** for PR 22227 at commit [`34ba74f`](https://github.com/apache/spark/commit/34ba74f79aad2a0e2fe9e0d6f6110a10a51c8108).

* This patch **fails due to an unknown error code, -9**.

* This patch merges cleanly.

* This patch adds no public classes.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r214562121

--- Diff: python/pyspark/sql/functions.py ---

@@ -1669,20 +1669,33 @@ def repeat(col, n):

return Column(sc._jvm.functions.repeat(_to_java_column(col), n))

-@since(1.5)

+@since(2.4)

@ignore_unicode_prefix

-def split(str, pattern):

+def split(str, pattern, limit=-1):

"""

- Splits str around pattern (pattern is a regular expression).

+ Splits str around matches of the given pattern.

+

+ :param str: a string expression to split

+ :param pattern: a string representing a regular expression. The regex string should be

+ a Java regular expression.

+ :param limit: an integer expression which controls the number of times the pattern is applied.

- .. note:: pattern is a string represent the regular expression.

+ * ``limit > 0``: The resulting array's length will not be more than `limit`, and the

+ resulting array's last entry will contain all input beyond the last

+ matched pattern.

+ * ``limit <= 0``: `pattern` will be applied as many times as possible, and the resulting

+ array can be of any size.

--- End diff --

Indentation:

```diff

- * ``limit > 0``: The resulting array's length will not be more than `limit`, and the

- resulting array's last entry will contain all input beyond the last

- matched pattern.

- * ``limit <= 0``: `pattern` will be applied as many times as possible, and the resulting

- array can be of any size.

+ * ``limit > 0``: The resulting array's length will not be more than `limit`, and the

+ resulting array's last entry will contain all input beyond the last

+ matched pattern.

+ * ``limit <= 0``: `pattern` will be applied as many times as possible, and the resulting

+ array can be of any size.

```

Did you check the HTML output?

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by phegstrom <gi...@git.apache.org>.

Github user phegstrom commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r221647616

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/functions.scala ---

@@ -2546,15 +2546,39 @@ object functions {

def soundex(e: Column): Column = withExpr { SoundEx(e.expr) }

/**

- * Splits str around pattern (pattern is a regular expression).

+ * Splits str around matches of the given regex.

*

- * @note Pattern is a string representation of the regular expression.

+ * @param str a string expression to split

+ * @param regex a string representing a regular expression. The regex string should be

+ * a Java regular expression.

*

* @group string_funcs

* @since 1.5.0

*/

- def split(str: Column, pattern: String): Column = withExpr {

- StringSplit(str.expr, lit(pattern).expr)

+ def split(str: Column, regex: String): Column = withExpr {

+ StringSplit(str.expr, Literal(regex), Literal(-1))

+ }

+

+ /**

+ * Splits str around matches of the given regex.

+ *

+ * @param str a string expression to split

+ * @param regex a string representing a regular expression. The regex string should be

+ * a Java regular expression.

+ * @param limit an integer expression which controls the number of times the regex is applied.

+ * <ul>

+ * <li>limit greater than 0: The resulting array's length will not be more than limit,

+ * and the resulting array's last entry will contain all input beyond the last

+ * matched regex.</li>

+ * <li>limit less than or equal to 0: `regex` will be applied as many times as

+ * possible, and the resulting array can be of any size.</li>

--- End diff --

ah, I'll look into that

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by viirya <gi...@git.apache.org>.

Github user viirya commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r221645288

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/functions.scala ---

@@ -2546,15 +2546,39 @@ object functions {

def soundex(e: Column): Column = withExpr { SoundEx(e.expr) }

/**

- * Splits str around pattern (pattern is a regular expression).

+ * Splits str around matches of the given regex.

*

- * @note Pattern is a string representation of the regular expression.

+ * @param str a string expression to split

+ * @param regex a string representing a regular expression. The regex string should be

+ * a Java regular expression.

*

* @group string_funcs

* @since 1.5.0

*/

- def split(str: Column, pattern: String): Column = withExpr {

- StringSplit(str.expr, lit(pattern).expr)

+ def split(str: Column, regex: String): Column = withExpr {

+ StringSplit(str.expr, Literal(regex), Literal(-1))

+ }

+

+ /**

+ * Splits str around matches of the given regex.

+ *

+ * @param str a string expression to split

+ * @param regex a string representing a regular expression. The regex string should be

+ * a Java regular expression.

+ * @param limit an integer expression which controls the number of times the regex is applied.

+ * <ul>

+ * <li>limit greater than 0: The resulting array's length will not be more than limit,

+ * and the resulting array's last entry will contain all input beyond the last

+ * matched regex.</li>

+ * <li>limit less than or equal to 0: `regex` will be applied as many times as

+ * possible, and the resulting array can be of any size.</li>

--- End diff --

I mean we may not need ending tag `</li>`.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/22227

**[Test build #95486 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/95486/testReport)** for PR 22227 at commit [`79599eb`](https://github.com/apache/spark/commit/79599ebc26f089737e101b417428ceb6620f802d).

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/22227

**[Test build #95987 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/95987/testReport)** for PR 22227 at commit [`69d2190`](https://github.com/apache/spark/commit/69d219018c9b2a7f3fb7dd716619f067aec0d2dc).

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/22227

**[Test build #96015 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/96015/testReport)** for PR 22227 at commit [`69d2190`](https://github.com/apache/spark/commit/69d219018c9b2a7f3fb7dd716619f067aec0d2dc).

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by felixcheung <gi...@git.apache.org>.

Github user felixcheung commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r219714851

--- Diff: R/pkg/R/functions.R ---

@@ -3404,19 +3404,27 @@ setMethod("collect_set",

#' Equivalent to \code{split} SQL function.

#'

#' @rdname column_string_functions

+#' @param limit determines the length of the returned array.

+#' \itemize{

+#' \item \code{limit > 0}: length of the array will be at most \code{limit}

+#' \item \code{limit <= 0}: the returned array can have any length

+#' }

+#'

#' @aliases split_string split_string,Column-method

#' @examples

#'

#' \dontrun{

#' head(select(df, split_string(df$Sex, "a")))

#' head(select(df, split_string(df$Class, "\\d")))

+#' head(select(df, split_string(df$Class, "\\d", 2)))

#' # This is equivalent to the following SQL expression

#' head(selectExpr(df, "split(Class, '\\\\d')"))}

--- End diff --

good point - also the example should run in the order documented.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22227

Test FAILed.

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/95774/

Test FAILed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by maropu <gi...@git.apache.org>.

Github user maropu commented on the issue:

https://github.com/apache/spark/pull/22227

Also, you need to update split in python and R.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22227

Merged build finished. Test FAILed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by phegstrom <gi...@git.apache.org>.

Github user phegstrom closed the pull request at:

https://github.com/apache/spark/pull/22227

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22227

Merged build finished. Test FAILed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by phegstrom <gi...@git.apache.org>.

Github user phegstrom commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r215764191

--- Diff: python/pyspark/sql/functions.py ---

@@ -1669,20 +1669,33 @@ def repeat(col, n):

return Column(sc._jvm.functions.repeat(_to_java_column(col), n))

-@since(1.5)

+@since(2.4)

@ignore_unicode_prefix

-def split(str, pattern):

+def split(str, pattern, limit=-1):

"""

- Splits str around pattern (pattern is a regular expression).

+ Splits str around matches of the given pattern.

+

+ :param str: a string expression to split

+ :param pattern: a string representing a regular expression. The regex string should be

+ a Java regular expression.

+ :param limit: an integer expression which controls the number of times the pattern is applied.

- .. note:: pattern is a string represent the regular expression.

+ * ``limit > 0``: The resulting array's length will not be more than `limit`, and the

+ resulting array's last entry will contain all input beyond the last

+ matched pattern.

+ * ``limit <= 0``: `pattern` will be applied as many times as possible, and the resulting

+ array can be of any size.

--- End diff --

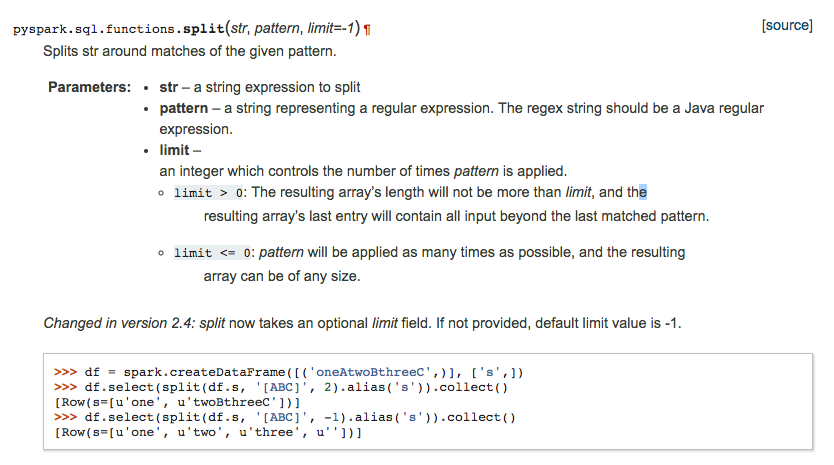

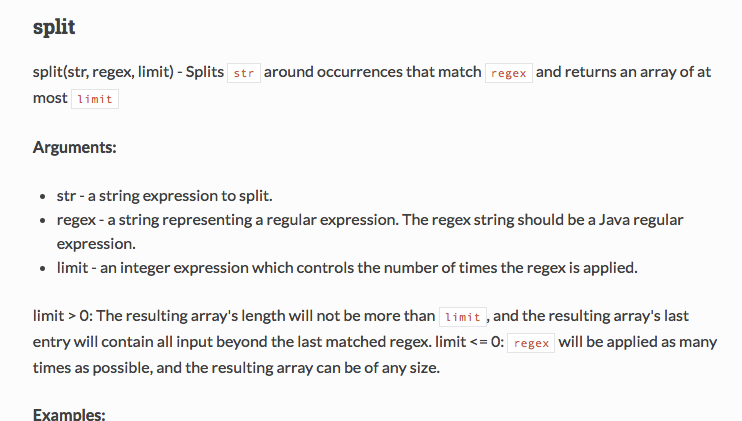

I did, see attached! Let me know what you think (unsure why initial description of limit starts on a new line):

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22227

Merged build finished. Test FAILed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [Core] Implements split with limit sql fun...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22227

Can one of the admins verify this patch?

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on the issue:

https://github.com/apache/spark/pull/22227

Merged to master.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/22227

**[Test build #95774 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/95774/testReport)** for PR 22227 at commit [`64b0afc`](https://github.com/apache/spark/commit/64b0afca802c4557b5a53aa62b7486c3d8d4fe8c).

* This patch **fails SparkR unit tests**.

* This patch merges cleanly.

* This patch adds no public classes.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [Core] Implements split with limit ...

Posted by maropu <gi...@git.apache.org>.

Github user maropu commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r212782784

--- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/regexpExpressions.scala ---

@@ -232,30 +232,41 @@ case class RLike(left: Expression, right: Expression) extends StringRegexExpress

* Splits str around pat (pattern is a regular expression).

*/

@ExpressionDescription(

- usage = "_FUNC_(str, regex) - Splits `str` around occurrences that match `regex`.",

+ usage = "_FUNC_(str, regex, limit) - Splits `str` around occurrences that match `regex`." +

--- End diff --

Can you refine the description and the format along with the others, e.g., `RLike`

https://github.com/apache/spark/blob/ceb3f41238c8731606164cea5c45a0b87bb5d6f2/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/regexpExpressions.scala#L78

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by viirya <gi...@git.apache.org>.

Github user viirya commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r221662234

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/functions.scala ---

@@ -2546,15 +2546,39 @@ object functions {

def soundex(e: Column): Column = withExpr { SoundEx(e.expr) }

/**

- * Splits str around pattern (pattern is a regular expression).

+ * Splits str around matches of the given regex.

*

- * @note Pattern is a string representation of the regular expression.

+ * @param str a string expression to split

+ * @param regex a string representing a regular expression. The regex string should be

+ * a Java regular expression.

*

* @group string_funcs

* @since 1.5.0

*/

- def split(str: Column, pattern: String): Column = withExpr {

- StringSplit(str.expr, lit(pattern).expr)

+ def split(str: Column, regex: String): Column = withExpr {

+ StringSplit(str.expr, Literal(regex), Literal(-1))

+ }

+

+ /**

+ * Splits str around matches of the given regex.

+ *

+ * @param str a string expression to split

+ * @param regex a string representing a regular expression. The regex string should be

+ * a Java regular expression.

+ * @param limit an integer expression which controls the number of times the regex is applied.

+ * <ul>

+ * <li>limit greater than 0: The resulting array's length will not be more than limit,

+ * and the resulting array's last entry will contain all input beyond the last

+ * matched regex.</li>

+ * <li>limit less than or equal to 0: `regex` will be applied as many times as

+ * possible, and the resulting array can be of any size.</li>

--- End diff --

Ok. Then it's fine. Thanks for looking at it.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by phegstrom <gi...@git.apache.org>.

Github user phegstrom commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r217930978

--- Diff: R/pkg/tests/fulltests/test_sparkSQL.R ---

@@ -1803,6 +1803,18 @@ test_that("string operators", {

collect(select(df4, split_string(df4$a, "\\\\")))[1, 1],

list(list("a.b@c.d 1", "b"))

)

+ expect_equal(

+ collect(select(df4, split_string(df4$a, "\\.", 2)))[1, 1],

+ list(list("a", "b@c.d 1\\b"))

+ )

+ expect_equal(

+ collect(select(df4, split_string(df4$a, "b", -2)))[1, 1],

+ list(list("a.", "@c.d 1\\", ""))

+ )

+ expect_equal(

+ collect(select(df4, split_string(df4$a, "b", 0)))[1, 1],

--- End diff --

per @felixcheung's I added back the `limit = 0` case

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22227

Test FAILed.

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/95578/

Test FAILed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by phegstrom <gi...@git.apache.org>.

Github user phegstrom commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r214516644

--- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/regexpExpressions.scala ---

@@ -229,36 +229,74 @@ case class RLike(left: Expression, right: Expression) extends StringRegexExpress

/**

- * Splits str around pat (pattern is a regular expression).

+ * Splits str around matches of the given regex.

*/

@ExpressionDescription(

- usage = "_FUNC_(str, regex) - Splits `str` around occurrences that match `regex`.",

+ usage = "_FUNC_(str, regex, limit) - Splits `str` around occurrences that match `regex`" +

+ " and returns an array of at most `limit`",

+ arguments = """

+ Arguments:

+ * str - a string expression to split.

+ * regex - a string representing a regular expression. The regex string should be a

+ Java regular expression.

+ * limit - an integer expression which controls the number of times the regex is applied.

+

+ limit > 0: The resulting array's length will not be more than `limit`,

+ and the resulting array's last entry will contain all input

+ beyond the last matched regex.

+ limit <= 0: `regex` will be applied as many times as possible, and

+ the resulting array can be of any size.

+ """,

examples = """

Examples:

> SELECT _FUNC_('oneAtwoBthreeC', '[ABC]');

["one","two","three",""]

+ > SELECT _FUNC_('oneAtwoBthreeC', '[ABC]', -1);

+ ["one","two","three",""]

+ > SELECT _FUNC_('oneAtwoBthreeC', '[ABC]', 2);

+ ["one","twoBthreeC"]

""")

-case class StringSplit(str: Expression, pattern: Expression)

- extends BinaryExpression with ImplicitCastInputTypes {

+case class StringSplit(str: Expression, regex: Expression, limit: Expression)

+ extends TernaryExpression with ImplicitCastInputTypes {

- override def left: Expression = str

- override def right: Expression = pattern

override def dataType: DataType = ArrayType(StringType)

- override def inputTypes: Seq[DataType] = Seq(StringType, StringType)

+ override def inputTypes: Seq[DataType] = Seq(StringType, StringType, IntegerType)

+ override def children: Seq[Expression] = str :: regex :: limit :: Nil

+

+ def this(exp: Expression, regex: Expression) = this(exp, regex, Literal(-1));

- override def nullSafeEval(string: Any, regex: Any): Any = {

- val strings = string.asInstanceOf[UTF8String].split(regex.asInstanceOf[UTF8String], -1)

+ override def nullSafeEval(string: Any, regex: Any, limit: Any): Any = {

+ val strings = string.asInstanceOf[UTF8String].split(

+ regex.asInstanceOf[UTF8String], maybeFallbackLimitValue(limit.asInstanceOf[Int]))

new GenericArrayData(strings.asInstanceOf[Array[Any]])

}

override def doGenCode(ctx: CodegenContext, ev: ExprCode): ExprCode = {

val arrayClass = classOf[GenericArrayData].getName

- nullSafeCodeGen(ctx, ev, (str, pattern) =>

+ nullSafeCodeGen(ctx, ev, (str, regex, limit) => {

// Array in java is covariant, so we don't need to cast UTF8String[] to Object[].

- s"""${ev.value} = new $arrayClass($str.split($pattern, -1));""")

+ s"""${ev.value} = new $arrayClass($str.split(

+ $regex,${handleCodeGenLimitFallback(limit)}));""".stripMargin

+ })

}

override def prettyName: String = "split"

+

+ /**

+ * Java String's split method supports "ignore empty string" behavior when the limit is 0.

+ * To avoid this, we fall back to -1 when the limit is 0. Otherwise, this is a noop.

+ */

+ def maybeFallbackLimitValue(limit: Int): Int = {

--- End diff --

done!

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by phegstrom <gi...@git.apache.org>.

Github user phegstrom commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r221652521

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/functions.scala ---

@@ -2546,15 +2546,39 @@ object functions {

def soundex(e: Column): Column = withExpr { SoundEx(e.expr) }

/**

- * Splits str around pattern (pattern is a regular expression).

+ * Splits str around matches of the given regex.

*

- * @note Pattern is a string representation of the regular expression.

+ * @param str a string expression to split

+ * @param regex a string representing a regular expression. The regex string should be

+ * a Java regular expression.

*

* @group string_funcs

* @since 1.5.0

*/

- def split(str: Column, pattern: String): Column = withExpr {

- StringSplit(str.expr, lit(pattern).expr)

+ def split(str: Column, regex: String): Column = withExpr {

+ StringSplit(str.expr, Literal(regex), Literal(-1))

+ }

+

+ /**

+ * Splits str around matches of the given regex.

+ *

+ * @param str a string expression to split

+ * @param regex a string representing a regular expression. The regex string should be

+ * a Java regular expression.

+ * @param limit an integer expression which controls the number of times the regex is applied.

+ * <ul>

+ * <li>limit greater than 0: The resulting array's length will not be more than limit,

+ * and the resulting array's last entry will contain all input beyond the last

+ * matched regex.</li>

+ * <li>limit less than or equal to 0: `regex` will be applied as many times as

+ * possible, and the resulting array can be of any size.</li>

--- End diff --

@viirya throughout this repository, the `</li>` has always been included. For consistency, I think we should just keep it as is

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [Core] Implements split with limit sql fun...

Posted by maropu <gi...@git.apache.org>.

Github user maropu commented on the issue:

https://github.com/apache/spark/pull/22227

not `[CORE]` but `[SQL]` in the title.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/22227

**[Test build #95907 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/95907/testReport)** for PR 22227 at commit [`b12ee88`](https://github.com/apache/spark/commit/b12ee881c1d9025644d075a6124f9e6e465a6378).

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22227

Merged build finished. Test PASSed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/22227

**[Test build #96826 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/96826/testReport)** for PR 22227 at commit [`34ba74f`](https://github.com/apache/spark/commit/34ba74f79aad2a0e2fe9e0d6f6110a10a51c8108).

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on the issue:

https://github.com/apache/spark/pull/22227

retest this please

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by viirya <gi...@git.apache.org>.

Github user viirya commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r219691017

--- Diff: sql/core/src/main/scala/org/apache/spark/sql/functions.scala ---

@@ -2546,15 +2546,39 @@ object functions {

def soundex(e: Column): Column = withExpr { SoundEx(e.expr) }

/**

- * Splits str around pattern (pattern is a regular expression).

+ * Splits str around matches of the given regex.

*

- * @note Pattern is a string representation of the regular expression.

+ * @param str a string expression to split

+ * @param regex a string representing a regular expression. The regex string should be

+ * a Java regular expression.

*

* @group string_funcs

* @since 1.5.0

*/

- def split(str: Column, pattern: String): Column = withExpr {

- StringSplit(str.expr, lit(pattern).expr)

+ def split(str: Column, regex: String): Column = withExpr {

+ StringSplit(str.expr, Literal(regex), Literal(-1))

+ }

+

+ /**

+ * Splits str around matches of the given regex.

+ *

+ * @param str a string expression to split

+ * @param regex a string representing a regular expression. The regex string should be

+ * a Java regular expression.

+ * @param limit an integer expression which controls the number of times the regex is applied.

+ * <ul>

+ * <li>limit greater than 0: The resulting array's length will not be more than limit,

+ * and the resulting array's last entry will contain all input beyond the last

+ * matched regex.</li>

+ * <li>limit less than or equal to 0: `regex` will be applied as many times as

+ * possible, and the resulting array can be of any size.</li>

--- End diff --

I think we don't need `</li>`.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by maropu <gi...@git.apache.org>.

Github user maropu commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r213539767

--- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/regexpExpressions.scala ---

@@ -229,33 +229,59 @@ case class RLike(left: Expression, right: Expression) extends StringRegexExpress

/**

- * Splits str around pat (pattern is a regular expression).

+ * Splits str around pattern (pattern is a regular expression).

*/

@ExpressionDescription(

- usage = "_FUNC_(str, regex) - Splits `str` around occurrences that match `regex`.",

+ usage = "_FUNC_(str, regex, limit) - Splits `str` around occurrences that match `regex`" +

+ " and returns an array of at most `limit`",

+ arguments = """

+ Arguments:

+ * str - a string expression to split.

+ * pattern - a string representing a regular expression. The pattern string should be a

+ Java regular expression.

+ * limit - an integer expression which controls the number of times the pattern is applied.

+

+ limit > 0:

+ The resulting array's length will not be more than `limit`, and the resulting array's

+ last entry will contain all input beyond the last matched pattern.

+

+ limit < 0:

+ `pattern` will be applied as many times as possible, and the resulting

+ array can be of any size.

+

+ limit = 0:

+ `pattern` will be applied as many times as possible, the resulting array can

+ be of any size, and trailing empty strings will be discarded.

+ """,

examples = """

Examples:

> SELECT _FUNC_('oneAtwoBthreeC', '[ABC]');

["one","two","three",""]

+| > SELECT _FUNC_('oneAtwoBthreeC', '[ABC]', 0);

--- End diff --

drop `|`

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/22227

**[Test build #96112 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/96112/testReport)** for PR 22227 at commit [`5c8f487`](https://github.com/apache/spark/commit/5c8f48715748bdeda703761fba6a4d1828a19985).

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22227

Can one of the admins verify this patch?

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by phegstrom <gi...@git.apache.org>.

Github user phegstrom commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r216247365

--- Diff: R/pkg/R/functions.R ---

@@ -3404,19 +3404,24 @@ setMethod("collect_set",

#' Equivalent to \code{split} SQL function.

#'

#' @rdname column_string_functions

+#' @param limit determines the size of the returned array. If `limit` is positive,

+#' size of the array will be at most `limit`. If `limit` is negative, the

--- End diff --

will do

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by SparkQA <gi...@git.apache.org>.

Github user SparkQA commented on the issue:

https://github.com/apache/spark/pull/22227

**[Test build #95872 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/95872/testReport)** for PR 22227 at commit [`b12ee88`](https://github.com/apache/spark/commit/b12ee881c1d9025644d075a6124f9e6e465a6378).

* This patch **fails Spark unit tests**.

* This patch merges cleanly.

* This patch adds no public classes.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22227

Merged build finished. Test PASSed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on the issue:

https://github.com/apache/spark/pull/22227

ok to test

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on the issue:

https://github.com/apache/spark/pull/22227

Seems okay

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by phegstrom <gi...@git.apache.org>.

Github user phegstrom commented on the issue:

https://github.com/apache/spark/pull/22227

hey @HyukjinKwon, I'm seeing two tests fail in the most recent run, but traces don't seem related to any of my changes -- not to mention the test name is `(It is not a test it is a sbt.testing.SuiteSelector)`. Is this something I need to worry about?

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by phegstrom <gi...@git.apache.org>.

Github user phegstrom commented on the issue:

https://github.com/apache/spark/pull/22227

@HyukjinKwon are things passing?

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [Core] Implements split with limit ...

Posted by phegstrom <gi...@git.apache.org>.

Github user phegstrom commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r212986332

--- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/regexpExpressions.scala ---

@@ -232,30 +232,41 @@ case class RLike(left: Expression, right: Expression) extends StringRegexExpress

* Splits str around pat (pattern is a regular expression).

*/

@ExpressionDescription(

- usage = "_FUNC_(str, regex) - Splits `str` around occurrences that match `regex`.",

+ usage = "_FUNC_(str, regex, limit) - Splits `str` around occurrences that match `regex`." +

+ "The `limit` parameter controls the number of times the pattern is applied and " +

+ "therefore affects the length of the resulting array. If the limit n is " +

+ "greater than zero then the pattern will be applied at most n - 1 times, " +

+ "the array's length will be no greater than n, and the array's last entry " +

+ "will contain all input beyond the last matched delimiter. If n is " +

+ "non-positive then the pattern will be applied as many times as " +

+ "possible and the array can have any length. If n is zero then the " +

+ "pattern will be applied as many times as possible, the array can " +

+ "have any length, and trailing empty strings will be discarded.",

--- End diff --

@viirya i'll take a crack at it -- the usage is a bit funky given the different behavior based on what `limit` is, I aired on the side of verbose

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on the issue:

https://github.com/apache/spark/pull/22227

retest this please

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [Core] Implements split with limit sql fun...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22227

Can one of the admins verify this patch?

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22227: [SPARK-25202] [SQL] Implements split with limit sql func...

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22227

Test FAILed.

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/95311/

Test FAILed.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by phegstrom <gi...@git.apache.org>.

Github user phegstrom commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r213061265

--- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/regexpExpressions.scala ---

@@ -232,30 +232,41 @@ case class RLike(left: Expression, right: Expression) extends StringRegexExpress

* Splits str around pat (pattern is a regular expression).

*/

@ExpressionDescription(

- usage = "_FUNC_(str, regex) - Splits `str` around occurrences that match `regex`.",

+ usage = "_FUNC_(str, regex, limit) - Splits `str` around occurrences that match `regex`." +

+ "The `limit` parameter controls the number of times the pattern is applied and " +

+ "therefore affects the length of the resulting array. If the limit n is " +

+ "greater than zero then the pattern will be applied at most n - 1 times, " +

+ "the array's length will be no greater than n, and the array's last entry " +

+ "will contain all input beyond the last matched delimiter. If n is " +

+ "non-positive then the pattern will be applied as many times as " +

+ "possible and the array can have any length. If n is zero then the " +

+ "pattern will be applied as many times as possible, the array can " +

+ "have any length, and trailing empty strings will be discarded.",

examples = """

Examples:

- > SELECT _FUNC_('oneAtwoBthreeC', '[ABC]');

+ > SELECT _FUNC_('oneAtwoBthreeC', '[ABC]', -1);

["one","two","three",""]

+| > SELECT _FUNC_('oneAtwoBthreeC', '[ABC]', 2);

+ | ["one","twoBthreeC"]

""")

-case class StringSplit(str: Expression, pattern: Expression)

- extends BinaryExpression with ImplicitCastInputTypes {

+case class StringSplit(str: Expression, pattern: Expression, limit: Expression)

--- End diff --

@maropu which tests use `string-functions.sql`? would like to add tests here but not sure how to explicitly kick off the test as there are no `*Suites` which use this file it seems.

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by maropu <gi...@git.apache.org>.

Github user maropu commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r213539793

--- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/regexpExpressions.scala ---

@@ -229,33 +229,59 @@ case class RLike(left: Expression, right: Expression) extends StringRegexExpress

/**

- * Splits str around pat (pattern is a regular expression).

+ * Splits str around pattern (pattern is a regular expression).

*/

@ExpressionDescription(

- usage = "_FUNC_(str, regex) - Splits `str` around occurrences that match `regex`.",

+ usage = "_FUNC_(str, regex, limit) - Splits `str` around occurrences that match `regex`" +

+ " and returns an array of at most `limit`",

+ arguments = """

+ Arguments:

+ * str - a string expression to split.

+ * pattern - a string representing a regular expression. The pattern string should be a

+ Java regular expression.

+ * limit - an integer expression which controls the number of times the pattern is applied.

+

+ limit > 0:

+ The resulting array's length will not be more than `limit`, and the resulting array's

+ last entry will contain all input beyond the last matched pattern.

+

+ limit < 0:

+ `pattern` will be applied as many times as possible, and the resulting

+ array can be of any size.

+

+ limit = 0:

+ `pattern` will be applied as many times as possible, the resulting array can

+ be of any size, and trailing empty strings will be discarded.

+ """,

examples = """

Examples:

> SELECT _FUNC_('oneAtwoBthreeC', '[ABC]');

["one","two","three",""]

+| > SELECT _FUNC_('oneAtwoBthreeC', '[ABC]', 0);

+ ["one","two","three"]

+| > SELECT _FUNC_('oneAtwoBthreeC', '[ABC]', 2);

--- End diff --

ditto

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark pull request #22227: [SPARK-25202] [SQL] Implements split with limit s...

Posted by maropu <gi...@git.apache.org>.

Github user maropu commented on a diff in the pull request:

https://github.com/apache/spark/pull/22227#discussion_r213538010

--- Diff: sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/regexpExpressions.scala ---

@@ -229,33 +229,59 @@ case class RLike(left: Expression, right: Expression) extends StringRegexExpress

/**

- * Splits str around pat (pattern is a regular expression).

+ * Splits str around pattern (pattern is a regular expression).

*/

@ExpressionDescription(

- usage = "_FUNC_(str, regex) - Splits `str` around occurrences that match `regex`.",

+ usage = "_FUNC_(str, regex, limit) - Splits `str` around occurrences that match `regex`" +

+ " and returns an array of at most `limit`",

+ arguments = """

+ Arguments:

+ * str - a string expression to split.

+ * pattern - a string representing a regular expression. The pattern string should be a

+ Java regular expression.

+ * limit - an integer expression which controls the number of times the pattern is applied.

+

+ limit > 0:

+ The resulting array's length will not be more than `limit`, and the resulting array's

+ last entry will contain all input beyond the last matched pattern.

+

+ limit < 0:

+ `pattern` will be applied as many times as possible, and the resulting

+ array can be of any size.

+

+ limit = 0:

+ `pattern` will be applied as many times as possible, the resulting array can

+ be of any size, and trailing empty strings will be discarded.

+ """,

examples = """

Examples: