Posted to dev@liminal.apache.org by jb...@apache.org on 2020/07/20 06:24:40 UTC

[incubator-liminal] branch master created (now 88174a6)

This is an automated email from the ASF dual-hosted git repository.

jbonofre pushed a change to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-liminal.git.

at 88174a6 Add NOTICE and DISCLAIMER

This branch includes the following new commits:

new 87da6bd first commit

new 2887b19 First code commit

new aa63e16 rainbow_dags dag creation + python task

new 9e646df Tasks stubs

new 1c204b6 Add 'build' package

new c74af6c Add run_tests script

new 79c9031 Add LICENSE

new a32d4eb Add build module

new 45ceeac Fix requirements.txt

new 9292bb8 Refactor PythonTask

new 0817e97 Refactor build

new eed1cd0 Elaborate build tests

new f4bdfac Add cli

new ae36a5e Remove TODOs with GitHub issues

new 77139de Fix rainbow_dags python task

new 326a042 Change pythontask config to input/output enhancement

new 14716fc Add python_server service

new 38152de Class registries

new da4022c Fix import bug

new 2ef72ec Performance improvement for class_util

new c1d77f2 Make paths in tests relative to script location

new 7596566 Add job_start and job_end tasks

new 93d9fa2 Update README

new 2d34195 Update README with yml example

new 779e86e Format yaml in README

new 2ce98f4 Add example repository structure to README

new 44d9c3d Update README fix task description

new e1767df Missing requirements

new be9e513 TODO

new c21bfdf Fix missing tasks/dags bug

new 253a15a Add pipeline configuration as default arguments

new 8df40b2 Use user pip conf in docker build

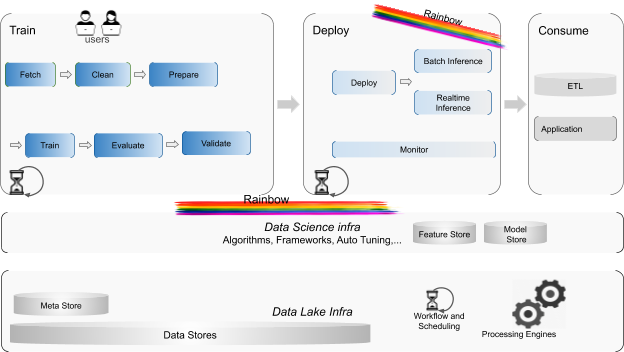

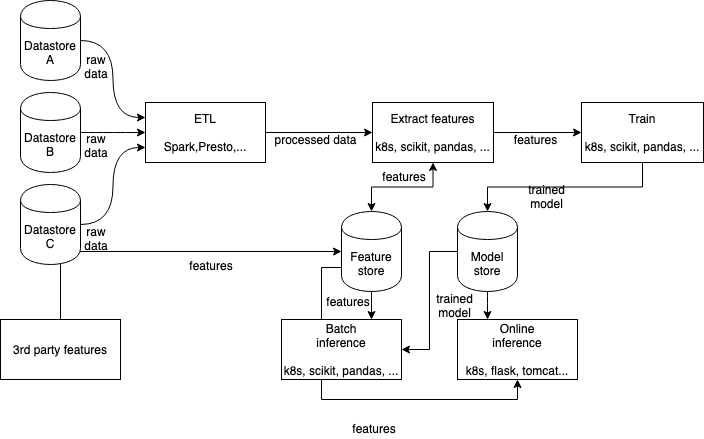

new 54fb987 Add architecture diagrams

new 3245e47 Add short architecture description

new d80d9c0 Upgrade the quality of the diagram

new 269459e Upgrade the quality of the diagram

new 6da38b8 Rainbow local mode

new 07aad66 Local mode improvements

new 324f717 perform pip upgrade when building python images

new fac89af fix jobEndStatus tasks state check

new 0c8f8dc Rename project to Liminal

new 249aa74 fix split list function

new 88174a6 Add NOTICE and DISCLAIMER

The 43 revisions listed above as "new" are entirely new to this
repository and will be described in separate emails. The revisions
listed as "add" were already present in the repository and have only
been added to this reference.

[incubator-liminal] 43/43: Add NOTICE and DISCLAIMER

Posted by jb...@apache.org.

jbonofre pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-liminal.git

commit 88174a6fe519f9a6052f6e5d366a37a88a915ee4

Author: jbonofre <jb...@apache.org>

AuthorDate: Mon Jul 20 08:22:45 2020 +0200

Add NOTICE and DISCLAIMER

---

README.md | 4 ++--

1 file changed, 2 insertions(+), 2 deletions(-)

diff --git a/README.md b/README.md

index 3623e76..8d1fd47 100644

--- a/README.md

+++ b/README.md

@@ -17,9 +17,9 @@ specific language governing permissions and limitations

under the License.

-->

-# Liminal

+# Apache Liminal

-Liminal is an end-to-end platform for data engineers & scientists, allowing them to build,

+Apache Liminal is an end-to-end platform for data engineers & scientists, allowing them to build,

train and deploy machine learning models in a robust and agile way.

The platform provides the abstractions and declarative capabilities for

[incubator-liminal] 08/43: Add build module

Posted by jb...@apache.org.

jbonofre pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-liminal.git

commit a32d4eb722511db7b2f29228fae8e08ca5de8e81

Author: aviemzur <av...@gmail.com>

AuthorDate: Thu Mar 12 10:08:43 2020 +0200

Add build module

---

README.md | 4 ++

rainbow/docker/__init__.py | 1 -

rainbow/docker/python/Dockerfile | 19 +++++++

rainbow/docker/{ => python}/__init__.py | 1 -

rainbow/docker/python/python_image.py | 61 ++++++++++++++++++++++

rainbow/{ => runners/airflow}/build/__init__.py | 0

.../rainbow_dags.py => build/build_rainbow.py} | 18 +++----

.../airflow/build/python/container-setup.sh | 9 ++++

.../airflow/build/python/container-teardown.sh | 6 +++

rainbow/runners/airflow/dag/rainbow_dags.py | 10 ++--

rainbow/runners/airflow/model/task.py | 4 +-

.../airflow/tasks/create_cloudformation_stack.py | 2 +-

.../airflow/tasks/delete_cloudformation_stack.py | 2 +-

rainbow/runners/airflow/tasks/job_end.py | 2 +-

rainbow/runners/airflow/tasks/job_start.py | 2 +-

rainbow/runners/airflow/tasks/python.py | 13 +++--

rainbow/runners/airflow/tasks/spark.py | 2 +-

rainbow/runners/airflow/tasks/sql.py | 2 +-

requirements.txt | 3 ++

tests/runners/airflow/dag/test_rainbow_dags.py | 5 ++

.../runners/airflow/tasks/hello_world}/__init__.py | 1 -

.../airflow/tasks/hello_world/hello_world.py | 2 +-

tests/runners/airflow/tasks/test_python.py | 45 +++++++++++++---

23 files changed, 175 insertions(+), 39 deletions(-)

diff --git a/README.md b/README.md

index 7168564..d8b9a23 100644

--- a/README.md

+++ b/README.md

@@ -1 +1,5 @@

# rainbow

+

+```

+ln -s "/Applications/Docker.app/Contents//Resources/bin/docker-credential-desktop" "/usr/local/bin/docker-credential-desktop"

+```

\ No newline at end of file

diff --git a/rainbow/docker/__init__.py b/rainbow/docker/__init__.py

index 8bb1ec2..217e5db 100644

--- a/rainbow/docker/__init__.py

+++ b/rainbow/docker/__init__.py

@@ -15,4 +15,3 @@

# KIND, either express or implied. See the License for the

# specific language governing permissions and limitations

# under the License.

-# TODO: docker

diff --git a/rainbow/docker/python/Dockerfile b/rainbow/docker/python/Dockerfile

new file mode 100644

index 0000000..d4e3ed2

--- /dev/null

+++ b/rainbow/docker/python/Dockerfile

@@ -0,0 +1,19 @@

+# Use an official Python runtime as a parent image

+FROM python:3.7-slim

+

+# Install aptitude build-essential

+#RUN apt-get install -y --reinstall build-essential

+

+# Set the working directory to /app

+WORKDIR /app

+

+# Order of operations is important here for docker's caching & incremental build performance. !

+# Be careful when changing this code. !

+

+# Install any needed packages specified in requirements.txt

+COPY ./requirements.txt /app

+RUN pip install -r requirements.txt

+

+# Copy the current directory contents into the container at /app

+RUN echo "Copying source code.."

+COPY . /app

diff --git a/rainbow/docker/__init__.py b/rainbow/docker/python/__init__.py

similarity index 98%

copy from rainbow/docker/__init__.py

copy to rainbow/docker/python/__init__.py

index 8bb1ec2..217e5db 100644

--- a/rainbow/docker/__init__.py

+++ b/rainbow/docker/python/__init__.py

@@ -15,4 +15,3 @@

# KIND, either express or implied. See the License for the

# specific language governing permissions and limitations

# under the License.

-# TODO: docker

diff --git a/rainbow/docker/python/python_image.py b/rainbow/docker/python/python_image.py

new file mode 100644

index 0000000..d66dfbe

--- /dev/null

+++ b/rainbow/docker/python/python_image.py

@@ -0,0 +1,61 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+import os

+import shutil

+import tempfile

+import docker

+

+

+def build(source_path, tag, extra_files=None):

+ if extra_files is None:

+ extra_files = []

+

+ print(f'Building image {tag}')

+

+ temp_dir = tempfile.mkdtemp()

+ # Delete dir for shutil.copytree to work

+ os.rmdir(temp_dir)

+

+ __copy_source(source_path, temp_dir)

+

+ requirements_file_path = os.path.join(temp_dir, 'requirements.txt')

+ if not os.path.exists(requirements_file_path):

+ with open(requirements_file_path, 'w'):

+ pass

+

+ dockerfile_path = os.path.join(os.path.dirname(__file__), 'Dockerfile')

+

+ for file in extra_files + [dockerfile_path]:

+ __copy_file(file, temp_dir)

+

+ print(temp_dir, os.listdir(temp_dir))

+

+ docker_client = docker.from_env()

+ docker_client.images.build(path=temp_dir, tag=tag)

+

+ docker_client.close()

+

+ shutil.rmtree(temp_dir)

+

+

+def __copy_source(source_path, destination_path):

+ shutil.copytree(source_path, destination_path)

+

+

+def __copy_file(source_file_path, destination_file_path):

+ shutil.copy2(source_file_path, destination_file_path)

diff --git a/rainbow/build/__init__.py b/rainbow/runners/airflow/build/__init__.py

similarity index 100%

rename from rainbow/build/__init__.py

rename to rainbow/runners/airflow/build/__init__.py

diff --git a/rainbow/runners/airflow/dag/rainbow_dags.py b/rainbow/runners/airflow/build/build_rainbow.py

similarity index 86%

copy from rainbow/runners/airflow/dag/rainbow_dags.py

copy to rainbow/runners/airflow/build/build_rainbow.py

index 6bdf66b..222ea5f 100644

--- a/rainbow/runners/airflow/dag/rainbow_dags.py

+++ b/rainbow/runners/airflow/build/build_rainbow.py

@@ -26,7 +26,10 @@ from airflow import DAG

from rainbow.runners.airflow.tasks.python import PythonTask

-def register_dags(path):

+def build_rainbow(path):

+ """

+ TODO: doc for build_rainbow

+ """

files = []

for r, d, f in os.walk(path):

for file in f:

@@ -38,7 +41,7 @@ def register_dags(path):

dags = []

for config_file in files:

- print(f'Registering DAG for file: f{config_file}')

+ print(f'Building artifacts file: f{config_file}')

with open(config_file) as stream:

# TODO: validate config

@@ -64,12 +67,7 @@ def register_dags(path):

task_instance = get_task_class(task_type)(

dag, pipeline['pipeline'], parent if parent else None, task, 'all_success'

)

- parent = task_instance.apply_task_to_dag()

-

- print(f'{parent}{{{task_type}}}')

-

- dags.append(dag)

- return dags

+ parent = task_instance.build()

# TODO: task class registry

@@ -83,6 +81,4 @@ def get_task_class(task_type):

if __name__ == '__main__':

- # TODO: configurable yaml dir

- path = 'tests/runners/airflow/dag/rainbow'

- register_dags(path)

+ register_dags('')

diff --git a/rainbow/runners/airflow/build/python/container-setup.sh b/rainbow/runners/airflow/build/python/container-setup.sh

new file mode 100755

index 0000000..6e8d242

--- /dev/null

+++ b/rainbow/runners/airflow/build/python/container-setup.sh

@@ -0,0 +1,9 @@

+#!/bin/bash

+

+echo """$RAINBOW_INPUT""" > rainbow_input.json

+

+AIRFLOW_RETURN_FILE=/airflow/xcom/return.json

+

+mkdir -p /airflow/xcom/

+

+echo {} > $AIRFLOW_RETURN_FILE

diff --git a/rainbow/runners/airflow/build/python/container-teardown.sh b/rainbow/runners/airflow/build/python/container-teardown.sh

new file mode 100755

index 0000000..1219407

--- /dev/null

+++ b/rainbow/runners/airflow/build/python/container-teardown.sh

@@ -0,0 +1,6 @@

+#!/bin/bash

+

+USER_CONFIG_OUTPUT_FILE=$1

+if [ "$USER_CONFIG_OUTPUT_FILE" != "" ]; then

+ cp ${USER_CONFIG_OUTPUT_FILE} /airflow/xcom/return.json

+fi

diff --git a/rainbow/runners/airflow/dag/rainbow_dags.py b/rainbow/runners/airflow/dag/rainbow_dags.py

index 6bdf66b..c564737 100644

--- a/rainbow/runners/airflow/dag/rainbow_dags.py

+++ b/rainbow/runners/airflow/dag/rainbow_dags.py

@@ -23,10 +23,13 @@ from datetime import datetime

import yaml

from airflow import DAG

-from rainbow.runners.airflow.tasks.python import PythonTask

+from rainbow.runners.airflow.build import build_rainbow

def register_dags(path):

+ """

+ TODO: doc for register_dags

+ """

files = []

for r, d, f in os.walk(path):

for file in f:

@@ -72,10 +75,7 @@ def register_dags(path):

return dags

-# TODO: task class registry

-task_classes = {

- 'python': PythonTask

-}

+task_classes = build_rainbow.task_classes

def get_task_class(task_type):

diff --git a/rainbow/runners/airflow/model/task.py b/rainbow/runners/airflow/model/task.py

index 2650aa1..25656ee 100644

--- a/rainbow/runners/airflow/model/task.py

+++ b/rainbow/runners/airflow/model/task.py

@@ -32,9 +32,9 @@ class Task:

self.config = config

self.trigger_rule = trigger_rule

- def setup(self):

+ def build(self):

"""

- Setup method for task.

+ Build task's artifacts.

"""

raise NotImplementedError()

diff --git a/rainbow/runners/airflow/tasks/create_cloudformation_stack.py b/rainbow/runners/airflow/tasks/create_cloudformation_stack.py

index 9304167..c478dc7 100644

--- a/rainbow/runners/airflow/tasks/create_cloudformation_stack.py

+++ b/rainbow/runners/airflow/tasks/create_cloudformation_stack.py

@@ -27,7 +27,7 @@ class CreateCloudFormationStackTask(task.Task):

def __init__(self, dag, pipeline_name, parent, config, trigger_rule):

super().__init__(dag, pipeline_name, parent, config, trigger_rule)

- def setup(self):

+ def build(self):

pass

def apply_task_to_dag(self):

diff --git a/rainbow/runners/airflow/tasks/delete_cloudformation_stack.py b/rainbow/runners/airflow/tasks/delete_cloudformation_stack.py

index 66d5783..d172284 100644

--- a/rainbow/runners/airflow/tasks/delete_cloudformation_stack.py

+++ b/rainbow/runners/airflow/tasks/delete_cloudformation_stack.py

@@ -27,7 +27,7 @@ class DeleteCloudFormationStackTask(task.Task):

def __init__(self, dag, pipeline_name, parent, config, trigger_rule):

super().__init__(dag, pipeline_name, parent, config, trigger_rule)

- def setup(self):

+ def build(self):

pass

def apply_task_to_dag(self):

diff --git a/rainbow/runners/airflow/tasks/job_end.py b/rainbow/runners/airflow/tasks/job_end.py

index b3244c4..a6c5ef2 100644

--- a/rainbow/runners/airflow/tasks/job_end.py

+++ b/rainbow/runners/airflow/tasks/job_end.py

@@ -27,7 +27,7 @@ class JobEndTask(task.Task):

def __init__(self, dag, pipeline_name, parent, config, trigger_rule):

super().__init__(dag, pipeline_name, parent, config, trigger_rule)

- def setup(self):

+ def build(self):

pass

def apply_task_to_dag(self):

diff --git a/rainbow/runners/airflow/tasks/job_start.py b/rainbow/runners/airflow/tasks/job_start.py

index f794e09..7338363 100644

--- a/rainbow/runners/airflow/tasks/job_start.py

+++ b/rainbow/runners/airflow/tasks/job_start.py

@@ -27,7 +27,7 @@ class JobStartTask(task.Task):

def __init__(self, dag, pipeline_name, parent, config, trigger_rule):

super().__init__(dag, pipeline_name, parent, config, trigger_rule)

- def setup(self):

+ def build(self):

pass

def apply_task_to_dag(self):

diff --git a/rainbow/runners/airflow/tasks/python.py b/rainbow/runners/airflow/tasks/python.py

index 983ce0c..8317854 100644

--- a/rainbow/runners/airflow/tasks/python.py

+++ b/rainbow/runners/airflow/tasks/python.py

@@ -16,10 +16,12 @@

# specific language governing permissions and limitations

# under the License.

import json

+import os

from airflow.models import Variable

from airflow.operators.dummy_operator import DummyOperator

+from rainbow.docker.python import python_image

from rainbow.runners.airflow.model import task

from rainbow.runners.airflow.operators.kubernetes_pod_operator import \

ConfigurableKubernetesPodOperator, \

@@ -45,9 +47,14 @@ class PythonTask(task.Task):

self.config_task_id = self.task_name + '_input'

self.executors = self.__executors()

- def setup(self):

- # TODO: build docker image if needed.

- pass

+ def build(self):

+ if 'source' in self.config:

+ script_dir = os.path.dirname(__file__)

+

+ python_image.build(self.config['source'], self.image, [

+ os.path.join(script_dir, '../build/python/container-setup.sh'),

+ os.path.join(script_dir, '../build/python/container-teardown.sh')

+ ])

def apply_task_to_dag(self):

diff --git a/rainbow/runners/airflow/tasks/spark.py b/rainbow/runners/airflow/tasks/spark.py

index ebae64e..8846f97 100644

--- a/rainbow/runners/airflow/tasks/spark.py

+++ b/rainbow/runners/airflow/tasks/spark.py

@@ -27,7 +27,7 @@ class SparkTask(task.Task):

def __init__(self, dag, pipeline_name, parent, config, trigger_rule):

super().__init__(dag, pipeline_name, parent, config, trigger_rule)

- def setup(self):

+ def build(self):

pass

def apply_task_to_dag(self):

diff --git a/rainbow/runners/airflow/tasks/sql.py b/rainbow/runners/airflow/tasks/sql.py

index 6dfc0f1..23458a9 100644

--- a/rainbow/runners/airflow/tasks/sql.py

+++ b/rainbow/runners/airflow/tasks/sql.py

@@ -27,7 +27,7 @@ class SparkTask(task.Task):

def __init__(self, dag, pipeline_name, parent, config, trigger_rule):

super().__init__(dag, pipeline_name, parent, config, trigger_rule)

- def setup(self):

+ def build(self):

pass

def apply_task_to_dag(self):

diff --git a/requirements.txt b/requirements.txt

new file mode 100644

index 0000000..f22c0a7

--- /dev/null

+++ b/requirements.txt

@@ -0,0 +1,3 @@

+docker:4.2.0

+apache-airflow:1.10.9

+docker-pycreds:0.4.0

diff --git a/tests/runners/airflow/dag/test_rainbow_dags.py b/tests/runners/airflow/dag/test_rainbow_dags.py

index 41bea09..c66e3bc 100644

--- a/tests/runners/airflow/dag/test_rainbow_dags.py

+++ b/tests/runners/airflow/dag/test_rainbow_dags.py

@@ -1,6 +1,7 @@

from unittest import TestCase

from rainbow.runners.airflow.dag import rainbow_dags

+import unittest

class Test(TestCase):

@@ -9,3 +10,7 @@ class Test(TestCase):

self.assertEqual(len(dags), 1)

# TODO: elaborate test

pass

+

+

+if __name__ == '__main__':

+ unittest.main()

diff --git a/rainbow/docker/__init__.py b/tests/runners/airflow/tasks/hello_world/__init__.py

similarity index 98%

copy from rainbow/docker/__init__.py

copy to tests/runners/airflow/tasks/hello_world/__init__.py

index 8bb1ec2..217e5db 100644

--- a/rainbow/docker/__init__.py

+++ b/tests/runners/airflow/tasks/hello_world/__init__.py

@@ -15,4 +15,3 @@

# KIND, either express or implied. See the License for the

# specific language governing permissions and limitations

# under the License.

-# TODO: docker

diff --git a/rainbow/docker/__init__.py b/tests/runners/airflow/tasks/hello_world/hello_world.py

similarity index 97%

copy from rainbow/docker/__init__.py

copy to tests/runners/airflow/tasks/hello_world/hello_world.py

index 8bb1ec2..9b87c05 100644

--- a/rainbow/docker/__init__.py

+++ b/tests/runners/airflow/tasks/hello_world/hello_world.py

@@ -15,4 +15,4 @@

# KIND, either express or implied. See the License for the

# specific language governing permissions and limitations

# under the License.

-# TODO: docker

+print('Hello world!')

diff --git a/tests/runners/airflow/tasks/test_python.py b/tests/runners/airflow/tasks/test_python.py

index 4f5808b..4bbbe9c 100644

--- a/tests/runners/airflow/tasks/test_python.py

+++ b/tests/runners/airflow/tasks/test_python.py

@@ -16,8 +16,11 @@

# specific language governing permissions and limitations

# under the License.

+import unittest

from unittest import TestCase

+import docker

+

from rainbow.runners.airflow.operators.kubernetes_pod_operator import \

ConfigurableKubernetesPodOperator

from rainbow.runners.airflow.tasks import python

@@ -25,20 +28,14 @@ from tests.util import dag_test_utils

class TestPythonTask(TestCase):

+

def test_apply_task_to_dag(self):

# TODO: elaborate tests

dag = dag_test_utils.create_dag()

task_id = 'my_task'

- config = {

- 'task': task_id,

- 'cmd': 'foo bar',

- 'image': 'my_image',

- 'input_type': 'my_input_type',

- 'input_path': 'my_input',

- 'output_path': '/my_output.json'

- }

+ config = self.__create_conf(task_id)

task0 = python.PythonTask(dag, 'my_pipeline', None, config, 'all_success')

task0.apply_task_to_dag()

@@ -48,3 +45,35 @@ class TestPythonTask(TestCase):

self.assertIsInstance(dag_task0, ConfigurableKubernetesPodOperator)

self.assertEqual(dag_task0.task_id, task_id)

+

+ def test_build(self):

+ config = self.__create_conf('my_task')

+

+ task0 = python.PythonTask(None, None, None, config, None)

+ task0.build()

+

+ # TODO: elaborate test of image, validate input/output

+ image_name = config['image']

+

+ docker_client = docker.from_env()

+ docker_client.images.get(image_name)

+ container_log = docker_client.containers.run(image_name, "python hello_world.py")

+ docker_client.close()

+

+ self.assertEqual("b'Hello world!\\n'", str(container_log))

+

+ @staticmethod

+ def __create_conf(task_id):

+ return {

+ 'task': task_id,

+ 'cmd': 'foo bar',

+ 'image': 'my_image',

+ 'source': 'tests/runners/airflow/tasks/hello_world',

+ 'input_type': 'my_input_type',

+ 'input_path': 'my_input',

+ 'output_path': '/my_output.json'

+ }

+

+

+if __name__ == '__main__':

+ unittest.main()
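
Editor's note on the commit above: `python_image.build` stages the task source, a guaranteed `requirements.txt`, the Dockerfile and the extra container scripts in a temporary directory before handing that directory to Docker. The staging step can be sketched on its own, without Docker, roughly as follows. This is a minimal illustration and not the project's code: the function name `stage_build_context` and the `context` subdirectory are assumptions made for the example.

```python
import os
import shutil
import tempfile


def stage_build_context(source_path, extra_files=None):
    """Copy a source tree plus extra files into a fresh temporary directory.

    Hypothetical helper for illustration; not part of the commit.
    """
    extra_files = extra_files or []

    # shutil.copytree requires that the destination does not exist yet, so
    # copy into a new subdirectory of a private temporary parent directory.
    parent_dir = tempfile.mkdtemp()
    context_dir = os.path.join(parent_dir, 'context')
    shutil.copytree(source_path, context_dir)

    # Make sure requirements.txt exists so that a Dockerfile step such as
    # "COPY ./requirements.txt /app" does not fail on sources without one.
    requirements_path = os.path.join(context_dir, 'requirements.txt')
    if not os.path.exists(requirements_path):
        open(requirements_path, 'w').close()

    # Add the extra files (for example setup and teardown scripts).
    for file_path in extra_files:
        shutil.copy2(file_path, context_dir)

    return context_dir
```

The returned directory is what would be passed as the build path to the Docker client; the caller remains responsible for deleting it afterwards, as the commit does with `shutil.rmtree`.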

[incubator-liminal] 19/43: Fix import bug

Posted by jb...@apache.org.

jbonofre pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-liminal.git

commit da4022cacb1fecf22dc3f9abfde12ff602768e24

Author: aviemzur <av...@gmail.com>

AuthorDate: Tue Mar 17 11:16:37 2020 +0200

Fix import bug

---

rainbow/core/util/class_util.py | 9 ++++++---

1 file changed, 6 insertions(+), 3 deletions(-)

diff --git a/rainbow/core/util/class_util.py b/rainbow/core/util/class_util.py

index 59b8543..31e1806 100644

--- a/rainbow/core/util/class_util.py

+++ b/rainbow/core/util/class_util.py

@@ -29,13 +29,14 @@ def find_subclasses_in_packages(packages, parent_class):

"""

classes = {}

- for package in [a for a in sys.path]:

- for root, directories, files in os.walk(package):

+ for py_path in [a for a in sys.path]:

+ for root, directories, files in os.walk(py_path):

for file in files:

file_path = os.path.join(root, file)

if any(p in file_path for p in packages) \

and file.endswith('.py') \

and '__pycache__' not in file_path:

+

spec = importlib.util.spec_from_file_location(file[:-3], file_path)

mod = importlib.util.module_from_spec(spec)

spec.loader.exec_module(mod)

@@ -43,7 +44,9 @@ def find_subclasses_in_packages(packages, parent_class):

if inspect.isclass(obj) and not obj.__name__.endswith('Mixin'):

module_name = mod.__name__

class_name = obj.__name__

- module = root[len(package) + 1:].replace('/', '.') + '.' + module_name

+ parent_module = root[len(py_path) + 1:].replace('/', '.')

+ module = parent_module.replace('airflow.dags.', '') + \

+ '.' + module_name

clazz = __get_class(module, class_name)

if issubclass(clazz, parent_class):

classes.update({module_name: clazz})
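
Editor's note on the commit above: the fix derives a dotted module name from a file's location under a `sys.path` entry and then drops an `airflow.dags.` prefix, so that the name is importable when the code is deployed under an Airflow dags folder. The derivation can be sketched in isolation as below. The function name `dotted_module_name` and the example paths are assumptions for illustration, and `posixpath` is used so the sketch behaves the same on every platform.

```python
import posixpath


def dotted_module_name(file_path, py_path, strip_prefix='airflow.dags.'):
    """Return a dotted module name for file_path relative to py_path.

    Hypothetical helper for illustration; not part of the commit.
    """
    root, file_name = posixpath.split(file_path)
    module_name = file_name[:-len('.py')]

    # Directory of the file relative to the sys.path entry, as a dotted name.
    relative_root = posixpath.relpath(root, py_path)
    if relative_root == '.':
        # The file sits directly in the sys.path entry: no parent package.
        return module_name
    parent_module = relative_root.replace('/', '.')

    # Mirror the commit: drop the deployment prefix before joining.
    return parent_module.replace(strip_prefix, '') + '.' + module_name
```

For a hypothetical file `/usr/local/airflow/dags/rainbow/core/util/class_util.py` found under the path entry `/usr/local`, this yields `rainbow.core.util.class_util` rather than `airflow.dags.rainbow.core.util.class_util`.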

[incubator-liminal] 29/43: TODO

Posted by jb...@apache.org.

jbonofre pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-liminal.git

commit be9e513fec8a7b47153eff2eac022d1689dfa33a

Author: aviemzur <av...@gmail.com>

AuthorDate: Tue Apr 7 14:46:27 2020 +0300

TODO

---

tests/runners/airflow/dag/test_rainbow_dags.py | 2 ++

1 file changed, 2 insertions(+)

diff --git a/tests/runners/airflow/dag/test_rainbow_dags.py b/tests/runners/airflow/dag/test_rainbow_dags.py

index d8c1afc..c744ce5 100644

--- a/tests/runners/airflow/dag/test_rainbow_dags.py

+++ b/tests/runners/airflow/dag/test_rainbow_dags.py

@@ -13,6 +13,8 @@ class Test(TestCase):

self.assertEqual(len(dags), 1)

test_pipeline = dags[0]

+

+ # TODO: elaborate tests to assert all dags have correct tasks

self.assertEqual(test_pipeline.dag_id, 'my_pipeline')

def test_default_start_task(self):

[incubator-liminal] 21/43: Make paths in tests relative to script location

Posted by jb...@apache.org.

jbonofre pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-liminal.git

commit c1d77f21b750402487063ba32ac4439d5382be52

Author: aviemzur <av...@gmail.com>

AuthorDate: Sun Mar 22 10:31:07 2020 +0200

Make paths in tests relative to script location

---

.../airflow/build/http/python/test_python_server_image_builder.py | 7 ++++---

tests/runners/airflow/build/python/test_python_image_builder.py | 7 +++++--

tests/runners/airflow/build/test_build_rainbows.py | 5 ++---

tests/runners/airflow/dag/test_rainbow_dags.py | 4 +++-

tests/runners/airflow/tasks/test_python.py | 2 +-

5 files changed, 15 insertions(+), 10 deletions(-)

diff --git a/tests/runners/airflow/build/http/python/test_python_server_image_builder.py b/tests/runners/airflow/build/http/python/test_python_server_image_builder.py

index 3423976..63fc8fa 100644

--- a/tests/runners/airflow/build/http/python/test_python_server_image_builder.py

+++ b/tests/runners/airflow/build/http/python/test_python_server_image_builder.py

@@ -15,7 +15,7 @@

# KIND, either express or implied. See the License for the

# specific language governing permissions and limitations

# under the License.

-

+import os

import threading

import time

import unittest

@@ -41,8 +41,9 @@ class TestPythonServer(TestCase):

self.docker_client.close()

def test_build_python_server(self):

+ base_path = os.path.join(os.path.dirname(__file__), '../../../rainbow')

builder = PythonServerImageBuilder(config=self.config,

- base_path='tests/runners/airflow/rainbow',

+ base_path=base_path,

relative_source_path='myserver',

tag=self.image_name)

@@ -87,7 +88,7 @@ class TestPythonServer(TestCase):

'task': task_id,

'cmd': 'foo bar',

'image': 'rainbow_server_image',

- 'source': 'tests/runners/airflow/rainbow/myserver',

+ 'source': 'baz',

'input_type': 'my_input_type',

'input_path': 'my_input',

'output_path': '/my_output.json',

diff --git a/tests/runners/airflow/build/python/test_python_image_builder.py b/tests/runners/airflow/build/python/test_python_image_builder.py

index c8328da..7376987 100644

--- a/tests/runners/airflow/build/python/test_python_image_builder.py

+++ b/tests/runners/airflow/build/python/test_python_image_builder.py

@@ -15,6 +15,7 @@

# KIND, either express or implied. See the License for the

# specific language governing permissions and limitations

# under the License.

+import os

from unittest import TestCase

import docker

@@ -29,8 +30,10 @@ class TestPythonImageBuilder(TestCase):

image_name = config['image']

+ base_path = os.path.join(os.path.dirname(__file__), '../../rainbow')

+

builder = PythonImageBuilder(config=config,

- base_path='tests/runners/airflow/rainbow',

+ base_path=base_path,

relative_source_path='helloworld',

tag=image_name)

@@ -59,7 +62,7 @@ class TestPythonImageBuilder(TestCase):

'task': task_id,

'cmd': 'foo bar',

'image': 'rainbow_image',

- 'source': 'tests/runners/airflow/rainbow/helloworld',

+ 'source': 'baz',

'input_type': 'my_input_type',

'input_path': 'my_input',

'output_path': '/my_output.json'

diff --git a/tests/runners/airflow/build/test_build_rainbows.py b/tests/runners/airflow/build/test_build_rainbows.py

index 9a4d31c..c5d8ea7 100644

--- a/tests/runners/airflow/build/test_build_rainbows.py

+++ b/tests/runners/airflow/build/test_build_rainbows.py

@@ -15,7 +15,7 @@

# KIND, either express or implied. See the License for the

# specific language governing permissions and limitations

# under the License.

-

+import os

import unittest

from unittest import TestCase

@@ -25,7 +25,6 @@ from rainbow.build import build_rainbows

class TestBuildRainbows(TestCase):

-

__image_names = [

'my_static_input_task_image',

'my_task_output_input_task_image',

@@ -46,7 +45,7 @@ class TestBuildRainbows(TestCase):

self.docker_client.images.remove(image=image_name)

def test_build_rainbow(self):

- build_rainbows.build_rainbows('tests/runners/airflow/rainbow')

+ build_rainbows.build_rainbows(os.path.join(os.path.dirname(__file__), '../rainbow'))

for image in self.__image_names:

self.docker_client.images.get(image)

diff --git a/tests/runners/airflow/dag/test_rainbow_dags.py b/tests/runners/airflow/dag/test_rainbow_dags.py

index 2a65f31..c8f2e38 100644

--- a/tests/runners/airflow/dag/test_rainbow_dags.py

+++ b/tests/runners/airflow/dag/test_rainbow_dags.py

@@ -1,3 +1,4 @@

+import os

from unittest import TestCase

from rainbow.runners.airflow.dag import rainbow_dags

@@ -6,7 +7,8 @@ import unittest

class Test(TestCase):

def test_register_dags(self):

- dags = rainbow_dags.register_dags('tests/runners/airflow/rainbow')

+ base_path = os.path.join(os.path.dirname(__file__), '../rainbow')

+ dags = rainbow_dags.register_dags(base_path)

self.assertEqual(len(dags), 1)

# TODO: elaborate test

pass

diff --git a/tests/runners/airflow/tasks/test_python.py b/tests/runners/airflow/tasks/test_python.py

index 18e6c1a..ac295eb 100644

--- a/tests/runners/airflow/tasks/test_python.py

+++ b/tests/runners/airflow/tasks/test_python.py

@@ -50,7 +50,7 @@ class TestPythonTask(TestCase):

'task': task_id,

'cmd': 'foo bar',

'image': 'rainbow_image',

- 'source': 'tests/runners/airflow/rainbow/helloworld',

+ 'source': 'baz',

'input_type': 'my_input_type',

'input_path': 'my_input',

'output_path': '/my_output.json'
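
Editor's note on the commit above: the tests stop depending on the current working directory by building paths from `os.path.dirname(__file__)`. The pattern can be sketched as a small helper; the name `path_relative_to` and the example path are assumptions for illustration, and the normalization step is an addition that the commit itself does not perform.

```python
import os


def path_relative_to(script_file, relative_path):
    """Resolve relative_path against the directory containing script_file.

    Hypothetical helper for illustration; not part of the commit.
    """
    script_dir = os.path.dirname(os.path.abspath(script_file))
    # normpath collapses segments such as '..' so the result is easy to read.
    return os.path.normpath(os.path.join(script_dir, relative_path))
```

A test module would typically call it as `path_relative_to(__file__, '../rainbow')`, which gives the same directory whether the tests are started from the repository root or from inside the `tests` tree.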

[incubator-liminal] 24/43: Update README with yml example

Posted by jb...@apache.org.

jbonofre pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-liminal.git

commit 2d34195321dcc5ddd2e33a37b8042b73f749c09a

Author: aviemzur <av...@gmail.com>

AuthorDate: Mon Mar 23 10:31:27 2020 +0200

Update README with yml example

---

README.md | 69 +++++++++++++++++++++++++++++

rainbow/build/build_rainbows.py | 15 ++++---

rainbow/runners/airflow/dag/rainbow_dags.py | 3 +-

tests/runners/airflow/rainbow/rainbow.yml | 26 +++++------

4 files changed, 94 insertions(+), 19 deletions(-)

diff --git a/README.md b/README.md

index 257bb9a..62036e0 100644

--- a/README.md

+++ b/README.md

@@ -9,3 +9,72 @@ Rainbow's goal is to operationalize the machine learning process, allowing data

quickly transition from a successful experiment to an automated pipeline of model training,

validation, deployment and inference in production, freeing them from engineering and

non-functional tasks, and allowing them to focus on machine learning code and artifacts.

+

+# Basics

+

+Using simple YAML configuration, create your own schedule data pipelines (a sequence of tasks to

+perform), application servers, and more.

+

+## Example YAML config file

+

+name: MyPipeline

+owner: Bosco Albert Baracus

+pipelines:

+ - pipeline: my_pipeline

+ start_date: 1970-01-01

+ timeout_minutes: 45

+ schedule: 0 * 1 * *

+ metrics:

+ namespace: TestNamespace

+ backends: [ 'cloudwatch' ]

+ tasks:

+ - task: my_static_input_task

+ type: python

+ description: static input task

+ image: my_static_input_task_image

+ source: helloworld

+ env_vars:

+ env1: "a"

+ env2: "b"

+ input_type: static

+ input_path: '[ { "foo": "bar" }, { "foo": "baz" } ]'

+ output_path: /output.json

+ cmd: python -u hello_world.py

+ - task: my_parallelized_static_input_task

+ type: python

+ description: parallelized static input task

+ image: my_static_input_task_image

+ env_vars:

+ env1: "a"

+ env2: "b"

+ input_type: static

+ input_path: '[ { "foo": "bar" }, { "foo": "baz" } ]'

+ split_input: True

+ executors: 2

+ cmd: python -u helloworld.py

+ - task: my_task_output_input_task

+ type: python

+ description: parallelized static input task

+ image: my_task_output_input_task_image

+ source: helloworld

+ env_vars:

+ env1: "a"

+ env2: "b"

+ input_type: task

+ input_path: my_static_input_task

+ cmd: python -u hello_world.py

+services:

+ - service:

+ name: my_python_server

+ type: python_server

+ description: my python server

+ image: my_server_image

+ source: myserver

+ endpoints:

+ - endpoint: /myendpoint1

+ module: myserver.my_server

+ function: myendpoint1func

+

+# Installation

+

+TODO: installation.

diff --git a/rainbow/build/build_rainbows.py b/rainbow/build/build_rainbows.py

index fa3a922..4ed5bab 100644

--- a/rainbow/build/build_rainbows.py

+++ b/rainbow/build/build_rainbows.py

@@ -40,12 +40,17 @@ def build_rainbows(path):

for pipeline in rainbow_config['pipelines']:

for task in pipeline['tasks']:

- task_type = task['type']

- builder_class = __get_task_build_class(task_type)

- if builder_class:

- __build_image(base_path, task, builder_class)

+ task_name = task['task']

+

+ if 'source' in task:

+ task_type = task['type']

+ builder_class = __get_task_build_class(task_type)

+ if builder_class:

+ __build_image(base_path, task, builder_class)

+ else:

+ raise ValueError(f'No such task type: {task_type}')

else:

- raise ValueError(f'No such task type: {task_type}')

+ print(f'No source configured for task {task_name}, skipping build..')

for service in rainbow_config['services']:

service_type = service['type']

diff --git a/rainbow/runners/airflow/dag/rainbow_dags.py b/rainbow/runners/airflow/dag/rainbow_dags.py

index 71d18d2..17fd8d9 100644

--- a/rainbow/runners/airflow/dag/rainbow_dags.py

+++ b/rainbow/runners/airflow/dag/rainbow_dags.py

@@ -16,7 +16,7 @@

# specific language governing permissions and limitations

# under the License.

-from datetime import datetime

+from datetime import datetime, timedelta

import yaml

from airflow import DAG

@@ -56,6 +56,7 @@ def register_dags(configs_path):

dag = DAG(

dag_id=pipeline_name,

default_args=default_args,

+ dagrun_timeout=timedelta(minutes=pipeline['timeout_minutes']),

catchup=False

)

diff --git a/tests/runners/airflow/rainbow/rainbow.yml b/tests/runners/airflow/rainbow/rainbow.yml

index 27507fd..66e3dec 100644

--- a/tests/runners/airflow/rainbow/rainbow.yml

+++ b/tests/runners/airflow/rainbow/rainbow.yml

@@ -21,7 +21,7 @@ owner: Bosco Albert Baracus

pipelines:

- pipeline: my_pipeline

start_date: 1970-01-01

- timeout-minutes: 45

+ timeout_minutes: 45

schedule: 0 * 1 * *

metrics:

namespace: TestNamespace

@@ -39,18 +39,18 @@ pipelines:

input_path: '[ { "foo": "bar" }, { "foo": "baz" } ]'

output_path: /output.json

cmd: python -u hello_world.py

-# - task: my_parallelized_static_input_task

-# type: python

-# description: parallelized static input task

-# image: my_static_input_task_image

-# env_vars:

-# env1: "a"

-# env2: "b"

-# input_type: static

-# input_path: '[ { "foo": "bar" }, { "foo": "baz" } ]'

-# split_input: True

-# executors: 2

-# cmd: python -u helloworld.py

+ - task: my_parallelized_static_input_task

+ type: python

+ description: parallelized static input task

+ image: my_static_input_task_image

+ env_vars:

+ env1: "a"

+ env2: "b"

+ input_type: static

+ input_path: '[ { "foo": "bar" }, { "foo": "baz" } ]'

+ split_input: True

+ executors: 2

+ cmd: python -u helloworld.py

- task: my_task_output_input_task

type: python

description: parallelized static input task
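The two behavioural changes in this commit (skipping the image build for tasks without a 'source' key, and turning 'timeout_minutes' into a DAG run timeout) can be sketched in isolation. This is a minimal illustration under stated assumptions, not the project's code: plan_builds, pipeline_timeout, known_types and example_config are hypothetical names, and no Docker build or Airflow DAG is involved.

```python
from datetime import timedelta


def plan_builds(config, known_types):
    """Split task names into (to_build, skipped), mirroring the 'source' check.

    Hypothetical helper: the real build_rainbows() builds images instead of
    returning names, and prints a message for each skipped task.
    """
    to_build, skipped = [], []
    for pipeline in config['pipelines']:
        for task in pipeline['tasks']:
            task_name = task['task']
            if 'source' not in task:
                # No source configured: nothing to build for this task.
                skipped.append(task_name)
                continue
            task_type = task['type']
            if task_type not in known_types:
                raise ValueError(f'No such task type: {task_type}')
            to_build.append(task_name)
    return to_build, skipped


def pipeline_timeout(pipeline):
    """Convert the YAML 'timeout_minutes' value into a timedelta."""
    return timedelta(minutes=pipeline['timeout_minutes'])


# Trimmed-down stand-in for the parsed rainbow.yml (assumed shape).
example_config = {
    'pipelines': [{
        'pipeline': 'my_pipeline',
        'timeout_minutes': 45,
        'tasks': [
            {'task': 'my_static_input_task', 'type': 'python',
             'source': 'helloworld'},
            {'task': 'my_parallelized_static_input_task', 'type': 'python'},
        ],
    }],
}

to_build, skipped = plan_builds(example_config, {'python'})
timeout = pipeline_timeout(example_config['pipelines'][0])
```

Note that the lookup uses the key 'timeout_minutes' with an underscore, which is why the test rainbow.yml in this commit renames 'timeout-minutes'; a config that still used the hyphenated key would raise a KeyError in this sketch.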

[incubator-liminal] 33/43: Add architecture diagrams

Posted by jb...@apache.org.

jbonofre pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-liminal.git

commit 54fb98781b3522058f322a78cc0919f713b621e5

Author: lior.schachter <li...@naturalint.com>

AuthorDate: Sat Apr 11 12:57:12 2020 +0300

Add architecture diagrams

---

images/rainbow_001.png | Bin 0 -> 54903 bytes

images/rainbow_002.png | Bin 0 -> 34933 bytes

2 files changed, 0 insertions(+), 0 deletions(-)

diff --git a/images/rainbow_001.png b/images/rainbow_001.png

new file mode 100644

index 0000000..b3f50bb

Binary files /dev/null and b/images/rainbow_001.png differ

diff --git a/images/rainbow_002.png b/images/rainbow_002.png

new file mode 100644

index 0000000..7beed54

Binary files /dev/null and b/images/rainbow_002.png differ

[incubator-liminal] 02/43: First code commit

Posted by jb...@apache.org.

jbonofre pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/incubator-liminal.git

commit 2887b1993ae5d17cc0e172a6f9f828ec4f362e04

Author: aviemzur <av...@gmail.com>

AuthorDate: Sun Mar 8 15:46:22 2020 +0200

First code commit

---

.gitignore | 8 +

LICENSE | 250 +++++++++++++++++++

rainbow/__init__.py | 17 ++

rainbow/cli/__init__.py | 17 ++

rainbow/core/__init__.py | 17 ++

rainbow/docker/__init__.py | 17 ++

rainbow/http/__init__.py | 17 ++

rainbow/monitoring/__init__.py | 17 ++

rainbow/runners/__init__.py | 17 ++

rainbow/runners/airflow/__init__.py | 17 ++

rainbow/runners/airflow/compiler/__init__.py | 17 ++

.../runners/airflow/compiler/rainbow_compiler.py | 26 ++

rainbow/runners/airflow/dag/__init__.py | 17 ++

rainbow/runners/airflow/operators/__init__.py | 17 ++

.../runners/airflow/operators/cloudformation.py | 270 +++++++++++++++++++++

.../airflow/operators/job_status_operator.py | 180 ++++++++++++++

.../airflow/operators/kubernetes_pod_operator.py | 140 +++++++++++

rainbow/sql/__init__.py | 17 ++

tests/__init__.py | 17 ++

tests/runners/__init__.py | 17 ++

tests/runners/airflow/__init__.py | 17 ++

tests/runners/airflow/compiler/__init__.py | 17 ++

tests/runners/airflow/compiler/rainbow.yml | 115 +++++++++

.../airflow/compiler/test_rainbow_compiler.py | 33 +++

tests/runners/airflow/operators/__init__.py | 17 ++

25 files changed, 1311 insertions(+)

diff --git a/.gitignore b/.gitignore

new file mode 100644

index 0000000..e14e323

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,8 @@

+.idea

+bin

+include

+lib

+venv

+.Python

+*.pyc

+pip-selfcheck.json

diff --git a/LICENSE b/LICENSE

new file mode 100644

index 0000000..8f1552e

--- /dev/null

+++ b/LICENSE

@@ -0,0 +1,250 @@

+ Apache License

+ Version 2.0, January 2004

+ http://www.apache.org/licenses/

+

+TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

+

+1. Definitions.

+

+ "License" shall mean the terms and conditions for use, reproduction,

+ and distribution as defined by Sections 1 through 9 of this document.

+

+ "Licensor" shall mean the copyright owner or entity authorized by

+ the copyright owner that is granting the License.

+

+ "Legal Entity" shall mean the union of the acting entity and all

+ other entities that control, are controlled by, or are under common

+ control with that entity. For the purposes of this definition,

+ "control" means (i) the power, direct or indirect, to cause the

+ direction or management of such entity, whether by contract or

+ otherwise, or (ii) ownership of fifty percent (50%) or more of the

+ outstanding shares, or (iii) beneficial ownership of such entity.

+

+ "You" (or "Your") shall mean an individual or Legal Entity

+ exercising permissions granted by this License.

+

+ "Source" form shall mean the preferred form for making modifications,

+ including but not limited to software source code, documentation

+ source, and configuration files.

+

+ "Object" form shall mean any form resulting from mechanical

+ transformation or translation of a Source form, including but

+ not limited to compiled object code, generated documentation,

+ and conversions to other media types.

+

+ "Work" shall mean the work of authorship, whether in Source or

+ Object form, made available under the License, as indicated by a

+ copyright notice that is included in or attached to the work

+ (an example is provided in the Appendix below).

+

+ "Derivative Works" shall mean any work, whether in Source or Object

+ form, that is based on (or derived from) the Work and for which the

+ editorial revisions, annotations, elaborations, or other modifications

+ represent, as a whole, an original work of authorship. For the purposes

+ of this License, Derivative Works shall not include works that remain

+ separable from, or merely link (or bind by name) to the interfaces of,

+ the Work and Derivative Works thereof.

+

+ "Contribution" shall mean any work of authorship, including

+ the original version of the Work and any modifications or additions

+ to that Work or Derivative Works thereof, that is intentionally

+ submitted to Licensor for inclusion in the Work by the copyright owner

+ or by an individual or Legal Entity authorized to submit on behalf of

+ the copyright owner. For the purposes of this definition, "submitted"

+ means any form of electronic, verbal, or written communication sent

+ to the Licensor or its representatives, including but not limited to

+ communication on electronic mailing lists, source code control systems,

+ and issue tracking systems that are managed by, or on behalf of, the

+ Licensor for the purpose of discussing and improving the Work, but

+ excluding communication that is conspicuously marked or otherwise

+ designated in writing by the copyright owner as "Not a Contribution."

+

+ "Contributor" shall mean Licensor and any individual or Legal Entity

+ on behalf of whom a Contribution has been received by Licensor and

+ subsequently incorporated within the Work.

+

+2. Grant of Copyright License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ copyright license to reproduce, prepare Derivative Works of,

+ publicly display, publicly perform, sublicense, and distribute the

+ Work and such Derivative Works in Source or Object form.

+

+3. Grant of Patent License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ (except as stated in this section) patent license to make, have made,

+ use, offer to sell, sell, import, and otherwise transfer the Work,

+ where such license applies only to those patent claims licensable

+ by such Contributor that are necessarily infringed by their

+ Contribution(s) alone or by combination of their Contribution(s)

+ with the Work to which such Contribution(s) was submitted. If You

+ institute patent litigation against any entity (including a

+ cross-claim or counterclaim in a lawsuit) alleging that the Work

+ or a Contribution incorporated within the Work constitutes direct

+ or contributory patent infringement, then any patent licenses

+ granted to You under this License for that Work shall terminate

+ as of the date such litigation is filed.

+

+4. Redistribution. You may reproduce and distribute copies of the

+ Work or Derivative Works thereof in any medium, with or without

+ modifications, and in Source or Object form, provided that You

+ meet the following conditions:

+

+ (a) You must give any other recipients of the Work or

+ Derivative Works a copy of this License; and

+

+ (b) You must cause any modified files to carry prominent notices

+ stating that You changed the files; and

+

+ (c) You must retain, in the Source form of any Derivative Works

+ that You distribute, all copyright, patent, trademark, and

+ attribution notices from the Source form of the Work,

+ excluding those notices that do not pertain to any part of

+ the Derivative Works; and

+

+ (d) If the Work includes a "NOTICE" text file as part of its

+ distribution, then any Derivative Works that You distribute must

+ include a readable copy of the attribution notices contained

+ within such NOTICE file, excluding those notices that do not

+ pertain to any part of the Derivative Works, in at least one

+ of the following places: within a NOTICE text file distributed

+ as part of the Derivative Works; within the Source form or

+ documentation, if provided along with the Derivative Works; or,

+ within a display generated by the Derivative Works, if and

+ wherever such third-party notices normally appear. The contents

+ of the NOTICE file are for informational purposes only and

+ do not modify the License. You may add Your own attribution

+ notices within Derivative Works that You distribute, alongside

+ or as an addendum to the NOTICE text from the Work, provided

+ that such additional attribution notices cannot be construed

+ as modifying the License.

+

+ You may add Your own copyright statement to Your modifications and

+ may provide additional or different license terms and conditions

+ for use, reproduction, or distribution of Your modifications, or

+ for any such Derivative Works as a whole, provided Your use,

+ reproduction, and distribution of the Work otherwise complies with

+ the conditions stated in this License.

+

+5. Submission of Contributions. Unless You explicitly state otherwise,

+ any Contribution intentionally submitted for inclusion in the Work

+ by You to the Licensor shall be under the terms and conditions of

+ this License, without any additional terms or conditions.

+ Notwithstanding the above, nothing herein shall supersede or modify

+ the terms of any separate license agreement you may have executed

+ with Licensor regarding such Contributions.

+

+6. Trademarks. This License does not grant permission to use the trade

+ names, trademarks, service marks, or product names of the Licensor,

+ except as required for reasonable and customary use in describing the

+ origin of the Work and reproducing the content of the NOTICE file.

+

+7. Disclaimer of Warranty. Unless required by applicable law or

+ agreed to in writing, Licensor provides the Work (and each

+ Contributor provides its Contributions) on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

+ implied, including, without limitation, any warranties or conditions

+ of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

+ PARTICULAR PURPOSE. You are solely responsible for determining the

+ appropriateness of using or redistributing the Work and assume any

+ risks associated with Your exercise of permissions under this License.

+

+8. Limitation of Liability. In no event and under no legal theory,

+ whether in tort (including negligence), contract, or otherwise,

+ unless required by applicable law (such as deliberate and grossly

+ negligent acts) or agreed to in writing, shall any Contributor be

+ liable to You for damages, including any direct, indirect, special,

+ incidental, or consequential damages of any character arising as a

+ result of this License or out of the use or inability to use the

+ Work (including but not limited to damages for loss of goodwill,

+ work stoppage, computer failure or malfunction, or any and all

+ other commercial damages or losses), even if such Contributor

+ has been advised of the possibility of such damages.

+

+9. Accepting Warranty or Additional Liability. While redistributing

+ the Work or Derivative Works thereof, You may choose to offer,

+ and charge a fee for, acceptance of support, warranty, indemnity,

+ or other liability obligations and/or rights consistent with this

+ License. However, in accepting such obligations, You may act only

+ on Your own behalf and on Your sole responsibility, not on behalf

+ of any other Contributor, and only if You agree to indemnify,

+ defend, and hold each Contributor harmless for any liability

+ incurred by, or claims asserted against, such Contributor by reason

+ of your accepting any such warranty or additional liability.

+

+END OF TERMS AND CONDITIONS

+

+APPENDIX: How to apply the Apache License to your work.

+

+ To apply the Apache License to your work, attach the following

+ boilerplate notice, with the fields enclosed by brackets "[]"

+ replaced with your own identifying information. (Don't include

+ the brackets!) The text should be enclosed in the appropriate

+ comment syntax for the file format. We also recommend that a

+ file or class name and description of purpose be included on the

+ same "printed page" as the copyright notice for easier

+ identification within third-party archives.

+

+Copyright [yyyy] [name of copyright owner]

+

+Licensed under the Apache License, Version 2.0 (the "License");

+you may not use this file except in compliance with the License.

+You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+Unless required by applicable law or agreed to in writing, software

+distributed under the License is distributed on an "AS IS" BASIS,

+WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+See the License for the specific language governing permissions and

+limitations under the License.

+

+============================================================================

+ APACHE AIRFLOW SUBCOMPONENTS:

+

+ The Apache Airflow project contains subcomponents with separate copyright

+ notices and license terms. Your use of the source code for these

+ subcomponents is subject to the terms and conditions of the following

+ licenses.

+

+

+========================================================================

+Third party Apache 2.0 licenses

+========================================================================

+

+The following components are provided under the Apache 2.0 License.

+See project link for details. The text of each license is also included

+at licenses/LICENSE-[project].txt.

+

+ (ALv2 License) hue v4.3.0 (https://github.com/cloudera/hue/)

+ (ALv2 License) jqclock v2.3.0 (https://github.com/JohnRDOrazio/jQuery-Clock-Plugin)

+ (ALv2 License) bootstrap3-typeahead v4.0.2 (https://github.com/bassjobsen/Bootstrap-3-Typeahead)

+ (ALv2 License) airflow.contrib.auth.backends.github_enterprise_auth

+

+========================================================================

+MIT licenses

+========================================================================

+

+The following components are provided under the MIT License. See project link for details.

+The text of each license is also included at licenses/LICENSE-[project].txt.

+

+ (MIT License) jquery v3.4.1 (https://jquery.org/license/)

+ (MIT License) dagre-d3 v0.6.4 (https://github.com/cpettitt/dagre-d3)

+ (MIT License) bootstrap v3.2 (https://github.com/twbs/bootstrap/)

+ (MIT License) d3-tip v0.9.1 (https://github.com/Caged/d3-tip)

+ (MIT License) dataTables v1.10.20 (https://datatables.net)

+ (MIT License) Bootstrap Toggle v2.2.2 (http://www.bootstraptoggle.com)

+ (MIT License) normalize.css v3.0.2 (http://necolas.github.io/normalize.css/)

+ (MIT License) ElasticMock v1.3.2 (https://github.com/vrcmarcos/elasticmock)

+ (MIT License) MomentJS v2.24.0 (http://momentjs.com/)

+ (MIT License) python-slugify v2.0.1 (https://github.com/un33k/python-slugify)

+ (MIT License) python-nvd3 v0.15.0 (https://github.com/areski/python-nvd3)

+

+========================================================================

+BSD 3-Clause licenses

+========================================================================

+The following components are provided under the BSD 3-Clause license. See project links for details.

+The text of each license is also included at licenses/LICENSE-[project].txt.

+

+ (BSD 3 License) d3 v5.15.0 (https://d3js.org)

diff --git a/rainbow/__init__.py b/rainbow/__init__.py

new file mode 100644

index 0000000..217e5db

--- /dev/null

+++ b/rainbow/__init__.py

@@ -0,0 +1,17 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

diff --git a/rainbow/cli/__init__.py b/rainbow/cli/__init__.py

new file mode 100644

index 0000000..217e5db

--- /dev/null

+++ b/rainbow/cli/__init__.py

@@ -0,0 +1,17 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

diff --git a/rainbow/core/__init__.py b/rainbow/core/__init__.py

new file mode 100644

index 0000000..217e5db

--- /dev/null

+++ b/rainbow/core/__init__.py

@@ -0,0 +1,17 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

diff --git a/rainbow/docker/__init__.py b/rainbow/docker/__init__.py

new file mode 100644

index 0000000..217e5db

--- /dev/null

+++ b/rainbow/docker/__init__.py

@@ -0,0 +1,17 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

diff --git a/rainbow/http/__init__.py b/rainbow/http/__init__.py

new file mode 100644

index 0000000..217e5db

--- /dev/null

+++ b/rainbow/http/__init__.py

@@ -0,0 +1,17 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

diff --git a/rainbow/monitoring/__init__.py b/rainbow/monitoring/__init__.py

new file mode 100644

index 0000000..217e5db

--- /dev/null

+++ b/rainbow/monitoring/__init__.py

@@ -0,0 +1,17 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

diff --git a/rainbow/runners/__init__.py b/rainbow/runners/__init__.py

new file mode 100644

index 0000000..217e5db

--- /dev/null

+++ b/rainbow/runners/__init__.py

@@ -0,0 +1,17 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

diff --git a/rainbow/runners/airflow/__init__.py b/rainbow/runners/airflow/__init__.py

new file mode 100644

index 0000000..217e5db

--- /dev/null

+++ b/rainbow/runners/airflow/__init__.py

@@ -0,0 +1,17 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

diff --git a/rainbow/runners/airflow/compiler/__init__.py b/rainbow/runners/airflow/compiler/__init__.py

new file mode 100644

index 0000000..217e5db

--- /dev/null

+++ b/rainbow/runners/airflow/compiler/__init__.py

@@ -0,0 +1,17 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

diff --git a/rainbow/runners/airflow/compiler/rainbow_compiler.py b/rainbow/runners/airflow/compiler/rainbow_compiler.py

new file mode 100644

index 0000000..818fdc5

--- /dev/null

+++ b/rainbow/runners/airflow/compiler/rainbow_compiler.py

@@ -0,0 +1,26 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+"""

+Compiler for rainbows.

+"""

+import yaml

+

+

+def parse_yaml(path):

+ with open(path, 'r') as stream:

+ return yaml.safe_load(stream)

diff --git a/rainbow/runners/airflow/dag/__init__.py b/rainbow/runners/airflow/dag/__init__.py

new file mode 100644

index 0000000..217e5db

--- /dev/null

+++ b/rainbow/runners/airflow/dag/__init__.py

@@ -0,0 +1,17 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

diff --git a/rainbow/runners/airflow/operators/__init__.py b/rainbow/runners/airflow/operators/__init__.py

new file mode 100644

index 0000000..217e5db

--- /dev/null

+++ b/rainbow/runners/airflow/operators/__init__.py

@@ -0,0 +1,17 @@

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

diff --git a/rainbow/runners/airflow/operators/cloudformation.py b/rainbow/runners/airflow/operators/cloudformation.py

new file mode 100644

index 0000000..0a70e5a

--- /dev/null

+++ b/rainbow/runners/airflow/operators/cloudformation.py

@@ -0,0 +1,270 @@

+# -*- coding: utf-8 -*-

+#

+# Licensed to the Apache Software Foundation (ASF) under one

+# or more contributor license agreements. See the NOTICE file

+# distributed with this work for additional information

+# regarding copyright ownership. The ASF licenses this file

+# to you under the Apache License, Version 2.0 (the

+# "License"); you may not use this file except in compliance

+# with the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing,

+# software distributed under the License is distributed on an

+# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

+# KIND, either express or implied. See the License for the

+# specific language governing permissions and limitations

+# under the License.

+"""

+This module contains CloudFormation create/delete stack operators.

+Can be removed when Airflow 2.0.0 is released.

+"""

+from typing import List

+

+from airflow.contrib.hooks.aws_hook import AwsHook

+from airflow.models import BaseOperator

+from airflow.sensors.base_sensor_operator import BaseSensorOperator

+from airflow.utils.decorators import apply_defaults

+from botocore.exceptions import ClientError

+

+

+# noinspection PyAbstractClass

+class CloudFormationHook(AwsHook):

+ """

+ Interact with AWS CloudFormation.

+ """

+

+ def __init__(self, region_name=None, *args, **kwargs):

+ self.region_name = region_name

+ self.conn = None

+ super().__init__(*args, **kwargs)

+

+ def get_conn(self):

+ self.conn = self.get_client_type('cloudformation', self.region_name)

+ return self.conn

+

+

+class BaseCloudFormationOperator(BaseOperator):

+ """

+ Base operator for CloudFormation operations.

+

+ :param params: parameters to be passed to CloudFormation.

+ :type params: dict

+ :param aws_conn_id: aws connection to use

+ :type aws_conn_id: str

+ """

+ template_fields: List[str] = []

+ template_ext = ()

+ ui_color = '#1d472b'

+ ui_fgcolor = '#FFF'

+

+ @apply_defaults

+ def __init__(

+ self,

+ params,

+ aws_conn_id='aws_default',

+ *args, **kwargs):

+ super().__init__(*args, **kwargs)

+ self.params = params

+ self.aws_conn_id = aws_conn_id

+

+ def execute(self, context):

+ self.log.info('Parameters: %s', self.params)

+

+ self.cloudformation_op(CloudFormationHook(aws_conn_id=self.aws_conn_id).get_conn())

+

+ def cloudformation_op(self, cloudformation):

+ """

+ This is the main method that runs the CloudFormation operation.

+ """

+ raise NotImplementedError()

+

+

+class CloudFormationCreateStackOperator(BaseCloudFormationOperator):

+ """

+ An operator that creates a CloudFormation stack.

+

+ :param params: parameters to be passed to CloudFormation. For possible arguments see:

+ https://boto3.amazonaws.com/v1/documentation/api/latest/reference/services/cloudformation.html#CloudFormation.Client.create_stack

+ :type params: dict

+ :param aws_conn_id: aws connection to use

+ :type aws_conn_id: str

+ """

+ template_fields: List[str] = []

+ template_ext = ()

+ ui_color = '#6b9659'

+

+ @apply_defaults

+ def __init__(

+ self,

+ params,

+ aws_conn_id='aws_default',

+ *args, **kwargs):

+ super().__init__(params=params, aws_conn_id=aws_conn_id, *args, **kwargs)

+

+ def cloudformation_op(self, cloudformation):

+ cloudformation.create_stack(**self.params)

+

+

+class CloudFormationDeleteStackOperator(BaseCloudFormationOperator):

+ """

+ An operator that deletes a CloudFormation stack.

+

+ :param params: parameters to be passed to CloudFormation. For possible arguments see:

+ https://boto3.amazonaws.com/v1/documentation/api/latest/reference/services/cloudformation.html#CloudFormation.Client.delete_stack

+ :type params: dict

+ :param aws_conn_id: aws connection to use

+ :type aws_conn_id: str

+ """

+ template_fields: List[str] = []

+ template_ext = ()

+ ui_color = '#1d472b'

+ ui_fgcolor = '#FFF'

+

+ @apply_defaults

+ def __init__(

+ self,

+ params,

+ aws_conn_id='aws_default',

+ *args, **kwargs):

+ super().__init__(params=params, aws_conn_id=aws_conn_id, *args, **kwargs)

+

+ def cloudformation_op(self, cloudformation):

+ cloudformation.delete_stack(**self.params)

+

+

+class BaseCloudFormationSensor(BaseSensorOperator):

+ """

+ Waits for a stack operation to complete on AWS CloudFormation.

+

+ :param stack_name: The name of the stack to wait for (templated)

+ :type stack_name: str

+ :param aws_conn_id: ID of the Airflow connection where credentials and extra configuration are

+ stored

+ :type aws_conn_id: str

+ :param poke_interval: Time in seconds that the job should wait between each try

+ :type poke_interval: int

+ """

+

+ @apply_defaults

+ def __init__(self,

+ stack_name,

+ complete_status,

+ in_progress_status,

+ aws_conn_id='aws_default',

+ poke_interval=30,

+ *args,

+ **kwargs):

+ super().__init__(poke_interval=poke_interval, *args, **kwargs)

+ self.aws_conn_id = aws_conn_id

+ self.stack_name = stack_name

+ self.complete_status = complete_status

+ self.in_progress_status = in_progress_status

+ self.hook = None

+

+ def poke(self, context):

+ """

+ Checks whether the stack in AWS CloudFormation has reached the expected complete status.

+ """

+ cloudformation = self.get_hook().get_conn()

+

+ self.log.info('Poking for stack %s', self.stack_name)

+

+ try:

+ stacks = cloudformation.describe_stacks(StackName=self.stack_name)['Stacks']

+ stack_status = stacks[0]['StackStatus']

+ if stack_status == self.complete_status:

+ return True

+ elif stack_status == self.in_progress_status:

+ return False

+ else:

+ raise ValueError(f'Stack {self.stack_name} in bad state: {stack_status}')

+ except ClientError as e:

+ if 'does not exist' in str(e):

+ if not self.allow_non_existing_stack_status():

+ raise ValueError(f'Stack {self.stack_name} does not exist')

+ else:

+ return True

+ else:

+ raise e

+

+ def get_hook(self):

+ """

+ Gets the CloudFormationHook

+ """

+ if not self.hook:

+ self.hook = CloudFormationHook(aws_conn_id=self.aws_conn_id)

+

+ return self.hook

+

+ def allow_non_existing_stack_status(self):

+ """

+ Returns whether the sensor should accept a "stack does not exist" response as success.

+ """

+ return False

+

+

+class CloudFormationCreateStackSensor(BaseCloudFormationSensor):

+ """