You are viewing a plain text version of this content. The canonical link for it is here.

Posted to commits@s2graph.apache.org by st...@apache.org on 2018/04/24 02:06:11 UTC

[01/16] incubator-s2graph git commit: merge mailing list and PR144 to

README.md

Repository: incubator-s2graph

Updated Branches:

refs/heads/master b21db657e -> b8f990504

merge mailing list and PR144 to README.md

Project: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/repo

Commit: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/commit/f4312882

Tree: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/tree/f4312882

Diff: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/diff/f4312882

Branch: refs/heads/master

Commit: f43128827080e6bdd0eb93b42ba978a4793961c8

Parents: 245335a

Author: DO YUNG YOON <st...@apache.org>

Authored: Fri Mar 30 11:54:19 2018 +0900

Committer: DO YUNG YOON <st...@apache.org>

Committed: Fri Mar 30 11:54:19 2018 +0900

----------------------------------------------------------------------

s2jobs/README.md | 318 ++++++++++++++++++++++++++++++++++++++++++++++++++

1 file changed, 318 insertions(+)

----------------------------------------------------------------------

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/f4312882/s2jobs/README.md

----------------------------------------------------------------------

diff --git a/s2jobs/README.md b/s2jobs/README.md

new file mode 100644

index 0000000..e201ad6

--- /dev/null

+++ b/s2jobs/README.md

@@ -0,0 +1,318 @@

+

+## S2Jobs

+

+S2Jobs is a collection of spark programs that connect S2Graph `WAL` to other systems.

+

+

+## Background

+

+By default, S2Graph publish all incoming data as `WAL` to Apache Kafka for users who want to subscribe `WAL`.

+

+There are many use cases of this `WAL`, but let's just start with simple example, such as **finding out the number of new edges created per minute(OLAP query).**

+

+One possible way is run full table scan on HBase using API, then group by each edge's `createdAt` property value, then count number of edges per each `createdAt` bucket, in this case minute.

+

+Running full table scan on HBase through RegionServer on same cluster that is serving lots of concurrent OLTP requests is prohibit, arguably.

+

+Instead one can subscribe `WAL` from kafka, and sink `WAL` into HDFS, which usually separate hadoop cluster from the cluster which run HBase region server for OLTP requests.

+

+Once `WAL` is available in separate cluster as file, by default the Spark DataFrame, answering above question becomes very easy with spark sql.

+

+```

+select MINUTE(timestamp), count(1)

+from wal

+where operation = 'insert'

+and timestamp between (${start_ts}, ${end_ts})

+```

+

+Above approach works, but there is usually few minutes of lag. If user want to reduce this lag, then it is also possible to subscribe `WAL` from kafka then ingest data into analytics platform such as Druid.

+

+S2Jobs intentionaly provide only interfaces and very basic implementation for connecting `WAL` to other system. It is up to users what system they would use for `WAL` and S2Jobs want the community to contribute this as they leverage S2Graph `WAL`.

+

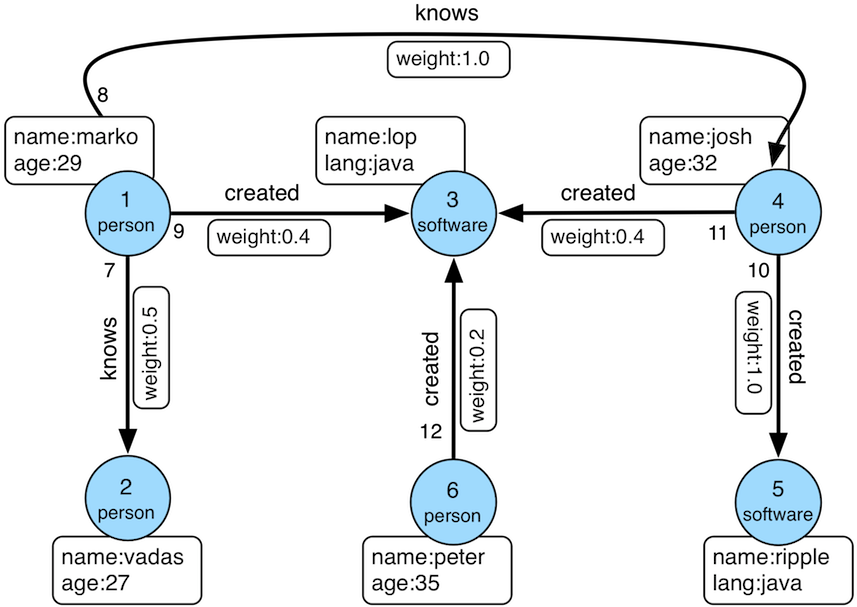

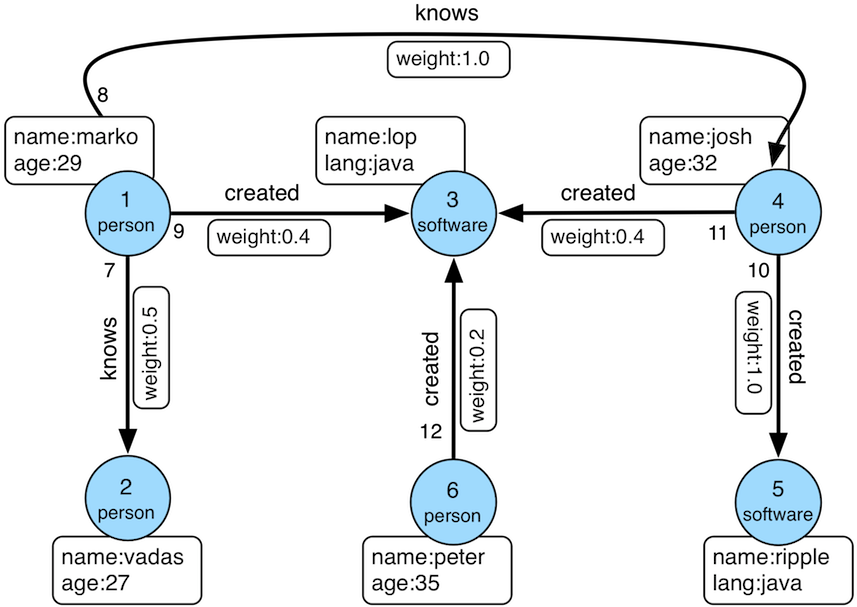

+## Basic Architecture

+

+One simple example data flow would look like following.

+

+<img width="1222" alt="screen shot 2018-03-29 at 3 04 21 pm" src="https://user-images.githubusercontent.com/1264825/38072702-84ef93dc-3362-11e8-9f47-db41f50467f0.png">

+

+Most of spark program available on S2jobs follow following abstraction.

+

+### Task

+`Process class` ? `Task trait` ? `TaskConf`?

+

+### Current Supported Task

+

+### Source

+

+- kakfa : built-in

+- file : built-in

+- hive : built-in

+

+### Process

+

+- sql : process spark sql

+- custom : implement if necessary

+

+### Sink

+

+- kafka : built-in

+

+- file : built-in

+

+- es : elasticsearch-spark

+

+- **s2graph** : added

+

+ - Use the mutateElement function of the S2graph object.

+ - S2graph related setting is required.

+ - put the config file in the classpath or specify it in the job description options.

+

+ ```

+ ex)

+ "type": "s2graph",

+ "options": {

+ "hbase.zookeeper.quorum": "",

+ "db.default.driver": "",

+ "db.default.url": ""

+ }

+

+ ```

+

+#### Data Schema for Kafka

+

+When using Kafka as data source consumer needs to parse it and later on interpret it, because of Kafka has no schema.

+

+When reading data from Kafka with structure streaming, the Dataframe has the following schema.

+

+```

+Column Type

+key binary

+value binary

+topic string

+partition int

+offset long

+timestamp long

+timestampType int

+

+```

+

+In the case of JSON format, data schema can be supported in config.

+You can create a schema by giving a string representing the struct type as JSON as shown below.

+

+```

+{

+ "type": "struct",

+ "fields": [

+ {

+ "name": "timestamp",

+ "type": "long",

+ "nullable": false,

+ "metadata": {}

+ },

+ {

+ "name": "operation",

+ "type": "string",

+ "nullable": true,

+ "metadata": {}

+ },

+ {

+ "name": "elem",

+ "type": "string",

+ "nullable": true,

+ "metadata": {}

+ },

+ {

+ "name": "from",

+ "type": "string",

+ "nullable": true,

+ "metadata": {}

+ },

+ {

+ "name": "to",

+ "type": "string",

+ "nullable": true,

+ "metadata": {}

+ },

+ {

+ "name": "label",

+ "type": "string",

+ "nullable": true,

+ "metadata": {}

+ },

+ {

+ "name": "service",

+ "type": "string",

+ "nullable": true,

+ "metadata": {}

+ },

+ {

+ "name": "props",

+ "type": "string",

+ "nullable": true,

+ "metadata": {}

+ }

+ ]

+}

+

+```

+

+

+----------

+

+### Job Description

+

+**Tasks** and **workflow** can be described in **job** description, and dependencies between tasks are defined by the name of the task specified in the inputs field

+

+>Note that this works was influenced by [airstream of Airbnb](https://www.slideshare.net/databricks/building-data-product-based-on-apache-spark-at-airbnb-with-jingwei-lu-and-liyin-tang).

+

+#### Json Spec

+

+```

+{

+ "name": "JOB_NAME",

+ "source": [

+ {

+ "name": "TASK_NAME",

+ "inputs": [],

+ "type": "SOURCE_TYPE",

+ "options": {

+ "KEY" : "VALUE"

+ }

+ }

+ ],

+ "process": [

+ {

+ "name": "TASK_NAME",

+ "inputs": ["INPUT_TASK_NAME"],

+ "type": "PROCESS_TYPE",

+ "options": {

+ "KEY" : "VALUE"

+ }

+ }

+ ],

+ "sink": [

+ {

+ "name": "TASK_NAME",

+ "inputs": ["INPUT_TASK_NAME"],

+ "type": "SINK_TYPE",

+ "options": {

+ "KEY" : "VALUE"

+ }

+ }

+ ]

+}

+

+```

+

+

+----------

+

+

+### Sample job

+

+#### 1. wallog trasnform (kafka to kafka)

+

+```

+{

+ "name": "kafkaJob",

+ "source": [

+ {

+ "name": "wal",

+ "inputs": [],

+ "type": "kafka",

+ "options": {

+ "kafka.bootstrap.servers" : "localhost:9092",

+ "subscribe": "s2graphInJson",

+ "maxOffsetsPerTrigger": "10000",

+ "format": "json",

+ "schema": "{\"type\":\"struct\",\"fields\":[{\"name\":\"timestamp\",\"type\":\"long\",\"nullable\":false,\"metadata\":{}},{\"name\":\"operation\",\"type\":\"string\",\"nullable\":true,\"metadata\":{}},{\"name\":\"elem\",\"type\":\"string\",\"nullable\":true,\"metadata\":{}},{\"name\":\"from\",\"type\":\"string\",\"nullable\":true,\"metadata\":{}},{\"name\":\"to\",\"type\":\"string\",\"nullable\":true,\"metadata\":{}},{\"name\":\"label\",\"type\":\"string\",\"nullable\":true,\"metadata\":{}},{\"name\":\"service\",\"type\":\"string\",\"nullable\":true,\"metadata\":{}},{\"name\":\"props\",\"type\":\"string\",\"nullable\":true,\"metadata\":{}}]}"

+ }

+ }

+ ],

+ "process": [

+ {

+ "name": "transform",

+ "inputs": ["wal"],

+ "type": "sql",

+ "options": {

+ "sql": "SELECT timestamp, `from` as userId, to as itemId, label as action FROM wal WHERE label = 'user_action'"

+ }

+ }

+ ],

+ "sink": [

+ {

+ "name": "kafka_sink",

+ "inputs": ["transform"],

+ "type": "kafka",

+ "options": {

+ "kafka.bootstrap.servers" : "localhost:9092",

+ "topic": "s2graphTransform",

+ "format": "json"

+ }

+ }

+ ]

+}

+

+```

+

+#### 2. wallog transform (hdfs to hdfs)

+

+```

+{

+ "name": "hdfsJob",

+ "source": [

+ {

+ "name": "wal",

+ "inputs": [],

+ "type": "file",

+ "options": {

+ "paths": "/wal",

+ "format": "parquet"

+ }

+ }

+ ],

+ "process": [

+ {

+ "name": "transform",

+ "inputs": ["wal"],

+ "type": "sql",

+ "options": {

+ "sql": "SELECT timestamp, `from` as userId, to as itemId, label as action FROM wal WHERE label = 'user_action'"

+ }

+ }

+ ],

+ "sink": [

+ {

+ "name": "hdfs_sink",

+ "inputs": ["transform"],

+ "type": "file",

+ "options": {

+ "path": "/wal_transform",

+ "format": "json"

+ }

+ }

+ ]

+}

+

+```

+

+

+----------

+

+

+### Launch Job

+

+When submitting spark job with assembly jar, use these parameters with the job description file path.

+(currently only support file type)

+

+```

+// main class : org.apache.s2graph.s2jobs.JobLauncher

+Usage: run [file|db] [options]

+ -n, --name <value> job display name

+Command: file [options]

+get config from file

+ -f, --confFile <file> configuration file

+Command: db [options]

+get config from db

+ -i, --jobId <jobId> configuration file

+```

\ No newline at end of file

[03/16] incubator-s2graph git commit: add s2graphql,

h2 service to launch

Posted by st...@apache.org.

add s2graphql, h2 service to launch

Project: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/repo

Commit: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/commit/f8c323b8

Tree: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/tree/f8c323b8

Diff: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/diff/f8c323b8

Branch: refs/heads/master

Commit: f8c323b83a16e58dd9e4f24ecf108e0ab3897010

Parents: 0c9f74f

Author: Chul Kang <el...@apache.org>

Authored: Tue Apr 10 17:45:22 2018 +0900

Committer: Chul Kang <el...@apache.org>

Committed: Wed Apr 11 02:57:40 2018 +0900

----------------------------------------------------------------------

bin/s2graph-daemon.sh | 8 +++++++-

bin/s2graph.sh | 5 +++--

bin/start-s2graph.sh | 7 ++++++-

bin/stop-s2graph.sh | 5 ++++-

4 files changed, 20 insertions(+), 5 deletions(-)

----------------------------------------------------------------------

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/f8c323b8/bin/s2graph-daemon.sh

----------------------------------------------------------------------

diff --git a/bin/s2graph-daemon.sh b/bin/s2graph-daemon.sh

index 18c327b..d0ffe80 100755

--- a/bin/s2graph-daemon.sh

+++ b/bin/s2graph-daemon.sh

@@ -20,7 +20,7 @@

usage="This script is intended to be used by other scripts in this directory."

usage=$"$usage\n Please refer to start-s2graph.sh and stop-s2graph.sh"

usage=$"$usage\n Usage: s2graph-daemon.sh (start|stop|restart|run|status)"

-usage="$usage (s2rest_play|s2rest_netty|...) <args...>"

+usage="$usage (s2rest_play|s2rest_netty|s2graphql|...) <args...>"

bin=$(cd "$(dirname "${BASH_SOURCE-$0}")">/dev/null; pwd)

@@ -65,9 +65,15 @@ case $service in

s2rest_play)

main="play.core.server.NettyServer"

;;

+s2graphql)

+ main="org.apache.s2graph.graphql.Server"

+ ;;

hbase)

main="org.apache.hadoop.hbase.master.HMaster"

;;

+h2)

+ main="org.h2.tools.Server"

+ ;;

*)

panic "Unknown service: $service"

esac

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/f8c323b8/bin/s2graph.sh

----------------------------------------------------------------------

diff --git a/bin/s2graph.sh b/bin/s2graph.sh

index 58ddc28..bef373a 100755

--- a/bin/s2graph.sh

+++ b/bin/s2graph.sh

@@ -17,6 +17,7 @@

# starts/stops/restarts an S2Graph server

usage="Usage: s2graph.sh (start|stop|restart|run|status)"

+usage="$usage (s2rest_play|s2rest_netty|s2graphql|...)"

bin=$(cd "$(dirname "${BASH_SOURCE-$0}")">/dev/null; pwd)

@@ -25,8 +26,8 @@ bin=$(cd "$(dirname "${BASH_SOURCE-$0}")">/dev/null; pwd)

. $bin/s2graph-common.sh

# show usage when executed without enough arguments

-if [ $# -lt 1 ]; then

+if [ $# -lt 2 ]; then

panic $usage

fi

-$bin/s2graph-daemon.sh $1 s2rest_play

+$bin/s2graph-daemon.sh $1 $2

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/f8c323b8/bin/start-s2graph.sh

----------------------------------------------------------------------

diff --git a/bin/start-s2graph.sh b/bin/start-s2graph.sh

index 23ab1ab..1347a1a 100755

--- a/bin/start-s2graph.sh

+++ b/bin/start-s2graph.sh

@@ -24,4 +24,9 @@ bin=$(cd "$(dirname "${BASH_SOURCE-$0}")">/dev/null; pwd)

. $bin/s2graph-common.sh

$bin/hbase-standalone.sh start

-$bin/s2graph.sh start

+$bin/s2graph-daemon.sh start h2

+

+service="s2rest_play"

+[ $# -gt 0 ] && { service=$1; }

+

+$bin/s2graph.sh start ${service}

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/f8c323b8/bin/stop-s2graph.sh

----------------------------------------------------------------------

diff --git a/bin/stop-s2graph.sh b/bin/stop-s2graph.sh

index 124c192..09f7426 100755

--- a/bin/stop-s2graph.sh

+++ b/bin/stop-s2graph.sh

@@ -23,5 +23,8 @@ bin=$(cd "$(dirname "${BASH_SOURCE-$0}")">/dev/null; pwd)

. $bin/s2graph-env.sh

. $bin/s2graph-common.sh

-$bin/s2graph.sh stop

+service="s2rest_play"

+[ $# -gt 0 ] && { service=$1; }

+$bin/s2graph.sh stop ${service}

+$bin/s2graph-daemon.sh stop h2

$bin/hbase-standalone.sh stop

[05/16] incubator-s2graph git commit: add column options for FileSink

Posted by st...@apache.org.

add column options for FileSink

Project: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/repo

Commit: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/commit/bf05d528

Tree: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/tree/bf05d528

Diff: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/diff/bf05d528

Branch: refs/heads/master

Commit: bf05d5288307c2d2099d3269ef598077638973b2

Parents: 9fb1fee

Author: Chul Kang <el...@apache.org>

Authored: Tue Apr 10 17:46:58 2018 +0900

Committer: Chul Kang <el...@apache.org>

Committed: Wed Apr 11 02:57:55 2018 +0900

----------------------------------------------------------------------

s2jobs/src/main/scala/org/apache/s2graph/s2jobs/task/Source.scala | 3 +++

1 file changed, 3 insertions(+)

----------------------------------------------------------------------

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/bf05d528/s2jobs/src/main/scala/org/apache/s2graph/s2jobs/task/Source.scala

----------------------------------------------------------------------

diff --git a/s2jobs/src/main/scala/org/apache/s2graph/s2jobs/task/Source.scala b/s2jobs/src/main/scala/org/apache/s2graph/s2jobs/task/Source.scala

index d2ca8ef..1801fe9 100644

--- a/s2jobs/src/main/scala/org/apache/s2graph/s2jobs/task/Source.scala

+++ b/s2jobs/src/main/scala/org/apache/s2graph/s2jobs/task/Source.scala

@@ -81,12 +81,15 @@ class FileSource(conf:TaskConf) extends Source(conf) {

import org.apache.s2graph.s2jobs.Schema._

val paths = conf.options("paths").split(",")

val format = conf.options.getOrElse("format", DEFAULT_FORMAT)

+ val columnsOpt = conf.options.get("columns")

format match {

case "edgeLog" =>

ss.read.format("com.databricks.spark.csv").option("delimiter", "\t")

.schema(BulkLoadSchema).load(paths: _*)

case _ => ss.read.format(format).load(paths: _*)

+ val df = ss.read.format(format).load(paths: _*)

+ if (columnsOpt.isDefined) df.toDF(columnsOpt.get.split(",").map(_.trim): _*) else df

}

}

}

[04/16] incubator-s2graph git commit: modify h2db option to launch

using server mode

Posted by st...@apache.org.

modify h2db option to launch using server mode

Project: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/repo

Commit: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/commit/9fb1fee6

Tree: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/tree/9fb1fee6

Diff: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/diff/9fb1fee6

Branch: refs/heads/master

Commit: 9fb1fee6dd95bd349fb0d460739aa2ffb34b09cc

Parents: f8c323b

Author: Chul Kang <el...@apache.org>

Authored: Tue Apr 10 17:46:22 2018 +0900

Committer: Chul Kang <el...@apache.org>

Committed: Wed Apr 11 02:57:49 2018 +0900

----------------------------------------------------------------------

conf/application.conf | 3 ++-

1 file changed, 2 insertions(+), 1 deletion(-)

----------------------------------------------------------------------

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/9fb1fee6/conf/application.conf

----------------------------------------------------------------------

diff --git a/conf/application.conf b/conf/application.conf

index 5dfa653..1ffea4a 100644

--- a/conf/application.conf

+++ b/conf/application.conf

@@ -72,6 +72,7 @@

# ex) to use mysql as metastore, change db.default.driver = "com.mysql.jdbc.Driver"

# and db.default.url to point to jdbc connection.

db.default.driver = "org.h2.Driver"

-db.default.url = "jdbc:h2:file:./var/metastore;MODE=MYSQL"

+//db.default.url = "jdbc:h2:file:./var/metastore;MODE=MYSQL"

+db.default.url = "jdbc:h2:tcp://localhost/~/graph-dev;MODE=MYSQL"

db.default.user = "sa"

db.default.password = "sa"

[07/16] incubator-s2graph git commit: add example

Posted by st...@apache.org.

add example

Project: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/repo

Commit: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/commit/c97986f3

Tree: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/tree/c97986f3

Diff: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/diff/c97986f3

Branch: refs/heads/master

Commit: c97986f32dbbe05e6487a328ce766228ed5f33ea

Parents: cbc23ec

Author: Chul Kang <el...@apache.org>

Authored: Wed Apr 11 02:52:40 2018 +0900

Committer: Chul Kang <el...@apache.org>

Committed: Wed Apr 11 02:58:15 2018 +0900

----------------------------------------------------------------------

example/common.sh | 54 +++++++++++

example/create_schema.sh | 33 +++++++

example/import_data.sh | 24 +++++

example/movielens/desc.txt | 8 ++

example/movielens/generate_input.sh | 11 +++

example/movielens/jobdesc.template | 106 +++++++++++++++++++++

example/movielens/schema/edge.rated.graphql | 42 ++++++++

example/movielens/schema/edge.related.graphql | 40 ++++++++

example/movielens/schema/edge.tagged.graphql | 37 +++++++

example/movielens/schema/service.graphql | 14 +++

example/movielens/schema/vertex.movie.graphql | 30 ++++++

example/movielens/schema/vertex.tag.graphql | 16 ++++

example/movielens/schema/vertex.user.graphql | 16 ++++

example/prepare.sh | 41 ++++++++

example/run.sh | 27 ++++++

15 files changed, 499 insertions(+)

----------------------------------------------------------------------

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/c97986f3/example/common.sh

----------------------------------------------------------------------

diff --git a/example/common.sh b/example/common.sh

new file mode 100644

index 0000000..6b5b755

--- /dev/null

+++ b/example/common.sh

@@ -0,0 +1,54 @@

+#!/usr/bin/env bash

+ROOTDIR=`pwd`/..

+WORKDIR=`pwd`

+PREFIX="[s2graph] "

+REST="localhost:8000"

+

+msg() {

+ LEVEL=$1

+ MSG=$2

+ TIME=`date +"%Y-%m-%d %H:%M:%S"`

+ echo "${PREFIX} $LEVEL $MSG"

+}

+

+q() {

+ MSG=$1

+ TIME=`date +"%Y-%m-%d %H:%M:%S"`

+ echo ""

+ read -r -p "${PREFIX} >>> $MSG " var

+}

+

+info() { MSG=$1; msg "" "$MSG";}

+warn() { MSG=$1; msg "[WARN] " "$MSG";}

+error() { MSG=$1; msg "[ERROR] " "$MSG"; exit -1;}

+

+usage() {

+ echo "Usage: $0 [SERVICE_NAME]"

+ exit -1

+}

+

+graphql_rest() {

+ file=$1

+ value=`cat ${file} | sed 's/\"/\\\"/g'`

+ query=$(echo $value|tr -d '\n')

+

+ echo $query

+ curl -i -XPOST $REST/graphql -H 'content-type: application/json' -d "

+ {

+ \"query\": \"$query\",

+ \"variables\": null

+ }"

+ sleep 5

+}

+get_services() {

+ curl -i -XPOST $REST/graphql -H 'content-type: application/json' -d '

+ {

+ "query": "query{Management{Services{id name }}}"

+ }'

+}

+get_labels() {

+ curl -i -XPOST $REST/graphql -H 'content-type: application/json' -d '

+ {

+ "query": "query{Management{Labels {id name}}}"

+ }'

+}

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/c97986f3/example/create_schema.sh

----------------------------------------------------------------------

diff --git a/example/create_schema.sh b/example/create_schema.sh

new file mode 100755

index 0000000..9f511cb

--- /dev/null

+++ b/example/create_schema.sh

@@ -0,0 +1,33 @@

+#!/usr/bin/env bash

+source common.sh

+

+[ $# -ne 1 ] && { usage; }

+

+SERVICE=$1

+SERVICE_HOME=${WORKDIR}/${SERVICE}

+SCHEMA_HOME=${SERVICE_HOME}/schema

+

+info "schema dir : $SCHEMA_HOME"

+

+q "generate input >>> "

+cd ${SERVICE_HOME}

+./generate_input.sh

+

+q "create service >>> "

+graphql_rest ${SCHEMA_HOME}/service.graphql

+get_services

+

+q "create vertices >>>"

+for file in `ls ${SCHEMA_HOME}/vertex.*`; do

+ info ""

+ info "file: $file"

+ graphql_rest $file

+done

+

+q "create edges >>> "

+for file in `ls ${SCHEMA_HOME}/edge.*`; do

+ info ""

+ info "file: $file"

+ graphql_rest $file

+done

+get_labels

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/c97986f3/example/import_data.sh

----------------------------------------------------------------------

diff --git a/example/import_data.sh b/example/import_data.sh

new file mode 100755

index 0000000..6822c00

--- /dev/null

+++ b/example/import_data.sh

@@ -0,0 +1,24 @@

+#!/usr/bin/env bash

+source common.sh

+

+[ $# -ne 1 ] && { usage; }

+

+SERVICE=$1

+JOBDESC_TPL=${WORKDIR}/${SERVICE}/jobdesc.template

+JOBDESC=${WORKDIR}/${SERVICE}/jobdesc.json

+WORKING_DIR=`pwd`

+JAR=`ls ${ROOTDIR}/s2jobs/target/scala-2.11/s2jobs-assembly*.jar`

+

+info "WORKING_DIR : $WORKING_DIR"

+info "JAR : $JAR"

+

+sed -e "s/\[=WORKING_DIR\]/${WORKING_DIR//\//\\/}/g" $JOBDESC_TPL > $JOBDESC

+

+unset HADOOP_CONF_DIR

+info "spark submit.."

+${SPARK_HOME}/bin/spark-submit \

+ --class org.apache.s2graph.s2jobs.JobLauncher \

+ --master local[2] \

+ --driver-class-path h2-1.4.192.jar \

+ $JAR file -f $JOBDESC -n SAMPLEJOB:$SERVICE

+

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/c97986f3/example/movielens/desc.txt

----------------------------------------------------------------------

diff --git a/example/movielens/desc.txt b/example/movielens/desc.txt

new file mode 100644

index 0000000..0da9a86

--- /dev/null

+++ b/example/movielens/desc.txt

@@ -0,0 +1,8 @@

+MovieLens

+GroupLens Research has collected and made available rating data sets from the MovieLens web site (http://movielens.org).

+The data sets were collected over various periods of time, depending on the size of the set.

+

+This dataset (ml-latest-small) describes 5-star rating and free-text tagging activity from MovieLens, a movie recommendation service.

+It contains 100004 ratings and 1296 tag applications across 9125 movies.

+These data were created by 671 users between January 09, 1995 and October 16, 2016.

+This dataset was generated on October 17, 2016.

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/c97986f3/example/movielens/generate_input.sh

----------------------------------------------------------------------

diff --git a/example/movielens/generate_input.sh b/example/movielens/generate_input.sh

new file mode 100755

index 0000000..b28640e

--- /dev/null

+++ b/example/movielens/generate_input.sh

@@ -0,0 +1,11 @@

+#!/usr/bin/env bash

+

+INPUT=input

+mkdir -p ${INPUT}

+rm -rf ${INPUT}/*

+cd ${INPUT}

+wget http://files.grouplens.org/datasets/movielens/ml-latest-small.zip

+unzip ml-latest-small.zip

+rm -f ml-latest-small.zip

+mv ml-latest-small/* .

+rmdir ml-latest-small

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/c97986f3/example/movielens/jobdesc.template

----------------------------------------------------------------------

diff --git a/example/movielens/jobdesc.template b/example/movielens/jobdesc.template

new file mode 100644

index 0000000..ed62383

--- /dev/null

+++ b/example/movielens/jobdesc.template

@@ -0,0 +1,106 @@

+{

+ "name": "import_movielens",

+ "source": [

+ {

+ "name": "movies",

+ "inputs": [],

+ "type": "file",

+ "options": {

+ "paths": "file://[=WORKING_DIR]/movielens/input/movies.csv",

+ "format": "csv",

+ "columns": "movieId,title,genres"

+ }

+ },

+ {

+ "name": "ratings",

+ "inputs": [],

+ "type": "file",

+ "options": {

+ "paths": "file://[=WORKING_DIR]/movielens/input/ratings.csv",

+ "format": "csv",

+ "columns": "userId,movieId,rating,timestamp"

+ }

+ },

+ {

+ "name": "tags",

+ "inputs": [],

+ "type": "file",

+ "options": {

+ "paths": "file://[=WORKING_DIR]/movielens/input/tags.csv",

+ "format": "csv",

+ "columns": "userId,movieId,tag,timestamp"

+ }

+ }

+ ],

+ "process": [

+ {

+ "name": "vertex_movie",

+ "inputs": [

+ "movies"

+ ],

+ "type": "sql",

+ "options": {

+ "sql": "SELECT \n(unix_timestamp() * 1000) as timestamp, \n'v' as elem, \nCAST(movieId AS LONG) AS id, \n'movielens' as service, \n'Movie' as column, \nto_json(\nnamed_struct(\n 'title', title, \n 'genres', genres\n)\n) as props \nFROM movies \nWHERE movieId != 'movieId'"

+ }

+ },

+ {

+ "name": "edge_rated",

+ "inputs": [

+ "ratings"

+ ],

+ "type": "sql",

+ "options": {

+ "sql": "SELECT \nCAST(timestamp AS LONG) * 1000 AS timestamp, \n'e' as elem, \nCAST(userId AS LONG) as `from`, \nCAST(movieId AS LONG) as to, \n'rated' as label, \nto_json(\nnamed_struct(\n 'score', CAST(rating as float)\n)\n) as props \nFROM ratings \nWHERE userId != 'userId'"

+ }

+ },

+ {

+ "name": "edge_tagged",

+ "inputs": [

+ "tags"

+ ],

+ "type": "sql",

+ "options": {

+ "sql": "SELECT \nCAST(timestamp AS LONG) * 1000 AS timestamp, \n'e' as elem, \nCAST(userId AS LONG) as `from`, \nCAST(movieId AS LONG) as to, \n'tagged' as label, \nto_json(\nnamed_struct('tag', tag)\n) as props \nFROM tags \nWHERE userId != 'userId'"

+ }

+ },

+ {

+ "name": "edges",

+ "inputs": [

+ "edge_rated",

+ "edge_tagged"

+ ],

+ "type": "sql",

+ "options": {

+ "sql": "SELECT * FROM edge_rated UNION SELECT * FROM edge_tagged"

+ }

+ }

+ ],

+ "sink": [

+ {

+ "name": "vertex_sink",

+ "inputs": [

+ "vertex_movie"

+ ],

+ "type": "s2graph",

+ "options": {

+ "db.default.driver":"org.h2.Driver",

+ "db.default.url": "jdbc:h2:tcp://localhost/./var/metastore;MODE=MYSQL",

+ "s2.spark.sql.streaming.sink.grouped.size": "10",

+ "s2.spark.sql.streaming.sink.wait.time": "10"

+ }

+ },

+ {

+ "name": "edge_sink",

+ "inputs": [

+ "edges"

+ ],

+ "type": "s2graph",

+ "options": {

+ "db.default.driver":"org.h2.Driver",

+ "db.default.url": "jdbc:h2:tcp://localhost/./var/metastore;MODE=MYSQL",

+ "s2.spark.sql.streaming.sink.grouped.size": "10",

+ "s2.spark.sql.streaming.sink.wait.time": "10"

+ }

+ }

+ ]

+}

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/c97986f3/example/movielens/schema/edge.rated.graphql

----------------------------------------------------------------------

diff --git a/example/movielens/schema/edge.rated.graphql b/example/movielens/schema/edge.rated.graphql

new file mode 100644

index 0000000..6e3c40f

--- /dev/null

+++ b/example/movielens/schema/edge.rated.graphql

@@ -0,0 +1,42 @@

+mutation{

+ Management{

+ createLabel(

+ name:"rated"

+ sourceService: {

+ movielens: {

+ columnName: User

+ }

+ }

+ targetService: {

+ movielens: {

+ columnName: Movie

+ }

+ }

+ serviceName: movielens

+ consistencyLevel: strong

+ props:[

+ {

+ name: "score"

+ dataType: double

+ defaultValue: "0.0"

+ storeInGlobalIndex: true

+ }

+ ]

+ indices:{

+ name:"_PK"

+ propNames:["score"]

+ }

+ ) {

+ isSuccess

+ message

+ object{

+ id

+ name

+ props{

+ name

+ }

+ }

+ }

+ }

+}

+

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/c97986f3/example/movielens/schema/edge.related.graphql

----------------------------------------------------------------------

diff --git a/example/movielens/schema/edge.related.graphql b/example/movielens/schema/edge.related.graphql

new file mode 100644

index 0000000..baf6e54

--- /dev/null

+++ b/example/movielens/schema/edge.related.graphql

@@ -0,0 +1,40 @@

+mutation{

+ Management{

+ createLabel(

+ name:"related"

+ sourceService: {

+ movielens: {

+ columnName: Movie

+ }

+ }

+ targetService: {

+ movielens: {

+ columnName: Tag

+ }

+ }

+ serviceName: movielens

+ consistencyLevel: weak

+ props:{

+ name: "relevance"

+ dataType: double

+ defaultValue: "0.0"

+ storeInGlobalIndex: true

+ }

+ indices:{

+ name:"_PK"

+ propNames:["relevance"]

+ }

+

+ ) {

+ isSuccess

+ message

+ object{

+ id

+ name

+ props{

+ name

+ }

+ }

+ }

+ }

+}

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/c97986f3/example/movielens/schema/edge.tagged.graphql

----------------------------------------------------------------------

diff --git a/example/movielens/schema/edge.tagged.graphql b/example/movielens/schema/edge.tagged.graphql

new file mode 100644

index 0000000..da5e997

--- /dev/null

+++ b/example/movielens/schema/edge.tagged.graphql

@@ -0,0 +1,37 @@

+mutation{

+ Management{

+ createLabel(

+ name:"tagged"

+ sourceService: {

+ movielens: {

+ columnName: User

+ }

+ }

+ targetService: {

+ movielens: {

+ columnName: Movie

+ }

+ }

+ serviceName: movielens

+ consistencyLevel: weak

+ props:[

+ {

+ name: "tag"

+ dataType: string

+ defaultValue: ""

+ storeInGlobalIndex: true

+ }

+ ]

+ ) {

+ isSuccess

+ message

+ object{

+ id

+ name

+ props{

+ name

+ }

+ }

+ }

+ }

+}

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/c97986f3/example/movielens/schema/service.graphql

----------------------------------------------------------------------

diff --git a/example/movielens/schema/service.graphql b/example/movielens/schema/service.graphql

new file mode 100644

index 0000000..ed7e157

--- /dev/null

+++ b/example/movielens/schema/service.graphql

@@ -0,0 +1,14 @@

+mutation{

+ Management{

+ createService(

+ name:"movielens"

+ ){

+ isSuccess

+ message

+ object{

+ id

+ name

+ }

+ }

+ }

+}

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/c97986f3/example/movielens/schema/vertex.movie.graphql

----------------------------------------------------------------------

diff --git a/example/movielens/schema/vertex.movie.graphql b/example/movielens/schema/vertex.movie.graphql

new file mode 100644

index 0000000..3702a1e

--- /dev/null

+++ b/example/movielens/schema/vertex.movie.graphql

@@ -0,0 +1,30 @@

+mutation{

+ Management{

+ createServiceColumn(

+ serviceName:movielens

+ columnName:"Movie"

+ columnType: long

+ props: [

+ {

+ name: "title"

+ dataType: string

+ defaultValue: ""

+ storeInGlobalIndex: true

+ },

+ {

+ name: "genres"

+ dataType: string

+ defaultValue: ""

+ storeInGlobalIndex: true

+ }

+ ]

+ ){

+ isSuccess

+ message

+ object{

+ id

+ name

+ }

+ }

+ }

+}

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/c97986f3/example/movielens/schema/vertex.tag.graphql

----------------------------------------------------------------------

diff --git a/example/movielens/schema/vertex.tag.graphql b/example/movielens/schema/vertex.tag.graphql

new file mode 100644

index 0000000..05bd11c

--- /dev/null

+++ b/example/movielens/schema/vertex.tag.graphql

@@ -0,0 +1,16 @@

+mutation{

+ Management{

+ createServiceColumn(

+ serviceName:movielens

+ columnName:"Tag"

+ columnType: string

+ ){

+ isSuccess

+ message

+ object{

+ id

+ name

+ }

+ }

+ }

+}

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/c97986f3/example/movielens/schema/vertex.user.graphql

----------------------------------------------------------------------

diff --git a/example/movielens/schema/vertex.user.graphql b/example/movielens/schema/vertex.user.graphql

new file mode 100644

index 0000000..bba8a16

--- /dev/null

+++ b/example/movielens/schema/vertex.user.graphql

@@ -0,0 +1,16 @@

+mutation{

+ Management{

+ createServiceColumn(

+ serviceName:movielens

+ columnName:"User"

+ columnType: long

+ ){

+ isSuccess

+ message

+ object{

+ id

+ name

+ }

+ }

+ }

+}

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/c97986f3/example/prepare.sh

----------------------------------------------------------------------

diff --git a/example/prepare.sh b/example/prepare.sh

new file mode 100755

index 0000000..f35c94c

--- /dev/null

+++ b/example/prepare.sh

@@ -0,0 +1,41 @@

+#!/usr/bin/env bash

+source common.sh

+

+q "1. s2graphql is running?"

+status=`curl -s -o /dev/null -w "%{http_code}" "$REST"`

+if [ $status != '200' ]; then

+ warn "s2graphql not running.. "

+

+ cd $ROOTDIR

+ output="$(ls target/apache-s2graph-*-incubating-bin)"

+ if [ -z "$output" ]; then

+ info "build package..."

+ sbt clean package

+ fi

+

+ info "now we will launch s2graphql using build scripts"

+ cd target/apache-s2graph-*-incubating-bin

+ ./bin/start-s2graph.sh s2graphql

+else

+ info "s2graphql is running!!"

+fi

+

+q "2. s2jobs assembly jar exists?"

+jar=`ls $ROOTDIR/s2jobs/target/scala-2.11/s2jobs-assembly*.jar`

+if [ -z $jar ]; then

+ warn "s2jobs assembly not exists.."

+ info "start s2jobs assembly now"

+ cd $ROOTDIR

+ sbt 'project s2jobs' clean assembly

+else

+ info "s2jobs assembly exists!!"

+fi

+

+q "3. SPARK_HOME is set?"

+if [ -z $SPARK_HOME ]; then

+ error "it must be setting SPARK_HOME environment variable"

+else

+ info "SPARK_HOME exists!! (${SPARK_HOME})"

+fi

+

+info "prepare finished.."

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/c97986f3/example/run.sh

----------------------------------------------------------------------

diff --git a/example/run.sh b/example/run.sh

new file mode 100755

index 0000000..08f2989

--- /dev/null

+++ b/example/run.sh

@@ -0,0 +1,27 @@

+#!/usr/bin/env bash

+source common.sh

+

+SERVICE="movielens"

+[ $# -gt 0 ] && { SERVICE=$1; }

+DESC=`cat $SERVICE/desc.txt`

+

+info ""

+info ""

+info "Let's try to create the toy project '$SERVICE' using s2graphql and s2jobs."

+info ""

+while IFS='' read -r line || [[ -n "$line" ]]; do

+ info "$line"

+done < "$SERVICE/desc.txt"

+

+q "First of all, we will check prerequisites"

+./prepare.sh $SERVICE

+[ $? -ne 0 ] && { exit -1; }

+

+q "And now, we create vertex and edge schema using graphql"

+./create_schema.sh $SERVICE

+[ $? -ne 0 ] && { exit -1; }

+

+q "Finally, we import example data to service"

+./import_data.sh $SERVICE

+[ $? -ne 0 ] && { exit -1; }

+

[02/16] incubator-s2graph git commit: update README.

Posted by st...@apache.org.

update README.

Project: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/repo

Commit: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/commit/0c9f74fd

Tree: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/tree/0c9f74fd

Diff: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/diff/0c9f74fd

Branch: refs/heads/master

Commit: 0c9f74fda42aa7b319cc3b11c2f504d5d799191e

Parents: f431288

Author: DO YUNG YOON <st...@apache.org>

Authored: Fri Apr 6 19:08:16 2018 +0900

Committer: DO YUNG YOON <st...@apache.org>

Committed: Fri Apr 6 20:25:45 2018 +0900

----------------------------------------------------------------------

s2jobs/README.md | 237 +++++++++++++++++++++++++++++---------------------

1 file changed, 139 insertions(+), 98 deletions(-)

----------------------------------------------------------------------

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/0c9f74fd/s2jobs/README.md

----------------------------------------------------------------------

diff --git a/s2jobs/README.md b/s2jobs/README.md

index e201ad6..f79abb7 100644

--- a/s2jobs/README.md

+++ b/s2jobs/README.md

@@ -1,84 +1,127 @@

-## S2Jobs

-S2Jobs is a collection of spark programs that connect S2Graph `WAL` to other systems.

+

+# S2Jobs

+S2Jobs is a collection of spark programs which can be used to support `online transaction processing(OLAP)` on S2Graph.

-## Background

+There are currently two ways to run `OLAP` on S2Graph.

-By default, S2Graph publish all incoming data as `WAL` to Apache Kafka for users who want to subscribe `WAL`.

-There are many use cases of this `WAL`, but let's just start with simple example, such as **finding out the number of new edges created per minute(OLAP query).**

+----------

+

+

+## 1. HBase Snapshots

+

+HBase provides excellent support for creating table [snapshot](http://hbase.apache.org/0.94/book/ops.snapshots.html)

-One possible way is run full table scan on HBase using API, then group by each edge's `createdAt` property value, then count number of edges per each `createdAt` bucket, in this case minute.

+S2Jobs provide `S2GraphSource` class which can create `Spark DataFrame` from `S2Edge/S2Vertex` stored in HBase Snapshot.

-Running full table scan on HBase through RegionServer on same cluster that is serving lots of concurrent OLTP requests is prohibit, arguably.

+Instead of providing graph algorithms such as `PageRank` by itself, S2Graph let users connect graph stored in S2Graph to their favorite analytics platform, for example [**`Apache Spark`**](https://spark.apache.org/).

-Instead one can subscribe `WAL` from kafka, and sink `WAL` into HDFS, which usually separate hadoop cluster from the cluster which run HBase region server for OLTP requests.

+Once user finished processing, S2Jobs provide `S2GraphSink` to connect analyzed data into S2Graph back.

-Once `WAL` is available in separate cluster as file, by default the Spark DataFrame, answering above question becomes very easy with spark sql.

+

+

+

+This architecture seems complicated at the first glace, but note that this approach has lots of advantages on performance and stability on `OLTP` cluster especially comparing to using HBase client API `Scan`.

+

+Here is result `DataFrame` schema for `S2Vertex` and `S2Edge`.

```

-select MINUTE(timestamp), count(1)

-from wal

-where operation = 'insert'

-and timestamp between (${start_ts}, ${end_ts})

+S2Vertex

+root

+ |-- timestamp: long (nullable = false)

+ |-- operation: string (nullable = false)

+ |-- elem: string (nullable = false)

+ |-- id: string (nullable = false)

+ |-- service: string (nullable = false)

+ |-- column: string (nullable = false)

+ |-- props: string (nullable = false)

+

+S2Edge

+root

+ |-- timestamp: long (nullable = false)

+ |-- operation: string (nullable = false)

+ |-- elem: string (nullable = false)

+ |-- from: string (nullable = false)

+ |-- to: string (nullable = false)

+ |-- label: string (nullable = false)

+ |-- props: string (nullable = false)

+ |-- direction: string (nullable = true)

```

-Above approach works, but there is usually few minutes of lag. If user want to reduce this lag, then it is also possible to subscribe `WAL` from kafka then ingest data into analytics platform such as Druid.

+To run graph algorithm, transform above `DataFrame` into [GraphFrames](https://graphframes.github.io/index.html), then run provided functionality on `GraphFrames`.

-S2Jobs intentionaly provide only interfaces and very basic implementation for connecting `WAL` to other system. It is up to users what system they would use for `WAL` and S2Jobs want the community to contribute this as they leverage S2Graph `WAL`.

+Lastly, `S2GraphSource` and `S2GraphSink` open two interface `GraphElementReadable` and `GraphElementWritable` for users who want to serialize/deserialize custom graph from/to S2Graph.

-## Basic Architecture

+For example, one can simply implement `RDFTsvFormatReader` to convert each triple on RDF file to `S2Edge/S2Vertex` then use it in `S2GraphSource`'s `toDF` method to create `DataFrame` from RDF.

-One simple example data flow would look like following.

+This comes very handily when there are many different data sources with different formats to migrate into S2Graph.

-<img width="1222" alt="screen shot 2018-03-29 at 3 04 21 pm" src="https://user-images.githubusercontent.com/1264825/38072702-84ef93dc-3362-11e8-9f47-db41f50467f0.png">

-Most of spark program available on S2jobs follow following abstraction.

+## 2. `WAL` log on Kafka

-### Task

-`Process class` ? `Task trait` ? `TaskConf`?

+By default, S2Graph publish all incoming data into Kafka, and users subscribe this for **incremental processing**.

-### Current Supported Task

+S2jobs provide programs to process `stream` for incremental processing, using [Spark Structured Streaming](https://spark.apache.org/docs/latest/structured-streaming-programming-guide.html), which provide a great way to express streaming computation the same way as a batch computation.

-### Source

+The `Job` in S2Jobs abstract one spark and `Job` consist of multiple `Task`s. Think `Job` as very simple `workflow` and there are `Source`, `Process`, `Sink` subclass that implement `Task` interface.

-- kakfa : built-in

-- file : built-in

-- hive : built-in

+----------

+### 2.1. Job Description

-### Process

+**Tasks** and **workflow** can be described in **Job** description, and dependencies between tasks are defined by the name of the task specified in the inputs field

-- sql : process spark sql

-- custom : implement if necessary

+>Note that these works were influenced by [airstream of Airbnb](https://www.slideshare.net/databricks/building-data-product-based-on-apache-spark-at-airbnb-with-jingwei-lu-and-liyin-tang).

-### Sink

+#### Json Spec

-- kafka : built-in

-

-- file : built-in

-

-- es : elasticsearch-spark

-

-- **s2graph** : added

-

- - Use the mutateElement function of the S2graph object.

- - S2graph related setting is required.

- - put the config file in the classpath or specify it in the job description options.

-

- ```

- ex)

- "type": "s2graph",

- "options": {

- "hbase.zookeeper.quorum": "",

- "db.default.driver": "",

- "db.default.url": ""

+```js

+{

+ "name": "JOB_NAME",

+ "source": [

+ {

+ "name": "TASK_NAME",

+ "inputs": [],

+ "type": "SOURCE_TYPE",

+ "options": {

+ "KEY" : "VALUE"

+ }

}

-

- ```

+ ],

+ "process": [

+ {

+ "name": "TASK_NAME",

+ "inputs": ["INPUT_TASK_NAME"],

+ "type": "PROCESS_TYPE",

+ "options": {

+ "KEY" : "VALUE"

+ }

+ }

+ ],

+ "sink": [

+ {

+ "name": "TASK_NAME",

+ "inputs": ["INPUT_TASK_NAME"],

+ "type": "SINK_TYPE",

+ "options": {

+ "KEY" : "VALUE"

+ }

+ }

+ ]

+}

+

+```

+----------

+

+### 2.2. Current supported `Task`s.

-#### Data Schema for Kafka

+#### Source

+

+- KafkaSource: Built-in from Spark.

+

+##### Data Schema for Kafka

When using Kafka as data source consumer needs to parse it and later on interpret it, because of Kafka has no schema.

@@ -156,61 +199,57 @@ You can create a schema by giving a string representing the struct type as JSON

```

+- FileSource: Built-in from Spark.

+- HiveSource: Built-in from Spark.

+- S2GraphSource

+ - HBaseSnapshot read, then create DataFrame. See HBaseSnapshot in this document.

+ - Example options for `S2GraphSource` are following(reference examples for details).

+

+```js

+{

+ "type": "s2graph",

+ "options": {

+ "hbase.zookeeper.quorum": "localhost",

+ "db.default.driver": "com.mysql.jdbc.Driver",

+ "db.default.url": "jdbc:mysql://localhost:3306/graph_dev",

+ "db.default.user": "graph",

+ "db.default.password": "graph",

+ "hbase.rootdir": "/hbase",

+ "restore.path": "/tmp/restore_hbase",

+ "hbase.table.names": "movielens-snapshot"

+ }

+}

+```

-----------

-### Job Description

+#### Process

+- SqlProcess : process spark sql

+- custom : implement if necessary

-**Tasks** and **workflow** can be described in **job** description, and dependencies between tasks are defined by the name of the task specified in the inputs field

+#### Sink

->Note that this works was influenced by [airstream of Airbnb](https://www.slideshare.net/databricks/building-data-product-based-on-apache-spark-at-airbnb-with-jingwei-lu-and-liyin-tang).

+- KafkaSink : built-in from Spark.

+- FileSink : built-in from Spark.

+- HiveSink: buit-in from Spark.

+- ESSink : elasticsearch-spark

+- **S2GraphSink**

+ - writeBatchBulkload: build `HFile` directly, then load it using `LoadIncrementalHFiles` from HBase.

+ - writeBatchWithMutate: use the `mutateElement` function of the S2graph object.

-#### Json Spec

-```

-{

- "name": "JOB_NAME",

- "source": [

- {

- "name": "TASK_NAME",

- "inputs": [],

- "type": "SOURCE_TYPE",

- "options": {

- "KEY" : "VALUE"

- }

- }

- ],

- "process": [

- {

- "name": "TASK_NAME",

- "inputs": ["INPUT_TASK_NAME"],

- "type": "PROCESS_TYPE",

- "options": {

- "KEY" : "VALUE"

- }

- }

- ],

- "sink": [

- {

- "name": "TASK_NAME",

- "inputs": ["INPUT_TASK_NAME"],

- "type": "SINK_TYPE",

- "options": {

- "KEY" : "VALUE"

- }

- }

- ]

-}

-

-```

----------

-### Sample job

+The very basic pipeline can be illustrated in the following figure.

-#### 1. wallog trasnform (kafka to kafka)

+

+

+

+# Job Examples

+

+## 1. `WAL` log trasnform (kafka to kafka)

```

{

@@ -255,7 +294,7 @@ You can create a schema by giving a string representing the struct type as JSON

```

-#### 2. wallog transform (hdfs to hdfs)

+## 2. `WAL` log transform (HDFS to HDFS)

```

{

@@ -300,7 +339,7 @@ You can create a schema by giving a string representing the struct type as JSON

----------

-### Launch Job

+## Launch Job

When submitting spark job with assembly jar, use these parameters with the job description file path.

(currently only support file type)

@@ -315,4 +354,6 @@ get config from file

Command: db [options]

get config from db

-i, --jobId <jobId> configuration file

-```

\ No newline at end of file

+```

+

+

[14/16] incubator-s2graph git commit: add extra jar to launch spark

job

Posted by st...@apache.org.

add extra jar to launch spark job

Project: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/repo

Commit: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/commit/a3ecb57a

Tree: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/tree/a3ecb57a

Diff: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/diff/a3ecb57a

Branch: refs/heads/master

Commit: a3ecb57a6278a55bcb53e3e23b7d3b807cac8553

Parents: e4cca3b

Author: Chul Kang <el...@apache.org>

Authored: Mon Apr 23 20:36:28 2018 +0900

Committer: Chul Kang <el...@apache.org>

Committed: Mon Apr 23 20:36:28 2018 +0900

----------------------------------------------------------------------

example/import_data.sh | 8 ++++++--

example/prepare.sh | 5 ++++-

2 files changed, 10 insertions(+), 3 deletions(-)

----------------------------------------------------------------------

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/a3ecb57a/example/import_data.sh

----------------------------------------------------------------------

diff --git a/example/import_data.sh b/example/import_data.sh

index 0cb9cd4..d2ca336 100644

--- a/example/import_data.sh

+++ b/example/import_data.sh

@@ -24,9 +24,11 @@ JOBDESC_TPL=${WORKDIR}/${SERVICE}/jobdesc.template

JOBDESC=${WORKDIR}/${SERVICE}/jobdesc.json

WORKING_DIR=`pwd`

JAR=`ls ${ROOTDIR}/s2jobs/target/scala-2.11/s2jobs-assembly*.jar`

+LIB=`cd ${ROOTDIR}/target/apache-s2graph-*-incubating-bin/lib; pwd`

info "WORKING_DIR : $WORKING_DIR"

info "JAR : $JAR"

+info "LIB : $LIB"

sed -e "s/\[=WORKING_DIR\]/${WORKING_DIR//\//\\/}/g" $JOBDESC_TPL | grep "^[^#]" > $JOBDESC

@@ -35,6 +37,8 @@ info "spark submit.."

${SPARK_HOME}/bin/spark-submit \

--class org.apache.s2graph.s2jobs.JobLauncher \

--master local[2] \

- --driver-class-path h2-1.4.192.jar \

- $JAR file -f $JOBDESC -n SAMPLEJOB:$SERVICE

+ --jars $LIB/h2-1.4.192.jar,$LIB/lucene-core-7.1.0.jar \

+ --driver-class-path "$LIB/h2-1.4.192.jar:$LIB/lucene-core-7.1.0.jar" \

+ --driver-java-options "-Dderby.system.home=/tmp/derby" \

+ $JAR file -f $JOBDESC -n SAMPLEJOB:$SERVICE

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/a3ecb57a/example/prepare.sh

----------------------------------------------------------------------

diff --git a/example/prepare.sh b/example/prepare.sh

index ebb78b8..9cfd804 100644

--- a/example/prepare.sh

+++ b/example/prepare.sh

@@ -22,6 +22,9 @@ status=`curl -s -o /dev/null -w "%{http_code}" "$REST"`

if [ $status != '200' ]; then

warn "s2graphql not running.. "

+ wget https://raw.githubusercontent.com/daewon/sangria-akka-http-example/master/src/main/resources/assets/graphiql.html

+ mv graphiql.html $ROOTDIR/s2graphql/src/main/resources/assets

+

cd $ROOTDIR

output="$(ls target/apache-s2graph-*-incubating-bin)"

if [ -z "$output" ]; then

@@ -31,7 +34,7 @@ if [ $status != '200' ]; then

info "now we will launch s2graphql using build scripts"

cd target/apache-s2graph-*-incubating-bin

- ./bin/start-s2graph.sh s2graphql

+ sh ./bin/start-s2graph.sh s2graphql

else

info "s2graphql is running!!"

fi

[09/16] incubator-s2graph git commit: increate timeout when create

schema

Posted by st...@apache.org.

increate timeout when create schema

Project: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/repo

Commit: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/commit/e28071d7

Tree: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/tree/e28071d7

Diff: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/diff/e28071d7

Branch: refs/heads/master

Commit: e28071d749b7ff289a96af288b1f41f1c73fd44a

Parents: cf33660

Author: Chul Kang <el...@apache.org>

Authored: Thu Apr 12 12:29:10 2018 +0900

Committer: Chul Kang <el...@apache.org>

Committed: Thu Apr 12 12:29:10 2018 +0900

----------------------------------------------------------------------

conf/application.conf | 5 +++++

example/create_schema.sh | 1 +

2 files changed, 6 insertions(+)

----------------------------------------------------------------------

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/e28071d7/conf/application.conf

----------------------------------------------------------------------

diff --git a/conf/application.conf b/conf/application.conf

index 5711d14..2124900 100644

--- a/conf/application.conf

+++ b/conf/application.conf

@@ -76,3 +76,8 @@ db.default.driver = "org.h2.Driver"

db.default.url = "jdbc:h2:tcp://localhost/./var/metastore;MODE=MYSQL"

db.default.user = "sa"

db.default.password = "sa"

+

+akka.http.server {

+ request-timeout = 120 s

+}

+

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/e28071d7/example/create_schema.sh

----------------------------------------------------------------------

diff --git a/example/create_schema.sh b/example/create_schema.sh

index 9f511cb..bce0db9 100755

--- a/example/create_schema.sh

+++ b/example/create_schema.sh

@@ -23,6 +23,7 @@ for file in `ls ${SCHEMA_HOME}/vertex.*`; do

info "file: $file"

graphql_rest $file

done

+get_services

q "create edges >>> "

for file in `ls ${SCHEMA_HOME}/edge.*`; do

[16/16] incubator-s2graph git commit: [S2GRAPH-193]: Add README for

S2Jobs sub-project.

Posted by st...@apache.org.

[S2GRAPH-193]: Add README for S2Jobs sub-project.

JIRA:

[S2GRAPH-193] https://issues.apache.org/jira/browse/S2GRAPH-193

Pull Request:

Closes #156

Author

DO YUNG YOON <st...@apache.org>

Project: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/repo

Commit: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/commit/b8f99050

Tree: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/tree/b8f99050

Diff: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/diff/b8f99050

Branch: refs/heads/master

Commit: b8f990504374f68188fb831b747ed76550fee086

Parents: e4cca3b fbfcb02

Author: Doyung Yoon <st...@apache.org>

Authored: Mon Apr 23 21:54:11 2018 +0900

Committer: DO YUNG YOON <st...@apache.org>

Committed: Tue Apr 24 11:05:44 2018 +0900

----------------------------------------------------------------------

example/import_data.sh | 8 ++++++--

example/prepare.sh | 5 ++++-

2 files changed, 10 insertions(+), 3 deletions(-)

----------------------------------------------------------------------

[08/16] incubator-s2graph git commit: modify README

Posted by st...@apache.org.

modify README

Project: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/repo

Commit: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/commit/cf336609

Tree: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/tree/cf336609

Diff: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/diff/cf336609

Branch: refs/heads/master

Commit: cf336609bc454c3d0292293dc1929d2ca61379e6

Parents: c97986f

Author: Chul Kang <el...@apache.org>

Authored: Wed Apr 11 17:22:28 2018 +0900

Committer: Chul Kang <el...@apache.org>

Committed: Wed Apr 11 17:22:28 2018 +0900

----------------------------------------------------------------------

s2jobs/README.md | 36 +++++++++++++++++++++++++++++-------

1 file changed, 29 insertions(+), 7 deletions(-)

----------------------------------------------------------------------

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/cf336609/s2jobs/README.md

----------------------------------------------------------------------

diff --git a/s2jobs/README.md b/s2jobs/README.md

index f79abb7..2315e82 100644

--- a/s2jobs/README.md

+++ b/s2jobs/README.md

@@ -335,6 +335,21 @@ The very basic pipeline can be illustrated in the following figure.

```

+## 3. movielens (File to S2Graph)

+

+You can also run an example job that parses movielens data and writes to S2graph.

+The dataset includes user rating and tagging activity from MovieLens(https://movielens.org/), a movie recommendation service.

+

+```

+// move to example folder

+$ cd ../example

+

+// run example job

+$ ./run.sh movielens

+```

+

+It demonstrate how to build a graph-based data using the publicly available MovieLens dataset on graph database S2Graph,

+and provides an environment that makes it easy to use various queries using GraphQL.

----------

@@ -347,13 +362,20 @@ When submitting spark job with assembly jar, use these parameters with the job d

```

// main class : org.apache.s2graph.s2jobs.JobLauncher

Usage: run [file|db] [options]

- -n, --name <value> job display name

-Command: file [options]

-get config from file

- -f, --confFile <file> configuration file

-Command: db [options]

-get config from db

- -i, --jobId <jobId> configuration file

+ -n, --name <value> job display name

+Command: file [options] get config from file

+ -f, --confFile <file> configuration file

+Command: db [options] get config from db

+ -i, --jobId <jobId> configuration file

+```

+

+For example, you can run your application using spark-submit as shown below.

+```

+$ sbt 'project s2jobs' assembly

+$ ${SPARK_HOME}/bin/spark-submit \

+ --class org.apache.s2graph.s2jobs.JobLauncher \

+ --master local[2] \

+ s2jobs/target/scala-2.11/s2jobs-assembly-0.2.1-SNAPSHOT.jar file -f JOB_DESC.json -n JOB_NAME

```

[15/16] incubator-s2graph git commit: add graphiql.html for example

Posted by st...@apache.org.

add graphiql.html for example

Project: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/repo

Commit: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/commit/fbfcb020

Tree: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/tree/fbfcb020

Diff: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/diff/fbfcb020

Branch: refs/heads/master

Commit: fbfcb0208d8ecd083de2213e4371f04f79b165a4

Parents: a3ecb57

Author: Chul Kang <el...@apache.org>

Authored: Mon Apr 23 21:12:45 2018 +0900

Committer: Chul Kang <el...@apache.org>

Committed: Mon Apr 23 21:12:45 2018 +0900

----------------------------------------------------------------------

example/prepare.sh | 6 +++---

1 file changed, 3 insertions(+), 3 deletions(-)

----------------------------------------------------------------------

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/fbfcb020/example/prepare.sh

----------------------------------------------------------------------

diff --git a/example/prepare.sh b/example/prepare.sh

index 9cfd804..01d8a20 100644

--- a/example/prepare.sh

+++ b/example/prepare.sh

@@ -22,9 +22,6 @@ status=`curl -s -o /dev/null -w "%{http_code}" "$REST"`

if [ $status != '200' ]; then

warn "s2graphql not running.. "

- wget https://raw.githubusercontent.com/daewon/sangria-akka-http-example/master/src/main/resources/assets/graphiql.html

- mv graphiql.html $ROOTDIR/s2graphql/src/main/resources/assets

-

cd $ROOTDIR

output="$(ls target/apache-s2graph-*-incubating-bin)"

if [ -z "$output" ]; then

@@ -34,6 +31,9 @@ if [ $status != '200' ]; then

info "now we will launch s2graphql using build scripts"

cd target/apache-s2graph-*-incubating-bin

+ wget https://raw.githubusercontent.com/daewon/sangria-akka-http-example/master/src/main/resources/assets/graphiql.html

+ mkdir -p conf/assets

+ mv graphiql.html conf/assets

sh ./bin/start-s2graph.sh s2graphql

else

info "s2graphql is running!!"

[11/16] incubator-s2graph git commit: Merge branch 'master' into

S2GRAPH-193

Posted by st...@apache.org.

Merge branch 'master' into S2GRAPH-193

Project: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/repo

Commit: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/commit/233a17fb

Tree: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/tree/233a17fb

Diff: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/diff/233a17fb

Branch: refs/heads/master

Commit: 233a17fb72e1ae483cf4af78f59dab6a8800b1b5

Parents: fc273fb b21db65

Author: DO YUNG YOON <st...@apache.org>

Authored: Mon Apr 23 13:53:38 2018 +0900

Committer: DO YUNG YOON <st...@apache.org>

Committed: Mon Apr 23 13:53:38 2018 +0900

----------------------------------------------------------------------

CHANGES | 15 +

build.sbt | 36 ++

dev_support/README.md | 19 +-

project/Common.scala | 3 +-

project/plugins.sbt | 2 +

.../org/apache/s2graph/core/PostProcess.scala | 22 ++

.../org/apache/s2graph/core/QueryParam.scala | 91 +++--

.../scala/org/apache/s2graph/core/S2Edge.scala | 108 +++---

.../org/apache/s2graph/core/S2EdgeLike.scala | 9 +-

.../scala/org/apache/s2graph/core/S2Graph.scala | 33 +-

.../org/apache/s2graph/core/S2VertexLike.scala | 10 +-

.../s2graph/core/index/ESIndexProvider.scala | 67 ++--

.../s2graph/core/index/IndexProvider.scala | 3 +-

.../core/index/LuceneIndexProvider.scala | 125 ++++---

.../s2graph/core/parsers/WhereParser.scala | 64 ++--

.../s2graph/core/rest/RequestParser.scala | 32 +-

.../apache/s2graph/core/storage/SKeyValue.scala | 5 +-

.../hbase/AsynchbaseStorageManagement.scala | 2 +-

.../tall/SnapshotEdgeDeserializable.scala | 5 +-

.../core/Integrate/IntegrateCommon.scala | 8 +-

.../s2graph/core/Integrate/QueryTest.scala | 2 +-

.../core/Integrate/VertexTestHelper.scala | 42 +++

.../core/benchmark/BenchmarkCommon.scala | 1 -

.../core/benchmark/JsonBenchmarkSpec.scala | 78 -----

.../core/benchmark/SamplingBenchmarkSpec.scala | 105 ------

.../s2graph/core/index/IndexProviderTest.scala | 23 +-

.../s2graph/core/parsers/WhereParserTest.scala | 11 +-

.../loader/core/CounterEtlFunctionsSpec.scala | 2 +-

.../apache/s2graph/graphql/GraphQLServer.scala | 35 +-

.../org/apache/s2graph/graphql/HttpServer.scala | 34 +-

.../apache/s2graph/graphql/bind/AstHelper.scala | 28 ++

.../s2graph/graphql/bind/Unmarshaller.scala | 127 +++++++

.../s2graph/graphql/marshaller/package.scala | 124 -------

.../graphql/repository/GraphRepository.scala | 111 ++++--

.../s2graph/graphql/resolver/Resolver.scala | 28 --

.../s2graph/graphql/types/FieldResolver.scala | 108 ++++++

.../s2graph/graphql/types/ManagementType.scala | 312 +++++++++++++++++

.../graphql/types/S2ManagementType.scala | 341 -------------------

.../apache/s2graph/graphql/types/S2Type.scala | 225 ++++++------

.../types/SangriaPlayJsonScalarType.scala | 76 -----

.../s2graph/graphql/types/SchemaDef.scala | 4 +-

.../s2graph/graphql/types/StaticType.scala | 149 ++++++++

.../apache/s2graph/graphql/types/package.scala | 122 +------

.../apache/s2graph/graphql/ScenarioTest.scala | 186 ++++++----

.../org/apache/s2graph/graphql/SchemaTest.scala | 35 +-

.../org/apache/s2graph/graphql/TestGraph.scala | 19 +-

.../org/apache/s2graph/s2jobs/DegreeKey.scala | 80 +++++

.../apache/s2graph/s2jobs/JobDescription.scala | 3 +-

.../apache/s2graph/s2jobs/S2GraphHelper.scala | 104 +++++-

.../org/apache/s2graph/s2jobs/Schema.scala | 21 ++

.../s2jobs/loader/GraphFileGenerator.scala | 8 +-

.../s2jobs/loader/GraphFileOptions.scala | 45 ++-

.../s2graph/s2jobs/loader/HFileGenerator.scala | 189 ++++------

.../s2jobs/loader/HFileMRGenerator.scala | 43 +--

.../loader/LocalBulkLoaderTransformer.scala | 51 +++

.../s2jobs/loader/RawFileGenerator.scala | 28 +-

.../loader/SparkBulkLoaderTransformer.scala | 73 ++++

.../s2jobs/serde/GraphElementReadable.scala | 26 ++

.../s2jobs/serde/GraphElementWritable.scala | 30 ++

.../s2graph/s2jobs/serde/Transformer.scala | 41 +++

.../s2jobs/serde/reader/IdentityReader.scala | 28 ++

.../serde/reader/RowBulkFormatReader.scala | 33 ++

.../s2jobs/serde/reader/S2GraphCellReader.scala | 54 +++

.../serde/reader/TsvBulkFormatReader.scala | 29 ++

.../s2jobs/serde/writer/IdentityWriter.scala | 32 ++

.../s2jobs/serde/writer/KeyValueWriter.scala | 48 +++

.../serde/writer/RowDataFrameWriter.scala | 36 ++

.../org/apache/s2graph/s2jobs/task/Sink.scala | 119 ++++++-

.../org/apache/s2graph/s2jobs/task/Source.scala | 39 +++

.../org/apache/s2graph/s2jobs/task/Task.scala | 21 ++

.../spark/sql/streaming/S2SinkContext.scala | 2 +

.../sql/streaming/S2StreamQueryWriter.scala | 53 +--

.../apache/s2graph/s2jobs/BaseSparkTest.scala | 138 ++++++++

.../s2graph/s2jobs/S2GraphHelperTest.scala | 13 +-

.../s2jobs/loader/GraphFileGeneratorTest.scala | 307 ++++++++---------

.../apache/s2graph/s2jobs/task/SinkTest.scala | 98 ++++++

.../apache/s2graph/s2jobs/task/SourceTest.scala | 130 +++++++

.../s2graph/s2jobs/task/TaskConfTest.scala | 61 ++++

s2rest_netty/build.sbt | 2 -

s2rest_play/build.sbt | 2 -

80 files changed, 3167 insertions(+), 1807 deletions(-)

----------------------------------------------------------------------

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/233a17fb/s2jobs/src/main/scala/org/apache/s2graph/s2jobs/task/Source.scala

----------------------------------------------------------------------

[12/16] incubator-s2graph git commit: update README.md to add

s2graphql and s2jobs.

Posted by st...@apache.org.

update README.md to add s2graphql and s2jobs.

Project: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/repo

Commit: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/commit/35d9a438

Tree: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/tree/35d9a438

Diff: http://git-wip-us.apache.org/repos/asf/incubator-s2graph/diff/35d9a438

Branch: refs/heads/master

Commit: 35d9a438f27f080f426fceac819f3f45108a9223

Parents: 233a17f

Author: DO YUNG YOON <st...@apache.org>

Authored: Mon Apr 23 14:35:46 2018 +0900

Committer: DO YUNG YOON <st...@apache.org>

Committed: Mon Apr 23 14:36:05 2018 +0900

----------------------------------------------------------------------

README.md | 1049 +++++++++++++++++++++++++-------------------------------

1 file changed, 462 insertions(+), 587 deletions(-)

----------------------------------------------------------------------

http://git-wip-us.apache.org/repos/asf/incubator-s2graph/blob/35d9a438/README.md

----------------------------------------------------------------------

diff --git a/README.md b/README.md

index bac5ac9..ba7e81c 100644

--- a/README.md

+++ b/README.md

@@ -1,588 +1,463 @@

-<!---

-/*

- * Licensed to the Apache Software Foundation (ASF) under one

- * or more contributor license agreements. See the NOTICE file

- * distributed with this work for additional information

- * regarding copyright ownership. The ASF licenses this file

- * to you under the Apache License, Version 2.0 (the

- * "License"); you may not use this file except in compliance

- * with the License. You may obtain a copy of the License at

- *

- * http://www.apache.org/licenses/LICENSE-2.0

- *

- * Unless required by applicable law or agreed to in writing,

- * software distributed under the License is distributed on an

- * "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

- * KIND, either express or implied. See the License for the

- * specific language governing permissions and limitations

- * under the License.

- */

---->

-S2Graph [](https://travis-ci.org/apache/incubator-s2graph)

-=======

-

-[**S2Graph**](http://s2graph.apache.org/) is a **graph database** designed to handle transactional graph processing at scale. Its REST API allows you to store, manage and query relational information using **edge** and **vertex** representations in a **fully asynchronous** and **non-blocking** manner.

-

-S2Graph is a implementation of [**Apache TinkerPop**](https://tinkerpop.apache.org/) on [**Apache HBASE**](https://hbase.apache.org/).

-

-This document covers some basic concepts and terms of S2Graph as well as help you get a feel for the S2Graph API.

-

-Building from the source

-========================

-

-To build S2Graph from the source, install the JDK 8 and [SBT](http://www.scala-sbt.org/), and run the following command in the project root:

-

- sbt package

-

-This will create a distribution of S2Graph that is ready to be deployed.

-

-One can find distribution on `target/apache-s2graph-$version-incubating-bin`.

-

-Quick Start

-===========

-

-Once extracted the downloaded binary release of S2Graph or built from the source as described above, the following files and directories should be found in the directory.

-

-```

-DISCLAIMER

-LICENCE # the Apache License 2.0

-NOTICE

-bin # scripts to manage the lifecycle of S2Graph

-conf # configuration files

-lib # contains the binary

-logs # application logs

-var # application data

-```

-

-This directory layout contains all binary and scripts required to launch S2Graph. The directories `logs` and `var` may not be present initially, and are created once S2Graph is launched.

-

-The following will launch S2Graph, using [HBase](https://hbase.apache.org/) in the standalone mode for data storage and [H2](http://www.h2database.com/html/main.html) as the metadata storage.

-

- sh bin/start-s2graph.sh

-

-To connect to a remote HBase cluster or use MySQL as the metastore, refer to the instructions in [`conf/application.conf`](conf/application.conf).

-S2Graph is tested on HBase versions 0.98, 1.0, 1.1, and 1.2 (https://hub.docker.com/r/harisekhon/hbase/tags/).

-

-Project Layout

-==============

-

-Here is what you can find in each subproject.

-

-1. `s2core`: The core library, containing the data abstractions for graph entities, storage adapters and utilities.

-2. `s2rest_play`: The REST server built with [Play framework](https://www.playframework.com/), providing the write and query API.

-3. `s2rest_netty`: The REST server built directly using Netty, implementing only the query API.

-4. `loader`: A collection of Spark jobs for bulk loading streaming data into S2Graph.

-5. `spark`: Spark utilities for `loader` and `s2counter_loader`.

-6. `s2counter_core`: The core library providing data structures and logics for `s2counter_loader`.

-7. `s2counter_loader`: Spark streaming jobs that consume Kafka WAL logs and calculate various top-*K* results on-the-fly.

-8. `s2graph_gremlin`: Gremlin plugin for tinkerpop users.

-

-The first three projects are for OLTP-style workloads, currently the main target of S2Graph. The other four projects could be helpful for OLAP-style or streaming workloads, especially for integrating S2Graph with [Apache Spark](https://spark.apache.org/) and/or [Kafka](https://kafka.apache.org/). Note that, the latter four projects are currently out-of-date, which we are planning to update and provide documentations in the upcoming releases.

-

-Your First Graph

-================

-

-Once the S2Graph server has been set up, you can now start to send HTTP queries to the server to create a graph and pour some data in it. This tutorial goes over a simple toy problem to get a sense of how S2Graph's API looks like. [`bin/example.sh`](bin/example.sh) contains the example code below.

-

-The toy problem is to create a timeline feature for a simple social media, like a simplified version of Facebook's timeline:stuck_out_tongue_winking_eye:. Using simple S2Graph queries it is possible to keep track of each user's friends and their posts.

-

-1. First, we need a name for the new service.

-

- The following POST query will create a service named "KakaoFavorites".

-

- ```

- curl -XPOST localhost:9000/graphs/createService -H 'Content-Type: Application/json' -d '

- {"serviceName": "KakaoFavorites", "compressionAlgorithm" : "gz"}

- '

- ```

-

- To make sure the service is created correctly, check out the following.

-

- ```

- curl -XGET localhost:9000/graphs/getService/KakaoFavorites

- ```

-

-2. Next, we will need some friends.

-

- In S2Graph, relationships are organized as labels. Create a label called `friends` using the following `createLabel` API call:

-

- ```