Posted to commits@pulsar.apache.org by GitBox <gi...@apache.org> on 2022/03/31 01:56:39 UTC

[GitHub] [pulsar] leizhiyuan opened a new issue #14957: Direct Memory Leak when TooManyRequestsException occurs

leizhiyuan opened a new issue #14957:

URL: https://github.com/apache/pulsar/issues/14957

**Describe the bug**

When a TooManyRequestsException occurs, the direct memory used by Pulsar leaks.

**To Reproduce**

Steps to reproduce the behavior:

1. Start standalone with:

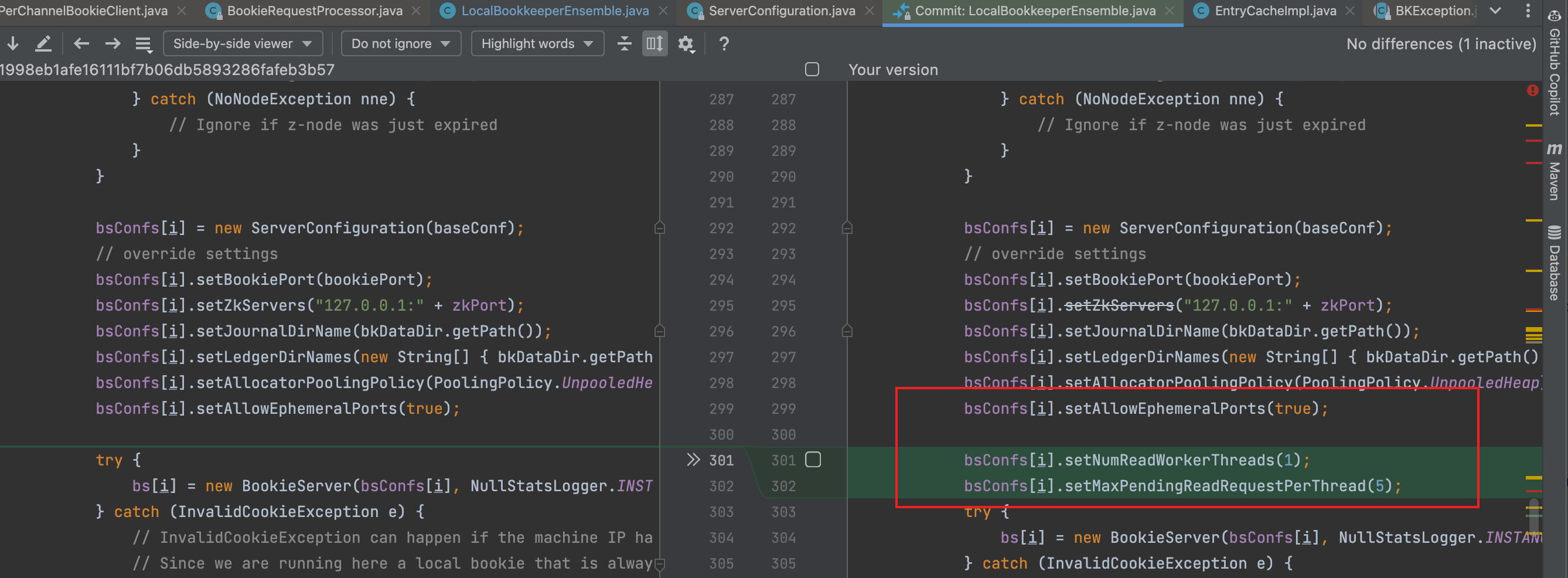

1.1 Modify LocalBookkeeperEnsemble so that TooManyRequestsException is easy to reproduce:

```
bsConfs[i].setNumReadWorkerThreads(1);
bsConfs[i].setMaxPendingReadRequestPerThread(5);
```
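To see why one read worker thread with at most five pending reads is overwhelmed so quickly, the limit can be approximated with a plain JDK executor. This is only an analogy and not BookKeeper code: the class `PendingReadLimitSketch`, its method names, and the choice of `workerThreads * maxPendingPerThread` as the queue capacity are assumptions made for illustration.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical stand-in for a bookie read pool; this is not BookKeeper code.
class PendingReadLimitSketch {

    /** Submits `requests` blocking tasks and returns how many were rejected. */
    static int countRejected(int workerThreads, int maxPendingPerThread, int requests) {
        // Fixed number of workers and a bounded queue, so excess work is refused.
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                workerThreads, workerThreads, 0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(workerThreads * maxPendingPerThread));
        CountDownLatch release = new CountDownLatch(1);
        int rejected = 0;
        for (int i = 0; i < requests; i++) {
            try {
                // Every task blocks until released, imitating slow reads.
                pool.execute(() -> awaitQuietly(release));
            } catch (RejectedExecutionException e) {
                // Stands in for the "too many requests" response.
                rejected++;
            }
        }
        release.countDown(); // let the accepted tasks finish
        pool.shutdown();
        return rejected;
    }

    private static void awaitQuietly(CountDownLatch latch) {
        try {
            latch.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        // One worker, five pending slots, 50 concurrent reads (one per consumer).
        System.out.println("rejected " + countRejected(1, 5, 50));
    }
}
```

With one worker and five queue slots, only six of the 50 simulated reads are accepted while the worker is busy, which is roughly the pressure the 50 consumers in step 3 put on the bookie.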

1.2 Add a thread that prints Netty's direct memory usage once per second:

```
Executors.newScheduledThreadPool(1).scheduleAtFixedRate(new Runnable() {
    @Override
    public void run() {
        System.out.println("monitor " + JvmMetrics.getJvmDirectMemoryUsed() / 1024 / 1024);
    }
}, 1, 1, TimeUnit.SECONDS);
```
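If `JvmMetrics` is not on the classpath, a similar monitor can be sketched with the JDK alone. The class `DirectMemoryMonitorSketch` and its method name are hypothetical. Note that Netty can allocate direct memory in ways the JDK "direct" buffer pool does not count, so the number printed here may differ from what `JvmMetrics.getJvmDirectMemoryUsed()` reports.

```java
import java.lang.management.BufferPoolMXBean;
import java.lang.management.ManagementFactory;
import java.nio.ByteBuffer;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

// Hypothetical JDK-only monitor; it reads the JDK buffer pool, not Netty's own counter.
class DirectMemoryMonitorSketch {

    /** Bytes currently held by the JDK "direct" buffer pool, or -1 if it is not exposed. */
    static long jdkDirectMemoryUsed() {
        for (BufferPoolMXBean pool : ManagementFactory.getPlatformMXBeans(BufferPoolMXBean.class)) {
            if ("direct".equals(pool.getName())) {
                return pool.getMemoryUsed();
            }
        }
        return -1L;
    }

    public static void main(String[] args) throws InterruptedException {
        // Daemon thread so the monitor never keeps the JVM alive on its own.
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor(r -> {
            Thread t = new Thread(r, "direct-memory-monitor");
            t.setDaemon(true);
            return t;
        });
        scheduler.scheduleAtFixedRate(
                () -> System.out.println("monitor " + jdkDirectMemoryUsed() / 1024 / 1024 + " MiB"),
                1, 1, TimeUnit.SECONDS);

        // Hold a 32 MiB direct buffer so the printed value visibly changes.
        ByteBuffer held = ByteBuffer.allocateDirect(32 * 1024 * 1024);
        TimeUnit.SECONDS.sleep(3);
        System.out.println("held capacity " + held.capacity());
        scheduler.shutdownNow();
    }
}
```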

2. Produce some messages, for example 10000, then close the producer.

```
package org.example;

import static org.example.Helper.TP_TOPIC;

import java.util.concurrent.atomic.AtomicInteger;

import org.apache.pulsar.client.api.*;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class SimpleProducer {

    private static final Logger logger = LoggerFactory.getLogger(SimpleProducer.class);

    // Counts the messages that were sent successfully.
    private static final AtomicInteger atomicInteger = new AtomicInteger(0);

    public static void main(String[] args) {
        logger.info("producer start");
        try {
            execute();
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    public static void execute() throws Exception {
        PulsarClient client = Helper.buildClient();

        // Close the client when the JVM exits.
        Runtime.getRuntime().addShutdownHook(new Thread(() -> {
            try {
                client.close();
            } catch (PulsarClientException e) {
                e.printStackTrace();
            }
        }));

        Producer<String> producer = client.newProducer(Schema.STRING)
                .topic(TP_TOPIC)
                .create();

        // This loop sends 100 messages; raise the bound to send more (the step text mentions 10000).
        for (int i = 0; i < 100; i++) {
            try {
                MessageId id = producer.newMessage()
                        .key(String.valueOf(i))
                        .value(buildString())
                        .property("tag2", "TAGS")
                        .send();
                atomicInteger.incrementAndGet();
                System.out.println(id);
            } catch (Throwable throwable) {
                throwable.printStackTrace();
            }
        }
        System.out.println("result " + atomicInteger);

        // Close the producer once sending is done, as the step describes.
        producer.close();
    }

    // Builds the payload by concatenating the integers 0 to 1023.
    private static String buildString() {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < 1024; i++) {
            sb.append(i);
        }
        return sb.toString();
    }
}
```

3. Consume with 50 consumers:

```
package org.example;

import static org.example.Helper.TP_TOPIC;

import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

import org.apache.pulsar.client.api.Consumer;
import org.apache.pulsar.client.api.Message;
import org.apache.pulsar.client.api.MessageListener;
import org.apache.pulsar.client.api.PulsarClient;
import org.apache.pulsar.client.api.PulsarClientException;
import org.apache.pulsar.client.api.Schema;
import org.apache.pulsar.client.api.SubscriptionInitialPosition;
import org.apache.pulsar.client.api.SubscriptionType;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class SimpleConsumer {

    private static final Logger logger = LoggerFactory.getLogger(SimpleConsumer.class);

    // Keeps a reference to every consumer so none is garbage-collected.
    protected static Map<String, Consumer<byte[]>> consumers = new ConcurrentHashMap<>();

    static PulsarClient client = Helper.buildClient();

    // Counts the messages received across all consumers.
    private static final AtomicInteger atomicInteger = new AtomicInteger(0);

    public static void main(String[] args) {
        logger.info("consumer start");
        try {
            execute();
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    public static void execute() throws Exception {
        for (int i = 0; i < 50; i++) {
            createConsumer(i);
        }
        // Keep the process alive while the listeners consume.
        TimeUnit.SECONDS.sleep(1000000);
    }

    private static void createConsumer(int i) throws PulsarClientException {
        // Each consumer uses its own subscription, so every one of them reads the whole topic.
        Consumer<byte[]> consumer = client.newConsumer(Schema.BYTES)
                .topic(TP_TOPIC)
                .subscriptionName("eb-bystander" + i)
                .subscriptionInitialPosition(SubscriptionInitialPosition.Earliest)
                .subscriptionType(SubscriptionType.Shared)
                .messageListener(new MessageListener<byte[]>() {
                    @Override
                    public void received(Consumer<byte[]> consumer, Message<byte[]> message) {
                        atomicInteger.incrementAndGet();
                        System.out.println("final " + atomicInteger);
                        System.out.println(message.getMessageId());
                        try {
                            consumer.acknowledge(message);
                        } catch (PulsarClientException e) {
                            e.printStackTrace();
                        }
                    }
                })
                .subscribe();
        consumers.put(String.valueOf(i), consumer);
    }
}
```

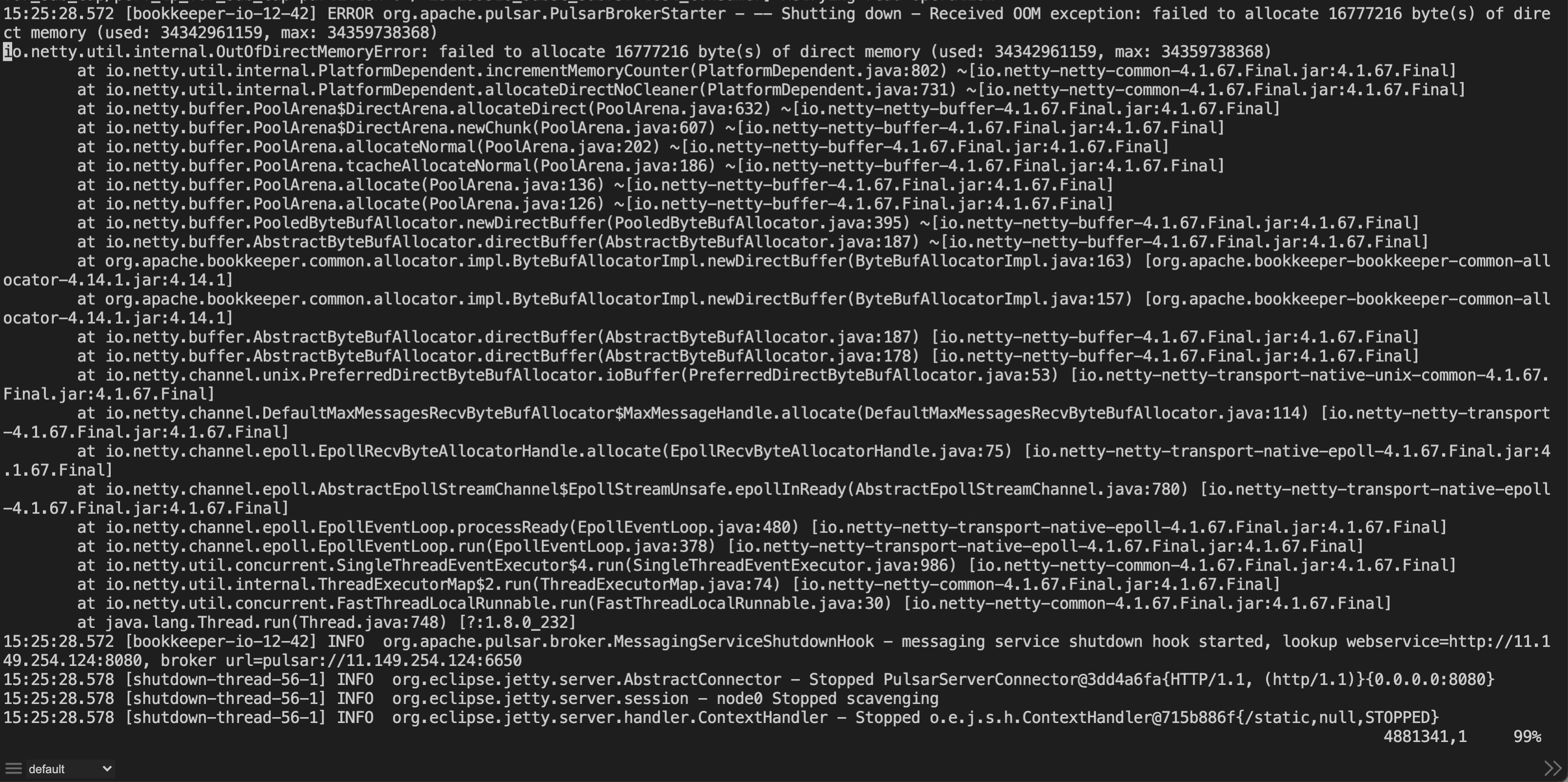

4. When TooManyRequestsException shows up in the logs, the scenario has been reproduced.

<img width="2478" alt="image" src="https://user-images.githubusercontent.com/2684384/160960331-5b8fe8ba-b3e6-4741-998b-611834143fbc.png">

5. Wait and watch the monitor output: the value printed by `System.out.println("monitor "+ JvmMetrics.getJvmDirectMemoryUsed()/1024/1024);` increases in steps of 16M. On my local machine it later grew from 2048 to 3168.
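The numbers in step 5 can be checked with a little arithmetic. The sketch below assumes the monitor prints MiB and that the pooled allocator uses a page size of 8192 bytes with `maxOrder` 11, which were Netty's defaults in many 4.1.x releases; whether the broker in question ran with those values is not stated in this report. Under that assumption one pooled chunk is 16 MiB, which would match the observed step size. The class `LeakGrowthSketch` is hypothetical.

```java
// Hypothetical helper for the arithmetic behind step 5; not part of Pulsar or Netty.
class LeakGrowthSketch {

    /** Size in bytes of one pooled chunk: pageSize shifted left by maxOrder. */
    static long chunkBytes(int pageSize, int maxOrder) {
        return (long) pageSize << maxOrder;
    }

    /** Number of whole steps of stepMiB between the two monitor readings. */
    static long steps(long startMiB, long endMiB, long stepMiB) {
        return (endMiB - startMiB) / stepMiB;
    }

    public static void main(String[] args) {
        long chunkMiB = chunkBytes(8192, 11) / 1024 / 1024; // assumed defaults
        System.out.println("chunk size MiB: " + chunkMiB);
        System.out.println("leaked chunks: " + steps(2048, 3168, chunkMiB));
    }
}
```

Under these assumptions the growth from 2048 to 3168 corresponds to 70 chunks of 16 MiB that were allocated and never returned.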

**Additional context**

Some extra information from a real environment with Netty's leakDetectionLevel turned on:

```

06:24:05.862 [bookkeeper-io-12-8] ERROR io.netty.util.ResourceLeakDetector - LEAK: ByteBuf.release() was not called before it's garbage-collected. See https://netty.io/wiki/reference-counted-objects.html for more information.

Recent access records:

#1:

io.netty.handler.codec.ByteToMessageDecoder.expandCumulation(ByteToMessageDecoder.java:549)

io.netty.handler.codec.ByteToMessageDecoder$1.cumulate(ByteToMessageDecoder.java:97)

io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:274)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)

io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919)

io.netty.channel.epoll.AbstractEpollStreamChannel$EpollStreamUnsafe.epollInReady(AbstractEpollStreamChannel.java:795)

io.netty.channel.epoll.EpollEventLoop.processReady(EpollEventLoop.java:480)

io.netty.channel.epoll.EpollEventLoop.run(EpollEventLoop.java:378)

io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:986)

io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)

java.lang.Thread.run(Thread.java:748)

#2:

io.netty.buffer.AdvancedLeakAwareByteBuf.getUnsignedInt(AdvancedLeakAwareByteBuf.java:196)

io.netty.handler.codec.LengthFieldBasedFrameDecoder.getUnadjustedFrameLength(LengthFieldBasedFrameDecoder.java:464)

io.netty.handler.codec.LengthFieldBasedFrameDecoder.decode(LengthFieldBasedFrameDecoder.java:406)

io.netty.handler.codec.LengthFieldBasedFrameDecoder.decode(LengthFieldBasedFrameDecoder.java:332)

io.netty.handler.codec.ByteToMessageDecoder.decodeRemovalReentryProtection(ByteToMessageDecoder.java:507)

io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:446)

io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:276)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)

io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919)

io.netty.channel.epoll.AbstractEpollStreamChannel$EpollStreamUnsafe.epollInReady(AbstractEpollStreamChannel.java:795)

io.netty.channel.epoll.EpollEventLoop.processReady(EpollEventLoop.java:480)

io.netty.channel.epoll.EpollEventLoop.run(EpollEventLoop.java:378)

io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:986)

io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)

java.lang.Thread.run(Thread.java:748)

#3:

io.netty.buffer.AdvancedLeakAwareByteBuf.order(AdvancedLeakAwareByteBuf.java:70)

io.netty.handler.codec.LengthFieldBasedFrameDecoder.getUnadjustedFrameLength(LengthFieldBasedFrameDecoder.java:451)

io.netty.handler.codec.LengthFieldBasedFrameDecoder.decode(LengthFieldBasedFrameDecoder.java:406)

io.netty.handler.codec.LengthFieldBasedFrameDecoder.decode(LengthFieldBasedFrameDecoder.java:332)

io.netty.handler.codec.ByteToMessageDecoder.decodeRemovalReentryProtection(ByteToMessageDecoder.java:507)

io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:446)

io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:276)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)

io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919)

io.netty.channel.epoll.AbstractEpollStreamChannel$EpollStreamUnsafe.epollInReady(AbstractEpollStreamChannel.java:795)

io.netty.channel.epoll.EpollEventLoop.processReady(EpollEventLoop.java:480)

io.netty.channel.epoll.EpollEventLoop.run(EpollEventLoop.java:378)

io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:986)

io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)

java.lang.Thread.run(Thread.java:748)

#4:

io.netty.buffer.AdvancedLeakAwareByteBuf.retainedSlice(AdvancedLeakAwareByteBuf.java:94)

io.netty.handler.codec.LengthFieldBasedFrameDecoder.extractFrame(LengthFieldBasedFrameDecoder.java:498)

io.netty.handler.codec.LengthFieldBasedFrameDecoder.decode(LengthFieldBasedFrameDecoder.java:437)

io.netty.handler.codec.LengthFieldBasedFrameDecoder.decode(LengthFieldBasedFrameDecoder.java:332)

io.netty.handler.codec.ByteToMessageDecoder.decodeRemovalReentryProtection(ByteToMessageDecoder.java:507)

io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:446)

io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:276)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)

io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919)

io.netty.channel.epoll.AbstractEpollStreamChannel$EpollStreamUnsafe.epollInReady(AbstractEpollStreamChannel.java:795)

io.netty.channel.epoll.EpollEventLoop.processReady(EpollEventLoop.java:480)

io.netty.channel.epoll.EpollEventLoop.run(EpollEventLoop.java:378)

io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:986)

io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)

java.lang.Thread.run(Thread.java:748)

#5:

io.netty.buffer.AdvancedLeakAwareByteBuf.skipBytes(AdvancedLeakAwareByteBuf.java:532)

io.netty.handler.codec.LengthFieldBasedFrameDecoder.decode(LengthFieldBasedFrameDecoder.java:432)

io.netty.handler.codec.LengthFieldBasedFrameDecoder.decode(LengthFieldBasedFrameDecoder.java:332)

io.netty.handler.codec.ByteToMessageDecoder.decodeRemovalReentryProtection(ByteToMessageDecoder.java:507)

io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:446)

io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:276)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)

io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919)

io.netty.channel.epoll.AbstractEpollStreamChannel$EpollStreamUnsafe.epollInReady(AbstractEpollStreamChannel.java:795)

io.netty.channel.epoll.EpollEventLoop.processReady(EpollEventLoop.java:480)

io.netty.channel.epoll.EpollEventLoop.run(EpollEventLoop.java:378)

io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:986)

io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)

java.lang.Thread.run(Thread.java:748)

#6:

Hint: 'lengthbasedframedecoder' will handle the message from this point.

io.netty.channel.DefaultChannelPipeline.touch(DefaultChannelPipeline.java:116)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)

io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)

io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919)

io.netty.channel.epoll.AbstractEpollStreamChannel$EpollStreamUnsafe.epollInReady(AbstractEpollStreamChannel.java:795)

io.netty.channel.epoll.EpollEventLoop.processReady(EpollEventLoop.java:480)

io.netty.channel.epoll.EpollEventLoop.run(EpollEventLoop.java:378)

io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:986)

io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)

java.lang.Thread.run(Thread.java:748)

#7:

Hint: 'DefaultChannelPipeline$HeadContext#0' will handle the message from this point.

io.netty.channel.DefaultChannelPipeline.touch(DefaultChannelPipeline.java:116)

io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)

io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919)

io.netty.channel.epoll.AbstractEpollStreamChannel$EpollStreamUnsafe.epollInReady(AbstractEpollStreamChannel.java:795)

io.netty.channel.epoll.EpollEventLoop.processReady(EpollEventLoop.java:480)

io.netty.channel.epoll.EpollEventLoop.run(EpollEventLoop.java:378)

io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:986)

io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)

java.lang.Thread.run(Thread.java:748)

Created at:

io.netty.buffer.PooledByteBufAllocator.newDirectBuffer(PooledByteBufAllocator.java:402)

io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:187)

org.apache.bookkeeper.common.allocator.impl.ByteBufAllocatorImpl.newDirectBuffer(ByteBufAllocatorImpl.java:163)

org.apache.bookkeeper.common.allocator.impl.ByteBufAllocatorImpl.newDirectBuffer(ByteBufAllocatorImpl.java:157)

io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:187)

io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:178)

io.netty.channel.unix.PreferredDirectByteBufAllocator.ioBuffer(PreferredDirectByteBufAllocator.java:53)

io.netty.channel.DefaultMaxMessagesRecvByteBufAllocator$MaxMessageHandle.allocate(DefaultMaxMessagesRecvByteBufAllocator.java:114)

io.netty.channel.epoll.EpollRecvByteAllocatorHandle.allocate(EpollRecvByteAllocatorHandle.java:75)

io.netty.channel.epoll.AbstractEpollStreamChannel$EpollStreamUnsafe.epollInReady(AbstractEpollStreamChannel.java:780)

io.netty.channel.epoll.EpollEventLoop.processReady(EpollEventLoop.java:480)

io.netty.channel.epoll.EpollEventLoop.run(EpollEventLoop.java:378)

io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:986)

io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)

java.lang.Thread.run(Thread.java:748)

: 2 leak records were discarded because they were duplicates

```
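The trace shows a buffer that went through `retainedSlice` in the frame decoder and was garbage-collected without a matching `release()`. The report does not identify which code path dropped it, so the following is only a generic illustration of how a rejection path can leak a reference-counted buffer. It uses a tiny hand-written counter instead of Netty's `ByteBuf`, and every name in it (`RefCountSketch`, `Buf`, `handleLeaky`, `handleSafe`, `TooManyRequests`) is hypothetical.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical miniature of reference counting; this is not Netty's ByteBuf.
class RefCountSketch {

    static final class Buf {
        private final AtomicInteger refCnt = new AtomicInteger(1);

        /** Drops one reference and returns true when the count reaches zero. */
        boolean release() {
            int left = refCnt.decrementAndGet();
            if (left < 0) {
                throw new IllegalStateException("released too many times");
            }
            return left == 0;
        }

        int refCnt() {
            return refCnt.get();
        }
    }

    static final class TooManyRequests extends RuntimeException {
    }

    // Owns the frame, but the rejection path exits before releasing it.
    static void handleLeaky(Buf frame, boolean overloaded) {
        if (overloaded) {
            throw new TooManyRequests();
        }
        frame.release();
    }

    // Releases the frame on every path, including rejection.
    static void handleSafe(Buf frame, boolean overloaded) {
        try {
            if (overloaded) {
                throw new TooManyRequests();
            }
        } finally {
            frame.release();
        }
    }

    /** Runs one handler and returns the frame's reference count afterwards. */
    static int refCntAfter(boolean safe, boolean overloaded) {
        Buf frame = new Buf();
        try {
            if (safe) {
                handleSafe(frame, overloaded);
            } else {
                handleLeaky(frame, overloaded);
            }
        } catch (TooManyRequests e) {
            // The request was rejected; only the reference count matters here.
        }
        return frame.refCnt();
    }

    public static void main(String[] args) {
        System.out.println("leaky, overloaded: " + refCntAfter(false, true));
        System.out.println("safe, overloaded:  " + refCntAfter(true, true));
    }
}
```

A count that stays above zero after the handler returns is the situation the leak detector reports: the pooled memory behind the buffer can never be handed back to the allocator.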

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@pulsar.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [pulsar] leizhiyuan commented on issue #14957: Direct Memory Leak when TooManyRequestsException occurs

Posted by GitBox <gi...@apache.org>.

leizhiyuan commented on issue #14957:

URL: https://github.com/apache/pulsar/issues/14957#issuecomment-1085392831

Thanks to @hangc0276 for the help.

This only occurs in 2.7.2.

It cannot be reproduced on branch-2.7 or master.

[GitHub] [pulsar] leizhiyuan closed issue #14957: Direct Memory Leak when TooManyRequestsException occurs

Posted by GitBox <gi...@apache.org>.

leizhiyuan closed issue #14957:

URL: https://github.com/apache/pulsar/issues/14957

[GitHub] [pulsar] leizhiyuan edited a comment on issue #14957: Direct Memory Leak when TooManyRequestsException occurs

Posted by GitBox <gi...@apache.org>.

leizhiyuan edited a comment on issue #14957:

URL: https://github.com/apache/pulsar/issues/14957#issuecomment-1085392831

Thanks to @hangc0276 for the help.

This only occurs in 2.7.2.

It cannot be reproduced on branch-2.7 or master.
