You are viewing a plain text version of this content. The canonical link for it is here.

Posted to reviews@spark.apache.org by GitBox <gi...@apache.org> on 2022/11/25 07:25:49 UTC

[GitHub] [spark] beliefer opened a new pull request, #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

beliefer opened a new pull request, #38799:

URL: https://github.com/apache/spark/pull/38799

### What changes were proposed in this pull request?

introduce a new node `WindowGroupLimit` to filter out unnecessary rows based on rank computed on partial dataset.

it supports following pattern:

```

SELECT (... (row_number|rank|dense_rank)()

OVER (

PARTITION BY ...

ORDER BY ... ) AS rn)

WHERE rn (==|<|<=) k

AND other conditions

```

For these three rank-like functions (row_number|rank|dense_rank), the rank of a key computed on partial dataset always <= its final rank computed on the whole dataset,so we can safely discard rows with partial rank > k, anywhere.

This PR also take over some functions from https://github.com/apache/spark/pull/34367.

### Why are the changes needed?

1. reduce the shuffle write

2. solve skewed-window problem, a practical case was optimized from 2.5h to 26min.

3. improve the performance and TPC-DS.

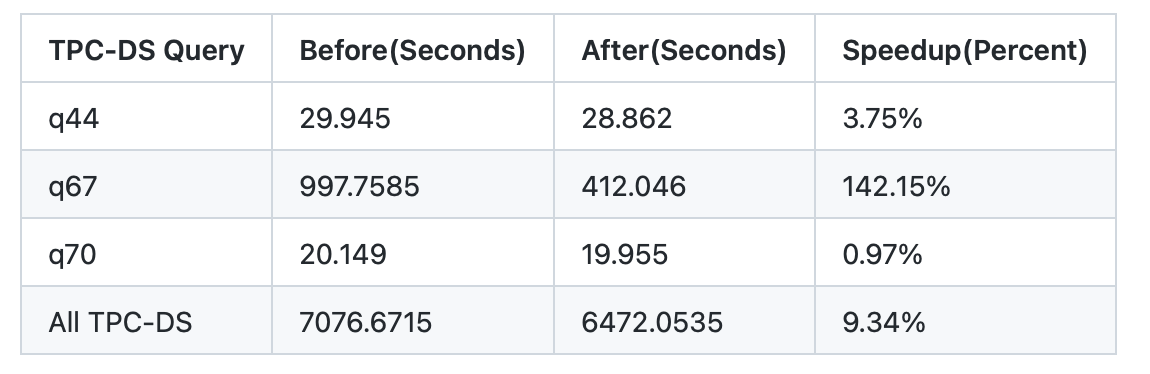

**Micro Benchmark**

TPC-DS data size: 2TB.

This improvement is valid for TPC-DS q67 and no regression for other test cases.

### Does this PR introduce _any_ user-facing change?

'No'.

Just update the inner implementation and add a new config.

### How was this patch tested?

1. new test suites

2. new micro benchmark

```

[info] Benchmark Top-K: Best Time(ms) Avg Time(ms) Stdev(ms) Rate(M/s) Per Row(ns) Relative

[info] -----------------------------------------------------------------------------------------------------------------------------------------------

[info] ROW_NUMBER (PARTITION: , WindowGroupLimit: false) 13036 15052 969 1.6 621.6 1.0X

[info] ROW_NUMBER (PARTITION: , WindowGroupLimit: true) 4269 4650 303 4.9 203.6 3.1X

[info] ROW_NUMBER (PARTITION: PARTITION BY b, WindowGroupLimit: false) 24159 25238 919 0.9 1152.0 0.5X

[info] ROW_NUMBER (PARTITION: PARTITION BY b, WindowGroupLimit: true) 6466 6594 104 3.2 308.3 2.0X

[info] RANK (PARTITION: , WindowGroupLimit: false) 11291 11691 252 1.9 538.4 1.2X

[info] RANK (PARTITION: , WindowGroupLimit: true) 3376 3709 218 6.2 161.0 3.9X

[info] RANK (PARTITION: PARTITION BY b, WindowGroupLimit: false) 24778 24927 69 0.8 1181.5 0.5X

[info] RANK (PARTITION: PARTITION BY b, WindowGroupLimit: true) 6531 6613 68 3.2 311.4 2.0X

[info] DENSE_RANK (PARTITION: , WindowGroupLimit: false) 11468 11730 142 1.8 546.8 1.1X

[info] DENSE_RANK (PARTITION: , WindowGroupLimit: true) 3459 3658 201 6.1 164.9 3.8X

[info] DENSE_RANK (PARTITION: PARTITION BY b, WindowGroupLimit: false) 24809 24961 173 0.8 1183.0 0.5X

[info] DENSE_RANK (PARTITION: PARTITION BY b, WindowGroupLimit: true) 6512 6579 44 3.2 310.5 2.0X

```

3. manual test on TPC-DS

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

Posted by "cloud-fan (via GitHub)" <gi...@apache.org>.

cloud-fan commented on code in PR #38799:

URL: https://github.com/apache/spark/pull/38799#discussion_r1111759712

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/window/WindowGroupLimitExec.scala:

##########

@@ -0,0 +1,249 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.execution.window

+

+import org.apache.spark.rdd.RDD

+import org.apache.spark.sql.catalyst.InternalRow

+import org.apache.spark.sql.catalyst.expressions.{Ascending, Attribute, DenseRank, Expression, Rank, RowNumber, SortOrder, UnsafeProjection, UnsafeRow}

+import org.apache.spark.sql.catalyst.expressions.codegen.GenerateOrdering

+import org.apache.spark.sql.catalyst.plans.physical.{AllTuples, ClusteredDistribution, Distribution, Partitioning}

+import org.apache.spark.sql.execution.{SparkPlan, UnaryExecNode}

+

+sealed trait WindowGroupLimitMode

+

+case object Partial extends WindowGroupLimitMode

+

+case object Final extends WindowGroupLimitMode

+

+/**

+ * This operator is designed to filter out unnecessary rows before WindowExec

+ * for top-k computation.

+ * @param partitionSpec Should be the same as [[WindowExec#partitionSpec]].

+ * @param orderSpec Should be the same as [[WindowExec#orderSpec]].

+ * @param rankLikeFunction The function to compute row rank, should be RowNumber/Rank/DenseRank.

+ * @param limit The limit for rank value.

+ * @param mode The mode describes [[WindowGroupLimitExec]] before or after shuffle.

+ * @param child The child spark plan.

+ */

+case class WindowGroupLimitExec(

+ partitionSpec: Seq[Expression],

+ orderSpec: Seq[SortOrder],

+ rankLikeFunction: Expression,

+ limit: Int,

+ mode: WindowGroupLimitMode,

+ child: SparkPlan) extends UnaryExecNode {

+

+ override def output: Seq[Attribute] = child.output

+

+ override def requiredChildDistribution: Seq[Distribution] = mode match {

+ case Partial => super.requiredChildDistribution

+ case Final =>

+ if (partitionSpec.isEmpty) {

+ AllTuples :: Nil

+ } else {

+ ClusteredDistribution(partitionSpec) :: Nil

+ }

+ }

+

+ override def requiredChildOrdering: Seq[Seq[SortOrder]] =

+ Seq(partitionSpec.map(SortOrder(_, Ascending)) ++ orderSpec)

+

+ override def outputOrdering: Seq[SortOrder] = child.outputOrdering

+

+ override def outputPartitioning: Partitioning = child.outputPartitioning

+

+ protected override def doExecute(): RDD[InternalRow] = rankLikeFunction match {

+ case _: RowNumber if partitionSpec.isEmpty =>

+ child.execute().mapPartitionsInternal(SimpleLimitIterator(_, limit))

+ case _: RowNumber =>

+ child.execute().mapPartitionsInternal(new GroupedLimitIterator(_, output, partitionSpec,

+ (input: Iterator[InternalRow]) => SimpleLimitIterator(input, limit)))

+ case _: Rank if partitionSpec.isEmpty =>

+ child.execute().mapPartitionsInternal(RankLimitIterator(output, _, orderSpec, limit))

+ case _: Rank =>

+ child.execute().mapPartitionsInternal(new GroupedLimitIterator(_, output, partitionSpec,

+ (input: Iterator[InternalRow]) => RankLimitIterator(output, input, orderSpec, limit)))

+ case _: DenseRank if partitionSpec.isEmpty =>

+ child.execute().mapPartitionsInternal(DenseRankLimitIterator(output, _, orderSpec, limit))

+ case _: DenseRank =>

+ child.execute().mapPartitionsInternal(new GroupedLimitIterator(_, output, partitionSpec,

+ (input: Iterator[InternalRow]) => DenseRankLimitIterator(output, input, orderSpec, limit)))

+ }

+

+ override protected def withNewChildInternal(newChild: SparkPlan): WindowGroupLimitExec =

+ copy(child = newChild)

+}

+

+abstract class BaseLimitIterator extends Iterator[InternalRow] {

+

+ def input: Iterator[InternalRow]

+

+ def limit: Int

+

+ var rank = 0

+

+ var nextRow: UnsafeRow = null

+

+ // Increase the rank value.

+ def increaseRank(): Unit

+

+ override def hasNext: Boolean = rank < limit && input.hasNext

+

+ override def next(): InternalRow = {

+ if (!hasNext) throw new NoSuchElementException

+ nextRow = input.next().asInstanceOf[UnsafeRow]

+ increaseRank()

+ nextRow

+ }

+

+ def reset(): Unit

+}

+

+case class SimpleLimitIterator(

+ input: Iterator[InternalRow],

+ limit: Int) extends BaseLimitIterator {

+

+ override def increaseRank(): Unit = {

+ rank += 1

+ }

+

+ override def reset(): Unit = {

+ rank = 0

+ }

+}

+

+trait OrderSpecProvider {

+ def output: Seq[Attribute]

+ def orderSpec: Seq[SortOrder]

+

+ val ordering = GenerateOrdering.generate(orderSpec, output)

+ var currentRankRow: UnsafeRow = null

+}

+

+case class RankLimitIterator(

+ output: Seq[Attribute],

+ input: Iterator[InternalRow],

+ orderSpec: Seq[SortOrder],

+ limit: Int) extends BaseLimitIterator with OrderSpecProvider {

+

+ var count = 0

+

+ override def increaseRank(): Unit = {

+ if (count == 0) {

+ currentRankRow = nextRow.copy()

+ } else {

+ if (ordering.compare(currentRankRow, nextRow) != 0) {

+ rank = count

+ currentRankRow = nextRow.copy()

+ }

+ }

+ count += 1

+ }

+

+ override def reset(): Unit = {

+ rank = 0

+ count = 0

+ }

+}

+

+case class DenseRankLimitIterator(

+ output: Seq[Attribute],

+ input: Iterator[InternalRow],

+ orderSpec: Seq[SortOrder],

+ limit: Int) extends BaseLimitIterator with OrderSpecProvider {

+

+ override def increaseRank(): Unit = {

+ if (currentRankRow == null) {

+ currentRankRow = nextRow.copy()

+ } else {

+ if (ordering.compare(currentRankRow, nextRow) != 0) {

+ rank += 1

+ currentRankRow = nextRow.copy()

+ }

+ }

+ }

+

+ override def reset(): Unit = {

+ rank = 0

+ }

+}

+

+class GroupedLimitIterator(

+ input: Iterator[InternalRow],

+ output: Seq[Attribute],

+ partitionSpec: Seq[Expression],

+ createLimitIterator: Iterator[InternalRow] => BaseLimitIterator)

+ extends Iterator[InternalRow] {

+

+ val grouping = UnsafeProjection.create(partitionSpec, output)

+

+ // Manage the stream and the grouping.

+ var nextRow: UnsafeRow = null

+ var nextGroup: UnsafeRow = null

+ var nextRowAvailable: Boolean = false

+ protected[this] def fetchNextRow(): Unit = {

+ nextRowAvailable = input.hasNext

+ if (nextRowAvailable) {

+ nextRow = input.next().asInstanceOf[UnsafeRow]

+ nextGroup = grouping(nextRow)

+ }

+ }

+ fetchNextRow()

+

+ var groupIterator: GroupIterator = _

+ var limitIterator: BaseLimitIterator = _

+ if (nextRowAvailable) {

+ groupIterator = new GroupIterator()

+ limitIterator = createLimitIterator(groupIterator)

+ }

+

+ override final def hasNext: Boolean = limitIterator.hasNext || {

+ groupIterator.skipRemainingRows()

+ limitIterator.reset()

+ groupIterator.hasNext

Review Comment:

How about

```

def hasNext: Boolean = {

if (!limitIterator.hasNext) {

// comments

groupIterator.skipRemainingRows()

limitIterator.reset()

}

limitIterator.hasNext

}

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] beliefer commented on pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

Posted by GitBox <gi...@apache.org>.

beliefer commented on PR #38799:

URL: https://github.com/apache/spark/pull/38799#issuecomment-1340278339

@zhengruifeng @cloud-fan Could you have any other suggestion ?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

Posted by GitBox <gi...@apache.org>.

cloud-fan commented on code in PR #38799:

URL: https://github.com/apache/spark/pull/38799#discussion_r1046751527

##########

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/InsertWindowGroupLimit.scala:

##########

@@ -0,0 +1,97 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.catalyst.optimizer

+

+import org.apache.spark.sql.catalyst.expressions.{Alias, Attribute, CurrentRow, DenseRank, EqualTo, Expression, ExpressionSet, GreaterThan, GreaterThanOrEqual, IntegerLiteral, LessThan, LessThanOrEqual, NamedExpression, PredicateHelper, Rank, RowFrame, RowNumber, SpecifiedWindowFrame, UnboundedPreceding, WindowExpression, WindowSpecDefinition}

+import org.apache.spark.sql.catalyst.plans.logical.{Filter, LocalRelation, LogicalPlan, Window, WindowGroupLimit}

+import org.apache.spark.sql.catalyst.rules.Rule

+import org.apache.spark.sql.catalyst.trees.TreePattern.{FILTER, WINDOW}

+

+/**

+ * Optimize the filter based on rank-like window function by reduce not required rows.

+ * This rule optimizes the following cases:

+ * {{{

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE rn = 5

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE 5 = rn

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE rn < 5

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE 5 > rn

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE rn <= 5

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE 5 >= rn

+ * }}}

+ */

+object InsertWindowGroupLimit extends Rule[LogicalPlan] with PredicateHelper {

+

+ /**

+ * Extract all the limit values from predicates.

+ */

+ def extractLimits(condition: Expression, attr: Attribute): Option[Int] = {

+ val limits = splitConjunctivePredicates(condition).collect {

+ case EqualTo(IntegerLiteral(limit), e) if e.semanticEquals(attr) => limit

+ case EqualTo(e, IntegerLiteral(limit)) if e.semanticEquals(attr) => limit

+ case LessThan(e, IntegerLiteral(limit)) if e.semanticEquals(attr) => limit - 1

+ case GreaterThan(IntegerLiteral(limit), e) if e.semanticEquals(attr) => limit - 1

+ case LessThanOrEqual(e, IntegerLiteral(limit)) if e.semanticEquals(attr) => limit

+ case GreaterThanOrEqual(IntegerLiteral(limit), e) if e.semanticEquals(attr) => limit

+ }

+

+ if (limits.nonEmpty) Some(limits.min) else None

+ }

+

+ private def supports(

+ windowExpressions: Seq[NamedExpression]): Boolean = windowExpressions.exists {

+ case Alias(WindowExpression(_: Rank | _: DenseRank | _: RowNumber, WindowSpecDefinition(_, _,

+ SpecifiedWindowFrame(RowFrame, UnboundedPreceding, CurrentRow))), _) => true

+ case _ => false

+ }

+

+ def apply(plan: LogicalPlan): LogicalPlan = {

+ if (conf.windowGroupLimitThreshold == -1) return plan

+

+ plan.transformWithPruning(_.containsAllPatterns(FILTER, WINDOW), ruleId) {

+ case filter @ Filter(condition,

+ window @ Window(windowExpressions, partitionSpec, orderSpec, child))

+ if !child.isInstanceOf[WindowGroupLimit] &&

+ supports(windowExpressions) && orderSpec.nonEmpty =>

+ val limits = windowExpressions.collect {

+ case alias @ Alias(WindowExpression(rankLikeFunction, _), _) =>

+ extractLimits(condition, alias.toAttribute).map((_, rankLikeFunction))

+ }.flatten

+

+ // multiple different rank-like functions unsupported.

+ if (limits.isEmpty || ExpressionSet(limits.map(_._2)).size > 1) {

+ filter

+ } else {

+ val minLimit = limits.minBy(_._1)

+ minLimit match {

Review Comment:

```suggestion

limits.minBy(_._1) match {

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

Posted by GitBox <gi...@apache.org>.

cloud-fan commented on code in PR #38799:

URL: https://github.com/apache/spark/pull/38799#discussion_r1036828144

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/SparkStrategies.scala:

##########

@@ -627,6 +627,87 @@ abstract class SparkStrategies extends QueryPlanner[SparkPlan] {

}

}

+ /**

+ * Optimize the filter based on rank-like window function by reduce not required rows.

+ * This rule optimizes the following cases:

+ * {{{

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE rn = 5

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE 5 = rn

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE rn < 5

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE 5 > rn

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE rn <= 5

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE 5 >= rn

+ * }}}

+ */

+ object WindowGroupLimit extends Strategy with PredicateHelper {

+

+ /**

+ * Extract all the limit values from predicates.

+ */

+ def extractLimits(condition: Expression, attr: Attribute): Option[Int] = {

+ val limits = splitConjunctivePredicates(condition).collect {

+ case EqualTo(IntegerLiteral(limit), e) if e.semanticEquals(attr) => limit

+ case EqualTo(e, IntegerLiteral(limit)) if e.semanticEquals(attr) => limit

+ case LessThan(e, IntegerLiteral(limit)) if e.semanticEquals(attr) => limit - 1

+ case GreaterThan(IntegerLiteral(limit), e) if e.semanticEquals(attr) => limit - 1

+ case LessThanOrEqual(e, IntegerLiteral(limit)) if e.semanticEquals(attr) => limit

+ case GreaterThanOrEqual(IntegerLiteral(limit), e) if e.semanticEquals(attr) => limit

+ }

+

+ if (limits.nonEmpty) Some(limits.min) else None

+ }

+

+ private def supports(

+ windowExpressions: Seq[NamedExpression]): Boolean = windowExpressions.exists {

+ case Alias(WindowExpression(_: Rank | _: DenseRank | _: RowNumber, WindowSpecDefinition(_, _,

+ SpecifiedWindowFrame(RowFrame, UnboundedPreceding, CurrentRow))), _) => true

+ case _ => false

+ }

+

+ def apply(plan: LogicalPlan): Seq[SparkPlan] = {

+ if (conf.windowGroupLimitThreshold == -1) return Nil

+

+ plan match {

+ case filter @ Filter(condition,

+ window @ logical.Window(windowExpressions, partitionSpec, orderSpec, child))

+ if !child.isInstanceOf[logical.Window] &&

+ supports(windowExpressions) && orderSpec.nonEmpty =>

Review Comment:

do we really require the window to only contain rank like functions?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] beliefer commented on a diff in pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

Posted by GitBox <gi...@apache.org>.

beliefer commented on code in PR #38799:

URL: https://github.com/apache/spark/pull/38799#discussion_r1034474429

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/window/WindowGroupLimitExec.scala:

##########

@@ -0,0 +1,236 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.execution.window

+

+import org.apache.spark.rdd.RDD

+import org.apache.spark.sql.catalyst.InternalRow

+import org.apache.spark.sql.catalyst.expressions.{Ascending, Attribute, DenseRank, Expression, Rank, RowNumber, SortOrder, UnsafeProjection, UnsafeRow}

+import org.apache.spark.sql.catalyst.expressions.codegen.GenerateOrdering

+import org.apache.spark.sql.catalyst.plans.physical.{AllTuples, ClusteredDistribution, Distribution, Partitioning}

+import org.apache.spark.sql.execution.{ExternalAppendOnlyUnsafeRowArray, SparkPlan, UnaryExecNode}

+

+sealed trait WindowGroupLimitMode

+

+case object Partial extends WindowGroupLimitMode

+

+case object Final extends WindowGroupLimitMode

+

+/**

+ * This operator is designed to filter out unnecessary rows before WindowExec

+ * for top-k computation.

+ * @param partitionSpec Should be the same as [[WindowExec#partitionSpec]]

+ * @param orderSpec Should be the same as [[WindowExec#orderSpec]]

+ * @param rankLikeFunction The function to compute row rank, should be RowNumber/Rank/DenseRank.

+ */

+case class WindowGroupLimitExec(

+ partitionSpec: Seq[Expression],

+ orderSpec: Seq[SortOrder],

+ rankLikeFunction: Expression,

+ limit: Int,

+ mode: WindowGroupLimitMode,

+ child: SparkPlan) extends UnaryExecNode {

+

+ override def output: Seq[Attribute] = child.output

+

+ override def requiredChildDistribution: Seq[Distribution] = mode match {

+ case Partial => super.requiredChildDistribution

+ case Final =>

+ if (partitionSpec.isEmpty) {

+ // Only show warning when the number of bytes is larger than 100 MiB?

+ logWarning("No Partition Defined for Window operation! Moving all data to a single "

+ + "partition, this can cause serious performance degradation.")

+ AllTuples :: Nil

+ } else {

+ ClusteredDistribution(partitionSpec) :: Nil

+ }

+ }

+

+ override def requiredChildOrdering: Seq[Seq[SortOrder]] =

Review Comment:

Yes. I have another PR to improve this issue.

Please see https://github.com/apache/spark/pull/38689

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

Posted by GitBox <gi...@apache.org>.

cloud-fan commented on code in PR #38799:

URL: https://github.com/apache/spark/pull/38799#discussion_r1033303620

##########

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/InsertWindowGroupLimit.scala:

##########

@@ -0,0 +1,97 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.catalyst.optimizer

+

+import org.apache.spark.sql.catalyst.expressions.{Alias, Attribute, CurrentRow, DenseRank, EqualTo, Expression, GreaterThan, GreaterThanOrEqual, IntegerLiteral, LessThan, LessThanOrEqual, NamedExpression, PredicateHelper, Rank, RowFrame, RowNumber, SpecifiedWindowFrame, UnboundedPreceding, WindowExpression, WindowSpecDefinition}

+import org.apache.spark.sql.catalyst.plans.logical.{Filter, LocalRelation, LogicalPlan, Window, WindowGroupLimit}

+import org.apache.spark.sql.catalyst.rules.Rule

+import org.apache.spark.sql.catalyst.trees.TreePattern.{FILTER, WINDOW}

+

+/**

+ * Optimize the filter based on rank-like window function by reduce not required rows.

+ * This rule optimizes the following cases:

+ * {{{

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE rn = 5

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE 5 = rn

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE rn < 5

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE 5 > rn

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE rn <= 5

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE 5 >= rn

+ * }}}

+ */

+object InsertWindowGroupLimit extends Rule[LogicalPlan] with PredicateHelper {

+

+ /**

+ * Extract all the limit values from predicates.

+ */

+ def extractLimits(condition: Expression, attr: Attribute): Option[Int] = {

+ val limits = splitConjunctivePredicates(condition).collect {

+ case EqualTo(IntegerLiteral(limit), e) if e.semanticEquals(attr) => limit

+ case EqualTo(e, IntegerLiteral(limit)) if e.semanticEquals(attr) => limit

+ case LessThan(e, IntegerLiteral(limit)) if e.semanticEquals(attr) => limit - 1

+ case GreaterThan(IntegerLiteral(limit), e) if e.semanticEquals(attr) => limit - 1

+ case LessThanOrEqual(e, IntegerLiteral(limit)) if e.semanticEquals(attr) => limit

+ case GreaterThanOrEqual(IntegerLiteral(limit), e) if e.semanticEquals(attr) => limit

+ }

+

+ if (limits.nonEmpty) Some(limits.min) else None

+ }

+

+ private def supports(

+ windowExpressions: Seq[NamedExpression]): Boolean = windowExpressions.forall {

+ case Alias(WindowExpression(_: Rank | _: DenseRank | _: RowNumber, WindowSpecDefinition(_, _,

+ SpecifiedWindowFrame(RowFrame, UnboundedPreceding, CurrentRow))), _) => true

+ case _ => false

+ }

+

+ def apply(plan: LogicalPlan): LogicalPlan = {

+ if (!conf.windowGroupLimitEnabled) return plan

+

+ plan.transformWithPruning(

+ _.containsAllPatterns(FILTER, WINDOW), ruleId) {

+ case filter @ Filter(condition,

+ window @ Window(windowExpressions, partitionSpec, orderSpec, child))

+ if !child.isInstanceOf[WindowGroupLimit] && !child.isInstanceOf[Window] &&

+ supports(windowExpressions) && orderSpec.nonEmpty =>

Review Comment:

We should only check related window expressios. e.g. `select other_window_func, row_number() ... as rn where rc = 1` should be supported.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] zhengruifeng commented on pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

Posted by GitBox <gi...@apache.org>.

zhengruifeng commented on PR #38799:

URL: https://github.com/apache/spark/pull/38799#issuecomment-1330127603

+1 on add a separate config as the threshold to trigger this optimization.

since new logical and physical plans are added, should also consider how it affect existing rules.

thanks for taking this over @beliefer

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

Posted by "cloud-fan (via GitHub)" <gi...@apache.org>.

cloud-fan commented on code in PR #38799:

URL: https://github.com/apache/spark/pull/38799#discussion_r1100230288

##########

sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala:

##########

@@ -2580,6 +2580,18 @@ object SQLConf {

.intConf

.createWithDefault(SHUFFLE_SPILL_NUM_ELEMENTS_FORCE_SPILL_THRESHOLD.defaultValue.get)

+ val WINDOW_GROUP_LIMIT_THRESHOLD =

+ buildConf("spark.sql.optimizer.windowGroupLimitThreshold")

+ .internal()

+ .doc("Threshold for filter the dataset by the window group limit before" +

Review Comment:

```

Threshold for triggering `InsertWindowGroupLimit`. 0 means disabling the optimization.

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

Posted by GitBox <gi...@apache.org>.

cloud-fan commented on code in PR #38799:

URL: https://github.com/apache/spark/pull/38799#discussion_r1046764675

##########

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/InsertWindowGroupLimit.scala:

##########

@@ -0,0 +1,97 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.catalyst.optimizer

+

+import org.apache.spark.sql.catalyst.expressions.{Alias, Attribute, CurrentRow, DenseRank, EqualTo, Expression, ExpressionSet, GreaterThan, GreaterThanOrEqual, IntegerLiteral, LessThan, LessThanOrEqual, NamedExpression, PredicateHelper, Rank, RowFrame, RowNumber, SpecifiedWindowFrame, UnboundedPreceding, WindowExpression, WindowSpecDefinition}

+import org.apache.spark.sql.catalyst.plans.logical.{Filter, LocalRelation, LogicalPlan, Window, WindowGroupLimit}

+import org.apache.spark.sql.catalyst.rules.Rule

+import org.apache.spark.sql.catalyst.trees.TreePattern.{FILTER, WINDOW}

+

+/**

+ * Optimize the filter based on rank-like window function by reduce not required rows.

+ * This rule optimizes the following cases:

+ * {{{

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE rn = 5

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE 5 = rn

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE rn < 5

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE 5 > rn

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE rn <= 5

+ * SELECT *, ROW_NUMBER() OVER(PARTITION BY k ORDER BY a) AS rn FROM Tab1 WHERE 5 >= rn

+ * }}}

+ */

+object InsertWindowGroupLimit extends Rule[LogicalPlan] with PredicateHelper {

+

+ /**

+ * Extract all the limit values from predicates.

+ */

+ def extractLimits(condition: Expression, attr: Attribute): Option[Int] = {

+ val limits = splitConjunctivePredicates(condition).collect {

+ case EqualTo(IntegerLiteral(limit), e) if e.semanticEquals(attr) => limit

+ case EqualTo(e, IntegerLiteral(limit)) if e.semanticEquals(attr) => limit

+ case LessThan(e, IntegerLiteral(limit)) if e.semanticEquals(attr) => limit - 1

+ case GreaterThan(IntegerLiteral(limit), e) if e.semanticEquals(attr) => limit - 1

+ case LessThanOrEqual(e, IntegerLiteral(limit)) if e.semanticEquals(attr) => limit

+ case GreaterThanOrEqual(IntegerLiteral(limit), e) if e.semanticEquals(attr) => limit

+ }

+

+ if (limits.nonEmpty) Some(limits.min) else None

+ }

+

+ private def supports(

+ windowExpressions: Seq[NamedExpression]): Boolean = windowExpressions.exists {

+ case Alias(WindowExpression(_: Rank | _: DenseRank | _: RowNumber, WindowSpecDefinition(_, _,

+ SpecifiedWindowFrame(RowFrame, UnboundedPreceding, CurrentRow))), _) => true

+ case _ => false

+ }

+

+ def apply(plan: LogicalPlan): LogicalPlan = {

+ if (conf.windowGroupLimitThreshold == -1) return plan

+

+ plan.transformWithPruning(_.containsAllPatterns(FILTER, WINDOW), ruleId) {

+ case filter @ Filter(condition,

+ window @ Window(windowExpressions, partitionSpec, orderSpec, child))

+ if !child.isInstanceOf[WindowGroupLimit] &&

+ supports(windowExpressions) && orderSpec.nonEmpty =>

+ val limits = windowExpressions.collect {

+ case alias @ Alias(WindowExpression(rankLikeFunction, _), _) =>

+ extractLimits(condition, alias.toAttribute).map((_, rankLikeFunction))

+ }.flatten

+

+ // multiple different rank-like functions unsupported.

+ if (limits.isEmpty || ExpressionSet(limits.map(_._2)).size > 1) {

Review Comment:

> ExpressionSet(limits.map(_._2)).size > 1

Do we really need this condition? We are inserting a limit-like operator, and we can just randomly pick one supported window function to do limit.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

Posted by GitBox <gi...@apache.org>.

cloud-fan commented on code in PR #38799:

URL: https://github.com/apache/spark/pull/38799#discussion_r1046756910

##########

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/optimizer/InsertWindowGroupLimitSuite.scala:

##########

@@ -0,0 +1,308 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.catalyst.optimizer

+

+import org.apache.spark.sql.Row

+import org.apache.spark.sql.catalyst.dsl.expressions._

+import org.apache.spark.sql.catalyst.dsl.plans._

+import org.apache.spark.sql.catalyst.expressions.{CurrentRow, DenseRank, Literal, NthValue, NTile, Rank, RowFrame, RowNumber, SpecifiedWindowFrame, UnboundedPreceding}

+import org.apache.spark.sql.catalyst.plans.PlanTest

+import org.apache.spark.sql.catalyst.plans.logical.{LocalRelation, LogicalPlan}

+import org.apache.spark.sql.catalyst.rules.RuleExecutor

+import org.apache.spark.sql.internal.SQLConf

+

+class InsertWindowGroupLimitSuite extends PlanTest {

+ private object Optimize extends RuleExecutor[LogicalPlan] {

+ val batches =

+ Batch("Insert WindowGroupLimit", FixedPoint(10),

+ CollapseProject,

+ RemoveNoopOperators,

+ PushDownPredicates,

+ InsertWindowGroupLimit) :: Nil

+ }

+

+ private object WithoutOptimize extends RuleExecutor[LogicalPlan] {

+ val batches =

+ Batch("Insert WindowGroupLimit", FixedPoint(10),

+ CollapseProject,

+ RemoveNoopOperators,

+ PushDownPredicates) :: Nil

+ }

+

+ private val testRelation = LocalRelation.fromExternalRows(

+ Seq("a".attr.int, "b".attr.int, "c".attr.int),

+ 1.to(10).map(i => Row(i % 3, 2, i)))

Review Comment:

This is optimizer test, we don't need data.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] beliefer commented on a diff in pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

Posted by GitBox <gi...@apache.org>.

beliefer commented on code in PR #38799:

URL: https://github.com/apache/spark/pull/38799#discussion_r1067823587

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/window/WindowGroupLimitExec.scala:

##########

@@ -0,0 +1,241 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.execution.window

+

+import org.apache.spark.rdd.RDD

+import org.apache.spark.sql.catalyst.InternalRow

+import org.apache.spark.sql.catalyst.expressions.{Ascending, Attribute, DenseRank, Expression, Rank, RowNumber, SortOrder, UnsafeProjection, UnsafeRow}

+import org.apache.spark.sql.catalyst.expressions.codegen.GenerateOrdering

+import org.apache.spark.sql.catalyst.plans.physical.{AllTuples, ClusteredDistribution, Distribution, Partitioning}

+import org.apache.spark.sql.execution.{SparkPlan, UnaryExecNode}

+

+sealed trait WindowGroupLimitMode

+

+case object Partial extends WindowGroupLimitMode

+

+case object Final extends WindowGroupLimitMode

+

+/**

+ * This operator is designed to filter out unnecessary rows before WindowExec

+ * for top-k computation.

+ * @param partitionSpec Should be the same as [[WindowExec#partitionSpec]]

+ * @param orderSpec Should be the same as [[WindowExec#orderSpec]]

+ * @param rankLikeFunction The function to compute row rank, should be RowNumber/Rank/DenseRank.

+ */

+case class WindowGroupLimitExec(

+ partitionSpec: Seq[Expression],

+ orderSpec: Seq[SortOrder],

+ rankLikeFunction: Expression,

+ limit: Int,

+ mode: WindowGroupLimitMode,

+ child: SparkPlan) extends UnaryExecNode {

+

+ override def output: Seq[Attribute] = child.output

+

+ override def requiredChildDistribution: Seq[Distribution] = mode match {

+ case Partial => super.requiredChildDistribution

+ case Final =>

+ if (partitionSpec.isEmpty) {

+ AllTuples :: Nil

+ } else {

+ ClusteredDistribution(partitionSpec) :: Nil

+ }

+ }

+

+ override def requiredChildOrdering: Seq[Seq[SortOrder]] =

+ Seq(partitionSpec.map(SortOrder(_, Ascending)) ++ orderSpec)

+

+ override def outputOrdering: Seq[SortOrder] = child.outputOrdering

+

+ override def outputPartitioning: Partitioning = child.outputPartitioning

+

+ protected override def doExecute(): RDD[InternalRow] = rankLikeFunction match {

+ case _: RowNumber =>

+ child.execute().mapPartitions(SimpleGroupLimitIterator(partitionSpec, output, _, limit))

+ case _: Rank =>

+ child.execute().mapPartitions(

+ RankGroupLimitIterator(partitionSpec, output, _, orderSpec, limit))

+ case _: DenseRank =>

+ child.execute().mapPartitions(

+ DenseRankGroupLimitIterator(partitionSpec, output, _, orderSpec, limit))

+ }

+

+ override protected def withNewChildInternal(newChild: SparkPlan): WindowGroupLimitExec =

+ copy(child = newChild)

+}

+

+abstract class WindowIterator extends Iterator[InternalRow] {

+

+ def partitionSpec: Seq[Expression]

+

+ def output: Seq[Attribute]

+

+ def input: Iterator[InternalRow]

+

+ def limit: Int

+

+ val grouping = UnsafeProjection.create(partitionSpec, output)

+

+ // Manage the stream and the grouping.

+ var nextRow: UnsafeRow = null

+ var nextGroup: UnsafeRow = null

+ var nextRowAvailable: Boolean = false

+ protected[this] def fetchNextRow(): Unit = {

+ nextRowAvailable = input.hasNext

+ if (nextRowAvailable) {

+ nextRow = input.next().asInstanceOf[UnsafeRow]

+ nextGroup = grouping(nextRow)

+ } else {

+ nextRow = null

+ nextGroup = null

+ }

+ }

+ fetchNextRow()

+

+ var rank = 0

+

+ // Increase the rank value.

+ def increaseRank(): Unit

+

+ // Clear the rank value.

+ def clearRank(): Unit

+

+ var bufferIterator: Iterator[InternalRow] = _

+

+ private[this] def fetchNextGroup(): Unit = {

+ clearRank()

+ bufferIterator = createGroupIterator()

+ }

+

+ override final def hasNext: Boolean =

+ (bufferIterator != null && bufferIterator.hasNext) || nextRowAvailable

Review Comment:

When current group doesn't have more rows, `clearRank` will be called.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] beliefer commented on pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

Posted by "beliefer (via GitHub)" <gi...@apache.org>.

beliefer commented on PR #38799:

URL: https://github.com/apache/spark/pull/38799#issuecomment-1423565384

> there are 3 tpc queries having plan change, what's their perf result?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

Posted by "cloud-fan (via GitHub)" <gi...@apache.org>.

cloud-fan commented on code in PR #38799:

URL: https://github.com/apache/spark/pull/38799#discussion_r1108420829

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/window/WindowGroupLimitExec.scala:

##########

@@ -0,0 +1,281 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.execution.window

+

+import org.apache.spark.rdd.RDD

+import org.apache.spark.sql.catalyst.InternalRow

+import org.apache.spark.sql.catalyst.expressions.{Ascending, Attribute, DenseRank, Expression, Rank, RowNumber, SortOrder, UnsafeProjection, UnsafeRow}

+import org.apache.spark.sql.catalyst.expressions.codegen.GenerateOrdering

+import org.apache.spark.sql.catalyst.plans.physical.{AllTuples, ClusteredDistribution, Distribution, Partitioning}

+import org.apache.spark.sql.execution.{SparkPlan, UnaryExecNode}

+

+sealed trait WindowGroupLimitMode

+

+case object Partial extends WindowGroupLimitMode

+

+case object Final extends WindowGroupLimitMode

+

+/**

+ * This operator is designed to filter out unnecessary rows before WindowExec

+ * for top-k computation.

+ * @param partitionSpec Should be the same as [[WindowExec#partitionSpec]].

+ * @param orderSpec Should be the same as [[WindowExec#orderSpec]].

+ * @param rankLikeFunction The function to compute row rank, should be RowNumber/Rank/DenseRank.

+ * @param limit The limit for rank value.

+ * @param mode The mode describes [[WindowGroupLimitExec]] before or after shuffle.

+ * @param child The child spark plan.

+ */

+case class WindowGroupLimitExec(

+ partitionSpec: Seq[Expression],

+ orderSpec: Seq[SortOrder],

+ rankLikeFunction: Expression,

+ limit: Int,

+ mode: WindowGroupLimitMode,

+ child: SparkPlan) extends UnaryExecNode {

+

+ override def output: Seq[Attribute] = child.output

+

+ override def requiredChildDistribution: Seq[Distribution] = mode match {

+ case Partial => super.requiredChildDistribution

+ case Final =>

+ if (partitionSpec.isEmpty) {

+ AllTuples :: Nil

+ } else {

+ ClusteredDistribution(partitionSpec) :: Nil

+ }

+ }

+

+ override def requiredChildOrdering: Seq[Seq[SortOrder]] =

+ Seq(partitionSpec.map(SortOrder(_, Ascending)) ++ orderSpec)

+

+ override def outputOrdering: Seq[SortOrder] = child.outputOrdering

+

+ override def outputPartitioning: Partitioning = child.outputPartitioning

+

+ protected override def doExecute(): RDD[InternalRow] = rankLikeFunction match {

+ case _: RowNumber if partitionSpec.isEmpty =>

+ child.execute().mapPartitionsInternal(SimpleLimitIterator(_, limit))

+ case _: RowNumber =>

+ child.execute().mapPartitionsInternal(

+ SimpleGroupLimitIterator(partitionSpec, output, _, limit))

+ case _: Rank if partitionSpec.isEmpty =>

+ child.execute().mapPartitionsInternal(RankLimitIterator(output, _, orderSpec, limit))

+ case _: Rank =>

+ child.execute().mapPartitionsInternal(

+ RankGroupLimitIterator(partitionSpec, output, _, orderSpec, limit))

+ case _: DenseRank if partitionSpec.isEmpty =>

+ child.execute().mapPartitionsInternal(DenseRankLimitIterator(output, _, orderSpec, limit))

+ case _: DenseRank =>

+ child.execute().mapPartitionsInternal(

+ DenseRankGroupLimitIterator(partitionSpec, output, _, orderSpec, limit))

+ }

+

+ override protected def withNewChildInternal(newChild: SparkPlan): WindowGroupLimitExec =

+ copy(child = newChild)

+}

+

+abstract class BaseLimitIterator extends Iterator[InternalRow] {

+

+ def input: Iterator[InternalRow]

+

+ def limit: Int

+

+ var rank = 0

+

+ var nextRow: UnsafeRow = null

+

+ // Increase the rank value.

+ def increaseRank(): Unit

+

+ override def hasNext: Boolean = rank < limit && input.hasNext

+

+ override def next(): InternalRow = {

+ nextRow = input.next().asInstanceOf[UnsafeRow]

+ increaseRank()

+ nextRow

+ }

+}

+

+case class SimpleLimitIterator(

+ input: Iterator[InternalRow],

+ limit: Int) extends BaseLimitIterator {

+

+ override def increaseRank(): Unit = {

+ rank += 1

+ }

+}

+

+trait OrderSpecProvider {

+ def output: Seq[Attribute]

+ def orderSpec: Seq[SortOrder]

+

+ val ordering = GenerateOrdering.generate(orderSpec, output)

+ var currentRankRow: UnsafeRow = null

+}

+

+case class RankLimitIterator(

+ output: Seq[Attribute],

+ input: Iterator[InternalRow],

+ orderSpec: Seq[SortOrder],

+ limit: Int) extends BaseLimitIterator with OrderSpecProvider {

+

+ var count = 0

+

+ override def increaseRank(): Unit = {

+ if (count == 0) {

+ currentRankRow = nextRow.copy()

+ } else {

+ if (ordering.compare(currentRankRow, nextRow) != 0) {

+ rank = count

+ currentRankRow = nextRow.copy()

+ }

+ }

+ count += 1

+ }

+}

+

+case class DenseRankLimitIterator(

+ output: Seq[Attribute],

+ input: Iterator[InternalRow],

+ orderSpec: Seq[SortOrder],

+ limit: Int) extends BaseLimitIterator with OrderSpecProvider {

+

+ override def increaseRank(): Unit = {

+ if (currentRankRow == null) {

+ currentRankRow = nextRow.copy()

+ } else {

+ if (ordering.compare(currentRankRow, nextRow) != 0) {

+ rank += 1

+ currentRankRow = nextRow.copy()

+ }

+ }

+ }

+}

+

+trait WindowIterator extends Iterator[InternalRow] {

Review Comment:

let's make it simpler:

```

class GroupedLimitIterator(input, partitionSpec, createLimitIterator: Iterator => BaseLimitIterator) ... {

var groupIterator = null

var limitIterator = null

if (input.hasNext) {

groupIterator = new GroupIterator(input, partitionSpec)

limitIterator = createLimitIterator(groupIterator)

}

def hasNext = limitIterator != null && limitIterator.hasNext

def next = {

if (!hasNext) throw NoSuchElementException

val res = limitIterator.next

if (!limitIterator.hasNext) {

groupIterator.skipRemainingRows()

limitIterator.reset()

}

res

}

}

```

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] beliefer commented on a diff in pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

Posted by "beliefer (via GitHub)" <gi...@apache.org>.

beliefer commented on code in PR #38799:

URL: https://github.com/apache/spark/pull/38799#discussion_r1100920458

##########

sql/core/src/test/scala/org/apache/spark/sql/DataFrameWindowFunctionsSuite.scala:

##########

@@ -1265,4 +1265,168 @@ class DataFrameWindowFunctionsSuite extends QueryTest

)

)

}

+

+ test("SPARK-37099: Insert window group limit node for top-k computation") {

+

+ val nullStr: String = null

+ val df = Seq(

+ ("a", 0, "c", 1.0),

+ ("a", 1, "x", 2.0),

+ ("a", 2, "y", 3.0),

+ ("a", 3, "z", -1.0),

+ ("a", 4, "", 2.0),

+ ("a", 4, "", 2.0),

+ ("b", 1, "h", Double.NaN),

+ ("b", 1, "n", Double.PositiveInfinity),

+ ("c", 1, "z", -2.0),

+ ("c", 1, "a", -4.0),

+ ("c", 2, nullStr, 5.0)).toDF("key", "value", "order", "value2")

+

+ val window = Window.partitionBy($"key").orderBy($"order".asc_nulls_first)

+ val window2 = Window.partitionBy($"key").orderBy($"order".desc_nulls_first)

+

+ Seq(-1, 100).foreach { threshold =>

+ withSQLConf(SQLConf.WINDOW_GROUP_LIMIT_THRESHOLD.key -> threshold.toString) {

+ Seq($"rn" === 0, $"rn" < 1, $"rn" <= 0).foreach { condition =>

+ checkAnswer(df.withColumn("rn", row_number().over(window)).where(condition),

+ Seq.empty[Row]

+ )

+ }

+

+ Seq($"rn" === 1, $"rn" < 2, $"rn" <= 1).foreach { condition =>

+ checkAnswer(df.withColumn("rn", row_number().over(window)).where(condition),

+ Seq(

+ Row("a", 4, "", 2.0, 1),

+ Row("b", 1, "h", Double.NaN, 1),

+ Row("c", 2, null, 5.0, 1)

+ )

+ )

+

+ checkAnswer(df.withColumn("rn", rank().over(window)).where(condition),

+ Seq(

+ Row("a", 4, "", 2.0, 1),

+ Row("a", 4, "", 2.0, 1),

+ Row("b", 1, "h", Double.NaN, 1),

+ Row("c", 2, null, 5.0, 1)

+ )

+ )

+

+ checkAnswer(df.withColumn("rn", dense_rank().over(window)).where(condition),

+ Seq(

+ Row("a", 4, "", 2.0, 1),

+ Row("a", 4, "", 2.0, 1),

+ Row("b", 1, "h", Double.NaN, 1),

+ Row("c", 2, null, 5.0, 1)

+ )

+ )

+ }

+

+ Seq($"rn" < 3, $"rn" <= 2).foreach { condition =>

+ checkAnswer(df.withColumn("rn", row_number().over(window)).where(condition),

+ Seq(

+ Row("a", 4, "", 2.0, 1),

+ Row("a", 4, "", 2.0, 2),

+ Row("b", 1, "h", Double.NaN, 1),

+ Row("b", 1, "n", Double.PositiveInfinity, 2),

+ Row("c", 1, "a", -4.0, 2),

+ Row("c", 2, null, 5.0, 1)

+ )

+ )

+

+ checkAnswer(df.withColumn("rn", rank().over(window)).where(condition),

+ Seq(

+ Row("a", 4, "", 2.0, 1),

+ Row("a", 4, "", 2.0, 1),

+ Row("b", 1, "h", Double.NaN, 1),

+ Row("b", 1, "n", Double.PositiveInfinity, 2),

+ Row("c", 1, "a", -4.0, 2),

+ Row("c", 2, null, 5.0, 1)

+ )

+ )

+

+ checkAnswer(df.withColumn("rn", dense_rank().over(window)).where(condition),

+ Seq(

+ Row("a", 0, "c", 1.0, 2),

+ Row("a", 4, "", 2.0, 1),

+ Row("a", 4, "", 2.0, 1),

+ Row("b", 1, "h", Double.NaN, 1),

+ Row("b", 1, "n", Double.PositiveInfinity, 2),

+ Row("c", 1, "a", -4.0, 2),

+ Row("c", 2, null, 5.0, 1)

+ )

+ )

+ }

+

+ val condition = $"rn" === 2 && $"value2" > 0.5

+ checkAnswer(df.withColumn("rn", row_number().over(window)).where(condition),

+ Seq(

+ Row("a", 4, "", 2.0, 2),

+ Row("b", 1, "n", Double.PositiveInfinity, 2)

+ )

+ )

+

+ checkAnswer(df.withColumn("rn", rank().over(window)).where(condition),

+ Seq(

+ Row("b", 1, "n", Double.PositiveInfinity, 2)

+ )

+ )

+

+ checkAnswer(df.withColumn("rn", dense_rank().over(window)).where(condition),

+ Seq(

+ Row("a", 0, "c", 1.0, 2),

+ Row("b", 1, "n", Double.PositiveInfinity, 2)

+ )

+ )

+

+ val multipleRowNumbers = df

+ .withColumn("rn", row_number().over(window))

+ .withColumn("rn2", row_number().over(window))

+ .where('rn < 2 && 'rn2 < 3)

+ checkAnswer(multipleRowNumbers,

+ Seq(

+ Row("a", 4, "", 2.0, 1, 1),

+ Row("b", 1, "h", Double.NaN, 1, 1),

+ Row("c", 2, null, 5.0, 1, 1)

+ )

+ )

+

+ val multipleRanks = df

+ .withColumn("rn", rank().over(window))

+ .withColumn("rn2", rank().over(window))

+ .where('rn < 2 && 'rn2 < 3)

+ checkAnswer(multipleRanks,

+ Seq(

+ Row("a", 4, "", 2.0, 1, 1),

+ Row("a", 4, "", 2.0, 1, 1),

+ Row("b", 1, "h", Double.NaN, 1, 1),

+ Row("c", 2, null, 5.0, 1, 1)

+ )

+ )

+

+ val multipleDenseRanks = df

+ .withColumn("rn", dense_rank().over(window))

+ .withColumn("rn2", dense_rank().over(window))

+ .where('rn < 2 && 'rn2 < 3)

+ checkAnswer(multipleDenseRanks,

+ Seq(

+ Row("a", 4, "", 2.0, 1, 1),

+ Row("a", 4, "", 2.0, 1, 1),

+ Row("b", 1, "h", Double.NaN, 1, 1),

+ Row("c", 2, null, 5.0, 1, 1)

+ )

+ )

+

+ val multipleWindowsOne = df

+ .withColumn("rn2", row_number().over(window2))

+ .withColumn("rn", row_number().over(window))

Review Comment:

Yes. The inner Window will not be optimized.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] beliefer commented on pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

Posted by GitBox <gi...@apache.org>.

beliefer commented on PR #38799:

URL: https://github.com/apache/spark/pull/38799#issuecomment-1352608435

The failure GA is unrelated with this PR.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] cloud-fan commented on a diff in pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

Posted by GitBox <gi...@apache.org>.

cloud-fan commented on code in PR #38799:

URL: https://github.com/apache/spark/pull/38799#discussion_r1046828353

##########

sql/core/src/main/scala/org/apache/spark/sql/execution/window/WindowGroupLimitExec.scala:

##########

@@ -0,0 +1,251 @@

+/*

+ * Licensed to the Apache Software Foundation (ASF) under one or more

+ * contributor license agreements. See the NOTICE file distributed with

+ * this work for additional information regarding copyright ownership.

+ * The ASF licenses this file to You under the Apache License, Version 2.0

+ * (the "License"); you may not use this file except in compliance with

+ * the License. You may obtain a copy of the License at

+ *

+ * http://www.apache.org/licenses/LICENSE-2.0

+ *

+ * Unless required by applicable law or agreed to in writing, software

+ * distributed under the License is distributed on an "AS IS" BASIS,

+ * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ * See the License for the specific language governing permissions and

+ * limitations under the License.

+ */

+

+package org.apache.spark.sql.execution.window

+

+import org.apache.spark.rdd.RDD

+import org.apache.spark.sql.catalyst.InternalRow

+import org.apache.spark.sql.catalyst.expressions.{Ascending, Attribute, DenseRank, Expression, Rank, RowNumber, SortOrder, UnsafeProjection, UnsafeRow}

+import org.apache.spark.sql.catalyst.expressions.codegen.GenerateOrdering

+import org.apache.spark.sql.catalyst.plans.physical.{AllTuples, ClusteredDistribution, Distribution, Partitioning}

+import org.apache.spark.sql.execution.{SparkPlan, UnaryExecNode}

+

+sealed trait WindowGroupLimitMode

+

+case object Partial extends WindowGroupLimitMode

+

+case object Final extends WindowGroupLimitMode

+

+/**

+ * This operator is designed to filter out unnecessary rows before WindowExec

+ * for top-k computation.

+ * @param partitionSpec Should be the same as [[WindowExec#partitionSpec]]

+ * @param orderSpec Should be the same as [[WindowExec#orderSpec]]

+ * @param rankLikeFunction The function to compute row rank, should be RowNumber/Rank/DenseRank.

+ */

+case class WindowGroupLimitExec(

+ partitionSpec: Seq[Expression],

+ orderSpec: Seq[SortOrder],

+ rankLikeFunction: Expression,

+ limit: Int,

+ mode: WindowGroupLimitMode,

+ child: SparkPlan) extends UnaryExecNode {

+

+ override def output: Seq[Attribute] = child.output

+

+ override def requiredChildDistribution: Seq[Distribution] = mode match {

+ case Partial => super.requiredChildDistribution

+ case Final =>

+ if (partitionSpec.isEmpty) {

+ AllTuples :: Nil

+ } else {

+ ClusteredDistribution(partitionSpec) :: Nil

+ }

+ }

+

+ override def requiredChildOrdering: Seq[Seq[SortOrder]] =

+ Seq(partitionSpec.map(SortOrder(_, Ascending)) ++ orderSpec)

+

+ override def outputOrdering: Seq[SortOrder] = child.outputOrdering

+

+ override def outputPartitioning: Partitioning = child.outputPartitioning

+

+ protected override def doExecute(): RDD[InternalRow] = rankLikeFunction match {

+ case _: RowNumber =>

+ child.execute().mapPartitions(SimpleGroupLimitIterator(partitionSpec, output, _, limit))

+ case _: Rank =>

+ child.execute().mapPartitions(

+ RankGroupLimitIterator(partitionSpec, output, _, orderSpec, limit))

+ case _: DenseRank =>

+ child.execute().mapPartitions(

+ DenseRankGroupLimitIterator(partitionSpec, output, _, orderSpec, limit))

+ }

+

+ override protected def withNewChildInternal(newChild: SparkPlan): WindowGroupLimitExec =

+ copy(child = newChild)

+}

+

+abstract class WindowIterator extends Iterator[InternalRow] {

+

+ def partitionSpec: Seq[Expression]

+

+ def output: Seq[Attribute]

+

+ def stream: Iterator[InternalRow]

+

+ val grouping = UnsafeProjection.create(partitionSpec, output)

+

+ // Manage the stream and the grouping.

+ var nextRow: UnsafeRow = null

+ var nextGroup: UnsafeRow = null

+ var nextRowAvailable: Boolean = false

+ protected[this] def fetchNextRow(): Unit = {

+ nextRowAvailable = stream.hasNext

+ if (nextRowAvailable) {

+ nextRow = stream.next().asInstanceOf[UnsafeRow]

+ nextGroup = grouping(nextRow)

+ } else {

+ nextRow = null

+ nextGroup = null

+ }

+ }

+ fetchNextRow()

+

+ // Whether or not the rank exceeding the window group limit value.

+ def exceedingLimit(): Boolean

+

+ // Increase the rank value.

+ def increaseRank(): Unit

+

+ // Clear the rank value.

+ def clearRank(): Unit

+

+ var bufferIterator: Iterator[InternalRow] = _

+

+ private[this] def fetchNextPartition(): Unit = {

+ clearRank()

+ bufferIterator = createGroupIterator()

+ }

+

+ override final def hasNext: Boolean =

+ (bufferIterator != null && bufferIterator.hasNext) || nextRowAvailable

+

+ override final def next(): InternalRow = {

+ // Load the next partition if we need to.

+ if ((bufferIterator == null || !bufferIterator.hasNext) && nextRowAvailable) {

+ fetchNextPartition()

+ }

+

+ if (bufferIterator.hasNext) {

+ bufferIterator.next()

+ } else {

+ throw new NoSuchElementException

+ }

+ }

+

+ private def createGroupIterator(): Iterator[InternalRow] = {

+ new Iterator[InternalRow] {

+ // Before we start to fetch new input rows, make a copy of nextGroup.

+ val currentGroup = nextGroup.copy()

+

+ def hasNext: Boolean = {

+ if (nextRowAvailable) {

+ if (exceedingLimit() && nextGroup == currentGroup) {

+ do {

+ fetchNextRow()

+ } while (nextRowAvailable && nextGroup == currentGroup)

+ }

+ nextRowAvailable && nextGroup == currentGroup

+ } else {

+ nextRowAvailable

+ }

+ }

+

+ def next(): InternalRow = {

+ val currentRow = nextRow.copy()

+ increaseRank()

+ fetchNextRow()

+ currentRow

+ }

+ }

+ }

+}

+

+case class SimpleGroupLimitIterator(

+ partitionSpec: Seq[Expression],

+ output: Seq[Attribute],

+ stream: Iterator[InternalRow],

+ limit: Int) extends WindowIterator {

+ var count = 0

+

+ override def exceedingLimit(): Boolean = {

+ count >= limit

+ }

+

+ override def increaseRank(): Unit = {

+ count += 1

+ }

+

+ override def clearRank(): Unit = {

+ count = 0

+ }

+}

+

+case class RankGroupLimitIterator(

+ partitionSpec: Seq[Expression],

+ output: Seq[Attribute],

+ stream: Iterator[InternalRow],

+ orderSpec: Seq[SortOrder],

+ limit: Int) extends WindowIterator {

+ val ordering = GenerateOrdering.generate(orderSpec, output)

+ var count = 0

+ var rank = 0

+ var currentRank: UnsafeRow = null

+

+ override def exceedingLimit(): Boolean = {

+ rank >= limit

+ }

+

+ override def increaseRank(): Unit = {

+ if (count == 0) {

+ currentRank = nextRow.copy()

+ } else {

+ if (ordering.compare(currentRank, nextRow) != 0) {

+ rank = count

+ currentRank = nextRow.copy()

+ }

+ }

+ count += 1

+ }

+

+ override def clearRank(): Unit = {

+ count = 0

+ rank = 0

+ currentRank = null

+ }

+}

+

+case class DenseRankGroupLimitIterator(

+ partitionSpec: Seq[Expression],

+ output: Seq[Attribute],

+ stream: Iterator[InternalRow],

+ orderSpec: Seq[SortOrder],

+ limit: Int) extends WindowIterator {

+ val ordering = GenerateOrdering.generate(orderSpec, output)

+ var rank = 0

Review Comment:

For `SimpleGroupLimitIterator`, rank is simply the count.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] beliefer commented on pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

Posted by GitBox <gi...@apache.org>.

beliefer commented on PR #38799:

URL: https://github.com/apache/spark/pull/38799#issuecomment-1365007428

ping @cloud-fan

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] cloud-fan commented on pull request #38799: [SPARK-37099][SQL] Introduce the group limit of Window for rank-based filter to optimize top-k computation

Posted by GitBox <gi...@apache.org>.

cloud-fan commented on PR #38799:

URL: https://github.com/apache/spark/pull/38799#issuecomment-1330155848

> since new logical and physical plans are added, should also consider how it affect existing rules.

How about we do this optimization as a planner rule? then logical plan won't change.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org