You are viewing a plain text version of this content. The canonical link for it is here.

Posted to github@arrow.apache.org by GitBox <gi...@apache.org> on 2021/07/13 22:05:44 UTC

[GitHub] [arrow] zeroshade opened a new pull request #10716: ARROW-13330: [Go][Parquet] Add the rest of the Encoding package

zeroshade opened a new pull request #10716:

URL: https://github.com/apache/arrow/pull/10716

@emkornfield Thanks for merging the previous PR #10379

Here's the remaining files that we pulled out of that PR to shrink it down, including all the unit tests for the Encoding package.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] emkornfield commented on a change in pull request #10716: ARROW-13330: [Go][Parquet] Add the rest of the Encoding package

Posted by GitBox <gi...@apache.org>.

emkornfield commented on a change in pull request #10716:

URL: https://github.com/apache/arrow/pull/10716#discussion_r680022978

##########

File path: go/parquet/internal/encoding/encoding_benchmarks_test.go

##########

@@ -0,0 +1,461 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+package encoding_test

+

+import (

+ "fmt"

+ "math"

+ "testing"

+

+ "github.com/apache/arrow/go/arrow"

+ "github.com/apache/arrow/go/arrow/array"

+ "github.com/apache/arrow/go/arrow/memory"

+ "github.com/apache/arrow/go/parquet"

+ "github.com/apache/arrow/go/parquet/internal/encoding"

+ "github.com/apache/arrow/go/parquet/internal/hashing"

+ "github.com/apache/arrow/go/parquet/internal/testutils"

+ "github.com/apache/arrow/go/parquet/schema"

+)

+

+const (

+ MINSIZE = 1024

+ MAXSIZE = 65536

+)

+

+func BenchmarkPlainEncodingBoolean(b *testing.B) {

+ for sz := MINSIZE; sz < MAXSIZE+1; sz *= 2 {

Review comment:

there isn't a built in construct in GO benchmarks for adjusting batch size?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] zeroshade commented on a change in pull request #10716: ARROW-13330: [Go][Parquet] Add the rest of the Encoding package

Posted by GitBox <gi...@apache.org>.

zeroshade commented on a change in pull request #10716:

URL: https://github.com/apache/arrow/pull/10716#discussion_r686975396

##########

File path: go/parquet/internal/encoding/memo_table_test.go

##########

@@ -0,0 +1,284 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+package encoding_test

+

+import (

+ "math"

+ "testing"

+

+ "github.com/apache/arrow/go/arrow/memory"

+ "github.com/apache/arrow/go/parquet/internal/encoding"

+ "github.com/apache/arrow/go/parquet/internal/hashing"

+ "github.com/stretchr/testify/suite"

+)

+

+type MemoTableTestSuite struct {

+ suite.Suite

+}

+

+func TestMemoTable(t *testing.T) {

+ suite.Run(t, new(MemoTableTestSuite))

+}

+

+func (m *MemoTableTestSuite) assertGetNotFound(table encoding.MemoTable, v interface{}) {

+ _, ok := table.Get(v)

+ m.False(ok)

+}

+

+func (m *MemoTableTestSuite) assertGet(table encoding.MemoTable, v interface{}, expected int) {

+ idx, ok := table.Get(v)

+ m.Equal(expected, idx)

+ m.True(ok)

+}

+

+func (m *MemoTableTestSuite) assertGetOrInsert(table encoding.MemoTable, v interface{}, expected int) {

+ idx, _, err := table.GetOrInsert(v)

+ m.NoError(err)

+ m.Equal(expected, idx)

+}

+

+func (m *MemoTableTestSuite) assertGetNullNotFound(table encoding.MemoTable) {

+ _, ok := table.GetNull()

+ m.False(ok)

+}

+

+func (m *MemoTableTestSuite) assertGetNull(table encoding.MemoTable, expected int) {

+ idx, ok := table.GetNull()

+ m.Equal(expected, idx)

+ m.True(ok)

+}

+

+func (m *MemoTableTestSuite) assertGetOrInsertNull(table encoding.MemoTable, expected int) {

+ idx, _ := table.GetOrInsertNull()

+ m.Equal(expected, idx)

+}

+

+func (m *MemoTableTestSuite) TestInt64() {

+ const (

+ A int64 = 1234

+ B int64 = 0

+ C int64 = -98765321

+ D int64 = 12345678901234

+ E int64 = -1

+ F int64 = 1

+ G int64 = 9223372036854775807

+ H int64 = -9223372036854775807 - 1

+ )

+

+ // table := encoding.NewInt64MemoTable(nil)

+ table := hashing.NewInt64MemoTable(0)

+ m.Zero(table.Size())

+ m.assertGetNotFound(table, A)

+ m.assertGetNullNotFound(table)

+ m.assertGetOrInsert(table, A, 0)

+ m.assertGetNotFound(table, B)

+ m.assertGetOrInsert(table, B, 1)

+ m.assertGetOrInsert(table, C, 2)

+ m.assertGetOrInsert(table, D, 3)

+ m.assertGetOrInsert(table, E, 4)

+ m.assertGetOrInsertNull(table, 5)

+

+ m.assertGet(table, A, 0)

+ m.assertGetOrInsert(table, A, 0)

+ m.assertGet(table, E, 4)

+ m.assertGetOrInsert(table, E, 4)

+

+ m.assertGetOrInsert(table, F, 6)

+ m.assertGetOrInsert(table, G, 7)

+ m.assertGetOrInsert(table, H, 8)

+

+ m.assertGetOrInsert(table, G, 7)

+ m.assertGetOrInsert(table, F, 6)

+ m.assertGetOrInsertNull(table, 5)

+ m.assertGetOrInsert(table, E, 4)

+ m.assertGetOrInsert(table, D, 3)

+ m.assertGetOrInsert(table, C, 2)

+ m.assertGetOrInsert(table, B, 1)

+ m.assertGetOrInsert(table, A, 0)

+

+ const sz int = 9

+ m.Equal(sz, table.Size())

+ m.Panics(func() {

+ values := make([]int32, sz)

+ table.CopyValues(values)

+ }, "should panic because wrong type")

+ m.Panics(func() {

+ values := make([]int64, sz-3)

+ table.CopyValues(values)

+ }, "should panic because out of bounds")

+

+ {

+ values := make([]int64, sz)

+ table.CopyValues(values)

+ m.Equal([]int64{A, B, C, D, E, 0, F, G, H}, values)

+ }

+ {

+ const offset = 3

+ values := make([]int64, sz-offset)

+ table.CopyValuesSubset(offset, values)

+ m.Equal([]int64{D, E, 0, F, G, H}, values)

+ }

+}

+

+func (m *MemoTableTestSuite) TestFloat64() {

+ const (

+ A float64 = 0.0

+ B float64 = 1.5

+ C float64 = -0.1

+ )

+ var (

+ D = math.Inf(1)

+ E = -D

+ F = math.NaN()

Review comment:

added tests with 2 other bit representations of NaN for comparisons

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] zeroshade commented on pull request #10716: ARROW-13330: [Go][Parquet] Add the rest of the Encoding package

Posted by GitBox <gi...@apache.org>.

zeroshade commented on pull request #10716:

URL: https://github.com/apache/arrow/pull/10716#issuecomment-883459361

@emkornfield @sbinet Bump for visibility to get reviews

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] zeroshade commented on a change in pull request #10716: ARROW-13330: [Go][Parquet] Add the rest of the Encoding package

Posted by GitBox <gi...@apache.org>.

zeroshade commented on a change in pull request #10716:

URL: https://github.com/apache/arrow/pull/10716#discussion_r680029295

##########

File path: go/parquet/internal/encoding/levels.go

##########

@@ -0,0 +1,284 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+package encoding

+

+import (

+ "bytes"

+ "encoding/binary"

+ "io"

+ "math/bits"

+

+ "github.com/JohnCGriffin/overflow"

+ "github.com/apache/arrow/go/arrow/bitutil"

+ "github.com/apache/arrow/go/parquet"

+ format "github.com/apache/arrow/go/parquet/internal/gen-go/parquet"

+ "github.com/apache/arrow/go/parquet/internal/utils"

+)

+

+// LevelEncoder is for handling the encoding of Definition and Repetition levels

+// to parquet files.

+type LevelEncoder struct {

+ bitWidth int

+ rleLen int

+ encoding format.Encoding

+ rle *utils.RleEncoder

+ bit *utils.BitWriter

+}

+

+// LevelEncodingMaxBufferSize estimates the max number of bytes needed to encode data with the

+// specified encoding given the max level and number of buffered values provided.

+func LevelEncodingMaxBufferSize(encoding parquet.Encoding, maxLvl int16, nbuffered int) int {

+ bitWidth := bits.Len64(uint64(maxLvl))

+ nbytes := 0

+ switch encoding {

+ case parquet.Encodings.RLE:

+ nbytes = utils.MaxBufferSize(bitWidth, nbuffered) + utils.MinBufferSize(bitWidth)

+ case parquet.Encodings.BitPacked:

+ nbytes = int(bitutil.BytesForBits(int64(nbuffered * bitWidth)))

+ default:

+ panic("parquet: unknown encoding type for levels")

+ }

+ return nbytes

+}

+

+// Reset resets the encoder allowing it to be reused and updating the maxlevel to the new

+// specified value.

+func (l *LevelEncoder) Reset(maxLvl int16) {

+ l.bitWidth = bits.Len64(uint64(maxLvl))

+ switch l.encoding {

+ case format.Encoding_RLE:

+ l.rle.Clear()

+ l.rle.BitWidth = l.bitWidth

+ case format.Encoding_BIT_PACKED:

+ l.bit.Clear()

+ default:

+ panic("parquet: unknown encoding type")

+ }

+}

+

+// Init is called to set up the desired encoding type, max level and underlying writer for a

+// level encoder to control where the resulting encoded buffer will end up.

+func (l *LevelEncoder) Init(encoding parquet.Encoding, maxLvl int16, w io.WriterAt) {

+ l.bitWidth = bits.Len64(uint64(maxLvl))

+ l.encoding = format.Encoding(encoding)

+ switch l.encoding {

+ case format.Encoding_RLE:

+ l.rle = utils.NewRleEncoder(w, l.bitWidth)

+ case format.Encoding_BIT_PACKED:

+ l.bit = utils.NewBitWriter(w)

+ default:

+ panic("parquet: unknown encoding type for levels")

+ }

+}

+

+// EncodeNoFlush encodes the provided levels in the encoder, but doesn't flush

+// the buffer and return it yet, appending these encoded values. Returns the number

Review comment:

it *should* always return `len(lvls)`, if it returns less that means it encountered an error/issue while encoding. I'll add that to the comment.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] emkornfield commented on a change in pull request #10716: ARROW-13330: [Go][Parquet] Add the rest of the Encoding package

Posted by GitBox <gi...@apache.org>.

emkornfield commented on a change in pull request #10716:

URL: https://github.com/apache/arrow/pull/10716#discussion_r686489652

##########

File path: go/parquet/internal/encoding/memo_table.go

##########

@@ -0,0 +1,380 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+package encoding

+

+import (

+ "math"

+ "unsafe"

+

+ "github.com/apache/arrow/go/arrow"

+ "github.com/apache/arrow/go/arrow/array"

+ "github.com/apache/arrow/go/arrow/memory"

+ "github.com/apache/arrow/go/parquet"

+ "github.com/apache/arrow/go/parquet/internal/hashing"

+)

+

+//go:generate go run ../../../arrow/_tools/tmpl/main.go -i -data=physical_types.tmpldata memo_table_types.gen.go.tmpl

+

+// MemoTable interface that can be used to swap out implementations of the hash table

+// used for handling dictionary encoding. Dictionary encoding is built against this interface

+// to make it easy for code generation and changing implementations.

+//

+// Values should remember the order they are inserted to generate a valid dictionary index

+type MemoTable interface {

+ // Reset drops everything in the table allowing it to be reused

+ Reset()

+ // Size returns the current number of unique values stored in the table

+ // including whether or not a null value has been passed in using GetOrInsertNull

+ Size() int

Review comment:

int is 32 bits here? does it pay to have errors returned for the insertion operations if they exceed that range?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] emkornfield commented on a change in pull request #10716: ARROW-13330: [Go][Parquet] Add the rest of the Encoding package

Posted by GitBox <gi...@apache.org>.

emkornfield commented on a change in pull request #10716:

URL: https://github.com/apache/arrow/pull/10716#discussion_r680034150

##########

File path: go/parquet/internal/encoding/levels.go

##########

@@ -0,0 +1,284 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+package encoding

+

+import (

+ "bytes"

+ "encoding/binary"

+ "io"

+ "math/bits"

+

+ "github.com/JohnCGriffin/overflow"

+ "github.com/apache/arrow/go/arrow/bitutil"

+ "github.com/apache/arrow/go/parquet"

+ format "github.com/apache/arrow/go/parquet/internal/gen-go/parquet"

+ "github.com/apache/arrow/go/parquet/internal/utils"

+)

+

+// LevelEncoder is for handling the encoding of Definition and Repetition levels

+// to parquet files.

+type LevelEncoder struct {

+ bitWidth int

+ rleLen int

+ encoding format.Encoding

+ rle *utils.RleEncoder

+ bit *utils.BitWriter

+}

+

+// LevelEncodingMaxBufferSize estimates the max number of bytes needed to encode data with the

+// specified encoding given the max level and number of buffered values provided.

+func LevelEncodingMaxBufferSize(encoding parquet.Encoding, maxLvl int16, nbuffered int) int {

+ bitWidth := bits.Len64(uint64(maxLvl))

+ nbytes := 0

+ switch encoding {

+ case parquet.Encodings.RLE:

+ nbytes = utils.MaxBufferSize(bitWidth, nbuffered) + utils.MinBufferSize(bitWidth)

+ case parquet.Encodings.BitPacked:

+ nbytes = int(bitutil.BytesForBits(int64(nbuffered * bitWidth)))

+ default:

+ panic("parquet: unknown encoding type for levels")

+ }

+ return nbytes

+}

+

+// Reset resets the encoder allowing it to be reused and updating the maxlevel to the new

+// specified value.

+func (l *LevelEncoder) Reset(maxLvl int16) {

+ l.bitWidth = bits.Len64(uint64(maxLvl))

+ switch l.encoding {

+ case format.Encoding_RLE:

+ l.rle.Clear()

+ l.rle.BitWidth = l.bitWidth

+ case format.Encoding_BIT_PACKED:

+ l.bit.Clear()

+ default:

+ panic("parquet: unknown encoding type")

+ }

+}

+

+// Init is called to set up the desired encoding type, max level and underlying writer for a

+// level encoder to control where the resulting encoded buffer will end up.

+func (l *LevelEncoder) Init(encoding parquet.Encoding, maxLvl int16, w io.WriterAt) {

+ l.bitWidth = bits.Len64(uint64(maxLvl))

+ l.encoding = format.Encoding(encoding)

+ switch l.encoding {

+ case format.Encoding_RLE:

+ l.rle = utils.NewRleEncoder(w, l.bitWidth)

+ case format.Encoding_BIT_PACKED:

+ l.bit = utils.NewBitWriter(w)

+ default:

+ panic("parquet: unknown encoding type for levels")

+ }

+}

+

+// EncodeNoFlush encodes the provided levels in the encoder, but doesn't flush

+// the buffer and return it yet, appending these encoded values. Returns the number

Review comment:

so it is up to users to check that? should it propogate an error instead?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] github-actions[bot] commented on pull request #10716: ARROW-13330: [Go][Parquet] Add the rest of the Encoding package

Posted by GitBox <gi...@apache.org>.

github-actions[bot] commented on pull request #10716:

URL: https://github.com/apache/arrow/pull/10716#issuecomment-879433836

https://issues.apache.org/jira/browse/ARROW-13330

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] emkornfield commented on a change in pull request #10716: ARROW-13330: [Go][Parquet] Add the rest of the Encoding package

Posted by GitBox <gi...@apache.org>.

emkornfield commented on a change in pull request #10716:

URL: https://github.com/apache/arrow/pull/10716#discussion_r680027388

##########

File path: go/parquet/internal/encoding/levels.go

##########

@@ -0,0 +1,284 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+package encoding

+

+import (

+ "bytes"

+ "encoding/binary"

+ "io"

+ "math/bits"

+

+ "github.com/JohnCGriffin/overflow"

+ "github.com/apache/arrow/go/arrow/bitutil"

+ "github.com/apache/arrow/go/parquet"

+ format "github.com/apache/arrow/go/parquet/internal/gen-go/parquet"

+ "github.com/apache/arrow/go/parquet/internal/utils"

+)

+

+// LevelEncoder is for handling the encoding of Definition and Repetition levels

+// to parquet files.

+type LevelEncoder struct {

+ bitWidth int

+ rleLen int

+ encoding format.Encoding

+ rle *utils.RleEncoder

+ bit *utils.BitWriter

+}

+

+// LevelEncodingMaxBufferSize estimates the max number of bytes needed to encode data with the

+// specified encoding given the max level and number of buffered values provided.

+func LevelEncodingMaxBufferSize(encoding parquet.Encoding, maxLvl int16, nbuffered int) int {

+ bitWidth := bits.Len64(uint64(maxLvl))

+ nbytes := 0

+ switch encoding {

+ case parquet.Encodings.RLE:

+ nbytes = utils.MaxBufferSize(bitWidth, nbuffered) + utils.MinBufferSize(bitWidth)

+ case parquet.Encodings.BitPacked:

+ nbytes = int(bitutil.BytesForBits(int64(nbuffered * bitWidth)))

+ default:

+ panic("parquet: unknown encoding type for levels")

+ }

+ return nbytes

+}

+

+// Reset resets the encoder allowing it to be reused and updating the maxlevel to the new

+// specified value.

+func (l *LevelEncoder) Reset(maxLvl int16) {

+ l.bitWidth = bits.Len64(uint64(maxLvl))

+ switch l.encoding {

+ case format.Encoding_RLE:

+ l.rle.Clear()

+ l.rle.BitWidth = l.bitWidth

+ case format.Encoding_BIT_PACKED:

+ l.bit.Clear()

+ default:

+ panic("parquet: unknown encoding type")

+ }

+}

+

+// Init is called to set up the desired encoding type, max level and underlying writer for a

+// level encoder to control where the resulting encoded buffer will end up.

+func (l *LevelEncoder) Init(encoding parquet.Encoding, maxLvl int16, w io.WriterAt) {

+ l.bitWidth = bits.Len64(uint64(maxLvl))

+ l.encoding = format.Encoding(encoding)

+ switch l.encoding {

+ case format.Encoding_RLE:

+ l.rle = utils.NewRleEncoder(w, l.bitWidth)

+ case format.Encoding_BIT_PACKED:

+ l.bit = utils.NewBitWriter(w)

+ default:

+ panic("parquet: unknown encoding type for levels")

+ }

+}

+

+// EncodeNoFlush encodes the provided levels in the encoder, but doesn't flush

+// the buffer and return it yet, appending these encoded values. Returns the number

+// of values encoded.

+func (l *LevelEncoder) EncodeNoFlush(lvls []int16) int {

+ nencoded := 0

+ if l.rle == nil && l.bit == nil {

+ panic("parquet: level encoders are not initialized")

+ }

+

+ switch l.encoding {

+ case format.Encoding_RLE:

+ for _, level := range lvls {

+ if !l.rle.Put(uint64(level)) {

+ break

+ }

+ nencoded++

+ }

+ default:

+ for _, level := range lvls {

+ if l.bit.WriteValue(uint64(level), uint(l.bitWidth)) != nil {

+ break

+ }

+ nencoded++

+ }

+ }

+ return nencoded

+}

+

+// Flush flushes out any encoded data to the underlying writer.

+func (l *LevelEncoder) Flush() {

+ if l.rle == nil && l.bit == nil {

+ panic("parquet: level encoders are not initialized")

+ }

+

+ switch l.encoding {

+ case format.Encoding_RLE:

+ l.rleLen = l.rle.Flush()

+ default:

+ l.bit.Flush(false)

+ }

+}

+

+// Encode encodes the slice of definition or repetition levels based on

+// the currently configured encoding type and returns the number of

+// values that were encoded.

+func (l *LevelEncoder) Encode(lvls []int16) int {

+ nencoded := 0

+ if l.rle == nil && l.bit == nil {

+ panic("parquet: level encoders are not initialized")

+ }

+

+ switch l.encoding {

+ case format.Encoding_RLE:

+ for _, level := range lvls {

+ if !l.rle.Put(uint64(level)) {

+ break

+ }

+ nencoded++

+ }

+ l.rleLen = l.rle.Flush()

+ default:

+ for _, level := range lvls {

+ if l.bit.WriteValue(uint64(level), uint(l.bitWidth)) != nil {

+ break

+ }

+ nencoded++

+ }

+ l.bit.Flush(false)

+ }

+ return nencoded

+}

+

+// Len returns the number of bytes that were written as Run Length encoded

+// levels, this is only valid for run length encoding and will panic if using

+// deprecated bit packed encoding.

+func (l *LevelEncoder) Len() int {

+ if l.encoding != format.Encoding_RLE {

+ panic("parquet: level encoder, only implemented for RLE")

+ }

+ return l.rleLen

+}

+

+// LevelDecoder handles the decoding of repetition and definition levels from a

+// parquet file supporting bit packed and run length encoded values.

+type LevelDecoder struct {

+ bitWidth int

+ remaining int

Review comment:

a comment on what remaining is might be useful.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] emkornfield commented on a change in pull request #10716: ARROW-13330: [Go][Parquet] Add the rest of the Encoding package

Posted by GitBox <gi...@apache.org>.

emkornfield commented on a change in pull request #10716:

URL: https://github.com/apache/arrow/pull/10716#discussion_r686491375

##########

File path: go/parquet/internal/encoding/memo_table_test.go

##########

@@ -0,0 +1,284 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+package encoding_test

+

+import (

+ "math"

+ "testing"

+

+ "github.com/apache/arrow/go/arrow/memory"

+ "github.com/apache/arrow/go/parquet/internal/encoding"

+ "github.com/apache/arrow/go/parquet/internal/hashing"

+ "github.com/stretchr/testify/suite"

+)

+

+type MemoTableTestSuite struct {

+ suite.Suite

+}

+

+func TestMemoTable(t *testing.T) {

+ suite.Run(t, new(MemoTableTestSuite))

+}

+

+func (m *MemoTableTestSuite) assertGetNotFound(table encoding.MemoTable, v interface{}) {

+ _, ok := table.Get(v)

+ m.False(ok)

+}

+

+func (m *MemoTableTestSuite) assertGet(table encoding.MemoTable, v interface{}, expected int) {

+ idx, ok := table.Get(v)

+ m.Equal(expected, idx)

+ m.True(ok)

+}

+

+func (m *MemoTableTestSuite) assertGetOrInsert(table encoding.MemoTable, v interface{}, expected int) {

+ idx, _, err := table.GetOrInsert(v)

+ m.NoError(err)

+ m.Equal(expected, idx)

+}

+

+func (m *MemoTableTestSuite) assertGetNullNotFound(table encoding.MemoTable) {

+ _, ok := table.GetNull()

+ m.False(ok)

+}

+

+func (m *MemoTableTestSuite) assertGetNull(table encoding.MemoTable, expected int) {

+ idx, ok := table.GetNull()

+ m.Equal(expected, idx)

+ m.True(ok)

+}

+

+func (m *MemoTableTestSuite) assertGetOrInsertNull(table encoding.MemoTable, expected int) {

+ idx, _ := table.GetOrInsertNull()

+ m.Equal(expected, idx)

+}

+

+func (m *MemoTableTestSuite) TestInt64() {

+ const (

+ A int64 = 1234

+ B int64 = 0

+ C int64 = -98765321

+ D int64 = 12345678901234

+ E int64 = -1

+ F int64 = 1

+ G int64 = 9223372036854775807

+ H int64 = -9223372036854775807 - 1

+ )

+

+ // table := encoding.NewInt64MemoTable(nil)

+ table := hashing.NewInt64MemoTable(0)

+ m.Zero(table.Size())

+ m.assertGetNotFound(table, A)

+ m.assertGetNullNotFound(table)

+ m.assertGetOrInsert(table, A, 0)

+ m.assertGetNotFound(table, B)

+ m.assertGetOrInsert(table, B, 1)

+ m.assertGetOrInsert(table, C, 2)

+ m.assertGetOrInsert(table, D, 3)

+ m.assertGetOrInsert(table, E, 4)

+ m.assertGetOrInsertNull(table, 5)

+

+ m.assertGet(table, A, 0)

+ m.assertGetOrInsert(table, A, 0)

+ m.assertGet(table, E, 4)

+ m.assertGetOrInsert(table, E, 4)

+

+ m.assertGetOrInsert(table, F, 6)

+ m.assertGetOrInsert(table, G, 7)

+ m.assertGetOrInsert(table, H, 8)

+

+ m.assertGetOrInsert(table, G, 7)

+ m.assertGetOrInsert(table, F, 6)

+ m.assertGetOrInsertNull(table, 5)

+ m.assertGetOrInsert(table, E, 4)

+ m.assertGetOrInsert(table, D, 3)

+ m.assertGetOrInsert(table, C, 2)

+ m.assertGetOrInsert(table, B, 1)

+ m.assertGetOrInsert(table, A, 0)

+

+ const sz int = 9

+ m.Equal(sz, table.Size())

+ m.Panics(func() {

+ values := make([]int32, sz)

+ table.CopyValues(values)

+ }, "should panic because wrong type")

+ m.Panics(func() {

+ values := make([]int64, sz-3)

+ table.CopyValues(values)

+ }, "should panic because out of bounds")

+

+ {

+ values := make([]int64, sz)

+ table.CopyValues(values)

+ m.Equal([]int64{A, B, C, D, E, 0, F, G, H}, values)

+ }

+ {

+ const offset = 3

+ values := make([]int64, sz-offset)

+ table.CopyValuesSubset(offset, values)

+ m.Equal([]int64{D, E, 0, F, G, H}, values)

+ }

+}

+

+func (m *MemoTableTestSuite) TestFloat64() {

+ const (

+ A float64 = 0.0

+ B float64 = 1.5

+ C float64 = -0.1

+ )

+ var (

+ D = math.Inf(1)

+ E = -D

+ F = math.NaN()

Review comment:

ideally, you would test with to different bit representations of Nan

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] zeroshade commented on a change in pull request #10716: ARROW-13330: [Go][Parquet] Add the rest of the Encoding package

Posted by GitBox <gi...@apache.org>.

zeroshade commented on a change in pull request #10716:

URL: https://github.com/apache/arrow/pull/10716#discussion_r680074956

##########

File path: go/parquet/internal/encoding/levels.go

##########

@@ -0,0 +1,284 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+package encoding

+

+import (

+ "bytes"

+ "encoding/binary"

+ "io"

+ "math/bits"

+

+ "github.com/JohnCGriffin/overflow"

+ "github.com/apache/arrow/go/arrow/bitutil"

+ "github.com/apache/arrow/go/parquet"

+ format "github.com/apache/arrow/go/parquet/internal/gen-go/parquet"

+ "github.com/apache/arrow/go/parquet/internal/utils"

+)

+

+// LevelEncoder is for handling the encoding of Definition and Repetition levels

+// to parquet files.

+type LevelEncoder struct {

+ bitWidth int

+ rleLen int

+ encoding format.Encoding

+ rle *utils.RleEncoder

+ bit *utils.BitWriter

+}

+

+// LevelEncodingMaxBufferSize estimates the max number of bytes needed to encode data with the

+// specified encoding given the max level and number of buffered values provided.

+func LevelEncodingMaxBufferSize(encoding parquet.Encoding, maxLvl int16, nbuffered int) int {

+ bitWidth := bits.Len64(uint64(maxLvl))

+ nbytes := 0

+ switch encoding {

+ case parquet.Encodings.RLE:

+ nbytes = utils.MaxBufferSize(bitWidth, nbuffered) + utils.MinBufferSize(bitWidth)

+ case parquet.Encodings.BitPacked:

+ nbytes = int(bitutil.BytesForBits(int64(nbuffered * bitWidth)))

+ default:

+ panic("parquet: unknown encoding type for levels")

+ }

+ return nbytes

+}

+

+// Reset resets the encoder allowing it to be reused and updating the maxlevel to the new

+// specified value.

+func (l *LevelEncoder) Reset(maxLvl int16) {

+ l.bitWidth = bits.Len64(uint64(maxLvl))

+ switch l.encoding {

+ case format.Encoding_RLE:

+ l.rle.Clear()

+ l.rle.BitWidth = l.bitWidth

+ case format.Encoding_BIT_PACKED:

+ l.bit.Clear()

+ default:

+ panic("parquet: unknown encoding type")

+ }

+}

+

+// Init is called to set up the desired encoding type, max level and underlying writer for a

+// level encoder to control where the resulting encoded buffer will end up.

+func (l *LevelEncoder) Init(encoding parquet.Encoding, maxLvl int16, w io.WriterAt) {

+ l.bitWidth = bits.Len64(uint64(maxLvl))

+ l.encoding = format.Encoding(encoding)

+ switch l.encoding {

+ case format.Encoding_RLE:

+ l.rle = utils.NewRleEncoder(w, l.bitWidth)

+ case format.Encoding_BIT_PACKED:

+ l.bit = utils.NewBitWriter(w)

+ default:

+ panic("parquet: unknown encoding type for levels")

+ }

+}

+

+// EncodeNoFlush encodes the provided levels in the encoder, but doesn't flush

+// the buffer and return it yet, appending these encoded values. Returns the number

Review comment:

updated this change to add error propagation to all the necessary spots (and all the subsequent calls and dependencies) so that consumers no longer have to rely on checking the number of values returned but can see easily if an error was returned by the encoders.

##########

File path: go/parquet/internal/encoding/levels.go

##########

@@ -0,0 +1,284 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+package encoding

+

+import (

+ "bytes"

+ "encoding/binary"

+ "io"

+ "math/bits"

+

+ "github.com/JohnCGriffin/overflow"

+ "github.com/apache/arrow/go/arrow/bitutil"

+ "github.com/apache/arrow/go/parquet"

+ format "github.com/apache/arrow/go/parquet/internal/gen-go/parquet"

+ "github.com/apache/arrow/go/parquet/internal/utils"

+)

+

+// LevelEncoder is for handling the encoding of Definition and Repetition levels

+// to parquet files.

+type LevelEncoder struct {

+ bitWidth int

+ rleLen int

+ encoding format.Encoding

+ rle *utils.RleEncoder

+ bit *utils.BitWriter

+}

+

+// LevelEncodingMaxBufferSize estimates the max number of bytes needed to encode data with the

+// specified encoding given the max level and number of buffered values provided.

+func LevelEncodingMaxBufferSize(encoding parquet.Encoding, maxLvl int16, nbuffered int) int {

+ bitWidth := bits.Len64(uint64(maxLvl))

+ nbytes := 0

+ switch encoding {

+ case parquet.Encodings.RLE:

+ nbytes = utils.MaxBufferSize(bitWidth, nbuffered) + utils.MinBufferSize(bitWidth)

+ case parquet.Encodings.BitPacked:

+ nbytes = int(bitutil.BytesForBits(int64(nbuffered * bitWidth)))

+ default:

+ panic("parquet: unknown encoding type for levels")

+ }

+ return nbytes

+}

+

+// Reset resets the encoder allowing it to be reused and updating the maxlevel to the new

+// specified value.

+func (l *LevelEncoder) Reset(maxLvl int16) {

+ l.bitWidth = bits.Len64(uint64(maxLvl))

+ switch l.encoding {

+ case format.Encoding_RLE:

+ l.rle.Clear()

+ l.rle.BitWidth = l.bitWidth

+ case format.Encoding_BIT_PACKED:

+ l.bit.Clear()

+ default:

+ panic("parquet: unknown encoding type")

+ }

+}

+

+// Init is called to set up the desired encoding type, max level and underlying writer for a

+// level encoder to control where the resulting encoded buffer will end up.

+func (l *LevelEncoder) Init(encoding parquet.Encoding, maxLvl int16, w io.WriterAt) {

+ l.bitWidth = bits.Len64(uint64(maxLvl))

+ l.encoding = format.Encoding(encoding)

+ switch l.encoding {

+ case format.Encoding_RLE:

+ l.rle = utils.NewRleEncoder(w, l.bitWidth)

+ case format.Encoding_BIT_PACKED:

+ l.bit = utils.NewBitWriter(w)

+ default:

+ panic("parquet: unknown encoding type for levels")

+ }

+}

+

+// EncodeNoFlush encodes the provided levels in the encoder, but doesn't flush

+// the buffer and return it yet, appending these encoded values. Returns the number

+// of values encoded.

+func (l *LevelEncoder) EncodeNoFlush(lvls []int16) int {

+ nencoded := 0

+ if l.rle == nil && l.bit == nil {

+ panic("parquet: level encoders are not initialized")

+ }

+

+ switch l.encoding {

+ case format.Encoding_RLE:

+ for _, level := range lvls {

+ if !l.rle.Put(uint64(level)) {

+ break

+ }

+ nencoded++

+ }

+ default:

+ for _, level := range lvls {

+ if l.bit.WriteValue(uint64(level), uint(l.bitWidth)) != nil {

+ break

+ }

+ nencoded++

+ }

+ }

+ return nencoded

+}

+

+// Flush flushes out any encoded data to the underlying writer.

+func (l *LevelEncoder) Flush() {

+ if l.rle == nil && l.bit == nil {

+ panic("parquet: level encoders are not initialized")

+ }

+

+ switch l.encoding {

+ case format.Encoding_RLE:

+ l.rleLen = l.rle.Flush()

+ default:

+ l.bit.Flush(false)

+ }

+}

+

+// Encode encodes the slice of definition or repetition levels based on

+// the currently configured encoding type and returns the number of

+// values that were encoded.

+func (l *LevelEncoder) Encode(lvls []int16) int {

+ nencoded := 0

+ if l.rle == nil && l.bit == nil {

+ panic("parquet: level encoders are not initialized")

+ }

+

+ switch l.encoding {

+ case format.Encoding_RLE:

+ for _, level := range lvls {

+ if !l.rle.Put(uint64(level)) {

+ break

+ }

+ nencoded++

+ }

+ l.rleLen = l.rle.Flush()

+ default:

+ for _, level := range lvls {

+ if l.bit.WriteValue(uint64(level), uint(l.bitWidth)) != nil {

+ break

+ }

+ nencoded++

+ }

+ l.bit.Flush(false)

+ }

+ return nencoded

+}

+

+// Len returns the number of bytes that were written as Run Length encoded

+// levels, this is only valid for run length encoding and will panic if using

+// deprecated bit packed encoding.

+func (l *LevelEncoder) Len() int {

+ if l.encoding != format.Encoding_RLE {

+ panic("parquet: level encoder, only implemented for RLE")

+ }

+ return l.rleLen

+}

+

+// LevelDecoder handles the decoding of repetition and definition levels from a

+// parquet file supporting bit packed and run length encoded values.

+type LevelDecoder struct {

+ bitWidth int

+ remaining int

+ maxLvl int16

+ encoding format.Encoding

+ rle *utils.RleDecoder

Review comment:

done

##########

File path: go/parquet/internal/encoding/levels.go

##########

@@ -0,0 +1,284 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+package encoding

+

+import (

+ "bytes"

+ "encoding/binary"

+ "io"

+ "math/bits"

+

+ "github.com/JohnCGriffin/overflow"

+ "github.com/apache/arrow/go/arrow/bitutil"

+ "github.com/apache/arrow/go/parquet"

+ format "github.com/apache/arrow/go/parquet/internal/gen-go/parquet"

+ "github.com/apache/arrow/go/parquet/internal/utils"

+)

+

+// LevelEncoder is for handling the encoding of Definition and Repetition levels

+// to parquet files.

+type LevelEncoder struct {

+ bitWidth int

+ rleLen int

+ encoding format.Encoding

+ rle *utils.RleEncoder

+ bit *utils.BitWriter

+}

+

+// LevelEncodingMaxBufferSize estimates the max number of bytes needed to encode data with the

+// specified encoding given the max level and number of buffered values provided.

+func LevelEncodingMaxBufferSize(encoding parquet.Encoding, maxLvl int16, nbuffered int) int {

+ bitWidth := bits.Len64(uint64(maxLvl))

+ nbytes := 0

+ switch encoding {

+ case parquet.Encodings.RLE:

+ nbytes = utils.MaxBufferSize(bitWidth, nbuffered) + utils.MinBufferSize(bitWidth)

+ case parquet.Encodings.BitPacked:

+ nbytes = int(bitutil.BytesForBits(int64(nbuffered * bitWidth)))

+ default:

+ panic("parquet: unknown encoding type for levels")

+ }

+ return nbytes

+}

+

+// Reset resets the encoder allowing it to be reused and updating the maxlevel to the new

+// specified value.

+func (l *LevelEncoder) Reset(maxLvl int16) {

+ l.bitWidth = bits.Len64(uint64(maxLvl))

+ switch l.encoding {

+ case format.Encoding_RLE:

+ l.rle.Clear()

+ l.rle.BitWidth = l.bitWidth

+ case format.Encoding_BIT_PACKED:

+ l.bit.Clear()

+ default:

+ panic("parquet: unknown encoding type")

+ }

+}

+

+// Init is called to set up the desired encoding type, max level and underlying writer for a

+// level encoder to control where the resulting encoded buffer will end up.

+func (l *LevelEncoder) Init(encoding parquet.Encoding, maxLvl int16, w io.WriterAt) {

+ l.bitWidth = bits.Len64(uint64(maxLvl))

+ l.encoding = format.Encoding(encoding)

+ switch l.encoding {

+ case format.Encoding_RLE:

+ l.rle = utils.NewRleEncoder(w, l.bitWidth)

+ case format.Encoding_BIT_PACKED:

+ l.bit = utils.NewBitWriter(w)

+ default:

+ panic("parquet: unknown encoding type for levels")

+ }

+}

+

+// EncodeNoFlush encodes the provided levels in the encoder, but doesn't flush

+// the buffer and return it yet, appending these encoded values. Returns the number

+// of values encoded.

+func (l *LevelEncoder) EncodeNoFlush(lvls []int16) int {

+ nencoded := 0

+ if l.rle == nil && l.bit == nil {

+ panic("parquet: level encoders are not initialized")

+ }

+

+ switch l.encoding {

+ case format.Encoding_RLE:

+ for _, level := range lvls {

+ if !l.rle.Put(uint64(level)) {

+ break

+ }

+ nencoded++

+ }

+ default:

+ for _, level := range lvls {

+ if l.bit.WriteValue(uint64(level), uint(l.bitWidth)) != nil {

+ break

+ }

+ nencoded++

+ }

+ }

+ return nencoded

+}

+

+// Flush flushes out any encoded data to the underlying writer.

+func (l *LevelEncoder) Flush() {

+ if l.rle == nil && l.bit == nil {

+ panic("parquet: level encoders are not initialized")

+ }

+

+ switch l.encoding {

+ case format.Encoding_RLE:

+ l.rleLen = l.rle.Flush()

+ default:

+ l.bit.Flush(false)

+ }

+}

+

+// Encode encodes the slice of definition or repetition levels based on

+// the currently configured encoding type and returns the number of

+// values that were encoded.

+func (l *LevelEncoder) Encode(lvls []int16) int {

+ nencoded := 0

+ if l.rle == nil && l.bit == nil {

+ panic("parquet: level encoders are not initialized")

+ }

+

+ switch l.encoding {

+ case format.Encoding_RLE:

+ for _, level := range lvls {

+ if !l.rle.Put(uint64(level)) {

+ break

+ }

+ nencoded++

+ }

+ l.rleLen = l.rle.Flush()

+ default:

+ for _, level := range lvls {

+ if l.bit.WriteValue(uint64(level), uint(l.bitWidth)) != nil {

+ break

+ }

+ nencoded++

+ }

+ l.bit.Flush(false)

+ }

+ return nencoded

+}

+

+// Len returns the number of bytes that were written as Run Length encoded

+// levels, this is only valid for run length encoding and will panic if using

+// deprecated bit packed encoding.

+func (l *LevelEncoder) Len() int {

+ if l.encoding != format.Encoding_RLE {

+ panic("parquet: level encoder, only implemented for RLE")

+ }

+ return l.rleLen

+}

+

+// LevelDecoder handles the decoding of repetition and definition levels from a

+// parquet file supporting bit packed and run length encoded values.

+type LevelDecoder struct {

+ bitWidth int

+ remaining int

Review comment:

done.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] emkornfield commented on pull request #10716: ARROW-13330: [Go][Parquet] Add the rest of the Encoding package

Posted by GitBox <gi...@apache.org>.

emkornfield commented on pull request #10716:

URL: https://github.com/apache/arrow/pull/10716#issuecomment-899963348

+1 merging. Thank you @zeroshade

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] zeroshade commented on a change in pull request #10716: ARROW-13330: [Go][Parquet] Add the rest of the Encoding package

Posted by GitBox <gi...@apache.org>.

zeroshade commented on a change in pull request #10716:

URL: https://github.com/apache/arrow/pull/10716#discussion_r686851251

##########

File path: go/parquet/internal/encoding/memo_table.go

##########

@@ -0,0 +1,380 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+package encoding

+

+import (

+ "math"

+ "unsafe"

+

+ "github.com/apache/arrow/go/arrow"

+ "github.com/apache/arrow/go/arrow/array"

+ "github.com/apache/arrow/go/arrow/memory"

+ "github.com/apache/arrow/go/parquet"

+ "github.com/apache/arrow/go/parquet/internal/hashing"

+)

+

+//go:generate go run ../../../arrow/_tools/tmpl/main.go -i -data=physical_types.tmpldata memo_table_types.gen.go.tmpl

+

+// MemoTable interface that can be used to swap out implementations of the hash table

+// used for handling dictionary encoding. Dictionary encoding is built against this interface

+// to make it easy for code generation and changing implementations.

+//

+// Values should remember the order they are inserted to generate a valid dictionary index

+type MemoTable interface {

+ // Reset drops everything in the table allowing it to be reused

+ Reset()

+ // Size returns the current number of unique values stored in the table

+ // including whether or not a null value has been passed in using GetOrInsertNull

+ Size() int

+ // CopyValues populates out with the values currently in the table, out must

+ // be a slice of the appropriate type for the table type.

+ CopyValues(out interface{})

+ // CopyValuesSubset is like CopyValues but only copies a subset of values starting

+ // at the indicated index.

+ CopyValuesSubset(start int, out interface{})

+ // Get returns the index of the table the specified value is, and a boolean indicating

+ // whether or not the value was found in the table. Will panic if val is not the appropriate

+ // type for the underlying table.

+ Get(val interface{}) (int, bool)

+ // GetOrInsert is the same as Get, except if the value is not currently in the table it will

+ // be inserted into the table.

+ GetOrInsert(val interface{}) (idx int, existed bool, err error)

+ // GetNull returns the index of the null value and whether or not it was found in the table

+ GetNull() (int, bool)

+ // GetOrInsertNull returns the index of the null value, if it didn't already exist in the table,

+ // it is inserted.

+ GetOrInsertNull() (idx int, existed bool)

+}

+

+// BinaryMemoTable is an extension of the MemoTable interface adding extra methods

+// for handling byte arrays/strings/fixed length byte arrays.

+type BinaryMemoTable interface {

+ MemoTable

+ // ValuesSize returns the total number of bytes needed to copy all of the values

+ // from this table.

+ ValuesSize() int

Review comment:

No, it's just the raw bytes of the strings as they are stored like in an arrow array (since I back the binary memotable using a `BinaryBuilder` and just call `DataLen` on it). This is specifically used for copying the raw values as a single chunk of memory which is why the offsets are stored separately and copied out separately.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] emkornfield commented on pull request #10716: ARROW-13330: [Go][Parquet] Add the rest of the Encoding package

Posted by GitBox <gi...@apache.org>.

emkornfield commented on pull request #10716:

URL: https://github.com/apache/arrow/pull/10716#issuecomment-889976220

sorry I have had less time then I would have liked recently for Arrow reviews, will try to get to this soon.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] emkornfield commented on a change in pull request #10716: ARROW-13330: [Go][Parquet] Add the rest of the Encoding package

Posted by GitBox <gi...@apache.org>.

emkornfield commented on a change in pull request #10716:

URL: https://github.com/apache/arrow/pull/10716#discussion_r680026208

##########

File path: go/parquet/internal/encoding/levels.go

##########

@@ -0,0 +1,284 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+package encoding

+

+import (

+ "bytes"

+ "encoding/binary"

+ "io"

+ "math/bits"

+

+ "github.com/JohnCGriffin/overflow"

+ "github.com/apache/arrow/go/arrow/bitutil"

+ "github.com/apache/arrow/go/parquet"

+ format "github.com/apache/arrow/go/parquet/internal/gen-go/parquet"

+ "github.com/apache/arrow/go/parquet/internal/utils"

+)

+

+// LevelEncoder is for handling the encoding of Definition and Repetition levels

+// to parquet files.

+type LevelEncoder struct {

+ bitWidth int

+ rleLen int

+ encoding format.Encoding

+ rle *utils.RleEncoder

+ bit *utils.BitWriter

+}

+

+// LevelEncodingMaxBufferSize estimates the max number of bytes needed to encode data with the

+// specified encoding given the max level and number of buffered values provided.

+func LevelEncodingMaxBufferSize(encoding parquet.Encoding, maxLvl int16, nbuffered int) int {

+ bitWidth := bits.Len64(uint64(maxLvl))

+ nbytes := 0

+ switch encoding {

+ case parquet.Encodings.RLE:

+ nbytes = utils.MaxBufferSize(bitWidth, nbuffered) + utils.MinBufferSize(bitWidth)

+ case parquet.Encodings.BitPacked:

+ nbytes = int(bitutil.BytesForBits(int64(nbuffered * bitWidth)))

+ default:

+ panic("parquet: unknown encoding type for levels")

+ }

+ return nbytes

+}

+

+// Reset resets the encoder allowing it to be reused and updating the maxlevel to the new

+// specified value.

+func (l *LevelEncoder) Reset(maxLvl int16) {

+ l.bitWidth = bits.Len64(uint64(maxLvl))

+ switch l.encoding {

+ case format.Encoding_RLE:

+ l.rle.Clear()

+ l.rle.BitWidth = l.bitWidth

+ case format.Encoding_BIT_PACKED:

+ l.bit.Clear()

+ default:

+ panic("parquet: unknown encoding type")

+ }

+}

+

+// Init is called to set up the desired encoding type, max level and underlying writer for a

+// level encoder to control where the resulting encoded buffer will end up.

+func (l *LevelEncoder) Init(encoding parquet.Encoding, maxLvl int16, w io.WriterAt) {

+ l.bitWidth = bits.Len64(uint64(maxLvl))

+ l.encoding = format.Encoding(encoding)

+ switch l.encoding {

+ case format.Encoding_RLE:

+ l.rle = utils.NewRleEncoder(w, l.bitWidth)

+ case format.Encoding_BIT_PACKED:

+ l.bit = utils.NewBitWriter(w)

+ default:

+ panic("parquet: unknown encoding type for levels")

+ }

+}

+

+// EncodeNoFlush encodes the provided levels in the encoder, but doesn't flush

+// the buffer and return it yet, appending these encoded values. Returns the number

Review comment:

does it ever return less than the values provided in lvls? If so please document it (if not maybe still note that this is simply for the API consumer's convenience?)

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] zeroshade edited a comment on pull request #10716: ARROW-13330: [Go][Parquet] Add the rest of the Encoding package

Posted by GitBox <gi...@apache.org>.

zeroshade edited a comment on pull request #10716:

URL: https://github.com/apache/arrow/pull/10716#issuecomment-896915175

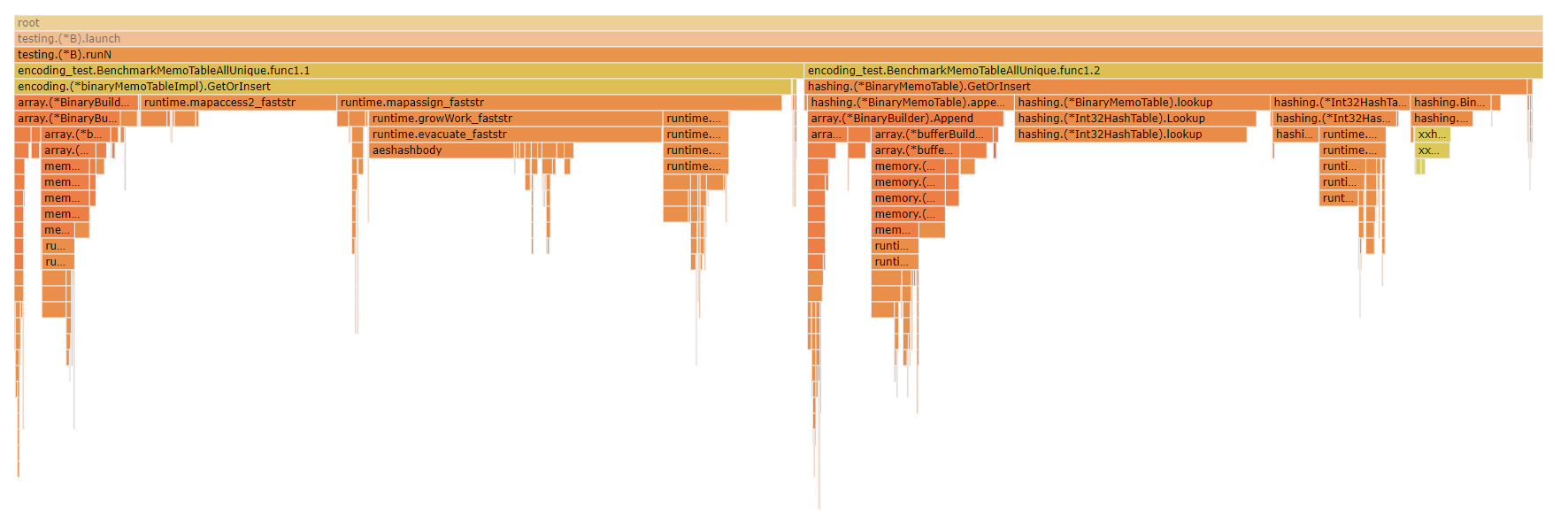

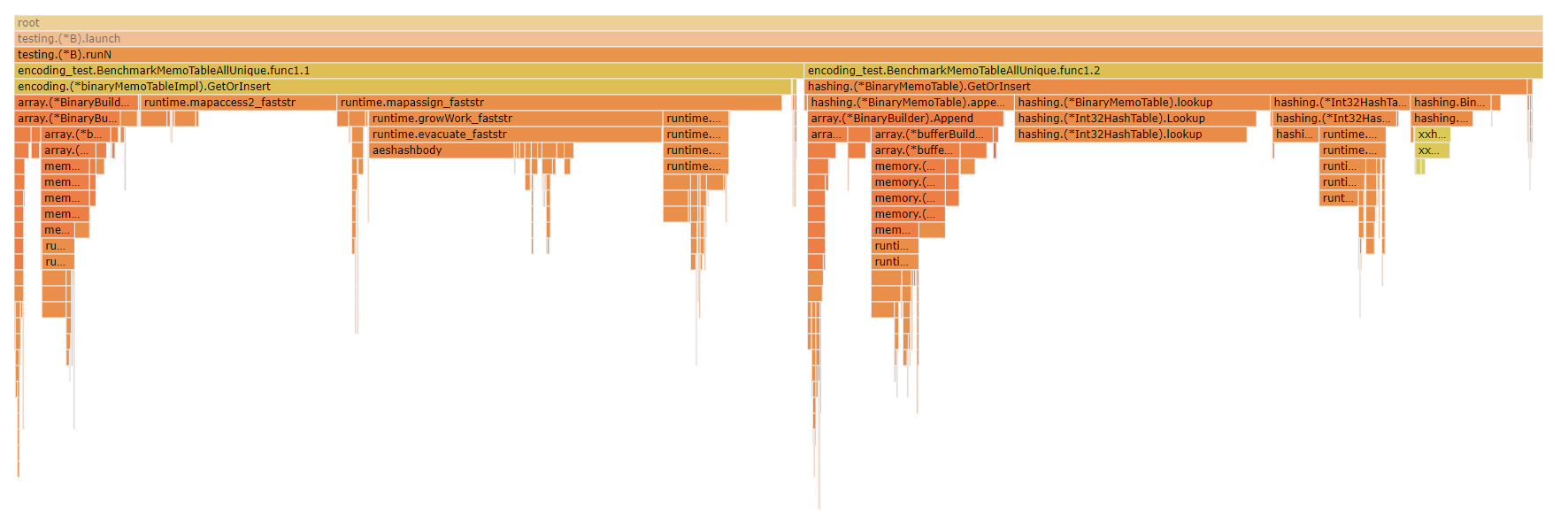

@emkornfield Just to tack on here, another interesting view is looking at a flame graph of the CPU profile for the `BenchmarkMemoTableAllUnique` benchmark case, just benchmarking the binary string case where the largest difference between the two is that in the builtin Go Map based implementation I use a `map[string]int` to map strings to their memo index, whereas in the custom implementation I use an `Int32HashTable` to map the hash of the string to the memo index, with the hash of the string being calculated with the custom hash implementation.

Looking at the flame graph you can see that a larger proportion of the CPU time for the builtin map-based implementation is spent in the map itself whether performing the hashes or accessing/growing/allocating vs adding the strings to the `BinaryBuilder` while in the xxh3 based custom implementation there's a smaller proportion of the time spent actually performing the hashing and the lookups/allocations. In the benchmarks I'm specifically using 0 when creating the new memo table to avoid pre-allocating in order to make the comparison between the go map implementation a closer / better comparison since, to my knowledge, there's no way to pre-allocate a size for the builtin golang map. But if I change that and have it actually use reserve to pre-allocate space the difference can become more pronounced.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] zeroshade commented on a change in pull request #10716: ARROW-13330: [Go][Parquet] Add the rest of the Encoding package

Posted by GitBox <gi...@apache.org>.

zeroshade commented on a change in pull request #10716:

URL: https://github.com/apache/arrow/pull/10716#discussion_r686950122

##########

File path: go/parquet/internal/encoding/memo_table.go

##########

@@ -0,0 +1,380 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+package encoding

+

+import (

+ "math"

+ "unsafe"

+

+ "github.com/apache/arrow/go/arrow"

+ "github.com/apache/arrow/go/arrow/array"

+ "github.com/apache/arrow/go/arrow/memory"

+ "github.com/apache/arrow/go/parquet"

+ "github.com/apache/arrow/go/parquet/internal/hashing"

+)

+

+//go:generate go run ../../../arrow/_tools/tmpl/main.go -i -data=physical_types.tmpldata memo_table_types.gen.go.tmpl

+

+// MemoTable interface that can be used to swap out implementations of the hash table

+// used for handling dictionary encoding. Dictionary encoding is built against this interface

+// to make it easy for code generation and changing implementations.

+//

+// Values should remember the order they are inserted to generate a valid dictionary index

+type MemoTable interface {

+ // Reset drops everything in the table allowing it to be reused

+ Reset()

+ // Size returns the current number of unique values stored in the table

+ // including whether or not a null value has been passed in using GetOrInsertNull

+ Size() int

Review comment:

`int` is implementation defined, on 32 bit platforms `int` will be 32 bits, on 64 bit platforms it will be 64 bits.

In this memo table, I guess I assumed that it was unlikely that there'd ever be billions of elements in the table such that it was necessary or likely for a check to exceed the range of an int. Personally i'd prefer to not add the extra check inside of the insertion operation simply because it's a critical path that is likely to be inside of a tight loop so if possible, I'd prefer to avoid adding the check for whether the new size will exceed `math.MaxInt` but rather just document that the memotable has a limitation on the number of unique values it can hold being `MaxInt`. Thoughts?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] zeroshade commented on a change in pull request #10716: ARROW-13330: [Go][Parquet] Add the rest of the Encoding package

Posted by GitBox <gi...@apache.org>.

zeroshade commented on a change in pull request #10716:

URL: https://github.com/apache/arrow/pull/10716#discussion_r686852669

##########

File path: go/parquet/internal/encoding/memo_table_test.go

##########

@@ -0,0 +1,284 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+package encoding_test

+

+import (

+ "math"

+ "testing"

+

+ "github.com/apache/arrow/go/arrow/memory"

+ "github.com/apache/arrow/go/parquet/internal/encoding"

+ "github.com/apache/arrow/go/parquet/internal/hashing"

+ "github.com/stretchr/testify/suite"

+)

+

+type MemoTableTestSuite struct {

+ suite.Suite

+}

+

+func TestMemoTable(t *testing.T) {

+ suite.Run(t, new(MemoTableTestSuite))

+}

+

+func (m *MemoTableTestSuite) assertGetNotFound(table encoding.MemoTable, v interface{}) {

+ _, ok := table.Get(v)

+ m.False(ok)

+}

+

+func (m *MemoTableTestSuite) assertGet(table encoding.MemoTable, v interface{}, expected int) {

+ idx, ok := table.Get(v)

+ m.Equal(expected, idx)

+ m.True(ok)

+}

+

+func (m *MemoTableTestSuite) assertGetOrInsert(table encoding.MemoTable, v interface{}, expected int) {

+ idx, _, err := table.GetOrInsert(v)

+ m.NoError(err)

+ m.Equal(expected, idx)

+}

+

+func (m *MemoTableTestSuite) assertGetNullNotFound(table encoding.MemoTable) {

+ _, ok := table.GetNull()

+ m.False(ok)

+}

+

+func (m *MemoTableTestSuite) assertGetNull(table encoding.MemoTable, expected int) {

+ idx, ok := table.GetNull()

+ m.Equal(expected, idx)

+ m.True(ok)

+}

+

+func (m *MemoTableTestSuite) assertGetOrInsertNull(table encoding.MemoTable, expected int) {

+ idx, _ := table.GetOrInsertNull()

+ m.Equal(expected, idx)

+}

+

+func (m *MemoTableTestSuite) TestInt64() {

+ const (

+ A int64 = 1234

+ B int64 = 0

+ C int64 = -98765321

+ D int64 = 12345678901234

+ E int64 = -1

+ F int64 = 1

+ G int64 = 9223372036854775807

+ H int64 = -9223372036854775807 - 1

+ )

+

+ // table := encoding.NewInt64MemoTable(nil)

+ table := hashing.NewInt64MemoTable(0)

+ m.Zero(table.Size())

+ m.assertGetNotFound(table, A)

+ m.assertGetNullNotFound(table)

+ m.assertGetOrInsert(table, A, 0)

+ m.assertGetNotFound(table, B)

+ m.assertGetOrInsert(table, B, 1)

+ m.assertGetOrInsert(table, C, 2)

+ m.assertGetOrInsert(table, D, 3)

+ m.assertGetOrInsert(table, E, 4)

+ m.assertGetOrInsertNull(table, 5)

+

+ m.assertGet(table, A, 0)

+ m.assertGetOrInsert(table, A, 0)

+ m.assertGet(table, E, 4)

+ m.assertGetOrInsert(table, E, 4)

+

+ m.assertGetOrInsert(table, F, 6)

+ m.assertGetOrInsert(table, G, 7)

+ m.assertGetOrInsert(table, H, 8)

+

+ m.assertGetOrInsert(table, G, 7)

+ m.assertGetOrInsert(table, F, 6)

+ m.assertGetOrInsertNull(table, 5)

+ m.assertGetOrInsert(table, E, 4)

+ m.assertGetOrInsert(table, D, 3)

+ m.assertGetOrInsert(table, C, 2)

+ m.assertGetOrInsert(table, B, 1)

+ m.assertGetOrInsert(table, A, 0)

+

+ const sz int = 9

+ m.Equal(sz, table.Size())

+ m.Panics(func() {

+ values := make([]int32, sz)

+ table.CopyValues(values)

+ }, "should panic because wrong type")

+ m.Panics(func() {

+ values := make([]int64, sz-3)

+ table.CopyValues(values)

+ }, "should panic because out of bounds")

+

+ {

+ values := make([]int64, sz)

+ table.CopyValues(values)

+ m.Equal([]int64{A, B, C, D, E, 0, F, G, H}, values)

+ }

+ {

+ const offset = 3

+ values := make([]int64, sz-offset)

+ table.CopyValuesSubset(offset, values)

+ m.Equal([]int64{D, E, 0, F, G, H}, values)

+ }

+}

+

+func (m *MemoTableTestSuite) TestFloat64() {

+ const (

+ A float64 = 0.0

+ B float64 = 1.5

+ C float64 = -0.1

+ )

+ var (

+ D = math.Inf(1)

+ E = -D

+ F = math.NaN()

Review comment:

That's a good point, i'll look up a couple different bit representations of Nan to compare with and add them in.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: github-unsubscribe@arrow.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [arrow] emkornfield commented on a change in pull request #10716: ARROW-13330: [Go][Parquet] Add the rest of the Encoding package

Posted by GitBox <gi...@apache.org>.

emkornfield commented on a change in pull request #10716:

URL: https://github.com/apache/arrow/pull/10716#discussion_r686489838

##########

File path: go/parquet/internal/encoding/memo_table.go

##########

@@ -0,0 +1,380 @@

+// Licensed to the Apache Software Foundation (ASF) under one

+// or more contributor license agreements. See the NOTICE file

+// distributed with this work for additional information

+// regarding copyright ownership. The ASF licenses this file

+// to you under the Apache License, Version 2.0 (the

+// "License"); you may not use this file except in compliance

+// with the License. You may obtain a copy of the License at

+//

+// http://www.apache.org/licenses/LICENSE-2.0

+//

+// Unless required by applicable law or agreed to in writing, software

+// distributed under the License is distributed on an "AS IS" BASIS,

+// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+// See the License for the specific language governing permissions and

+// limitations under the License.

+

+package encoding

+

+import (

+ "math"

+ "unsafe"

+

+ "github.com/apache/arrow/go/arrow"

+ "github.com/apache/arrow/go/arrow/array"

+ "github.com/apache/arrow/go/arrow/memory"

+ "github.com/apache/arrow/go/parquet"

+ "github.com/apache/arrow/go/parquet/internal/hashing"

+)

+

+//go:generate go run ../../../arrow/_tools/tmpl/main.go -i -data=physical_types.tmpldata memo_table_types.gen.go.tmpl

+

+// MemoTable interface that can be used to swap out implementations of the hash table

+// used for handling dictionary encoding. Dictionary encoding is built against this interface

+// to make it easy for code generation and changing implementations.

+//

+// Values should remember the order they are inserted to generate a valid dictionary index

+type MemoTable interface {

+ // Reset drops everything in the table allowing it to be reused

+ Reset()

+ // Size returns the current number of unique values stored in the table

+ // including whether or not a null value has been passed in using GetOrInsertNull

+ Size() int

+ // CopyValues populates out with the values currently in the table, out must

+ // be a slice of the appropriate type for the table type.

+ CopyValues(out interface{})

+ // CopyValuesSubset is like CopyValues but only copies a subset of values starting

+ // at the indicated index.