
Posted to commits@tvm.apache.org by GitBox <gi...@apache.org> on 2022/12/28 04:43:05 UTC

[GitHub] [tvm] wangzy0327 opened a new issue, #13666: [Bug] rocm platform result are not correct

wangzy0327 opened a new issue, #13666:

URL: https://github.com/apache/tvm/issues/13666

I tried to run an MNIST model with TVM on the ROCm platform (ROCm 5.2). The results are incorrect.

### Expected behavior

The result on the ROCm platform should equal the result on the CUDA or OpenCL platform.

### Actual behavior

The result on the ROCm platform does not equal the result on the CUDA or OpenCL platform.

### Environment

Operating System: Ubuntu 20.04

TVM version: 7f1856d34f03113dc3a7733c010be43446161944

Platform: ROCm 5.2


### Steps to reproduce

Here is the test code.

<details>

<summary>onnx_rocm.py</summary>

```
from csv import writer
from pyexpat import model
import onnx
#from tvm.driver import tvmc
import numpy as np
import tvm
import tvm.relay as relay
from tvm.contrib import graph_executor
import tvm.testing
import numpy as np
import os

class NetworkData():
    def __init__(self,name:str,net_set:list,prefix_str:str,suffix_str:str,input_name:str,input:tuple,output:tuple):
        self.name = name
        self.net_set = net_set
        self.prefix_str = prefix_str
        self.suffix_str = suffix_str
        self.input_name = input_name
        self.input = input
        self.output = output

mnist_networkData = NetworkData(name = "mnist",
                                net_set = ["mnist-7","mnist-8"],
                                prefix_str = "mnist/model/",
                                suffix_str = ".onnx",
                                input_name = 'Input3',
                                input = (1,1,28,28),
                                output = (1,10))

MODEL_NAME = {
    "mnist":mnist_networkData,
}

dtype="float32"
common_prefix_str = "onnx-model/vision/classification/"
tol_paras = [1e-7,1e-6,1e-5,1e-4,1e-3,1e-2]

import logging
logging.basicConfig(level=logging.ERROR)
import warnings
warnings.filterwarnings('ignore')

def build(target:str,mod:tvm.IRModule, params:dict, input_name:str, input_data:np.ndarray, input:tuple, output: tuple) -> np.ndarray:
    tgt = tvm.target.Target(target=target, host="llvm")
    with tvm.transform.PassContext(opt_level=3):
        lib = relay.build(mod, target=target, params=params)
    # print(lib.get_lib().imported_modules[0].get_source())
    # print("------------------------source code start----------------------------")
    # print(lib.get_lib().imported_modules[0].get_source())
    # print("------------------------source code end----------------------------")
    dev = tvm.device(str(target), 0)
    module = graph_executor.GraphModule(lib["default"](dev))
    module.set_input(input_name, input_data)
    module.run()
    output_shape = output
    tvm_output = module.get_output(0, tvm.nd.empty(output_shape)).numpy()
    return tvm_output

def main(model_network : NetworkData):
    # Set the random seed
    np.random.seed(0)
    I_np = np.random.uniform(size = model_network.input).astype(dtype)
    print(I_np[0][0][0][:10])
    header = ['network_name','network_sub_name','input','output','tolerance','rocm_cost_time','opencl_cost_time']
    rows = []
    for child_model_network in model_network.net_set:
        print("--------"+child_model_network+"----start-------------")
        onnx_model = onnx.load(common_prefix_str +
                               model_network.prefix_str +
                               child_model_network +
                               model_network.suffix_str)
        shape_dict = {model_network.input_name: I_np.shape}
        mod, params = relay.frontend.from_onnx(onnx_model, shape_dict)
        import datetime
        # opencl_starttime = datetime.datetime.now()
        # opencl_output = build("opencl",mod = mod,params = params,input_name = model_network.input_name,input_data = I_np, input = I_np.shape, output = model_network.output)
        # opencl_endtime = datetime.datetime.now()
        # opencl_duringtime = opencl_endtime - opencl_starttime
        # print("%15s network opencl cost time is %s s"%(child_model_network,opencl_duringtime))
        rocm_starttime = datetime.datetime.now()
        rocm_output = build("rocm",mod = mod,params = params,input_name = model_network.input_name,input_data = I_np, input = I_np.shape, output = model_network.output)
        rocm_endtime = datetime.datetime.now()
        rocm_duringtime = rocm_endtime - rocm_starttime
        print("%15s network rocm cost time is %s s"%(child_model_network,rocm_duringtime))
        opencl_starttime = datetime.datetime.now()
        opencl_output = build("opencl",mod = mod,params = params,input_name = model_network.input_name,input_data = I_np, input = I_np.shape, output = model_network.output)
        opencl_endtime = datetime.datetime.now()
        opencl_duringtime = opencl_endtime - opencl_starttime
        print("%15s network opencl cost time is %s s"%(child_model_network,opencl_duringtime))
        if rocm_output.ndim > 2:
            rocm_output = rocm_output.reshape(rocm_output.shape[0],rocm_output.shape[1])
            opencl_output = opencl_output.reshape(opencl_output.shape[0],opencl_output.shape[1])
        print(rocm_output[0][:10])
        print(opencl_output[0][:10])
        row = {'network_name': model_network.name,'network_sub_name':child_model_network, 'input':model_network.input, 'output':model_network.output, 'rocm_cost_time':rocm_duringtime,'opencl_cost_time':opencl_duringtime}
        for para in tol_paras:
            if np.allclose(rocm_output,opencl_output,rtol=para, atol=para):
                row["tolerance"] = para
                rows.append(row)
                print("%15s opencl network tolerance is %g"%(child_model_network,para))
                break
    import csv
    file_exist = False
    access_mode = 'w+'
    model_network_file = model_network.name+'_network_test_data.csv'
    if os.path.exists(model_network_file):
        file_exist = True
        access_mode = 'a+'
    with open(model_network_file,access_mode,encoding='utf-8',newline='') as f:
        writer = csv.DictWriter(f,header)
        if not file_exist :
            writer.writeheader()
        writer.writerows(rows)
    pass

for name,each_network in MODEL_NAME.items():
    print("-----------"+name+"----start----------------")
    main(each_network)
```

</details>

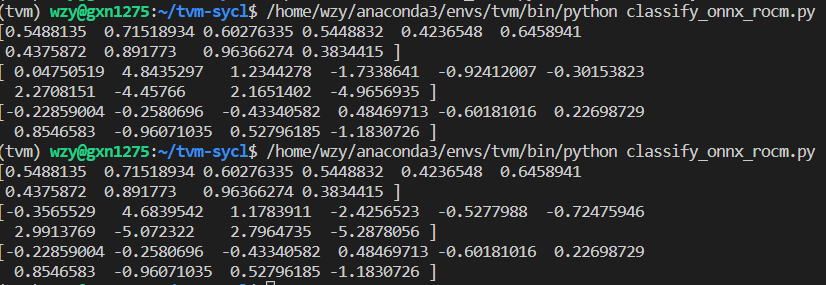

The result of program as follow.

### Triage

Please refer to the list of label tags [here](https://github.com/apache/tvm/wiki/Issue-Triage-Labels) to find the relevant tags and add them below in a bullet format (example below).

* needs-triage

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@tvm.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [tvm] mvermeulen commented on issue #13666: [Bug] rocm platform result are not correct

Posted by GitBox <gi...@apache.org>.

mvermeulen commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1387643084

Tried this with the following docker image I built from latest ROCm:

```
docker pull mevermeulen/rocm-tvm:5.4.2
```

I didn't have OpenCL built in that so I compared with CPU execution and I don't see an issue:

```
root@chilecito:/src/rocm-tvm/qa# python3 /home/mev/onnx_rocm.py
[0.5488135 0.71518934 0.60276335 0.5448832 0.4236548 0.6458941
 0.4375872 0.891773 0.96366274 0.3834415 ]
[-0.22859086 -0.25806987 -0.43340546 0.4846983 -0.6018106 0.22698797
 0.85465795 -0.9607101 0.5279621 -1.1830723 ]
[-0.22859041 -0.25806972 -0.43340546 0.4846975 -0.6018108 0.2269876
 0.8546581 -0.9607104 0.527962 -1.1830723 ]
```

To compare against the CPU, I modified the last part of the program as follows:

```
def main():
    np.random.seed(0)
    I_np = np.random.uniform(size = input_size).astype(dtype)
    print(I_np[0][0][0][:10])
    onnx_model = onnx.load("/home/mev/mnist-7.onnx")
    mod,params = relay.frontend.from_onnx(onnx_model,{"Input3":I_np.shape})
    rocm_output = build("rocm",mod = mod,params = params,input_name = input_name,input_data = I_np, input = I_np.shape, output = output_size)
    cpu_output = build("llvm",mod = mod,params = params,input_name = input_name,input_data = I_np, input = I_np.shape, output = output_size)
    # opencl_output = build("opencl",mod = mod,params = params,input_name = input_name,input_data = I_np, input = I_np.shape, output = output_size)
    print(rocm_output[0][:10])
    print(cpu_output[0][:10])
    # print(opencl_output[0][:10])
```

@wangzy0327 does my Docker image work for you? If so, you can use it as a baseline for comparison.

Also, can you cross-check that your ROCm installation and driver are properly installed? For example, you can try:

```
prompt% rocminfo
prompt% cd /opt/rocm/share/hip/samples/0_Intro/square
prompt% make
prompt% cat square.out
```


[GitHub] [tvm] wangzy0327 commented on issue #13666: [Bug] rocm platform result are not correct

Posted by GitBox <gi...@apache.org>.

wangzy0327 commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1370942424

@masahi

The minimal test case is as follows.

<details>

<summary>onnx_rocm.py</summary>

```
from csv import writer
from pyexpat import model
import onnx
#from tvm.driver import tvmc
import numpy as np
import tvm
import tvm.relay as relay
from tvm.contrib import graph_executor
import tvm.testing
import numpy as np
import os

import logging
logging.basicConfig(level=logging.ERROR)
import warnings
warnings.filterwarnings('ignore')

# onnx-model mnist-7

def build(target:str,mod:tvm.IRModule, params:dict, input_name:str, input_data:np.ndarray, input:tuple, output: tuple) -> np.ndarray:
    tgt = tvm.target.Target(target=target, host="llvm")
    with tvm.transform.PassContext(opt_level=3):
        lib = relay.build(mod, target=target, params=params)
    dev = tvm.device(str(target), 0)
    module = graph_executor.GraphModule(lib["default"](dev))
    module.set_input(input_name, input_data)
    module.run()
    output_shape = output
    tvm_output = module.get_output(0, tvm.nd.empty(output_shape)).numpy()
    return tvm_output

def main(model_network : NetworkData):
    # Set the random seed
    np.random.seed(0)
    I_np = np.random.uniform(size = model_network.input).astype(dtype)
    print(I_np[0][0][0][:10])
    onnx_model = onnx.load("onnx-model/vision/classification/mnist/model/mnist-7.onnx")
    mod,params = relay.frontend.from_onnx(onnx_model,{"Input3":I_np.shape})
    rocm_output = build("rocm",mod = mod,params = params,input_name = model_network.input_name,input_data = I_np, input = I_np.shape, output = model_network.output)
    opencl_output = build("opencl",mod = mod,params = params,input_name = model_network.input_name,input_data = I_np, input = I_np.shape, output = model_network.output)
    print(rocm_output[0][:10])
    print(opencl_output[0][:10])

for name,each_network in MODEL_NAME.items():
    print("-----------"+name+"----start----------------")
    main(each_network)
```

</details>


[GitHub] [tvm] wangzy0327 commented on issue #13666: [Bug] rocm platform result are not correct

Posted by "wangzy0327 (via GitHub)" <gi...@apache.org>.

wangzy0327 commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1495285831

@mvermeulen Hello, I tried to run the Docker image mevermeulen/rocm-tvm:5.4.2 using the Python interpreter from an Anaconda virtual environment on the host OS, but I get the following error.

```
root@a67fdbf3dda4:/src/tvm/build# /home/wzy/anaconda3/envs/tvm/bin/python /home/wzy/tvm-sycl/onnx_rocm.py
Traceback (most recent call last):
  File "/home/wzy/tvm-sycl/onnx_rocm.py", line 5, in <module>
    import tvm
  File "/src/tvm/python/tvm/__init__.py", line 26, in <module>
    from ._ffi.base import TVMError, __version__, _RUNTIME_ONLY
  File "/src/tvm/python/tvm/_ffi/__init__.py", line 28, in <module>
    from .base import register_error
  File "/src/tvm/python/tvm/_ffi/base.py", line 71, in <module>
    _LIB, _LIB_NAME = _load_lib()
  File "/src/tvm/python/tvm/_ffi/base.py", line 57, in _load_lib
    lib = ctypes.CDLL(lib_path[0], ctypes.RTLD_GLOBAL)
  File "/home/wzy/anaconda3/envs/tvm/lib/python3.7/ctypes/__init__.py", line 364, in __init__
    self._handle = _dlopen(self._name, mode)
OSError: /home/wzy/anaconda3/envs/tvm/bin/../lib/libstdc++.so.6: version `GLIBCXX_3.4.29' not found (required by /src/tvm/build/libtvm.so)
```
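The `OSError` above says that the `libstdc++.so.6` picked up from the conda environment does not carry the `GLIBCXX_3.4.29` symbol version that `/src/tvm/build/libtvm.so` requires, which usually means the environment's C++ runtime is older than the one TVM was built against. A minimal sketch, using only the Python standard library, for listing the `GLIBCXX_*` version tags embedded in a shared library. The helper names `glibcxx_versions` and `provides` are hypothetical, and scanning raw bytes is only a rough stand-in for tools such as `strings`:

```python
import re
from pathlib import Path

# Matches version tags such as GLIBCXX_3.4 or GLIBCXX_3.4.29 in raw bytes.
_TAG = re.compile(rb"GLIBCXX_(\d+(?:\.\d+)*)")


def glibcxx_versions(lib_path):
    """Return the GLIBCXX version tags found in a file, sorted numerically."""
    data = Path(lib_path).read_bytes()
    found = {m.group(1).decode("ascii") for m in _TAG.finditer(data)}
    return sorted(found, key=lambda v: tuple(int(p) for p in v.split(".")))


def provides(lib_path, required):
    """True if the file carries the required tag, for example '3.4.29'."""
    return required in glibcxx_versions(lib_path)
```

Calling `provides(path_to_libstdcxx, "3.4.29")` on the conda environment's `libstdc++.so.6` and on the copy inside the container would show which of the two is missing the tag.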


[GitHub] [tvm] wangzy0327 commented on issue #13666: [Bug] rocm platform result are not correct

Posted by GitBox <gi...@apache.org>.

wangzy0327 commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1372001733

I removed the NetworkData stuff. The "minimal" test, which compares the outputs of rocm and opencl, is as follows.

<details>

<summary>onnx_rocm.py</summary>

```
from pyexpat import model
import onnx
#from tvm.driver import tvmc
import numpy as np
import tvm
import tvm.relay as relay
from tvm.contrib import graph_executor
import tvm.testing
import numpy as np

dtype="float32"
common_prefix_str = "onnx-model/vision/classification/"
tol_paras = [1e-7,1e-6,1e-5,1e-4,1e-3,1e-2]
input_name = "Input3"
input_size = (1,1,28,28)
output_size = (1,10)

import logging
logging.basicConfig(level=logging.ERROR)
import warnings
warnings.filterwarnings('ignore')

def build(target:str,mod:tvm.IRModule, params:dict, input_name:str, input_data:np.ndarray, input:tuple, output: tuple) -> np.ndarray:
    tgt = tvm.target.Target(target=target, host="llvm")
    with tvm.transform.PassContext(opt_level=3):
        lib = relay.build(mod, target=target, params=params)
    dev = tvm.device(str(target), 0)
    module = graph_executor.GraphModule(lib["default"](dev))
    module.set_input(input_name, input_data)
    module.run()
    output_shape = output
    tvm_output = module.get_output(0, tvm.nd.empty(output_shape)).numpy()
    return tvm_output

def main():
    np.random.seed(0)
    I_np = np.random.uniform(size = input_size).astype(dtype)
    print(I_np[0][0][0][:10])
    onnx_model = onnx.load("onnx-model/vision/classification/mnist/model/mnist-7.onnx")
    mod,params = relay.frontend.from_onnx(onnx_model,{"Input3":I_np.shape})
    rocm_output = build("rocm",mod = mod,params = params,input_name = input_name,input_data = I_np, input = I_np.shape, output = output_size)
    opencl_output = build("opencl",mod = mod,params = params,input_name = input_name,input_data = I_np, input = I_np.shape, output = output_size)
    print(rocm_output[0][:10])
    print(opencl_output[0][:10])

main()
```

</details>

The output is as follows.


[GitHub] [tvm] masahi commented on issue #13666: [Bug] rocm platform result are not correct

Posted by "masahi (via GitHub)" <gi...@apache.org>.

masahi commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1495370643

see https://github.com/apache/tvm/issues/13666#issuecomment-1397176511


[GitHub] [tvm] masahi commented on issue #13666: [Bug] rocm platform result are not correct

Posted by GitBox <gi...@apache.org>.

masahi commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1372042675

Okay reproduced.


[GitHub] [tvm] masahi commented on issue #13666: [Bug] rocm platform result are not correct

Posted by GitBox <gi...@apache.org>.

masahi commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1396406356

@mvermeulen I tried to use your image but got the following error:

```
# rocminfo
ROCk module is loaded
Unable to open /dev/kfd read-write: No such file or directory
Failed to get user name to check for video group membership
```

The same command works outside of Docker.

Also, to use rocm 5 with TVM we need the bitcode file change in https://github.com/masahi/tvm/commit/26d2701b7823ab4d93b8d980bc8689e9c03b2ee1 (which is not merged to `main`). Is this a correct fix, and have you already integrated this change for rocm 5.4 testing?


[GitHub] [tvm] wangzy0327 commented on issue #13666: [Bug] rocm platform result are not correct

Posted by GitBox <gi...@apache.org>.

wangzy0327 commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1396327672

OK, I will try to run it later. Can you tell me which TVM commit the Docker image is built from?


[GitHub] [tvm] wangzy0327 commented on issue #13666: [Bug] rocm platform result are not correct

Posted by "wangzy0327 (via GitHub)" <gi...@apache.org>.

wangzy0327 commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1413100409

> Tried this with the following docker image I built from latest ROCm:
>
> ```
> docker pull mevermeulen/rocm-tvm:5.4.2
> ```
>
> I didn't have OpenCL built in that so I compared with CPU execution and I don't see an issue:
>
> ```
> root@chilecito:/src/rocm-tvm/qa# python3 /home/mev/onnx_rocm.py
> [0.5488135 0.71518934 0.60276335 0.5448832 0.4236548 0.6458941
>  0.4375872 0.891773 0.96366274 0.3834415 ]
> [-0.22859086 -0.25806987 -0.43340546 0.4846983 -0.6018106 0.22698797
>  0.85465795 -0.9607101 0.5279621 -1.1830723 ]
> [-0.22859041 -0.25806972 -0.43340546 0.4846975 -0.6018108 0.2269876
>  0.8546581 -0.9607104 0.527962 -1.1830723 ]
> ```
>
> To compare against the CPU, I modified the last part of the program as follows:
>
> ```
> np.random.seed(0)
> I_np = np.random.uniform(size = input_size).astype(dtype)
> print(I_np[0][0][0][:10])
> onnx_model = onnx.load("/home/mev/mnist-7.onnx")
> mod,params = relay.frontend.from_onnx(onnx_model,{"Input3":I_np.shape})
> rocm_output = build("rocm",mod = mod,params = params,input_name = input_name,input_data = I_np, input = I_np.shape, output = output_size)
> cpu_output = build("llvm",mod = mod,params = params,input_name = input_name,input_data = I_np, input = I_np.shape, output = output_size)
> # opencl_output = build("opencl",mod = mod,params = params,input_name = input_name,input_data = I_np, input = I_np.shape, output = output_size)
> print(rocm_output[0][:10])
> print(cpu_output[0][:10])
> # print(opencl_output[0][:10])
> ```
>
> @wangzy0327 does my docker work for you? If so, a spot you can use for comparison.
>
> Also can you cross check that your ROCm installation and driver is properly installed. For example you can try:
>
> ```
> prompt% rocminfo
>
> prompt% cd /opt/rocm/share/hip/samples/0_Intro/square
> prompt% make
> prompt% cat square.out
> ```

@mvermeulen

I compared the two targets `rocm` and `rocm -libs=miopen` on TVM v0.10.0 by running the following code.

<details>

<summary>onnx_rocm.py</summary>

```
from pyexpat import model
import onnx
#from tvm.driver import tvmc
import numpy as np
import tvm
import tvm.relay as relay
from tvm.contrib import graph_executor
import tvm.testing
import numpy as np

dtype="float32"
common_prefix_str = "onnx-model/vision/classification/"
tol_paras = [1e-7,1e-6,1e-5,1e-4,1e-3,1e-2]
input_name = "Input3"
input_size = (1,1,28,28)
output_size = (1,10)

import logging
logging.basicConfig(level=logging.ERROR)
import warnings
warnings.filterwarnings('ignore')

def build(target:str,mod:tvm.IRModule, params:dict, input_name:str, input_data:np.ndarray, input:tuple, output: tuple) -> np.ndarray:
    tgt = tvm.target.Target(target=target, host="llvm")
    with tvm.transform.PassContext(opt_level=3):
        lib = relay.build(mod, target=target, params=params)
    dev = tvm.device(str(target), 0)
    module = graph_executor.GraphModule(lib["default"](dev))
    module.set_input(input_name, input_data)
    module.run()
    output_shape = output
    tvm_output = module.get_output(0, tvm.nd.empty(output_shape)).numpy()
    return tvm_output

def main():
    np.random.seed(0)
    I_np = np.random.uniform(size = input_size).astype(dtype)
    print(I_np[0][0][0][:10])
    onnx_model = onnx.load("onnx-model/vision/classification/mnist/model/mnist-7.onnx")
    mod,params = relay.frontend.from_onnx(onnx_model,{"Input3":I_np.shape})
    rocm_lib_output = build("rocm -libs=miopen",mod = mod,params = params,input_name = input_name,input_data = I_np, input = I_np.shape, output = output_size)
    rocm_output = build("rocm",mod = mod,params = params,input_name = input_name,input_data = I_np, input = I_np.shape, output = output_size)
    opencl_output = build("opencl",mod = mod,params = params,input_name = input_name,input_data = I_np, input = I_np.shape, output = output_size)
    print(rocm_output[0][:10])
    print(rocm_lib_output[0][:10])
    print(opencl_output[0][:10])

main()
```

</details>

I get the following error on an AMD gfx908 device: `ValueError: Cannot find global function tvm.contrib.miopen.conv2d.setup`.

How can I fix it?
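The error means that the packed function `tvm.contrib.miopen.conv2d.setup` is not registered in the TVM runtime that was loaded; one possible cause is that the `libtvm.so` actually in use was built without MIOpen support. A minimal sketch for checking this up front. The helper `find_missing_globals` is hypothetical, and the lookup is passed in as a callable so the logic itself does not depend on a TVM install. With TVM available, `tvm.get_global_func(name, allow_missing=True)` returns `None` for an unregistered name and could serve as that lookup:

```python
def find_missing_globals(names, lookup):
    """Return the names for which lookup(name) yields None."""
    return [name for name in names if lookup(name) is None]


# Hypothetical usage with TVM installed (not executed here):
#   import tvm
#   find_missing_globals(
#       ["tvm.contrib.miopen.conv2d.setup"],
#       lambda n: tvm.get_global_func(n, allow_missing=True),
#   )
```

An empty result would indicate that the function is registered and the problem lies elsewhere.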


[GitHub] [tvm] masahi commented on issue #13666: [Bug] rocm platform result are not correct

Posted by "masahi (via GitHub)" <gi...@apache.org>.

masahi commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1404538202

OK, I don't know what is wrong with the original schedule, but using the other one fixes this issue: https://github.com/apache/tvm/pull/13847


[GitHub] [tvm] masahi commented on issue #13666: [Bug] rocm platform result are not correct

Posted by GitBox <gi...@apache.org>.

masahi commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1371979718

I said "minimal" test. Remove `NetworkData` stuff which is not even defined.


[GitHub] [tvm] wangzy0327 commented on issue #13666: [Bug] rocm platform result are not correct

Posted by "wangzy0327 (via GitHub)" <gi...@apache.org>.

wangzy0327 commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1413131336

> Try building with `USE_ROCBLAS=ON` and use `-libs=rocblas` (not miopen)

Here is the resulting output, which is not correct.


[GitHub] [tvm] wangzy0327 commented on issue #13666: [Bug] rocm platform result are not correct

Posted by "wangzy0327 (via GitHub)" <gi...@apache.org>.

wangzy0327 commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1413075362

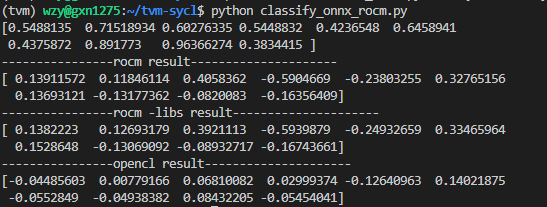

@masahi Hi,I have tried to fix the code as #13847 and run the code on gfx908(MI100)

<summary>onnx_rocm.py</summary>

```

from pyexpat import model

import onnx

#from tvm.driver import tvmc

import numpy as np

import tvm

import tvm.relay as relay

from tvm.contrib import graph_executor

import tvm.testing

import numpy as np

dtype="float32"

common_prefix_str = "onnx-model/vision/classification/"

tol_paras = [1e-7,1e-6,1e-5,1e-4,1e-3,1e-2]

input_name = "Input3"

input_size = (1,1,28,28)

output_size = (1,10)

import logging

logging.basicConfig(level=logging.ERROR)

import warnings

warnings.filterwarnings('ignore')

def build(target:str,mod:tvm.IRModule, params:dict, input_name:str, input_data:np.ndarray, input:tuple, output: tuple) -> np.ndarray:

tgt = tvm.target.Target(target=target, host="llvm")

with tvm.transform.PassContext(opt_level=3):

lib = relay.build(mod, target=target, params=params)

dev = tvm.device(str(target), 0)

module = graph_executor.GraphModule(lib["default"](dev))

module.set_input(input_name, input_data)

module.run()

output_shape = output

tvm_output = module.get_output(0, tvm.nd.empty(output_shape)).numpy()

return tvm_output

def main():

np.random.seed(0)

I_np = np.random.uniform(size = input_size).astype(dtype)

print(I_np[0][0][0][:10])

onnx_model = onnx.load("onnx-model/vision/classification/mnist/model/mnist-7.onnx")

mod,params = relay.frontend.from_onnx(onnx_model,{"Input3":I_np.shape})

rocm_output = build("rocm",mod = mod,params = params,input_name = input_name,input_data = I_np, input = I_np.shape, output = output_size)

opencl_output = build("opencl",mod = mod,params = params,input_name = input_name,input_data = I_np, input = I_np.shape, output = output_size)

print(rocm_output[0][:10])

print(opencl_output[0][:10])

main()

```

The result as follows:


[GitHub] [tvm] wangzy0327 commented on issue #13666: [Bug] rocm platform result are not correct

Posted by "wangzy0327 (via GitHub)" <gi...@apache.org>.

wangzy0327 commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1413906080

> > I get the error on AMD gfx908 device . The error is ValueError:Cannot find global

> > function tvm.contrib.miopen.conv2d.setup .

> > How to fix it ?

>

> What is your setting for USE_MIOPEN configuration variable?

@mvermeulen

Set(USE_MIOPEN ON)


[GitHub] [tvm] masahi commented on issue #13666: [Bug] rocm platform result are not correct

Posted by "masahi (via GitHub)" <gi...@apache.org>.

masahi commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1404527150

Interesting! I can also confirm that `-libs=rocblas` works. So this is specific to `dense` op. It's also interesting to hear that gfx906 works fine.

I'll look into what's wrong with dense codegen for rocm.


[GitHub] [tvm] mvermeulen commented on issue #13666: [Bug] rocm platform result are not correct

Posted by GitBox <gi...@apache.org>.

mvermeulen commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1397176511

@masahi most likely you are missing arguments when starting up the docker container. Here is how I run it:

```
docker run -it --device=/dev/dri --device=/dev/kfd --network=host --group-add=render -v /home/mev:/home/mev mevermeulen/rocm-tvm:5.4.2 /bin/bash
```

The --device options make sure the GPU devices are also available inside the docker image. When this is done, /dev/kfd is created and has read/write permissions by the "render" group. On my system, I happened to run as root so it worked anyways but if I were somehow running as a non-root user (either inside or outside the docker), I would want to be part of the group to get permissions to the device files.
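A minimal sketch, using only the Python standard library, for checking from inside the container that the device nodes passed with `--device` are present and accessible to the current user. The helper name `check_device_nodes` and the status strings are hypothetical; the default paths are the ones from the `docker run` command above:

```python
import os


def check_device_nodes(paths=("/dev/kfd", "/dev/dri")):
    """Map each path to 'missing', 'no access', or 'ok' for the current user."""
    status = {}
    for path in paths:
        if not os.path.exists(path):
            status[path] = "missing"
            continue
        # Directories (such as /dev/dri) only need to be listable;
        # device files (such as /dev/kfd) need read and write access.
        if os.path.isdir(path):
            mode = os.R_OK | os.X_OK
        else:
            mode = os.R_OK | os.W_OK
        status[path] = "ok" if os.access(path, mode) else "no access"
    return status


if __name__ == "__main__":
    for path, state in check_device_nodes().items():
        print(f"{path}: {state}")
```

A `missing` entry for `/dev/kfd` would match the `rocminfo` failure reported in this thread, while `no access` would point at group membership instead.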


[GitHub] [tvm] masahi commented on issue #13666: [Bug] rocm platform result are not correct

Posted by GitBox <gi...@apache.org>.

masahi commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1397695640

@mvermeulen Thanks I got it working, but the result is the same as my non-docker execution: LLVM and rocm results are different.

```
LLVM output
[-0.22859041 -0.25806972 -0.43340546 0.4846975 -0.6018108 0.2269876
 0.8546581 -0.9607104 0.527962 -1.1830723 ]
rocm output
[ 0.64347816 -1.4370097 -1.527026 1.1573262 -0.03408854 -1.1726259
 0.8344087 -1.231696 0.9886506 -1.0510902 ]
```

Also, running https://github.com/apache/tvm/blob/main/gallery/how_to/compile_models/from_pytorch.py inside the container (after changing the target to rocm) I get

```
Relay top-1 id: 277, class name: red fox, Vulpes vulpes
Torch top-1 id: 281, class name: tabby, tabby cat
```

which is an incorrect result (the same as non-docker execution).

It would be interesting if the result depends on the GPU. Mine is RX 6600xt which is not officially supported.
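The LLVM and rocm outputs above differ by far more than rounding noise. A minimal sketch, in plain Python so that it runs without NumPy, of the tolerance ladder that the reproduction script applies with `tol_paras` and `np.allclose`: it returns the smallest tolerance at which two outputs agree. The function name `smallest_passing_tolerance` is hypothetical; the numbers are copied from the two output lines above.

```python
def smallest_passing_tolerance(actual, expected, tolerances):
    """Return the first tolerance t for which every pair satisfies
    |a - e| <= t + t * |e| (the check numpy.allclose performs with
    rtol = atol = t), or None if no tolerance passes."""
    for tol in tolerances:
        if all(abs(a - e) <= tol + tol * abs(e) for a, e in zip(actual, expected)):
            return tol
    return None


tol_paras = [1e-7, 1e-6, 1e-5, 1e-4, 1e-3, 1e-2]

# Values copied from the LLVM and rocm outputs printed above.
llvm_out = [-0.22859041, -0.25806972, -0.43340546, 0.4846975, -0.6018108,
            0.2269876, 0.8546581, -0.9607104, 0.527962, -1.1830723]
rocm_out = [0.64347816, -1.4370097, -1.527026, 1.1573262, -0.03408854,
            -1.1726259, 0.8344087, -1.231696, 0.9886506, -1.0510902]

print(smallest_passing_tolerance(rocm_out, llvm_out, tol_paras))
```

For these values no tolerance up to 1e-2 passes, so the call prints `None`.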


[GitHub] [tvm] junrushao closed issue #13666: [Bug] rocm platform result are not correct

Posted by "junrushao (via GitHub)" <gi...@apache.org>.

junrushao closed issue #13666: [Bug] rocm platform result are not correct

URL: https://github.com/apache/tvm/issues/13666

[GitHub] [tvm] masahi commented on issue #13666: [Bug] rocm platform result are not correct

Posted by "masahi (via GitHub)" <gi...@apache.org>.

masahi commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1413128673

Try building with `USE_ROCBLAS=ON` and use `-libs=rocblas` (not miopen)

[GitHub] [tvm] masahi commented on issue #13666: [Bug] rocm platform result are not correct

Posted by GitBox <gi...@apache.org>.

masahi commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1370777170

Does https://github.com/apache/tvm/blob/main/apps/topi_recipe/gemm/cuda_gemm_square.py run successfully for you?

Also, please make your test case minimal. It is not obvious what it is doing at a quick glance.

[GitHub] [tvm] mvermeulen commented on issue #13666: [Bug] rocm platform result are not correct

Posted by "mvermeulen (via GitHub)" <gi...@apache.org>.

mvermeulen commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1404483929

@masahi - some further characterization:

1. Using Radeon VII (gfx906): both onnx_rocm.py and from_pytorch.py work as expected. In particular, the Relay and Torch results both report id 281, and the other results are as reported above.

2. Using RX 6900XT (gfx1030): I see a failure similar to what you report above. However, if I change the target specification to ```target = tvm.target.Target("rocm -libs=rocblas", host="llvm")```, it behaves the same as on the Radeon VII.

3. Using RX 6800m (gfx1031): I need to set the environment variable ```HSA_OVERRIDE_GFX_VERSION=10.3.0```; with that set, using ```-libs=rocblas``` again makes things pass.
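
The workaround in points 2 and 3 can be sketched as follows. The target string and the `HSA_OVERRIDE_GFX_VERSION` value come from the list above; the helper names are hypothetical, and the sketch assumes the override has to be in the environment before the ROCm runtime is first initialized in the process.

```python
import os


def rocm_target_string(use_rocblas=True):
    """Target string from the comment above; '-libs=rocblas' offloads
    supported ops to rocBLAS."""
    return "rocm -libs=rocblas" if use_rocblas else "rocm"


def apply_gfx_override(env, version="10.3.0"):
    """Set HSA_OVERRIDE_GFX_VERSION in the given mapping (e.g. os.environ).
    Reported above as needed for gfx1031."""
    env["HSA_OVERRIDE_GFX_VERSION"] = version
    return env


def make_target(use_rocblas=True):
    """Build the TVM target; tvm is imported lazily so the helpers above
    can be used without a TVM installation."""
    import tvm
    return tvm.target.Target(rocm_target_string(use_rocblas), host="llvm")


if __name__ == "__main__":
    # Assumption: set the override before anything touches the GPU runtime.
    apply_gfx_override(os.environ)
    print(rocm_target_string())
```

`make_target()` requires a TVM build with ROCm and rocBLAS enabled; the other two helpers are plain Python.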

[GitHub] [tvm] mvermeulen commented on issue #13666: [Bug] rocm platform result are not correct

Posted by "mvermeulen (via GitHub)" <gi...@apache.org>.

mvermeulen commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1413902505

> I get the error on AMD gfx908 device. The error is ValueError: Cannot find global

> function tvm.contrib.miopen.conv2d.setup.

> How to fix it?

What is your setting for the USE_MIOPEN configuration variable?
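
One way to answer this from Python, rather than by inspecting config.cmake, is to look at the build flags recorded in the TVM library. The sketch below assumes `tvm.support.libinfo()` returns a dictionary of build options that includes `USE_ROCM`, `USE_MIOPEN`, and `USE_ROCBLAS`; the helper `disabled_flags` is hypothetical, and it treats a missing flag as disabled.

```python
def disabled_flags(build_info, flags=("USE_ROCM", "USE_MIOPEN", "USE_ROCBLAS")):
    """Return the flags that look disabled (or are absent) in build_info,
    a dict mapping build option names to their values."""
    off_values = {"", "OFF", "0", "FALSE", "NOT-FOUND"}
    return [f for f in flags
            if str(build_info.get(f, "")).upper() in off_values]


if __name__ == "__main__":
    try:
        import tvm
    except ImportError:
        print("tvm is not installed in this environment")
    else:
        # Assumption: libinfo() exposes the CMake options used for this build.
        print("disabled:", disabled_flags(tvm.support.libinfo()))
        # The function named in the quoted error; None means it is not registered.
        func = tvm.get_global_func("tvm.contrib.miopen.conv2d.setup",
                                   allow_missing=True)
        print("miopen conv2d registered:", func is not None)
```

If `USE_MIOPEN` shows up as disabled, the "Cannot find global function" error is expected whenever the target requests miopen.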

[GitHub] [tvm] wangzy0327 commented on issue #13666: [Bug] rocm platform result are not correct

Posted by "wangzy0327 (via GitHub)" <gi...@apache.org>.

wangzy0327 commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1495361255

@mvermeulen I ran ```rocminfo``` in the rocm-tvm docker container, but got the error below.

```

root@0e7c68384def:/opt/rocm/bin# ./rocminfo

ROCk module is loaded

Unable to open /dev/kfd read-write: No such file or directory

Failed to get user name to check for video group membership

```

[GitHub] [tvm] wangzy0327 commented on issue #13666: [Bug] rocm platform result are not correct

Posted by GitBox <gi...@apache.org>.

wangzy0327 commented on issue #13666:

URL: https://github.com/apache/tvm/issues/13666#issuecomment-1372045435

> Okay reproduced.

How can this problem be solved?
