You are viewing a plain text version of this content. The canonical link for it is here.

Posted to reviews@spark.apache.org by GitBox <gi...@apache.org> on 2021/04/16 08:07:03 UTC

[GitHub] [spark] itholic opened a new pull request #32204: [SPARK-34494] Move JSON data source options from Python and Scala into a single page

itholic opened a new pull request #32204:

URL: https://github.com/apache/spark/pull/32204

### What changes were proposed in this pull request?

This PR proposes move JSON data source options from Python, Scala and Java into a single page.

### Why are the changes needed?

So far, the documentation for JSON data source options is separated into different pages for each language API documents. However, this makes managing many options inconvenient, so it is efficient to manage all options in a single page and provide a link to that page in the API of each language.

### Does this PR introduce _any_ user-facing change?

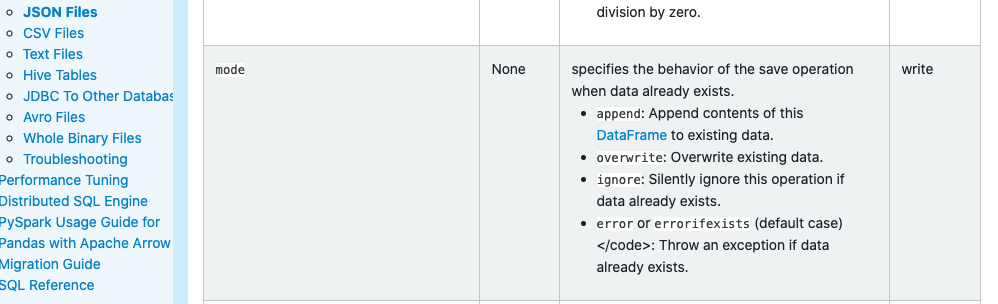

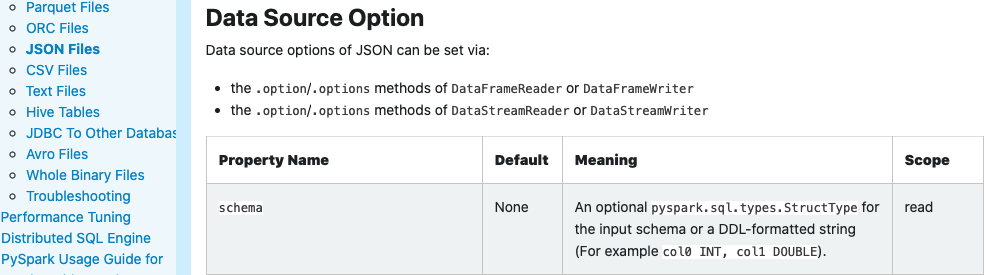

Yes, the documents will be shown below after this change:

- Python

<img width="714" alt="Screen Shot 2021-04-16 at 5 04 11 PM" src="https://user-images.githubusercontent.com/44108233/114992491-ca0cef00-9ed5-11eb-9d0f-4de60d8b2516.png">

- Scala

<img width="726" alt="Screen Shot 2021-04-16 at 5 04 54 PM" src="https://user-images.githubusercontent.com/44108233/114992594-e315a000-9ed5-11eb-8bd3-af7e568fcfe1.png">

- Java

<img width="911" alt="Screen Shot 2021-04-16 at 5 06 11 PM" src="https://user-images.githubusercontent.com/44108233/114992751-10624e00-9ed6-11eb-888c-8668d3c74289.png">

### How was this patch tested?

Manually build docs and confirm the page.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] itholic commented on a change in pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

itholic commented on a change in pull request #32204:

URL: https://github.com/apache/spark/pull/32204#discussion_r630662846

##########

File path: python/pyspark/sql/readwriter.py

##########

@@ -233,114 +233,13 @@ def json(self, path, schema=None, primitivesAsString=None, prefersDecimal=None,

path : str, list or :class:`RDD`

string represents path to the JSON dataset, or a list of paths,

or RDD of Strings storing JSON objects.

- schema : :class:`pyspark.sql.types.StructType` or str, optional

- an optional :class:`pyspark.sql.types.StructType` for the input schema or

- a DDL-formatted string (For example ``col0 INT, col1 DOUBLE``).

- primitivesAsString : str or bool, optional

- infers all primitive values as a string type. If None is set,

- it uses the default value, ``false``.

- prefersDecimal : str or bool, optional

- infers all floating-point values as a decimal type. If the values

- do not fit in decimal, then it infers them as doubles. If None is

- set, it uses the default value, ``false``.

- allowComments : str or bool, optional

- ignores Java/C++ style comment in JSON records. If None is set,

- it uses the default value, ``false``.

- allowUnquotedFieldNames : str or bool, optional

- allows unquoted JSON field names. If None is set,

- it uses the default value, ``false``.

- allowSingleQuotes : str or bool, optional

- allows single quotes in addition to double quotes. If None is

- set, it uses the default value, ``true``.

- allowNumericLeadingZero : str or bool, optional

- allows leading zeros in numbers (e.g. 00012). If None is

- set, it uses the default value, ``false``.

- allowBackslashEscapingAnyCharacter : str or bool, optional

- allows accepting quoting of all character

- using backslash quoting mechanism. If None is

- set, it uses the default value, ``false``.

- mode : str, optional

- allows a mode for dealing with corrupt records during parsing. If None is

- set, it uses the default value, ``PERMISSIVE``.

-

- * ``PERMISSIVE``: when it meets a corrupted record, puts the malformed string \

- into a field configured by ``columnNameOfCorruptRecord``, and sets malformed \

- fields to ``null``. To keep corrupt records, an user can set a string type \

- field named ``columnNameOfCorruptRecord`` in an user-defined schema. If a \

- schema does not have the field, it drops corrupt records during parsing. \

- When inferring a schema, it implicitly adds a ``columnNameOfCorruptRecord`` \

- field in an output schema.

- * ``DROPMALFORMED``: ignores the whole corrupted records.

- * ``FAILFAST``: throws an exception when it meets corrupted records.

- columnNameOfCorruptRecord: str, optional

- allows renaming the new field having malformed string

- created by ``PERMISSIVE`` mode. This overrides

- ``spark.sql.columnNameOfCorruptRecord``. If None is set,

- it uses the value specified in

- ``spark.sql.columnNameOfCorruptRecord``.

- dateFormat : str, optional

- sets the string that indicates a date format. Custom date formats

- follow the formats at

- `datetime pattern <https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html>`_. # noqa

- This applies to date type. If None is set, it uses the

- default value, ``yyyy-MM-dd``.

- timestampFormat : str, optional

- sets the string that indicates a timestamp format.

- Custom date formats follow the formats at

- `datetime pattern <https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html>`_. # noqa

- This applies to timestamp type. If None is set, it uses the

- default value, ``yyyy-MM-dd'T'HH:mm:ss[.SSS][XXX]``.

- multiLine : str or bool, optional

- parse one record, which may span multiple lines, per file. If None is

- set, it uses the default value, ``false``.

- allowUnquotedControlChars : str or bool, optional

- allows JSON Strings to contain unquoted control

- characters (ASCII characters with value less than 32,

- including tab and line feed characters) or not.

- encoding : str or bool, optional

- allows to forcibly set one of standard basic or extended encoding for

- the JSON files. For example UTF-16BE, UTF-32LE. If None is set,

- the encoding of input JSON will be detected automatically

- when the multiLine option is set to ``true``.

- lineSep : str, optional

- defines the line separator that should be used for parsing. If None is

- set, it covers all ``\\r``, ``\\r\\n`` and ``\\n``.

- samplingRatio : str or float, optional

- defines fraction of input JSON objects used for schema inferring.

- If None is set, it uses the default value, ``1.0``.

- dropFieldIfAllNull : str or bool, optional

- whether to ignore column of all null values or empty

- array/struct during schema inference. If None is set, it

- uses the default value, ``false``.

- locale : str, optional

- sets a locale as language tag in IETF BCP 47 format. If None is set,

- it uses the default value, ``en-US``. For instance, ``locale`` is used while

- parsing dates and timestamps.

- pathGlobFilter : str or bool, optional

Review comment:

Thanks for the comment, @HyukjinKwon

It's documented in [Generic File Source Options](https://spark.apache.org/docs/latest/sql-data-sources-generic-options.html#path-global-filter), so removed it from the docstring.

Then, should we add the link to Generic File Source Options, too? or just keep it here??

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA removed a comment on pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

SparkQA removed a comment on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-843064865

**[Test build #138678 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138678/testReport)** for PR 32204 at commit [`b7171f2`](https://github.com/apache/spark/commit/b7171f2348db967bcefbb5efa7400deab15f5f23).

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA commented on pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

SparkQA commented on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-840315107

Kubernetes integration test starting

URL: https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder-K8s/43012/

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA commented on pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

SparkQA commented on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-842401491

**[Test build #138633 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138633/testReport)** for PR 32204 at commit [`cd9f103`](https://github.com/apache/spark/commit/cd9f103683deb5c5d722dbddf9f6c9505336f8bd).

* This patch passes all tests.

* This patch merges cleanly.

* This patch adds the following public classes _(experimental)_:

* `case class UpdatingSessionsExec(`

* `class UpdatingSessionsIterator(`

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

HyukjinKwon commented on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-840312271

@itholic:

1. Please check the option **one by one** and see if each exists.

2. Document general options in https://spark.apache.org/docs/latest/sql-data-sources-generic-options.html if there are missing ones

3. If you're going to do this separately in a separate JIRA, don't remove general options in API documentations for now.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

AmplabJenkins removed a comment on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-840934265

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder-K8s/43050/

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] itholic edited a comment on pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

itholic edited a comment on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-840913159

Thanks, @HyukjinKwon .

I checked one by one, and seems like the general options are already documentd to the https://spark.apache.org/docs/latest/sql-data-sources-generic-options.html. I think the parameters I documented & removed are all of JSON specific options ??

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

AmplabJenkins commented on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-841040366

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder-K8s/43070/

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA removed a comment on pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

SparkQA removed a comment on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-840291088

**[Test build #138492 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138492/testReport)** for PR 32204 at commit [`a386788`](https://github.com/apache/spark/commit/a386788b44fb5255d2784ce423e3f879ba97f53c).

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a change in pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

HyukjinKwon commented on a change in pull request #32204:

URL: https://github.com/apache/spark/pull/32204#discussion_r631575888

##########

File path: python/pyspark/sql/readwriter.py

##########

@@ -1196,39 +1097,13 @@ def json(self, path, mode=None, compression=None, dateFormat=None, timestampForm

----------

path : str

the path in any Hadoop supported file system

- mode : str, optional

Review comment:

mode is a general option

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA commented on pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

SparkQA commented on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-845617127

Kubernetes integration test starting

URL: https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder-K8s/43302/

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] itholic commented on a change in pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

itholic commented on a change in pull request #32204:

URL: https://github.com/apache/spark/pull/32204#discussion_r632177276

##########

File path: python/pyspark/sql/readwriter.py

##########

@@ -1196,39 +1097,13 @@ def json(self, path, mode=None, compression=None, dateFormat=None, timestampForm

----------

path : str

the path in any Hadoop supported file system

- mode : str, optional

Review comment:

yeah, so I documented this to the "Data Source Options" table in JSON Files page, and removed here.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] HyukjinKwon closed pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

HyukjinKwon closed pull request #32204:

URL: https://github.com/apache/spark/pull/32204

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA removed a comment on pull request #32204: [SPARK-34494] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

SparkQA removed a comment on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-822298400

**[Test build #137597 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/137597/testReport)** for PR 32204 at commit [`c31c6f0`](https://github.com/apache/spark/commit/c31c6f07db757ed3cb44e0b142f544c499f82a7d).

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a change in pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

HyukjinKwon commented on a change in pull request #32204:

URL: https://github.com/apache/spark/pull/32204#discussion_r636568884

##########

File path: sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

##########

@@ -441,81 +390,13 @@ class DataFrameReader private[sql](sparkSession: SparkSession) extends Logging {

* This function goes through the input once to determine the input schema. If you know the

* schema in advance, use the version that specifies the schema to avoid the extra scan.

*

- * You can set the following JSON-specific options to deal with non-standard JSON files:

- * <ul>

- * <li>`primitivesAsString` (default `false`): infers all primitive values as a string type</li>

- * <li>`prefersDecimal` (default `false`): infers all floating-point values as a decimal

- * type. If the values do not fit in decimal, then it infers them as doubles.</li>

- * <li>`allowComments` (default `false`): ignores Java/C++ style comment in JSON records</li>

- * <li>`allowUnquotedFieldNames` (default `false`): allows unquoted JSON field names</li>

- * <li>`allowSingleQuotes` (default `true`): allows single quotes in addition to double quotes

- * </li>

- * <li>`allowNumericLeadingZeros` (default `false`): allows leading zeros in numbers

- * (e.g. 00012)</li>

- * <li>`allowBackslashEscapingAnyCharacter` (default `false`): allows accepting quoting of all

- * character using backslash quoting mechanism</li>

- * <li>`allowUnquotedControlChars` (default `false`): allows JSON Strings to contain unquoted

- * control characters (ASCII characters with value less than 32, including tab and line feed

- * characters) or not.</li>

- * <li>`mode` (default `PERMISSIVE`): allows a mode for dealing with corrupt records

- * during parsing.

- * <ul>

- * <li>`PERMISSIVE` : when it meets a corrupted record, puts the malformed string into a

- * field configured by `columnNameOfCorruptRecord`, and sets malformed fields to `null`. To

- * keep corrupt records, an user can set a string type field named

- * `columnNameOfCorruptRecord` in an user-defined schema. If a schema does not have the

- * field, it drops corrupt records during parsing. When inferring a schema, it implicitly

- * adds a `columnNameOfCorruptRecord` field in an output schema.</li>

- * <li>`DROPMALFORMED` : ignores the whole corrupted records.</li>

- * <li>`FAILFAST` : throws an exception when it meets corrupted records.</li>

- * </ul>

- * </li>

- * <li>`columnNameOfCorruptRecord` (default is the value specified in

- * `spark.sql.columnNameOfCorruptRecord`): allows renaming the new field having malformed string

- * created by `PERMISSIVE` mode. This overrides `spark.sql.columnNameOfCorruptRecord`.</li>

- * <li>`dateFormat` (default `yyyy-MM-dd`): sets the string that indicates a date format.

- * Custom date formats follow the formats at

- * <a href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">

- * Datetime Patterns</a>.

- * This applies to date type.</li>

- * <li>`timestampFormat` (default `yyyy-MM-dd'T'HH:mm:ss[.SSS][XXX]`): sets the string that

- * indicates a timestamp format. Custom date formats follow the formats at

- * <a href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">

- * Datetime Patterns</a>.

- * This applies to timestamp type.</li>

- * <li>`multiLine` (default `false`): parse one record, which may span multiple lines,

- * per file</li>

- * <li>`encoding` (by default it is not set): allows to forcibly set one of standard basic

- * or extended encoding for the JSON files. For example UTF-16BE, UTF-32LE. If the encoding

- * is not specified and `multiLine` is set to `true`, it will be detected automatically.</li>

- * <li>`lineSep` (default covers all `\r`, `\r\n` and `\n`): defines the line separator

- * that should be used for parsing.</li>

- * <li>`samplingRatio` (default is 1.0): defines fraction of input JSON objects used

- * for schema inferring.</li>

- * <li>`dropFieldIfAllNull` (default `false`): whether to ignore column of all null values or

- * empty array/struct during schema inference.</li>

- * <li>`locale` (default is `en-US`): sets a locale as language tag in IETF BCP 47 format.

- * For instance, this is used while parsing dates and timestamps.</li>

- * <li>`pathGlobFilter`: an optional glob pattern to only include files with paths matching

- * the pattern. The syntax follows <code>org.apache.hadoop.fs.GlobFilter</code>.

- * It does not change the behavior of partition discovery.</li>

- * <li>`modifiedBefore` (batch only): an optional timestamp to only include files with

- * modification times occurring before the specified Time. The provided timestamp

- * must be in the following form: YYYY-MM-DDTHH:mm:ss (e.g. 2020-06-01T13:00:00)</li>

- * <li>`modifiedAfter` (batch only): an optional timestamp to only include files with

- * modification times occurring after the specified Time. The provided timestamp

- * must be in the following form: YYYY-MM-DDTHH:mm:ss (e.g. 2020-06-01T13:00:00)</li>

- * <li>`recursiveFileLookup`: recursively scan a directory for files. Using this option

- * disables partition discovery</li>

- * <li>`allowNonNumericNumbers` (default `true`): allows JSON parser to recognize set of

- * "Not-a-Number" (NaN) tokens as legal floating number values:

- * <ul>

- * <li>`+INF` for positive infinity, as well as alias of `+Infinity` and `Infinity`.

- * <li>`-INF` for negative infinity), alias `-Infinity`.

- * <li>`NaN` for other not-a-numbers, like result of division by zero.

- * </ul>

- * </li>

- * </ul>

+ * You can find the JSON-specific options for reading JSON files in

+ * <a href="https://spark.apache.org/docs/latest/sql-data-sources-json.html#data-source-option">

+ * Data Source Option</a> in the version you use.

+ * More general options can be found in

+ * <a href=

+ * "https://spark.apache.org/docs/latest/sql-data-sources-generic-options.html">

+ * Generic Files Source Options</a> in the version you use.

Review comment:

Shall we remove this too?

##########

File path: python/pyspark/sql/streaming.py

##########

@@ -507,102 +479,15 @@ def json(self, path, schema=None, primitivesAsString=None, prefersDecimal=None,

schema : :class:`pyspark.sql.types.StructType` or str, optional

an optional :class:`pyspark.sql.types.StructType` for the input schema

or a DDL-formatted string (For example ``col0 INT, col1 DOUBLE``).

- primitivesAsString : str or bool, optional

- infers all primitive values as a string type. If None is set,

- it uses the default value, ``false``.

- prefersDecimal : str or bool, optional

- infers all floating-point values as a decimal type. If the values

- do not fit in decimal, then it infers them as doubles. If None is

- set, it uses the default value, ``false``.

- allowComments : str or bool, optional

- ignores Java/C++ style comment in JSON records. If None is set,

- it uses the default value, ``false``.

- allowUnquotedFieldNames : str or bool, optional

- allows unquoted JSON field names. If None is set,

- it uses the default value, ``false``.

- allowSingleQuotes : str or bool, optional

- allows single quotes in addition to double quotes. If None is

- set, it uses the default value, ``true``.

- allowNumericLeadingZero : str or bool, optional

- allows leading zeros in numbers (e.g. 00012). If None is

- set, it uses the default value, ``false``.

- allowBackslashEscapingAnyCharacter : str or bool, optional

- allows accepting quoting of all character

- using backslash quoting mechanism. If None is

- set, it uses the default value, ``false``.

- mode : str, optional

- allows a mode for dealing with corrupt records during parsing. If None is

- set, it uses the default value, ``PERMISSIVE``.

-

- * ``PERMISSIVE``: when it meets a corrupted record, puts the malformed string \

- into a field configured by ``columnNameOfCorruptRecord``, and sets malformed \

- fields to ``null``. To keep corrupt records, an user can set a string type \

- field named ``columnNameOfCorruptRecord`` in an user-defined schema. If a \

- schema does not have the field, it drops corrupt records during parsing. \

- When inferring a schema, it implicitly adds a ``columnNameOfCorruptRecord`` \

- field in an output schema.

- * ``DROPMALFORMED``: ignores the whole corrupted records.

- * ``FAILFAST``: throws an exception when it meets corrupted records.

-

- columnNameOfCorruptRecord : str, optional

- allows renaming the new field having malformed string

- created by ``PERMISSIVE`` mode. This overrides

- ``spark.sql.columnNameOfCorruptRecord``. If None is set,

- it uses the value specified in

- ``spark.sql.columnNameOfCorruptRecord``.

- dateFormat : str, optional

- sets the string that indicates a date format. Custom date formats

- follow the formats at

- `datetime pattern <https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html>`_. # noqa

- This applies to date type. If None is set, it uses the

- default value, ``yyyy-MM-dd``.

- timestampFormat : str, optional

- sets the string that indicates a timestamp format.

- Custom date formats follow the formats at

- `datetime pattern <https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html>`_. # noqa

- This applies to timestamp type. If None is set, it uses the

- default value, ``yyyy-MM-dd'T'HH:mm:ss[.SSS][XXX]``.

- multiLine : str or bool, optional

- parse one record, which may span multiple lines, per file. If None is

- set, it uses the default value, ``false``.

- allowUnquotedControlChars : str or bool, optional

- allows JSON Strings to contain unquoted control

- characters (ASCII characters with value less than 32,

- including tab and line feed characters) or not.

- lineSep : str, optional

- defines the line separator that should be used for parsing. If None is

- set, it covers all ``\\r``, ``\\r\\n`` and ``\\n``.

- locale : str, optional

- sets a locale as language tag in IETF BCP 47 format. If None is set,

- it uses the default value, ``en-US``. For instance, ``locale`` is used while

- parsing dates and timestamps.

- dropFieldIfAllNull : str or bool, optional

- whether to ignore column of all null values or empty

- array/struct during schema inference. If None is set, it

- uses the default value, ``false``.

- encoding : str or bool, optional

- allows to forcibly set one of standard basic or extended encoding for

- the JSON files. For example UTF-16BE, UTF-32LE. If None is set,

- the encoding of input JSON will be detected automatically

- when the multiLine option is set to ``true``.

- pathGlobFilter : str or bool, optional

- an optional glob pattern to only include files with paths matching

- the pattern. The syntax follows `org.apache.hadoop.fs.GlobFilter`.

- It does not change the behavior of

- `partition discovery <https://spark.apache.org/docs/latest/sql-data-sources-parquet.html#partition-discovery>`_. # noqa

- recursiveFileLookup : str or bool, optional

- recursively scan a directory for files. Using this option

- disables

- `partition discovery <https://spark.apache.org/docs/latest/sql-data-sources-parquet.html#partition-discovery>`_. # noqa

- allowNonNumericNumbers : str or bool, optional

- allows JSON parser to recognize set of "Not-a-Number" (NaN)

- tokens as legal floating number values. If None is set,

- it uses the default value, ``true``.

- * ``+INF``: for positive infinity, as well as alias of

- ``+Infinity`` and ``Infinity``.

- * ``-INF``: for negative infinity, alias ``-Infinity``.

- * ``NaN``: for other not-a-numbers, like result of division by zero.

+ Other Parameters

+ ----------------

+ Extra options (keyword argument)

+ For the extra options, refer to

+ `Data Source Option <https://spark.apache.org/docs/latest/sql-data-sources-parquet.html#data-source-option>`_ # noqa

+ and

+ `Generic File Source Options <https://spark.apache.org/docs/latest/sql-data-sources-generic-options.html`>_ # noqa

+ in the version you use.

Review comment:

Shall we remove this too?

##########

File path: python/pyspark/sql/readwriter.py

##########

@@ -236,112 +190,15 @@ def json(self, path, schema=None, primitivesAsString=None, prefersDecimal=None,

schema : :class:`pyspark.sql.types.StructType` or str, optional

an optional :class:`pyspark.sql.types.StructType` for the input schema or

a DDL-formatted string (For example ``col0 INT, col1 DOUBLE``).

- primitivesAsString : str or bool, optional

- infers all primitive values as a string type. If None is set,

- it uses the default value, ``false``.

- prefersDecimal : str or bool, optional

- infers all floating-point values as a decimal type. If the values

- do not fit in decimal, then it infers them as doubles. If None is

- set, it uses the default value, ``false``.

- allowComments : str or bool, optional

- ignores Java/C++ style comment in JSON records. If None is set,

- it uses the default value, ``false``.

- allowUnquotedFieldNames : str or bool, optional

- allows unquoted JSON field names. If None is set,

- it uses the default value, ``false``.

- allowSingleQuotes : str or bool, optional

- allows single quotes in addition to double quotes. If None is

- set, it uses the default value, ``true``.

- allowNumericLeadingZero : str or bool, optional

- allows leading zeros in numbers (e.g. 00012). If None is

- set, it uses the default value, ``false``.

- allowBackslashEscapingAnyCharacter : str or bool, optional

- allows accepting quoting of all character

- using backslash quoting mechanism. If None is

- set, it uses the default value, ``false``.

- mode : str, optional

- allows a mode for dealing with corrupt records during parsing. If None is

- set, it uses the default value, ``PERMISSIVE``.

-

- * ``PERMISSIVE``: when it meets a corrupted record, puts the malformed string \

- into a field configured by ``columnNameOfCorruptRecord``, and sets malformed \

- fields to ``null``. To keep corrupt records, an user can set a string type \

- field named ``columnNameOfCorruptRecord`` in an user-defined schema. If a \

- schema does not have the field, it drops corrupt records during parsing. \

- When inferring a schema, it implicitly adds a ``columnNameOfCorruptRecord`` \

- field in an output schema.

- * ``DROPMALFORMED``: ignores the whole corrupted records.

- * ``FAILFAST``: throws an exception when it meets corrupted records.

-

- columnNameOfCorruptRecord: str, optional

- allows renaming the new field having malformed string

- created by ``PERMISSIVE`` mode. This overrides

- ``spark.sql.columnNameOfCorruptRecord``. If None is set,

- it uses the value specified in

- ``spark.sql.columnNameOfCorruptRecord``.

- dateFormat : str, optional

- sets the string that indicates a date format. Custom date formats

- follow the formats at

- `datetime pattern <https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html>`_. # noqa

- This applies to date type. If None is set, it uses the

- default value, ``yyyy-MM-dd``.

- timestampFormat : str, optional

- sets the string that indicates a timestamp format.

- Custom date formats follow the formats at

- `datetime pattern <https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html>`_. # noqa

- This applies to timestamp type. If None is set, it uses the

- default value, ``yyyy-MM-dd'T'HH:mm:ss[.SSS][XXX]``.

- multiLine : str or bool, optional

- parse one record, which may span multiple lines, per file. If None is

- set, it uses the default value, ``false``.

- allowUnquotedControlChars : str or bool, optional

- allows JSON Strings to contain unquoted control

- characters (ASCII characters with value less than 32,

- including tab and line feed characters) or not.

- encoding : str or bool, optional

- allows to forcibly set one of standard basic or extended encoding for

- the JSON files. For example UTF-16BE, UTF-32LE. If None is set,

- the encoding of input JSON will be detected automatically

- when the multiLine option is set to ``true``.

- lineSep : str, optional

- defines the line separator that should be used for parsing. If None is

- set, it covers all ``\\r``, ``\\r\\n`` and ``\\n``.

- samplingRatio : str or float, optional

- defines fraction of input JSON objects used for schema inferring.

- If None is set, it uses the default value, ``1.0``.

- dropFieldIfAllNull : str or bool, optional

- whether to ignore column of all null values or empty

- array/struct during schema inference. If None is set, it

- uses the default value, ``false``.

- locale : str, optional

- sets a locale as language tag in IETF BCP 47 format. If None is set,

- it uses the default value, ``en-US``. For instance, ``locale`` is used while

- parsing dates and timestamps.

- pathGlobFilter : str or bool, optional

- an optional glob pattern to only include files with paths matching

- the pattern. The syntax follows `org.apache.hadoop.fs.GlobFilter`.

- It does not change the behavior of

- `partition discovery <https://spark.apache.org/docs/latest/sql-data-sources-parquet.html#partition-discovery>`_. # noqa

- recursiveFileLookup : str or bool, optional

- recursively scan a directory for files. Using this option

- disables

- `partition discovery <https://spark.apache.org/docs/latest/sql-data-sources-parquet.html#partition-discovery>`_. # noqa

- allowNonNumericNumbers : str or bool

- allows JSON parser to recognize set of "Not-a-Number" (NaN)

- tokens as legal floating number values. If None is set,

- it uses the default value, ``true``.

-

- * ``+INF``: for positive infinity, as well as alias of

- ``+Infinity`` and ``Infinity``.

- * ``-INF``: for negative infinity, alias ``-Infinity``.

- * ``NaN``: for other not-a-numbers, like result of division by zero.

- modifiedBefore : an optional timestamp to only include files with

- modification times occurring before the specified time. The provided timestamp

- must be in the following format: YYYY-MM-DDTHH:mm:ss (e.g. 2020-06-01T13:00:00)

- modifiedAfter : an optional timestamp to only include files with

- modification times occurring after the specified time. The provided timestamp

- must be in the following format: YYYY-MM-DDTHH:mm:ss (e.g. 2020-06-01T13:00:00)

+ Other Parameters

+ ----------------

+ Extra options

+ For the extra options, refer to

+ `Data Source Option <https://spark.apache.org/docs/latest/sql-data-sources-parquet.html#data-source-option>`_ # noqa

+ and

+ `Generic File Source Options <https://spark.apache.org/docs/latest/sql-data-sources-generic-options.html`>_ # noqa

+ in the version you use.

Review comment:

Shall we remove this too?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a change in pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

HyukjinKwon commented on a change in pull request #32204:

URL: https://github.com/apache/spark/pull/32204#discussion_r633025442

##########

File path: docs/sql-data-sources-json.md

##########

@@ -94,3 +94,146 @@ SELECT * FROM jsonTable

</div>

</div>

+

+## Data Source Option

+

+Data source options of JSON can be set via:

+* the `.option`/`.options` methods of `DataFrameReader` or `DataFrameWriter`

+* the `.option`/`.options` methods of `DataStreamReader` or `DataStreamWriter`

+

+<table class="table">

+ <tr><th><b>Property Name</b></th><th><b>Default</b></th><th><b>Meaning</b></th><th><b>Scope</b></th></tr>

+ <tr>

+ <td><code>primitivesAsString</code></td>

+ <td>None</td>

+ <td>infers all primitive values as a string type. If None is set, it uses the default value, <code>false</code>.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>prefersDecimal</code></td>

+ <td>None</td>

+ <td>infers all floating-point values as a decimal type. If the values do not fit in decimal, then it infers them as doubles. If None is set, it uses the default value, <code>false</code>.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>allowComments</code></td>

+ <td>None</td>

+ <td>ignores Java/C++ style comment in JSON records. If None is set, it uses the default value, <code>false</code></td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>allowUnquotedFieldNames</code></td>

+ <td>None</td>

+ <td>allows unquoted JSON field names. If None is set, it uses the default value, <code>false</code>.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>allowSingleQuotes</code></td>

+ <td>None</td>

+ <td>allows single quotes in addition to double quotes. If None is set, it uses the default value, <code>true</code>.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>allowNumericLeadingZero</code></td>

+ <td>None</td>

+ <td>allows leading zeros in numbers (e.g. 00012). If None is set, it uses the default value, <code>false</code>.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>allowBackslashEscapingAnyCharacter</code></td>

+ <td>None</td>

+ <td>allows accepting quoting of all character using backslash quoting mechanism. If None is set, it uses the default value, <code>false</code>.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>columnNameOfCorruptRecord</code></td>

+ <td>None</td>

+ <td>allows renaming the new field having malformed string created by <code>PERMISSIVE</code> mode. This overrides spark.sql.columnNameOfCorruptRecord. If None is set, it uses the value specified in <code>spark.sql.columnNameOfCorruptRecord</code>.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>dateFormat</code></td>

+ <td>None</td>

+ <td>sets the string that indicates a date format. Custom date formats follow the formats at <a href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html"> datetime pattern</a>. This applies to date type. If None is set, it uses the default value, <code>yyyy-MM-dd</code>.</td>

+ <td>read/write</td>

+ </tr>

+ <tr>

+ <td><code>timestampFormat</code></td>

+ <td>None</td>

+ <td>sets the string that indicates a timestamp format. Custom date formats follow the formats at <a href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html"> datetime pattern</a>. This applies to timestamp type. If None is set, it uses the default value, <code>yyyy-MM-dd'T'HH:mm:ss[.SSS][XXX]</code>.</td>

+ <td>read/write</td>

+ </tr>

+ <tr>

+ <td><code>multiLine</code></td>

+ <td>None</td>

+ <td>parse one record, which may span multiple lines, per file. If None is set, it uses the default value, <code>false</code>.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>allowUnquotedControlChars</code></td>

+ <td>None</td>

+ <td>allows JSON Strings to contain unquoted control characters (ASCII characters with value less than 32, including tab and line feed characters) or not.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>encoding</code></td>

+ <td>None</td>

+ <td>allows to forcibly set one of standard basic or extended encoding for the JSON files. For example UTF-16BE, UTF-32LE. If None is set, the encoding of input JSON will be detected automatically when the multiLine option is set to <code>true</code>.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>lineSep</code></td>

+ <td>None</td>

+ <td>defines the line separator that should be used for parsing. If None is set, it covers all <code>\r</code>, <code>\r\n</code> and <code>\n</code>.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>samplingRatio</code></td>

+ <td>None</td>

+ <td>defines fraction of input JSON objects used for schema inferring. If None is set, it uses the default value, <code>1.0</code>.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>dropFieldIfAllNull</code></td>

+ <td>None</td>

+ <td>whether to ignore column of all null values or empty array/struct during schema inference. If None is set, it uses the default value, <code>false</code>.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>locale</code></td>

+ <td>None</td>

+ <td>sets a locale as language tag in IETF BCP 47 format. If None is set, it uses the default value, <code>en-US</code>. For instance, <code>locale</code> is used while parsing dates and timestamps.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>allowNonNumericNumbers</code></td>

+ <td>None</td>

+ <td>allows JSON parser to recognize set of “Not-a-Number” (NaN) tokens as legal floating number values. If None is set, it uses the default value, <code>true</code>.<br>

+ <ul>

+ <li><code>+INF</code>: for positive infinity, as well as alias of <code>+Infinity</code> and <code>Infinity</code>.</li>

+ <li><code>-INF</code>: for negative infinity, alias <code>-Infinity</code>.</li>

+ <li><code>NaN</code>: for other not-a-numbers, like result of division by zero.</li>

+ </ul>

+ </td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>compression</code></td>

+ <td>None</td>

+ <td>compression codec to use when saving to file. This can be one of the known case-insensitive shorten names (none, bzip2, gzip, lz4, snappy and deflate).</td>

+ <td>write</td>

+ </tr>

+ <tr>

+ <td><code>encoding</code></td>

Review comment:

Can you combine with `encoding` option above?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a change in pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

HyukjinKwon commented on a change in pull request #32204:

URL: https://github.com/apache/spark/pull/32204#discussion_r634194606

##########

File path: sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

##########

@@ -443,20 +443,6 @@ class DataFrameReader private[sql](sparkSession: SparkSession) extends Logging {

*

* You can set the following JSON-specific options to deal with non-standard JSON files:

* <ul>

- * <li>`primitivesAsString` (default `false`): infers all primitive values as a string type</li>

- * <li>`prefersDecimal` (default `false`): infers all floating-point values as a decimal

- * type. If the values do not fit in decimal, then it infers them as doubles.</li>

- * <li>`allowComments` (default `false`): ignores Java/C++ style comment in JSON records</li>

- * <li>`allowUnquotedFieldNames` (default `false`): allows unquoted JSON field names</li>

- * <li>`allowSingleQuotes` (default `true`): allows single quotes in addition to double quotes

- * </li>

- * <li>`allowNumericLeadingZeros` (default `false`): allows leading zeros in numbers

- * (e.g. 00012)</li>

- * <li>`allowBackslashEscapingAnyCharacter` (default `false`): allows accepting quoting of all

- * character using backslash quoting mechanism</li>

- * <li>`allowUnquotedControlChars` (default `false`): allows JSON Strings to contain unquoted

- * control characters (ASCII characters with value less than 32, including tab and line feed

- * characters) or not.</li>

* <li>`mode` (default `PERMISSIVE`): allows a mode for dealing with corrupt records

Review comment:

mode in read path is an option for JSON and CSV. write mode (overwrite, etc.) isn't an option.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

AmplabJenkins commented on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-842429046

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA removed a comment on pull request #32204: [SPARK-34494] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

SparkQA removed a comment on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-821022487

**[Test build #137474 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/137474/testReport)** for PR 32204 at commit [`89d9be1`](https://github.com/apache/spark/commit/89d9be177539d307ef008c42c7245e90effec7e1).

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA commented on pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

SparkQA commented on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-841039764

Kubernetes integration test status failure

URL: https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder-K8s/43070/

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA commented on pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

SparkQA commented on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-843268988

**[Test build #138681 has finished](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138681/testReport)** for PR 32204 at commit [`3316616`](https://github.com/apache/spark/commit/3316616593f72196fc98b6dc13bb6e19110207ca).

* This patch passes all tests.

* This patch merges cleanly.

* This patch adds no public classes.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a change in pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

HyukjinKwon commented on a change in pull request #32204:

URL: https://github.com/apache/spark/pull/32204#discussion_r635793918

##########

File path: python/pyspark/sql/readwriter.py

##########

@@ -1204,31 +1055,13 @@ def json(self, path, mode=None, compression=None, dateFormat=None, timestampForm

* ``ignore``: Silently ignore this operation if data already exists.

* ``error`` or ``errorifexists`` (default case): Throw an exception if data already \

exists.

- compression : str, optional

- compression codec to use when saving to file. This can be one of the

- known case-insensitive shorten names (none, bzip2, gzip, lz4,

- snappy and deflate).

- dateFormat : str, optional

- sets the string that indicates a date format. Custom date formats

- follow the formats at

- `datetime pattern <https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html>`_. # noqa

- This applies to date type. If None is set, it uses the

- default value, ``yyyy-MM-dd``.

- timestampFormat : str, optional

- sets the string that indicates a timestamp format.

- Custom date formats follow the formats at

- `datetime pattern <https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html>`_. # noqa

- This applies to timestamp type. If None is set, it uses the

- default value, ``yyyy-MM-dd'T'HH:mm:ss[.SSS][XXX]``.

- encoding : str, optional

- specifies encoding (charset) of saved json files. If None is set,

- the default UTF-8 charset will be used.

- lineSep : str, optional

- defines the line separator that should be used for writing. If None is

- set, it uses the default value, ``\\n``.

- ignoreNullFields : str or bool, optional

- Whether to ignore null fields when generating JSON objects.

- If None is set, it uses the default value, ``true``.

+

+ Other Parameters

+ ----------------

+ Extra options

+ For the extra options, refer to

+ `Data Source Option <https://spark.apache.org/docs/latest/sql-data-sources-parquet.html#data-source-option>`_ # noqa

+ in the version you use.

Review comment:

Here you didn't link generic options but you did for Parquet. What's diff?

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on pull request #32204: [SPARK-34494] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

AmplabJenkins commented on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-821056642

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder-K8s/42050/

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] HyukjinKwon commented on a change in pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

HyukjinKwon commented on a change in pull request #32204:

URL: https://github.com/apache/spark/pull/32204#discussion_r638679043

##########

File path: docs/sql-data-sources-json.md

##########

@@ -94,3 +94,168 @@ SELECT * FROM jsonTable

</div>

</div>

+

+## Data Source Option

+

+Data source options of JSON can be set via:

+* the `.option`/`.options` methods of

+ * `DataFrameReader`

+ * `DataFrameWriter`

+ * `DataStreamReader`

+ * `DataStreamWriter`

+

+<table class="table">

+ <tr><th><b>Property Name</b></th><th><b>Default</b></th><th><b>Meaning</b></th><th><b>Scope</b></th></tr>

+ <tr>

+ <!-- TODO(SPARK-35433): Add timeZone to Data Source Option for CSV, too. -->

+ <td><code>timeZone</code></td>

+ <td>None</td>

+ <td>Sets the string that indicates a time zone ID to be used to format timestamps in the JSON datasources or partition values. The following formats of <code>timeZone</code> are supported:<br>

+ <ul>

+ <li>Region-based zone ID: It should have the form 'area/city', such as 'America/Los_Angeles'.</li>

+ <li>Zone offset: It should be in the format '(+|-)HH:mm', for example '-08:00' or '+01:00'. Also 'UTC' and 'Z' are supported as aliases of '+00:00'.</li>

+ </ul>

+ Other short names like 'CST' are not recommended to use because they can be ambiguous. If it isn't set, the current value of the SQL config <code>spark.sql.session.timeZone</code> is used by default.

+ </td>

+ <td>read/write</td>

+ </tr>

+ <tr>

+ <td><code>primitivesAsString</code></td>

+ <td>None</td>

+ <td>Infers all primitive values as a string type. If None is set, it uses the default value, <code>false</code>.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>prefersDecimal</code></td>

+ <td>None</td>

+ <td>Infers all floating-point values as a decimal type. If the values do not fit in decimal, then it infers them as doubles. If None is set, it uses the default value, <code>false</code>.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>allowComments</code></td>

+ <td>None</td>

+ <td>Ignores Java/C++ style comment in JSON records. If None is set, it uses the default value, <code>false</code></td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>allowUnquotedFieldNames</code></td>

+ <td>None</td>

+ <td>Allows unquoted JSON field names. If None is set, it uses the default value, <code>false</code>.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>allowSingleQuotes</code></td>

+ <td>None</td>

+ <td>Allows single quotes in addition to double quotes. If None is set, it uses the default value, <code>true</code>.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>allowNumericLeadingZero</code></td>

+ <td>None</td>

+ <td>Allows leading zeros in numbers (e.g. 00012). If None is set, it uses the default value, <code>false</code>.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>allowBackslashEscapingAnyCharacter</code></td>

+ <td>None</td>

+ <td>Allows accepting quoting of all character using backslash quoting mechanism. If None is set, it uses the default value, <code>false</code>.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>mode</code></td>

+ <td>None</td>

+ <td>Allows a mode for dealing with corrupt records during parsing. If None is set, it uses the default value, <code>PERMISSIVE</code><br>

+ <ul>

+ <li><code>PERMISSIVE</code>: when it meets a corrupted record, puts the malformed string into a field configured by <code>columnNameOfCorruptRecord</code>, and sets malformed fields to <code>null</code>. To keep corrupt records, an user can set a string type field named <code>columnNameOfCorruptRecord</code> in an user-defined schema. If a schema does not have the field, it drops corrupt records during parsing. When inferring a schema, it implicitly adds a <code>columnNameOfCorruptRecord</code> field in an output schema.</li>

+ <li><code>DROPMALFORMED</code>: ignores the whole corrupted records.</li>

+ <li><code>FAILFAST</code>: throws an exception when it meets corrupted records.</li>

+ </ul>

+ </td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>columnNameOfCorruptRecord</code></td>

+ <td>None</td>

+ <td>Allows renaming the new field having malformed string created by <code>PERMISSIVE</code> mode. This overrides spark.sql.columnNameOfCorruptRecord. If None is set, it uses the value specified in <code>spark.sql.columnNameOfCorruptRecord</code>.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>dateFormat</code></td>

+ <td>None</td>

+ <td>Sets the string that indicates a date format. Custom date formats follow the formats at <a href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html"> datetime pattern</a>. This applies to date type. If None is set, it uses the default value, <code>yyyy-MM-dd</code>.</td>

+ <td>read/write</td>

+ </tr>

+ <tr>

+ <td><code>timestampFormat</code></td>

+ <td>None</td>

+ <td>Sets the string that indicates a timestamp format. Custom date formats follow the formats at <a href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html"> datetime pattern</a>. This applies to timestamp type. If None is set, it uses the default value, <code>yyyy-MM-dd'T'HH:mm:ss[.SSS][XXX]</code>.</td>

+ <td>read/write</td>

+ </tr>

+ <tr>

+ <td><code>multiLine</code></td>

+ <td>None</td>

+ <td>Parse one record, which may span multiple lines, per file. If None is set, it uses the default value, <code>false</code>.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>allowUnquotedControlChars</code></td>

+ <td>None</td>

+ <td>Allows JSON Strings to contain unquoted control characters (ASCII characters with value less than 32, including tab and line feed characters) or not.</td>

+ <td>read</td>

+ </tr>

+ <tr>

+ <td><code>encoding</code></td>

+ <td>None</td>

+ <td>For reading, allows to forcibly set one of standard basic or extended encoding for the JSON files. For example UTF-16BE, UTF-32LE. If None is set, the encoding of input JSON will be detected automatically when the multiLine option is set to <code>true</code>. For writing, Specifies encoding (charset) of saved json files. If None is set, the default UTF-8 charset will be used.</td>

Review comment:

Also fix the docs properly from `None` to something else. That only applies to Python side.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA commented on pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

SparkQA commented on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-843120435

Kubernetes integration test status success

URL: https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder-K8s/43199/

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] itholic commented on pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

itholic commented on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-840913159

Thanks, @HyukjinKwon .

I checked one by one, and seems like the general options are already documentd to the https://spark.apache.org/docs/latest/sql-data-sources-generic-options.html. I think the parameters I removed are all of JSON specific options ??

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA commented on pull request #32204: [SPARK-34494] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

SparkQA commented on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-822161426

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA commented on pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

SparkQA commented on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-840318050

Kubernetes integration test status failure

URL: https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder-K8s/43012/

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AmplabJenkins removed a comment on pull request #32204: [SPARK-34494] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

AmplabJenkins removed a comment on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-821024116

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/137474/

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

AmplabJenkins commented on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-841159281

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/138551/

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA commented on pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

SparkQA commented on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-843131573

Kubernetes integration test status success

URL: https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder-K8s/43202/

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA removed a comment on pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

SparkQA removed a comment on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-842385801

**[Test build #138636 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138636/testReport)** for PR 32204 at commit [`6ea843a`](https://github.com/apache/spark/commit/6ea843ae13b7489b580dbffdc2ff66927c305c8b).

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] AmplabJenkins commented on pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

AmplabJenkins commented on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-842554341

Refer to this link for build results (access rights to CI server needed):

https://amplab.cs.berkeley.edu/jenkins//job/SparkPullRequestBuilder/138636/

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] [spark] SparkQA commented on pull request #32204: [SPARK-34494][SQL][DOCS] Move JSON data source options from Python and Scala into a single page

Posted by GitBox <gi...@apache.org>.

SparkQA commented on pull request #32204:

URL: https://github.com/apache/spark/pull/32204#issuecomment-843072997

**[Test build #138681 has started](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/138681/testReport)** for PR 32204 at commit [`3316616`](https://github.com/apache/spark/commit/3316616593f72196fc98b6dc13bb6e19110207ca).

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

---------------------------------------------------------------------