
Posted to commits@hudi.apache.org by "guysherman (via GitHub)" <gi...@apache.org> on 2023/03/15 03:41:38 UTC

[GitHub] [hudi] guysherman opened a new issue, #8189: [SUPPORT] Disproportionately Slow performance during "Building workload profile" phase

guysherman opened a new issue, #8189:

URL: https://github.com/apache/hudi/issues/8189


**Describe the problem you faced**

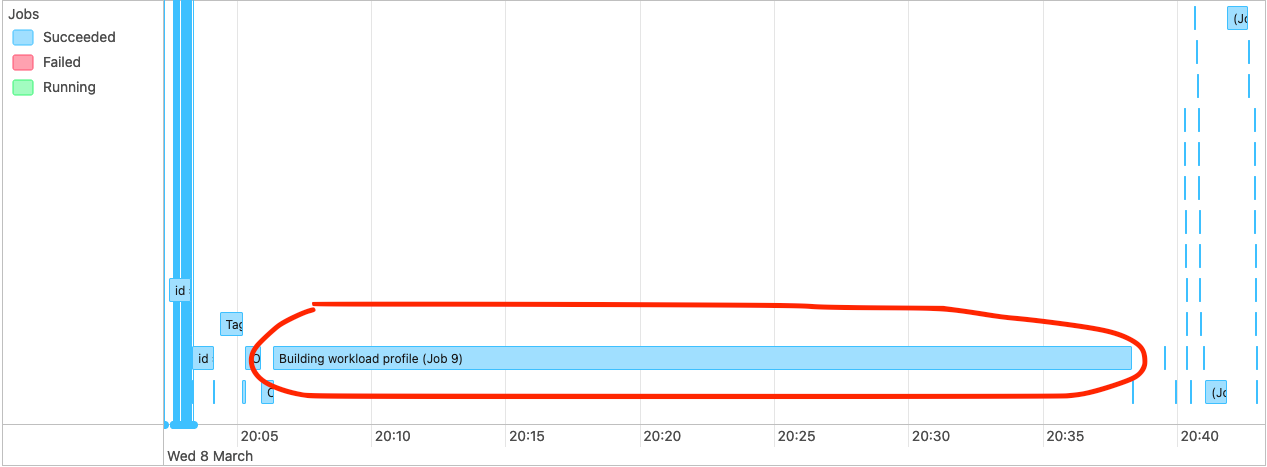

From time to time (roughly 1 in 8-20 Glue job runs) a Glue job takes between 2x and 5x longer than the nominal duration for the workload in question. Analysing the Spark UI logs, we see a very long `Building workload profile` job.

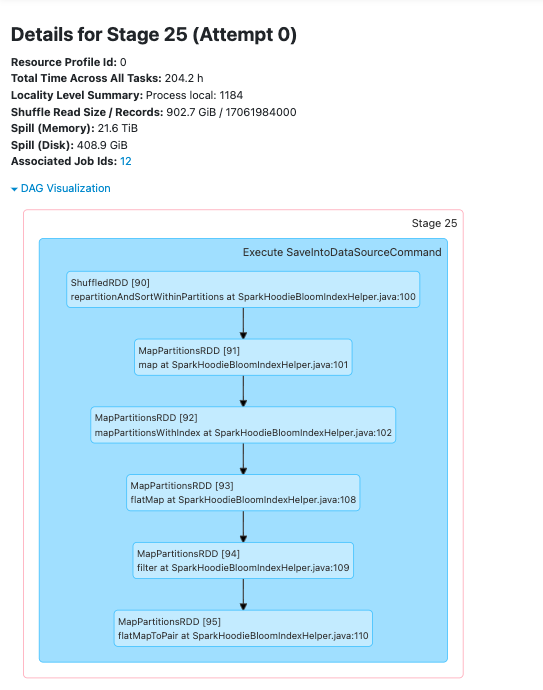

Drilling into that job we see that the longest task in that job always resolves to the following stack trace:

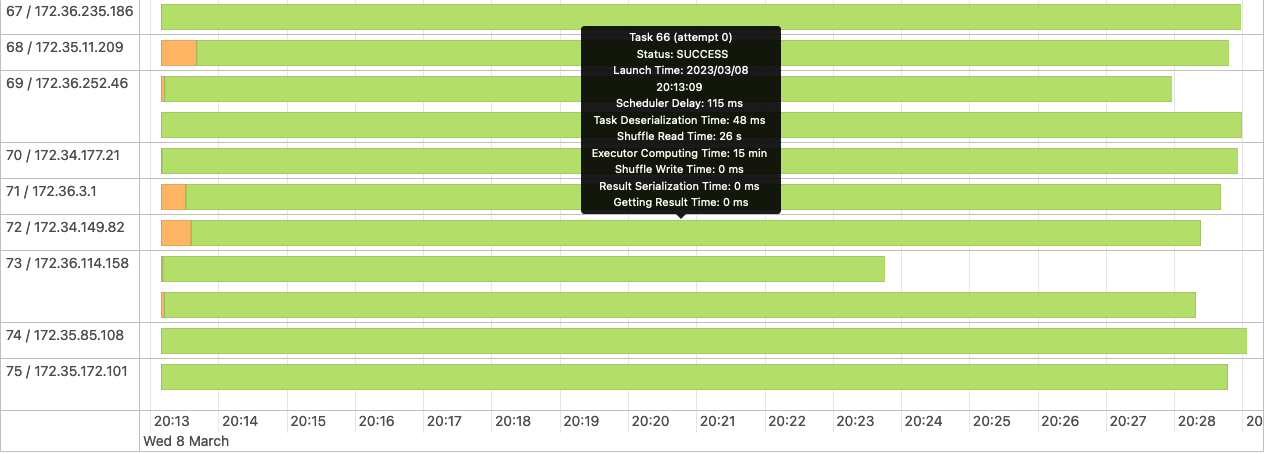

We also see quite high shuffling relative to the overall dataset size in this job when we have these slow glue jobs, although not much of the actual time spent is categorised as shuffling.

My understanding is that this code is trying to determine which files must be written to, and because we use a bloom index it does this by interrogating the bloom indices in the partition, and then checking candidate files. We are using bucketized checking.
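To make that understanding concrete, here is a minimal toy sketch of a bloom-style lookup: each file carries a key range and a bloom filter, and an incoming key is only checked against files whose range covers it. This is not Hudi's implementation; `ToyBloom`, `ToyFile`, `build_file` and `candidate_files` are hypothetical names invented for illustration, and the filter size and hash count are arbitrary.

```python
import hashlib
from dataclasses import dataclass, field


class ToyBloom:
    """A tiny bloom filter backed by a Python int used as a bit set."""

    def __init__(self, num_bits=1024, num_hashes=3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = 0

    def _positions(self, key):
        # Derive num_hashes bit positions from salted SHA-256 digests.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, key):
        for pos in self._positions(key):
            self.bits |= 1 << pos

    def might_contain(self, key):
        # False means "definitely absent"; True means "possibly present".
        return all((self.bits >> pos) & 1 for pos in self._positions(key))


@dataclass
class ToyFile:
    name: str
    min_key: str
    max_key: str
    bloom: ToyBloom = field(default_factory=ToyBloom)


def build_file(name, keys):
    """Create a toy file whose range and bloom filter cover the given keys."""
    toy = ToyFile(name, min(keys), max(keys))
    for key in keys:
        toy.bloom.add(key)
    return toy


def candidate_files(key, files):
    """Prune by key range first, then consult the bloom filter."""
    return [
        f.name
        for f in files
        if f.min_key <= key <= f.max_key and f.bloom.might_contain(key)
    ]
```

In this toy model, the more files whose key ranges overlap an incoming key, the more bloom filters (and potentially files) have to be consulted for it, which is one plausible way input ordering or file layout could affect this phase.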

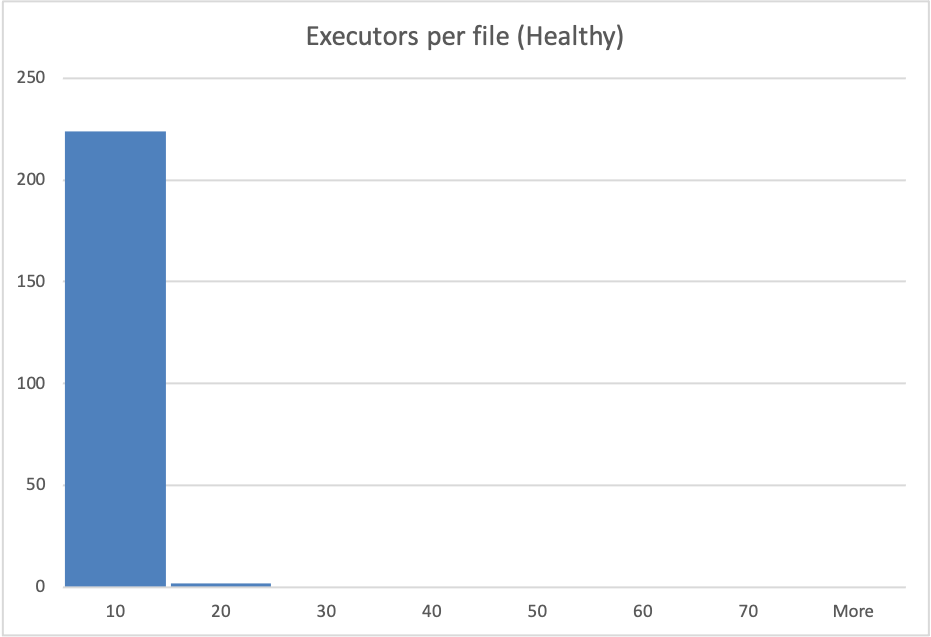

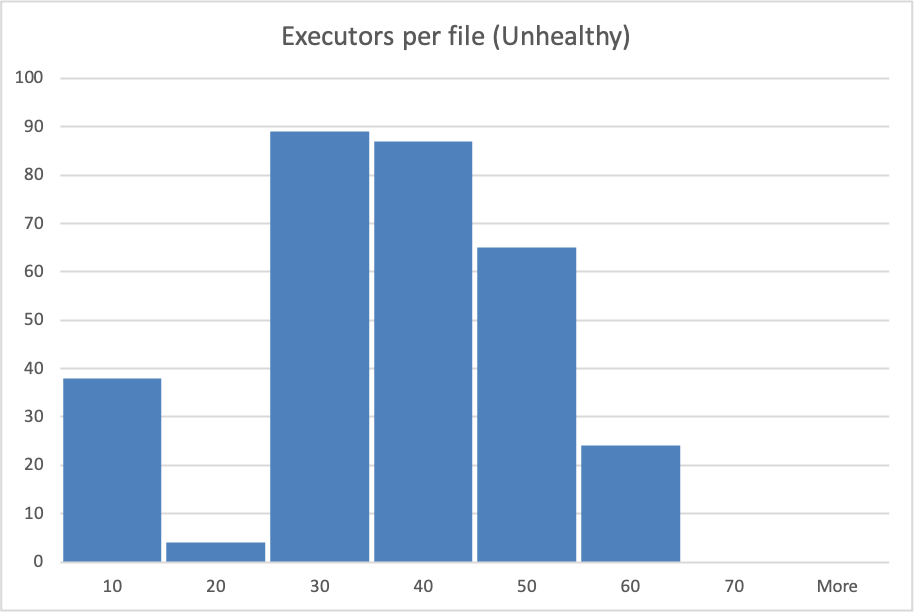

I analyzed S3 access logs during these `Building workload profile` jobs, looking at the number of accesses to each file, and found that in the slow jobs files are typically accessed many more times, and from more remote IP addresses, than in the fast jobs. This particular Hudi table is produced for a performance test and is never queried, so I am confident that S3 access during this period is only due to this Hudi job.

NB: the histogram buckets are coarse, in the healthy case the "10" bucket is predominantly a value of 4 executors per file.
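For anyone wanting to repeat this kind of analysis, the following is a rough sketch of counting distinct remote IPs per object key. It assumes the standard S3 server access log layout (bucket owner, bucket, bracketed timestamp, remote IP, requester, request ID, operation, key, ...); the function name and the `REST.GET` filter are illustrative choices, not the script used for the charts above.

```python
import re
from collections import defaultdict

# Assumed field order of an S3 server access log line:
# owner bucket [time] remote_ip requester request_id operation key ...
LOG_LINE = re.compile(
    r"^\S+ \S+ \[[^\]]+\] (?P<ip>\S+) \S+ \S+ (?P<op>\S+) (?P<key>\S+) "
)


def distinct_ips_per_key(lines, operation_prefix="REST.GET"):
    """Return {object key: number of distinct remote IPs that read it}."""
    ips = defaultdict(set)
    for line in lines:
        match = LOG_LINE.match(line)
        # Skip lines that do not parse or are not read operations.
        if match and match.group("op").startswith(operation_prefix):
            ips[match.group("key")].add(match.group("ip"))
    return {key: len(addresses) for key, addresses in ips.items()}
```

Bucketing the resulting counts into a histogram per job run gives a view comparable to the one described above.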

See the repro steps below for how the dataset is produced, but as far as I can tell, the only real difference from one glue job run to the next is the ordering of records in the kinesis streams that feed the glue job.

**To Reproduce**

Steps to reproduce the behavior:

Our data-flow is roughly Lambda -> Kinesis Stream -> Periodic Glue Job -> S3

Over a 2 hour period we generate inputs to the Lambdas that result in ~3.5B records in the datalake. This data is distributed across 14 partitions, with a gaussian-like distribution, such that "Partition 0" (which has no data at the start of the 2 hour period) gets ~90% of the records and Partition 13 gets 0.05% of the records. Partitions 1-13 are from previous runs of the test and so each have ~3-3.5B records in them already.

Record keys are structured such that they sort with time (the first field in the record key is an epochMillis timestamp), thus records in the same partition should be sortable into an approximately monotonically increasing order.

A typical run of the Glue job will handle ~8-10% of the data at a time, but because of the way the data is created each batch processed by Glue has roughly the same distribution.

Approximately 1 in 15 Glue Job runs will result in a very long `Building workload profile` job within spark.

**Expected behavior**

Given relatively consistent quantities of data, distribution of data across partitions and partition sizes we would expect relatively consistent Glue Job run durations.

**Environment Description**

* Hudi version : 0.11.0

* Spark version : 3.1.2

* Hive version : 2.3.1

* Hadoop version : 2.7 I think? We're using the hudi 0.11 spark 3.1.x bundle, on AWS Glue 3.

* Storage (HDFS/S3/GCS..) : S3

* Running on Docker? (yes/no) : No


**Stacktrace**

Not an error per se. The stack trace is in the screenshot above.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] guysherman commented on issue #8189: [SUPPORT] Disproportionately Slow performance during "Building workload profile" phase

Posted by "guysherman (via GitHub)" <gi...@apache.org>.

guysherman commented on issue #8189:

URL: https://github.com/apache/hudi/issues/8189#issuecomment-1471555541

Just to add... _most_ of the time the performance is fine, and then occasionally it is really bad, so I'm mainly wondering if there are characteristics of the input data, or of the existing parquet files in the partition that are expected to make the performance worse, and whether we can do anything to avoid those characteristics, eg pre-sorting, etc.


[GitHub] [hudi] parisni commented on issue #8189: [SUPPORT] Disproportionately Slow performance during "Building workload profile" phase

Posted by "parisni (via GitHub)" <gi...@apache.org>.

parisni commented on issue #8189:

URL: https://github.com/apache/hudi/issues/8189#issuecomment-1476178205

@guysherman we have the same symptoms on a table (hudi 12.2; I didn't check the S3 access logs though), but on 100% of our upserts. The problem appeared one day, and now the same pipeline finishes in a few hours (instead of 10 minutes in the past). We are investigating the reason. Did you try increasing the memory? We have spotted a lot of `spilling in-memory map of 3.1 GiB to disk` logs on the executors while running "Building workload profile". One hypothesis is that we passed a certain memory threshold beyond which the bloom computation spills to disk.


[GitHub] [hudi] tshen-PayPay commented on issue #8189: [SUPPORT] Disproportionately Slow performance during "Building workload profile" phase

Posted by "tshen-PayPay (via GitHub)" <gi...@apache.org>.

tshen-PayPay commented on issue #8189:

URL: https://github.com/apache/hudi/issues/8189#issuecomment-1722217604

> I've seen this slowness in my hudi jobs, and it was usually related to s3 timeouts. If I remember correctly, disabling the metadata table helped.

Hi @kazdy, excuse me, which metadata table are you referring to? And could you please explain how to disable it?


[GitHub] [hudi] guysherman commented on issue #8189: [SUPPORT] Disproportionately Slow performance during "Building workload profile" phase

Posted by "guysherman (via GitHub)" <gi...@apache.org>.

guysherman commented on issue #8189:

URL: https://github.com/apache/hudi/issues/8189#issuecomment-1471553251

Hey, it might take me a little while to get back to you on this... I'll have to set up an alternate instance of our test infrastructure to run it against a custom build of our glue job with the latest Hudi... I'm not in a position to just update hudi and merge it into our mainline, we tend to need to validate new versions of hudi quite closely.


[GitHub] [hudi] lokeshj1703 commented on issue #8189: [SUPPORT] Disproportionately Slow performance during "Building workload profile" phase

Posted by "lokeshj1703 (via GitHub)" <gi...@apache.org>.

lokeshj1703 commented on issue #8189:

URL: https://github.com/apache/hudi/issues/8189#issuecomment-1469557058

@guysherman Can you try running the glue jobs with a recent version of Hudi? It is possible that the performance could have improved in the recent versions of HUDI.


[GitHub] [hudi] kazdy commented on issue #8189: [SUPPORT] Disproportionately Slow performance during "Building workload profile" phase

Posted by "kazdy (via GitHub)" <gi...@apache.org>.

kazdy commented on issue #8189:

URL: https://github.com/apache/hudi/issues/8189#issuecomment-1490703261

I've seen this slowness in my hudi jobs, and it was usually related to s3 timeouts. If I remember correctly, disabling the metadata table helped.
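For reference, a hedged sketch of how the metadata table is usually switched off on the write path: Hudi exposes a `hoodie.metadata.enable` write option, and setting it to `false` disables the internal metadata table. The helper name and the base options below are hypothetical, and defaults and side effects differ between Hudi versions, so check the configuration reference for the version in use.

```python
def without_metadata_table(base_options):
    """Return a copy of the Hudi write options with the metadata table disabled."""
    options = dict(base_options)
    # Real Hudi option key; the value is passed as a string like other writer options.
    options["hoodie.metadata.enable"] = "false"
    return options


# Hypothetical base options for an upsert; the table name is a placeholder.
base = {
    "hoodie.table.name": "example_table",
    "hoodie.datasource.write.operation": "upsert",
}
hudi_options = without_metadata_table(base)

# With PySpark the options would then be passed to the writer, for example:
# df.write.format("hudi").options(**hudi_options).mode("append").save(table_path)
```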


Re: [I] [SUPPORT] Disproportionately Slow performance during "Building workload profile" phase [hudi]

Posted by "zyclove (via GitHub)" <gi...@apache.org>.

zyclove commented on issue #8189:

URL: https://github.com/apache/hudi/issues/8189#issuecomment-1815649576

What do these two stages do? It keeps getting stuck, which is very time-consuming. Can you give me some optimization suggestions? Thank you.

hudi 0.14.0

spark 3.2.1

@nsivabalan @codope
