You are viewing a plain text version of this content. The canonical link for it is here.

Posted to commits@hudi.apache.org by GitBox <gi...@apache.org> on 2022/06/01 21:38:46 UTC

[GitHub] [hudi] alexeykudinkin opened a new pull request, #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

alexeykudinkin opened a new pull request, #5733:

URL: https://github.com/apache/hudi/pull/5733

## *Tips*

- *Thank you very much for contributing to Apache Hudi.*

- *Please review https://hudi.apache.org/contribute/how-to-contribute before opening a pull request.*

## What is the purpose of the pull request

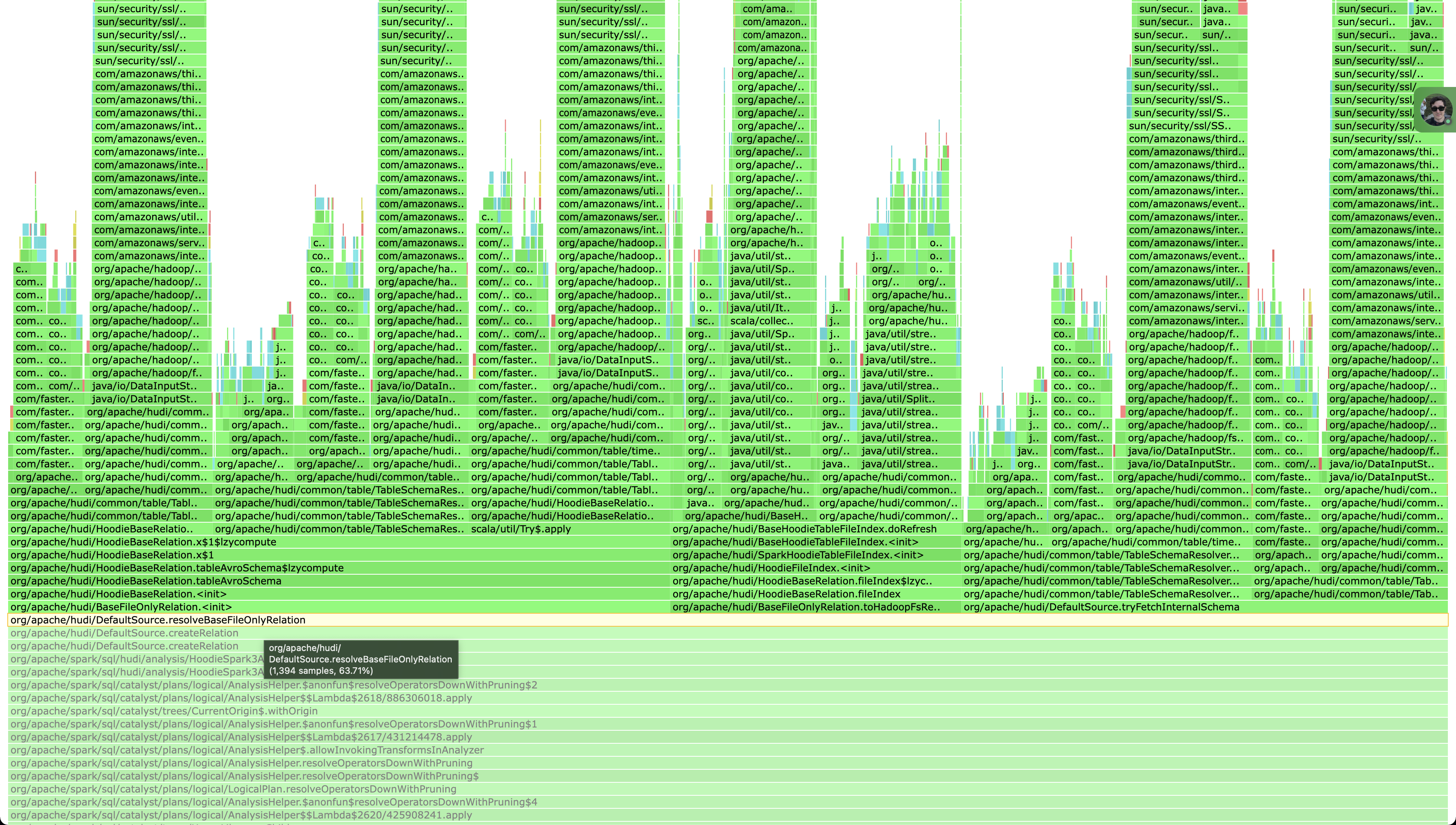

As has been outlined in HUDI-4176, we've hit a roadblock while testing Hudi on a large dataset (~1Tb) having pretty fat commits where Hudi's commit metadata could reach into 100s of Mbs.

As could be seen from the screenshot in such cases current implementation of `TableSchemaResolver` is doing a lot of repeated throw-away computations reading `HoodieCommitMetadata` (during init, fetching table's schema, fetching table's internal schema, etc).

Given the size some of ours commit metadata instances Spark's parsing and resolving phase (when `spark.sql(...)` is involved, but before returned `Dataset` is dereferenced) starts to dominate some of our queries' execution time.

## Brief change log

- Rebased onto new APIs to avoid excessive Hadoop's Path allocations

- Eliminated `hasOperationField` completely to avoid repeatitive computations

- Cleaning up duplication in `HoodieActiveTimeline`

- Added caching for common instances of `HoodieCommitMetadata`

- Made `tableStructSchema` lazy;

## Verify this pull request

This pull request is already covered by existing tests, such as *(please describe tests)*.

## Committer checklist

- [ ] Has a corresponding JIRA in PR title & commit

- [ ] Commit message is descriptive of the change

- [ ] CI is green

- [ ] Necessary doc changes done or have another open PR

- [ ] For large changes, please consider breaking it into sub-tasks under an umbrella JIRA.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

hudi-bot commented on PR #5733:

URL: https://github.com/apache/hudi/pull/5733#issuecomment-1146532508

<!--

Meta data

{

"version" : 1,

"metaDataEntries" : [ {

"hash" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"status" : "DELETED",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9041",

"triggerID" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"triggerType" : "PUSH"

}, {

"hash" : "e6173f00c290e9e0bb55e4c7d8092f5eb26871ae",

"status" : "DELETED",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9066",

"triggerID" : "e6173f00c290e9e0bb55e4c7d8092f5eb26871ae",

"triggerType" : "PUSH"

}, {

"hash" : "bb436a73c4bd66a5a90467475710851b598d2ae9",

"status" : "FAILURE",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9070",

"triggerID" : "bb436a73c4bd66a5a90467475710851b598d2ae9",

"triggerType" : "PUSH"

} ]

}-->

## CI report:

* bb436a73c4bd66a5a90467475710851b598d2ae9 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9070)

<details>

<summary>Bot commands</summary>

@hudi-bot supports the following commands:

- `@hudi-bot run azure` re-run the last Azure build

</details>

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

hudi-bot commented on PR #5733:

URL: https://github.com/apache/hudi/pull/5733#issuecomment-1147356282

<!--

Meta data

{

"version" : 1,

"metaDataEntries" : [ {

"hash" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"status" : "DELETED",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9041",

"triggerID" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"triggerType" : "PUSH"

}, {

"hash" : "e6173f00c290e9e0bb55e4c7d8092f5eb26871ae",

"status" : "DELETED",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9066",

"triggerID" : "e6173f00c290e9e0bb55e4c7d8092f5eb26871ae",

"triggerType" : "PUSH"

}, {

"hash" : "bb436a73c4bd66a5a90467475710851b598d2ae9",

"status" : "FAILURE",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9070",

"triggerID" : "bb436a73c4bd66a5a90467475710851b598d2ae9",

"triggerType" : "PUSH"

}, {

"hash" : "241b4a0a69e8ab13dbb1b6a2c82b06dfee877e41",

"status" : "PENDING",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9101",

"triggerID" : "241b4a0a69e8ab13dbb1b6a2c82b06dfee877e41",

"triggerType" : "PUSH"

} ]

}-->

## CI report:

* bb436a73c4bd66a5a90467475710851b598d2ae9 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9070)

* 241b4a0a69e8ab13dbb1b6a2c82b06dfee877e41 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9101)

<details>

<summary>Bot commands</summary>

@hudi-bot supports the following commands:

- `@hudi-bot run azure` re-run the last Azure build

</details>

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] alexeykudinkin commented on a diff in pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

alexeykudinkin commented on code in PR #5733:

URL: https://github.com/apache/hudi/pull/5733#discussion_r889403945

##########

hudi-common/src/main/java/org/apache/hudi/common/table/TableSchemaResolver.java:

##########

@@ -626,4 +499,128 @@ public Option<String> getTableHistorySchemaStrFromCommitMetadata() {

String result = manager.getHistorySchemaStr();

return result.isEmpty() ? Option.empty() : Option.of(result);

}

+

+ /**

+ * NOTE: This method could only be used in tests

+ *

+ * @VisibleForTesting

+ */

+ public boolean hasOperationField() {

Review Comment:

Method did not change

##########

hudi-common/src/main/java/org/apache/hudi/common/table/timeline/HoodieActiveTimeline.java:

##########

@@ -267,43 +265,47 @@ public Option<byte[]> getInstantDetails(HoodieInstant instant) {

}

/**

- * Get the last instant with valid schema, and convert this to HoodieCommitMetadata

+ * Returns most recent instant having valid schema in its {@link HoodieCommitMetadata}

*/

public Option<Pair<HoodieInstant, HoodieCommitMetadata>> getLastCommitMetadataWithValidSchema() {

- List<HoodieInstant> completed = getCommitsTimeline().filterCompletedInstants().getInstants()

- .sorted(Comparator.comparing(HoodieInstant::getTimestamp).reversed()).collect(Collectors.toList());

- for (HoodieInstant instant : completed) {

- try {

- HoodieCommitMetadata commitMetadata = HoodieCommitMetadata.fromBytes(

- getInstantDetails(instant).get(), HoodieCommitMetadata.class);

- if (!StringUtils.isNullOrEmpty(commitMetadata.getMetadata(HoodieCommitMetadata.SCHEMA_KEY))) {

- return Option.of(Pair.of(instant, commitMetadata));

- }

- } catch (IOException e) {

- LOG.warn("Failed to convert instant to HoodieCommitMetadata: " + instant.toString());

- }

- }

- return Option.empty();

+ return Option.fromJavaOptional(

+ getCommitMetadataStream()

+ .filter(instantCommitMetadataPair ->

+ !StringUtils.isNullOrEmpty(instantCommitMetadataPair.getValue().getMetadata(HoodieCommitMetadata.SCHEMA_KEY)))

+ .findFirst()

+ );

}

/**

* Get the last instant with valid data, and convert this to HoodieCommitMetadata

*/

public Option<Pair<HoodieInstant, HoodieCommitMetadata>> getLastCommitMetadataWithValidData() {

- List<HoodieInstant> completed = getCommitsTimeline().filterCompletedInstants().getInstants()

- .sorted(Comparator.comparing(HoodieInstant::getTimestamp).reversed()).collect(Collectors.toList());

- for (HoodieInstant instant : completed) {

- try {

- HoodieCommitMetadata commitMetadata = HoodieCommitMetadata.fromBytes(

- getInstantDetails(instant).get(), HoodieCommitMetadata.class);

- if (!commitMetadata.getFileIdAndRelativePaths().isEmpty()) {

- return Option.of(Pair.of(instant, commitMetadata));

- }

- } catch (IOException e) {

- LOG.warn("Failed to convert instant to HoodieCommitMetadata: " + instant.toString());

- }

- }

- return Option.empty();

+ return Option.fromJavaOptional(

+ getCommitMetadataStream()

+ .filter(instantCommitMetadataPair ->

+ !instantCommitMetadataPair.getValue().getFileIdAndRelativePaths().isEmpty())

+ .findFirst()

+ );

+ }

+

+ /**

+ * Returns stream of {@link HoodieCommitMetadata} in order reverse to chronological (ie most

+ * recent metadata being the first element)

+ */

+ private Stream<Pair<HoodieInstant, HoodieCommitMetadata>> getCommitMetadataStream() {

+ // NOTE: Streams are lazy

Review Comment:

Yes, streams are lazy therefore it will only compute whole chain only for it to get a single object

##########

hudi-common/src/main/java/org/apache/hudi/common/table/TableSchemaResolver.java:

##########

@@ -626,4 +499,128 @@ public Option<String> getTableHistorySchemaStrFromCommitMetadata() {

String result = manager.getHistorySchemaStr();

return result.isEmpty() ? Option.empty() : Option.of(result);

}

+

+ /**

+ * NOTE: This method could only be used in tests

+ *

+ * @VisibleForTesting

+ */

+ public boolean hasOperationField() {

+ try {

+ Schema tableAvroSchema = getTableAvroSchemaFromDataFile();

+ return tableAvroSchema.getField(HoodieRecord.OPERATION_METADATA_FIELD) != null;

+ } catch (Exception e) {

+ LOG.info(String.format("Failed to read operation field from avro schema (%s)", e.getMessage()));

+ return false;

+ }

+ }

+

+ private Option<Pair<HoodieInstant, HoodieCommitMetadata>> getLatestCommitMetadataWithValidSchema() {

+ if (latestCommitWithValidSchema == null) {

+ Option<Pair<HoodieInstant, HoodieCommitMetadata>> instantAndCommitMetadata =

+ metaClient.getActiveTimeline().getLastCommitMetadataWithValidSchema();

+ if (instantAndCommitMetadata.isPresent()) {

+ HoodieInstant instant = instantAndCommitMetadata.get().getLeft();

+ HoodieCommitMetadata metadata = instantAndCommitMetadata.get().getRight();

+ synchronized (this) {

+ if (latestCommitWithValidSchema == null) {

+ latestCommitWithValidSchema = instant;

+ }

+ commitMetadataCache.get().putIfAbsent(instant, metadata);

+ }

+ }

+ }

+

+ return Option.ofNullable(latestCommitWithValidSchema)

+ .map(instant -> Pair.of(instant, commitMetadataCache.get().get(instant)));

+ }

+

+ private Option<Pair<HoodieInstant, HoodieCommitMetadata>> getLatestCommitMetadataWithValidData() {

+ if (latestCommitWithValidData == null) {

+ Option<Pair<HoodieInstant, HoodieCommitMetadata>> instantAndCommitMetadata =

+ metaClient.getActiveTimeline().getLastCommitMetadataWithValidData();

+ if (instantAndCommitMetadata.isPresent()) {

+ HoodieInstant instant = instantAndCommitMetadata.get().getLeft();

+ HoodieCommitMetadata metadata = instantAndCommitMetadata.get().getRight();

+ synchronized (this) {

+ if (latestCommitWithValidData == null) {

+ latestCommitWithValidData = instant;

+ }

+ commitMetadataCache.get().putIfAbsent(instant, metadata);

+ }

+ }

+ }

+

+ return Option.ofNullable(latestCommitWithValidData)

+ .map(instant -> Pair.of(instant, commitMetadataCache.get().get(instant)));

+ }

+

+ private HoodieCommitMetadata getCachedCommitMetadata(HoodieInstant instant) {

+ return commitMetadataCache.get()

+ .computeIfAbsent(instant, (missingInstant) -> {

+ HoodieTimeline timeline = metaClient.getActiveTimeline().getCommitsTimeline().filterCompletedInstants();

+ byte[] data = timeline.getInstantDetails(missingInstant).get();

+ try {

+ return HoodieCommitMetadata.fromBytes(data, HoodieCommitMetadata.class);

+ } catch (IOException e) {

+ throw new HoodieIOException(String.format("Failed to fetch HoodieCommitMetadata for instant (%s)", missingInstant), e);

+ }

+ });

+ }

+

+ private MessageType fetchSchemaFromFiles(Iterator<String> filePaths) throws IOException {

+ MessageType type = null;

+ while (filePaths.hasNext() && type == null) {

+ String filePath = filePaths.next();

+ if (filePath.contains(HoodieFileFormat.HOODIE_LOG.getFileExtension())) {

+ // this is a log file

+ type = readSchemaFromLogFile(new Path(filePath));

+ } else {

+ type = readSchemaFromBaseFile(filePath);

+ }

+ }

+ return type;

+ }

+

+ private MessageType readSchemaFromBaseFile(String filePath) throws IOException {

Review Comment:

Method did not change

##########

hudi-common/src/main/java/org/apache/hudi/common/table/TableSchemaResolver.java:

##########

@@ -626,4 +499,128 @@ public Option<String> getTableHistorySchemaStrFromCommitMetadata() {

String result = manager.getHistorySchemaStr();

return result.isEmpty() ? Option.empty() : Option.of(result);

}

+

+ /**

+ * NOTE: This method could only be used in tests

+ *

+ * @VisibleForTesting

+ */

+ public boolean hasOperationField() {

+ try {

+ Schema tableAvroSchema = getTableAvroSchemaFromDataFile();

+ return tableAvroSchema.getField(HoodieRecord.OPERATION_METADATA_FIELD) != null;

+ } catch (Exception e) {

+ LOG.info(String.format("Failed to read operation field from avro schema (%s)", e.getMessage()));

+ return false;

+ }

+ }

+

+ private Option<Pair<HoodieInstant, HoodieCommitMetadata>> getLatestCommitMetadataWithValidSchema() {

+ if (latestCommitWithValidSchema == null) {

+ Option<Pair<HoodieInstant, HoodieCommitMetadata>> instantAndCommitMetadata =

+ metaClient.getActiveTimeline().getLastCommitMetadataWithValidSchema();

+ if (instantAndCommitMetadata.isPresent()) {

+ HoodieInstant instant = instantAndCommitMetadata.get().getLeft();

+ HoodieCommitMetadata metadata = instantAndCommitMetadata.get().getRight();

+ synchronized (this) {

+ if (latestCommitWithValidSchema == null) {

+ latestCommitWithValidSchema = instant;

+ }

+ commitMetadataCache.get().putIfAbsent(instant, metadata);

+ }

+ }

+ }

+

+ return Option.ofNullable(latestCommitWithValidSchema)

+ .map(instant -> Pair.of(instant, commitMetadataCache.get().get(instant)));

+ }

+

+ private Option<Pair<HoodieInstant, HoodieCommitMetadata>> getLatestCommitMetadataWithValidData() {

+ if (latestCommitWithValidData == null) {

+ Option<Pair<HoodieInstant, HoodieCommitMetadata>> instantAndCommitMetadata =

+ metaClient.getActiveTimeline().getLastCommitMetadataWithValidData();

+ if (instantAndCommitMetadata.isPresent()) {

+ HoodieInstant instant = instantAndCommitMetadata.get().getLeft();

+ HoodieCommitMetadata metadata = instantAndCommitMetadata.get().getRight();

+ synchronized (this) {

+ if (latestCommitWithValidData == null) {

+ latestCommitWithValidData = instant;

+ }

+ commitMetadataCache.get().putIfAbsent(instant, metadata);

+ }

+ }

+ }

+

+ return Option.ofNullable(latestCommitWithValidData)

+ .map(instant -> Pair.of(instant, commitMetadataCache.get().get(instant)));

+ }

+

+ private HoodieCommitMetadata getCachedCommitMetadata(HoodieInstant instant) {

+ return commitMetadataCache.get()

+ .computeIfAbsent(instant, (missingInstant) -> {

+ HoodieTimeline timeline = metaClient.getActiveTimeline().getCommitsTimeline().filterCompletedInstants();

+ byte[] data = timeline.getInstantDetails(missingInstant).get();

+ try {

+ return HoodieCommitMetadata.fromBytes(data, HoodieCommitMetadata.class);

+ } catch (IOException e) {

+ throw new HoodieIOException(String.format("Failed to fetch HoodieCommitMetadata for instant (%s)", missingInstant), e);

+ }

+ });

+ }

+

+ private MessageType fetchSchemaFromFiles(Iterator<String> filePaths) throws IOException {

Review Comment:

Method did not change

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] alexeykudinkin commented on a diff in pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

alexeykudinkin commented on code in PR #5733:

URL: https://github.com/apache/hudi/pull/5733#discussion_r889423624

##########

hudi-spark-datasource/hudi-spark-common/src/main/java/org/apache/hudi/internal/BulkInsertDataInternalWriterHelper.java:

##########

@@ -128,7 +133,11 @@ public void write(InternalRow record) throws IOException {

if (!keyGeneratorOpt.isPresent()) { // NoPartitionerKeyGen

partitionPath = "";

} else if (simpleKeyGen) { // SimpleKeyGen

- partitionPath = (record.get(simplePartitionFieldIndex, simplePartitionFieldDataType)).toString();

+ Object parititionPathValue = record.get(simplePartitionFieldIndex, simplePartitionFieldDataType);

Review Comment:

Yes, will rebase before landing

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

hudi-bot commented on PR #5733:

URL: https://github.com/apache/hudi/pull/5733#issuecomment-1147601463

<!--

Meta data

{

"version" : 1,

"metaDataEntries" : [ {

"hash" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"status" : "DELETED",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9041",

"triggerID" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"triggerType" : "PUSH"

}, {

"hash" : "e6173f00c290e9e0bb55e4c7d8092f5eb26871ae",

"status" : "DELETED",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9066",

"triggerID" : "e6173f00c290e9e0bb55e4c7d8092f5eb26871ae",

"triggerType" : "PUSH"

}, {

"hash" : "bb436a73c4bd66a5a90467475710851b598d2ae9",

"status" : "DELETED",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9070",

"triggerID" : "bb436a73c4bd66a5a90467475710851b598d2ae9",

"triggerType" : "PUSH"

}, {

"hash" : "241b4a0a69e8ab13dbb1b6a2c82b06dfee877e41",

"status" : "SUCCESS",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9101",

"triggerID" : "241b4a0a69e8ab13dbb1b6a2c82b06dfee877e41",

"triggerType" : "PUSH"

} ]

}-->

## CI report:

* 241b4a0a69e8ab13dbb1b6a2c82b06dfee877e41 Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9101)

<details>

<summary>Bot commands</summary>

@hudi-bot supports the following commands:

- `@hudi-bot run azure` re-run the last Azure build

</details>

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

hudi-bot commented on PR #5733:

URL: https://github.com/apache/hudi/pull/5733#issuecomment-1146480673

<!--

Meta data

{

"version" : 1,

"metaDataEntries" : [ {

"hash" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"status" : "DELETED",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9041",

"triggerID" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"triggerType" : "PUSH"

}, {

"hash" : "e6173f00c290e9e0bb55e4c7d8092f5eb26871ae",

"status" : "SUCCESS",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9066",

"triggerID" : "e6173f00c290e9e0bb55e4c7d8092f5eb26871ae",

"triggerType" : "PUSH"

} ]

}-->

## CI report:

* e6173f00c290e9e0bb55e4c7d8092f5eb26871ae Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9066)

<details>

<summary>Bot commands</summary>

@hudi-bot supports the following commands:

- `@hudi-bot run azure` re-run the last Azure build

</details>

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

hudi-bot commented on PR #5733:

URL: https://github.com/apache/hudi/pull/5733#issuecomment-1144238889

<!--

Meta data

{

"version" : 1,

"metaDataEntries" : [ {

"hash" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"status" : "FAILURE",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9041",

"triggerID" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"triggerType" : "PUSH"

} ]

}-->

## CI report:

* 1e269381cb33f0f92be0749eeea66c3368fc225e Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9041)

<details>

<summary>Bot commands</summary>

@hudi-bot supports the following commands:

- `@hudi-bot run azure` re-run the last Azure build

</details>

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] nsivabalan commented on a diff in pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on code in PR #5733:

URL: https://github.com/apache/hudi/pull/5733#discussion_r887340160

##########

hudi-common/src/main/java/org/apache/hudi/common/table/TableSchemaResolver.java:

##########

@@ -58,100 +60,46 @@

import org.apache.parquet.hadoop.metadata.ParquetMetadata;

import org.apache.parquet.schema.MessageType;

+import javax.annotation.concurrent.ThreadSafe;

import java.io.IOException;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.Iterator;

import java.util.List;

+import java.util.concurrent.ConcurrentHashMap;

import static org.apache.hudi.avro.AvroSchemaUtils.appendFieldsToSchema;

+import static org.apache.hudi.avro.AvroSchemaUtils.containsFieldInSchema;

import static org.apache.hudi.avro.AvroSchemaUtils.createNullableSchema;

/**

* Helper class to read schema from data files and log files and to convert it between different formats.

- *

- * TODO(HUDI-3626) cleanup

*/

+@ThreadSafe

public class TableSchemaResolver {

private static final Logger LOG = LogManager.getLogger(TableSchemaResolver.class);

- private final HoodieTableMetaClient metaClient;

- private final boolean hasOperationField;

- public TableSchemaResolver(HoodieTableMetaClient metaClient) {

- this.metaClient = metaClient;

- this.hasOperationField = hasOperationField();

- }

+ private final HoodieTableMetaClient metaClient;

/**

- * Gets the schema for a hoodie table. Depending on the type of table, read from any file written in the latest

- * commit. We will assume that the schema has not changed within a single atomic write.

+ * NOTE: {@link HoodieCommitMetadata} could be of non-trivial size for large tables (in 100s of Mbs)

+ * and therefore we'd want to limit amount of throw-away work being performed while fetching

+ * commits' metadata

*

- * @return Parquet schema for this table

+ * Please check out corresponding methods to fetch commonly used instances of {@link HoodieCommitMetadata}:

+ * {@link #getLatestCommitMetadataWithValidSchema()},

+ * {@link #getLatestCommitMetadataWithValidSchema()},

+ * {@link #getCachedCommitMetadata(HoodieInstant)}

*/

- private MessageType getTableParquetSchemaFromDataFile() {

- HoodieActiveTimeline activeTimeline = metaClient.getActiveTimeline();

- Option<Pair<HoodieInstant, HoodieCommitMetadata>> instantAndCommitMetadata =

- activeTimeline.getLastCommitMetadataWithValidData();

- try {

- switch (metaClient.getTableType()) {

- case COPY_ON_WRITE:

- // For COW table, the file has data written must be in parquet or orc format currently.

- if (instantAndCommitMetadata.isPresent()) {

- HoodieCommitMetadata commitMetadata = instantAndCommitMetadata.get().getRight();

- Iterator<String> filePaths = commitMetadata.getFileIdAndFullPaths(metaClient.getBasePath()).values().iterator();

- return fetchSchemaFromFiles(filePaths);

- } else {

- throw new IllegalArgumentException("Could not find any data file written for commit, "

- + "so could not get schema for table " + metaClient.getBasePath());

- }

- case MERGE_ON_READ:

- // For MOR table, the file has data written may be a parquet file, .log file, orc file or hfile.

- // Determine the file format based on the file name, and then extract schema from it.

- if (instantAndCommitMetadata.isPresent()) {

- HoodieCommitMetadata commitMetadata = instantAndCommitMetadata.get().getRight();

- Iterator<String> filePaths = commitMetadata.getFileIdAndFullPaths(metaClient.getBasePath()).values().iterator();

- return fetchSchemaFromFiles(filePaths);

- } else {

- throw new IllegalArgumentException("Could not find any data file written for commit, "

- + "so could not get schema for table " + metaClient.getBasePath());

- }

- default:

- LOG.error("Unknown table type " + metaClient.getTableType());

- throw new InvalidTableException(metaClient.getBasePath());

- }

- } catch (IOException e) {

- throw new HoodieException("Failed to read data schema", e);

- }

- }

+ private final Lazy<ConcurrentHashMap<HoodieInstant, HoodieCommitMetadata>> commitMetadataCache;

Review Comment:

If there are more commits in the timeline compared to when the commitMetadataCache was updated, wouldn't we want to recompute it again. from getLatestCommitMetadataWithValidSchema impl, looks like we set it once and reuse it for any further calls.

##########

hudi-common/src/main/java/org/apache/hudi/common/table/TableSchemaResolver.java:

##########

@@ -176,86 +124,25 @@ public Schema getTableAvroSchema() throws Exception {

* @throws Exception

*/

public Schema getTableAvroSchema(boolean includeMetadataFields) throws Exception {

- Schema schema;

- Option<Schema> schemaFromCommitMetadata = getTableSchemaFromCommitMetadata(includeMetadataFields);

- if (schemaFromCommitMetadata.isPresent()) {

- schema = schemaFromCommitMetadata.get();

- } else {

- Option<Schema> schemaFromTableConfig = metaClient.getTableConfig().getTableCreateSchema();

- if (schemaFromTableConfig.isPresent()) {

- if (includeMetadataFields) {

- schema = HoodieAvroUtils.addMetadataFields(schemaFromTableConfig.get(), hasOperationField);

- } else {

- schema = schemaFromTableConfig.get();

- }

- } else {

- if (includeMetadataFields) {

- schema = getTableAvroSchemaFromDataFile();

- } else {

- schema = HoodieAvroUtils.removeMetadataFields(getTableAvroSchemaFromDataFile());

- }

- }

- }

-

- Option<String[]> partitionFieldsOpt = metaClient.getTableConfig().getPartitionFields();

- if (metaClient.getTableConfig().shouldDropPartitionColumns()) {

- schema = recreateSchemaWhenDropPartitionColumns(partitionFieldsOpt, schema);

- }

- return schema;

+ return getTableAvroSchemaInternal(includeMetadataFields, Option.empty());

}

- public static Schema recreateSchemaWhenDropPartitionColumns(Option<String[]> partitionFieldsOpt, Schema originSchema) {

- // when hoodie.datasource.write.drop.partition.columns is true, partition columns can't be persisted in data files.

- // And there are no partition schema if the schema is parsed from data files.

- // Here we create partition Fields for this case, and use StringType as the data type.

- Schema schema = originSchema;

- if (partitionFieldsOpt.isPresent() && partitionFieldsOpt.get().length != 0) {

- List<String> partitionFields = Arrays.asList(partitionFieldsOpt.get());

-

- final Schema schema0 = originSchema;

- boolean hasPartitionColNotInSchema = partitionFields.stream().anyMatch(

- pt -> !HoodieAvroUtils.containsFieldInSchema(schema0, pt)

- );

- boolean hasPartitionColInSchema = partitionFields.stream().anyMatch(

- pt -> HoodieAvroUtils.containsFieldInSchema(schema0, pt)

- );

- if (hasPartitionColNotInSchema && hasPartitionColInSchema) {

- throw new HoodieIncompatibleSchemaException(

- "Not support: Partial partition fields are still in the schema "

- + "when enable hoodie.datasource.write.drop.partition.columns");

- }

-

- if (hasPartitionColNotInSchema) {

- // when hasPartitionColNotInSchema is true and hasPartitionColInSchema is false, all partition columns

- // are not in originSchema. So we create and add them.

- List<Field> newFields = new ArrayList<>();

- for (String partitionField: partitionFields) {

- newFields.add(new Schema.Field(

- partitionField, createNullableSchema(Schema.Type.STRING), "", JsonProperties.NULL_VALUE));

- }

- schema = appendFieldsToSchema(schema, newFields);

- }

- }

- return schema;

+ /**

+ * Fetches tables schema in Avro format as of the given instant

+ *

+ * @param instant as of which table's schema will be fetched

+ */

+ public Schema getTableAvroSchema(HoodieInstant instant, boolean includeMetadataFields) throws Exception {

+ return getTableAvroSchemaInternal(includeMetadataFields, Option.of(instant));

}

/**

* Gets full schema (user + metadata) for a hoodie table in Parquet format.

*

* @return Parquet schema for the table

- * @throws Exception

*/

public MessageType getTableParquetSchema() throws Exception {

- Option<Schema> schemaFromCommitMetadata = getTableSchemaFromCommitMetadata(true);

- if (schemaFromCommitMetadata.isPresent()) {

- return convertAvroSchemaToParquet(schemaFromCommitMetadata.get());

- }

- Option<Schema> schemaFromTableConfig = metaClient.getTableConfig().getTableCreateSchema();

- if (schemaFromTableConfig.isPresent()) {

- Schema schema = HoodieAvroUtils.addMetadataFields(schemaFromTableConfig.get(), hasOperationField);

- return convertAvroSchemaToParquet(schema);

- }

- return getTableParquetSchemaFromDataFile();

+ return convertAvroSchemaToParquet(getTableAvroSchema(true));

Review Comment:

I see this new code path also handles shouldDropPartitionColumns, where as old code did not. was it a bug that we are fixing or was it unintentional w/ refactoring ?

##########

hudi-common/src/main/java/org/apache/hudi/common/table/TableSchemaResolver.java:

##########

@@ -176,86 +124,25 @@ public Schema getTableAvroSchema() throws Exception {

* @throws Exception

*/

public Schema getTableAvroSchema(boolean includeMetadataFields) throws Exception {

- Schema schema;

- Option<Schema> schemaFromCommitMetadata = getTableSchemaFromCommitMetadata(includeMetadataFields);

- if (schemaFromCommitMetadata.isPresent()) {

- schema = schemaFromCommitMetadata.get();

- } else {

- Option<Schema> schemaFromTableConfig = metaClient.getTableConfig().getTableCreateSchema();

- if (schemaFromTableConfig.isPresent()) {

- if (includeMetadataFields) {

- schema = HoodieAvroUtils.addMetadataFields(schemaFromTableConfig.get(), hasOperationField);

- } else {

- schema = schemaFromTableConfig.get();

- }

- } else {

- if (includeMetadataFields) {

- schema = getTableAvroSchemaFromDataFile();

- } else {

- schema = HoodieAvroUtils.removeMetadataFields(getTableAvroSchemaFromDataFile());

- }

- }

- }

-

- Option<String[]> partitionFieldsOpt = metaClient.getTableConfig().getPartitionFields();

- if (metaClient.getTableConfig().shouldDropPartitionColumns()) {

- schema = recreateSchemaWhenDropPartitionColumns(partitionFieldsOpt, schema);

- }

- return schema;

+ return getTableAvroSchemaInternal(includeMetadataFields, Option.empty());

}

- public static Schema recreateSchemaWhenDropPartitionColumns(Option<String[]> partitionFieldsOpt, Schema originSchema) {

- // when hoodie.datasource.write.drop.partition.columns is true, partition columns can't be persisted in data files.

- // And there are no partition schema if the schema is parsed from data files.

- // Here we create partition Fields for this case, and use StringType as the data type.

- Schema schema = originSchema;

- if (partitionFieldsOpt.isPresent() && partitionFieldsOpt.get().length != 0) {

- List<String> partitionFields = Arrays.asList(partitionFieldsOpt.get());

-

- final Schema schema0 = originSchema;

- boolean hasPartitionColNotInSchema = partitionFields.stream().anyMatch(

- pt -> !HoodieAvroUtils.containsFieldInSchema(schema0, pt)

- );

- boolean hasPartitionColInSchema = partitionFields.stream().anyMatch(

- pt -> HoodieAvroUtils.containsFieldInSchema(schema0, pt)

- );

- if (hasPartitionColNotInSchema && hasPartitionColInSchema) {

- throw new HoodieIncompatibleSchemaException(

- "Not support: Partial partition fields are still in the schema "

- + "when enable hoodie.datasource.write.drop.partition.columns");

- }

-

- if (hasPartitionColNotInSchema) {

- // when hasPartitionColNotInSchema is true and hasPartitionColInSchema is false, all partition columns

- // are not in originSchema. So we create and add them.

- List<Field> newFields = new ArrayList<>();

- for (String partitionField: partitionFields) {

- newFields.add(new Schema.Field(

- partitionField, createNullableSchema(Schema.Type.STRING), "", JsonProperties.NULL_VALUE));

- }

- schema = appendFieldsToSchema(schema, newFields);

- }

- }

- return schema;

+ /**

+ * Fetches tables schema in Avro format as of the given instant

+ *

+ * @param instant as of which table's schema will be fetched

+ */

+ public Schema getTableAvroSchema(HoodieInstant instant, boolean includeMetadataFields) throws Exception {

+ return getTableAvroSchemaInternal(includeMetadataFields, Option.of(instant));

}

/**

* Gets full schema (user + metadata) for a hoodie table in Parquet format.

*

* @return Parquet schema for the table

- * @throws Exception

*/

public MessageType getTableParquetSchema() throws Exception {

- Option<Schema> schemaFromCommitMetadata = getTableSchemaFromCommitMetadata(true);

- if (schemaFromCommitMetadata.isPresent()) {

- return convertAvroSchemaToParquet(schemaFromCommitMetadata.get());

- }

- Option<Schema> schemaFromTableConfig = metaClient.getTableConfig().getTableCreateSchema();

- if (schemaFromTableConfig.isPresent()) {

- Schema schema = HoodieAvroUtils.addMetadataFields(schemaFromTableConfig.get(), hasOperationField);

- return convertAvroSchemaToParquet(schema);

- }

- return getTableParquetSchemaFromDataFile();

+ return convertAvroSchemaToParquet(getTableAvroSchema(true));

Review Comment:

getTableAvroSchema in one of the code paths(equivalent to L258), fetches schema from file as parquet schema. and here we are converting that to avro and again back to parquet. Can we avoid the back and forth conversion if possible.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] YuweiXiao commented on a diff in pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

YuweiXiao commented on code in PR #5733:

URL: https://github.com/apache/hudi/pull/5733#discussion_r887715553

##########

hudi-common/src/main/java/org/apache/hudi/common/table/timeline/HoodieActiveTimeline.java:

##########

@@ -267,43 +265,47 @@ public Option<byte[]> getInstantDetails(HoodieInstant instant) {

}

/**

- * Get the last instant with valid schema, and convert this to HoodieCommitMetadata

+ * Returns most recent instant having valid schema in its {@link HoodieCommitMetadata}

*/

public Option<Pair<HoodieInstant, HoodieCommitMetadata>> getLastCommitMetadataWithValidSchema() {

- List<HoodieInstant> completed = getCommitsTimeline().filterCompletedInstants().getInstants()

- .sorted(Comparator.comparing(HoodieInstant::getTimestamp).reversed()).collect(Collectors.toList());

- for (HoodieInstant instant : completed) {

- try {

- HoodieCommitMetadata commitMetadata = HoodieCommitMetadata.fromBytes(

- getInstantDetails(instant).get(), HoodieCommitMetadata.class);

- if (!StringUtils.isNullOrEmpty(commitMetadata.getMetadata(HoodieCommitMetadata.SCHEMA_KEY))) {

- return Option.of(Pair.of(instant, commitMetadata));

- }

- } catch (IOException e) {

- LOG.warn("Failed to convert instant to HoodieCommitMetadata: " + instant.toString());

- }

- }

- return Option.empty();

+ return Option.fromJavaOptional(

+ getCommitMetadataStream()

+ .filter(instantCommitMetadataPair ->

+ !StringUtils.isNullOrEmpty(instantCommitMetadataPair.getValue().getMetadata(HoodieCommitMetadata.SCHEMA_KEY)))

+ .findFirst()

+ );

}

/**

* Get the last instant with valid data, and convert this to HoodieCommitMetadata

*/

public Option<Pair<HoodieInstant, HoodieCommitMetadata>> getLastCommitMetadataWithValidData() {

- List<HoodieInstant> completed = getCommitsTimeline().filterCompletedInstants().getInstants()

- .sorted(Comparator.comparing(HoodieInstant::getTimestamp).reversed()).collect(Collectors.toList());

- for (HoodieInstant instant : completed) {

- try {

- HoodieCommitMetadata commitMetadata = HoodieCommitMetadata.fromBytes(

- getInstantDetails(instant).get(), HoodieCommitMetadata.class);

- if (!commitMetadata.getFileIdAndRelativePaths().isEmpty()) {

- return Option.of(Pair.of(instant, commitMetadata));

- }

- } catch (IOException e) {

- LOG.warn("Failed to convert instant to HoodieCommitMetadata: " + instant.toString());

- }

- }

- return Option.empty();

+ return Option.fromJavaOptional(

+ getCommitMetadataStream()

+ .filter(instantCommitMetadataPair ->

+ !instantCommitMetadataPair.getValue().getFileIdAndRelativePaths().isEmpty())

+ .findFirst()

+ );

+ }

+

+ /**

+ * Returns stream of {@link HoodieCommitMetadata} in order reverse to chronological (ie most

+ * recent metadata being the first element)

+ */

+ private Stream<Pair<HoodieInstant, HoodieCommitMetadata>> getCommitMetadataStream() {

+ // NOTE: Streams are lazy

Review Comment:

Hey Alex, could this lazy property save some computations compared to original implementation? (e.g, construct timestamp list first, and loop over it to get first valid metadata).

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] alexeykudinkin commented on a diff in pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

alexeykudinkin commented on code in PR #5733:

URL: https://github.com/apache/hudi/pull/5733#discussion_r889400793

##########

hudi-common/src/main/java/org/apache/hudi/common/table/TableSchemaResolver.java:

##########

@@ -58,100 +60,46 @@

import org.apache.parquet.hadoop.metadata.ParquetMetadata;

import org.apache.parquet.schema.MessageType;

+import javax.annotation.concurrent.ThreadSafe;

import java.io.IOException;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.Iterator;

import java.util.List;

+import java.util.concurrent.ConcurrentHashMap;

import static org.apache.hudi.avro.AvroSchemaUtils.appendFieldsToSchema;

+import static org.apache.hudi.avro.AvroSchemaUtils.containsFieldInSchema;

import static org.apache.hudi.avro.AvroSchemaUtils.createNullableSchema;

/**

* Helper class to read schema from data files and log files and to convert it between different formats.

- *

- * TODO(HUDI-3626) cleanup

*/

+@ThreadSafe

public class TableSchemaResolver {

private static final Logger LOG = LogManager.getLogger(TableSchemaResolver.class);

- private final HoodieTableMetaClient metaClient;

- private final boolean hasOperationField;

- public TableSchemaResolver(HoodieTableMetaClient metaClient) {

- this.metaClient = metaClient;

- this.hasOperationField = hasOperationField();

- }

+ private final HoodieTableMetaClient metaClient;

/**

- * Gets the schema for a hoodie table. Depending on the type of table, read from any file written in the latest

- * commit. We will assume that the schema has not changed within a single atomic write.

+ * NOTE: {@link HoodieCommitMetadata} could be of non-trivial size for large tables (in 100s of Mbs)

+ * and therefore we'd want to limit amount of throw-away work being performed while fetching

+ * commits' metadata

*

- * @return Parquet schema for this table

+ * Please check out corresponding methods to fetch commonly used instances of {@link HoodieCommitMetadata}:

+ * {@link #getLatestCommitMetadataWithValidSchema()},

+ * {@link #getLatestCommitMetadataWithValidSchema()},

+ * {@link #getCachedCommitMetadata(HoodieInstant)}

*/

- private MessageType getTableParquetSchemaFromDataFile() {

- HoodieActiveTimeline activeTimeline = metaClient.getActiveTimeline();

- Option<Pair<HoodieInstant, HoodieCommitMetadata>> instantAndCommitMetadata =

- activeTimeline.getLastCommitMetadataWithValidData();

- try {

- switch (metaClient.getTableType()) {

- case COPY_ON_WRITE:

- // For COW table, the file has data written must be in parquet or orc format currently.

- if (instantAndCommitMetadata.isPresent()) {

- HoodieCommitMetadata commitMetadata = instantAndCommitMetadata.get().getRight();

- Iterator<String> filePaths = commitMetadata.getFileIdAndFullPaths(metaClient.getBasePath()).values().iterator();

- return fetchSchemaFromFiles(filePaths);

- } else {

- throw new IllegalArgumentException("Could not find any data file written for commit, "

- + "so could not get schema for table " + metaClient.getBasePath());

- }

- case MERGE_ON_READ:

- // For MOR table, the file has data written may be a parquet file, .log file, orc file or hfile.

- // Determine the file format based on the file name, and then extract schema from it.

- if (instantAndCommitMetadata.isPresent()) {

- HoodieCommitMetadata commitMetadata = instantAndCommitMetadata.get().getRight();

- Iterator<String> filePaths = commitMetadata.getFileIdAndFullPaths(metaClient.getBasePath()).values().iterator();

- return fetchSchemaFromFiles(filePaths);

- } else {

- throw new IllegalArgumentException("Could not find any data file written for commit, "

- + "so could not get schema for table " + metaClient.getBasePath());

- }

- default:

- LOG.error("Unknown table type " + metaClient.getTableType());

- throw new InvalidTableException(metaClient.getBasePath());

- }

- } catch (IOException e) {

- throw new HoodieException("Failed to read data schema", e);

- }

- }

+ private final Lazy<ConcurrentHashMap<HoodieInstant, HoodieCommitMetadata>> commitMetadataCache;

Review Comment:

Discussed offline: `TableSchemaResolver` is a short-lived object not meant to be refreshed -- to get latest schema you will have to create another instance with the refreshed `HoodieMetaClient` instance

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] nsivabalan commented on a diff in pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on code in PR #5733:

URL: https://github.com/apache/hudi/pull/5733#discussion_r889414242

##########

hudi-spark-datasource/hudi-spark-common/src/main/java/org/apache/hudi/internal/BulkInsertDataInternalWriterHelper.java:

##########

@@ -128,7 +133,11 @@ public void write(InternalRow record) throws IOException {

if (!keyGeneratorOpt.isPresent()) { // NoPartitionerKeyGen

partitionPath = "";

} else if (simpleKeyGen) { // SimpleKeyGen

- partitionPath = (record.get(simplePartitionFieldIndex, simplePartitionFieldDataType)).toString();

+ Object parititionPathValue = record.get(simplePartitionFieldIndex, simplePartitionFieldDataType);

Review Comment:

Is it possible to remove the fixes that I have in https://github.com/apache/hudi/pull/5664 you may run into conflicts.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

hudi-bot commented on PR #5733:

URL: https://github.com/apache/hudi/pull/5733#issuecomment-1146520849

<!--

Meta data

{

"version" : 1,

"metaDataEntries" : [ {

"hash" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"status" : "DELETED",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9041",

"triggerID" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"triggerType" : "PUSH"

}, {

"hash" : "e6173f00c290e9e0bb55e4c7d8092f5eb26871ae",

"status" : "SUCCESS",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9066",

"triggerID" : "e6173f00c290e9e0bb55e4c7d8092f5eb26871ae",

"triggerType" : "PUSH"

}, {

"hash" : "bb436a73c4bd66a5a90467475710851b598d2ae9",

"status" : "PENDING",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9070",

"triggerID" : "bb436a73c4bd66a5a90467475710851b598d2ae9",

"triggerType" : "PUSH"

} ]

}-->

## CI report:

* e6173f00c290e9e0bb55e4c7d8092f5eb26871ae Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9066)

* bb436a73c4bd66a5a90467475710851b598d2ae9 Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9070)

<details>

<summary>Bot commands</summary>

@hudi-bot supports the following commands:

- `@hudi-bot run azure` re-run the last Azure build

</details>

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] alexeykudinkin commented on a diff in pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

alexeykudinkin commented on code in PR #5733:

URL: https://github.com/apache/hudi/pull/5733#discussion_r889401027

##########

hudi-common/src/main/java/org/apache/hudi/common/table/TableSchemaResolver.java:

##########

@@ -176,86 +124,25 @@ public Schema getTableAvroSchema() throws Exception {

* @throws Exception

*/

public Schema getTableAvroSchema(boolean includeMetadataFields) throws Exception {

- Schema schema;

- Option<Schema> schemaFromCommitMetadata = getTableSchemaFromCommitMetadata(includeMetadataFields);

- if (schemaFromCommitMetadata.isPresent()) {

- schema = schemaFromCommitMetadata.get();

- } else {

- Option<Schema> schemaFromTableConfig = metaClient.getTableConfig().getTableCreateSchema();

- if (schemaFromTableConfig.isPresent()) {

- if (includeMetadataFields) {

- schema = HoodieAvroUtils.addMetadataFields(schemaFromTableConfig.get(), hasOperationField);

- } else {

- schema = schemaFromTableConfig.get();

- }

- } else {

- if (includeMetadataFields) {

- schema = getTableAvroSchemaFromDataFile();

- } else {

- schema = HoodieAvroUtils.removeMetadataFields(getTableAvroSchemaFromDataFile());

- }

- }

- }

-

- Option<String[]> partitionFieldsOpt = metaClient.getTableConfig().getPartitionFields();

- if (metaClient.getTableConfig().shouldDropPartitionColumns()) {

- schema = recreateSchemaWhenDropPartitionColumns(partitionFieldsOpt, schema);

- }

- return schema;

+ return getTableAvroSchemaInternal(includeMetadataFields, Option.empty());

}

- public static Schema recreateSchemaWhenDropPartitionColumns(Option<String[]> partitionFieldsOpt, Schema originSchema) {

- // when hoodie.datasource.write.drop.partition.columns is true, partition columns can't be persisted in data files.

- // And there are no partition schema if the schema is parsed from data files.

- // Here we create partition Fields for this case, and use StringType as the data type.

- Schema schema = originSchema;

- if (partitionFieldsOpt.isPresent() && partitionFieldsOpt.get().length != 0) {

- List<String> partitionFields = Arrays.asList(partitionFieldsOpt.get());

-

- final Schema schema0 = originSchema;

- boolean hasPartitionColNotInSchema = partitionFields.stream().anyMatch(

- pt -> !HoodieAvroUtils.containsFieldInSchema(schema0, pt)

- );

- boolean hasPartitionColInSchema = partitionFields.stream().anyMatch(

- pt -> HoodieAvroUtils.containsFieldInSchema(schema0, pt)

- );

- if (hasPartitionColNotInSchema && hasPartitionColInSchema) {

- throw new HoodieIncompatibleSchemaException(

- "Not support: Partial partition fields are still in the schema "

- + "when enable hoodie.datasource.write.drop.partition.columns");

- }

-

- if (hasPartitionColNotInSchema) {

- // when hasPartitionColNotInSchema is true and hasPartitionColInSchema is false, all partition columns

- // are not in originSchema. So we create and add them.

- List<Field> newFields = new ArrayList<>();

- for (String partitionField: partitionFields) {

- newFields.add(new Schema.Field(

- partitionField, createNullableSchema(Schema.Type.STRING), "", JsonProperties.NULL_VALUE));

- }

- schema = appendFieldsToSchema(schema, newFields);

- }

- }

- return schema;

+ /**

+ * Fetches tables schema in Avro format as of the given instant

+ *

+ * @param instant as of which table's schema will be fetched

+ */

+ public Schema getTableAvroSchema(HoodieInstant instant, boolean includeMetadataFields) throws Exception {

+ return getTableAvroSchemaInternal(includeMetadataFields, Option.of(instant));

}

/**

* Gets full schema (user + metadata) for a hoodie table in Parquet format.

*

* @return Parquet schema for the table

- * @throws Exception

*/

public MessageType getTableParquetSchema() throws Exception {

- Option<Schema> schemaFromCommitMetadata = getTableSchemaFromCommitMetadata(true);

- if (schemaFromCommitMetadata.isPresent()) {

- return convertAvroSchemaToParquet(schemaFromCommitMetadata.get());

- }

- Option<Schema> schemaFromTableConfig = metaClient.getTableConfig().getTableCreateSchema();

- if (schemaFromTableConfig.isPresent()) {

- Schema schema = HoodieAvroUtils.addMetadataFields(schemaFromTableConfig.get(), hasOperationField);

- return convertAvroSchemaToParquet(schema);

- }

- return getTableParquetSchemaFromDataFile();

+ return convertAvroSchemaToParquet(getTableAvroSchema(true));

Review Comment:

It was not handled correctly before

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] alexeykudinkin commented on pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

alexeykudinkin commented on PR #5733:

URL: https://github.com/apache/hudi/pull/5733#issuecomment-1146477125

Build succeeded:

https://dev.azure.com/apache-hudi-ci-org/apache-hudi-ci/_build/results?buildId=9066&view=results

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

hudi-bot commented on PR #5733:

URL: https://github.com/apache/hudi/pull/5733#issuecomment-1144202927

<!--

Meta data

{

"version" : 1,

"metaDataEntries" : [ {

"hash" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"status" : "PENDING",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9041",

"triggerID" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"triggerType" : "PUSH"

} ]

}-->

## CI report:

* 1e269381cb33f0f92be0749eeea66c3368fc225e Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9041)

<details>

<summary>Bot commands</summary>

@hudi-bot supports the following commands:

- `@hudi-bot run azure` re-run the last Azure build

</details>

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] alexeykudinkin commented on a diff in pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

alexeykudinkin commented on code in PR #5733:

URL: https://github.com/apache/hudi/pull/5733#discussion_r889401027

##########

hudi-common/src/main/java/org/apache/hudi/common/table/TableSchemaResolver.java:

##########

@@ -176,86 +124,25 @@ public Schema getTableAvroSchema() throws Exception {

* @throws Exception

*/

public Schema getTableAvroSchema(boolean includeMetadataFields) throws Exception {

- Schema schema;

- Option<Schema> schemaFromCommitMetadata = getTableSchemaFromCommitMetadata(includeMetadataFields);

- if (schemaFromCommitMetadata.isPresent()) {

- schema = schemaFromCommitMetadata.get();

- } else {

- Option<Schema> schemaFromTableConfig = metaClient.getTableConfig().getTableCreateSchema();

- if (schemaFromTableConfig.isPresent()) {

- if (includeMetadataFields) {

- schema = HoodieAvroUtils.addMetadataFields(schemaFromTableConfig.get(), hasOperationField);

- } else {

- schema = schemaFromTableConfig.get();

- }

- } else {

- if (includeMetadataFields) {

- schema = getTableAvroSchemaFromDataFile();

- } else {

- schema = HoodieAvroUtils.removeMetadataFields(getTableAvroSchemaFromDataFile());

- }

- }

- }

-

- Option<String[]> partitionFieldsOpt = metaClient.getTableConfig().getPartitionFields();

- if (metaClient.getTableConfig().shouldDropPartitionColumns()) {

- schema = recreateSchemaWhenDropPartitionColumns(partitionFieldsOpt, schema);

- }

- return schema;

+ return getTableAvroSchemaInternal(includeMetadataFields, Option.empty());

}

- public static Schema recreateSchemaWhenDropPartitionColumns(Option<String[]> partitionFieldsOpt, Schema originSchema) {

- // when hoodie.datasource.write.drop.partition.columns is true, partition columns can't be persisted in data files.

- // And there are no partition schema if the schema is parsed from data files.

- // Here we create partition Fields for this case, and use StringType as the data type.

- Schema schema = originSchema;

- if (partitionFieldsOpt.isPresent() && partitionFieldsOpt.get().length != 0) {

- List<String> partitionFields = Arrays.asList(partitionFieldsOpt.get());

-

- final Schema schema0 = originSchema;

- boolean hasPartitionColNotInSchema = partitionFields.stream().anyMatch(

- pt -> !HoodieAvroUtils.containsFieldInSchema(schema0, pt)

- );

- boolean hasPartitionColInSchema = partitionFields.stream().anyMatch(

- pt -> HoodieAvroUtils.containsFieldInSchema(schema0, pt)

- );

- if (hasPartitionColNotInSchema && hasPartitionColInSchema) {

- throw new HoodieIncompatibleSchemaException(

- "Not support: Partial partition fields are still in the schema "

- + "when enable hoodie.datasource.write.drop.partition.columns");

- }

-

- if (hasPartitionColNotInSchema) {

- // when hasPartitionColNotInSchema is true and hasPartitionColInSchema is false, all partition columns

- // are not in originSchema. So we create and add them.

- List<Field> newFields = new ArrayList<>();

- for (String partitionField: partitionFields) {

- newFields.add(new Schema.Field(

- partitionField, createNullableSchema(Schema.Type.STRING), "", JsonProperties.NULL_VALUE));

- }

- schema = appendFieldsToSchema(schema, newFields);

- }

- }

- return schema;

+ /**

+ * Fetches tables schema in Avro format as of the given instant

+ *

+ * @param instant as of which table's schema will be fetched

+ */

+ public Schema getTableAvroSchema(HoodieInstant instant, boolean includeMetadataFields) throws Exception {

+ return getTableAvroSchemaInternal(includeMetadataFields, Option.of(instant));

}

/**

* Gets full schema (user + metadata) for a hoodie table in Parquet format.

*

* @return Parquet schema for the table

- * @throws Exception

*/

public MessageType getTableParquetSchema() throws Exception {

- Option<Schema> schemaFromCommitMetadata = getTableSchemaFromCommitMetadata(true);

- if (schemaFromCommitMetadata.isPresent()) {

- return convertAvroSchemaToParquet(schemaFromCommitMetadata.get());

- }

- Option<Schema> schemaFromTableConfig = metaClient.getTableConfig().getTableCreateSchema();

- if (schemaFromTableConfig.isPresent()) {

- Schema schema = HoodieAvroUtils.addMetadataFields(schemaFromTableConfig.get(), hasOperationField);

- return convertAvroSchemaToParquet(schema);

- }

- return getTableParquetSchemaFromDataFile();

+ return convertAvroSchemaToParquet(getTableAvroSchema(true));

Review Comment:

It was not handled correctly before -- this config has to be handled in all code-paths

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

hudi-bot commented on PR #5733:

URL: https://github.com/apache/hudi/pull/5733#issuecomment-1146433441

<!--

Meta data

{

"version" : 1,

"metaDataEntries" : [ {

"hash" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"status" : "FAILURE",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9041",

"triggerID" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"triggerType" : "PUSH"

}, {

"hash" : "e6173f00c290e9e0bb55e4c7d8092f5eb26871ae",

"status" : "PENDING",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9066",

"triggerID" : "e6173f00c290e9e0bb55e4c7d8092f5eb26871ae",

"triggerType" : "PUSH"

} ]

}-->

## CI report:

* 1e269381cb33f0f92be0749eeea66c3368fc225e Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9041)

* e6173f00c290e9e0bb55e4c7d8092f5eb26871ae Azure: [PENDING](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9066)

<details>

<summary>Bot commands</summary>

@hudi-bot supports the following commands:

- `@hudi-bot run azure` re-run the last Azure build

</details>

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

hudi-bot commented on PR #5733:

URL: https://github.com/apache/hudi/pull/5733#issuecomment-1146431494

<!--

Meta data

{

"version" : 1,

"metaDataEntries" : [ {

"hash" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"status" : "FAILURE",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9041",

"triggerID" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"triggerType" : "PUSH"

}, {

"hash" : "e6173f00c290e9e0bb55e4c7d8092f5eb26871ae",

"status" : "UNKNOWN",

"url" : "TBD",

"triggerID" : "e6173f00c290e9e0bb55e4c7d8092f5eb26871ae",

"triggerType" : "PUSH"

} ]

}-->

## CI report:

* 1e269381cb33f0f92be0749eeea66c3368fc225e Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9041)

* e6173f00c290e9e0bb55e4c7d8092f5eb26871ae UNKNOWN

<details>

<summary>Bot commands</summary>

@hudi-bot supports the following commands:

- `@hudi-bot run azure` re-run the last Azure build

</details>

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] nsivabalan merged pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

nsivabalan merged PR #5733:

URL: https://github.com/apache/hudi/pull/5733

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

hudi-bot commented on PR #5733:

URL: https://github.com/apache/hudi/pull/5733#issuecomment-1146520083

<!--

Meta data

{

"version" : 1,

"metaDataEntries" : [ {

"hash" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"status" : "DELETED",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9041",

"triggerID" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"triggerType" : "PUSH"

}, {

"hash" : "e6173f00c290e9e0bb55e4c7d8092f5eb26871ae",

"status" : "SUCCESS",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9066",

"triggerID" : "e6173f00c290e9e0bb55e4c7d8092f5eb26871ae",

"triggerType" : "PUSH"

}, {

"hash" : "bb436a73c4bd66a5a90467475710851b598d2ae9",

"status" : "UNKNOWN",

"url" : "TBD",

"triggerID" : "bb436a73c4bd66a5a90467475710851b598d2ae9",

"triggerType" : "PUSH"

} ]

}-->

## CI report:

* e6173f00c290e9e0bb55e4c7d8092f5eb26871ae Azure: [SUCCESS](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9066)

* bb436a73c4bd66a5a90467475710851b598d2ae9 UNKNOWN

<details>

<summary>Bot commands</summary>

@hudi-bot supports the following commands:

- `@hudi-bot run azure` re-run the last Azure build

</details>

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] nsivabalan commented on pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on PR #5733:

URL: https://github.com/apache/hudi/pull/5733#issuecomment-1147345230

Rebased with latest master since a [flaky test fix](https://github.com/apache/hudi/pull/5749) was landed.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

hudi-bot commented on PR #5733:

URL: https://github.com/apache/hudi/pull/5733#issuecomment-1147352873

<!--

Meta data

{

"version" : 1,

"metaDataEntries" : [ {

"hash" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"status" : "DELETED",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9041",

"triggerID" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"triggerType" : "PUSH"

}, {

"hash" : "e6173f00c290e9e0bb55e4c7d8092f5eb26871ae",

"status" : "DELETED",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9066",

"triggerID" : "e6173f00c290e9e0bb55e4c7d8092f5eb26871ae",

"triggerType" : "PUSH"

}, {

"hash" : "bb436a73c4bd66a5a90467475710851b598d2ae9",

"status" : "FAILURE",

"url" : "https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9070",

"triggerID" : "bb436a73c4bd66a5a90467475710851b598d2ae9",

"triggerType" : "PUSH"

}, {

"hash" : "241b4a0a69e8ab13dbb1b6a2c82b06dfee877e41",

"status" : "UNKNOWN",

"url" : "TBD",

"triggerID" : "241b4a0a69e8ab13dbb1b6a2c82b06dfee877e41",

"triggerType" : "PUSH"

} ]

}-->

## CI report:

* bb436a73c4bd66a5a90467475710851b598d2ae9 Azure: [FAILURE](https://dev.azure.com/apache-hudi-ci-org/785b6ef4-2f42-4a89-8f0e-5f0d7039a0cc/_build/results?buildId=9070)

* 241b4a0a69e8ab13dbb1b6a2c82b06dfee877e41 UNKNOWN

<details>

<summary>Bot commands</summary>

@hudi-bot supports the following commands:

- `@hudi-bot run azure` re-run the last Azure build

</details>

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] hudi-bot commented on pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

hudi-bot commented on PR #5733:

URL: https://github.com/apache/hudi/pull/5733#issuecomment-1144200124

<!--

Meta data

{

"version" : 1,

"metaDataEntries" : [ {

"hash" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"status" : "UNKNOWN",

"url" : "TBD",

"triggerID" : "1e269381cb33f0f92be0749eeea66c3368fc225e",

"triggerType" : "PUSH"

} ]

}-->

## CI report:

* 1e269381cb33f0f92be0749eeea66c3368fc225e UNKNOWN

<details>

<summary>Bot commands</summary>

@hudi-bot supports the following commands:

- `@hudi-bot run azure` re-run the last Azure build

</details>

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the

URL above to go to the specific comment.

To unsubscribe, e-mail: commits-unsubscribe@hudi.apache.org

For queries about this service, please contact Infrastructure at:

users@infra.apache.org

[GitHub] [hudi] nsivabalan commented on a diff in pull request #5733: [HUDI-4176] Fixing `TableSchemaResolver` to avoid repeated `HoodieCommitMetadata` parsing

Posted by GitBox <gi...@apache.org>.

nsivabalan commented on code in PR #5733:

URL: https://github.com/apache/hudi/pull/5733#discussion_r889414242

##########

hudi-spark-datasource/hudi-spark-common/src/main/java/org/apache/hudi/internal/BulkInsertDataInternalWriterHelper.java:

##########

@@ -128,7 +133,11 @@ public void write(InternalRow record) throws IOException {

if (!keyGeneratorOpt.isPresent()) { // NoPartitionerKeyGen

partitionPath = "";

} else if (simpleKeyGen) { // SimpleKeyGen

- partitionPath = (record.get(simplePartitionFieldIndex, simplePartitionFieldDataType)).toString();

+ Object parititionPathValue = record.get(simplePartitionFieldIndex, simplePartitionFieldDataType);

Review Comment:

Is it possible to remove the fixes that I have in https://github.com/apache/hudi/pull/5664. you may run into conflicts.

--

This is an automated message from the Apache Git Service.

To respond to the message, please log on to GitHub and use the