
Posted to reviews@spark.apache.org by lovezeropython <gi...@git.apache.org> on 2018/09/21 13:18:03 UTC

[GitHub] spark pull request #22517: Branch 2.3 how can i fix error use Pyspark

GitHub user lovezeropython opened a pull request:

https://github.com/apache/spark/pull/22517

Branch 2.3 how can i fix error use Pyspark

How can I fix an error when using PySpark?

Environment: Spark 2.3, Python 3.6.

You can merge this pull request into a Git repository by running:

$ git pull https://github.com/apache/spark branch-2.3

Alternatively you can review and apply these changes as the patch at:

https://github.com/apache/spark/pull/22517.patch

To close this pull request, make a commit to your master/trunk branch

with (at least) the following in the commit message:

This closes #22517

----

commit a9d0784e6733666a0608e8236322f1dc380e96b7

Author: smallory <s....@...>

Date: 2018-03-15T02:58:54Z

[SPARK-23642][DOCS] AccumulatorV2 subclass isZero scaladoc fix

Added/corrected scaladoc for isZero on the DoubleAccumulator, CollectionAccumulator, and LongAccumulator subclasses of AccumulatorV2, particularly noting where there are requirements in addition to having a value of zero in order to return true.

## What changes were proposed in this pull request?

Three scaladoc comments are updated in AccumulatorV2.scala

No changes outside of comment blocks were made.

## How was this patch tested?

Running "sbt unidoc", fixing style errors found, and reviewing the resulting local scaladoc in firefox.

Author: smallory <s....@gmail.com>

Closes #20790 from smallory/patch-1.

(cherry picked from commit 4f5bad615b47d743b8932aea1071652293981604)

Signed-off-by: hyukjinkwon <gu...@gmail.com>

commit 72c13ed844d6be6510ce2c5e3526c234d1d5e10f

Author: hyukjinkwon <gu...@...>

Date: 2018-03-15T17:55:33Z

[SPARK-23695][PYTHON] Fix the error message for Kinesis streaming tests

## What changes were proposed in this pull request?

This PR proposes to fix the error message for Kinesis in PySpark when its jar is missing but explicitly enabled.

```bash

ENABLE_KINESIS_TESTS=1 SPARK_TESTING=1 bin/pyspark pyspark.streaming.tests

```

Before:

```

Skipped test_flume_stream (enable by setting environment variable ENABLE_FLUME_TESTS=1Skipped test_kafka_stream (enable by setting environment variable ENABLE_KAFKA_0_8_TESTS=1Traceback (most recent call last):

File "/usr/local/Cellar/python/2.7.14_3/Frameworks/Python.framework/Versions/2.7/lib/python2.7/runpy.py", line 174, in _run_module_as_main

"__main__", fname, loader, pkg_name)

File "/usr/local/Cellar/python/2.7.14_3/Frameworks/Python.framework/Versions/2.7/lib/python2.7/runpy.py", line 72, in _run_code

exec code in run_globals

File "/.../spark/python/pyspark/streaming/tests.py", line 1572, in <module>

% kinesis_asl_assembly_dir) +

NameError: name 'kinesis_asl_assembly_dir' is not defined

```

After:

```

Skipped test_flume_stream (enable by setting environment variable ENABLE_FLUME_TESTS=1Skipped test_kafka_stream (enable by setting environment variable ENABLE_KAFKA_0_8_TESTS=1Traceback (most recent call last):

File "/usr/local/Cellar/python/2.7.14_3/Frameworks/Python.framework/Versions/2.7/lib/python2.7/runpy.py", line 174, in _run_module_as_main

"__main__", fname, loader, pkg_name)

File "/usr/local/Cellar/python/2.7.14_3/Frameworks/Python.framework/Versions/2.7/lib/python2.7/runpy.py", line 72, in _run_code

exec code in run_globals

File "/.../spark/python/pyspark/streaming/tests.py", line 1576, in <module>

"You need to build Spark with 'build/sbt -Pkinesis-asl "

Exception: Failed to find Spark Streaming Kinesis assembly jar in /.../spark/external/kinesis-asl-assembly. You need to build Spark with 'build/sbt -Pkinesis-asl assembly/package streaming-kinesis-asl-assembly/assembly'or 'build/mvn -Pkinesis-asl package' before running this test.

```

## How was this patch tested?

Manually tested.

Author: hyukjinkwon <gu...@gmail.com>

Closes #20834 from HyukjinKwon/minor-variable.

(cherry picked from commit 56e8f48a43eb51e8582db2461a585b13a771a00a)

Signed-off-by: Takuya UESHIN <ue...@databricks.com>

commit 2e1e274ed9d7a30656555e71c68e7de34a336a8a

Author: Sahil Takiar <st...@...>

Date: 2018-03-16T00:04:39Z

[SPARK-23658][LAUNCHER] InProcessAppHandle uses the wrong class in getLogger

## What changes were proposed in this pull request?

Changed `Logger` in `InProcessAppHandle` to use `InProcessAppHandle` instead of `ChildProcAppHandle`

Author: Sahil Takiar <st...@cloudera.com>

Closes #20815 from sahilTakiar/master.

(cherry picked from commit 7618896e855579f111dd92cd76794a5672a087e5)

Signed-off-by: Marcelo Vanzin <va...@cloudera.com>

commit 52a52d5d26fc1650e788eec62ce478c76f627470

Author: Marcelo Vanzin <va...@...>

Date: 2018-03-16T00:12:01Z

[SPARK-23671][CORE] Fix condition to enable the SHS thread pool.

Author: Marcelo Vanzin <va...@cloudera.com>

Closes #20814 from vanzin/SPARK-23671.

(cherry picked from commit 18f8575e0166c6997569358d45bdae2cf45bf624)

Signed-off-by: Marcelo Vanzin <va...@cloudera.com>

commit 99f5c0bc7a6c77917b4ccd498724b8ccc0c21473

Author: Ye Zhou <ye...@...>

Date: 2018-03-16T00:15:53Z

[SPARK-23608][CORE][WEBUI] Add synchronization in SHS between attachSparkUI and detachSparkUI functions to avoid concurrent modification issue to Jetty Handlers

Jetty handlers are dynamically attached/detached while the SHS is running. However, attach and detach operations can take place at the same time because load/clear in the Guava Cache is asynchronous.

## What changes were proposed in this pull request?

Add synchronization between attachSparkUI and detachSparkUI in SHS.
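The idea can be sketched as follows, in Python rather than the Scala used by the SHS. The `HandlerRegistry` class and its method names are hypothetical and are not Spark or Jetty APIs; the sketch only assumes that attach and detach share one lock so they cannot interleave while mutating the handler collection.

```python
import threading


class HandlerRegistry:
    """Hypothetical stand-in for a server's mutable handler list."""

    def __init__(self):
        self._lock = threading.Lock()
        self._handlers = []

    def attach(self, handler):
        # attach and detach take the same lock, so the list is never
        # mutated by two threads at once.
        with self._lock:
            self._handlers.append(handler)

    def detach(self, handler):
        with self._lock:
            if handler in self._handlers:
                self._handlers.remove(handler)

    def snapshot(self):
        with self._lock:
            return list(self._handlers)


def churn(registry, name, rounds=1000):
    # Repeatedly attach and detach one handler, as cache load/clear might.
    for _ in range(rounds):
        registry.attach(name)
        registry.detach(name)


registry = HandlerRegistry()
threads = [threading.Thread(target=churn, args=(registry, f"ui-{i}"))
           for i in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
remaining = registry.snapshot()
```

Every thread detaches what it attached, so the registry should be empty once all threads have finished.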

## How was this patch tested?

With this patch, the missing Jetty handlers issue no longer occurs in our production cluster SHS.

Author: Ye Zhou <ye...@linkedin.com>

Closes #20744 from zhouyejoe/SPARK-23608.

(cherry picked from commit 3675af7247e841e9a689666dc20891ba55c612b3)

Signed-off-by: Marcelo Vanzin <va...@cloudera.com>

commit d9e1f7040092aa4aeab9bdb82f0c28a292c90609

Author: myroslavlisniak <ac...@...>

Date: 2018-03-16T00:20:17Z

[SPARK-23670][SQL] Fix memory leak on SparkPlanGraphWrapper

Clean up SparkPlanGraphWrapper objects from InMemoryStore together with cleaning up SQLExecutionUIData.

The existing unit test was extended to also check the SparkPlanGraphWrapper object count.

vanzin

Author: myroslavlisniak <ac...@gmail.com>

Closes #20813 from myroslavlisniak/master.

(cherry picked from commit c2632edebd978716dbfa7874a2fc0a8f5a4a9951)

Signed-off-by: Marcelo Vanzin <va...@cloudera.com>

commit 21b6de4592bf8c69db9135f409912b6a62f70894

Author: Dongjoon Hyun <do...@...>

Date: 2018-03-16T16:36:30Z

[SPARK-23553][TESTS] Tests should not assume the default value of `spark.sql.sources.default`

## What changes were proposed in this pull request?

Currently, some tests assume that `spark.sql.sources.default=parquet`. The assumption is in fact correct, but it makes it difficult to test new data source formats.

This PR aims to

- Make test suites more robust and make it easy to test new data sources in the future.

- Test new native ORC data source with the full existing Apache Spark test coverage.

As an example, the PR uses `spark.sql.sources.default=orc` during reviews. The value should be `parquet` when this PR is accepted.

## How was this patch tested?

Pass the Jenkins with updated tests.

Author: Dongjoon Hyun <do...@apache.org>

Closes #20705 from dongjoon-hyun/SPARK-23553.

(cherry picked from commit 5414abca4fec6a68174c34d22d071c20027e959d)

Signed-off-by: gatorsmile <ga...@gmail.com>

commit 6937571ab8818a62ec2457a373eb3f6f618985e1

Author: Tathagata Das <ta...@...>

Date: 2018-03-17T23:24:51Z

[SPARK-23623][SS] Avoid concurrent use of cached consumers in CachedKafkaConsumer (branch-2.3)

This is a backport of #20767 to branch 2.3

## What changes were proposed in this pull request?

CachedKafkaConsumer in the project `kafka-0-10-sql` is designed to maintain a pool of KafkaConsumers that can be reused. However, it was built on the assumption that only one task will try to read the same Kafka TopicPartition at a time. Hence, the cache was keyed by the TopicPartition a consumer is supposed to read. For any cases where this assumption may not hold, we have a SparkPlan flag to disable the use of the cache. So it was up to the planner to correctly identify when it was not safe to use the cache and set the flag accordingly.

Fundamentally, this is the wrong way to approach the problem. It is HARD for a high-level planner to reason about the low-level execution model and whether there will be multiple tasks in the same query trying to read the same partition. Case in point: 2.3.0 introduced stream-stream joins, and you can build a streaming self-join query on Kafka. It is non-trivial to figure out how this leads to two tasks reading the same partition, possibly concurrently. Because of that, it is hard to detect this in the planner and set the flag to avoid the cache / consumer pool. This can inadvertently lead to ConcurrentModificationException, or worse, silent reading of incorrect data.

Here is a better way to design this. The planner shouldn't have to understand these low-level optimizations. Rather, the consumer pool should be smart enough to avoid concurrent use of a cached consumer. Currently, it tries to do so but incorrectly (the flag inuse is not checked when returning a cached consumer, see [this](https://github.com/apache/spark/blob/master/external/kafka-0-10-sql/src/main/scala/org/apache/spark/sql/kafka010/CachedKafkaConsumer.scala#L403)). If there is another request for the same partition as a currently in-use consumer, the pool should automatically return a fresh consumer that is closed when the task is done. Then the planner does not need a flag to avoid reuse.

This PR is a step towards that goal. It does the following.

- There are effectively two kinds of consumer that may be generated

- Cached consumer - this should be returned to the pool at task end

- Non-cached consumer - this should be closed at task end

- A trait called KafkaConsumer is introduced to hide this difference from the users of the consumer so that the client code does not have to reason about whether to stop and release. They simply call `val consumer = KafkaConsumer.acquire` and then `consumer.release()`.

- If there is a request for a consumer that is in-use, then a new consumer is generated.

- If there is a concurrent attempt of the same task, then a new consumer is generated, and the existing cached consumer is marked for close upon release.

- In addition, I renamed the classes because CachedKafkaConsumer is a misnomer given that what it returns may or may not be cached.

This PR does not remove the planner flag to avoid reuse to make this patch safe enough for merging in branch-2.3. This can be done later in master-only.
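The acquire/release behaviour listed above can be sketched roughly as follows. This is a Python illustration under stated assumptions, not the Scala code of the patch: `PooledConsumer` and `ConsumerPool` are hypothetical names, the key is a plain string rather than a TopicPartition, and the "concurrent attempt of the same task" case is left out.

```python
import threading


class PooledConsumer:
    """Hypothetical consumer wrapper; `cached` decides how release() treats it."""

    def __init__(self, key, cached):
        self.key = key
        self.cached = cached
        self.in_use = True
        self.closed = False


class ConsumerPool:
    """Hypothetical pool keyed by partition name."""

    def __init__(self):
        self._lock = threading.Lock()
        self._cache = {}

    def acquire(self, key):
        with self._lock:
            existing = self._cache.get(key)
            if existing is None:
                # First request for this key: create and cache a consumer.
                consumer = PooledConsumer(key, cached=True)
                self._cache[key] = consumer
                return consumer
            if not existing.in_use:
                # The cached consumer is idle: reuse it.
                existing.in_use = True
                return existing
            # The cached consumer is busy: hand out a fresh, non-cached one.
            return PooledConsumer(key, cached=False)

    def release(self, consumer):
        with self._lock:
            consumer.in_use = False
            if not consumer.cached:
                # Non-cached consumers are closed at task end.
                consumer.closed = True


pool = ConsumerPool()
first = pool.acquire("topic-0")
second = pool.acquire("topic-0")  # requested while `first` is still in use
pool.release(second)
pool.release(first)
third = pool.acquire("topic-0")   # the cached consumer is idle again
```

Callers never check whether they hold a cached consumer; they only call `acquire` and `release`, which is the property the trait in the patch is meant to provide.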

## How was this patch tested?

A new stress test that verifies it is safe to concurrently get consumers for the same partition from the consumer pool.

Author: Tathagata Das <ta...@gmail.com>

Closes #20848 from tdas/SPARK-23623-2.3.

commit 80e79430ff33dca9a3641bf0a882d79b343a3f25

Author: hyukjinkwon <gu...@...>

Date: 2018-03-18T11:24:14Z

[SPARK-23706][PYTHON] spark.conf.get(value, default=None) should produce None in PySpark

Scala:

```

scala> spark.conf.get("hey", null)

res1: String = null

```

```

scala> spark.conf.get("spark.sql.sources.partitionOverwriteMode", null)

res2: String = null

```

Python:

**Before**

```

>>> spark.conf.get("hey", None)

...

py4j.protocol.Py4JJavaError: An error occurred while calling o30.get.

: java.util.NoSuchElementException: hey

...

```

```

>>> spark.conf.get("spark.sql.sources.partitionOverwriteMode", None)

u'STATIC'

```

**After**

```

>>> spark.conf.get("hey", None) is None

True

```

```

>>> spark.conf.get("spark.sql.sources.partitionOverwriteMode", None) is None

True

```

*Note that this PR preserves the case below:

```

>>> spark.conf.get("spark.sql.sources.partitionOverwriteMode")

u'STATIC'

```

Manually tested and unit tests were added.
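One common way to get this behaviour in Python is a private sentinel that distinguishes "no default passed" from an explicit `default=None`. The sketch below is an illustration only: `Conf`, `_NO_VALUE`, and the shortened key `"mode"` are hypothetical and this is not the PySpark `RuntimeConfig` code.

```python
_NO_VALUE = object()  # sentinel: "caller passed no default at all"


class Conf:
    """Hypothetical config holder with explicit values and built-in defaults."""

    def __init__(self, explicit, builtin_defaults):
        self._explicit = dict(explicit)
        self._builtin = dict(builtin_defaults)

    def get(self, key, default=_NO_VALUE):
        if key in self._explicit:
            return self._explicit[key]
        if default is not _NO_VALUE:
            # The caller supplied a default, which may legitimately be None.
            return default
        if key in self._builtin:
            return self._builtin[key]
        raise KeyError(key)


conf = Conf(explicit={}, builtin_defaults={"mode": "STATIC"})

unknown_with_none = conf.get("hey", None)
known_with_none = conf.get("mode", None)
known_without_default = conf.get("mode")

try:
    conf.get("hey")
    unknown_raises = False
except KeyError:
    unknown_raises = True
```

With a sentinel, `get(key, None)` and `get(key)` take different branches, which is what allows the one-argument case above to keep returning the built-in value.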

Author: hyukjinkwon <gu...@gmail.com>

Closes #20841 from HyukjinKwon/spark-conf-get.

(cherry picked from commit 61487b308b0169e3108c2ad31674a0c80b8ac5f3)

Signed-off-by: hyukjinkwon <gu...@gmail.com>

commit 920493949eba77befd67e32f9e6ede5d594bcd56

Author: “attilapiros” <pi...@...>

Date: 2018-03-19T17:42:12Z

[SPARK-23728][BRANCH-2.3] Fix ML tests with expected exceptions running streaming tests

## What changes were proposed in this pull request?

`testTransformerByInterceptingException` failed to catch the expected message on 2.3 during streaming tests because the message generated by the feature is not in the direct cause of the exception but one level deeper in the cause chain.
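The difference between checking only the direct cause and walking the whole cause chain can be illustrated in plain Python. The helper names and exception messages below are hypothetical; the real test is Scala and inspects JVM exceptions.

```python
def cause_chain(exc):
    """Yield exc and every exception reachable through __cause__."""
    seen = set()
    while exc is not None and id(exc) not in seen:
        seen.add(id(exc))
        yield exc
        exc = exc.__cause__


def chain_contains(exc, text):
    """True if any exception in the cause chain mentions `text`."""
    return any(text in str(e) for e in cause_chain(exc))


def run_wrapped():
    # The interesting message ends up two levels below the outer exception.
    try:
        try:
            raise ValueError("feature column is invalid")
        except ValueError as inner:
            raise RuntimeError("task failed") from inner
    except RuntimeError as middle:
        raise RuntimeError("streaming query terminated") from middle


try:
    run_wrapped()
except RuntimeError as outer:
    direct_cause_matches = "feature column" in str(outer.__cause__)
    any_level_matches = chain_contains(outer, "feature column")
```

Here the direct cause is the intermediate "task failed" error, so only the chain walk finds the expected message.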

## How was this patch tested?

Running the unit tests.

Author: “attilapiros” <pi...@gmail.com>

Closes #20852 from attilapiros/SPARK-23728.

commit 5c1c03d080d58611f7ac6e265a7432b2ee76e880

Author: Gabor Somogyi <ga...@...>

Date: 2018-03-20T01:02:04Z

[SPARK-23660] Fix exception in yarn cluster mode when application ended fast

## What changes were proposed in this pull request?

Yarn throws the following exception in cluster mode when the application is really small:

```

18/03/07 23:34:22 WARN netty.NettyRpcEnv: Ignored failure: java.util.concurrent.RejectedExecutionException: Task java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask7c974942 rejected from java.util.concurrent.ScheduledThreadPoolExecutor1eea9d2d[Terminated, pool size = 0, active threads = 0, queued tasks = 0, completed tasks = 0]

18/03/07 23:34:22 ERROR yarn.ApplicationMaster: Uncaught exception:

org.apache.spark.SparkException: Exception thrown in awaitResult:

at org.apache.spark.util.ThreadUtils$.awaitResult(ThreadUtils.scala:205)

at org.apache.spark.rpc.RpcTimeout.awaitResult(RpcTimeout.scala:75)

at org.apache.spark.rpc.RpcEndpointRef.askSync(RpcEndpointRef.scala:92)

at org.apache.spark.rpc.RpcEndpointRef.askSync(RpcEndpointRef.scala:76)

at org.apache.spark.deploy.yarn.YarnAllocator.<init>(YarnAllocator.scala:102)

at org.apache.spark.deploy.yarn.YarnRMClient.register(YarnRMClient.scala:77)

at org.apache.spark.deploy.yarn.ApplicationMaster.registerAM(ApplicationMaster.scala:450)

at org.apache.spark.deploy.yarn.ApplicationMaster.runDriver(ApplicationMaster.scala:493)

at org.apache.spark.deploy.yarn.ApplicationMaster.org$apache$spark$deploy$yarn$ApplicationMaster$$runImpl(ApplicationMaster.scala:345)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anonfun$run$2.apply$mcV$sp(ApplicationMaster.scala:260)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anonfun$run$2.apply(ApplicationMaster.scala:260)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anonfun$run$2.apply(ApplicationMaster.scala:260)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$5.run(ApplicationMaster.scala:810)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1920)

at org.apache.spark.deploy.yarn.ApplicationMaster.doAsUser(ApplicationMaster.scala:809)

at org.apache.spark.deploy.yarn.ApplicationMaster.run(ApplicationMaster.scala:259)

at org.apache.spark.deploy.yarn.ApplicationMaster$.main(ApplicationMaster.scala:834)

at org.apache.spark.deploy.yarn.ApplicationMaster.main(ApplicationMaster.scala)

Caused by: org.apache.spark.rpc.RpcEnvStoppedException: RpcEnv already stopped.

at org.apache.spark.rpc.netty.Dispatcher.postMessage(Dispatcher.scala:158)

at org.apache.spark.rpc.netty.Dispatcher.postLocalMessage(Dispatcher.scala:135)

at org.apache.spark.rpc.netty.NettyRpcEnv.ask(NettyRpcEnv.scala:229)

at org.apache.spark.rpc.netty.NettyRpcEndpointRef.ask(NettyRpcEnv.scala:523)

at org.apache.spark.rpc.RpcEndpointRef.askSync(RpcEndpointRef.scala:91)

... 17 more

18/03/07 23:34:22 INFO yarn.ApplicationMaster: Final app status: FAILED, exitCode: 13, (reason: Uncaught exception: org.apache.spark.SparkException: Exception thrown in awaitResult: )

```

Example application:

```

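import org.apache.spark.{SparkConf, SparkContext}

object ExampleApp {
  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("ExampleApp")
    val sc = new SparkContext(conf)
    try {
      // Do nothing
    } finally {
      // Stop right away so the application ends almost as soon as it starts
      sc.stop()
    }
  }
}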

```

This PR pauses the user class thread after the `SparkContext` is created and keeps it paused until the application master initialises properly.

## How was this patch tested?

Automated: Existing unit tests

Manual: Application submitted into small cluster

Author: Gabor Somogyi <ga...@gmail.com>

Closes #20807 from gaborgsomogyi/SPARK-23660.

(cherry picked from commit 5f4deff19511b6870f056eba5489104b9cac05a9)

Signed-off-by: Marcelo Vanzin <va...@cloudera.com>

commit 2f82c037d90114705c0d0bd0bd7f82215aecfe3b

Author: Marco Gaido <ma...@...>

Date: 2018-03-20T02:07:27Z

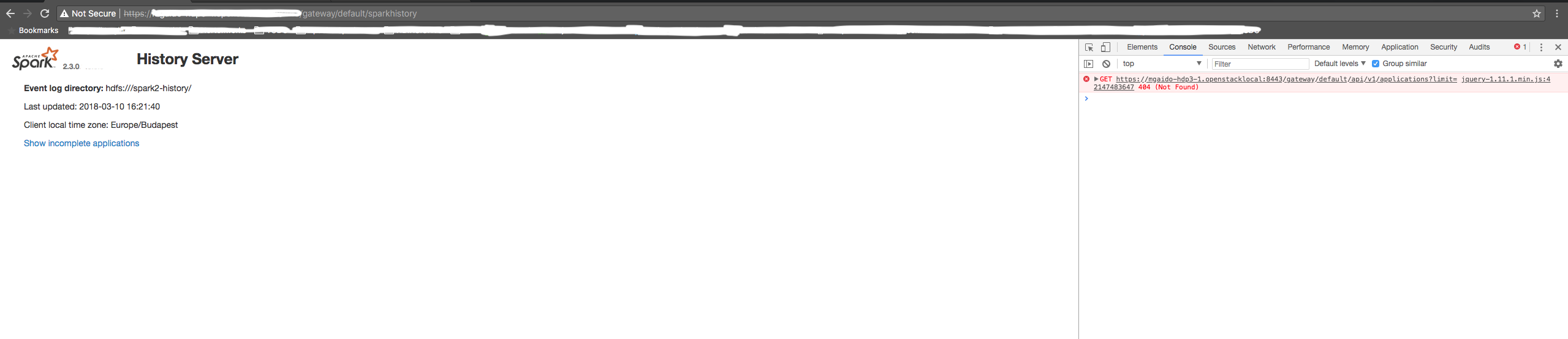

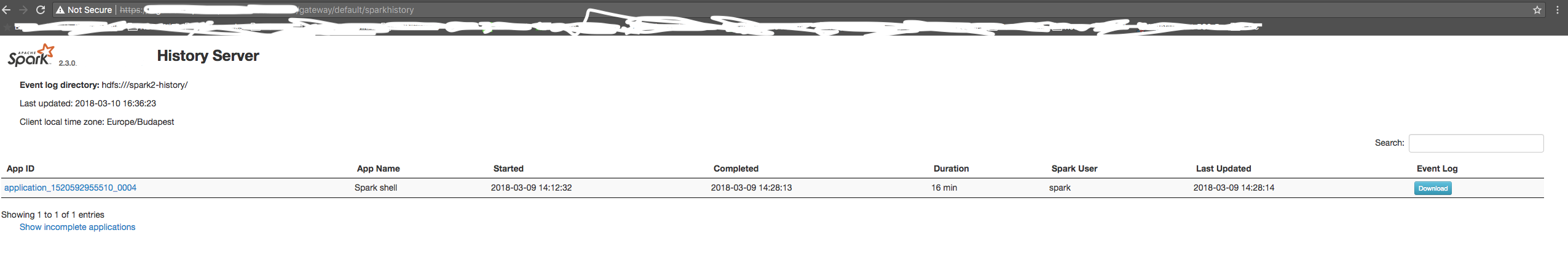

[SPARK-23644][CORE][UI][BACKPORT-2.3] Use absolute path for REST call in SHS

## What changes were proposed in this pull request?

SHS is using a relative path for the REST API call to get the list of the application is a relative path call. In case of the SHS being consumed through a proxy, it can be an issue if the path doesn't end with a "/".

Therefore, we should use an absolute path for the REST call as it is done for all the other resources.

## How was this patch tested?

manual tests

Before the change:

After the change:

Author: Marco Gaido <ma...@gmail.com>

Closes #20847 from mgaido91/SPARK-23644_2.3.

commit c854b6ca7ba4dc33138c12ba4606ff8fbe82aef2

Author: hyukjinkwon <gu...@...>

Date: 2018-03-20T08:53:09Z

[SPARK-23691][PYTHON][BRANCH-2.3] Use sql_conf util in PySpark tests where possible

## What changes were proposed in this pull request?

This PR backports https://github.com/apache/spark/pull/20830 to reduce the diff against master and restore the default value back in PySpark tests.

https://github.com/apache/spark/commit/d6632d185e147fcbe6724545488ad80dce20277e added a useful util. This backport extracts and brings this util:

```python

@contextmanager
def sql_conf(self, pairs):
    ...

```

to allow configuration set/unset within a block:

```python

with self.sql_conf({"spark.blah.blah.blah": "blah"}):
    # test codes

```

This PR proposes to use this util where possible in PySpark tests.

Note that there already appear to be a few places that change configuration in tests without restoring the original value in unittest classes.
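The set-then-restore pattern behind such a util can be sketched without Spark. The `temp_conf` function below is a hypothetical stand-in that operates on a plain dict; it is not the `sql_conf` implementation from the PySpark test suite.

```python
from contextlib import contextmanager

_UNSET = object()  # marks keys that had no value before the block


@contextmanager
def temp_conf(conf, pairs):
    """Set `pairs` on the dict `conf`, then restore the previous state."""
    old = {key: conf.get(key, _UNSET) for key in pairs}
    conf.update(pairs)
    try:
        yield conf
    finally:
        # Runs even if the block raises, so a failing test cannot leak config.
        for key, value in old.items():
            if value is _UNSET:
                conf.pop(key, None)
            else:
                conf[key] = value


settings = {"spark.sql.shuffle.partitions": "200"}
overrides = {"spark.sql.shuffle.partitions": "5", "spark.blah": "x"}
with temp_conf(settings, overrides):
    inside = dict(settings)
```

Keys that were unset before the block are removed again afterwards rather than being left behind with a stale value.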

## How was this patch tested?

Likewise, manually tested via:

```

./run-tests --modules=pyspark-sql --python-executables=python2

./run-tests --modules=pyspark-sql --python-executables=python3

```

Author: hyukjinkwon <gu...@gmail.com>

Closes #20863 from HyukjinKwon/backport-20830.

commit 0b880db65b647e549b78721859b1712dff733ec9

Author: Maxim Gekk <ma...@...>

Date: 2018-03-20T17:34:56Z

[SPARK-23649][SQL] Skipping chars disallowed in UTF-8

## What changes were proposed in this pull request?

The mapping of a UTF-8 char's first byte to the char's size doesn't cover the whole range 0-255. It is defined only for 0-253:

https://github.com/apache/spark/blob/master/common/unsafe/src/main/java/org/apache/spark/unsafe/types/UTF8String.java#L60-L65

https://github.com/apache/spark/blob/master/common/unsafe/src/main/java/org/apache/spark/unsafe/types/UTF8String.java#L190

If the first byte of a char is 253-255, IndexOutOfBoundsException is thrown. Besides that, the values for 244-252 are not correct according to the recent Unicode standard for UTF-8: http://www.unicode.org/versions/Unicode10.0.0/UnicodeStandard-10.0.pdf

As a consequence of the exception above, the length of an input string in UTF-8 encoding cannot be calculated if the string contains chars that start with code 253. On the user's side this is visible as, for example, a crash during schema inference of a CSV file that contains such chars; the file can still be read if the schema is specified explicitly or if the mode is set to multiline.

The proposed changes build a correct mapping from the first byte of a UTF-8 char to its size (it now covers all cases) and skip disallowed chars (counting each as one octet).
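A total mapping of this kind can be sketched in Python. The byte ranges below follow the UTF-8 definition in RFC 3629; the function names are hypothetical, and the exact table used in `UTF8String.java` may differ in how it classifies individual disallowed bytes.

```python
def utf8_char_size(first_byte):
    """Size in bytes of the UTF-8 char that starts with `first_byte`.

    Continuation bytes and bytes that can never start a char are
    counted as one octet so that scanning always makes progress.
    """
    if not 0 <= first_byte <= 0xFF:
        raise ValueError("not a byte value")
    if first_byte <= 0x7F:
        return 1
    if 0xC2 <= first_byte <= 0xDF:
        return 2
    if 0xE0 <= first_byte <= 0xEF:
        return 3
    if 0xF0 <= first_byte <= 0xF4:
        return 4
    return 1  # 0x80-0xC1 and 0xF5-0xFF: continuation or disallowed


def count_chars(data):
    """Count chars in `data`, skipping disallowed bytes one at a time."""
    count = 0
    i = 0
    while i < len(data):
        i += utf8_char_size(data[i])
        count += 1
    return count


plain = count_chars("h\u00e9llo".encode("utf-8"))
with_bad_byte = count_chars(b"a\xffb")
```

Because every one of the 256 possible first bytes maps to a size, the scan cannot index outside the table, and an input containing 0xFF is still counted instead of failing.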

## How was this patch tested?

Added a test and a file with a char which is disallowed in UTF-8 - 0xFF.

Author: Maxim Gekk <ma...@databricks.com>

Closes #20796 from MaxGekk/skip-wrong-utf8-chars.

(cherry picked from commit 5e7bc2acef4a1e11d0d8056ef5c12cd5c8f220da)

Signed-off-by: Wenchen Fan <we...@databricks.com>

commit 1e552b356253bfbb2bdf8156c15630d5ed65dfc6

Author: Takeshi Yamamuro <ya...@...>

Date: 2018-03-21T16:52:28Z

[SPARK-23264][SQL] Fix scala.MatchError in literals.sql.out

## What changes were proposed in this pull request?

To fix `scala.MatchError` in `literals.sql.out`, this pr added an entry for `CalendarIntervalType` in `QueryExecution.toHiveStructString`.

## How was this patch tested?

Existing tests and added tests in `literals.sql`

Author: Takeshi Yamamuro <ya...@apache.org>

Closes #20872 from maropu/FixIntervalTests.

(cherry picked from commit 98d0ea3f6091730285293321a50148f69e94c9cd)

Signed-off-by: Wenchen Fan <we...@databricks.com>

commit 4b9f33ff7f5268ffcc11cd5eea5976e9153be9fb

Author: Gabor Somogyi <ga...@...>

Date: 2018-03-21T17:06:26Z

[SPARK-23288][SS] Fix output metrics with parquet sink

## What changes were proposed in this pull request?

Output metrics were not populated when the parquet sink was used.

This PR fixes this problem by passing a `BasicWriteJobStatsTracker` in `FileStreamSink`.

## How was this patch tested?

Additional unit test added.

Author: Gabor Somogyi <ga...@gmail.com>

Closes #20745 from gaborgsomogyi/SPARK-23288.

(cherry picked from commit 918c7e99afdcea05c36626e230636c4f8aabf82c)

Signed-off-by: Wenchen Fan <we...@databricks.com>

commit c9acd46bed8fa3e410e8a44aafe3237e59deaa73

Author: Mihaly Toth <mi...@...>

Date: 2018-03-22T00:05:39Z

[SPARK-23729][CORE] Respect URI fragment when resolving globs

Firstly, glob resolution will no longer swallow the remote name part (the part preceded by the `#` sign) for the `--files` or `--archives` options.

Moreover, in the special case where a glob resolves to multiple files, remote naming does not make sense and an error is returned.

Enhanced the current test and wrote an additional test for the error case.
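Both rules described above can be sketched in Python on local paths. The `resolve_glob` helper is hypothetical and is not the Spark implementation, which resolves globs through Hadoop file systems.

```python
import glob
import os
import tempfile


def resolve_glob(spec):
    """Expand a glob while keeping an optional '#remote-name' suffix."""
    pattern, has_fragment, fragment = spec.partition("#")
    matches = sorted(glob.glob(pattern))
    if not has_fragment:
        return matches
    if len(matches) > 1:
        # One remote name cannot refer to several files.
        raise ValueError(f"{pattern} resolves to {len(matches)} files; "
                         f"cannot apply remote name '{fragment}'")
    return [f"{match}#{fragment}" for match in matches]


with tempfile.TemporaryDirectory() as tmp:
    for name in ("a.txt", "b.txt"):
        open(os.path.join(tmp, name), "w").close()

    # A glob with a single match keeps its remote name.
    single = resolve_glob(os.path.join(tmp, "a*.txt") + "#alias")
    single_name = os.path.basename(single[0])

    # A glob with several matches and a remote name is rejected.
    try:
        resolve_glob(os.path.join(tmp, "*.txt") + "#alias")
        multi_error = None
    except ValueError as exc:
        multi_error = str(exc)
```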

Author: Mihaly Toth <mi...@gmail.com>

Closes #20853 from misutoth/glob-with-remote-name.

(cherry picked from commit 0604beaff2baa2d0fed86c0c87fd2a16a1838b5f)

Signed-off-by: Marcelo Vanzin <va...@cloudera.com>

commit 4da8c22f77475d1b328375e97e2825e1dea78fdd

Author: Kris Mok <kr...@...>

Date: 2018-03-22T04:21:36Z

[SPARK-23760][SQL] CodegenContext.withSubExprEliminationExprs should save/restore CSE state correctly

## What changes were proposed in this pull request?

Fixed `CodegenContext.withSubExprEliminationExprs()` so that it saves/restores CSE state correctly.

## How was this patch tested?

Added new unit test to verify that the old CSE state is indeed saved and restored around the `withSubExprEliminationExprs()` call. Manually verified that this test fails without this patch.

Author: Kris Mok <kr...@databricks.com>

Closes #20870 from rednaxelafx/codegen-subexpr-fix.

(cherry picked from commit 95e51ff849a4c46cae463636b1ee393042469e7b)

Signed-off-by: Wenchen Fan <we...@databricks.com>

commit 1d0d0a5fc7ee009443797feb48823eb215d1940a

Author: Liang-Chi Hsieh <vi...@...>

Date: 2018-03-23T04:23:25Z

[SPARK-23614][SQL] Fix incorrect reuse exchange when caching is used

## What changes were proposed in this pull request?

We should provide a customized canonicalized plan for `InMemoryRelation` and `InMemoryTableScanExec`. Otherwise, we can wrongly treat two different cached plans as producing the same result, which in turn causes an exchange to be wrongly reused.

For a test query like this:

```scala

val cached = spark.createDataset(Seq(TestDataUnion(1, 2, 3), TestDataUnion(4, 5, 6))).cache()

val group1 = cached.groupBy("x").agg(min(col("y")) as "value")

val group2 = cached.groupBy("x").agg(min(col("z")) as "value")

group1.union(group2)

```

Canonicalized plans before:

First exchange:

```

Exchange hashpartitioning(none#0, 5)

+- *(1) HashAggregate(keys=[none#0], functions=[partial_min(none#1)], output=[none#0, none#4])

+- *(1) InMemoryTableScan [none#0, none#1]

+- InMemoryRelation [x#4253, y#4254, z#4255], true, 10000, StorageLevel(disk, memory, deserialized, 1 replicas)

+- LocalTableScan [x#4253, y#4254, z#4255]

```

Second exchange:

```

Exchange hashpartitioning(none#0, 5)

+- *(3) HashAggregate(keys=[none#0], functions=[partial_min(none#1)], output=[none#0, none#4])

+- *(3) InMemoryTableScan [none#0, none#1]

+- InMemoryRelation [x#4253, y#4254, z#4255], true, 10000, StorageLevel(disk, memory, deserialized, 1 replicas)

+- LocalTableScan [x#4253, y#4254, z#4255]

```

You can see that the canonicalized plans are the same, although the two `InMemoryTableScan`s use different columns.

Canonicalized plan after:

First exchange:

```

Exchange hashpartitioning(none#0, 5)

+- *(1) HashAggregate(keys=[none#0], functions=[partial_min(none#1)], output=[none#0, none#4])

+- *(1) InMemoryTableScan [none#0, none#1]

+- InMemoryRelation [none#0, none#1, none#2], true, 10000, StorageLevel(memory, 1 replicas)

+- LocalTableScan [none#0, none#1, none#2]

```

Second exchange:

```

Exchange hashpartitioning(none#0, 5)

+- *(3) HashAggregate(keys=[none#0], functions=[partial_min(none#1)], output=[none#0, none#4])

+- *(3) InMemoryTableScan [none#0, none#2]

+- InMemoryRelation [none#0, none#1, none#2], true, 10000, StorageLevel(memory, 1 replicas)

+- LocalTableScan [none#0, none#1, none#2]

```

## How was this patch tested?

Added unit test.

Author: Liang-Chi Hsieh <vi...@gmail.com>

Closes #20831 from viirya/SPARK-23614.

(cherry picked from commit b2edc30db1dcc6102687d20c158a2700965fdf51)

Signed-off-by: Wenchen Fan <we...@databricks.com>

commit 45761ceb241cdf48b858ff28034a049a0ac62ea3

Author: hyukjinkwon <gu...@...>

Date: 2018-03-23T12:01:07Z

[MINOR][R] Fix R lint failure

## What changes were proposed in this pull request?

The lint failure bugged me:

```R

R/SQLContext.R:715:97: style: Trailing whitespace is superfluous.

#' file-based streaming data source. \code{timeZone} to indicate a timezone to be used to

^

tests/fulltests/test_streaming.R:239:45: style: Commas should always have a space after.

expect_equal(times[order(times$eventTime),][1, 2], 2)

^

lintr checks failed.

```

and I actually saw https://amplab.cs.berkeley.edu/jenkins/job/spark-master-test-sbt-hadoop-2.6-ubuntu-test/500/console too. If I understood correctly, there is an attempt to move to the Ubuntu one.

## How was this patch tested?

Manually tested by `./dev/lint-r`:

```

...

lintr checks passed.

```

Author: hyukjinkwon <gu...@apache.org>

Closes #20879 from HyukjinKwon/minor-r-lint.

(cherry picked from commit 92e952557dbd8a170d66d615e25c6c6a8399dd43)

Signed-off-by: hyukjinkwon <gu...@apache.org>

commit ce0fbec6857a09b095c492bb93d9ebaaa1e5c074

Author: arucard21 <ar...@...>

Date: 2018-03-23T12:02:34Z

[SPARK-23769][CORE] Remove comments that unnecessarily disable Scalastyle check

## What changes were proposed in this pull request?

We re-enabled the Scalastyle checker on a line of code. It was previously disabled, but it does not violate any of the rules. So there's no reason to disable the Scalastyle checker here.

## How was this patch tested?

We tested this by running `build/mvn scalastyle:check` after removing the comments that disable the checker. This check passed with no errors or warnings for Spark Core:

```

[INFO]

[INFO] ------------------------------------------------------------------------

[INFO] Building Spark Project Core 2.4.0-SNAPSHOT

[INFO] ------------------------------------------------------------------------

[INFO]

[INFO] --- scalastyle-maven-plugin:1.0.0:check (default-cli) spark-core_2.11 ---

Saving to outputFile=<path to local dir>/spark/core/target/scalastyle-output.xml

Processed 485 file(s)

Found 0 errors

Found 0 warnings

Found 0 infos

```

We did not run all tests (with `dev/run-tests`) since this Scalastyle check seemed sufficient.

## Co-contributors:

chialun-yeh

Hrayo712

vpourquie

Author: arucard21 <ar...@gmail.com>

Closes #20880 from arucard21/scalastyle_util.

(cherry picked from commit 6ac4fba69290e1c7de2c0a5863f224981dedb919)

Signed-off-by: hyukjinkwon <gu...@apache.org>

commit ea44783ad479ea7c66abc2c280f2a3abf2a4d3af

Author: bag_of_tricks <fa...@...>

Date: 2018-03-23T17:36:23Z

[SPARK-23759][UI] Unable to bind Spark UI to specific host name / IP

## What changes were proposed in this pull request?

Fixes SPARK-23759 by moving connector.start() after connector.setHost()

The problem arose because the connector.setHost(hostName) call came after connector.start().

## How was this patch tested?

The patch was tested after build and deployment. This patch requires the SPARK_LOCAL_IP environment variable to be set in spark-env.sh.

Author: bag_of_tricks <fa...@hortonworks.com>

Closes #20883 from felixalbani/SPARK-23759.

(cherry picked from commit 8b56f16640fc4156aa7bd529c54469d27635b951)

Signed-off-by: Marcelo Vanzin <va...@cloudera.com>

commit 523fcafc5c4a79cf3455f3ceab6d886679399495

Author: Jose Torres <to...@...>

Date: 2018-03-25T01:21:01Z

[SPARK-23788][SS] Fix race in StreamingQuerySuite

## What changes were proposed in this pull request?

The serializability test uses the same MemoryStream instance for 3 different queries. If any of those queries asks it to commit before the others have run, the rest will see empty dataframes. This can fail the test if q3 is affected.

We should use one instance per query instead.

## How was this patch tested?

Existing unit test. If I move q2.processAllAvailable() before starting q3, the test always fails without the fix.

Author: Jose Torres <to...@gmail.com>

Closes #20896 from jose-torres/fixrace.

(cherry picked from commit 816a5496ba4caac438f70400f72bb10bfcc02418)

Signed-off-by: Shixiong Zhu <zs...@gmail.com>

commit 57026a1851aca9fe028cb39e8059f0bf133f3e0c

Author: Liang-Chi Hsieh <vi...@...>

Date: 2018-03-19T08:41:43Z

[SPARK-23599][SQL] Add a UUID generator from Pseudo-Random Numbers

## What changes were proposed in this pull request?

This patch adds a UUID generator from Pseudo-Random Numbers. We can use it later to have a deterministic `UUID()` expression.
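Why a seeded pseudo-random source makes UUIDs reproducible can be shown with the Python standard library. This is only a conceptual sketch: `random_uuid` is a hypothetical helper, and the generator added by the patch is Scala code that may use a different PRNG and bit layout.

```python
import random
import uuid


def random_uuid(rng):
    """Build a version-4 UUID from 128 bits drawn from `rng`."""
    # version=4 makes the constructor overwrite the version and variant
    # bits, so the result is a well-formed random UUID.
    return uuid.UUID(int=rng.getrandbits(128), version=4)


def uuid_sequence(seed, n):
    """Return n UUIDs from a generator seeded with `seed`."""
    rng = random.Random(seed)
    return [random_uuid(rng) for _ in range(n)]


run_a = uuid_sequence(seed=42, n=3)
run_b = uuid_sequence(seed=42, n=3)
run_c = uuid_sequence(seed=43, n=3)
```

The same seed yields the same sequence, which is the property a deterministic `UUID()` expression would need when a task is retried.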

## How was this patch tested?

Added unit tests.

Author: Liang-Chi Hsieh <vi...@gmail.com>

Closes #20817 from viirya/SPARK-23599.

(cherry picked from commit 4de638c1976dea74761bbe5c30da808178ee885d)

Signed-off-by: Herman van Hovell <hv...@databricks.com>

commit 2fd7acabf8d55789662d52d94bd30f84b05a577a

Author: Yuming Wang <yu...@...>

Date: 2018-03-15T18:54:58Z

[HOT-FIX] Fix SparkOutOfMemoryError: Unable to acquire 262144 bytes of memory, got 224631

## What changes were proposed in this pull request?

https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88263/testReport

https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88260/testReport

https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88257/testReport

https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/88224/testReport

These tests all failed:

```

org.apache.spark.memory.SparkOutOfMemoryError: Unable to acquire 262144 bytes of memory, got 224631

at org.apache.spark.memory.MemoryConsumer.throwOom(MemoryConsumer.java:157)

at org.apache.spark.memory.MemoryConsumer.allocateArray(MemoryConsumer.java:98)

at org.apache.spark.unsafe.map.BytesToBytesMap.allocate(BytesToBytesMap.java:787)

at org.apache.spark.unsafe.map.BytesToBytesMap.<init>(BytesToBytesMap.java:204)

at org.apache.spark.unsafe.map.BytesToBytesMap.<init>(BytesToBytesMap.java:219)

...

```

This PR ignores this test.

## How was this patch tested?

N/A

Author: Yuming Wang <yu...@ebay.com>

Closes #20835 from wangyum/SPARK-23598.

(cherry picked from commit 15c3c983008557165cc91713ddaf2dbd6d5a506c)

Signed-off-by: Herman van Hovell <hv...@databricks.com>

commit 328dea6f8ffcd515face7d64c29f7af71abd88a2

Author: Michael (Stu) Stewart <ms...@...>

Date: 2018-03-26T03:45:45Z

[SPARK-23645][MINOR][DOCS][PYTHON] Add docs RE `pandas_udf` with keyword args

## What changes were proposed in this pull request?

Add documentation about the limitations of `pandas_udf` with keyword arguments and related concepts, such as `functools.partial` function objects.

NOTE: intermediate commits on this PR show some of the steps that can be taken to fix some (but not all) of these pain points.

### Survey of problems we face today:

(Initialize) Note: Python 3.6 and Spark 2.4 snapshot.

```

from pyspark.sql import SparkSession

import inspect, functools

from pyspark.sql.functions import pandas_udf, PandasUDFType, col, lit, udf

spark = SparkSession.builder.getOrCreate()

print(spark.version)

df = spark.range(1,6).withColumn('b', col('id') * 2)

def ok(a,b): return a+b

```

Using a keyword argument at the call site `b=...` (and yes, *full* stack trace below, haha):

```

---> 14 df.withColumn('ok', pandas_udf(f=ok, returnType='bigint')('id', b='id')).show() # no kwargs

TypeError: wrapper() got an unexpected keyword argument 'b'

```

Using partial with a keyword argument where the kw-arg is the first argument of the fn:

*(Aside: kind of interesting that lines 15,16 work great and then 17 explodes)*

```

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

<ipython-input-9-e9f31b8799c1> in <module>()

15 df.withColumn('ok', pandas_udf(f=functools.partial(ok, 7), returnType='bigint')('id')).show()

16 df.withColumn('ok', pandas_udf(f=functools.partial(ok, b=7), returnType='bigint')('id')).show()

---> 17 df.withColumn('ok', pandas_udf(f=functools.partial(ok, a=7), returnType='bigint')('id')).show()

/Users/stu/ZZ/spark/python/pyspark/sql/functions.py in pandas_udf(f, returnType, functionType)

2378 return functools.partial(_create_udf, returnType=return_type, evalType=eval_type)

2379 else:

-> 2380 return _create_udf(f=f, returnType=return_type, evalType=eval_type)

2381

2382

/Users/stu/ZZ/spark/python/pyspark/sql/udf.py in _create_udf(f, returnType, evalType)

54 argspec.varargs is None:

55 raise ValueError(

---> 56 "Invalid function: 0-arg pandas_udfs are not supported. "

57 "Instead, create a 1-arg pandas_udf and ignore the arg in your function."

58 )

ValueError: Invalid function: 0-arg pandas_udfs are not supported. Instead, create a 1-arg pandas_udf and ignore the arg in your function.

```
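
The difference between lines 15, 16 and 17 can be reproduced without Spark. The sketch below assumes that the check in `_create_udf` counts `argspec.args` from `inspect.getfullargspec` (the visible `argspec.varargs is None` line suggests this, but the preceding line is not shown in the trace). The helper `positional_arg_count` and the wrapper `add_seven` are hypothetical names introduced for this example.

```python
import functools
import inspect


def ok(a, b):
    return a + b


def positional_arg_count(f):
    """Number of positional parameters reported by inspect.getfullargspec."""
    return len(inspect.getfullargspec(f).args)


# Binding positionally, or binding the *last* parameter by keyword,
# still leaves one positional parameter.
assert positional_arg_count(functools.partial(ok, 7)) == 1
assert positional_arg_count(functools.partial(ok, b=7)) == 1

# Binding the *first* parameter by keyword makes every remaining
# parameter keyword-only, so no positional parameters are left.
assert positional_arg_count(functools.partial(ok, a=7)) == 0


def add_seven(b):
    """Plain wrapper (hypothetical name) that keeps `b` positional."""
    return ok(a=7, b=b)


assert positional_arg_count(add_seven) == 1
assert add_seven(3) == 10
```

Under that assumption, a plain wrapper function such as `add_seven` is one way to pin the first argument while still exposing a positional parameter.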

Author: Michael (Stu) Stewart <ms...@gmail.com>

Closes #20900 from mstewart141/udfkw2.

(cherry picked from commit 087fb3142028d679524e22596b0ad4f74ff47e8d)

Signed-off-by: hyukjinkwon <gu...@apache.org>

commit 1c39dfaef09538ad63e4d5d6d9a343c9bfe9f8d3

Author: Liang-Chi Hsieh <vi...@...>

Date: 2018-03-26T18:45:20Z

[SPARK-23599][SQL][BACKPORT-2.3] Use RandomUUIDGenerator in Uuid expression

## What changes were proposed in this pull request?

As stated in Jira, there are problems with the current `Uuid` expression, which uses `java.util.UUID.randomUUID` for UUID generation.

This patch uses the newly added `RandomUUIDGenerator` for UUID generation, so that `Uuid` is deterministic between retries.

This backports SPARK-23599 to Spark 2.3.

## How was this patch tested?

Added tests.

Author: Liang-Chi Hsieh <vi...@gmail.com>

Closes #20903 from viirya/SPARK-23599-2.3.

commit 38c0bd7db86ca2b7e167b89338028863bcc26906

Author: Thomas Graves <tg...@...>

Date: 2018-03-29T08:37:46Z

[SPARK-23806] Broadcast.unpersist can cause fatal exception when used with dynamic allocation

## What changes were proposed in this pull request?

Ignore errors while waiting for a `broadcast.unpersist`. This handles it the same way as `rdd.unpersist` in https://issues.apache.org/jira/browse/SPARK-22618
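
The general pattern, waiting on an asynchronous cleanup and downgrading any failure to a warning, might look like the sketch below. It is written in Python with `concurrent.futures` purely for illustration; the function `wait_ignoring_errors` and the logger name are assumptions, not Spark code.

```python
import logging
from concurrent.futures import Future

logger = logging.getLogger("unpersist-sketch")  # hypothetical logger name


def wait_ignoring_errors(future, timeout=None):
    """Block until `future` finishes; log and swallow any failure.

    Returns True if the wait succeeded and False if an error was ignored.
    """
    try:
        future.result(timeout=timeout)
        return True
    except Exception as exc:
        # The cleanup is best-effort, so a failure here should not
        # bring down the whole application.
        logger.warning("Ignoring error while waiting for unpersist: %s", exc)
        return False


failed = Future()
failed.set_exception(RuntimeError("executor lost"))
assert wait_ignoring_errors(failed) is False  # warning logged, no crash
```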

## How was this patch tested?

The patch was tested manually against a couple of jobs that exhibit this behavior. With the change, the application no longer dies and just prints the warning.

Author: Thomas Graves <tg...@unharmedunarmed.corp.ne1.yahoo.com>

Closes #20924 from tgravescs/SPARK-23806.

(cherry picked from commit 641aec68e8167546dbb922874c086c9b90198f08)

Signed-off-by: Wenchen Fan <we...@databricks.com>

commit 0bfbcaf6696570b74923047266b00ba4dc2ba97c

Author: Sahil Takiar <st...@...>

Date: 2018-03-29T17:23:23Z

[SPARK-23785][LAUNCHER] LauncherBackend doesn't check state of connection before setting state

## What changes were proposed in this pull request?

Changed the `LauncherBackend` `set` method so that it checks whether the connection is open before writing to it (using `isConnected`).
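
A minimal sketch of this kind of guard, in Python rather than Scala, is shown below. The classes `Connection` and `Backend` and their methods are hypothetical stand-ins and do not mirror the real `LauncherBackend` API.

```python
class Connection:
    """Hypothetical stand-in for a launcher connection."""

    def __init__(self):
        self.open = True
        self.sent = []

    def send(self, message):
        if not self.open:
            raise IOError("connection is closed")
        self.sent.append(message)


class Backend:
    """Hypothetical backend that reports state over a connection."""

    def __init__(self, connection=None):
        self._connection = connection

    def is_connected(self):
        return self._connection is not None and self._connection.open

    def set_state(self, state):
        """Send `state` only if the connection is open; report whether it was sent."""
        if self.is_connected():
            self._connection.send(("state", state))
            return True
        return False


conn = Connection()
backend = Backend(conn)
assert backend.set_state("RUNNING") is True
conn.open = False
assert backend.set_state("FINISHED") is False  # skipped instead of raising
```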

## How was this patch tested?

None

Author: Sahil Takiar <st...@cloudera.com>

Closes #20893 from sahilTakiar/master.

(cherry picked from commit 491ec114fd3886ebd9fa29a482e3d112fb5a088c)

Signed-off-by: Marcelo Vanzin <va...@cloudera.com>

commit 516304521a9bd2632bd1a42c9161b1216a8ead92

Author: Kent Yao <ya...@...>

Date: 2018-03-29T17:46:28Z

[SPARK-23639][SQL] Obtain token before init metastore client in SparkSQL CLI

## What changes were proposed in this pull request?

In SparkSQLCLI, the SessionState is created before the SparkContext is instantiated. When `--proxy-user` is used for impersonation, a metastore client cannot be initialized to talk to the secured metastore because there is no Kerberos ticket.

This PR uses the real user's UGI to obtain a token for the owner before talking to the kerberized metastore.

## How was this patch tested?

Manually verified with a kerberized Hive metastore / HDFS.

Author: Kent Yao <ya...@hotmail.com>

Closes #20784 from yaooqinn/SPARK-23639.

(cherry picked from commit a7755fd8ce2f022118b9827aaac7d5d59f0f297a)

Signed-off-by: Marcelo Vanzin <va...@cloudera.com>

----

---

---------------------------------------------------------------------

To unsubscribe, e-mail: reviews-unsubscribe@spark.apache.org

For additional commands, e-mail: reviews-help@spark.apache.org

[GitHub] spark issue #22517: Branch 2.3 how can i fix error use Pyspark

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22517

Can one of the admins verify this patch?

---

[GitHub] spark pull request #22517: Branch 2.3 how can i fix error use Pyspark

Posted by asfgit <gi...@git.apache.org>.

Github user asfgit closed the pull request at:

https://github.com/apache/spark/pull/22517

---

[GitHub] spark issue #22517: Branch 2.3 how can i fix error use Pyspark

Posted by HyukjinKwon <gi...@git.apache.org>.

Github user HyukjinKwon commented on the issue:

https://github.com/apache/spark/pull/22517

@lovezeropython please close this.

---

[GitHub] spark issue #22517: Branch 2.3 how can i fix error use Pyspark

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22517

Can one of the admins verify this patch?

---

[GitHub] spark issue #22517: Branch 2.3 how can i fix error use Pyspark

Posted by AmplabJenkins <gi...@git.apache.org>.

Github user AmplabJenkins commented on the issue:

https://github.com/apache/spark/pull/22517

Can one of the admins verify this patch?

---

[GitHub] spark issue #22517: Branch 2.3 how can i fix error use Pyspark

Posted by wangyum <gi...@git.apache.org>.

Github user wangyum commented on the issue:

https://github.com/apache/spark/pull/22517

Would you mind closing this PR? Questions and requests for help should be sent to `user@spark.apache.org`.

```

user@spark.apache.org is for usage questions, help, and announcements. (subscribe) (unsubscribe) (archives)

dev@spark.apache.org is for people who want to contribute code to Spark. (subscribe) (unsubscribe) (archives)

```

http://spark.apache.org/community.html

---
